
AI Algorithm Fundamentals [16]: Grouped Networks (GroupConv)

source link: https://no5-aaron-wu.github.io/2021/12/29/AI-Algorithm-16-GroupConv/



Posted on 2021-12-29 | Updated on 2022-04-25 | Deep Learning


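Group convolution first appeared in AlexNet. Hardware resources were limited at the time, so the convolutions of AlexNet could not all be processed on a single GPU during training. The authors therefore split the feature maps across multiple GPUs (two, in AlexNet's case), processed each part separately, and finally fused the results. Alex argued that group convolution increases the diagonal correlation between filters and reduces the number of trainable parameters, which makes the model less prone to overfitting, an effect similar to regularization.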

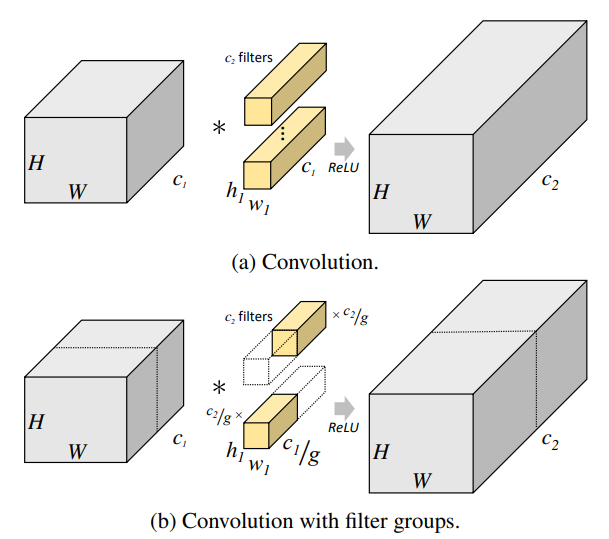

Schematics of standard convolution and group convolution are shown below:

Using the NCHW layout, for a standard convolution:

- Size of a single input feature map: $1 \times c_1 \times H_{in} \times W_{in}$

- Size of the $c_2$ kernels: $c_2 \times c_1 \times h_1 \times w_1$

- Size of a single output feature map: $1 \times c_2 \times H_{out} \times W_{out}$

- Parameter count (assuming no bias): $c_2 * c_1 * h_1 * w_1$

- Computation (counting only floating-point multiplications): $c_2 * H_{out} * W_{out} * c_1 * h_1 * w_1$
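The two cost formulas above translate directly into code. This is a minimal sketch; the helper name `standard_conv_cost` and the example layer (64 to 128 channels, 3x3 kernel, 56x56 output) are hypothetical, chosen only for illustration.

```python
def standard_conv_cost(c1, c2, h1, w1, h_out, w_out):
    """Parameter count (no bias) and multiplication count of a standard conv layer.

    Hypothetical helper that mirrors the formulas in the list above.
    """
    # c2 kernels, each of shape c1 x h1 x w1
    params = c2 * c1 * h1 * w1
    # each of the c2 * h_out * w_out output elements needs c1 * h1 * w1 multiplications
    mults = c2 * h_out * w_out * c1 * h1 * w1
    return params, mults


# Illustrative layer: 64 -> 128 channels, 3x3 kernel, 56x56 output
params, mults = standard_conv_cost(c1=64, c2=128, h1=3, w1=3, h_out=56, w_out=56)
print(params, mults)  # 73728 231211008
```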

For a group convolution split into g groups (g = 2 in the figure above):

- Input feature map size per group: $1 \times c_1/g \times H_{in} \times W_{in}$

- Kernel size per group: $c_2/g \times c_1/g \times h_1 \times w_1$

- Output feature map size per group: $1 \times c_2/g \times H_{out} \times W_{out}$; the outputs of all groups are finally concatenated along the channel dimension

- Total parameter count over the g groups (assuming no bias): $c_2/g * c_1/g * h_1 * w_1 * g = c_2 * c_1/g * h_1 * w_1$

- Total computation over the g groups (counting only floating-point multiplications): $c_2/g * H_{out} * W_{out} * c_1/g * h_1 * w_1 * g = c_2 * H_{out} * W_{out} * c_1/g * h_1 * w_1$
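To make the per-group shapes concrete, here is a minimal naive sketch of a 2-D group convolution in pure Python. It assumes batch size 1 (the N dimension is dropped), stride 1, no padding and no bias; the function name `group_conv2d` is hypothetical. All kernels are stored as one `c2 × c1/g × h1 × w1` array (the g per-group kernel tensors stacked along the output-channel axis), and the function counts the multiplications it performs so the count can be compared with the formula in the list above.

```python
def group_conv2d(x, weight, g):
    """Naive 2-D group convolution: stride 1, no padding, no bias, batch size 1.

    x:      input as nested lists, shape [c1][H_in][W_in]
    weight: kernels as nested lists, shape [c2][c1 // g][h1][w1]
            (the g per-group kernel tensors stacked along the output channels)
    Returns (output of shape [c2][H_out][W_out], number of multiplications).
    """
    c1, c2 = len(x), len(weight)
    if c1 % g or c2 % g:
        raise ValueError("c1 and c2 must both be divisible by g")
    cin_g, cout_g = c1 // g, c2 // g
    if len(weight[0]) != cin_g:
        raise ValueError("each kernel must have c1 // g input channels")
    h1, w1 = len(weight[0][0]), len(weight[0][0][0])
    h_out, w_out = len(x[0]) - h1 + 1, len(x[0][0]) - w1 + 1

    out, mults = [], 0
    for k in range(g):
        # group k sees only input channels [k*cin_g, (k+1)*cin_g)
        xs = x[k * cin_g:(k + 1) * cin_g]
        # ... and produces only output channels [k*cout_g, (k+1)*cout_g)
        for oc in range(k * cout_g, (k + 1) * cout_g):
            plane = [[0.0] * w_out for _ in range(h_out)]
            for i in range(h_out):
                for j in range(w_out):
                    acc = 0.0
                    for ic in range(cin_g):
                        for u in range(h1):
                            for v in range(w1):
                                acc += xs[ic][i + u][j + v] * weight[oc][ic][u][v]
                                mults += 1
                    plane[i][j] = acc
            # appending group after group = concatenating along the channel axis
            out.append(plane)
    return out, mults


# c1 = 4 input channels of size 3x3; channel k is filled with the constant k + 1
x = [[[float(k + 1)] * 3 for _ in range(3)] for k in range(4)]
# c2 = 2 output channels, g = 2: each kernel is (c1/g = 2) x 2 x 2, all ones
w = [[[[1.0] * 2 for _ in range(2)] for _ in range(2)] for _ in range(2)]
y, n = group_conv2d(x, w, g=2)
print(y[0][0][0], y[1][0][0], n)  # 12.0 28.0 64
```

Output channel 0 only sums input channels 0 and 1 (4 * 1 + 4 * 2 = 12), output channel 1 only sums input channels 2 and 3 (4 * 3 + 4 * 4 = 28), and the 64 multiplications match $c_2 * H_{out} * W_{out} * c_1/g * h_1 * w_1 = 2 * 2 * 2 * 2 * 2 * 2$. The stacked `c2 × c1/g × h1 × w1` weight layout is also how PyTorch's `nn.Conv2d` stores its weight when `groups > 1`.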

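Thus, for an output feature map of the same size, group convolution needs only $1/g$ of the parameters and multiplications of a standard convolution, which makes it a more efficient way to convolve. The trade-off is that each output channel is computed only from the $c_1/g$ input channels of its own group.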
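The $1/g$ ratio can be checked numerically. This sketch uses a hypothetical helper `conv_cost` that applies the per-group formulas (with g = 1 it reduces to a standard convolution) and prints the ratios as exact fractions; the layer sizes are illustrative only.

```python
from fractions import Fraction


def conv_cost(c1, c2, h1, w1, h_out, w_out, g=1):
    """Params (no bias) and multiplications of a conv layer split into g groups.

    Hypothetical helper; g = 1 gives the standard convolution.
    """
    if c1 % g or c2 % g:
        raise ValueError("c1 and c2 must both be divisible by g")
    params = (c2 // g) * (c1 // g) * h1 * w1 * g
    mults = (c2 // g) * h_out * w_out * (c1 // g) * h1 * w1 * g
    return params, mults


base = conv_cost(64, 128, 3, 3, 56, 56)  # g = 1: standard convolution
for g in (2, 4, 8):
    grouped = conv_cost(64, 128, 3, 3, 56, 56, g=g)
    # exact ratios grouped / standard for parameters and multiplications
    print(g, Fraction(grouped[0], base[0]), Fraction(grouped[1], base[1]))
# 2 1/2 1/2
# 4 1/4 1/4
# 8 1/8 1/8
```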

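It also follows that both the number of input channels $c_1$ and the number of output channels $c_2$ must be divisible by the number of groups $g$.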
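Because g must divide both $c_1$ and $c_2$, the legal group counts are exactly the divisors of $\gcd(c_1, c_2)$. A minimal sketch, with `valid_group_counts` as a hypothetical helper name:

```python
from math import gcd


def valid_group_counts(c1, c2):
    """All g that divide both c1 and c2, i.e. the divisors of gcd(c1, c2)."""
    d = gcd(c1, c2)
    return [g for g in range(1, d + 1) if d % g == 0]


print(valid_group_counts(64, 96))  # [1, 2, 4, 8, 16, 32]
```

PyTorch's `nn.Conv2d` enforces the same constraint on its `groups` argument and raises a `ValueError` when `in_channels` or `out_channels` is not divisible by `groups`.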

