Agile BI, without unit testing you are using the "Max Power Way"

source link: https://www.christoolivier.com/agile-bi-without-unit-testing-you-are-using-the-max-power-way/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Agile BI, without unit testing you are using the "Max Power Way"

“Homer: From now on, there are three ways to do things: the right way, the wrong way, and the Max Power way. Bart: Isn't that just the wrong way? Homer: Yeah, but faster!”

Agile BI has been all the rage for a while now. It is one of those buzz words that everyone loves to throw around. I personally despise buzz words, mainly because they get latched on to by people who do not always have a clear understanding of the fundamentals they encapsulate.

These buzz words then get chucked around in meetings like a magic spell, one that will get a project delivered faster, almost like the "turbo" button you had on your 486 computer back in the day.

In the custom development world agile methodology has been around for a long time and unit testing forms the core of any custom dev project that is truly following an agile approach. It is therefore no wonder that you have a plethora of unit testing frameworks and tools available for almost every conceivable language and framework out there.

The sad fact is that when it comes to BI we have caught up with the buzz word of Agile but we are sorely under equipped to actually take advantage of the methodology due to our lack of unit testing tools.

This twilight zone in which we find ourselves lead to many projects heading down the "Max Power Way" in which we accelerate the development of the system but introduce more and more bugs and issues in the project due to the knock on effect that a change has as the project grows.

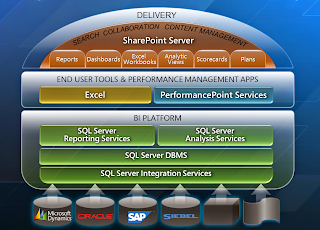

Let me give an example of what I mean. Below is the "BI Burger" slide from Microsoft showing the different layers in a Microsoft BI solution.

Now imagine that you are delivering a BI solution in which you are following agile methodology. You have completed the ETL, Data Warehouse, Cube and Reporting layers of the first sprint and you are about to kick off with sprint two.

The first task of the second sprint is to rename a measure in a fact table and change a couple of data types, one of which is to increase the size of a varchar attribute in a dimension table and the other is changing a measure from decimal to float in a fact table. All of these changes need to happen in the data warehouse.

Because everyone is hyped up on double strength doses of Agile the task is estimated to not take very long. However the impact of these changes on the solution is much bigger than the developers realise. While you can make the changes in a few minutes you have to keep in mind that you would need to change all the parts of the system that interact with the objects that you have changes. Lets take a look at these in more detail.

Potential changes in BI layers

- For the data type changes and column name change you will need to update the metadata of all the SSIS packages that interact with the tables in which this change took place.

- Run some tests to ensure that the changes do no cause truncation of data for the dimension table column that was updated.

- Test that any calculations performed on the measure in the fact table produce the correct results.

Data Warehouse

- Update any views that reference the renamed measure. ( If you are following best practice then this would mean the changes to the views in the data warehouse on top of which you build your cube)

- Update any stored procedures that reference the renamed measure. (Remember there can be quite a large amount of SSRS reports that could use stored procedures against the data warehouse.)

- Test all the calculations of any function, procedure or script that use the measure that had the data type change.

- You would need to update the actual attribute in the dimension which references the column with the increased varchar size.

- You will also need to update the measures to ensure that the name change is corrected in the measure group and that the measure now points to the correct column in the fact table or view.

- You will also need to update the data type of the measure which had it's data type changes in the data warehouse.

- You will need to update all calculated measures that reference the measure that had the name change.

- You will need to test the result of all calculated measures that use either of the two measures to which a change was made. This is to ensure that they still work correctly and that they produce the correct results.

Reporting Layer

- Update all PerformancePoint Server dashboards that reference the measure that had the name change.

- Update all SSRS reports that references the measure that had the name change.

- Update all PowerView reports that are referencing the measure that had the name change.

- Update all Excel Services reports that are referencing the measure that had the name change.

Unit Testing

As you can see from the above list of potential changes, and I am sure there are some that I might have left out, a change that could be seen as trivial by a business user has got a massive ripple effect throughout your BI solution.

The hardest part of making these changes are ensuring that you have not broken any other parts of your system, and that your figures are still coming out correct in the reporting layer for end user consumption. There are two ways to ensure this:

- Test every single area of the system manually to ensure everything functions as expected and that all values across the system are still being calculated correctly after the changes have been made.

- Make use of automated unit testing and kick off the entire batch of unit tests that were created during the development of the system to do the hard work for you.

Conclusion

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK