MPAM-Style cache partitioning with ATP-Engine and gem5

source link: https://community.arm.com/arm-community-blogs/b/architectures-and-processors-blog/posts/gem5-cache-partitioning


The Memory Partitioning and Monitoring (MPAM) Arm architecture supplement allows memory-system components (MPAM MSCs) to be partitioned using PARTID identifiers. Privileged software, such as OSes and hypervisors, can use this to partition caches, memory controllers and interconnects at the hardware level, so that bandwidth and latency controls can be defined and enforced for memory requestors.

To enable exploration of system designs that incorporate MPAM, three main tasks needed to be completed:

- MPAM style policies, implementing the algorithms described in the MPAM supplement, need to be implemented in a simulator.

- Native support for generating MPAM-tagged traffic and enforcing the partitioning policies needs to be added. To best represent implementations of MPAM, this support needs to come as functionality in CPUs and MPAM MSC.

- A synthetic traffic generator needs to be extended to support MPAM tagging. Making a synthetic traffic generator available is key, as it allows for reproducible experiments when testing the partitioning implementation.

The simulator chosen for this work is gem5, due to its widespread adoption and robust extensibility. The MPAM partitioning policies, as well as CPU and MSC support, were implemented as native simulator features. The selected traffic generator is the Arm ATP-Engine, an open-source implementation of the AMBA ATP specification (ARMIHI0082A) that is compatible with gem5 and capable of generating simulator-native traffic.

How do MPAM cache partitioning policies map to ATP-Engine and gem5?

To enable testing of system designs featuring cache-partitioning functionality, cache partitioning policies based on the MPAM architecture are introduced to gem5. These policies include the maximum capacity and cache portion partitioning policies:

- Maximum Capacity, or MaxCapacityPartitioningPolicy in gem5: this policy allows software to program a cache allocation amount for a PARTID as a percentage of the total cache blocks available. Once the PARTID reaches its allocated number of cache entries, cache blocks associated with that PARTID need to be evicted before new ones can be allocated. This policy implements a cache maximum-capacity partitioning control as defined in the MPAM architecture.

- Cache Portion Partitioning, or WayPartitioningPolicy in gem5: this policy allows a PARTID to be constrained to allocating only in specific cache ways. This is an implementation of the MPAM architecture cache-portion partitioning control.

To utilise these policies, a mechanism to store and access MPAM information bundles in packets needed to be implemented. To store this data, the PartitionFieldExtention Packet extension was created, storing PartitionID and PartitionMonitoringID values. These values, of type unsigned 64-bit integer, represent MPAM PARTID and PMG ID values. This approach allows for additional system design flexibility, as it makes it possible to have multiple gem5 requestors generate traffic under the same PartitionID. This is useful when simulating a system in which multiple CPUs and peripherals may belong to the same software job. Moreover, using a Packet extension to store PartitionID values improves future extensibility: new components wanting to make use of these fields or policies can simply use the extension in existing Packets.
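The idea of a per-packet bundle shared by several requestors can be illustrated with a small standalone model. This is only a sketch in plain Python, not gem5 code: `PartitionFields`, `Packet` and `partition_id_of` are hypothetical names invented for the illustration, and the fallback to PartitionID 0 for untagged packets mirrors the default mentioned later in this article.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_PARTITION_ID = 0  # untagged traffic is treated as PartitionID 0


@dataclass(frozen=True)
class PartitionFields:
    """Hypothetical stand-in for the data carried by the packet extension."""
    partition_id: int = 0
    partition_monitoring_id: int = 0


@dataclass
class Packet:
    """Toy packet holding only what this illustration needs."""
    requestor: str
    addr: int
    partition_fields: Optional[PartitionFields] = None


def partition_id_of(pkt: Packet) -> int:
    """Return the PartitionID of a packet, or the default if it is untagged."""
    if pkt.partition_fields is None:
        return DEFAULT_PARTITION_ID
    return pkt.partition_fields.partition_id


# A CPU and a peripheral belonging to the same software job share one bundle.
job = PartitionFields(partition_id=1, partition_monitoring_id=0)
packets = [
    Packet("cpu0", 0x1000, job),
    Packet("dma0", 0x2000, job),
    Packet("cpu1", 0x3000),  # untagged
]
ids = [partition_id_of(p) for p in packets]
print(ids)  # [1, 1, 0]
```

Because the bundle is attached to the packet rather than derived from the requestor, any component that sees the packet can read the same PartitionID.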

Support for tagging requests with PartitionIDs was added to ATP-Engine in its recent 3.2 release, allowing the implemented partitioning policies to be tested. An additional traffic generator configuration field named FlowID was added, which triggers the creation of a populated PartitionFieldExtention that is attached to outgoing packets. This release also includes under-the-hood optimisations in Packet metadata tagging, support for gem5 v22.0+ and compatibility with modern versions of libprotobuf and libabseil.

The policies are implemented as gem5 SimObjects and are passed as parameters to the new Arm-specific MpamMSC component in gem5. These new components store the configurations for the partitioning policies and handle the functionality caches need to filter blocks. This allows policy configurations to be reused between caches, and policies and allocations to be configured like every other part of the simulation. Note that MpamMSC components themselves should not be reused, because they hold internal counters for each of the policies.

The policy allocations are enforced by adding a step to the findVictim() and notifyRelease() functions in Cache Tags. The first filters the available victim blocks for a request based on the PartitionID allocation, and the second updates the policy when a cache block is released. Development was mainly targeted towards the BaseSetAssoc tag type, though the partitioning functionality was also implemented for CompressedTags and SectorTags.
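The filter-then-pick flow can be sketched in plain Python. This is a toy model under stated assumptions, not the gem5 implementation: all class and method names below are hypothetical, the "replacement policy" simply prefers a free block and otherwise takes the first candidate, and PartitionIDs not listed in a policy are assumed to be unconstrained. The occupancy counters live in the manager object, which illustrates why such an object cannot meaningfully be shared between two caches.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Block:
    way: int
    partition_id: Optional[int] = None  # None marks a free (invalid) block


class WayPolicy:
    """Stateless config: PartitionID -> ways it may allocate in."""

    def __init__(self, ways_by_partition: Dict[int, List[int]]):
        self.ways_by_partition = ways_by_partition

    def filter(self, partition_id, candidates, in_use):
        allowed = self.ways_by_partition.get(partition_id)
        if allowed is None:  # assumption: unlisted PartitionIDs are unconstrained
            return candidates
        return [b for b in candidates if b.way in allowed]


class MaxCapacityPolicy:
    """Stateless config: PartitionID -> maximum number of blocks."""

    def __init__(self, max_blocks: Dict[int, int]):
        self.max_blocks = max_blocks

    def filter(self, partition_id, candidates, in_use):
        limit = self.max_blocks.get(partition_id)
        if limit is None or in_use[partition_id] < limit:
            return candidates
        # At the limit, only this PartitionID's own blocks may be evicted.
        return [b for b in candidates if b.partition_id == partition_id]


class ToyPartitionManager:
    """Per-cache state: applies every policy and tracks block ownership."""

    def __init__(self, policies):
        self.policies = policies
        self.in_use = Counter()  # occupancy of ONE cache, hence not shareable

    def find_victim(self, partition_id, candidates):
        for policy in self.policies:
            candidates = policy.filter(partition_id, candidates, self.in_use)
        free = [b for b in candidates if b.partition_id is None]
        pool = free or candidates  # stand-in for a real replacement policy
        return pool[0] if pool else None

    def notify_release(self, partition_id):
        self.in_use[partition_id] -= 1

    def insert(self, cache_set, partition_id):
        victim = self.find_victim(partition_id, cache_set)
        if victim is None:
            return None
        if victim.partition_id is not None:
            self.notify_release(victim.partition_id)
        victim.partition_id = partition_id
        self.in_use[partition_id] += 1
        return victim.way


# PartitionID 0 may hold at most 2 blocks of a 4-way set.
manager = ToyPartitionManager([MaxCapacityPolicy({0: 2})])
cache_set = [Block(way) for way in range(4)]
ways_used = [manager.insert(cache_set, 0) for _ in range(3)]
print(ways_used)          # [0, 1, 0]
print(manager.in_use[0])  # 2
```

The third insertion has to evict one of PartitionID 0's own blocks even though two ways are still free, which is the behaviour the maximum-capacity control describes.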

Using gem5 MPAM-style cache partitioning policies with ATP-Engine

This section covers adding MPAM-style cache partitioning policies to existing gem5 system configurations. If you are looking to get started with gem5, go over to the Learning gem5 tutorial.

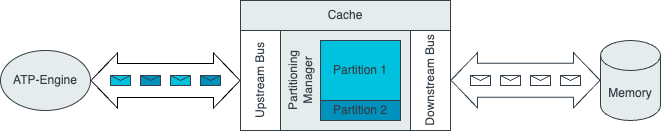

The following section assumes a simple gem5 configuration with ATP-Engine acting as a memory requestor, a SimpleMemory backing store and a cache sitting in between the 2. The process of adding cache partitioning policies to the initial configuration and configuring ATP-Engine to tag traffic with PartitionIDs is described. The final configuration looks like this:

Diagram showing final simulated system architecture

Configuring gem5 cache partitioning policies

Configuring cache partitioning policies in gem5 consists of 2 main steps: configuring the policies and adding them to the MpamMSC of the system cache where the allocations are enforced.

The first step is configuring the allocations, which varies based on the policy used:

- For MaxCapacityPartitioningPolicy the allocations are configured by passing 2 arrays to the SimObject. One holds the PartitionIDs to be considered and the second holds the allocation for each PartitionID. The allocations are expressed as fractions of the total cache capacity in the [0, 1] range. The complete configuration for the policy looks like this:

    policy = MaxCapacityPartitioningPolicy(
        partition_ids=[0, 1], capacities=[0.5, 0.5]
    )

- For WayPartitioningPolicy the allocations are configured by passing a list of WayPolicyAllocation objects. Each WayPolicyAllocation represents the cache ways that a PartitionID should use. To instantiate a WayPolicyAllocation object, a PartitionID and a valid list of allocated ways must be passed as follows:

    # PartitionID 0 may allocate in ways 0-3
    allocation1 = WayPolicyAllocation(
        partition_id=0,
        ways=[0, 1, 2, 3]
    )

    # PartitionID 1 may allocate in ways 4-7
    allocation2 = WayPolicyAllocation(
        partition_id=1,
        ways=[4, 5, 6, 7]
    )

After creating the allocations, the next step is to configure the WayPartitioningPolicy by passing the list of allocations like so:

    policy = WayPartitioningPolicy(
        allocations=[allocation1, allocation2]
    )

At this point the configured policies are supplied to the cache MpamMSC and used for partitioning:

    # One MpamMSC per cache; it may hold several policies
    part_manager = MpamMSC(
        partitioning_policies=[policy]
    )

    system.cache = NoncoherentCache(
        size="512KiB",
        assoc=8,
        partitioning_manager=part_manager,
        ...
    )

As shown above, a cache MpamMSC can use multiple policies at a time, which allows for more complex configurations, and instantiated policies can be reused across multiple MpamMSC components. Note that there is no support for reusing an MpamMSC between caches.

At this stage the cache uses the provided configuration to allocate cache blocks, but the incoming traffic is not yet tagged by ATP-Engine. Running the simulation as is results in the cache size appearing to be halved. This is because all the incoming traffic is assigned to the default PartitionID of 0, and each of the policies shown above allocates half the cache capacity to PartitionID 0. So, the next step is to configure the ATP-Engine traffic profiles to tag packets with PartitionIDs 0 and 1.
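The halving can be checked with a few lines of arithmetic. The sketch below is plain Python rather than a gem5 configuration. The cache size and associativity come from the configuration above, while the 64-byte line size is an assumption (it is not stated in the configuration), and truncating the fractional block count is likewise an assumption about rounding.

```python
CACHE_SIZE = 512 * 1024  # bytes, from size="512KiB"
ASSOC = 8                # from assoc=8
LINE_SIZE = 64           # bytes; ASSUMED, not given in the configuration

total_blocks = CACHE_SIZE // LINE_SIZE  # blocks in the whole cache
num_sets = total_blocks // ASSOC        # one block per way in each set


def max_capacity_blocks(fraction: float) -> int:
    """Blocks a PartitionID may hold under a capacity fraction (truncated)."""
    return int(fraction * total_blocks)


def way_blocks(ways: list) -> int:
    """Blocks reachable by a PartitionID restricted to the given ways."""
    return len(ways) * num_sets


budget_capacity = max_capacity_blocks(0.5)
budget_ways = way_blocks([0, 1, 2, 3])
print(total_blocks, num_sets)              # 8192 1024
print(budget_capacity, budget_ways)        # 4096 4096
print(budget_capacity * LINE_SIZE // 1024) # 256 (KiB usable by PartitionID 0)
```

Under these assumptions both policies leave PartitionID 0 with 4096 of the 8192 blocks, so untagged traffic sees an effective 256 KiB cache.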

Configuring ATP-Engine traffic profiles

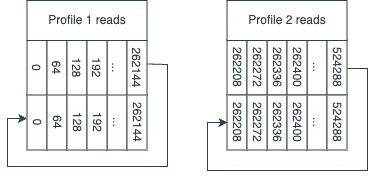

The ATP-Engine configuration requires two configured traffic profiles, as traffic profile can only be associated with one PartitionID at any time. Using 2 traffic profiles is also reflective of real-world scenarios in which requestors running in parallel cause system resource contention. The traffic profiles proposed for this simulation both perform 2 reads of 4096 consecutive memory blocks for a total of 8192 reads. The first read is done to warm-up the cache, and the second to test the contents. As each block of memory being read is 64 bits, the total memory read per requestor would be 256 KiB, or all together 512 KiB. To avoid any cache interference between the 2 traffic profiles, the beginning addresses of the profiles are offset by 256 KiB.

Diagram showing the reads performed by each of the profiles.

Configuring the profiles to behave as described requires the pattern directive to be configured as shown below:

    pattern {
        address {
            base: 0
        }
        stride {
            Stride: 64
            Xrange: "262144B"
        }
        size: 64
    }

    pattern {
        address {
            base: 262208
        }
        stride {
            Stride: 64
            Xrange: "262144B"
        }
        size: 64
    }
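The address streams that these two pattern blocks describe can be modelled in plain Python to check the totals quoted earlier. This is a sketch, not ATP-Engine code: it assumes that the address advances by Stride bytes and wraps back to base once Xrange bytes have been covered, that size is expressed in bytes, and that each profile issues exactly two passes. The function name is hypothetical.

```python
STRIDE = 64            # bytes, from "Stride: 64"
READ_SIZE = 64         # bytes, from "size: 64" (assumed to be bytes)
RANGE_BYTES = 262144   # from Xrange: "262144B"
PASSES = 2             # warm-up pass plus test pass


def profile_addresses(base: int) -> list:
    """Addresses read by one profile: PASSES sweeps over the strided range."""
    reads_per_pass = RANGE_BYTES // STRIDE
    one_pass = [base + i * STRIDE for i in range(reads_per_pass)]
    return one_pass * PASSES


addrs0 = profile_addresses(0)
addrs1 = profile_addresses(262208)

total_reads = len(addrs0)
footprint_kib = len(set(addrs0)) * READ_SIZE // 1024
# The last byte read by profile 0 lies below the first byte read by profile 1.
no_overlap = max(addrs0) + READ_SIZE <= min(addrs1)

print(total_reads)    # 8192
print(footprint_kib)  # 256
print(no_overlap)     # True
```

Each profile therefore touches 4096 distinct blocks twice, and the two footprints do not share any address.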

After that, the traffic profiles are configured to tag outgoing packets with PartitionIDs by adding the flow_id attribute to the profile configuration as follows:

    profile {
        ...
        flow_id: 2
        ...
    }

Experiment results

To test the cache partitioning policies, simulations using the Way and Max Capacity partitioning policies are run several times with different partition configurations. The number of reported cache misses from each simulation is examined to evaluate the effect of the policies. As the cache is exactly large enough to hold the data read by both profiles, a 50/50 split of the cache should have no effect on the cache miss ratio.

The Max Capacity partitioning policy is tested first, with the simulation being run 5 times with 5 different partitioning allocations. The experiments included a 50/50 split, 40/60, 30/70, 20/80 and 10/90. The allocations below 50% show increasing numbers of cache misses with each decrease in capacity, while the misses for allocations at or above 50% remain constant at 4096. This is because the allocation for the requestor with PartitionID 1 exceeds what that requestor requires. The full results are available in Table 1:

| Cache allocation, PartitionID 0 | Cache allocation, PartitionID 1 | Misses, PartitionID 0 | Misses, PartitionID 1 |
| --- | --- | --- | --- |
| 0.5 | 0.5 | 4096 | 4096 |
| 0.4 | 0.6 | 4916 | 4096 |
| 0.3 | 0.7 | 5735 | 4096 |
| 0.2 | 0.8 | 6964 | 4096 |
| 0.1 | 0.9 | 8192 | 4096 |

Table 1: cache misses per PartitionID when using varying allocations with the Max Capacity Partitioning policy.

Equivalent experiments are then run for the Way partitioning policy, with the 2 requestors initially being allocated 4 cache ways each (out of the total of 8). The allocations are then changed to 3/5, 2/6 and 1/7 splits. Again, the equal allocation results in both requestors experiencing the baseline cache miss count of 4096, with the lower allocations increasing the number of misses. As in the previous experiment, increasing the allocated cache way count above 4 did not affect the number of cache misses. The full results of the experiment are available in Table 2:

| Ways allocated, PartitionID 0 | Ways allocated, PartitionID 1 | Misses, PartitionID 0 | Misses, PartitionID 1 |
| --- | --- | --- | --- |
| 4 | 4 | 4096 | 4096 |
| 3 | 5 | 5120 | 4096 |
| 2 | 6 | 6144 | 4096 |
| 1 | 7 | 8192 | 4096 |

Table 2: cache misses per PartitionID when using varying allocations with the Way Partitioning policy.
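The miss counts in Tables 1 and 2 can be converted into miss ratios with a short calculation, since each profile issues 8192 reads. The sketch below is plain Python over the numbers reported for PartitionID 0. The split into warm-up and second-pass misses assumes that all 4096 warm-up reads miss, which is an interpretation of the 4096 baseline rather than something the results state directly.

```python
READS_PER_PROFILE = 8192  # two passes over 4096 blocks
WARM_UP_READS = 4096      # first pass

# Misses reported for PartitionID 0, keyed by its allocation.
max_capacity_misses = {0.5: 4096, 0.4: 4916, 0.3: 5735, 0.2: 6964, 0.1: 8192}
way_misses = {4: 4096, 3: 5120, 2: 6144, 1: 8192}


def summarise(misses: int):
    """Return (miss ratio as text, misses attributed to the second pass)."""
    ratio = misses / READS_PER_PROFILE
    second_pass = misses - WARM_UP_READS  # assumes every warm-up read misses
    return f"{ratio:.3f}", second_pass


capacity_summary = {a: summarise(m) for a, m in max_capacity_misses.items()}
way_summary = {w: summarise(m) for w, m in way_misses.items()}

for allocation, (ratio, second_pass) in capacity_summary.items():
    print(f"capacity {allocation}: miss ratio {ratio}, second pass {second_pass}")
for ways, (ratio, second_pass) in way_summary.items():
    print(f"ways {ways}: miss ratio {ratio}, second pass {second_pass}")
```

Read this way, the baseline of 4096 misses corresponds to a miss ratio of 0.5 with no misses in the second pass, and the smallest allocations in both tables reach a miss ratio of 1.0.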

Conclusions and next steps

The results of the partitioning policy tests show that the cache partitioning mechanism implemented in gem5 functions as intended. It allows processes to function in isolation, especially in scenarios in which system resource contention is present. The implemented Max Capacity and Way partitioning policies reflect the MPAM specification and allow for design exploration in the area. The MPAM team is looking forward to systems designed using these new features.
