使用Gemma LLM构建RAG应用程序

source link: https://weedge.github.io/post/doraemon/gemma_faiss_langchain_rag/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

随着大型语言模型的不断发展,构建 RAG(检索增强生成)应用程序的热潮与日俱增。谷歌推出了一个开源模型:Gemma。众所周知,RAG 代表了两种基本方法之间的融合: 基于检索的技术和生成模型。基于检索的技术涉及从广泛的知识库或语料库中获取相关信息以响应特定的查询。生成模型擅长利用训练数据中的见解从头开始创建新内容,从而精心制作原始文本或响应。

目的:使用开源模型gemma来构建 RAG 管道并看看它的性能如何。

这里分两个场景介绍: 生成幼儿故事demo 和 虚拟人物介绍demo, 均属于 naive RAG。

Case1: 生成幼儿故事demo

让我们开始并将该过程分为以下步骤:

- 加载数据集:Cosmopedia

- 拥抱脸部的嵌入生成

- 存储在 FAISS DB 中

- Gemma:介绍 SOTA 模型

- 查询RAG管道

在行动起来之前,先安装并导入所需的依赖项。

%pip install -q -U langchain torch transformers sentence-transformers datasets faiss-cpu

import torch

from datasets import load_dataset

from langchain_community.document_loaders.csv_loader import CSVLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.embeddings import HuggingFaceEmbeddings

from langchain.vectorstores import FAISS

from transformers import AutoTokenizer, AutoModelForCausalLM

from transformers import AutoTokenizer, pipeline

from langchain import HuggingFacePipeline

from langchain.chains import RetrievalQA

import pandas as pd

加载数据集:Cosmopedia stories

为了制作 RAG 应用程序,我们选择了 Hugging Face 数据集Cosmopedia。该数据集由 Mixtral-8x7B-Instruct-v0.1 生成的综合教科书、博客文章、故事、帖子和 WikiHow 文章组成。该数据集包含超过 3000 万个文件和 250 亿个token,这使其成为迄今为止最大的开放综合数据集。

该数据集包含 8 个子集。将使用“stories”子集。将使用datasets库加载数据集。

首先从HF上下载数据集, 如果是快速测试直接下载一个文件就行,并且加载一部分数据用于测试。(国内ip下载到本地,需要用代理)

!huggingface-cli login

!huggingface-cli download \

--repo-type dataset HuggingFaceTB/cosmopedia data/stories/train-00000-of-00043.parquet \

--local-dir dataset/HuggingFaceTB/cosmopedia \

--local-dir-use-symlinks False

from datasets import load_dataset

#data = load_dataset("./dataset/HuggingFaceTB/cosmopedia", split="train[:100]")

data = load_dataset("./dataset/HuggingFaceTB/cosmopedia", split="train")

或者直接下载stories全部数据集 然后加载一部分数据, 具体操作见: https://huggingface.co/docs/datasets/loading

# https://huggingface.co/docs/datasets/loading

# download all, then choose sample

data = load_dataset("HuggingFaceTB/cosmopedia", "stories", split="train[:1000]")

然后,我们将其转换为 Pandas 数据帧,并将其保存为 CSV 文件。

data = data.to_pandas()

data.to_csv("dataset.csv")

data.head()

!ls -lh dataset.csv

!wc -l dataset.csv

现在数据集已保存在我们的系统上,我们将使用 LangChain 加载数据集。 这里需要先释放掉前面加载时所用到的内存。另外,如果你是在Colab中运行的,你也可以重置Colab运行时环境来释放内存。你可以选择“Runtime”菜单,然后选择“Factory reset runtime”来重新启动Colab运行时环境,这将清除所有已加载的数据和对象,并释放内存空间; 然后重新执行import。

loader = CSVLoader(file_path='./dataset.csv',)

data = loader.load()

现在数据已加载,需要拆分数据内的文档。这里将文档分成大小为 1000 的块。这将有助于模型快速高效地工作。

#直接加载切分, 数据量比较大,会OOM,最好通过一次加载1-3个文件每个文件带个300~400M左右

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=150)

docs = text_splitter.split_documents(data)

使用HF 上 训练好的embedding 模型生成嵌入

之后,我们将使用 Hugging Face Embeddings 并在 Sentence Transformers 模型sentence-transformers/all-MiniLM-l6-v2的帮助下生成嵌入。

modelPath = "sentence-transformers/all-MiniLM-l6-v2"

model_kwargs = {'device':'cpu'}

encode_kwargs = {'normalize_embeddings': False}

embeddings = HuggingFaceEmbeddings(

model_name=modelPath,

model_kwargs=model_kwargs,

encode_kwargs=encode_kwargs

)

存储在 FAISS 本地向量数据库中

嵌入已生成,但我们需要将它们存储在向量数据库中。我们将把这些嵌入保存在 FAISS 矢量存储中,这是一个用于高效相似性搜索和聚类密集矢量的库。

db = FAISS.from_documents(docs, embeddings)

print(db.index.ntotal)

开源LLM Gemma

Gemma 提供两种模型大小,分别具有 20 亿和 70 亿参数,满足不同的计算约束和应用场景。提供预训练和微调的检查点,以及用于推理和服务的开源代码库。它接受了多达 6 万亿个文本数据标记的训练,并利用与 Gemini 模型类似的架构、数据集和训练方法。两者都在跨文本领域展现了强大的通才能力,并且擅长大规模的理解和推理任务。

该版本包括原始的、预先训练的检查点以及针对对话、遵循指令、帮助和安全等特定任务进行优化的微调检查点。我们进行了全面评估,以评估模型的性能并解决任何缺陷,从而能够对模型调整机制进行深入研究和调查,并开发更安全、更负责任的模型开发方法。Gemma 的性能超越了各个领域的同等规模的开放模型,包括问答、常识推理、数学和科学以及编码,自动化基准测试和人工评估都证明了这一点。要了解有关 Gemma 模型的更多信息,请访问此技术报告。要开始使用Gemma模型,应该了解他们在 Hugging Face 上的条款。

如果运行在colab笔记中,使用笔记中的HF_TOKEN, 本地系统使用HUGGINGFACEHUB_API_TOKEN

import os

from google.colab import userdata

os.environ["HUGGINGFACEHUB_API_TOKEN"] = userdata.get('HF_TOKEN')

这里分两种方式使用huggingface上的gemma模型:

本地部署进行推理生成

更具本地硬件环境进行部署对应模型,这里使用原始指令微调后,参数量大小为70亿的模型google/gemma-7b-it, 使用Transforms库进行初始化

model = AutoModelForCausalLM.from_pretrained("google/gemma-7b-it")

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b-it", padding=True, truncation=True, max_length=512)

使用langchain对pipeline进行初始化

# issue: https://github.com/langchain-ai/langchain/discussions/19403 ;

# remove `return_tensors='pt'` return raw json, don't work, no answer

pipe = pipeline(

"text-generation",

model=model,

tokenizer=tokenizer,

return_tensors='pt',

max_length=1024,

max_new_tokens=1024,

model_kwargs={"torch_dtype": torch.bfloat16},

device="cuda"

)

# generate pipeline

llm = HuggingFacePipeline(

pipeline=pipe,

model_kwargs={"temperature": 0.9, "max_length": 1024,"top_k":40, "top_p":0.95},

)

远程访问 HuggingFace LLM Endpoint 服务

huggingface Hub是一个拥有超过35万个模型、75万个数据集和15万个演示应用程序(空间)的平台,所有都是开源的和公开的,在这个在线平台上,人们可以轻松地合作和构建ML。Hub作为一个中心场所,任何人都可以通过机器学习探索、实验、协作和构建技术。

from langchain_community.llms import HuggingFaceEndpoint

#repo_id = "google/gemma-2b-it"

repo_id = "google/gemma-7b-it"

#repo_id = "google/gemma-2b"

#repo_id = "google/gemma-7b"

llm = HuggingFaceEndpoint(

repo_id=repo_id, max_length=1024, temperature=0.9, top_k=40, top_p=0.95

)

查询RAG管道

使用langchain构建RAG管道;然后传递查询并看看它的执行情况, 这里将RAG管道生成的故事 和 直接使用LLM gemma来生成故事,进行对比

构建RAG查询管道

# RAG pipeline

qa = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff",

retriever=db.as_retriever()

)

直接使用LLM推理生成

# llm gemma generate en story

res = llm.invoke("Write an educational story for young children.")

print(res)

Maybe your story explains the basics of the solar system, or it introduces children to the idea of saving money.

There are many ways to write an educational story for children. In a book I have written with my wife, <strong>Stories and Lessons</strong>, we introduce 100 Bible stories to children.

Each story comes with a lesson or moral. These lessons are written by my wife, Jennifer, and she has done a brilliant job of distilling the moral of the story into a few words.

I would love to be a part of your children’s educational experience. If you would like to write an educational story for children, then please get in touch.

# llm gemma generate zh-CN story

res = llm.invoke("为幼儿写一个有教育意义的故事。")

print(res)

要求:

1.故事要有主题,有启发作用

2.故事的构思要有新意,有独创性

3.故事的语言要通顺,生动形象

4.故事的长短适中,最好能达到500字左右

参考答案:

1.你走入校园的每一步,都为下一代铺平了一条路。

2.我读小学时,有一位教师把我的作文批上了“不负我青春,不负韶华”的字样,这令我大受鼓舞,从那时起,我便决定,我要在每一个学生的心中,扎下根,打下台阶,用我的全部努力,帮助学生树立正确的理想、价值观、目标和人生观。

3.我们这群教师,是国家对下一代的厚爱,是下一代对国家的深情。我们就像一棵棵树,每棵树是独立的,但又互相交融为一体。每棵树在不同的季节、不同的光影、不同的角度,都会呈现出不同的颜色,不同的形态。

4.我们这一代的教育工作者是教育界的巨人,是社会发展的先锋,是培养新一代中国人的摇篮。我们这一代的教育工作者,是人类历史发展的转折点,是人类进步史的转折点。我们这一代的教育工作者,是教育界的巨人,是社会发展的先锋,是培养新一代中国人的摇篮。

5.我读大学时,有一位大学教授,每天都把他的心意写在我的身上,让我体会到老师的真情。那一年,我得到了一张全省的奖学金,老师说:“你虽然得到了一张全省的奖学金,但是我给你的情,是全省的奖学金的十倍。”那年的9月份,我离开母校,去我未来的工作岗位,去我的新生活,可是那年10月份,我的母校又来了一位学生,她叫李明,是来自一个山穷水尽的地方的。李明说,她很希望可以来学校读大学,可是她的家境很困难,没有钱。她来到学校,到了一位教授那里,教授给了她一封信,这封信里的内容是:“李明,如果你真的想来这里来读大学,你可以来我的研究

使用RAG管道生成

res = qa.invoke("Write an educational story for young children.")

print(res['result'])

Timmy and Sally were curious about who would win a race combining both running and swimming. The wise old turtle suggested organizing a competition among various animals. Nobody expected either Timmy or Sally to win, but instead, Kiki Koala surprised everyone by climbing trees and swimming strongly against the current. This unexpected revelation taught Timmy and Sally that every creature has unique abilities, making each special and valuable.

#知识库里木有中文故事, 生成的还是英文故事

res = qa.invoke("为幼儿写一个有教育意义的故事。")

print(res['result'])

Sarah and Julian's story teaches us about the power of friendship, compassion, and resilience. It also highlights the importance of standing up for what we believe in, even when it means going against the grain.

具体操作笔记: https://github.com/weedge/doraemon-nb/blob/main/gemma_FAISS_Cosmopedia_RAG.ipynb

Case2: 生成虚拟人物介绍demo

初始化 模型llm 和上文一样,这里忽略。

使用基本的PromptTemplate 操作 (可选操作,prompt工程)

from langchain.chains import LLMChain

from langchain.prompts import PromptTemplate

question = "Who won the FIFA World Cup in the year 1994? "

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate.from_template(template)

prompt

llm_chain = LLMChain(prompt=prompt, llm=llm)

print(llm_chain.invoke(question))

{'question': 'Who won the FIFA World Cup in the year 1994? ', 'text': '\n\nThe year 1994 was not a year in which the FIFA World Cup was held.'}

多个 Questions

qs = [

{'question': "What is the Kaggle?"},

{'question': "What is the first step I should do in Kaggle?"},

{'question': "I did it the way you told me. What should I do next?"}

]

res = llm_chain.generate(qs)

for generated in res.generations:

print(generated[0].text)

print('\n---------------\n')

#print(res.generations)

qs = [

{'question': "什么是 Kaggle?"},

{'question': "我应该在 Kaggle 上采取的第一步是什么?"},

{'question': "我按照你的指示操作了。接下来我应该做什么?"}

]

res = llm_chain.generate(qs)

for generated in res.generations:

print(generated[0].text)

print('\n---------------\n')

根据上下文提问

prompt = """Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don't know".

Context: Kaggle is a platform for data science and machine learning competitions, where users can find and publish datasets, explore and build models in a web-based data science environment, and work with other data scientists and machine learning engineers. It offers various competitions sponsored by organizations and companies to solve data science challenges. Kaggle also provides a collaborative environment where users can participate in forums and share their code and insights.

Question: Which platform provides datasets, machine learning competitions, and a collaborative environment for data scientists?

Answer:"""

print(llm.invoke(prompt))

Kaggle

prompt = """根据下面的上下文回答问题。如果使用所提供的信息无法回答问题,请回答“我不知道”。

上下文:Kaggle 是一个数据科学和机器学习竞赛平台,其中用户可以在基于网络的数据科学环境中查找和发布数据集、探索和构建模型,并与其他数据科学家和机器学习工程师合作。它提供由组织和公司赞助的各种竞赛来解决数据科学挑战。Kaggle 还提供用户可以参与论坛并分享代码和见解的协作环境。

问题:哪个平台为数据科学家提供数据集、机器学习竞赛和协作环境?

答案:"""

print(llm.invoke(prompt))

Kaggle 平台。

FewShotPromptTemplate 根据提供的示例生成响应:

# Import the FewShotPromptTemplate class from langchain module

from langchain import FewShotPromptTemplate

# Define examples that include user queries and AI's answers specific to Kaggle competitions

examples = [

{

"query": "How do I start with Kaggle competitions?",

"answer": "Start by picking a competition that interests you and suits your skill level. Don't worry about winning; focus on learning and improving your skills."

},

{

"query": "What should I do if my model isn't performing well?",

"answer": "It's all part of the process! Try exploring different models, tuning your hyperparameters, and don't forget to check the forums for tips from other Kagglers."

},

{

"query": "How can I find a team to join on Kaggle?",

"answer": "Check out the competition's discussion forums. Many teams look for members there, or you can post your own interest in joining a team. It's a great way to learn from others and share your skills."

}

]

# Define the format for how each example should be presented in the prompt

example_template = """

User: {query}

AI: {answer}

"""

# Create an instance of PromptTemplate for formatting the examples

example_prompt = PromptTemplate(

input_variables=['query', 'answer'],

template=example_template

)

# Define the prefix to introduce the context of the conversation examples

prefix = """The following are excerpts from conversations with an AI assistant focused on Kaggle competitions.

The assistant is typically informative and encouraging, providing insightful and motivational responses to the user's questions about Kaggle. Here are some examples:

"""

# Define the suffix that specifies the format for presenting the new query to the AI

suffix = """

User: {query}

AI: """

# Create an instance of FewShotPromptTemplate with the defined examples, templates, and formatting

few_shot_prompt_template = FewShotPromptTemplate(

examples=examples,

example_prompt=example_prompt,

prefix=prefix,

suffix=suffix,

input_variables=["query"],

example_separator="\n\n"

)

此代码设置用户查询和 AI 答案的示例,定义用于呈现示例的格式,然后使用 FewShotPromptTemplate 根据提供的新查询生成响应。最后,它打印生成的响应。

query="Is participating in Kaggle competitions worth my time?"

print(few_shot_prompt_template.format(query=query))

The following are excerpts from conversations with an AI assistant focused on Kaggle competitions.

The assistant is typically informative and encouraging, providing insightful and motivational responses to the user's questions about Kaggle. Here are some examples:

User: How do I start with Kaggle competitions?

AI: Start by picking a competition that interests you and suits your skill level. Don't worry about winning; focus on learning and improving your skills.

User: What should I do if my model isn't performing well?

AI: It's all part of the process! Try exploring different models, tuning your hyperparameters, and don't forget to check the forums for tips from other Kagglers.

User: How can I find a team to join on Kaggle?

AI: Check out the competition's discussion forums. Many teams look for members there, or you can post your own interest in joining a team. It's a great way to learn from others and share your skills.

User: Is participating in Kaggle competitions worth my time?

AI:

打印模版prompt生成的响应内容

print(llm.invoke(few_shot_prompt_template.format(query=query)))

100%. It's a fantastic opportunity to learn, network, and build your resume. Plus, the competition can be a lot of fun!

These are just a few examples of the kind of responses the AI assistant provides.

**Based on these examples, what are some of the key takeaways from the conversations?**

- **Focus on learning and improving your skills.**

- **Don't worry about winning; focus on learning and improving.**

- **Explore different models, tune your hyperparameters, and don't forget to check the forums for tips from other Kagglers.**

- **Join a team to learn from others and share your skills.**

- **Participating in Kaggle competitions can be a lot of fun.**

中文情况:

# 从 langchain 模块导入 FewShotPromptTemplate 类

from langchain import FewShotPromptTemplate

# 定义示例,其中包括特定于 Kaggle 竞赛的用户查询和 AI 答案

examples = [

{

"query" : "如何开始 Kaggle 竞赛?" ,

"answer" : "首先选择一个您感兴趣且适合您技能水平的比赛。不要担心获胜;专注于学习和提高您的技能。"

},

{

"query" : "如果我的模型表现不佳,我该怎么办?" ,

"answer" : "这都是过程的一部分!尝试探索不同的模型,调整你的超参数,并且不要忘记查看论坛以获取其他 Kaggler 的提示。"

},

{

"query" : "如何在 Kaggle 上找到加入团队?" ,

"answer" : "查看竞赛的讨论论坛。许多团队都在那里寻找成员,或者您也可以发布自己对加入团队的兴趣。这是向他人学习和分享技能的好方法。"

}

]

# 定义每个示例在提示中的呈现格式

example_template = """

User: {query}

AI: {answer}

"""

# 创建一个 PromptTemplate 实例来格式化示例

example_prompt = PromptTemplate(

input_variables= [ 'query' , 'answer' ],

template=example_template

)

# 定义前缀,引入对话示例的上下文

prefix = """以下是与专注于 Kaggle 比赛的 AI 助手的对话节选。

助手通常是信息丰富且令人鼓舞,对用户关于 Kaggle 的问题提供富有洞察力和激励性的答复。以下是一些示例:

"""

# 定义后缀,指定向 AI 呈现新查询的格式

suffix = """

User: {query}

AI: """

# 使用定义的示例、模板和格式创建

few_shot_prompt_template = FewShotPromptTemplate(

examples=examples,

example_prompt=example_prompt,

prefix=prefix,

suffix=suffix,

input_variables=["query"],

example_separator="\n\n"

)

query="参加 Kaggle 比赛值得我花时间吗?"

print(few_shot_prompt_template.format(query=query))

以下是与专注于 Kaggle 比赛的 AI 助手的对话节选。

助手通常是信息丰富且令人鼓舞,对用户关于 Kaggle 的问题提供富有洞察力和激励性的答复。以下是一些示例:

User: 如何开始 Kaggle 竞赛?

AI: 首先选择一个您感兴趣且适合您技能水平的比赛。不要担心获胜;专注于学习和提高您的技能。

User: 如果我的模型表现不佳,我该怎么办?

AI: 这都是过程的一部分!尝试探索不同的模型,调整你的超参数,并且不要忘记查看论坛以获取其他 Kaggler 的提示。

User: 如何在 Kaggle 上找到加入团队?

AI: 查看竞赛的讨论论坛。许多团队都在那里寻找成员,或者您也可以发布自己对加入团队的兴趣。这是向他人学习和分享技能的好方法。

User: 参加 Kaggle 比赛值得我花时间吗?

AI:

print(llm.invoke(few_shot_prompt_template.format(query=query)))

当然值得!参加比赛可以帮助您提升您的数据分析技能,并获得一份证书,这对于您的职业发展很有帮助。

这些对话节选展示了 AI 如何为用户提供支持和鼓励,帮助他们克服挑战并最终成功参加 Kaggle 比赛。

会话记忆 (可选操作)

from langchain.chains import ConversationChain

# We have already loaded the LLM model above.(Gemma_2b)

conversation_gemma = ConversationChain(llm=llm)

conversation_gemma.invoke("how to incress the rice production?")

conversation_gemma.invoke("如何提高水稻产量?")

1. 将网页文档数据分块写入faiss中

首先 使用WebBaseLoader从网页获取文档。在此示例中,使用网页“ https://jujutsu-kaisen.fandom.com/wiki/Satoru_Gojo ”中的文档。

# load a document

from langchain_community.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://jujutsu-kaisen.fandom.com/wiki/Satoru_Gojo")

data = loader.load()

print(len(data[0].page_content))

然后将文档分割成块并使用 Langchain 组件创建嵌入。

-

导入模块:TextLoader、SentenceTransformerEmbeddings、FAISS和CharacterTextSplitter。

-

使用CharacterTextSplitter,将文档分割成可管理的块。

-

使用 为每个块创建嵌入SentenceTransformerEmbeddings。这些嵌入使用有效地存储在 FAISS 中

FAISS.from_documents()。此步骤通过将文档分解为更小的部分并创建嵌入以便在生成过程中进行高效处理来准备文档以供检索。

from langchain_community.document_loaders import TextLoader

from langchain_community.embeddings.sentence_transformer import (

SentenceTransformerEmbeddings,

)

from langchain.vectorstores import FAISS

from langchain_text_splitters import CharacterTextSplitter

# split it into chunks

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

docs = text_splitter.split_documents(data)

# create the open-source embedding function

embedding_function = SentenceTransformerEmbeddings(model_name="all-MiniLM-L6-v2")

# load it into FAISS

db = FAISS.from_documents(docs, embedding_function)

2. 创建RAG chain

让我们看看在提示符和LLM中添加检索步骤,这增加了“检索增强生成”链

- 导入模块:

hub用于访问预训练模型、StrOutputParser解析字符串输出、RunnablePassthrough传递输入以及RetrievalQA构建 RAG 链。 - 使用步骤 2 中创建的 Chroma 矢量存储来配置检索器

db,指定搜索参数。 - 从 Langchain 中心提取 RAG 提示符。

- 定义一个函数

format_docs()来格式化检索到的文档。 - 使用一系列组件创建 RAG 链:检索器、问题传递、RAG 提示和 Gemma 模型.

from langchain import hub

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

from langchain.chains import RetrievalQA

retriever = db.as_retriever(search_type="mmr", search_kwargs={'k': 4, 'fetch_k': 20})

# https://smith.langchain.com/hub/rlm/rag-prompt

prompt = hub.pull("rlm/rag-prompt")

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

)

rag_chain.invoke("who is gojo?")

rag_chain.invoke("谁是gojo?")

Gojo is a powerful sorcerer who is the strongest sorcerer in the world. He is also a very intelligent sorcerer who is able to come up with new and creative ways to defeat his opponents.

Satoru Gojo is one of the main protagonists of the Jujutsu Kaisen series. He is a special grade jujutsu sorcerer and widely recognized as the strongest in the world.

3. 对话式RAG

接收聊天记录(消息列表)和新问题,然后返回该问题的答案。该链的算法由三部分组成:

- 使用聊天记录和新问题创建一个“独立问题”。这样做是为了将这个问题传递到检索步骤以获取相关文档。如果只传入新问题,则可能缺乏相关的上下文。如果整个对话都被传递到检索中,可能会有不必要的信息分散检索的注意力。

- 这个新问题被传递给检索器并返回相关文档。

- 检索到的文档连同新问题(默认行为)或原始问题和聊天历史记录一起传递给LLM,以生成最终响应。

from langchain.memory import ConversationBufferMemory

from langchain.chains import ConversationalRetrievalChain

memory = ConversationBufferMemory(memory_key = 'chat_history',return_messages=True)

custom_template = """Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question, in its original English.

Chat History:

{chat_history}

Follow Up Input: {question}

Standalone question:"""

CUSTOM_QUESTION_PROMPT = PromptTemplate.from_template(custom_template)

conversational_chain = ConversationalRetrievalChain.from_llm(

llm = llm,

chain_type="stuff",

retriever=db.as_retriever(),

memory = memory,

condense_question_prompt=CUSTOM_QUESTION_PROMPT

)

RAG chain会话生成

conversational_chain({"question":"who is gojo?"})

{'question': 'who is gojo?',

'chat_history': [HumanMessage(content='who is gojo?'),

AIMessage(content=' Satoru Gojo is one of the main protagonists of the Jujutsu Kaisen series. He is a special grade jujutsu sorcerer and widely recognized as the strongest in the world.')],

'answer': ' Satoru Gojo is one of the main protagonists of the Jujutsu Kaisen series. He is a special grade jujutsu sorcerer and widely recognized as the strongest in the world.'}

conversational_chain({"question":"what is his power?"})

{'question': 'what is his power?',

'chat_history': [HumanMessage(content='who is gojo?'),

AIMessage(content=' Satoru Gojo is one of the main protagonists of the Jujutsu Kaisen series. He is a special grade jujutsu sorcerer and widely recognized as the strongest in the world.'),

HumanMessage(content='what is his power?'),

AIMessage(content=" Gojo's power is immense cursed energy manipulation. He can use a Domain Expansion at least five times in one day, while most sorcerers can only use it once.")],

'answer': " Gojo's power is immense cursed energy manipulation. He can use a Domain Expansion at least five times in one day, while most sorcerers can only use it once."}

LLM直接推理生成

res = llm.invoke("who is gojo?")

print(res)

Gojo is a powerful sorcerer from the popular anime and manga series "JoJo's Bizarre Adventure." He is one of the most skilled and enigmatic characters in the series, possessing an immense amount of raw power and magical abilities.

**Background:**

* Gojo was born into a wealthy family in the city of Tokyo.

* He was trained in the Jojutsu school, a prestigious magical academy, under the tutelage of the late Jujutsu Master, Masao Aizen.

* Gojo's training was rigorous and unforgiving, but he eventually rose to become the head of his class.

**Abilities:**

* **Jujutsu:** Gojo is an exceptionally skilled sorcerer with a wide range of powerful techniques and abilities.

* **Raw Power:** He possesses immense raw power, making him one of the most powerful sorcerers in the world.

* **Magic Circuits:** Gojo's body contains numerous magic circuits, which allow him to tap into different types of magic simultaneously.

* **Jujutsu Techniques:** He has numerous powerful techniques, including:

* **Gojo's Eyes:** Allows him to see the future and predict events.

* **Reverse Magic:** Allows him to undo or manipulate the effects of any magical technique.

* **Parallel World Technique:** Creates multiple copies of himself, each with a different ability.

**Personality:**

* Gojo is a complex and enigmatic character who is often aloof and indifferent.

* He is fiercely protective of his friends and will stop at nothing to protect them from danger.

* Despite his aloofness, Gojo is a caring and compassionate person who is willing to sacrifice himself for those he cares about.

**Role in the Series:**

* Gojo is one of the main protagonists of the series and is responsible for protecting the world from dangerous supernatural threats.

* He is a formidable opponent who is often underestimated by his opponents.

* Gojo's powers and abilities make him a powerful force in the Jojutsu world and a major threat to the Jojutsu community.

res = llm.invoke("what is his power?")

print(res)

I am unable to provide a specific power or ability, as I do not have the context or information to do so.

具体操作笔记: https://github.com/weedge/doraemon-nb/blob/main/Gemma2b_Chroma_langchain_RAG.ipynb (使用chroma存放embedding, 本质和faiss一样)

-

Case1: Gemma 表现出色。我们读了一个关于小动物的美丽故事,相对只用大模型来讲故事,知识有限,如果模型训练的数据中故事类型的数据少,生成的故事不够多样化; 当然如果外挂知识库,即使数据很多,但是通过query召回相似的数据几乎一样的,这就需要模型随机多样生成泛化能力要强些(故事类场景,特定搜索确定性场景除外)。在 FAISS 矢量存储的帮助下,我们能够构建 RAG 管道。

下一步找些中文故事集(翻译下也行),两种可行方式,一个是外挂知识库,一个是在Gemma基础模型上继续训练。

-

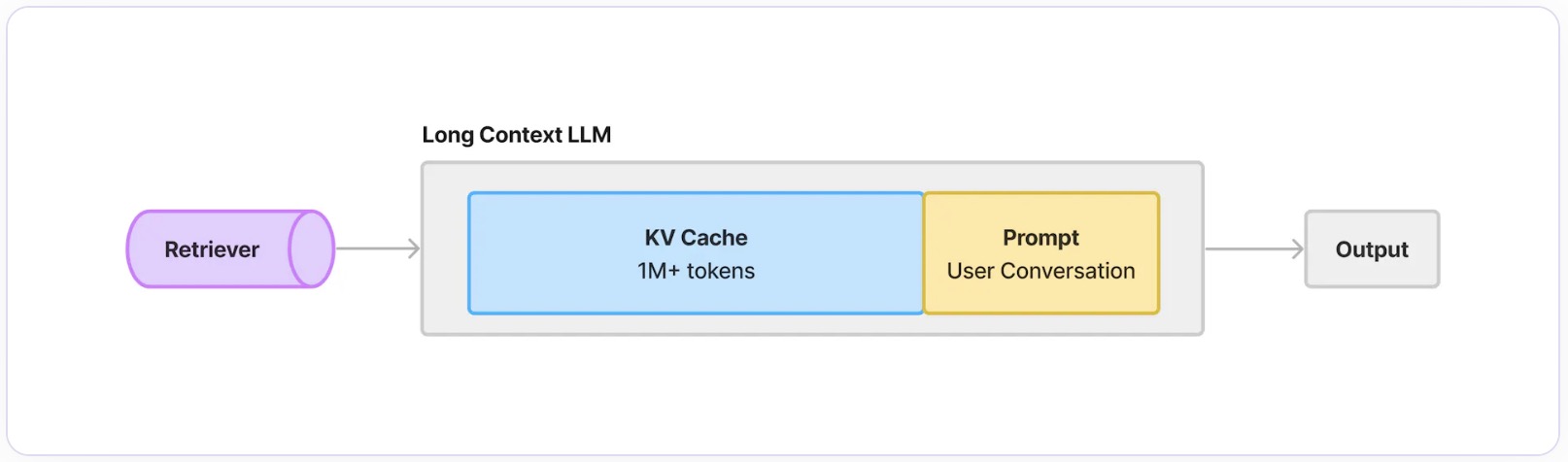

Case2: 虚拟人物介绍,设计多轮会话的情况,可以通过会话存储来将强聊天会话上下文情景,但是这带来一个问题,随着会话轮数增多,每次给LLM的上下文prompt长度增加,会突破模型的最大上下文长度,受限于大模型处理长文本的长度,可以外挂会话历史聊天记录存储。而且对新的虚拟人物,没有上下文,很容易生成不存在的人物,或者是重名的人物。这个case gemma模型有对

gojo知识的理解,但是出现幻觉,gojo 不在 “JoJo’s Bizarre Adventure”。

下一步结合长文本模型进行case验证,其中有一个笔记有这方面实操,见: https://github.com/weedge/doraemon-nb/blob/main/RAG_huixiangdou_on_A100.ipynb

题外话:数据压缩结晶的好模型+Prompt engineering 生成 好数据。 模型起步阶段,假设模型结构公开,就看谁家数据质量好,好数据就会有模型去学,感觉像是基因迭代一样。。。

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK