Kubernetes in Practice: Deploying Standalone Redis and Redis Cluster - Linux-1874

source link: https://www.cnblogs.com/qiuhom-1874/p/17459116.html

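1. Deploying standalone Redis on k8s

1.1 Redis overview

Redis is an open-source, non-relational (NoSQL) database released under the BSD license. It was written in C by the Italian developer Salvatore Sanfilippo and first released in 2009. Redis keeps its data in memory and is one of the most popular key-value databases; in effect it is a service that shares memory over the network. memcache offers similar functionality, but Redis adds easy scalability, high performance, and data persistence. Typical use cases are: session sharing, commonly among Tomcat or PHP web servers in a web cluster; message queues, such as the log buffer in ELK and the publish/subscribe systems of some applications; counters, for count-based statistics such as access rankings and product view counts; and caching, for query results, e-commerce product information, news content, and so on. Unlike memcache, Redis can persist its in-memory data to disk, so after the Redis service or the server restarts, the data can be loaded from the backup file back into memory and used again.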

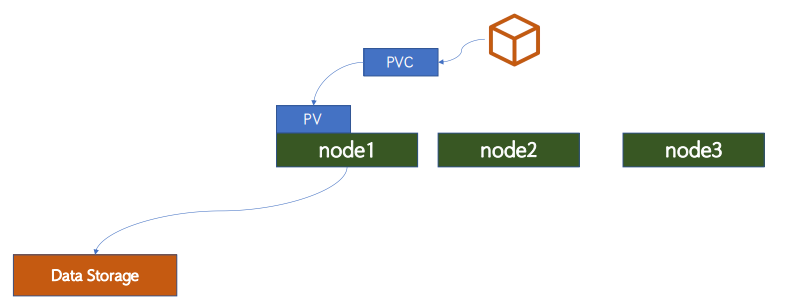

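1.2 PV/PVC and standalone Redis

Redis data (mainly the RDB snapshot) is kept on the storage system, so it survives even if the Redis pod dies. When a standalone Redis pod on k8s fails, k8s rebuilds the pod and mounts the same PVC into the new pod; Redis then loads the snapshot, so everything up to the last snapshot is still there after the pod failure.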

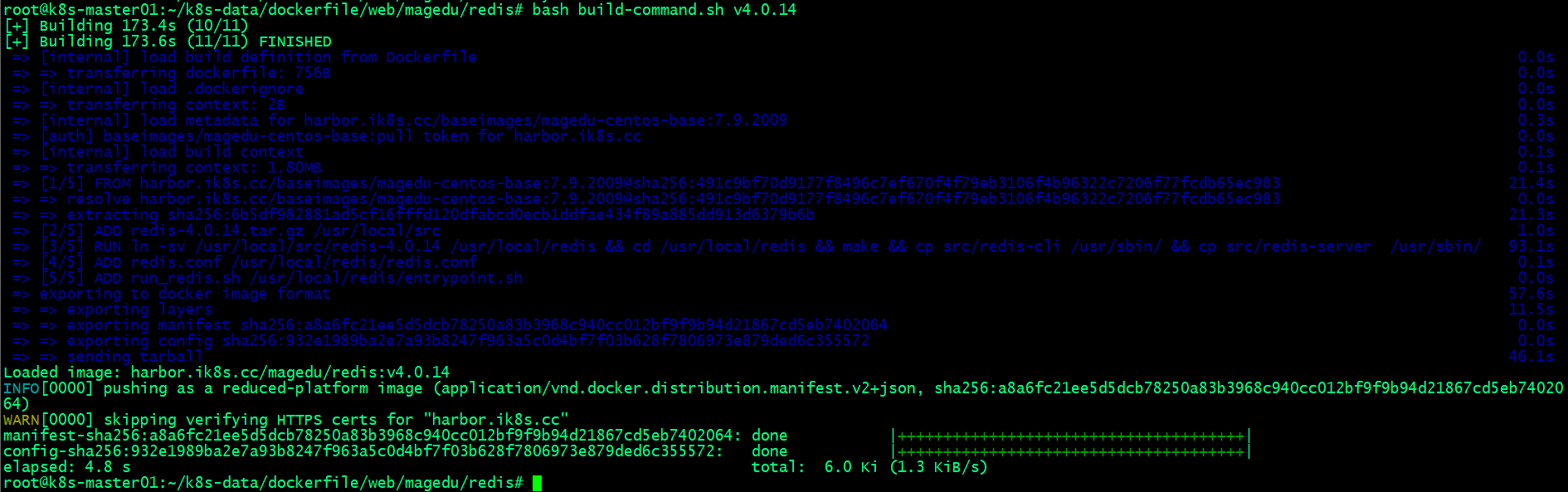

1.3 Building the Redis image

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/redis# ll

total 1784

drwxr-xr-x 2 root root 4096 Jun 5 15:22 ./

drwxr-xr-x 11 root root 4096 Aug 9 2022 ../

-rw-r--r-- 1 root root 717 Jun 5 15:20 Dockerfile

-rwxr-xr-x 1 root root 235 Jun 5 15:21 build-command.sh*

-rw-r--r-- 1 root root 1740967 Jun 22 2021 redis-4.0.14.tar.gz

-rw-r--r-- 1 root root 58783 Jun 22 2021 redis.conf

-rwxr-xr-x 1 root root 84 Jun 5 15:21 run_redis.sh*

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/redis# cat Dockerfile

#Redis Image
# Use the custom CentOS base image
FROM harbor.ik8s.cc/baseimages/magedu-centos-base:7.9.2009

# Add the Redis source tarball to /usr/local/src
ADD redis-4.0.14.tar.gz /usr/local/src

# Compile and install Redis
RUN ln -sv /usr/local/src/redis-4.0.14 /usr/local/redis && cd /usr/local/redis && make && cp src/redis-cli /usr/sbin/ && cp src/redis-server /usr/sbin/ && mkdir -pv /data/redis-data

# Add the Redis configuration file
ADD redis.conf /usr/local/redis/redis.conf

# Expose the Redis service port
EXPOSE 6379

#ADD run_redis.sh /usr/local/redis/run_redis.sh
#CMD ["/usr/local/redis/run_redis.sh"]

# Add the startup script
ADD run_redis.sh /usr/local/redis/entrypoint.sh

# Start Redis
ENTRYPOINT ["/usr/local/redis/entrypoint.sh"]

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/redis# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.ik8s.cc/magedu/redis:${TAG} .

#sleep 3

#docker push harbor.ik8s.cc/magedu/redis:${TAG}

nerdctl build -t harbor.ik8s.cc/magedu/redis:${TAG} .

nerdctl push harbor.ik8s.cc/magedu/redis:${TAG}

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/redis# cat run_redis.sh

#!/bin/bash

# Start Redis

/usr/sbin/redis-server /usr/local/redis/redis.conf

# redis-server daemonizes (daemonize yes), so keep a foreground process running with tail -f

tail -f /etc/hosts

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/redis# grep -v '^#\|^$' redis.conf

bind 0.0.0.0

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize yes

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile ""

databases 16

always-show-logo yes

save 900 1

save 5 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error no

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /data/redis-data

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

requirepass 123456

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

slave-lazy-flush no

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble no

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

root@k8s-master01:~/k8s-data/dockerfile/web/magedu/redis#

1.3.1、验证rdis镜像是否上传至harbor?

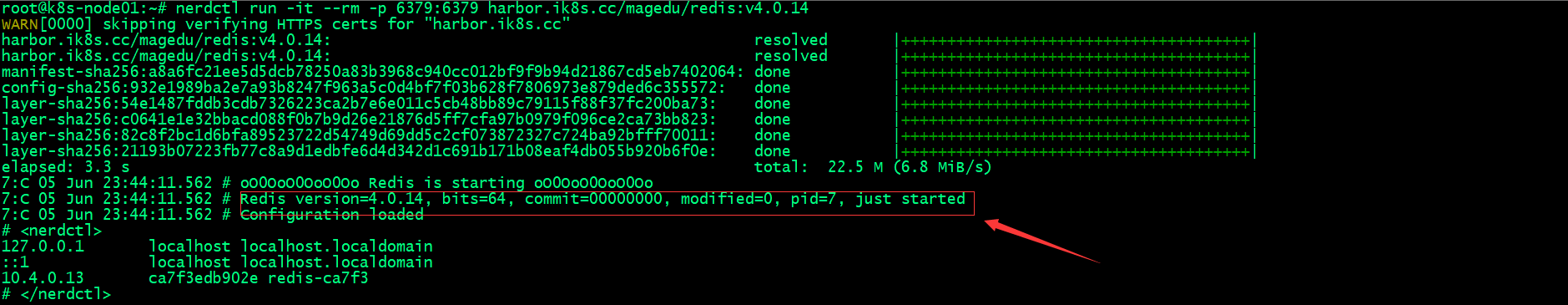

1.4、测试redis 镜像

1.4.1、验证将redis镜像运行为容器,看看是否正常运行?

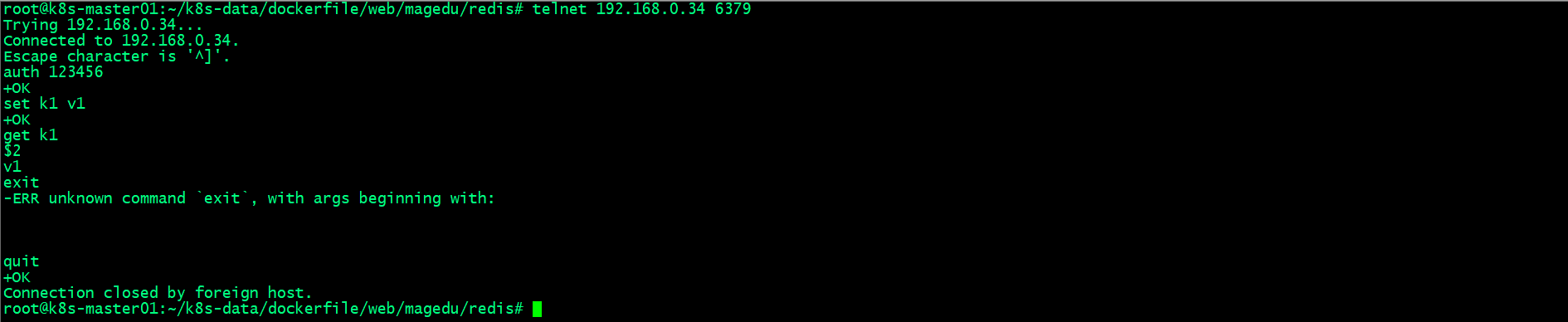

1.4.2、远程连接redis,看看是否可正常连接?

能够将redis镜像运行为容器,并且能够通过远程主机连接至redis进行数据读写,说明我们构建的reids镜像没有问题;

1.5、创建PV和PVC

1.5.1、在nfs服务器上准备redis数据存储目录

root@harbor:~# mkdir -pv /data/k8sdata/magedu/redis-datadir-1

mkdir: created directory '/data/k8sdata/magedu/redis-datadir-1'

root@harbor:~# cat /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/data/k8sdata/kuboard *(rw,no_root_squash)

/data/volumes *(rw,no_root_squash)

/pod-vol *(rw,no_root_squash)

/data/k8sdata/myserver *(rw,no_root_squash)

/data/k8sdata/mysite *(rw,no_root_squash)

/data/k8sdata/magedu/images *(rw,no_root_squash)

/data/k8sdata/magedu/static *(rw,no_root_squash)

/data/k8sdata/magedu/zookeeper-datadir-1 *(rw,no_root_squash)

/data/k8sdata/magedu/zookeeper-datadir-2 *(rw,no_root_squash)

/data/k8sdata/magedu/zookeeper-datadir-3 *(rw,no_root_squash)

/data/k8sdata/magedu/redis-datadir-1 *(rw,no_root_squash)

root@harbor:~# exportfs -av

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [4]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/myserver".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [5]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/mysite".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [7]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/images".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [8]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/static".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [11]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-1".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [12]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-2".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [13]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-3".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [16]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis-datadir-1".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exporting *:/data/k8sdata/magedu/redis-datadir-1

exporting *:/data/k8sdata/magedu/zookeeper-datadir-3

exporting *:/data/k8sdata/magedu/zookeeper-datadir-2

exporting *:/data/k8sdata/magedu/zookeeper-datadir-1

exporting *:/data/k8sdata/magedu/static

exporting *:/data/k8sdata/magedu/images

exporting *:/data/k8sdata/mysite

exporting *:/data/k8sdata/myserver

exporting *:/pod-vol

exporting *:/data/volumes

exporting *:/data/k8sdata/kuboard

root@harbor:~#

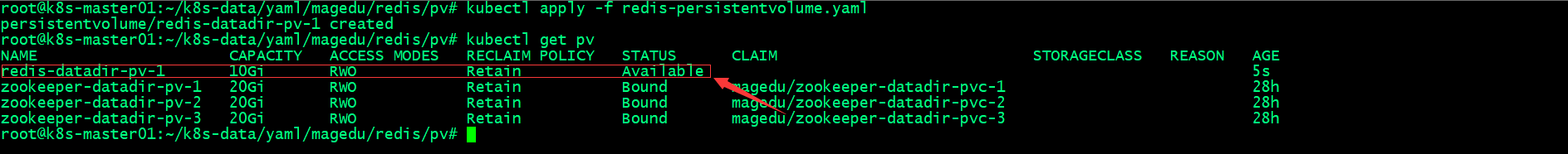

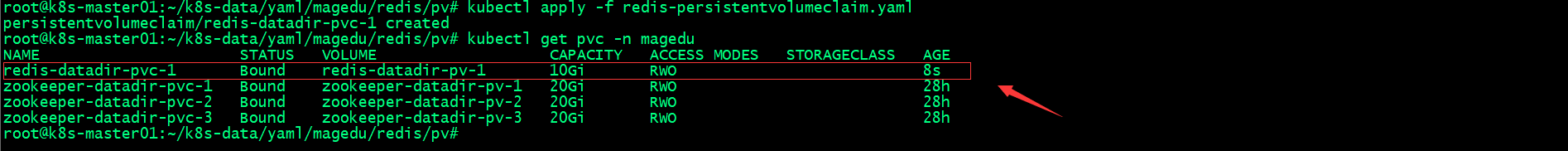

1.5.2 Create the PV

root@k8s-master01:~/k8s-data/yaml/magedu/redis/pv# cat redis-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-datadir-pv-1
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    path: /data/k8sdata/magedu/redis-datadir-1
    server: 192.168.0.42

root@k8s-master01:~/k8s-data/yaml/magedu/redis/pv#

1.5.3 Create the PVC

root@k8s-master01:~/k8s-data/yaml/magedu/redis/pv# cat redis-persistentvolumeclaim.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: redis-datadir-pvc-1
  namespace: magedu
spec:
  volumeName: redis-datadir-pv-1
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi

root@k8s-master01:~/k8s-data/yaml/magedu/redis/pv#

1.6 Deploying the Redis service

root@k8s-master01:~/k8s-data/yaml/magedu/redis# cat redis.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: devops-redis
  name: deploy-devops-redis
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: devops-redis
  template:
    metadata:
      labels:
        app: devops-redis
    spec:
      containers:
        - name: redis-container
          image: harbor.ik8s.cc/magedu/redis:v4.0.14
          imagePullPolicy: Always
          volumeMounts:
          - mountPath: "/data/redis-data/"
            name: redis-datadir
      volumes:
        - name: redis-datadir
          persistentVolumeClaim:
            claimName: redis-datadir-pvc-1
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: devops-redis
  name: srv-devops-redis
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 6379
    targetPort: 6379
    nodePort: 36379
  selector:
    app: devops-redis
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800

root@k8s-master01:~/k8s-data/yaml/magedu/redis#

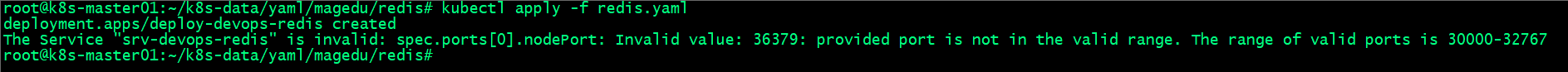

上述报错说我们的服务端口超出范围,这是因为我们在初始化k8s集群时指定的服务端口范围;

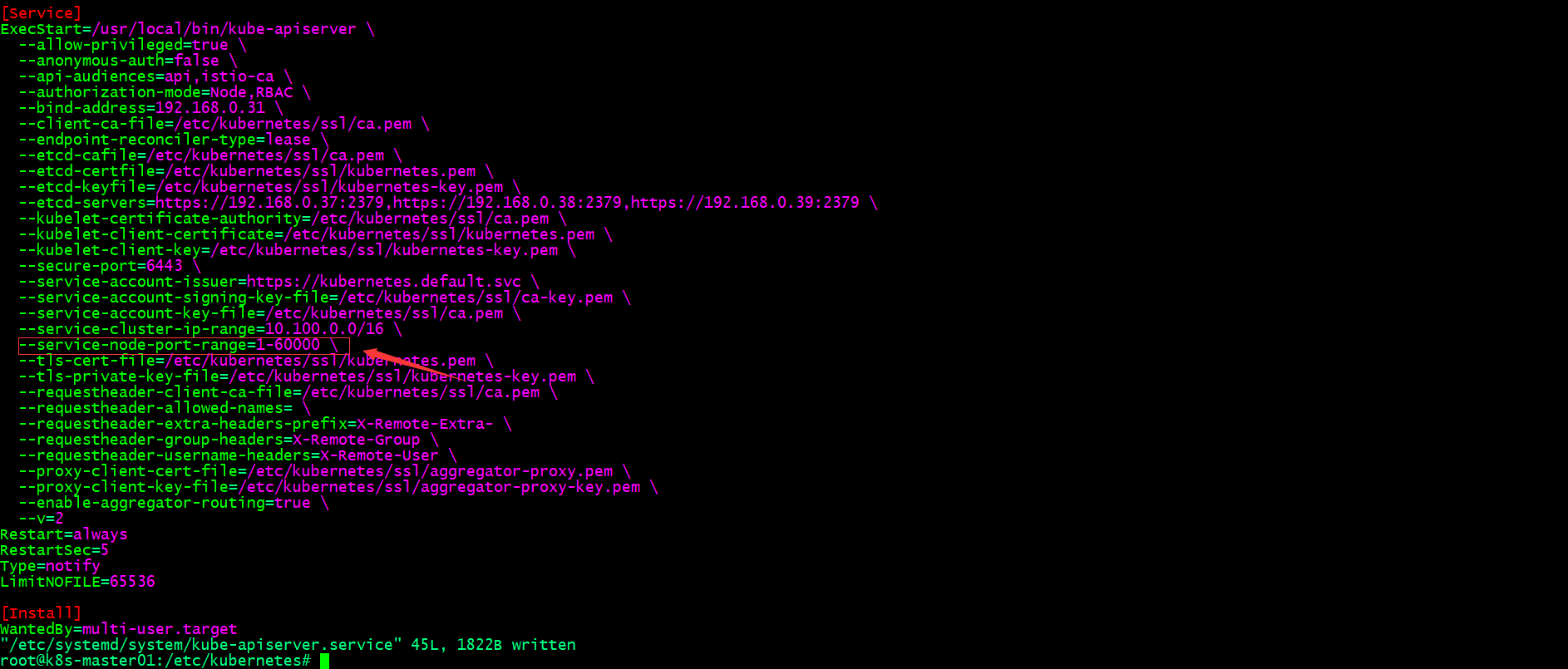

1.6.1、修改nodeport端口范围

编辑/etc/systemd/system/kube-apiserver.service,将其--service-node-port-range选项指定的值修改即可;其他两个master节点也需要修改哦
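The out-of-range error can be illustrated with a small check. This is only a sketch: it assumes the upstream default range of 30000-32767, and the widened range 30000-62767 used in the usage note is a hypothetical value, not one taken from this cluster. The function names are made up for illustration.

```python
from typing import Tuple


def parse_port_range(spec: str) -> Tuple[int, int]:
    """Parse a 'low-high' string in the form used by --service-node-port-range."""
    low_text, high_text = spec.split("-")
    low, high = int(low_text), int(high_text)
    if not (0 < low <= high <= 65535):
        raise ValueError("invalid port range: %s" % spec)
    return low, high


def node_port_allowed(node_port: int, spec: str = "30000-32767") -> bool:
    """Return True if node_port falls inside the configured NodePort range."""
    low, high = parse_port_range(spec)
    return low <= node_port <= high
```

With the default range, `node_port_allowed(36379)` is false, which matches the error in the screenshot; with a widened range such as `"30000-62767"` the same port is accepted.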

1.6.2 Reload the systemd unit files and restart kube-apiserver

root@k8s-master01:~# systemctl daemon-reload

root@k8s-master01:~# systemctl restart kube-apiserver.service

root@k8s-master01:~#

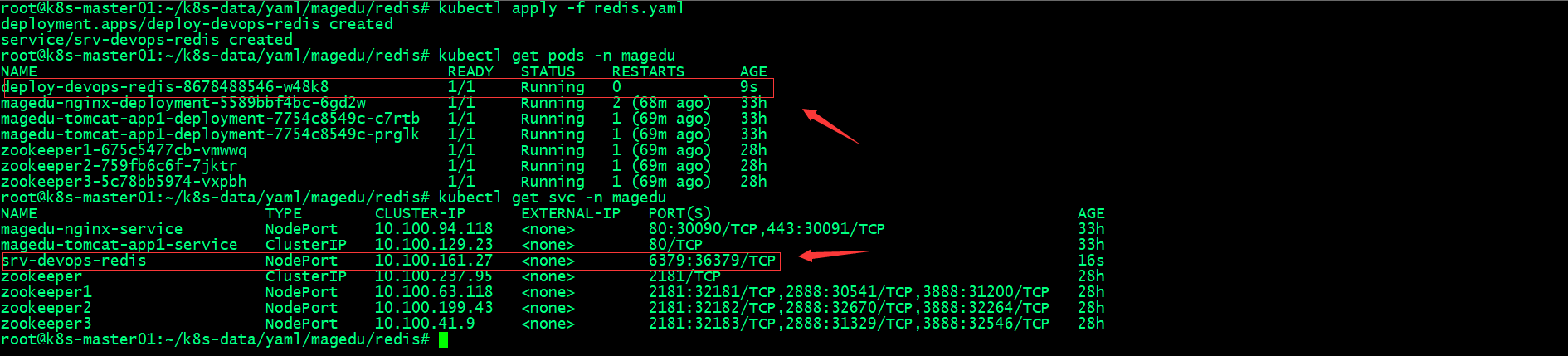

再次部署redis

1.7、验证redis数据读写

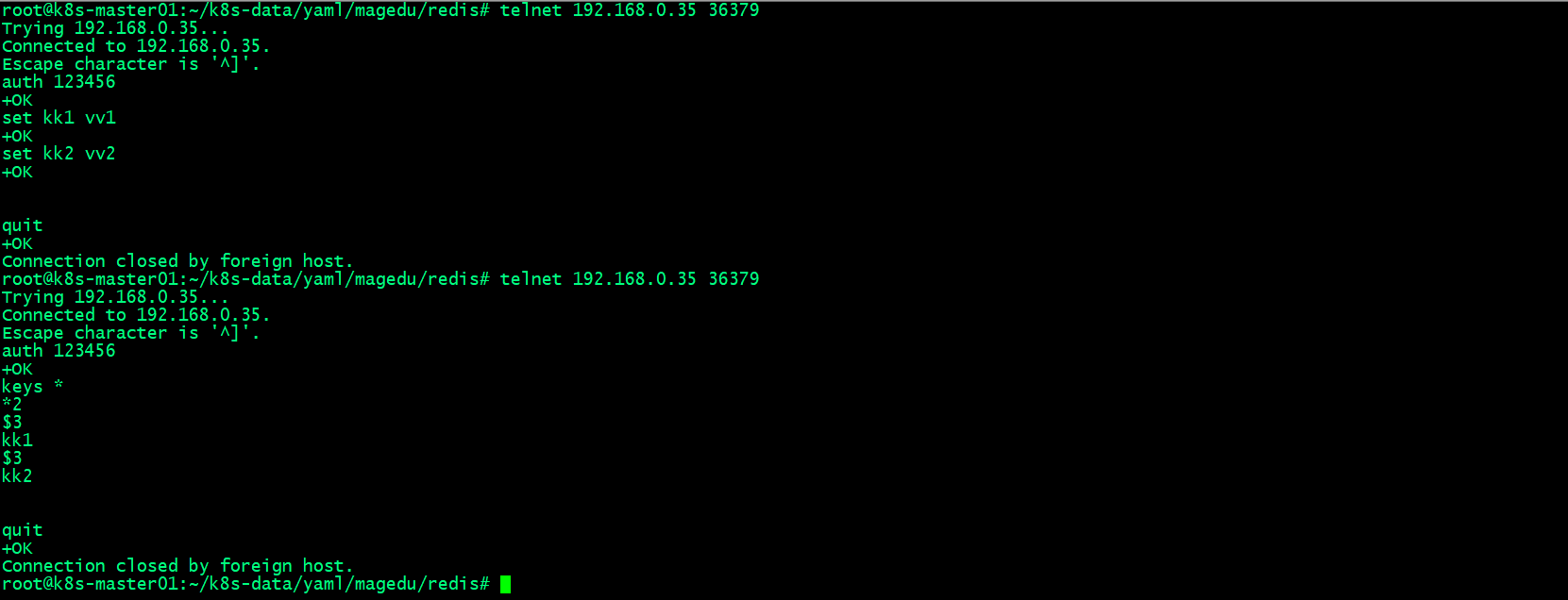

1.7.1、连接k8s任意节点的36376端口,测试redis读写数据

1.8、验证redis pod 重建对应数据是否丢失?

1.8.1、查看redis快照文件是否存储到存储上呢?

root@harbor:~# ll /data/k8sdata/magedu/redis-datadir-1

total 12

drwxr-xr-x 2 root root 4096 Jun 5 16:29 ./

drwxr-xr-x 8 root root 4096 Jun 5 15:53 ../

-rw-r--r-- 1 root root 116 Jun 5 16:29 dump.rdb

root@harbor:~#

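After we wrote data to Redis, Redis detected the key changes within the configured interval and took a snapshot. Because the Redis data directory is an NFS export mounted through the PV/PVC, the snapshot file is visible in the corresponding directory on the NFS server.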
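The "configured interval" comes from the `save <seconds> <changes>` lines in redis.conf. The sketch below is a simplified model of that rule, not Redis's actual code: it assumes a snapshot is triggered when, for any rule, more than `seconds` have passed since the last save and at least `changes` writes have accumulated. The rule values are copied from the redis.conf shown earlier; the function name is made up.

```python
# (seconds, changes) pairs from the "save" lines of the redis.conf above
SAVE_RULES = [(900, 1), (5, 1), (300, 10), (60, 10000)]


def snapshot_due(seconds_since_last_save, changes_since_last_save, rules=SAVE_RULES):
    """Return True if any save rule is satisfied (simplified model)."""
    return any(
        seconds_since_last_save > seconds and changes_since_last_save >= changes
        for seconds, changes in rules
    )
```

Under this model the `save 5 1` rule dominates: a single write followed by a few seconds of waiting is enough to produce dump.rdb, which is consistent with the file appearing on the NFS server shortly after the test writes.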

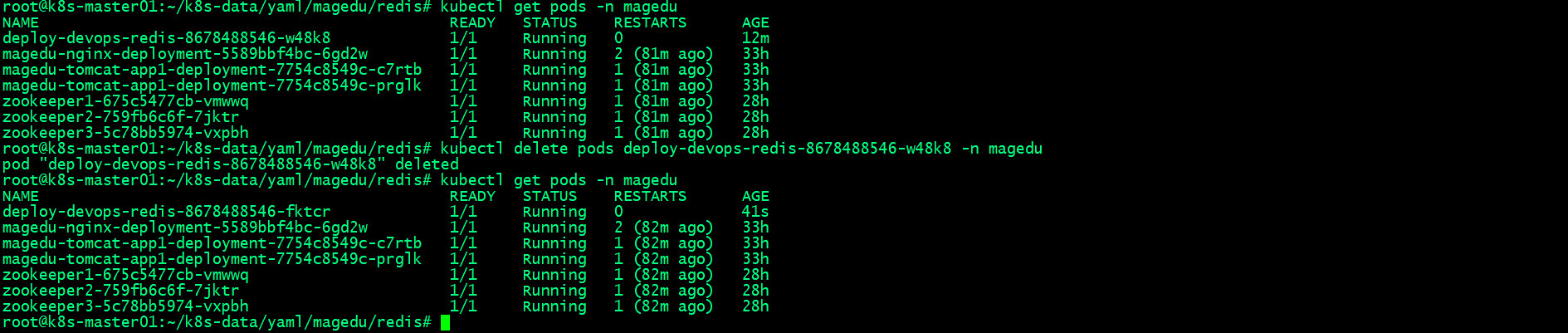

1.8.2、删除redis pod 等待k8s重建redis pod

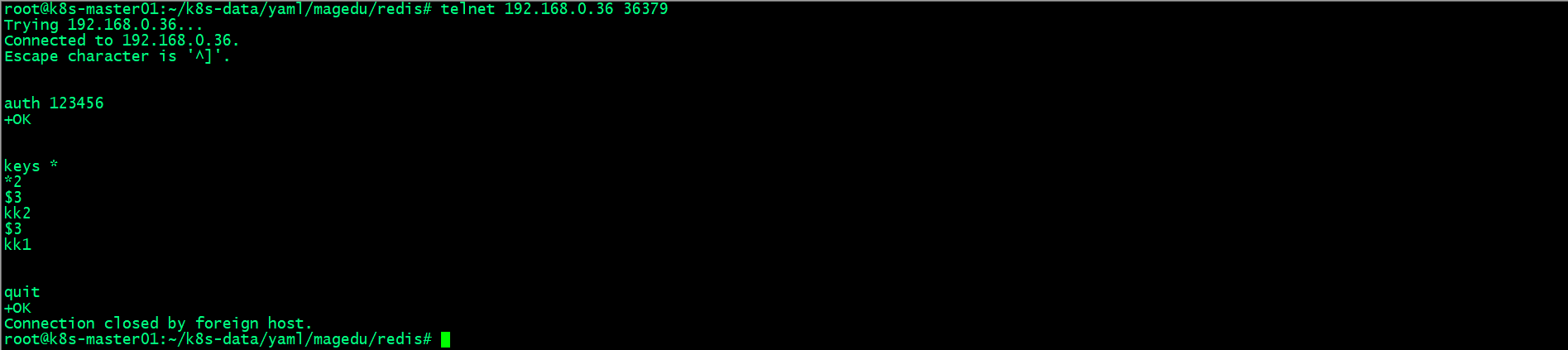

1.8.3、验证重建后的redis pod数据

可以看到k8s重建后的redis pod 还保留着原有pod的数据;这说明k8s重建时挂载了前一个pod的pvc;

2、在k8s上部署redis集群

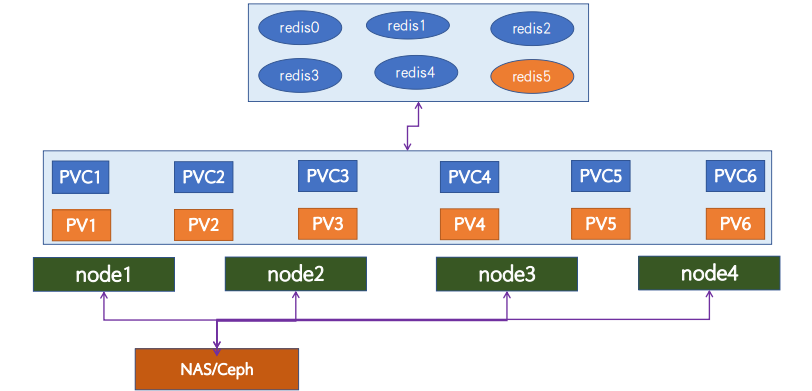

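2.1 PV/PVC and Redis Cluster StatefulSet

Redis Cluster is a little more complex than standalone Redis. We again use PV/PVC to keep the cluster's data on the storage system. Unlike standalone Redis, Redis Cluster computes the CRC16 of each key and takes the result modulo 16384; the resulting number is the slot that the key belongs to. The 16384 slots are divided evenly among the master nodes of the cluster, so each master holds part of the cluster's data. This raises a problem: if a master goes down, the data in its slots becomes unavailable. To avoid this single point of failure, each master gets a dedicated slave that replicates it; when the master fails, its slave takes over and keeps serving the cluster, which makes the masters highly available. As shown in the figure above, we use a cluster of three masters and three slaves: redis-0, 1, and 2 are the masters, and 3, 4, and 5 are the slaves of 0, 1, and 2 respectively, each replicating its own master's data. All six pods store their data on the storage system through PV/PVC.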
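The slot calculation described above can be sketched in a few lines. This is an illustration rather than Redis's own code: it assumes the CRC16 variant used by Redis Cluster (XMODEM, polynomial 0x1021, initial value 0) and also handles hash tags (`{...}`), a detail the text above does not cover. The function names are made up.

```python
def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16 (XMODEM): polynomial 0x1021, initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Map a key to one of the 16384 cluster slots."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        # Only a non-empty {tag} replaces the key as the hashed part
        if end > start + 1:
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % 16384
```

For example, `key_slot("foo")` gives 12182, so the key `foo` lives on whichever master owns slot 12182.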

2.2 Creating the PVs

2.2.1 Prepare the Redis Cluster data directories on NFS

root@harbor:~# mkdir -pv /data/k8sdata/magedu/redis{0,1,2,3,4,5}

mkdir: created directory '/data/k8sdata/magedu/redis0'

mkdir: created directory '/data/k8sdata/magedu/redis1'

mkdir: created directory '/data/k8sdata/magedu/redis2'

mkdir: created directory '/data/k8sdata/magedu/redis3'

mkdir: created directory '/data/k8sdata/magedu/redis4'

mkdir: created directory '/data/k8sdata/magedu/redis5'

root@harbor:~# tail -6 /etc/exports

/data/k8sdata/magedu/redis0 *(rw,no_root_squash)

/data/k8sdata/magedu/redis1 *(rw,no_root_squash)

/data/k8sdata/magedu/redis2 *(rw,no_root_squash)

/data/k8sdata/magedu/redis3 *(rw,no_root_squash)

/data/k8sdata/magedu/redis4 *(rw,no_root_squash)

/data/k8sdata/magedu/redis5 *(rw,no_root_squash)

root@harbor:~# exportfs -av

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [4]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/myserver".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [5]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/mysite".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [7]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/images".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [8]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/static".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [11]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-1".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [12]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-2".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [13]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-3".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [16]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis-datadir-1".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [18]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis0".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [19]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis1".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [20]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis2".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [21]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis3".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [22]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis4".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [23]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis5".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exporting *:/data/k8sdata/magedu/redis5

exporting *:/data/k8sdata/magedu/redis4

exporting *:/data/k8sdata/magedu/redis3

exporting *:/data/k8sdata/magedu/redis2

exporting *:/data/k8sdata/magedu/redis1

exporting *:/data/k8sdata/magedu/redis0

exporting *:/data/k8sdata/magedu/redis-datadir-1

exporting *:/data/k8sdata/magedu/zookeeper-datadir-3

exporting *:/data/k8sdata/magedu/zookeeper-datadir-2

exporting *:/data/k8sdata/magedu/zookeeper-datadir-1

exporting *:/data/k8sdata/magedu/static

exporting *:/data/k8sdata/magedu/images

exporting *:/data/k8sdata/mysite

exporting *:/data/k8sdata/myserver

exporting *:/pod-vol

exporting *:/data/volumes

exporting *:/data/k8sdata/kuboard

root@harbor:~#

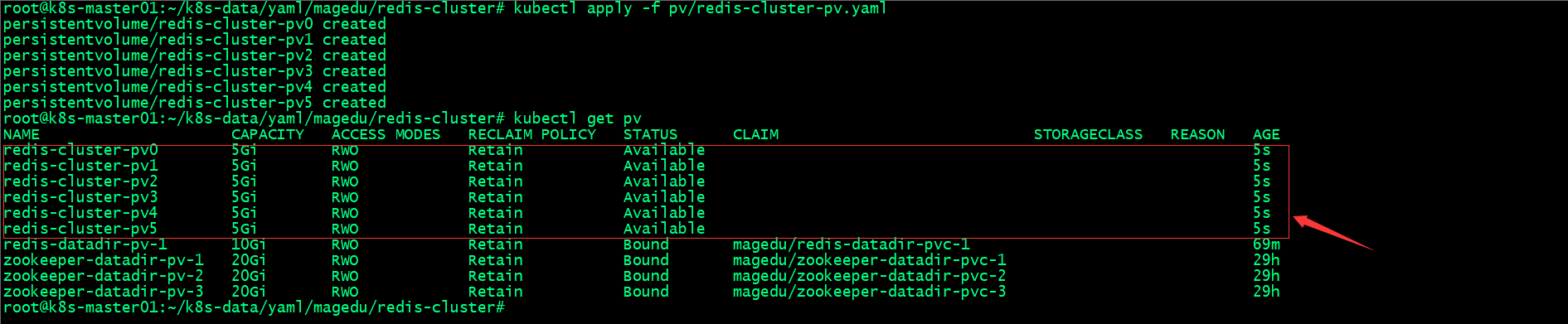

2.2.2 Create the PVs

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# cat pv/redis-cluster-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv0
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/redis0
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv1
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/redis1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv2
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/redis2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv3
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/redis3
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv4
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/redis4
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: redis-cluster-pv5
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/redis5

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

2.3 Deploying Redis Cluster

2.3.1 The redis.conf file used to create the ConfigMap

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# cat redis.conf

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

2.3.2 Create the ConfigMap

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# kubectl create cm redis-conf --from-file=./redis.conf -n magedu

configmap/redis-conf created

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# kubectl get cm -n magedu

NAME DATA AGE

kube-root-ca.crt 1 35h

redis-conf 1 6s

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

2.3.3 Verify the ConfigMap

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# kubectl describe cm redis-conf -n magedu

Name: redis-conf

Namespace: magedu

Labels: <none>

Annotations: <none>

Data

====

redis.conf:

----

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

BinaryData

====

Events: <none>

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

2.3.4 Deploy Redis Cluster

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# cat redis.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis
  namespace: magedu
  labels:
    app: redis
spec:
  selector:
    app: redis
    appCluster: redis-cluster
  ports:
  - name: redis
    port: 6379
  clusterIP: None
---
apiVersion: v1
kind: Service
metadata:
  name: redis-access
  namespace: magedu
  labels:
    app: redis
spec:
  type: NodePort
  selector:
    app: redis
    appCluster: redis-cluster
  ports:
  - name: redis-access
    protocol: TCP
    port: 6379
    targetPort: 6379
    nodePort: 36379
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: magedu
spec:
  serviceName: redis
  replicas: 6
  selector:
    matchLabels:
      app: redis
      appCluster: redis-cluster
  template:
    metadata:
      labels:
        app: redis
        appCluster: redis-cluster
    spec:
      terminationGracePeriodSeconds: 20
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - redis
              topologyKey: kubernetes.io/hostname
      containers:
      - name: redis
        image: redis:4.0.14
        command:
          - "redis-server"
        args:
          - "/etc/redis/redis.conf"
          - "--protected-mode"
          - "no"
        resources:
          requests:
            cpu: "500m"
            memory: "500Mi"
        ports:
        - containerPort: 6379
          name: redis
          protocol: TCP
        - containerPort: 16379
          name: cluster
          protocol: TCP
        volumeMounts:
        - name: conf
          mountPath: /etc/redis
        - name: data
          mountPath: /var/lib/redis
      volumes:
      - name: conf
        configMap:
          name: redis-conf
          items:
          - key: redis.conf
            path: redis.conf
  volumeClaimTemplates:
  - metadata:
      name: data
      namespace: magedu
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 5Gi

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

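The manifest above uses a StatefulSet (sts) controller to create six pod replicas. Each replica uses the file from the ConfigMap as its Redis configuration. The PVC template (volumeClaimTemplates) makes k8s create a PVC for each pod in the magedu namespace and bind it to a PV automatically; as long as k8s has free PVs, each pod gets a PVC created from the template. We could also let a StorageClass provision the volumes dynamically, or create the PVCs in advance. With a StatefulSet, the usual approach is to let the PVC template create the PVCs, provided k8s has enough PVs available.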

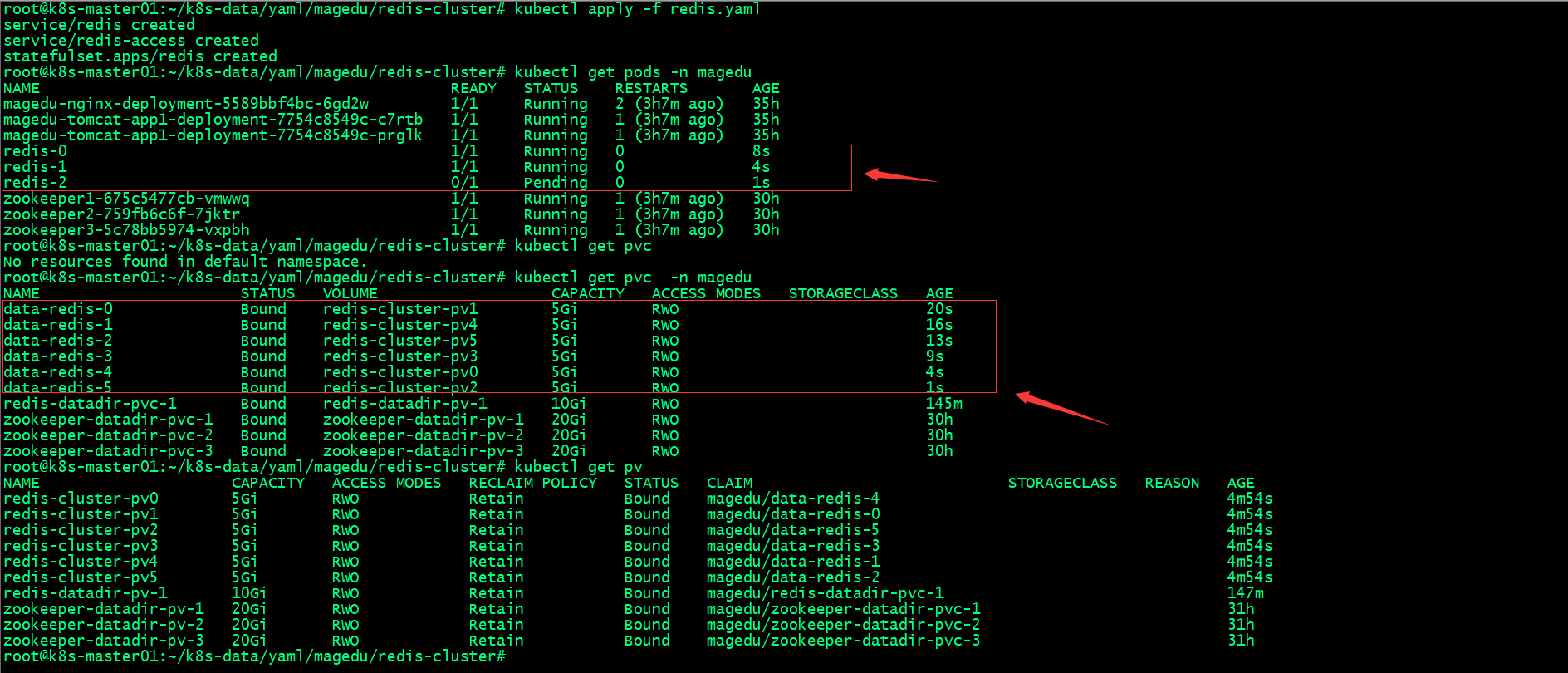

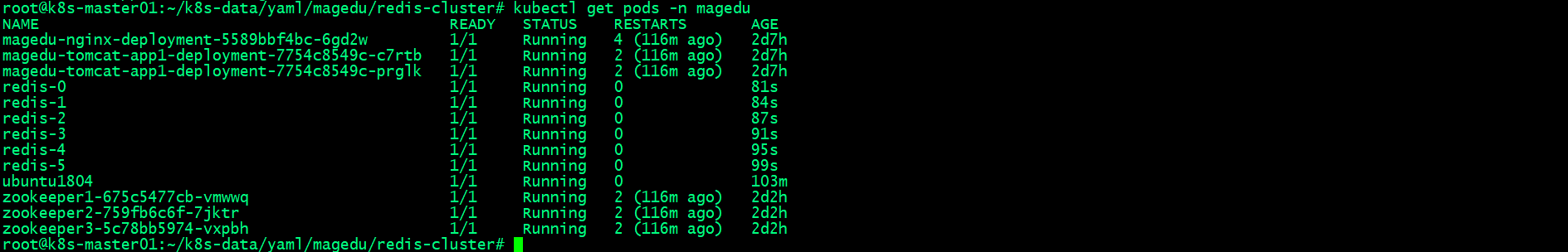

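Apply the manifest to deploy Redis Cluster

Pods created by a StatefulSet are named <StatefulSet name>-<ordinal>, and PVCs created from a PVC template are named <template name>-<pod name>, that is, <template name>-<StatefulSet name>-<ordinal>.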
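The naming rule can be written out as a short helper. This is a sketch for illustration only: the function name is made up, and the `cluster.local` cluster domain is assumed from the DNS names used later in this article.

```python
def statefulset_names(sts_name, service, namespace, replicas,
                      claim_template, cluster_domain="cluster.local"):
    """List the pod, PVC, and per-pod DNS names a StatefulSet produces."""
    rows = []
    for ordinal in range(replicas):
        pod = "%s-%d" % (sts_name, ordinal)
        rows.append({
            "pod": pod,
            # PVC name: <template name>-<StatefulSet name>-<ordinal>
            "pvc": "%s-%s" % (claim_template, pod),
            # DNS name provided through the headless service
            "dns": "%s.%s.%s.svc.%s" % (pod, service, namespace, cluster_domain),
        })
    return rows
```

For the manifest above, `statefulset_names("redis", "redis", "magedu", 6, "data")` yields pods `redis-0` to `redis-5` with PVCs `data-redis-0` to `data-redis-5`.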

2.4 Initializing Redis Cluster

2.4.1 Create a temporary container on k8s and install the Redis Cluster initialization tool

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# kubectl run -it ubuntu1804 --image=ubuntu:18.04 --restart=Never -n magedu bash

If you don't see a command prompt, try pressing enter.

root@ubuntu1804:/#

root@ubuntu1804:/# apt update

# Install the required tools

root@ubuntu1804:/# apt install python2.7 python-pip redis-tools dnsutils iputils-ping net-tools

# Upgrade pip

root@ubuntu1804:/# pip install --upgrade pip

# Use pip to install redis-trib, the Redis Cluster initialization tool

root@ubuntu1804:/# pip install redis-trib==0.5.1

root@ubuntu1804:/#

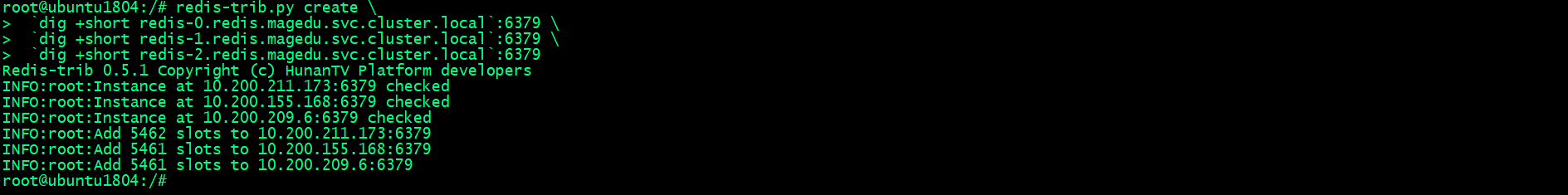

2.4.2 Initialize Redis Cluster

root@ubuntu1804:/# redis-trib.py create \

`dig +short redis-0.redis.magedu.svc.cluster.local`:6379 \

`dig +short redis-1.redis.magedu.svc.cluster.local`:6379 \

`dig +short redis-2.redis.magedu.svc.cluster.local`:6379

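Pods created by a StatefulSet keep fixed names, so when we initialize the Redis cluster we can resolve each Redis pod's name to its current IP address. On traditional virtual or physical machines we can use IP addresses directly, because those addresses are fixed; on k8s, pod IP addresses are not.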
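The name-to-IP step that the backquoted `dig +short` calls perform can also be sketched in Python. This is an illustration under assumptions: the function name is made up, `socket.gethostbyname` stands in for `dig`, and the resolver is injectable so the logic can be tried outside the cluster. It only builds the argument list and does not run redis-trib.py.

```python
import socket


def build_create_command(hostnames, port=6379, resolve=socket.gethostbyname):
    """Resolve each pod DNS name and build the redis-trib.py create arguments."""
    addresses = ["%s:%d" % (resolve(name), port) for name in hostnames]
    return ["redis-trib.py", "create"] + addresses


# Stable DNS names of the three pods that become masters
MASTER_HOSTS = [
    "redis-%d.redis.magedu.svc.cluster.local" % ordinal for ordinal in range(3)
]
```

Inside the cluster, `build_create_command(MASTER_HOSTS)` would return the same kind of address list that the shell command above passes to redis-trib.py.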

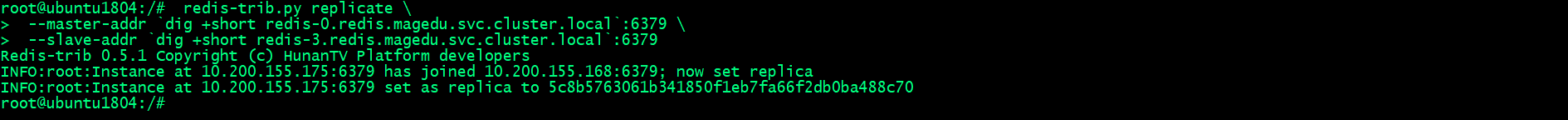

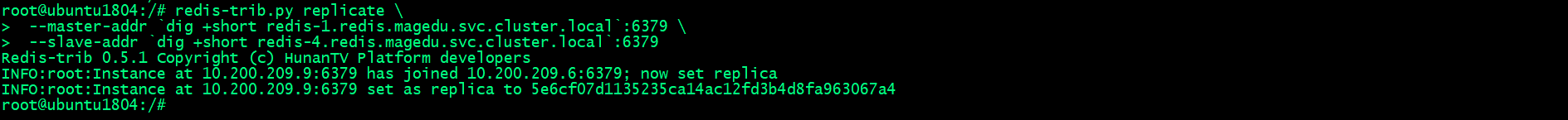

2.4.3 Assign slaves to the masters

- Make redis-3 the slave of redis-0

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-0.redis.magedu.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-3.redis.magedu.svc.cluster.local`:6379

- Make redis-4 the slave of redis-1

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-1.redis.magedu.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-4.redis.magedu.svc.cluster.local`:6379

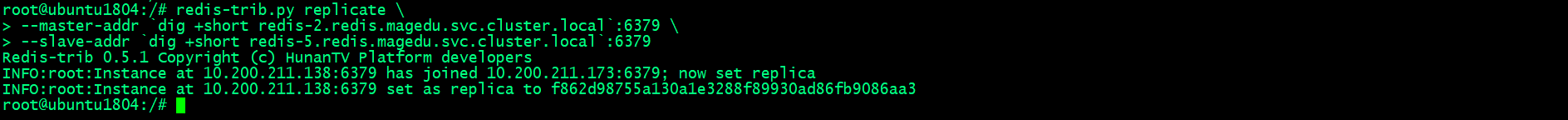

- Make redis-5 the slave of redis-2

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-2.redis.magedu.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-5.redis.magedu.svc.cluster.local`:6379

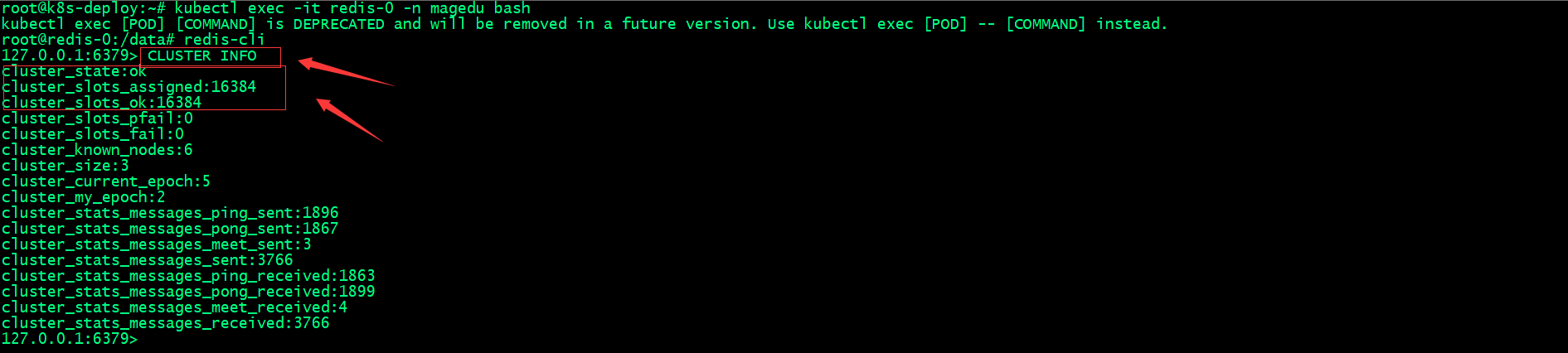

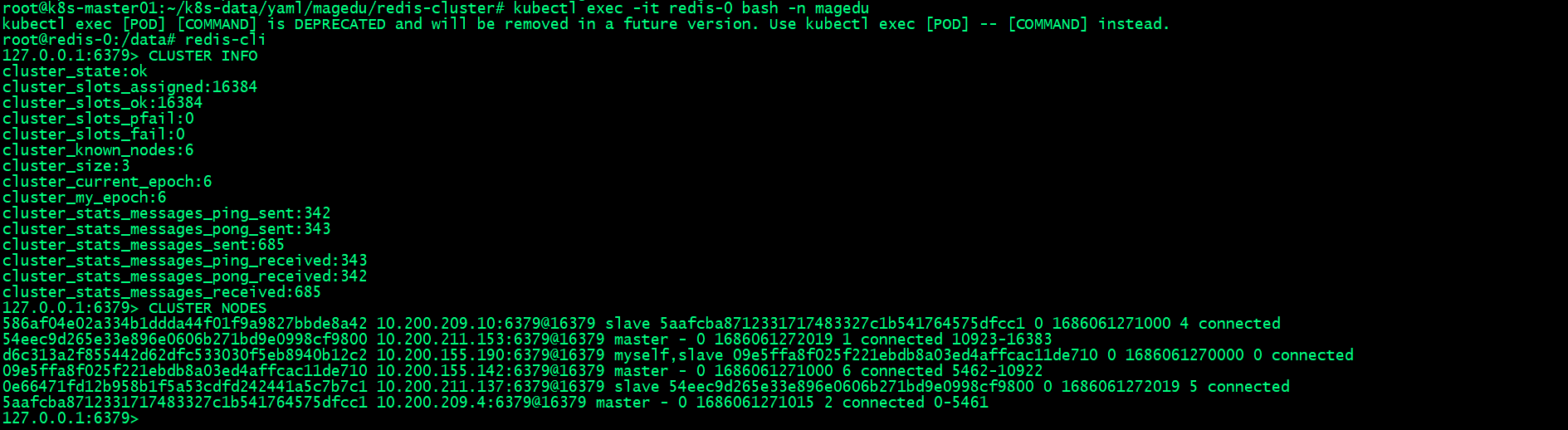

2.5、验证redis cluster状态

2.5.1、进入redis cluster 任意pod 查看集群信息

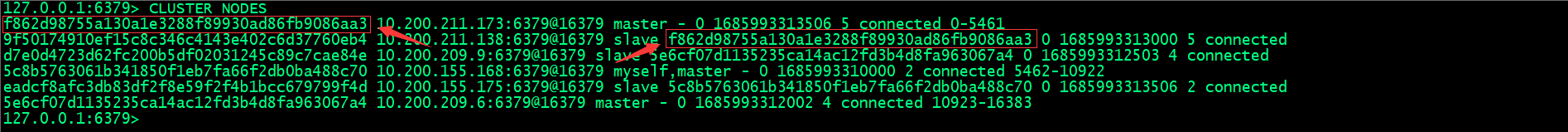

2.5.2、查看集群节点

集群节点信息中记录了master节点id和slave id,其中slave后面会对应master的id,表示该slave备份对应master数据;
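The master/slave mapping can be extracted from the output of `CLUSTER NODES` with a small parser. This is a sketch: it relies on the standard field order of that output (node ID, address, flags, master ID, ...), and the function name is made up for illustration.

```python
def replicas_by_master(cluster_nodes_output):
    """Map each master node ID to the list of node IDs that replicate it."""
    mapping = {}
    for line in cluster_nodes_output.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # not a complete CLUSTER NODES line
        node_id, flags, master_id = fields[0], fields[2].split(","), fields[3]
        if "master" in flags:
            mapping.setdefault(node_id, [])
        elif "slave" in flags and master_id != "-":
            mapping.setdefault(master_id, []).append(node_id)
    return mapping
```

Feeding it the text printed by `redis-cli cluster nodes` returns a dictionary with one entry per master; in this deployment each master should list exactly one slave.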

2.5.3 View the current node's information

127.0.0.1:6379> info

# Server

redis_version:4.0.14

redis_git_sha1:00000000

redis_git_dirty:0

redis_build_id:165c932261a105d7

redis_mode:cluster

os:Linux 5.15.0-73-generic x86_64

arch_bits:64

multiplexing_api:epoll

atomicvar_api:atomic-builtin

gcc_version:8.3.0

process_id:1

run_id:aa8ef00d843b4f622374dbb643cf27cdbd4d5ba3

tcp_port:6379

uptime_in_seconds:4303

uptime_in_days:0

hz:10

lru_clock:8272053

executable:/data/redis-server

config_file:/etc/redis/redis.conf

# Clients

connected_clients:1

client_longest_output_list:0

client_biggest_input_buf:0

blocked_clients:0

# Memory

used_memory:2642336

used_memory_human:2.52M

used_memory_rss:5353472

used_memory_rss_human:5.11M

used_memory_peak:2682248

used_memory_peak_human:2.56M

used_memory_peak_perc:98.51%

used_memory_overhead:2559936

used_memory_startup:1444856

used_memory_dataset:82400

used_memory_dataset_perc:6.88%

total_system_memory:16740012032

total_system_memory_human:15.59G

used_memory_lua:37888

used_memory_lua_human:37.00K

maxmemory:0

maxmemory_human:0B

maxmemory_policy:noeviction

mem_fragmentation_ratio:2.03

mem_allocator:jemalloc-4.0.3

active_defrag_running:0

lazyfree_pending_objects:0

# Persistence

loading:0

rdb_changes_since_last_save:0

rdb_bgsave_in_progress:0

rdb_last_save_time:1685992849

rdb_last_bgsave_status:ok

rdb_last_bgsave_time_sec:0

rdb_current_bgsave_time_sec:-1

rdb_last_cow_size:245760

aof_enabled:1

aof_rewrite_in_progress:0

aof_rewrite_scheduled:0

aof_last_rewrite_time_sec:-1

aof_current_rewrite_time_sec:-1

aof_last_bgrewrite_status:ok

aof_last_write_status:ok

aof_last_cow_size:0

aof_current_size:0

aof_base_size:0

aof_pending_rewrite:0

aof_buffer_length:0

aof_rewrite_buffer_length:0

aof_pending_bio_fsync:0

aof_delayed_fsync:0

# Stats

total_connections_received:7

total_commands_processed:17223

instantaneous_ops_per_sec:1

total_net_input_bytes:1530962

total_net_output_bytes:108793

instantaneous_input_kbps:0.04

instantaneous_output_kbps:0.00

rejected_connections:0

sync_full:1

sync_partial_ok:0

sync_partial_err:1

expired_keys:0

expired_stale_perc:0.00

expired_time_cap_reached_count:0

evicted_keys:0

keyspace_hits:0

keyspace_misses:0

pubsub_channels:0

pubsub_patterns:0

latest_fork_usec:853

migrate_cached_sockets:0

slave_expires_tracked_keys:0

active_defrag_hits:0

active_defrag_misses:0

active_defrag_key_hits:0

active_defrag_key_misses:0

# Replication

role:master

connected_slaves:1

slave0:ip=10.200.155.175,port=6379,state=online,offset=1120,lag=1

master_replid:60381a28fee40b44c409e53eeef49215a9d3b0ff

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:1120

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:1120

# CPU

used_cpu_sys:12.50

used_cpu_user:7.51

used_cpu_sys_children:0.01

used_cpu_user_children:0.00

# Cluster

cluster_enabled:1

# Keyspace

127.0.0.1:6379>

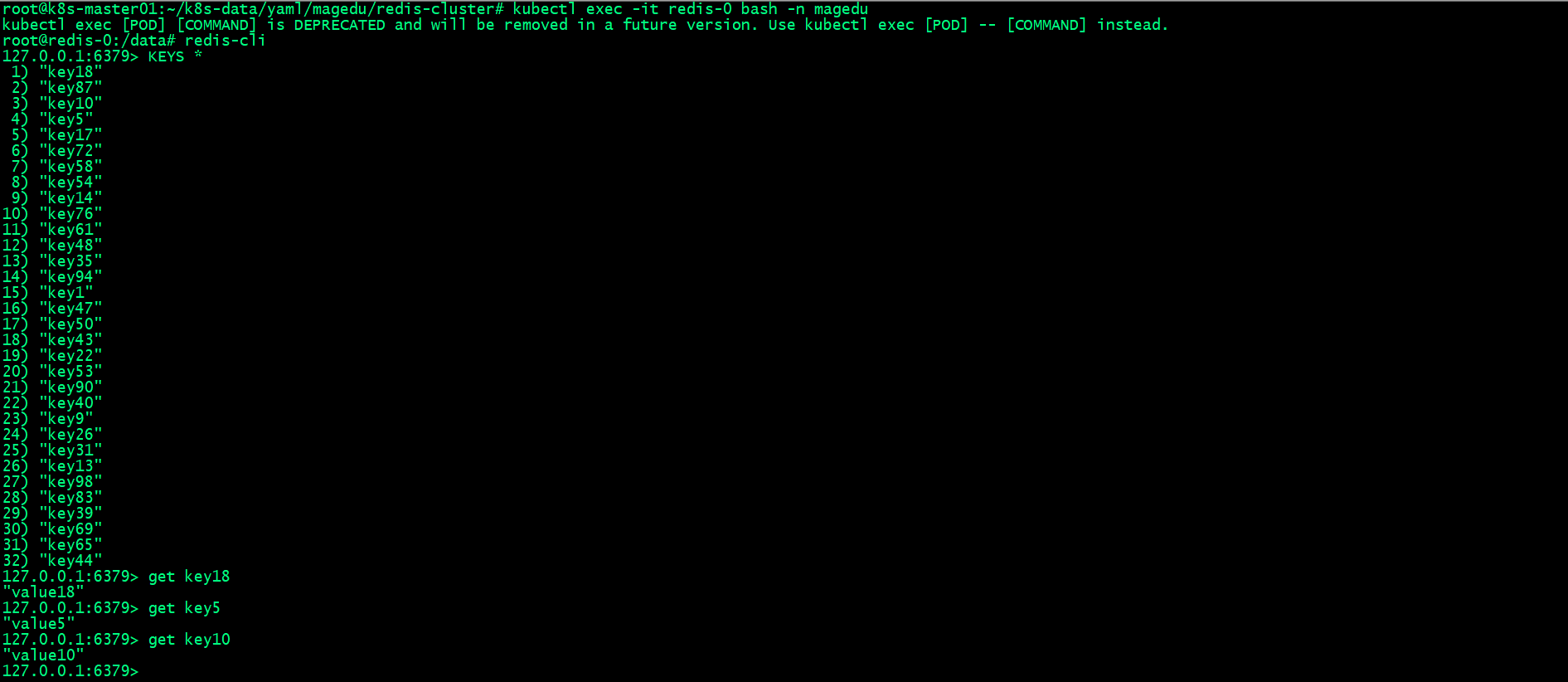

2.5.4 Verify that Redis Cluster reads and writes work

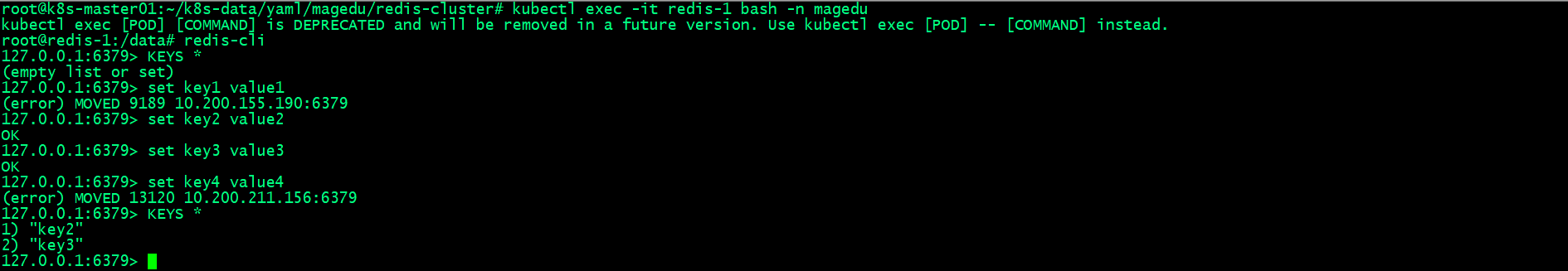

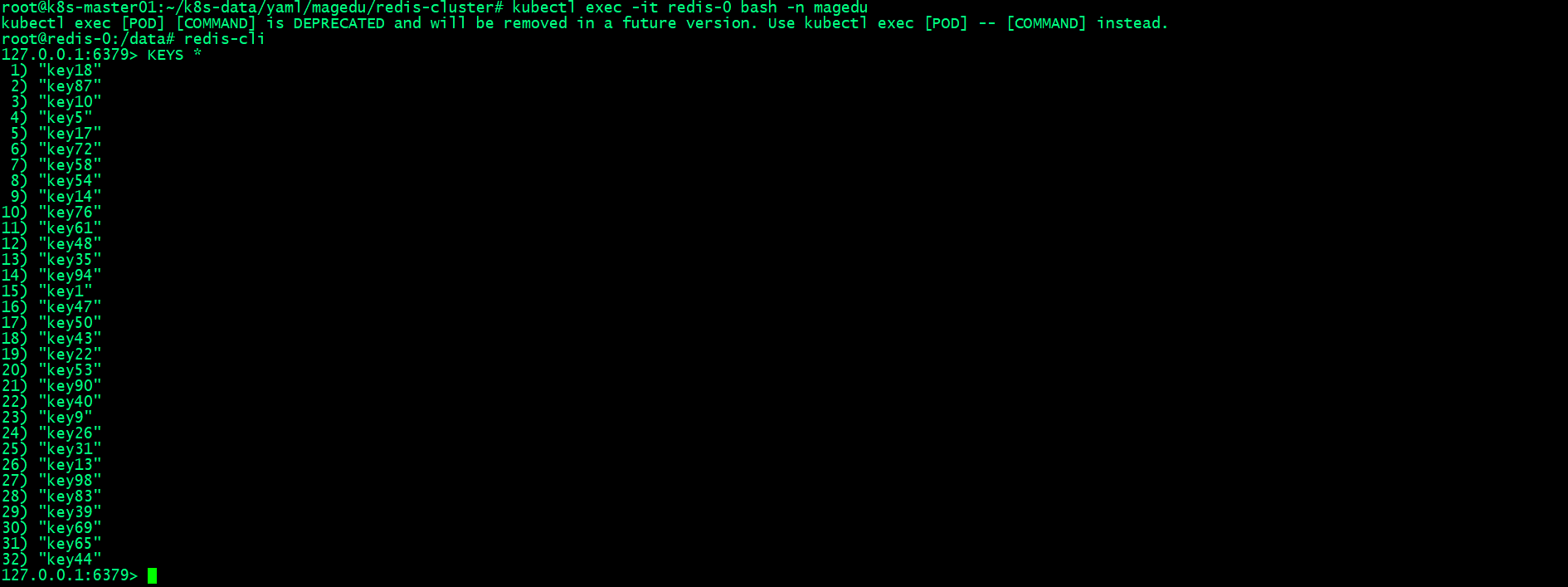

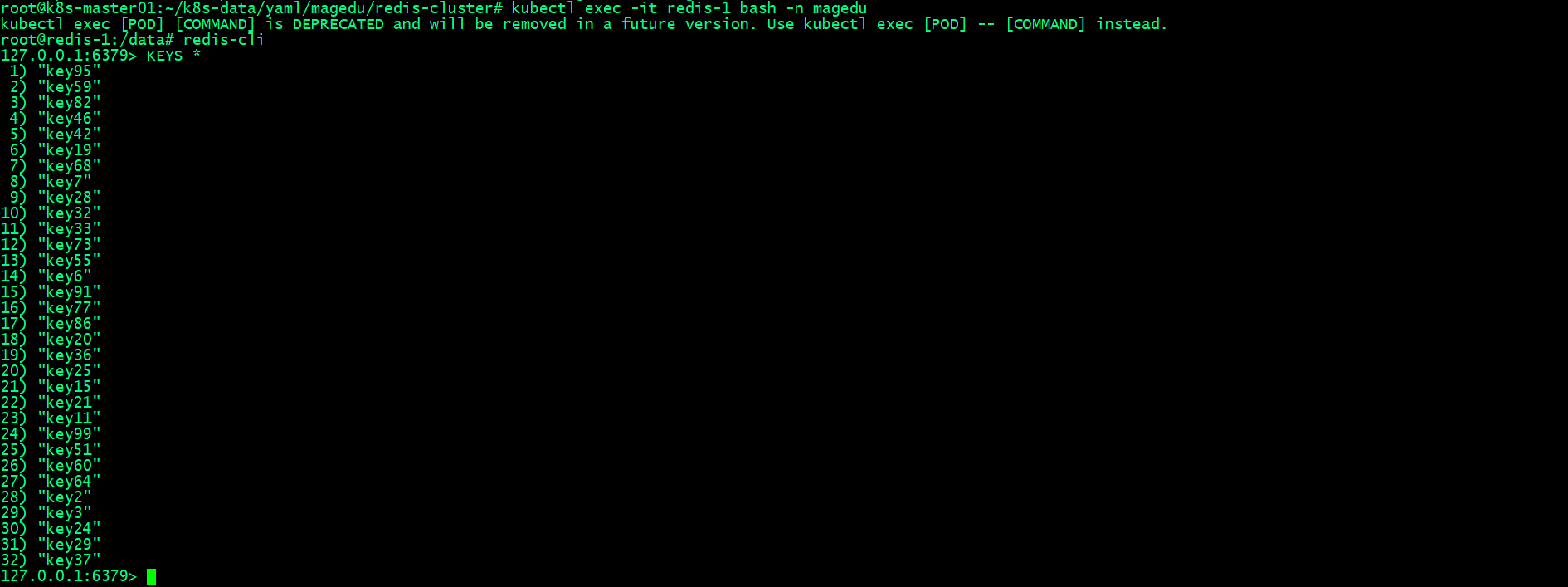

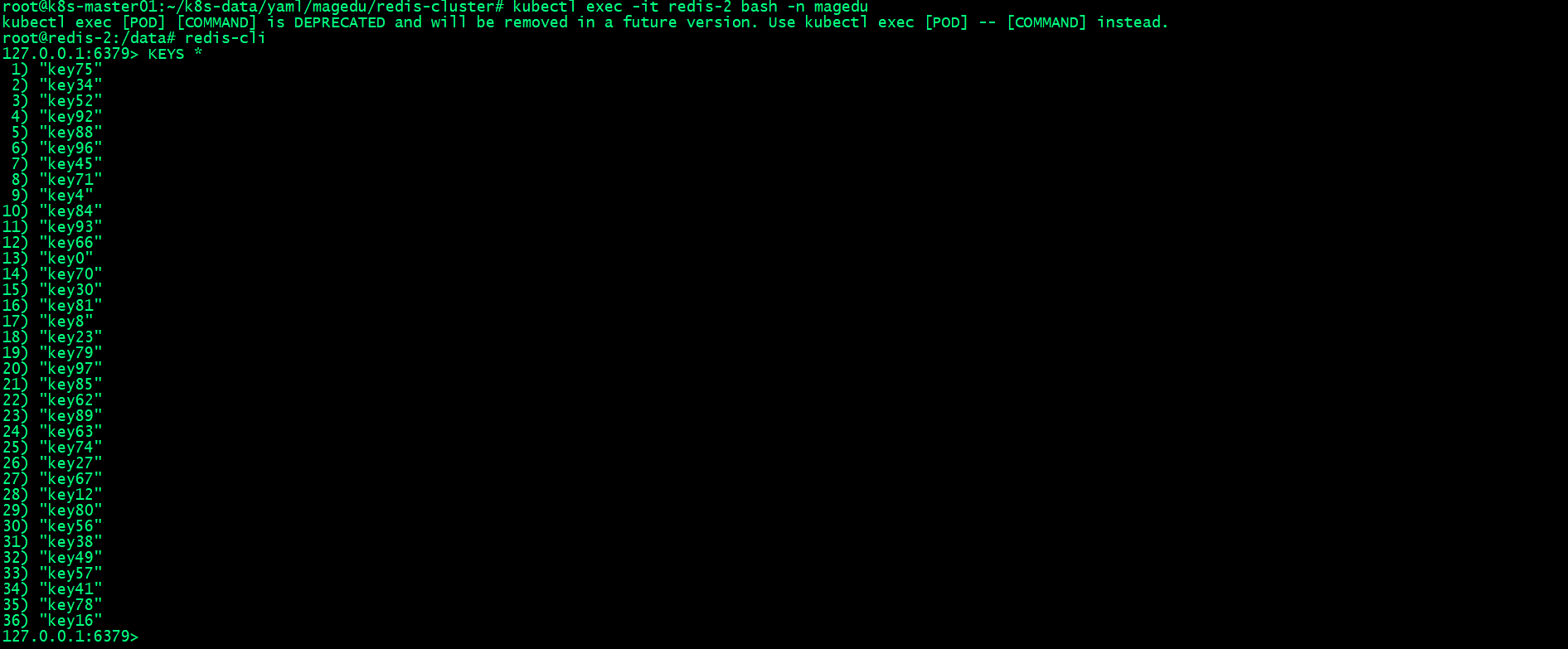

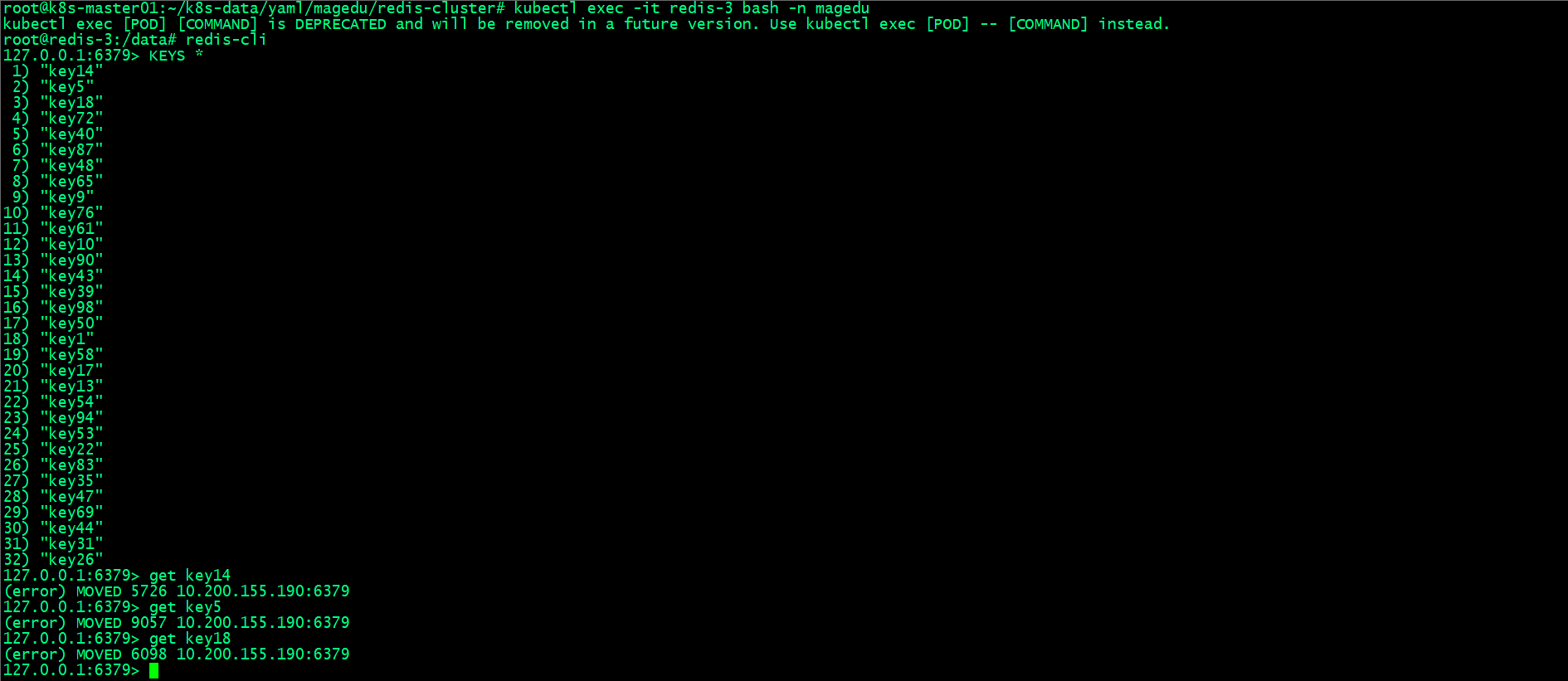

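2.5.4.1 Connect to Redis Cluster manually to read and write data

When we connect manually to a master node of the cluster, there is one catch: the slot obtained from CRC16(key) mod 16384 may not belong to the current node. In that case Redis replies with a MOVED redirection that tells us which node the key should be written to. The screenshots above show that Redis Cluster reads and writes data normally.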
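A cluster-aware client handles that redirection by parsing the error and retrying on the node it names. The sketch below shows only the parsing step, assuming the `MOVED <slot> <host>:<port>` reply format; the function name and the address in the usage note are made up for illustration.

```python
def parse_moved(error_message):
    """Parse 'MOVED <slot> <host>:<port>' into (slot, host, port), else None."""
    parts = error_message.split()
    if len(parts) != 3 or parts[0] != "MOVED":
        return None
    host, _, port = parts[2].rpartition(":")
    return int(parts[1]), host, int(port)
```

For instance, `parse_moved("MOVED 12182 10.0.0.7:6379")` returns the slot and the address of the node to retry against. Running `redis-cli` with the `-c` option makes it follow these redirections automatically.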

2.5.4.2 Use a Python script to connect to Redis Cluster and read and write data

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# cat redis-client-test.py

#!/usr/bin/env python
#coding:utf-8
#Author:Zhang ShiJie
#python 2.7/3.8
#pip install redis-py-cluster

import sys,time
from rediscluster import RedisCluster

def init_redis():
    # NodePort addresses of the k8s nodes used as cluster entry points
    startup_nodes = [
        {'host': '192.168.0.34', 'port': 36379},
        {'host': '192.168.0.35', 'port': 36379},
        {'host': '192.168.0.36', 'port': 36379},
        {'host': '192.168.0.34', 'port': 36379},
        {'host': '192.168.0.35', 'port': 36379},
        {'host': '192.168.0.36', 'port': 36379},
    ]
    try:
        conn = RedisCluster(startup_nodes=startup_nodes,
                            # set the password here if the cluster requires one
                            decode_responses=True, password='')
        print('Connected successfully', conn)
        #conn.set("key-cluster","value-cluster")
        # Write 100 keys and read each one back
        for i in range(100):
            conn.set("key%s" % i, "value%s" % i)
            time.sleep(0.1)
            data = conn.get("key%s" % i)
            print(data)
        #return conn
    except Exception as e:
        print("connect error ", str(e))
        sys.exit(1)

init_redis()

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

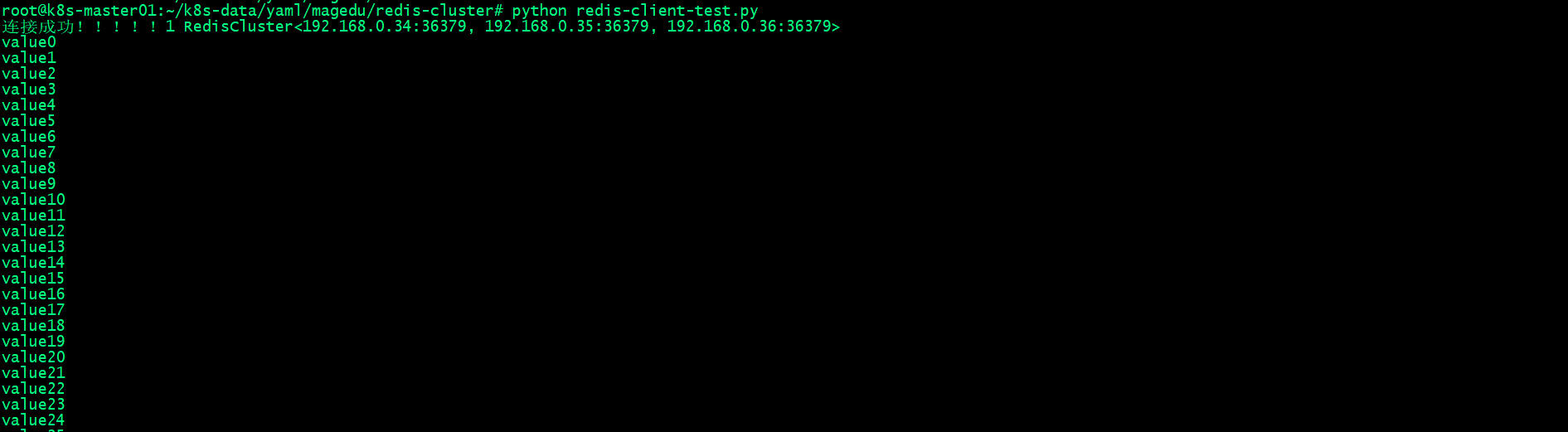

Run the script to write data to Redis Cluster

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# python redis-client-test.py

Traceback (most recent call last):

File "/root/k8s-data/yaml/magedu/redis-cluster/redis-client-test.py", line 8, in <module>

from rediscluster import RedisCluster

ModuleNotFoundError: No module named 'rediscluster'

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

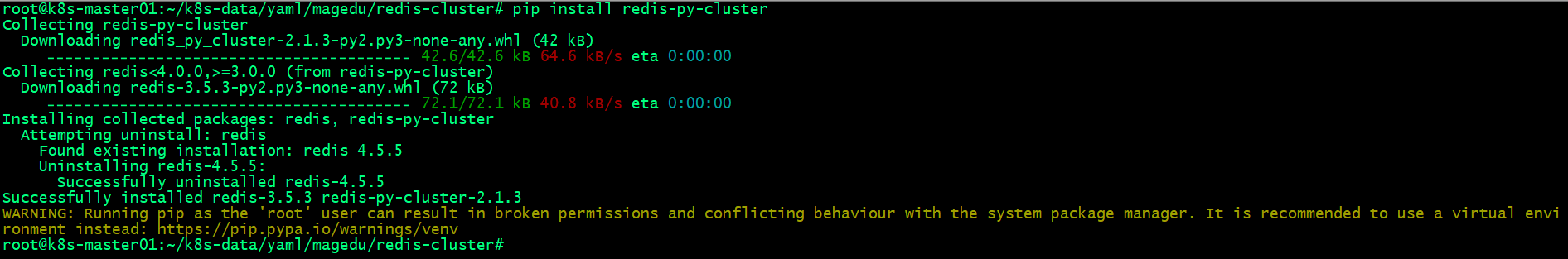

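The error says that the rediscluster module was not found; installing the redis-py-cluster module with pip fixes it.

Install the redis-py-cluster module

Run the script to read and write data through Redis Cluster

Connect to the Redis pods and verify that the data was written

The screenshots above show that each of the three Redis Cluster master pods holds only part of the keys, not all of them, which confirms that the Python script wrote the data into the cluster.

Check whether data can be read from a slave node

The screenshots above show that, by default, a slave node does not serve reads.

Read the data from the slave's master node

This shows that, by default, only the masters in Redis Cluster serve reads and writes. A slave replicates its master's data and redirects client commands to the master; it serves reads only after the client issues READONLY on that connection.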

2.6 Verifying Redis Cluster high availability

2.6.1 Push the redis:4.0.14 image from a k8s node to the local Harbor

- Retag the image

root@k8s-node01:~# nerdctl tag redis:4.0.14 harbor.ik8s.cc/redis-cluster/redis:4.0.14

- Push the Redis image to the local Harbor

root@k8s-node01:~# nerdctl push harbor.ik8s.cc/redis-cluster/redis:4.0.14

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.list.v2+json, sha256:1ae9e0f790001af4b9f83a2b3d79c593c6f3e9a881b754a99527536259fb6625)

WARN[0000] skipping verifying HTTPS certs for "harbor.ik8s.cc"

index-sha256:1ae9e0f790001af4b9f83a2b3d79c593c6f3e9a881b754a99527536259fb6625: done |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:5bd4fe08813b057df2ae55003a75c39d80a4aea9f1a0fbc0fbd7024edf555786: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:191c4017dcdd3370f871a4c6e7e1d55c7d9abed2bebf3005fb3e7d12161262b8: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.4 s total: 8.5 Ki (6.1 KiB/s)

root@k8s-node01:~#

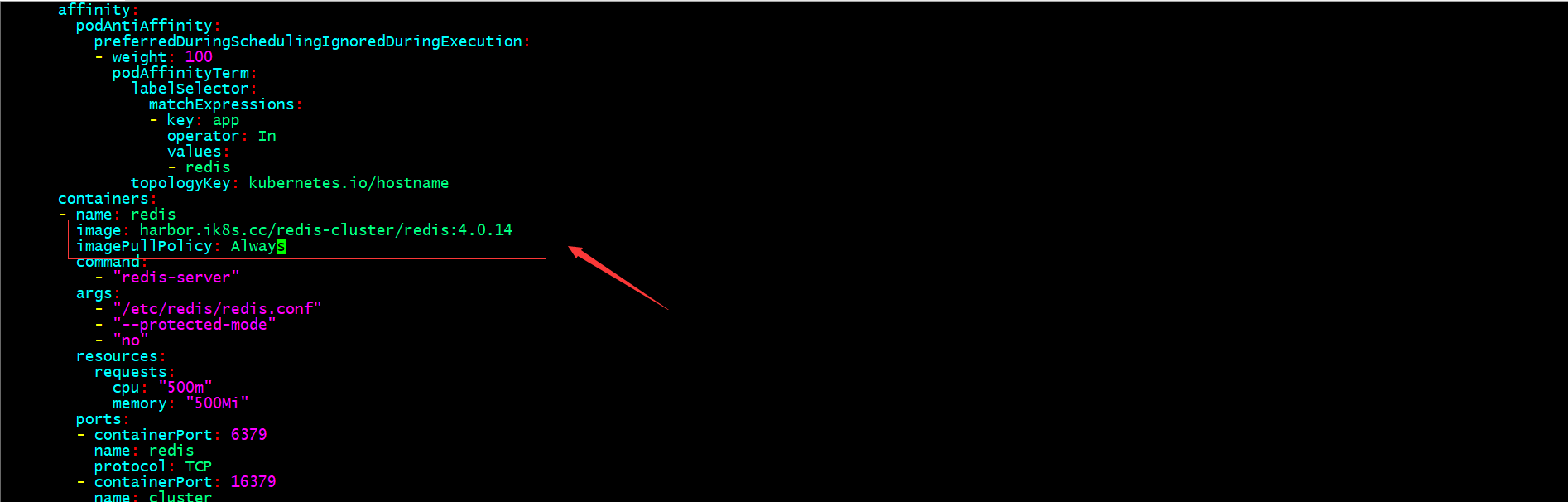

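2.6.2 Change the image and image pull policy in the Redis Cluster manifest

Switching to the local Harbor image and changing the pull policy makes it easier to test Redis Cluster high availability.

2.6.3 Re-apply the Redis Cluster manifest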

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster# kubectl apply -f redis.yaml

service/redis unchanged

service/redis-access unchanged

statefulset.apps/redis configured

root@k8s-master01:~/k8s-data/yaml/magedu/redis-cluster#

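This is effectively an update of the Redis Cluster pods. The cluster relationships are kept, because the cluster configuration is stored on the remote storage.

- Verify that all pods are Running

- Verify the cluster state and relationships

Unlike before, redis-0 is now a slave and redis-3 is a master. The screenshots above also show that after the Redis Cluster pods are rebuilt on k8s (and their IP addresses change), the cluster relationships stay the same: within each master/slave pair, the roles only ever switch between the same two pods, which is exactly what high availability means here.

2.6.4 Stop the local Harbor, delete a Redis master pod, and check whether its slave is promoted to master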

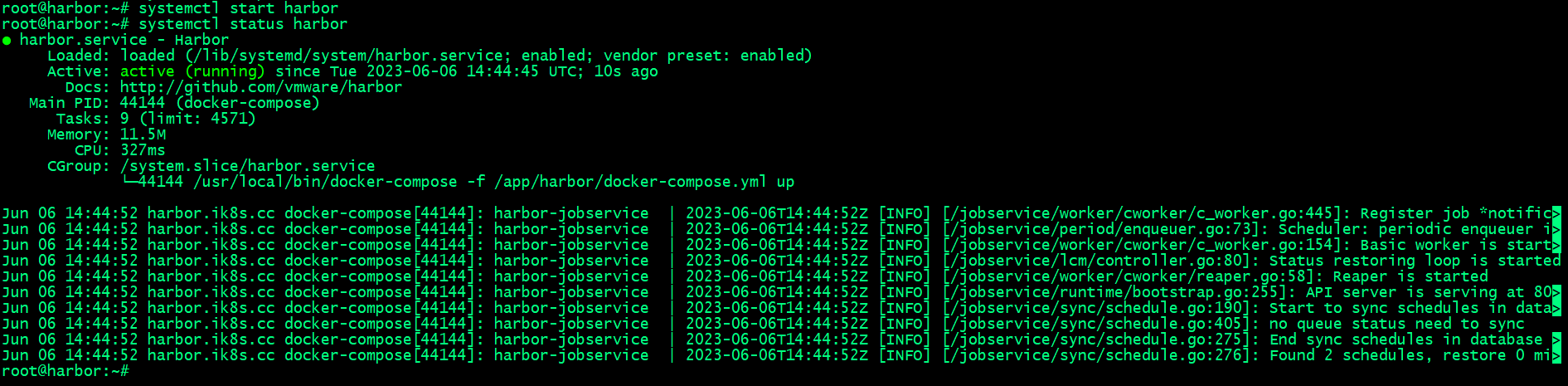

- Stop the Harbor service

root@harbor:~# systemctl stop harbor

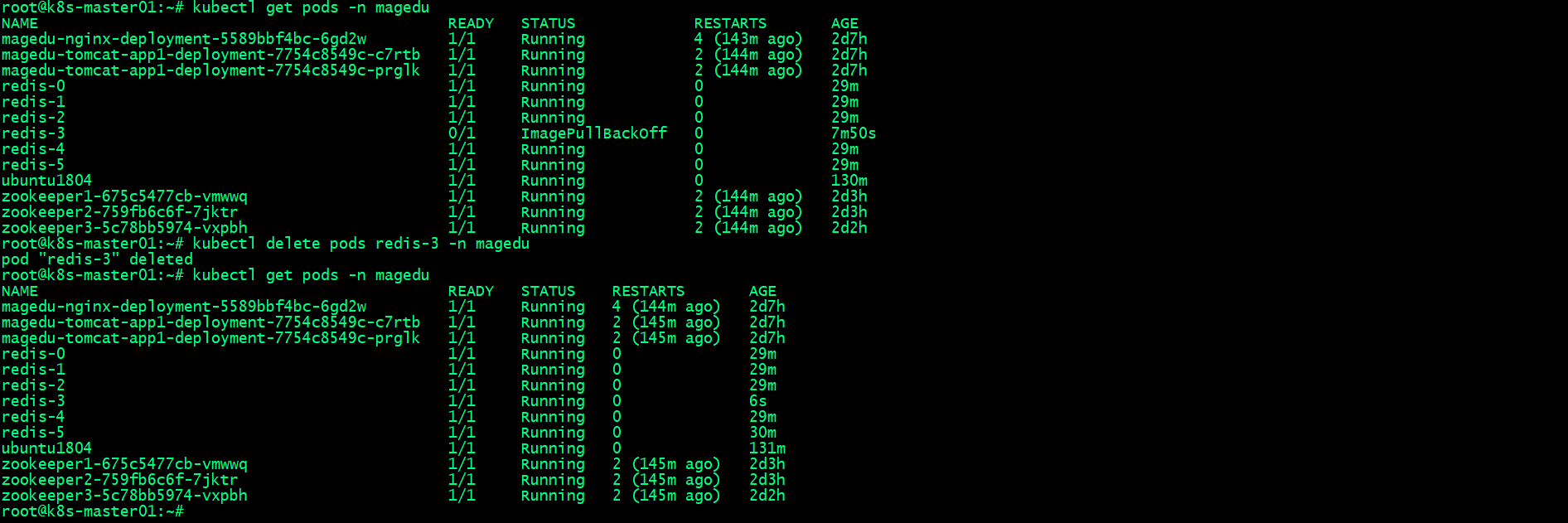

- 删除redis-3,看看redis-0是否会提升为master?

可用看到我们把redis-3删除(相当于master宕机)以后,对应slave提升为master了;

2.6.5、恢复harbor服务,看看对应redis-3恢复会议后是否还是redis-0的slave呢?

- 恢复harbor服务

- 验证redis-3pod是否恢复?

再次删除redis-3以后,对应pod正常被重建,并处于running状态;

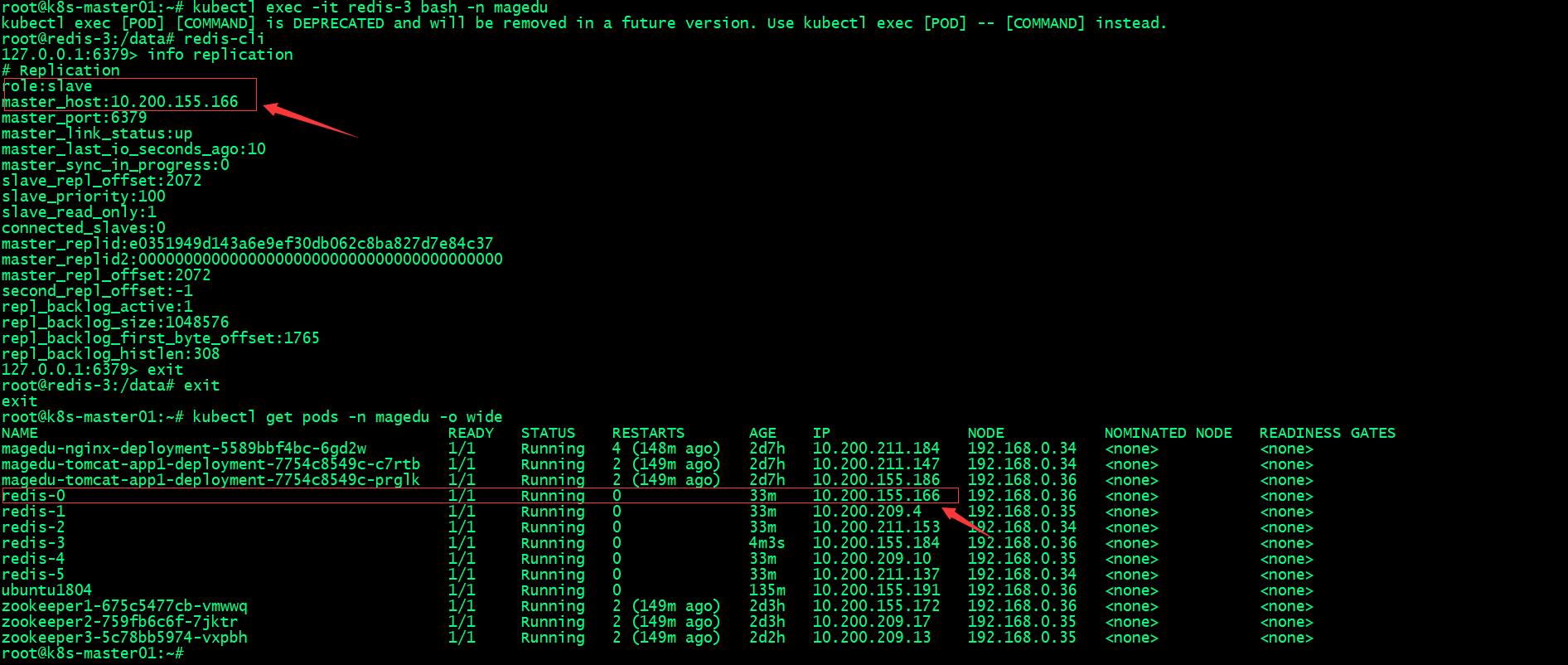

- 验证redis-3的主从关系、

可以看到redis-3恢复以后,对应自动加入集群成为redis-0的slave;
