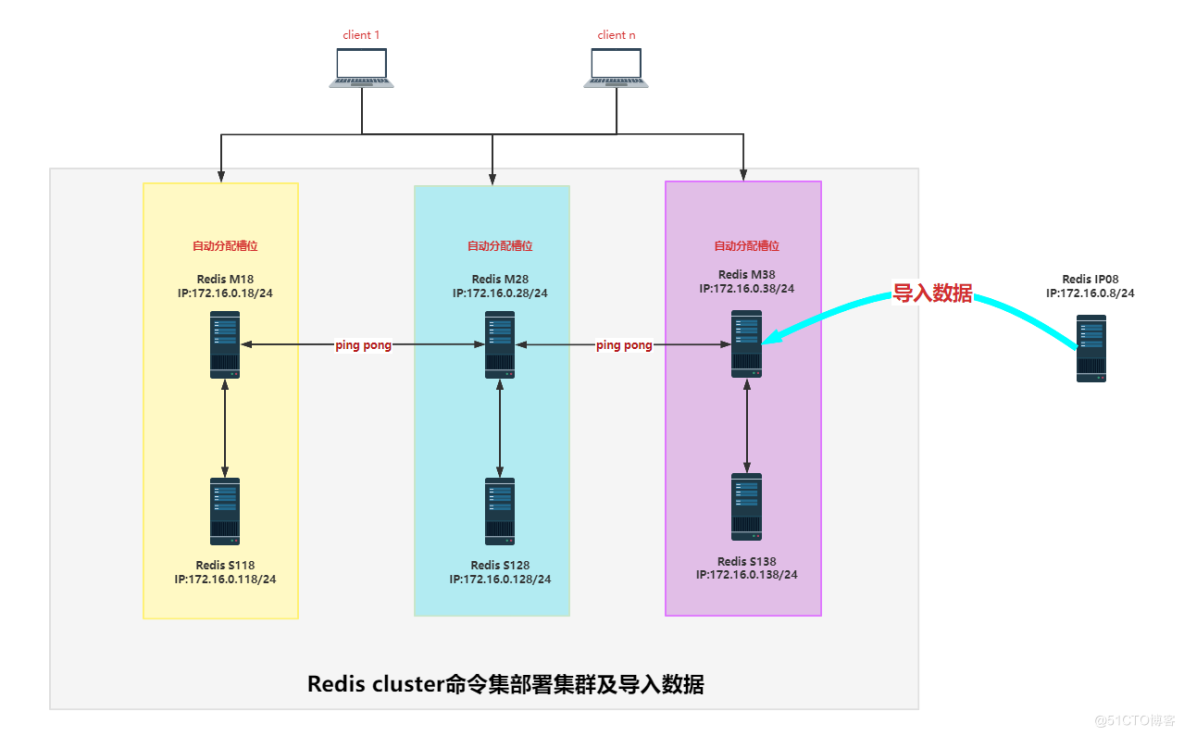

Deploying a Redis Cluster with the cluster Commands and Importing Data

source link: https://blog.51cto.com/shone/5283672

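An earlier article walked through deploying a cluster with native Redis commands. Redis also ships a set of cluster commands (redis-cli --cluster) that simplify cluster deployment and management; this article uses them to build a cluster in practice.

Official documentation: https://redis.io/docs/manual/scaling/

The available cluster subcommands are listed in the --cluster help, invoked as: redis-cli --cluster help

1. Preparing the environment for the Redis cluster

Six hosts are needed for the Redis cluster, plus one standalone Redis host, to complete this exercise.

Time synchronization: make sure the NTP or Chrony service is running properly.

Disable SELinux and the firewall (or open the required ports, here 6379 and the cluster bus port 16379).

Every Redis node uses the same Redis version, the same password, and the same hardware configuration.

None of the Redis servers may contain any data.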

2. Redis cluster 主机基本配置

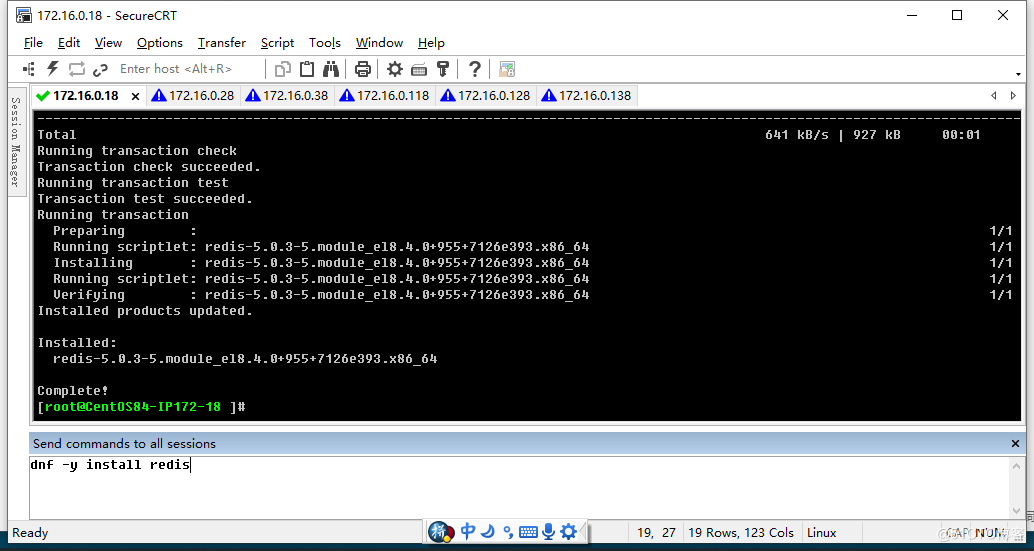

所有六台主机都执行以下配置,通过SSH终端软件一次性向六台主机发送命令来完成。

[root@CentOS84 ]#dnf -y install redis

## 每个节点同时修改redis配置,开启cluster功能参数

[root@CentOS84 ]#ll /etc/redis.conf

-rw-r----- 1 redis root 62189 Oct 20 2021 /etc/redis.conf

[root@CentOS84-IP172-18 ]#sed -i.bak -e 's/bind 127.0.0.1/bind 0.0.0.0/' -e '/masterauth/a masterauth 123456' -e '/# requirepass/a requirepass 123456' -e '/# cluster-enabled yes/a cluster-enabled yes' -e '/# cluster-config-file nodes-6379.conf/a cluster-config-file nodes-6379.conf' -e '/cluster-require-full-coverage yes/c cluster-require-full-coverage no' /etc/redis.conf

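## Enable the service at boot and start it now

[root@CentOS84 ]#systemctl enable --now redis

[root@CentOS84 ]#systemctl restart redis

## Verify the Redis service state (6379 is the client port, 16379 the cluster bus port)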

[root@CentOS84 ]#ss -lnt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 511 0.0.0.0:16379 0.0.0.0:*

LISTEN 0 511 0.0.0.0:6379 0.0.0.0:*

## The process is now marked [cluster]

[root@CentOS84-IP172-18 ]#ps -ef|grep redis

redis 206879 1 0 16:27 ? 00:00:00 /usr/bin/redis-server 0.0.0.0:6379 [cluster]

root 206929 105260 0 16:29 pts/0 00:00:00 grep --color=auto redis

[root@CentOS84-IP172-18 ]#
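To double-check that the sed edits took effect on a node before moving on, the active directives can be compared with the values this walkthrough expects. The following is a minimal sketch and not part of the original setup: the function names and the REQUIRED table are illustrative assumptions, and the configuration text is assumed to have been read from /etc/redis.conf beforehand.

```python
# Directives this walkthrough expects after the sed edits (assumed checklist).
REQUIRED = {
    "bind": "0.0.0.0",
    "cluster-enabled": "yes",
    "cluster-config-file": "nodes-6379.conf",
    "cluster-require-full-coverage": "no",
}


def parse_redis_conf(text):
    """Return the active (non-comment) directives of a redis.conf as a dict."""
    directives = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and commented-out defaults
        name, _, value = line.partition(" ")
        directives[name.lower()] = value.strip()  # a later duplicate wins
    return directives


def missing_settings(text):
    """Return {directive: (expected, actual)} for every setting that differs."""
    conf = parse_redis_conf(text)
    return {
        name: (expected, conf.get(name))
        for name, expected in REQUIRED.items()
        if conf.get(name) != expected
    }
```

On a correctly edited node, missing_settings(open("/etc/redis.conf").read()) should return an empty dict; anything else names the directive that still needs attention.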

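3. Creating and verifying the cluster

Task: use a single redis-cli --cluster create command with --cluster-replicas 1 to build a six-node cluster of three masters and three slaves, then verify the result with further commands.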

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning --cluster create 172.16.0.18:6379 172.16.0.28:6379 172.16.0.38:6379 172.16.0.118:6379 172.16.0.128:6379 172.16.0.138:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.16.0.118:6379 to 172.16.0.18:6379

Adding replica 172.16.0.128:6379 to 172.16.0.28:6379

Adding replica 172.16.0.138:6379 to 172.16.0.38:6379

M: c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379 # M marks a master

slots:[0-5460] (5461 slots) master # first and last slot of the range

M: dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379

slots:[5461-10922] (5462 slots) master

M: aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379

slots:[10923-16383] (5461 slots) master

S: fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379 # S marks a slave

replicates c42d1733b3a317becddc484c5a981a5b0ae15566

S: f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379

replicates dabcadd4490387830f60d01e361931d010b6c1f2

S: 86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379

replicates aff40cdf12744b6444f79d7237360d70e70b4b97

Can I set the above configuration? (type 'yes' to accept): yes # type yes to create the cluster automatically

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.....

>>> Performing Cluster Check (using node 172.16.0.18:6379)

M: c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379

slots: (0 slots) slave

replicates c42d1733b3a317becddc484c5a981a5b0ae15566

S: 86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379

slots: (0 slots) slave

replicates aff40cdf12744b6444f79d7237360d70e70b4b97

S: f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379

slots: (0 slots) slave

replicates dabcadd4490387830f60d01e361931d010b6c1f2

M: aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration. # all nodes agree on the slot assignment

>>> Check for open slots... # check for open slots

>>> Check slots coverage... # check slot coverage

[OK] All 16384 slots covered. # all 16384 slots are assigned

[root@CentOS84-IP172-18 ]#
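The slot ranges printed above (0-5460, 5461-10922, 10923-16383) come from dividing the 16384 slots as evenly as possible among the masters. The sketch below reproduces those ranges. It assumes a running fractional cursor with round-half-up and gives the last master whatever remains; the exact rounding used inside redis-cli is an assumption here, and allocate_slots is a hypothetical name.

```python
import math

TOTAL_SLOTS = 16384


def allocate_slots(master_count):
    """Split the hash slots into contiguous (first, last) ranges, one per master."""
    per_master = TOTAL_SLOTS / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for index in range(master_count):
        # Round half up (assumed rule) so the fractional share is spread out.
        last = math.floor(cursor + per_master - 1 + 0.5)
        # The final master always absorbs the remainder up to slot 16383.
        if index == master_count - 1 or last > TOTAL_SLOTS - 1:
            last = TOTAL_SLOTS - 1
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


if __name__ == "__main__":
    for number, (first, last) in enumerate(allocate_slots(3)):
        print(f"Master[{number}] -> Slots {first} - {last}")
```

With three masters the middle node ends up with 5462 slots and the other two with 5461, which matches the create output.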

################################################################################

#### A single command from the cluster tooling bundled with Redis created the three-master, three-slave cluster

replica 172.16.0.118:6379 to 172.16.0.18:6379

replica 172.16.0.128:6379 to 172.16.0.28:6379

replica 172.16.0.138:6379 to 172.16.0.38:6379

#### Verify one master/slave pair

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.18 -a 123456 --no-auth-warning info replication

# Replication

role:master

connected_slaves:1

slave0:ip=172.16.0.118,port=6379,state=online,offset=672,lag=1

master_replid:52a9386e8831ceb3b14bf68378b060a7336b9c13

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:672

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:672

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.118 -a 123456 --no-auth-warning info replication

# Replication

role:slave

master_host:172.16.0.18

master_port:6379

master_link_status:up

master_last_io_seconds_ago:1

master_sync_in_progress:0

slave_repl_offset:686

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:52a9386e8831ceb3b14bf68378b060a7336b9c13

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:686

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:686

[root@CentOS84-IP172-18 ]#
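The two info replication outputs above can also be compared programmatically rather than by eye. This is a rough sketch under the assumption that both outputs have already been captured as text; parse_info and is_healthy_pair are hypothetical helper names, and the check only uses fields that appear in the output shown above.

```python
def parse_info(text):
    """Turn 'key:value' lines of an INFO section into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip the '# Replication' section header
        key, _, value = line.partition(":")
        info[key] = value
    return info


def is_healthy_pair(master, replica, master_host):
    """True when the replica reports an up link to the expected master."""
    return (
        master.get("role") == "master"
        and replica.get("role") == "slave"
        and replica.get("master_host") == master_host
        and replica.get("master_link_status") == "up"
        # Both sides share one replication ID while they are in sync.
        and replica.get("master_replid") == master.get("master_replid")
    )
```

The offsets are deliberately not compared: the two outputs are taken a moment apart, so the slave's offset (686) can legitimately be ahead of the master's earlier reading (672).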

################################################################################

#### Verify the cluster state

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:575

cluster_stats_messages_pong_sent:583

cluster_stats_messages_sent:1158

cluster_stats_messages_ping_received:578

cluster_stats_messages_pong_received:575

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:1158

## View the cluster state through any node

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning --cluster info 172.16.0.18:6379

172.16.0.18:6379 (c42d1733...) -> 0 keys | 5461 slots | 1 slaves.

172.16.0.28:6379 (dabcadd4...) -> 0 keys | 5462 slots | 1 slaves.

172.16.0.38:6379 (aff40cdf...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

[root@CentOS84-IP172-18 ]#
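For monitoring, the cluster info fields shown above can be reduced to a short list of problems. The sketch below is only an illustration: the function name and the particular set of checks are assumptions, and it expects the raw cluster info text as input.

```python
def cluster_health_problems(cluster_info_text, expected_nodes):
    """Return a list of human-readable problems; an empty list means healthy."""
    fields = {}
    for line in cluster_info_text.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep:
            fields[key] = value

    problems = []
    if fields.get("cluster_state") != "ok":
        problems.append("cluster_state is %r" % fields.get("cluster_state"))
    if int(fields.get("cluster_slots_assigned", "0")) != 16384:
        problems.append("not all 16384 slots are assigned")
    # pfail = suspected failure, fail = confirmed failure
    for name in ("cluster_slots_pfail", "cluster_slots_fail"):
        if int(fields.get(name, "0")) > 0:
            problems.append("%s is %s" % (name, fields[name]))
    if int(fields.get("cluster_known_nodes", "0")) != expected_nodes:
        problems.append("expected %d nodes, cluster knows %s"
                        % (expected_nodes, fields.get("cluster_known_nodes")))
    return problems
```

Fed the output above with expected_nodes=6, the function should return an empty list.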

################################################################################

#### View how the cluster nodes relate to each other

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning cluster nodes

dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379@16379 master - 0 1651826734642 2 connected 5461-10922

c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379@16379 myself,master - 0 1651826731000 1 connected 0-5460

fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379@16379 slave c42d1733b3a317becddc484c5a981a5b0ae15566 0 1651826732639 4 connected

86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379@16379 slave aff40cdf12744b6444f79d7237360d70e70b4b97 0 1651826733000 6 connected

f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379@16379 slave dabcadd4490387830f60d01e361931d010b6c1f2 0 1651826732000 5 connected

aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379@16379 master - 0 1651826734000 3 connected 10923-16383

[root@CentOS84-IP172-18 ]#
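Each cluster nodes line carries the node ID, address, flags, and (for a slave) the ID of its master, so the master-to-slave mapping can be derived from the text. A small sketch follows; it relies only on the column layout visible in the output above, and the helper names are hypothetical.

```python
def parse_cluster_nodes(text):
    """Parse 'cluster nodes' output into a list of dicts."""
    nodes = []
    for line in text.strip().splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # not a node line
        nodes.append({
            "id": parts[0],
            "addr": parts[1].split("@")[0],   # drop the @cluster-bus-port suffix
            "flags": parts[2].split(","),     # e.g. ['myself', 'master']
            "master_id": None if parts[3] == "-" else parts[3],
            "slots": parts[8:],               # empty for slaves
        })
    return nodes


def replicas_by_master(nodes):
    """Map each master address to the addresses of its slaves."""
    addr_of = {node["id"]: node["addr"] for node in nodes}
    mapping = {node["addr"]: [] for node in nodes if "master" in node["flags"]}
    for node in nodes:
        if node["master_id"] is not None:
            master_addr = addr_of.get(node["master_id"], node["master_id"])
            mapping.setdefault(master_addr, []).append(node["addr"])
    return mapping
```

Applied to the six lines above, the mapping should pair 18 with 118, 28 with 128, and 38 with 138, matching the create output.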

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning --cluster check 172.16.0.18:6379

172.16.0.18:6379 (c42d1733...) -> 0 keys | 5461 slots | 1 slaves.

172.16.0.28:6379 (dabcadd4...) -> 0 keys | 5462 slots | 1 slaves.

172.16.0.38:6379 (aff40cdf...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 172.16.0.18:6379)

M: c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379

slots: (0 slots) slave

replicates c42d1733b3a317becddc484c5a981a5b0ae15566

S: 86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379

slots: (0 slots) slave

replicates aff40cdf12744b6444f79d7237360d70e70b4b97

S: f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379

slots: (0 slots) slave

replicates dabcadd4490387830f60d01e361931d010b6c1f2

M: aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@CentOS84-IP172-18 ]#

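4. Verifying cluster writes with manual commands

################################################################################

# The cluster hashes each key to a slot, and the key must be written on the node that owns that slot

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.18 --no-auth-warning set key1 shone001

(error) MOVED 9189 172.16.0.28:6379

# The slot is not served by this node, so the write is refused

# Writing on the node that owns the slot succeeds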

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.28 --no-auth-warning set key1 shone001

OK

[root@CentOS84-IP172-18 ]#

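# When any key is queried, the cluster computes which node's slot range should hold it

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.18 --no-auth-warning get key1

(error) MOVED 9189 172.16.0.28:6379 # reports that the slot lives on IP28

# The value can only be read from the node that owns the slot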

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.28 --no-auth-warning get key1

"shone001"

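# On the master's own slave node, keys "*" lists the key, but get is redirected to the master (a slave serves reads only after the client sends READONLY)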

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.128 --no-auth-warning keys "*"

1) "key1"

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.128 --no-auth-warning get key1

(error) MOVED 9189 172.16.0.28:6379

[root@CentOS84-IP172-18 ]#
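A cluster-aware client handles these replies by parsing the redirection and retrying on the node it names. The parsing step can be sketched as below; Redirect and parse_redirect are hypothetical names, and only the "MOVED slot host:port" layout seen above (plus the analogous ASK reply used during slot migration) is assumed.

```python
from typing import NamedTuple, Optional


class Redirect(NamedTuple):
    kind: str   # "MOVED" or "ASK"
    slot: int
    host: str
    port: int


def parse_redirect(error_message: str) -> Optional[Redirect]:
    """Parse 'MOVED 9189 172.16.0.28:6379'; return None for other errors."""
    parts = error_message.split()
    if len(parts) != 3 or parts[0] not in ("MOVED", "ASK"):
        return None
    host, _, port = parts[2].rpartition(":")
    return Redirect(parts[0], int(parts[1]), host, int(port))


if __name__ == "__main__":
    print(parse_redirect("MOVED 9189 172.16.0.28:6379"))
```

A client would reconnect to redirect.host and redirect.port and resend the command there.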

################################################################################

#### Slot values that the cluster computes for shone and key1

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.18 --no-auth-warning cluster keyslot shone

(integer) 3129

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -h 172.16.0.18 --no-auth-warning cluster keyslot key1

(integer) 9189

[root@CentOS84-IP172-18 ]#
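The slot numbers above are the CRC16 of the key modulo 16384. A minimal sketch of the same calculation in Python, using binascii.crc_hqx from the standard library (CRC-CCITT, polynomial 0x1021, initial value 0); it deliberately ignores hash tags such as {user}, so it only matches cluster keyslot for keys without braces.

```python
from binascii import crc_hqx

HASH_SLOTS = 16384


def key_slot(key: str) -> int:
    """Hash slot of a key without a {hash tag}: CRC16(key) mod 16384."""
    return crc_hqx(key.encode(), 0) % HASH_SLOTS


if __name__ == "__main__":
    for name in ("shone", "key1"):
        print(name, key_slot(name))
```

For the two keys used above this should agree with the cluster keyslot replies.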

################################################################################

# Connect in cluster mode with the -c option, so that redis-cli follows redirections automatically

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -c --no-auth-warning

127.0.0.1:6379> cluster keyslot shone

(integer) 3129

127.0.0.1:6379> set shone LTD_XJX

OK

127.0.0.1:6379> get shone

"LTD_XJX"

127.0.0.1:6379> exit

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 -c --no-auth-warning get shone

"LTD_XJX"

[root@CentOS84-IP172-18 ]#

5. Writing to the Redis cluster from a Python program

Project page: https://github.com/Grokzen/redis-py-cluster

################################################################################

# Install the redis package, which provides redis-cli

[root@CentOS84-IP172-08 ]#yum install -y redis

# Install Python 3 and the required dependency packages

[root@CentOS84-IP172-08 ]#dnf -y install python3

[root@CentOS84-IP172-08 ]#pip3 install redis-py-cluster

......................

Installing collected packages: redis, redis-py-cluster

Successfully installed redis-3.5.3 redis-py-cluster-2.1.3

[root@CentOS84-IP172-08 ]#

################################################################################

## Prepare the program file

[root@CentOS84-IP172-08 ]#vim redis_cluster_test.py

#!/usr/bin/env python3
# Write 10,000 test keys into the cluster and read each one back.
import sys

from rediscluster import RedisCluster

if __name__ == '__main__':
    # Any reachable node is enough for discovery; listing all six adds redundancy.
    startup_nodes = [
        {"host": "172.16.0.18", "port": 6379},
        {"host": "172.16.0.28", "port": 6379},
        {"host": "172.16.0.38", "port": 6379},
        {"host": "172.16.0.118", "port": 6379},
        {"host": "172.16.0.128", "port": 6379},
        {"host": "172.16.0.138", "port": 6379},
    ]

    try:
        redis_conn = RedisCluster(startup_nodes=startup_nodes, password='123456', decode_responses=True)
    except Exception as e:
        # Without a connection the loop below cannot run, so stop here.
        print(e)
        sys.exit(1)

    for i in range(0, 10000):
        redis_conn.set('key' + str(i), 'value' + str(i))
        print('key' + str(i) + ':', redis_conn.get('key' + str(i)))

[root@CentOS84-IP172-08 ]#

## Make the script executable and run it to write 10,000 entries

[root@CentOS84-IP172-08 ]#chmod +x redis_cluster_test.py

[root@CentOS84-IP172-08 ]#./redis_cluster_test.py

...................

key9997: value9997

key9998: value9998

key9999: value9999

[root@CentOS84-IP172-08 ]#

################################################################################

# From IP08, connect to the cluster remotely and verify the data

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.18 -a 123456 --no-auth-warning DBSIZE

(integer) 3332

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.28 -a 123456 --no-auth-warning DBSIZE

(integer) 3340

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.38 -a 123456 --no-auth-warning DBSIZE

(integer) 3329

[root@CentOS84-IP172-08 ]#

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.18 -a 123456 --no-auth-warning

172.16.0.18:6379> dbsize

(integer) 3332

172.16.0.18:6379> get key1

(error) MOVED 9189 172.16.0.28:6379

172.16.0.18:6379> get key2

"value2"

172.16.0.18:6379> get key3

"value3"

172.16.0.18:6379>

172.16.0.18:6379> key *

(error) ERR unknown command `key`, with args beginning with: `*`,

172.16.0.18:6379> keys *

1) "key9835"

2) "key1414"

3) "key6091"

4) "key4110"

5) "key8646"

6) "key6200"

................

3327) "key7665"

3328) "key8669"

3329) "key9688"

3330) "key3699"

3331) "key4625"

3332) "key2998"

172.16.0.18:6379>

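6. Simulating a master failure and observing the slave take over

To simulate a master failure, the Redis service on IP38 is shut down; its slave, IP138, is then promoted to the new master automatically.

# Shut down node 172.16.0.38 to simulate its failure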

[root@CentOS84-IP172-38 ]#redis-cli -a 123456 --no-auth-warning

127.0.0.1:6379> shutdown

not connected> exit

[root@CentOS84-IP172-38 ]#ss -tln

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

[root@CentOS84-IP172-38 ]#

# With the node down, failover needs a few seconds to complete

[root@CentOS84-IP172-38 ]#tail -f /var/log/redis/redis.log

..................

139129:M 06 May 2022 17:40:04.627 # User requested shutdown...

139129:M 06 May 2022 17:40:04.627 * Saving the final RDB snapshot before exiting.

139129:M 06 May 2022 17:40:04.632 * DB saved on disk

139129:M 06 May 2022 17:40:04.632 * Removing the pid file.

139129:M 06 May 2022 17:40:04.632 # Redis is now ready to exit, bye bye...

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning --cluster info 172.16.0.18:6379

Could not connect to Redis at 172.16.0.38:6379: Connection refused

172.16.0.18:6379 (c42d1733...) -> 3332 keys | 5461 slots | 1 slaves.

172.16.0.28:6379 (dabcadd4...) -> 3340 keys | 5462 slots | 1 slaves.

172.16.0.138:6379 (86af91ad...) -> 3329 keys | 5461 slots | 0 slaves. # IP138 is now a master

[OK] 10001 keys in 3 masters.

0.61 keys per slot on average.

[root@CentOS84-IP172-18 ]#

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning --cluster check 172.16.0.18:6379

Could not connect to Redis at 172.16.0.38:6379: Connection refused

172.16.0.18:6379 (c42d1733...) -> 3332 keys | 5461 slots | 1 slaves.

172.16.0.28:6379 (dabcadd4...) -> 3340 keys | 5462 slots | 1 slaves.

172.16.0.138:6379 (86af91ad...) -> 3329 keys | 5461 slots | 0 slaves.

[OK] 10001 keys in 3 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 172.16.0.18:6379)

M: c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379

slots: (0 slots) slave

replicates c42d1733b3a317becddc484c5a981a5b0ae15566

M: 86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379

slots:[10923-16383] (5461 slots) master

S: f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379

slots: (0 slots) slave

replicates dabcadd4490387830f60d01e361931d010b6c1f2

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

[root@CentOS84-IP172-18 ]#

#### The former slave IP138 has been promoted to master

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning -h 172.16.0.138 info replication

# Replication

role:master

connected_slaves:0

master_replid:6aa09e5dfcfbcdc88a885e4a5b595e698e348bd4

master_replid2:eff658e190973e2ba983fd519bff34006dfe1dab

master_repl_offset:141180

second_repl_offset:141181

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:141180

[root@CentOS84-IP172-18 ]#

################################################################################

# Bring the failed node 172.16.0.38 back

[root@CentOS84-IP172-38 ]#systemctl start redis

# The automatically generated cluster configuration file shows that 172.16.0.38 has become a slave

[root@CentOS84-IP172-38 ]#cat /var/lib/redis/nodes-6379.conf

f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379@16379 slave dabcadd4490387830f60d01e361931d010b6c1f2 0 1651830722627 5 connected

dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379@16379 master - 0 1651830722627 2 connected 5461-10922

86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379@16379 master - 0 1651830722627 7 connected 10923-16383

aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379@16379 myself,slave 86af91ad4a9ee614f240232627700bc7ae17885d 0 1651830722615 3 connected

c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379@16379 master - 1651830722626 1651830722615 1 connected 0-5460

fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379@16379 slave c42d1733b3a317becddc484c5a981a5b0ae15566 1651830722626 1651830722615 4 connected

vars currentEpoch 7 lastVoteEpoch 0

[root@CentOS84-IP172-38 ]#
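Comparing a node listing taken before the failure with one taken after it makes the role swap explicit. The sketch below does that on plain text, assuming only the column layout seen in the cluster nodes output and in nodes-6379.conf; roles and role_changes are hypothetical helper names.

```python
def roles(nodes_text):
    """Map 'host:port' to 'master' or 'slave' from cluster-nodes style text."""
    result = {}
    for line in nodes_text.strip().splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # skips e.g. the 'vars currentEpoch ...' trailer line
        addr = parts[1].split("@")[0]
        flags = parts[2].split(",")
        if "master" in flags:
            result[addr] = "master"
        elif "slave" in flags:
            result[addr] = "slave"
    return result


def role_changes(before_text, after_text):
    """Return {addr: (old_role, new_role)} for nodes whose role changed."""
    old, new = roles(before_text), roles(after_text)
    return {
        addr: (old[addr], new[addr])
        for addr in old
        if addr in new and old[addr] != new[addr]
    }
```

Given the listing from section 3 as before_text and the file above as after_text, the result should contain exactly two entries: 172.16.0.38 going from master to slave and 172.16.0.138 going from slave to master.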

## After recovery, IP38 automatically becomes a slave of IP138

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning -h 172.16.0.138 info replication

# Replication

role:master

connected_slaves:1

slave0:ip=172.16.0.38,port=6379,state=online,offset=141544,lag=1

master_replid:6aa09e5dfcfbcdc88a885e4a5b595e698e348bd4

master_replid2:eff658e190973e2ba983fd519bff34006dfe1dab

master_repl_offset:141544

second_repl_offset:141181

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:141544

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning -h 172.16.0.38 info replication

# Replication

role:slave

master_host:172.16.0.138

master_port:6379

master_link_status:up

master_last_io_seconds_ago:2

master_sync_in_progress:0

slave_repl_offset:141558

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:6aa09e5dfcfbcdc88a885e4a5b595e698e348bd4

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:141558

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:141181

repl_backlog_histlen:378

[root@CentOS84-IP172-18 ]#

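7. Importing data into the Redis cluster

Cluster maintenance: importing existing Redis data into the cluster.

Redis officially provides a tool for offline migration of data into a cluster, and some companies have developed online migration tools.

Official tool: redis-cli --cluster import

Third-party online migration tools work by emulating a slave node, for example redis-migrate-tool from Vipshop and redis-port from Wandoujia.

Task: after the Redis cluster has been deployed, import the pre-existing data (simulated here with a batch of data on IP08) into the cluster. Because Redis cluster shards keys across nodes, restoring or importing from a traditional AOF file or RDB snapshot cannot accomplish this, so the cluster import command is used instead.

Note: the cluster must not already contain keys with the same names as the data being imported; otherwise the import fails or is interrupted, unless existing keys are overwritten with --cluster-replace as in the command used later in this section.

7.1 Preparing 100 entries on IP08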

[root@CentOS84-IP172-08 ]#dnf install -y redis

[root@CentOS84-IP172-08 ]#systemctl enable --now redis

[root@CentOS84-IP172-08 ]#ss -tln

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 511 127.0.0.1:6379 0.0.0.0:*

[root@CentOS84-IP172-08 ]#vim redis_test.sh

#!/bin/bash
# Write NUM test keys (shone1..shoneNUM) into the local Redis instance.

NUM=100

PASS=

for i in $(seq "$NUM"); do
    redis-cli -h 127.0.0.1 -a "$PASS" --no-auth-warning set "shone${i}" "value${i}"
    echo "shone${i} value${i} written"
done

echo "$NUM keys written to Redis"

[root@CentOS84-IP172-08 ]#

[root@CentOS84-IP172-08 ]#redis-cli --no-auth-warning

127.0.0.1:6379> flushall

OK

127.0.0.1:6379> keys *

(empty list or set)

127.0.0.1:6379> quit

# After clearing Redis, generate 100 new key-value pairs with the script

[root@CentOS84-IP172-08 ]#bash redis_test.sh

OK

shone1 value1 written

................

OK

shone100 value100 written

100 keys written to Redis

# View the key-value pairs

[root@CentOS84-IP172-08 ]#redis-cli --no-auth-warning

127.0.0.1:6379> keys *

1) "shone2"

.................

100) "shone70"

127.0.0.1:6379>

[root@CentOS84-IP172-08 ]#redis-cli -h 127.0.0.1 --no-auth-warning DBSIZE

(integer) 100

[root@CentOS84-IP172-08 ]#redis-cli -h 127.0.0.1 --no-auth-warning get shone1

"value1"

[root@CentOS84-IP172-08 ]#

####################################################################################

####################################################################################

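# Remove the password on the host whose data will be imported. No password was set in this example; if one is set, it can be removed with the command below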

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.8 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

Could not connect to Redis at 172.16.0.8:6379: Connection refused

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.8 -p 6379 --no-auth-warning CONFIG SET requirepass ""

Could not connect to Redis at 172.16.0.8:6379: Connection refused

## The bind address must be changed to 0.0.0.0, otherwise Redis cannot be reached over the network; Redis must then be restarted

[root@CentOS84-IP172-08 ]#sed -i.bak -e 's/bind 127.0.0.1/bind 0.0.0.0/' /etc/redis.conf

[root@CentOS84-IP172-08 ]#systemctl restart redis

[root@CentOS84-IP172-08 ]#redis-cli -h 172.16.0.8 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-08 ]#

7.2 Importing the data into the cluster

###################################################################################

## Check the cluster and make sure it is in a healthy state

[root@CentOS84-IP172-18 ]#redis-cli -a 123456 --no-auth-warning --cluster check 172.16.0.18:6379

172.16.0.18:6379 (c42d1733...) -> 0 keys | 5461 slots | 1 slaves.

172.16.0.38:6379 (aff40cdf...) -> 0 keys | 5461 slots | 1 slaves.

172.16.0.28:6379 (dabcadd4...) -> 0 keys | 5462 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 172.16.0.18:6379)

M: c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379

slots: (0 slots) slave

replicates aff40cdf12744b6444f79d7237360d70e70b4b97

M: dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379

slots: (0 slots) slave

replicates dabcadd4490387830f60d01e361931d010b6c1f2

S: fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379

slots: (0 slots) slave

replicates c42d1733b3a317becddc484c5a981a5b0ae15566

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

###################################################################################

#### Before migrating, delete all existing keys so the result is easy to see. Flushing the masters is sufficient

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.18 -a 123456 --no-auth-warning flushall

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.28 -a 123456 --no-auth-warning flushall

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.38 -a 123456 --no-auth-warning flushall

OK

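# Clear the login password on the source host and on the six cluster nodes. This is temporary and is not written to the configuration file, so the password is loaded again when Redis restarts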

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.8 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.18 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.28 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.38 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.118 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.128 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.138 -p 6379 -a 123456 --no-auth-warning CONFIG SET requirepass ""

OK

###################################################################################

# Import the simulated data into the cluster with a single command

[root@CentOS84-IP172-18 ]#redis-cli --cluster import 172.16.0.18:6379 --cluster-from 172.16.0.8:6379 --cluster-copy --cluster-replace

>>> Importing data from 172.16.0.8:6379 to cluster 172.16.0.18:6379

>>> Performing Cluster Check (using node 172.16.0.18:6379)

M: c42d1733b3a317becddc484c5a981a5b0ae15566 172.16.0.18:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: aff40cdf12744b6444f79d7237360d70e70b4b97 172.16.0.38:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 86af91ad4a9ee614f240232627700bc7ae17885d 172.16.0.138:6379

slots: (0 slots) slave

replicates aff40cdf12744b6444f79d7237360d70e70b4b97

M: dabcadd4490387830f60d01e361931d010b6c1f2 172.16.0.28:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: f7e1f8a7a65e8fcae1cf47245f9e8c259f501757 172.16.0.128:6379

slots: (0 slots) slave

replicates dabcadd4490387830f60d01e361931d010b6c1f2

S: fc60360518729269b6a105e3684883ee5420a46f 172.16.0.118:6379

slots: (0 slots) slave

replicates c42d1733b3a317becddc484c5a981a5b0ae15566

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

*** Importing 100 keys from DB 0

Migrating shone13 to 172.16.0.18:6379: OK

Migrating shone41 to 172.16.0.28:6379: OK

Migrating shone36 to 172.16.0.38:6379: OK

Migrating shone100 to 172.16.0.38:6379: OK

Migrating shone58 to 172.16.0.38:6379: OK

Migrating shone50 to 172.16.0.38:6379: OK

Migrating shone52 to 172.16.0.28:6379: OK

Migrating shone43 to 172.16.0.38:6379: OK

Migrating shone98 to 172.16.0.38:6379: OK

Migrating shone87 to 172.16.0.38:6379: OK

Migrating shone95 to 172.16.0.28:6379: OK

Migrating shone45 to 172.16.0.28:6379: OK

Migrating shone85 to 172.16.0.18:6379: OK

Migrating shone99 to 172.16.0.28:6379: OK

Migrating shone39 to 172.16.0.18:6379: OK

Migrating shone26 to 172.16.0.18:6379: OK

Migrating shone12 to 172.16.0.28:6379: OK

Migrating shone79 to 172.16.0.18:6379: OK

Migrating shone27 to 172.16.0.28:6379: OK

Migrating shone21 to 172.16.0.38:6379: OK

Migrating shone37 to 172.16.0.38:6379: OK

Migrating shone61 to 172.16.0.38:6379: OK

Migrating shone18 to 172.16.0.38:6379: OK

Migrating shone96 to 172.16.0.18:6379: OK

Migrating shone20 to 172.16.0.38:6379: OK

Migrating shone74 to 172.16.0.18:6379: OK

Migrating shone2 to 172.16.0.38:6379: OK

Migrating shone57 to 172.16.0.18:6379: OK

Migrating shone40 to 172.16.0.18:6379: OK

Migrating shone6 to 172.16.0.38:6379: OK

Migrating shone80 to 172.16.0.18:6379: OK

Migrating shone33 to 172.16.0.38:6379: OK

Migrating shone46 to 172.16.0.28:6379: OK

Migrating shone38 to 172.16.0.28:6379: OK

Migrating shone51 to 172.16.0.28:6379: OK

Migrating shone32 to 172.16.0.38:6379: OK

Migrating shone44 to 172.16.0.18:6379: OK

Migrating shone71 to 172.16.0.18:6379: OK

Migrating shone7 to 172.16.0.38:6379: OK

Migrating shone15 to 172.16.0.28:6379: OK

Migrating shone3 to 172.16.0.38:6379: OK

Migrating shone72 to 172.16.0.38:6379: OK

Migrating shone49 to 172.16.0.28:6379: OK

Migrating shone30 to 172.16.0.28:6379: OK

Migrating shone88 to 172.16.0.18:6379: OK

Migrating shone64 to 172.16.0.28:6379: OK

Migrating shone53 to 172.16.0.18:6379: OK

Migrating shone63 to 172.16.0.18:6379: OK

Migrating shone86 to 172.16.0.28:6379: OK

Migrating shone10 to 172.16.0.38:6379: OK

Migrating shone75 to 172.16.0.18:6379: OK

Migrating shone55 to 172.16.0.28:6379: OK

Migrating shone14 to 172.16.0.38:6379: OK

Migrating shone89 to 172.16.0.18:6379: OK

Migrating shone59 to 172.16.0.28:6379: OK

Migrating shone69 to 172.16.0.38:6379: OK

Migrating shone77 to 172.16.0.28:6379: OK

Migrating shone11 to 172.16.0.28:6379: OK

Migrating shone56 to 172.16.0.28:6379: OK

Migrating shone66 to 172.16.0.18:6379: OK

Migrating shone81 to 172.16.0.18:6379: OK

Migrating shone34 to 172.16.0.28:6379: OK

Migrating shone62 to 172.16.0.18:6379: OK

Migrating shone17 to 172.16.0.18:6379: OK

Migrating shone22 to 172.16.0.18:6379: OK

Migrating shone29 to 172.16.0.38:6379: OK

Migrating shone5 to 172.16.0.18:6379: OK

Migrating shone35 to 172.16.0.18:6379: OK

Migrating shone16 to 172.16.0.28:6379: OK

Migrating shone47 to 172.16.0.38:6379: OK

Migrating shone24 to 172.16.0.38:6379: OK

Migrating shone67 to 172.16.0.18:6379: OK

Migrating shone54 to 172.16.0.38:6379: OK

Migrating shone48 to 172.16.0.18:6379: OK

Migrating shone76 to 172.16.0.38:6379: OK

Migrating shone73 to 172.16.0.28:6379: OK

Migrating shone94 to 172.16.0.38:6379: OK

Migrating shone1 to 172.16.0.18:6379: OK

Migrating shone28 to 172.16.0.38:6379: OK

Migrating shone4 to 172.16.0.28:6379: OK

Migrating shone82 to 172.16.0.28:6379: OK

Migrating shone42 to 172.16.0.28:6379: OK

Migrating shone83 to 172.16.0.38:6379: OK

Migrating shone91 to 172.16.0.28:6379: OK

Migrating shone78 to 172.16.0.18:6379: OK

Migrating shone9 to 172.16.0.18:6379: OK

Migrating shone8 to 172.16.0.28:6379: OK

Migrating shone25 to 172.16.0.38:6379: OK

Migrating shone70 to 172.16.0.18:6379: OK

Migrating shone65 to 172.16.0.38:6379: OK

Migrating shone90 to 172.16.0.38:6379: OK

Migrating shone31 to 172.16.0.18:6379: OK

Migrating shone19 to 172.16.0.28:6379: OK

Migrating shone93 to 172.16.0.18:6379: OK

Migrating shone60 to 172.16.0.28:6379: OK

Migrating shone97 to 172.16.0.18:6379: OK

Migrating shone92 to 172.16.0.18:6379: OK

Migrating shone68 to 172.16.0.28:6379: OK

Migrating shone84 to 172.16.0.18:6379: OK

Migrating shone23 to 172.16.0.28:6379: OK

[root@CentOS84-IP172-18 ]#
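Which master each key is migrated to follows from the key's slot and the slot ranges reported by the cluster check. The lookup can be sketched as below; the SLOT_RANGES table is copied from the check output above, owner_of is a hypothetical name, and hash tags are ignored for brevity.

```python
from binascii import crc_hqx
from bisect import bisect_right

# (first_slot, last_slot, master) as reported by the cluster check above
SLOT_RANGES = [
    (0, 5460, "172.16.0.18:6379"),
    (5461, 10922, "172.16.0.28:6379"),
    (10923, 16383, "172.16.0.38:6379"),
]
RANGE_STARTS = [first for first, _, _ in SLOT_RANGES]


def owner_of(key: str) -> str:
    """Return the master that serves the slot of this key."""
    slot = crc_hqx(key.encode(), 0) % 16384
    # The owning range is the last one whose first slot is <= slot.
    index = bisect_right(RANGE_STARTS, slot) - 1
    return SLOT_RANGES[index][2]


if __name__ == "__main__":
    for i in (1, 13, 41, 36):
        key = "shone%d" % i
        print(key, "->", owner_of(key))
```

Run against the imported key names, the printed targets can be compared with the Migrating lines above as a sanity check.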

###################################################################################

# Verify how the imported data is distributed across the cluster

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.18 -p 6379 --no-auth-warning dbsize

(integer) 35

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.28 -p 6379 --no-auth-warning dbsize

(integer) 32

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.38 -p 6379 --no-auth-warning dbsize

(integer) 33

[root@CentOS84-IP172-18 ]#redis-cli -h 172.16.0.38 -p 6379 --no-auth-warning keys "*"

1) "shone61"

2) "shone69"

3) "shone6"

4) "shone98"

5) "shone14"

6) "shone65"

7) "shone2"

8) "shone21"

9) "shone54"

10) "shone83"

11) "shone7"

12) "shone43"

13) "shone25"

14) "shone76"

15) "shone3"

16) "shone94"

17) "shone24"

18) "shone37"

19) "shone28"

20) "shone58"

21) "shone90"

22) "shone33"

23) "shone10"

24) "shone32"

25) "shone72"

26) "shone87"

27) "shone36"

28) "shone100"

29) "shone29"

30) "shone20"

31) "shone18"

32) "shone47"

33) "shone50"

[root@CentOS84-IP172-18 ]#

#### The data import has now completed successfully

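7.3 Cluster skew

After the nodes of a Redis cluster have been running for a while, skew may appear: one node holds more data and consumes more memory, or receives more client requests.

Possible causes of skew:

- Nodes and slots are unevenly allocated

- The number of keys per slot varies widely

- Big keys are present (use them sparingly)

- Memory-related settings are inconsistent

- Hot data is unevenly distributed: when strong consistency is not required, a local cache and MQ can be used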
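One simple way to spot key-count skew is to compare each master's DBSIZE with the average. The sketch below is illustrative only: the 20% threshold is an arbitrary assumption, the function names are hypothetical, and the key counts are assumed to have been collected already (for example from DBSIZE on each master).

```python
def skew_report(keys_per_master):
    """Relative deviation of each master's key count from the mean."""
    mean = sum(keys_per_master.values()) / len(keys_per_master)
    if mean == 0:
        return {node: 0.0 for node in keys_per_master}  # empty cluster
    return {node: (count - mean) / mean
            for node, count in keys_per_master.items()}


def skewed_nodes(keys_per_master, threshold=0.2):
    """Masters whose key count deviates from the mean by more than threshold."""
    report = skew_report(keys_per_master)
    return [node for node, deviation in report.items()
            if abs(deviation) > threshold]


if __name__ == "__main__":
    counts = {"172.16.0.18": 3332, "172.16.0.28": 3340, "172.16.0.38": 3329}
    print(skewed_nodes(counts))
```

Key counts are only one signal; memory use and request rate per node would need their own measurements.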

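8. Redis cluster summary

After the recent hands-on work, the main Redis topics have largely been covered. Redis has many strengths, which earlier posts described; in practice the limitations of Redis cluster also need to be worked around. The key points are:

- Client performance is "reduced" in most cases

- Commands cannot span nodes: mget, keys, scan, flush, sinter, and so on

- Client maintenance is more complex: overhead in the SDK and in the application itself (for example, more connection pools)

- Multiple databases are not supported: cluster mode has only db 0

- Replication supports a single level only: tree-shaped and cascading replication are not supported

- Transactions and Lua have limited support: the keys involved must be on one node, and Lua scripts and transactions cannot span nodes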

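A common way to live with the multi-key restriction is to group keys by hash slot on the client and issue one multi-key command per group, or to use a {hash tag} so that related keys share a slot. The following is a sketch of the grouping step; it assumes the hash-tag rule from the Redis cluster specification (only the text between the first { and the next } is hashed, when that text is non-empty), and the function names are hypothetical.

```python
from binascii import crc_hqx
from collections import defaultdict


def key_slot(key: str) -> int:
    """Hash slot of a key, honouring a {hash tag} when one is present."""
    data = key.encode()
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        if end != -1 and end != start + 1:  # ignore an empty '{}'
            data = data[start + 1:end]
    return crc_hqx(data, 0) % 16384


def group_by_slot(keys):
    """Group keys so that each group can be sent in one multi-key command."""
    groups = defaultdict(list)
    for key in keys:
        groups[key_slot(key)].append(key)
    return dict(groups)


if __name__ == "__main__":
    print(group_by_slot(["key1", "shone", "{order}:1", "{order}:2"]))
```

Keys that share a tag, such as {order}:1 and {order}:2, land in the same group and can therefore be read with a single mget.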
