AI Doesn’t Understand Much—But Maybe A Little

source link: https://smbrinson.medium.com/ai-doesnt-understand-much-but-maybe-a-little-baa6c7a45f25

In just the last few months, several developments in artificial intelligence have made headlines:

- OpenAI’s DALLE-2 can generate incredible images from simple text prompts.

- Google’s PaLM appears to reason and solve problems.

- DeepMind’s Gato tackles a range of tasks and could be an example of general AI.

Each has added fuel to the growing debate over the current and near-future capabilities of AI.

Some are singing the praises of deep learning (the layered neural-network approach on which most modern AI is based), claiming we need only scale these models up to reach the heights of superintelligent AI. Others argue there is still something fundamentally missing.

Then there are those like Google engineer Blake Lemoine, who have become so enamoured with what’s happening that they wonder whether AI is already sentient.

The new programs are good enough that they’re certain to shape how people create and interact with computers, but do DALLE-2, PaLM, Gato, or any other current manifestation of AI know what they’re doing?

What Does it Mean to Understand?

There’s a scene in The Big Bang Theory where the group of scientists’ car breaks down. Leonard asks if anyone knows anything about internal combustion engines, and everyone says yes, of course they do, it’s 19th-century technology. When Leonard follows up by asking whether anyone knows how to fix an internal combustion engine, no one has a clue.

It’s no exaggeration to say that people interpret understanding in different ways. We use the word in place of knowledge, intelligence, thinking, experience, and awareness.

You can understand what something is, how to do something, how something works, and the wheres, whens, and whys. You might understand Spanish, how to make coffee, the rules of basketball, why someone reacted the way they did, and what might have been had you asked the girl out.

Understanding isn’t all-or-nothing. Some people understand things a bit better than others. Some claim to understand the basics of how a helicopter works, but get stumped when asked how it moves forward. Some understand what a bicycle is but then draw it like this:

Is an inner experience necessary? There’s a big difference between someone who can see the colour red yet knows nothing of colour theory, the physics of light, the physiology of eyes, or the neuroscience of vision, and someone who has always been colour blind but has studied all of the above. Who understands ‘red’?

One feature that often comes up is a grasp of cause-and-effect. When people try to show that they understand something, they usually offer an explanation full of causal relationships.

However, that definition has its limits, as it rules out basic knowledge of facts: understanding that the brain has around 80 billion neurons doesn’t seem to require cause-and-effect. Nor does knowing that the hypotenuse is the longest side of a right-angled triangle, or that Paris is the capital of France.

Is there a common thread that runs through all of these definitions and distinctions? If not, how are we to test the understanding of AI? Despite the difficulties, people have not been afraid to venture a guess.

The AI Understands

Following the successes of neural networks and deep learning, computer scientists like Nando de Freitas, Geoffrey Hinton, and Richard Sutton have suggested that scaling the current methods and leveraging greater computation will result in artificial general intelligence, or human-level AI.

Meanwhile, OpenAI’s Sam Altman thinks current AI already has some form of understanding, saying in a blog post about DALLE-2:

“It sure does seem to ‘understand’ concepts at many levels and how they relate to each other in sophisticated ways.”

Here are a couple of lines of reasoning behind these claims:

Reward Pursuit

In a 2021 paper titled “Reward Is Enough,” the authors argue that an agent placed in a rich environment with a goal and the ability to learn by trial and error will eventually develop a general form of intelligence:

“maximising reward is enough to drive behaviour that exhibits most if not all attributes of intelligence that are studied in natural and artificial intelligence, including knowledge, learning, perception, social intelligence, language and generalisation.”

A dynamic environment that keeps throwing something new at the agent forces it to adapt if it is to keep reaching its goal. It can’t relearn everything afresh whenever its world changes; it needs to identify commonalities, pick up on patterns and rules, and build mental models that let it make sound assumptions and good guesses about new places.
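A minimal sketch of that idea, assuming a toy setting rather than anything from the paper: an epsilon-greedy agent chooses among three actions, learns their value purely from the rewards it receives, and halfway through the run the environment flips which action pays best. The function name, payout values, and parameters are hypothetical, and rewards are made deterministic for simplicity.

```python
import random

def run_bandit(steps=2000, epsilon=0.1, step_size=0.1, seed=0):
    """Toy trial-and-error learner in a world whose best action changes."""
    rng = random.Random(seed)
    payouts = [0.2, 0.5, 0.8]      # true reward per action, hidden from the agent
    estimates = [0.0, 0.0, 0.0]    # the agent's learned value of each action
    for t in range(steps):
        if t == steps // 2:
            payouts = [0.8, 0.5, 0.2]   # the environment shifts: best action flips
        if rng.random() < epsilon:
            action = rng.randrange(3)   # explore: try something at random
        else:
            action = max(range(3), key=lambda a: estimates[a])  # exploit best guess
        reward = payouts[action]        # deterministic reward, for simplicity
        # A constant step size weights recent experience more heavily,
        # which is what lets the agent abandon a habit that stopped paying off.
        estimates[action] += step_size * (reward - estimates[action])
    return estimates

print([round(v, 2) for v in run_bandit()])
```

The occasional random exploration is what lets the agent discover that the world has changed; with `epsilon=0` it would keep exploiting its old favourite indefinitely.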

Of course, most AI algorithms exist in digital environments, dealing in data, not the material world with us humans. The intelligence they evolve is limited by this environment and the input they receive, but that doesn’t mean they don’t form any understanding of their virtual worlds.

Data is Understanding

While working solely with pixels or text won’t produce understanding of the kind we have, Blaise Agüera y Arcas, a software engineer at Google, claims it still counts as understanding:

“[S]tatistics do amount to understanding, in any falsifiable sense.”

He notes that while we can ask a language model (LaMDA, in his example) what its favourite island is, the answer it gives will be bullshit. It lacks a body; it has never set foot on an island or felt the sand between its toes or the sun on its face. But its goal isn’t honesty; its goal is to produce believable responses. We’ve trained it to produce bullshit, and it understands how to do that.

“The understanding of a concept can be anywhere from superficial to highly nuanced; from purely abstract to strongly grounded in sensorimotor skills; it can be tied to an emotional state, or not; but it’s unclear how we’d distinguish ‘real understanding’ from ‘fake understanding’. Until such time as we can make such a distinction, we should probably just retire the idea of ‘fake understanding.’”

It doesn’t matter what form the data or information takes when it is fed into a system, so long as there is a signal to extract and value to glean. This is true of AI and of the human mind, as when a blind person learns about colour or when people try sensory substitution.

“Neural activity is neural activity, whether it comes from eyes, ears, fingertips or web documents.”
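To make the ‘statistics’ side of this concrete, here is a deliberately tiny sketch: a bigram model that only records which word follows which in a made-up corpus, then samples from those records. It is vastly simpler than LaMDA (a large neural network, not a bigram table), and the corpus and function names are hypothetical, but it shows how locally plausible talk about a ‘favourite island’ can come from co-occurrence statistics with no island anywhere in the loop.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Record which word follows which: pure co-occurrence statistics."""
    follows = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current].append(nxt)
    return follows

def generate(follows, start, length=8, seed=0):
    """Build a word sequence by repeatedly sampling an observed successor."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:      # dead end: this word was never followed by anything
            break
        out.append(rng.choice(options))
    return " ".join(out)

corpus = ("my favourite island is the one with warm sand . "
          "the sand is warm and the sun is bright . "
          "my favourite place is the island with the bright sun .")
model = train_bigrams(corpus)
print(generate(model, "my"))
```

Every word pair the model emits has been seen in its data, which is why the output sounds vaguely right; nothing in it refers to sand or sun beyond the strings themselves.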

Missing Pieces

Not everyone is convinced that scaling deep neural networks and charging them towards singular goals is enough to build an AI that knows what it’s doing. Count Gary Marcus, Melanie Mitchell, and Judea Pearl among them.

Core Knowledge

Gary Marcus points to compositionality as an essential but missing component. When asked to draw ‘a blue cube on top of a red cube, beside a smaller yellow sphere’, DALLE-2 gets it all wrong. Its images contain cubes, spheres, and the right colours, but not arranged the way the prompt asks.

Regarding GPT-3, Marcus writes:

“the problem is not with GPT-3’s syntax (which is perfectly fluent) but with its semantics: it can produce words in perfect English, but it has only the dimmest sense of what those words mean, and no sense whatsoever about how those words relate to the world.”

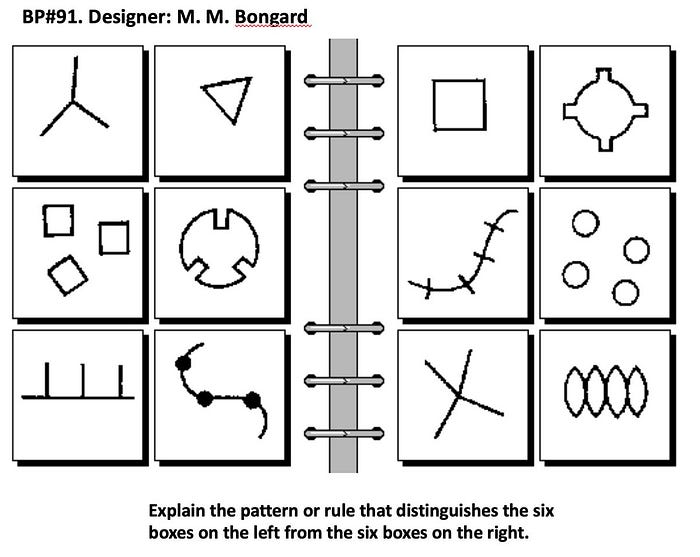

Melanie Mitchell wants to see AI tackle Bongard problems, a set of visual puzzles that the Russian computer scientist Mikhail Bongard posed for AI back in the 1960s. Here’s an example:

Solving the problems necessitates an understanding of concepts like ‘same,’ ‘different,’ and ‘numerosity.’ Mitchell says in a 2021 article in Quanta that AI lacks ‘infant metaphysics’ or ‘core knowledge,’ including systems for representing objects, actions, numbers, and space.

“We humans can solve these visual puzzles due to our core knowledge of basic concepts and our abilities of flexible abstraction and analogy. Bongard realized how central these abilities are to human intelligence.”

Marcus advocates for something called ‘neurosymbolic AI,’ which ties deep learning together with good old-fashioned AI based on symbol manipulation:

“Manipulating symbols has been essential to computer science since the beginning, at least since the pioneer papers of Alan Turing and John von Neumann, and is still the fundamental staple of virtually all software engineering — yet is treated as a dirty word in deep learning.”

Rather than relying on large statistical models alone to develop some form of intelligence or understanding, this approach would build in rules from the outset, combining statistics with logic.
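Marcus’s cube-and-sphere prompt gives a concrete way to picture this. The sketch below is a minimal, hypothetical illustration of one neurosymbolic pattern, not Marcus’s own design: a neural detector (faked here with hard-coded detections and confidence scores) proposes objects, and explicit symbolic rules check whether they satisfy ‘a blue cube on top of a red cube, beside a smaller yellow sphere.’ The `Obj` class, the helper names, the coordinate convention (y grows upward), the 0.5 confidence threshold, and reading ‘smaller’ as ‘smaller than the red cube’ are all assumptions.

```python
from dataclasses import dataclass
from itertools import permutations

@dataclass
class Obj:
    shape: str
    colour: str
    x0: float      # bounding box; y grows upward
    y0: float
    x1: float
    y1: float
    score: float   # confidence from a (hypothetical) neural detector

def area(o):
    return (o.x1 - o.x0) * (o.y1 - o.y0)

def on_top_of(a, b, tol=0.05):
    # a rests on b: a's bottom edge meets b's top edge and they overlap horizontally
    return abs(a.y0 - b.y1) <= tol and a.x0 < b.x1 and b.x0 < a.x1

def beside(a, b):
    # side by side: their horizontal extents do not overlap
    return a.x1 <= b.x0 or b.x1 <= a.x0

def is_kind(o, shape, colour):
    return o.shape == shape and o.colour == colour

def satisfies_prompt(detections, threshold=0.5):
    # Statistical step: keep only objects the detector is confident about.
    symbols = [o for o in detections if o.score >= threshold]
    # Symbolic step: look for objects that satisfy every clause of the prompt.
    for top, bottom, ball in permutations(symbols, 3):
        if (is_kind(top, "cube", "blue") and is_kind(bottom, "cube", "red")
                and is_kind(ball, "sphere", "yellow")
                and on_top_of(top, bottom) and beside(ball, bottom)
                and area(ball) < area(bottom)):
            return True
    return False

scene = [
    Obj("cube", "blue", 0, 1, 1, 2, score=0.9),
    Obj("cube", "red", 0, 0, 1, 1, score=0.95),
    Obj("sphere", "yellow", 1.5, 0, 2, 0.5, score=0.8),
]
print(satisfies_prompt(scene))  # True
```

Swapping the two cubes’ colours makes the check fail, because the relation ‘on top of’ is stated explicitly rather than left for the statistics to infer.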

Causal Reasoning

Judea Pearl helped pioneer the probabilistic approach to AI, and now he’s on a mission to develop causal and counterfactual reasoning. To get there, he believes AI must climb a three-rung ladder:

- Association: Finding correlations between variables, recognising that one thing is more likely given another thing. E.g., symptoms are associated with a disease.

- Intervention: Interacting with the variables to figure out how manipulating one alters the likelihood of another. E.g., does aspirin cure headaches? Does smoking increase the risk of cancer?

- Counterfactuals: Considering alternatives to past events, to ask what might have been. E.g., would Kennedy be alive if Oswald had not killed him?

Most AI is still stuck at the first level, dealing with statistical correlations and finding patterns in big data. The second level is the backbone of most scientific research, conducting experiments to see what happens when variables are altered.
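The gap between the first two rungs can be shown with a toy simulation. Assume a made-up causal model in which a disease (10% prevalence) produces a symptom 90% of the time, and the symptom also appears in 5% of healthy people. Seeing the symptom makes the disease much more likely (exactly 0.09 / 0.135, about 0.67), but forcing the symptom to occur, Pearl’s do-operator, leaves the disease at its 10% base rate, because the arrow runs from disease to symptom. The numbers and function names are hypothetical.

```python
import random

def sample(rng, force_symptom=None):
    """One draw from a made-up causal model in which disease causes symptom."""
    disease = rng.random() < 0.10                 # 10% base rate
    if force_symptom is None:
        # Symptom depends on disease: 90% if ill, 5% otherwise.
        symptom = rng.random() < (0.90 if disease else 0.05)
    else:
        # Intervention do(symptom): set it directly, ignoring its usual cause.
        symptom = force_symptom
    return disease, symptom

def compare_rungs(n=100_000, seed=1):
    rng = random.Random(seed)
    # Rung 1 (association): how often is the disease present when we SEE the symptom?
    observed = [sample(rng) for _ in range(n)]
    ill_given_symptom = [d for d, s in observed if s]
    p_seeing = sum(ill_given_symptom) / len(ill_given_symptom)
    # Rung 2 (intervention): how often is it present when we MAKE the symptom occur?
    forced = [sample(rng, force_symptom=True) for _ in range(n)]
    p_doing = sum(d for d, _ in forced) / n
    return p_seeing, p_doing

p_seeing, p_doing = compare_rungs()
print(f"P(disease | symptom)     = {p_seeing:.2f}")
print(f"P(disease | do(symptom)) = {p_doing:.2f}")
```

A system that only ever sees the observational data gets the first number; telling the two apart requires knowing (or testing) which way the causation runs.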

The third level is where people generally find themselves, reasoning about what-ifs and what-abouts. Counterfactual thinking is probably necessary for our sense of free will, the belief that we could have done otherwise.
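Pearl describes counterfactual inference as three steps: abduction (use the evidence to infer the unobserved background factors), action (change the antecedent), and prediction (rerun the model). A minimal sketch on a made-up, deterministic model of the aspirin example, assuming headache severity equals a person’s unobserved baseline minus 2 if they took aspirin; the equation, numbers, and function names are assumptions for illustration only.

```python
def headache(aspirin, baseline):
    """Made-up structural equation: taking aspirin (1) lowers severity by 2."""
    return baseline - 2 * aspirin

def what_if(observed_aspirin, observed_severity, new_aspirin):
    # 1. Abduction: infer this person's unobserved baseline from what happened.
    baseline = observed_severity + 2 * observed_aspirin
    # 2. Action: replace the past choice with the hypothetical one.
    # 3. Prediction: rerun the same model with the inferred baseline held fixed.
    return headache(new_aspirin, baseline)

# You took aspirin and your headache was a 3. What if you hadn't taken it?
print(what_if(observed_aspirin=1, observed_severity=3, new_aspirin=0))  # 5
```

Unlike an intervention, which asks about aspirin’s effect across a population, the counterfactual asks about this particular person, using what actually happened to pin down their baseline.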

An AI that ‘understands’ should be able to reason at least at the second level, to recognise that the disease causes the symptoms and isn’t just correlated with them. But the third is where the magic happens.

To achieve this, Pearl thinks we’ll need to program in a conceptual model of reality and have computers postulate their own models and verify them empirically.

He states in The Atlantic:

“If you deprive the robot of your intuition about cause and effect, you’re never going to communicate meaningfully.”

Figuring It Out

While understanding is a fuzzy concept, I’m inclined to say that without at least a basic grasp of cause-and-effect, without an ability to ask why or how, there isn’t any understanding. This rules out most AI that simply analyses data and fits curves.

But some AI might scratch the surface. Given a goal and a dynamic environment, such as a video game with a score, the AI can interact with different features to learn what happens and what it needs to do to ‘win.’ When producing images or text, our feedback or grading system might help the AI learn what it’s doing in its own way.

The goal in many cases is simply to impress us. They may form an understanding of the domain they operate in (be it data, text, images, or a combination of them), but they understand it in their own way, as it pertains to reaching their goal.

When it comes to leaving the digital realm and learning about cause-and-effect in the real world, things get much more difficult and complex. Self-driving cars will need to deal with a vastly more detailed and less forgiving environment than the programs winning at racing games.

Maybe deep learning could eventually get the hang of the real world with enough computational power and access to different modalities. Maybe reward is all you need to get there, eventually. But maybe we could save it a lot of trouble by teaching it some core skills we already know about: rules of logic, symbol manipulation, and ‘infant metaphysics.’

I would be willing to grant that the new developments from Google, OpenAI, and DeepMind have some very rudimentary forms of understanding: knowledge limited to an overly simplified world, a narrow band of a much wider reality. But they are still leagues away from the range, richness, and depth of human understanding.

We construct worlds in our heads based on sights, sounds, smells, and other sensory domains. We often think with symbols and abstractions. We’re so inclined to think causally that we often see causation where there is only correlation, and while that’s a failure of understanding, it highlights our deep desire to find explanations. AI has some ground to cover, even if it has taken its first steps.
