NannyML - OSS Python library for detecting silent ML model failure | Product Hun...

source link: https://www.producthunt.com/posts/nannyml


NannyML

OSS Python library for detecting silent ML model failure

Hey Everyone! I am Hakim, co-founder of NannyML.

Early in my data science career, I developed the intuition that machine learning models are dynamic systems. You set performance metrics, train them on historical data, put them out into the real world to make decisions, and then the real world changes.

So what happens to these models and their performance?

For the most part, we have no idea.

There is no way to measure the performance of most machine learning models directly. Ground truth is delayed or doesn't exist.

We developed an algorithm called Confidence-Based Performance Estimation (CBPE). It is the only open-source algorithm capable of fully capturing the impact of data drift on performance.
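To make the idea of estimating performance from model confidence more concrete, here is a minimal sketch in plain Python. It is not NannyML's implementation; the function name `estimate_accuracy` and the example scores are hypothetical, and the sketch assumes a binary classifier whose scores are well calibrated (a score of 0.9 means the positive class occurs about 90% of the time). Under that assumption, the expected accuracy can be computed from the scores alone, with no ground truth.

```python
from typing import Sequence


def estimate_accuracy(probabilities: Sequence[float], threshold: float = 0.5) -> float:
    """Estimate accuracy without labels, assuming calibrated scores.

    Hypothetical helper for illustration only; not part of the NannyML API.
    """
    if not probabilities:
        raise ValueError("need at least one prediction")

    expected_correct = 0.0
    for p in probabilities:
        predicted_positive = p >= threshold
        # With calibrated scores, p is the probability that the true label is 1,
        # so a positive prediction is correct with probability p and a negative
        # prediction is correct with probability 1 - p.
        expected_correct += p if predicted_positive else 1.0 - p

    return expected_correct / len(probabilities)


# Made-up scores: confident predictions before drift, hesitant ones after.
reference_scores = [0.95, 0.05, 0.90, 0.10]
drifted_scores = [0.60, 0.45, 0.55, 0.40]

print(estimate_accuracy(reference_scores))  # roughly 0.925
print(estimate_accuracy(drifted_scores))    # roughly 0.575
```

The estimate drops when the model becomes less confident on new data, which is the kind of signal that is available even when ground truth is delayed. The estimate is only as good as the calibration of the scores.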

We also have some extra features to help you understand why it has happened.

Our core algorithms are free and open-source forever. Stop by https://github.com/NannyML/nannyml.

We would love feedback from you guys on how you currently solve this problem in your workflows, and we can't wait to continue building this product with the community.

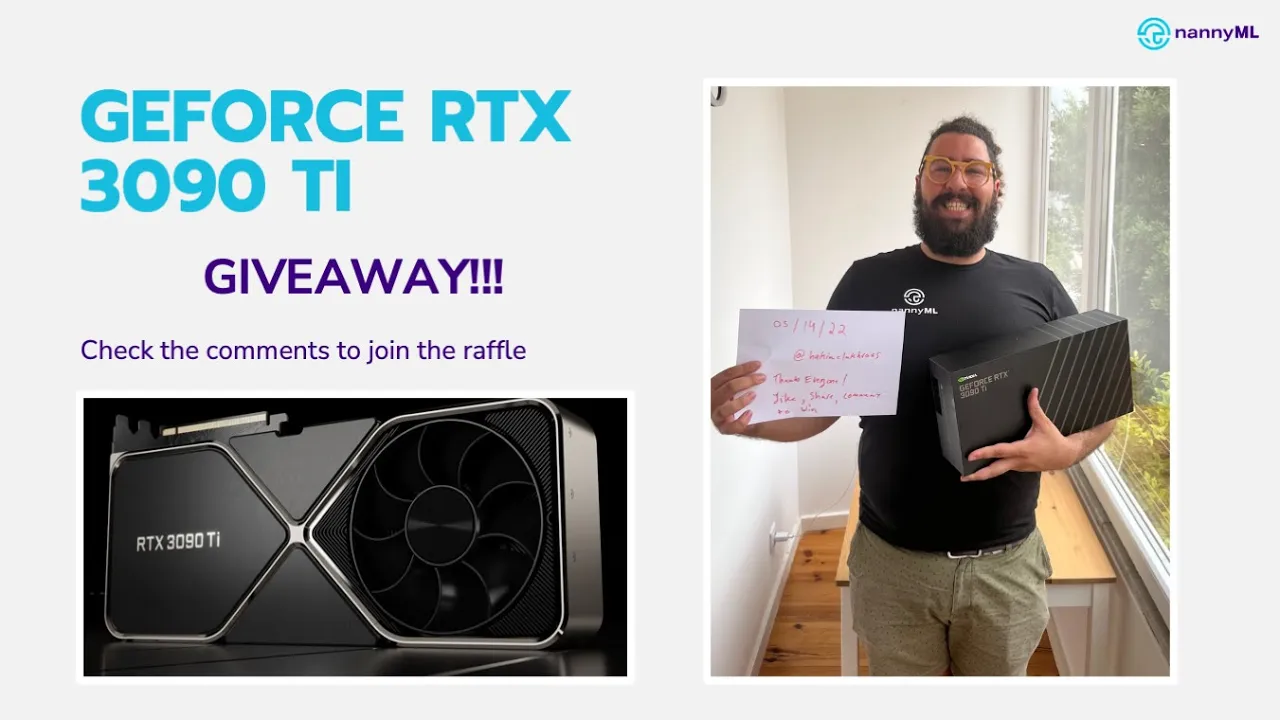

To enter the raffle like/comment/share this post on LinkedIn => https://www.linkedin.com/feed/up...

CONFESSIONS of a Data Science Bro (and professional storyteller):

In my previous job, I would hit the gym every day, right before work. Afterwards, I would get to the office and my colleagues would usually yell: “W-dawg, how is it going? Congrats on your latest deployment, man! Numbers are up by 20%”. I would go to the coffee machine, hand nuke my colleagues with some crazy stories and make everyone tear up! Even Dan, our CEO, would give me an occasional nod. “Whoooh, life sure was good as a data scientist”.

Then one day, I came in and immediately noticed something was off. Everyone looked right through me. Even my post-workout pump started feeling different. No jokes, no “W-dawg”s, even Steve from Data Engineering straight up ignored me. I opened my inbox, and it was literally overflowing with complaints from businesses. Then, right before my eyes, I saw a meeting pop up in my calendar: a 1-on-1 with Dan. In 10 minutes! ONE OF MY MODELS HAD BEEN SILENTLY FAILING FOR THE PAST FEW MONTHS. I didn’t even have time to figure out how or why. I had never felt so useless… I thought I had everything under control.

Just a personal story based on true feelings. I value peace of mind, and that is exactly what I hope NannyML will give you. Nobody wants to lose their rockstar data science status ;) I am passionate about open-source, data visualisation, and all things post-deployment data science. Happy to exchange war stories.

Hey, Niels here, lead engineer at NannyML. I’ve worked as a software engineering and architecture consultant for over a decade, specializing in building data-intensive applications.

As a long-term user of open source tools and libraries I’m ever so proud to finally give back to the community - please don’t hate the code though ;-)

The last year has been a great working experience, expanding my data science knowledge, getting to learn both the strengths and weaknesses of Python and applying my previous expertise to a product I feel passionate about. I’m really looking forward to the coming months of building our library as well as our community.

Fun fact: as an engineer I love to meme about data scientists that write code (but truth be told, I would hire our resident data science researchers as coders, they’re awesome). One point of contention is the use of ‘magic strings’. I hate them as an engineer, but had to swallow my pride because all of our data scientists kept nagging. Please support me and bring some love for the Enum (I’m still using Enums under the covers but shhh, don’t tell them).

It’s been a wild ride, personally learning about all the quirks of data science practice from the NannyML team and all the users we’ve spoken to in the months leading up to this. I can’t wait to see what folks make of it, and get all the feedback that will help us to make this better and better. Making real product design a core part of the process has been crucial to keeping the user needs we’re meeting front-and-centre of creating the library, and I’m proud to say I think we’ve made something that stands out from the crowd in terms of usability as well as function.

There’s so much more we want to do with this, and being able to help support an open source product in doing those great things is brilliant fun!

I’m Nik, Senior Data Scientist at NannyML.

I am very excited to be building an open source product I wish I had had in my previous roles at Capital One. I can already see how I would’ve spent less time getting the results and more time evaluating and disseminating them to stakeholders if NannyML had been available. My dream is for NannyML to make post-deployment data science more productive and for me to be part of that journey!

Hopefully this resonates with you :)

Congrats on the launch @hakim_elakhrass and NannyML team. This is a pressing problem for ML models in production for organizations of any size, and the thought of open-sourcing the tool is just amazing. Confident this will bring a lot of innovation with the support of the data science community across the globe.

Cheers, Chanukya
