Natural Language Processing, Adobe PDF Extract, and Deep PDF Intelligence

source link: https://dzone.com/articles/natural-language-processing-adobe-pdf-extract-and

A look at integrating PDF text extraction with a natural language processor to return deep information about the contents of a PDF.

The Adobe PDF Extract API is a powerful tool to get information from your PDFs. This includes the layout and styling of your PDF, tabular data in easy-to-use CSV format, images, and raw text. All things considered, the raw text may be the least interesting aspect of the API. One useful possibility is to take the raw text and provide it to search engines (see Using PDFs with the Jamstack - Adding Search with Text Extraction). But another fascinating possibility for working with the text involves natural language processing or NLP.

Broadly (very broadly, see the Wikipedia article for deeper context), NLP is about understanding the contents of the text. Voice assistants are a great real-world example of this. What makes Alexa and Google Voice devices so powerful is that they don't just hear what you say, but they understand the intent of what you said. This is different from the raw text.

If I say "I work for Adobe", that's a different statement than saying "I live in an adobe house". Telling apart different uses of the same word takes machine learning, artificial intelligence, and other words that only really smart people get.

Organizations that deal with incoming PDFs, or that have a large history of existing documents, can use a combination of the PDF Extract API and NLP to gain better knowledge of what's contained within their PDFs.

In this article, we'll walk you through an example that demonstrates these two powerful features working together. Our demo application will scan a directory of PDFs. For each PDF, it will first extract the text from the PDF. Next, it will take that text to an NLP API. In both operations, we can save the results for faster processing later.

For our NLP API, we will be making use of a service from Diffbot. Diffbot has multiple APIs, but we will focus on their natural language service. Their API is rather simple to use but provides a wealth of data, including:

- Entities, or basically, subjects of a document.

- The type of those entities; for example, a name is an entity and its type is person.

- Facts revealed in the document, such as "The founder of company So and So was Joe So and So."

- The sentiment (how negative or positive something is).

Diffbot's API can also be trained with custom data so that it can better parse your input. Check their docs for more information; their quick start is a great example. They provide a two-week trial and do not require a credit card to sign up.

Here's a simple example of how to call their API using Node.js:

let fields = 'entities,sentiment,facts,records,categories';

let token = 'your private token here';

let url = `https://nl.diffbot.com/v1/?fields=${fields}&token=${token}`;

// the text variable is what you want to parse

let body = [{

content:text,

lang:'en',

format:'plain text'

}];

let req = await fetch(url, {

method:'POST',

body:JSON.stringify(body),

headers: { 'Content-Type':'application/json' }

});

let result = await req.json();

Alright, so let's build our demo. We have a folder of PDFs already, so my general process will be:

- Get a list of PDFs.

- For each, see if I already have the text. Given a PDF named catsrule.pdf, I'll look for catsrule.txt.

- If I don't, use our Extraction API to get the text.

- For each, see if I already have the NLP results. Given a PDF named catsrule.pdf, I'll look for catsrule.json.

- If I don't, send the text to the Diffbot API and save the results.

Here's the code that represents this logic, minus the actual implementations of either API (I've also removed the require statements):

const DIFFBOT_KEY = process.env.DIFFBOT_KEY;

const INPUT = './pdfs/';

(async () => {

let files = await glob(INPUT + '*.pdf');

console.log(`Going to process ${files.length} PDFs.\n`);

for(const file of files) {

console.log(`Checking ${file} to see if it has a txt or json file.`);

// Only swap the trailing extension, so a name like foo.pdf.pdf still works.

let txtFile = file.replace(/\.pdf$/, '.txt');

let jsonFile = file.replace(/\.pdf$/, '.json');

let textExists = await exists(txtFile);

let text;

if(!textExists) {

console.log(`We do not have ${txtFile} and need to make it.`);

text = await getText(file);

await fs.writeFile(txtFile, text);

console.log('I have saved the extracted PDF text.');

} else {

console.log(`The text file ${txtFile} already exists.`);

text = await fs.readFile(txtFile,'utf8');

}

let jsonExists = await exists(jsonFile);

if(!jsonExists) {

console.log(`We do not have ${jsonFile} and need to make it.`);

let data = await getData(text);

await fs.writeFile(jsonFile, JSON.stringify(data));

console.log('I have saved the parsed data from the text.');

} else console.log(`The data file ${jsonFile} already exists.`);

}

})();

By saving results, this script could be run multiple times as new PDFs are added. Now, let's look at our API calls. First, getText:

async function getText(pdf) {

const credentials = PDFServicesSdk.Credentials

.serviceAccountCredentialsBuilder()

.fromFile('pdftools-api-credentials.json')

.build();

// Create an ExecutionContext using credentials

const executionContext = PDFServicesSdk.ExecutionContext.create(credentials);

// Build extractPDF options

const options = new PDFServicesSdk.ExtractPDF.options.ExtractPdfOptions.Builder()

.addElementsToExtract(

PDFServicesSdk.ExtractPDF.options.ExtractElementType.TEXT

)

.build()

// Create a new operation instance.

const extractPDFOperation = PDFServicesSdk.ExtractPDF.Operation.createNew(),

input = PDFServicesSdk.FileRef.createFromLocalFile(

pdf,

PDFServicesSdk.ExtractPDF.SupportedSourceFormat.pdf

);

extractPDFOperation.setInput(input);

extractPDFOperation.setOptions(options);

let outputZip = './' + nanoid() + '.zip';

let result = await extractPDFOperation.execute(executionContext);

await result.saveAsFile(outputZip);

let zip = new AdmZip(outputZip);

let data = JSON.parse(zip.readAsText('structuredData.json'));

let text = data.elements.filter(e => e.Text).reduce((result, e) => {

return result + e.Text + '\n';

},'');

await fs.unlink(outputZip);

return text;

}

For the most part, this is taken right from our Extract API docs. Our SDK returns a ZIP file, so we use an NPM package (AdmZip) to get the JSON result out of the ZIP. We then keep only the elements that contain text and join them into one big honkin' text string. The net result: given a PDF filename as input, we get a text string back.
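To make the flattening step concrete, here is a minimal sketch that runs the same filter-and-join logic against a small hand-written object. The sample object and the helper name elementsToText are made up for illustration; only the elements array and the Text property come from the code above, and a real structuredData.json holds many more fields.

```javascript
// Hypothetical stand-in for a parsed structuredData.json.
// Only `elements` and `Text` are taken from the article's code.
const sample = {
  elements: [
    { Path: '//Document/H1', Text: 'Cats Rule' },
    { Path: '//Document/Figure' }, // no Text property, so it is skipped
    { Path: '//Document/P', Text: 'Cats are the best pets.' }
  ]
};

// Keep only elements that carry text, then join them one per line.
function elementsToText(data) {
  return data.elements
    .filter(e => e.Text)
    .map(e => e.Text + '\n')
    .join('');
}

console.log(elementsToText(sample));
```

Elements without a Text property, such as figures, simply drop out of the result.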

Now, let's look at the code to execute the natural language processing on the text:

async function getData(text) {

let fields = 'entities,sentiment,facts,records,categories';

let token = DIFFBOT_KEY;

let url = `https://nl.diffbot.com/v1/?fields=${fields}&token=${token}`;

let body = [{

content:text,

lang:'en',

format:'plain text'

}];

let req = await fetch(url, {

method:'POST',

body:JSON.stringify(body),

headers: { 'Content-Type':'application/json' }

});

return await req.json();

}

This is virtually identical to the earlier example. Note that you can tweak the fields value to change what Diffbot does with your text. The result is an impressively large amount of data. Much like the PDF Extract API, the JSON can be hundreds of lines long. If you want to see the raw result, you can look at an example here. Warning: when formatted, that's roughly sixty-two thousand lines of data.
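As a rough sketch of tweaking the fields value, the URL can be assembled from an array of field names. The helper name buildDiffbotUrl is hypothetical; the endpoint and the field names are the ones used above. It relies on the standard URL class, which percent-encodes the commas in the query string, and I am assuming the service decodes them in the usual way.

```javascript
// Hypothetical helper: builds the request URL from a list of field names.
function buildDiffbotUrl(fields, token) {
  const url = new URL('https://nl.diffbot.com/v1/');
  url.searchParams.set('fields', fields.join(','));
  url.searchParams.set('token', token);
  return url.toString();
}

// Ask only for entities and categories instead of all five fields.
const requestUrl = buildDiffbotUrl(['entities', 'categories'], 'your private token here');
console.log(requestUrl);
```

Requesting fewer fields should, in principle, return a smaller response, which may be welcome given how large the full result can get.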

So, now what? Great question! As a quick demo, I thought it would be nice to filter the NLP data down to a list of people mentioned in the PDF as well as categories. For people, I looked at the entities returned. Here is one example:

{

"name": "Sarah Clark",

"confidence": 0.99999547,

"salience": 0.976258,

"sentiment": 0,

"isCustom": false,

"allUris": [],

"allTypes": [

{

"name": "person",

"diffbotUri": "https://diffbot.com/entity/E4aFoJie0MN6dcs_yDRFwXQ",

"dbpediaUri": "http://dbpedia.org/ontology/Person"

}

],

"mentions": [

{

"text": "Sarah Clark",

"beginOffset": 425,

"endOffset": 436,

"confidence": 0.99984574

},

{

"text": "Sarah Clark",

"beginOffset": 38923,

"endOffset": 38934,

"confidence": 0.99999547

}

]

}

Note the value in allTypes which specifies this is a person. Here's an example of a non-person entity from a little company in Washington:

{

"name": "Microsoft",

"confidence": 0.9998447,

"salience": 0,

"sentiment": 0,

"isCustom": false,

"allUris": [],

"allTypes": [

{

"name": "organization",

"diffbotUri": "https://diffbot.com/entity/EN1ClYEdMMQCxB6AWTkT3mA",

"dbpediaUri": "http://dbpedia.org/ontology/Organisation"

}

],

"mentions": [

{

"text": "Microsoft",

"beginOffset": 35999,

"endOffset": 36008,

"confidence": 0.9998447

},

{

"text": "Microsoft",

"beginOffset": 38848,

"endOffset": 38857,

"confidence": 0.9988986

}

]

}

Categories are a bit simpler as they don't need any filtering (outside of uniqueness):

"categories": {

"iabv1": [

{

"id": "IAB19",

"name": "Technology & Computing",

"confidence": 0.99088717

}

],

"iabv2": [

{

"id": "596",

"name": "Technology & Computing",

"confidence": 0.99088717

}

]

}

Knowing where to get stuff, I wrote a script that scanned my PDF directories and for each PDF, attempted to 'gather' the data:

const INPUT = './pdfs/';

const OUTPUT = './pdfdata.json';

(async () => {

let result = [];

let files = await glob(INPUT + '*.pdf');

for(const file of files) {

console.log(`Checking ${file} to see if it has a json file.`);

let jsonFile = file.replace(/\.pdf$/, '.json');

let jsonExists = await exists(jsonFile);

if(jsonExists) {

let json = JSON.parse(await fs.readFile(jsonFile, 'utf8'));

let people = gatherPeople(json);

let categories = gatherCategories(json);

result.push({

pdf: file,

people,

categories

});

} else console.log(`The data file ${jsonFile} didn't exist so we are skipping.`);

}

await fs.writeFile(OUTPUT, JSON.stringify(result));

console.log(`Done and written to ${OUTPUT}.`);

})();

async function exists(p) {

try {

await fs.stat(p);

return true;

} catch(e) {

return false;

}

}

function gatherPeople(data) {

let people = data[0].entities.filter(ent => {

return ent.allTypes.some(type => {

return type.name === 'person';

});

}).map(person => {

return person.name;

});

//https://stackoverflow.com/a/43046408/52160

return [...new Set(people)];

}

function gatherCategories(data) {

let categories = [];

if(data[0].categories['iabv1']) {

data[0].categories.iabv1.forEach(c => {

categories.push(c.name);

});

}

if(data[0].categories['iabv2']) {

data[0].categories.iabv2.forEach(c => {

categories.push(c.name);

});

}

//https://stackoverflow.com/a/43046408/52160

return [...new Set(categories)];

}

As before, we stripped out the require statements. The end result of this script is a JSON file with every PDF and a list of people and categories. For both, we filter to unique values. (This may be problematic for names, of course, but I assume the chance of two different people sharing a name in one PDF is slight.)
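Since each entity also carries a confidence score and a list of mentions, one possible refinement is to keep only high-confidence people and count how often each is mentioned. This is a sketch rather than part of the original scripts: the function name peopleWithMentions and the 0.9 threshold are arbitrary choices, and the sample input is trimmed from the entities shown earlier.

```javascript
// Trimmed sample shaped like the Diffbot response shown earlier
// (an array holding one result object).
const sampleData = [{
  entities: [
    { name: 'Sarah Clark', confidence: 0.99999547,
      allTypes: [{ name: 'person' }],
      mentions: [{ text: 'Sarah Clark' }, { text: 'Sarah Clark' }] },
    { name: 'Microsoft', confidence: 0.9998447,
      allTypes: [{ name: 'organization' }],
      mentions: [{ text: 'Microsoft' }, { text: 'Microsoft' }] }
  ]
}];

// Hypothetical helper: people at or above a confidence threshold,
// each paired with the number of times they are mentioned.
function peopleWithMentions(data, minConfidence = 0.9) {
  return data[0].entities
    .filter(ent => ent.confidence >= minConfidence)
    .filter(ent => ent.allTypes.some(type => type.name === 'person'))
    .map(ent => ({ name: ent.name, mentions: ent.mentions.length }));
}

console.log(peopleWithMentions(sampleData));
```

A mention count like this could be used to sort people by prominence within a PDF.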

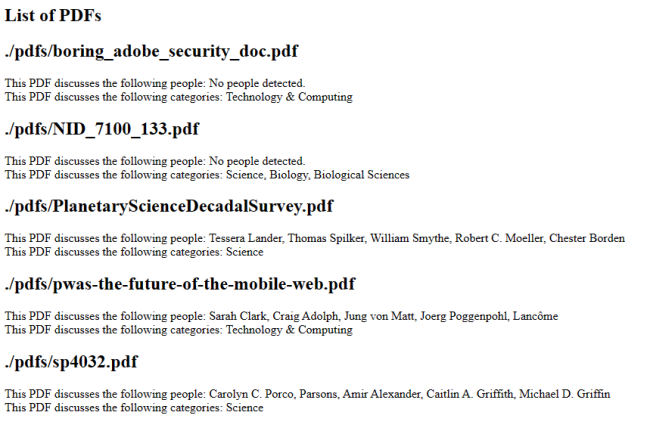

With this data, we can then build a web app to load it and render it on screen:

The code for the web application isn't terribly interesting, but if you want to see it, or any other sample from this article, you may find it here.
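The web app's code is not reproduced here, but as a hedged sketch of one thing such an app might do with pdfdata.json, the records can be grouped by category so that each category lists its PDFs. The sample records and the helper name groupByCategory are invented for illustration; only the pdf, people, and categories keys come from the script above.

```javascript
// Invented sample in the shape written to pdfdata.json by the script above.
const records = [
  { pdf: './pdfs/catsrule.pdf', people: ['Sarah Clark'], categories: ['Pets'] },
  { pdf: './pdfs/report.pdf', people: [], categories: ['Technology & Computing', 'Pets'] }
];

// Hypothetical helper: map each category name to the PDFs tagged with it.
function groupByCategory(items) {
  const index = new Map();
  for (const item of items) {
    for (const category of item.categories) {
      if (!index.has(category)) index.set(category, []);
      index.get(category).push(item.pdf);
    }
  }
  return index;
}

console.log(groupByCategory(records));
```

A Map is used here instead of a plain object so that category names never collide with built-in object properties.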

If you want to try this yourself, sign up for a free trial of PDF Extract API and check out Diffbot for a deeper look at their awesome APIs!
