Project Monterey, Capitola, and NVIDIA announcements… What does this mean?

source link: https://www.yellow-bricks.com/2021/11/08/project-monterey-capitola-nvidia/

Duncan Epping · Nov 8, 2021

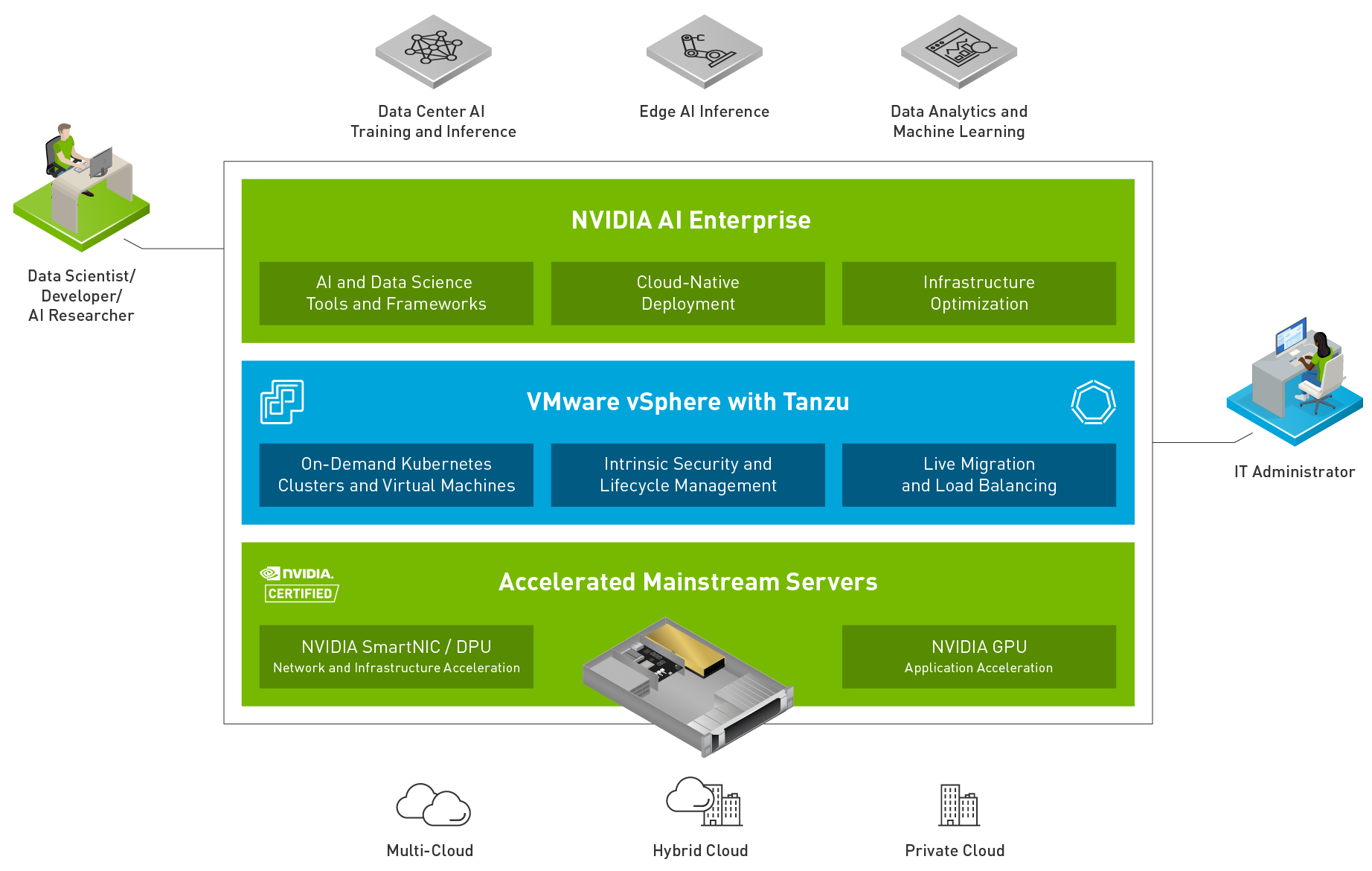

At VMworld, there were various announcements and updates around projects that VMware is working on. Three of these announcements/projects received a lot of attention from attendees: Project Monterey, Project Capitola, and the NVIDIA + VMware AI Ready Platform. For those who have not followed these projects and/or announcements, they are all about GPUs, DPUs, and memory. For the past couple of weeks, I have been developing new content for some upcoming VMUGs, and these projects will most likely be part of those presentations.

When I first started looking at these projects, I viewed them as three separate, unrelated efforts, but over time I realized that although they are separate projects, they are definitely related. I suspect that over time these projects, along with other projects like Radium, will play a crucial role in many infrastructures out there. Why? Well, because I strongly believe that data is at the heart of every business transformation! Analytics, artificial intelligence, and machine learning are what most companies will eventually require and use to grow their business, either by developing new products and services or by acquiring new customers in innovative ways. Of course, many companies already use these technologies extensively.

This is where the work with NVIDIA, Project Monterey, and Project Capitola comes into play. These projects will enable you to offer your customers a platform on which they can analyze data, learn from the outcome, and then develop new products and services, become more efficient, and/or expand the business in general. When I think about data analysis or machine learning, one thing that stands out to me is that today close to 50% of all AI/ML projects never go into production. There are a variety of reasons for this, but the key reasons these projects typically fail are complexity, security/privacy, and time to value.

This is why the collaboration between VMware and NVIDIA is so important. Deploying a VMware/NVIDIA certified solution should mitigate many of these risks, from both a hardware and a software perspective: the work with NVIDIA is not just about a certified hardware stack, it also includes the software stack that these environments typically require.

So what do Monterey and Capitola have to do with all of this? If you look at some of Frank's posts on the topic of AI and ML, it becomes clear that most infrastructures have a couple of obvious bottlenecks when it comes to AI/ML workloads. Some of these have also been bottlenecks for storage and VMs in general for a long time. What are these bottlenecks? Data movement and memory. This is where Monterey and Capitola come into play. Project Monterey is VMware's project around SmartNICs (or DPUs, as some vendors call them). SmartNICs/DPUs provide many benefits, but the key benefit is definitely their offloading capability. By offloading certain tasks to the SmartNIC, the CPU is freed up for other tasks, which benefits the workloads running on the host. We can also expect these devices to get faster, which will ultimately allow for faster and more efficient data movement. Of course, there are many more benefits, like security and isolation, but that is not what I want to discuss today.

Lastly, Project Capitola is all about memory! Software-Defined Memory, as it is called in this blog. Memory remains the bottleneck in most environments these days; with or without AI/ML, you can never have enough memory! Unfortunately, memory comes at a (high) cost. Project Capitola may not make your DIMMs cheaper, but it does aim to make the use of memory in your host and cluster more cost-efficient. Not only does it aim to provide memory tiering within a host, it also aims to provide pooled memory across hosts, which ties directly back to Project Monterey, as low-latency, high-bandwidth connections will be extremely important in that scenario! Is this purely aimed at AI/ML? No, of course not; tiers of memory and pools of memory should be available to all kinds of apps. Does your app need all of its data in memory, but without a "nanoseconds latency" requirement? That is where tiered memory comes into play! Do you need more memory for a workload than a single host can offer? That is where pooled memory comes into play! So many cool use cases come to mind.

Some of you may have already realized where these projects were leading to, and some may not have had that "aha moment" yet. Hopefully, the above helps you realize that it is projects like these that will ensure your datacenter is future-proof and will enable the transformation of your business.

If you want to learn more about some of the announcements and projects, just go to the VMworld website and watch the available recordings!