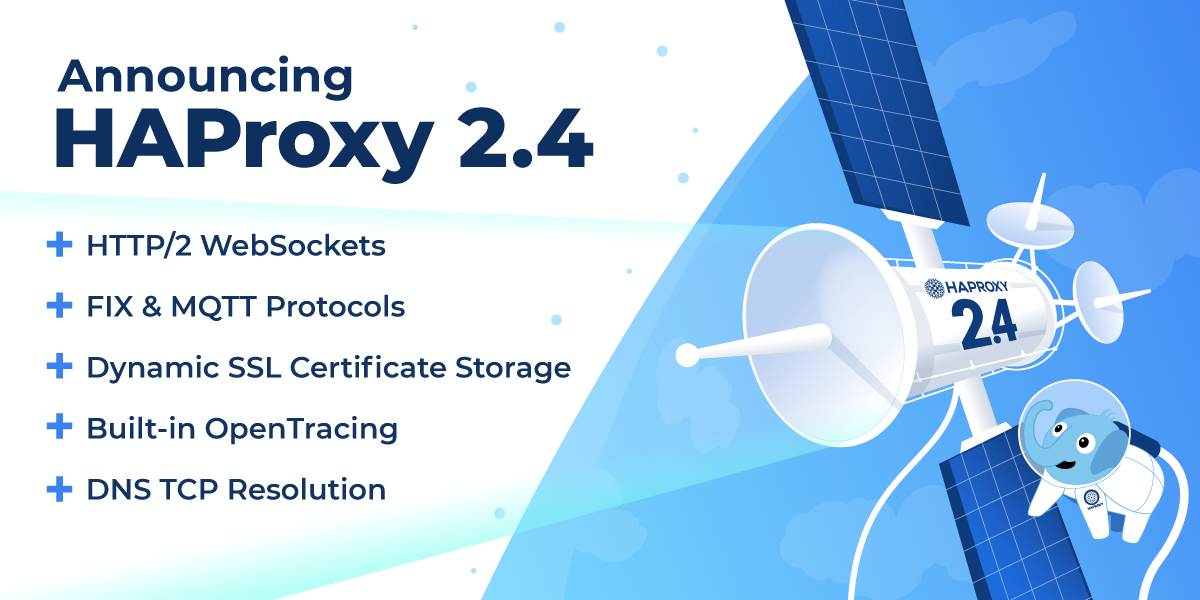

Announcing HAProxy 2.4

source link: https://www.haproxy.com/blog/announcing-haproxy-2-4/


Register for our live webinar to learn more about this release.

HAProxy 2.4 adds exciting features such as support for HTTP/2 WebSockets, authorization and routing of MQTT and FIX (Financial Information Exchange) protocol messages, DNS resolution over TCP, server timeouts that you can change on the fly, dynamic SSL certificate storage for client certificates sent to backend servers, and an improved cache; it adds a built-in OpenTracing integration, new Prometheus metrics, and circuit breaking improvements.

This release was truly a community effort and would not have been possible without the hard work of everyone involved in active discussions on the mailing list and the HAProxy project GitHub. The HAProxy community provides code submissions covering new functionality and bug fixes, documentation improvements, quality assurance testing, continuous integration environments, bug reports, and much more. Everyone has done their part to make this release possible! If you’d like to join this amazing community, you can find it on GitHub, Slack, Discourse, and the HAProxy mailing list.

This has been a busy first half of the year for HAProxy projects, with the recent releases of the HAProxy Kubernetes Ingress Controller 1.6 and the HAProxy Data Plane API 2.3. Also, HAProxy reached over 2 million HTTP requests per second on a single Arm-based AWS Graviton2 instance!

HAProxy 2.4 Changelog

Contributors

In this post, you will get an overview of the following updates included in this release:

- Protocols
- Load Balancing
- SSL/TLS Improvements
- Observability
- Cache
- Configuration
- New Sample Fetches & Converters
- Runtime API
- Lua
- Build
- Debugging
- Reorganization
- Testing
- Miscellaneous
- Contributors

Protocols

This version adds load balancing for HTTP/2 WebSockets, FIX (Financial Information eXchange), and MQTT.

HTTP/2 WebSockets

This release supports the WebSocket protocol over HTTP/2 through the extended CONNECT HTTP method, which is outlined in RFC 8441.

Historically, a WebSocket connection, defined in RFC 6455, started out as an ordinary HTTP/1.1 request. When the client sent the Connection: Upgrade and Upgrade: websocket headers, the connection was upgraded, or switched, to a raw TCP tunnel that carried the WebSocket protocol. That connection then belonged exclusively to that WebSocket client. HTTP/2 supports multiplexing, so multiple WebSocket tunnels and regular HTTP/2 streams can share a single TCP connection. This can improve performance between HAProxy and your backend servers, because many connections are condensed into one.
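Here is a minimal sketch of a setup where WebSockets can travel over HTTP/2 on both sides. It assumes that clients negotiate HTTP/2 through ALPN and that the backend server speaks HTTP/2 with RFC 8441 support. The certificate path, addresses, and section names are hypothetical:

```haproxy
frontend fe_main
  mode http
  # Offer HTTP/2 to clients through ALPN, with HTTP/1.1 as a fallback
  bind :443 ssl crt /etc/haproxy/certs/site.pem alpn h2,http/1.1
  default_backend be_ws

backend be_ws
  mode http
  # Keep idle WebSocket tunnels open for up to an hour
  timeout tunnel 1h
  # Talk HTTP/2 to the server so tunnels and streams can share one connection
  server ws1 192.168.0.20:8080 proto h2
```

The timeout tunnel line is a common companion setting for long-lived WebSocket connections. It is not specific to HTTP/2.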

Financial Information eXchange (FIX)

The Financial Information eXchange protocol is an open standard that has become the de facto protocol in the Fintech world. It allows firms, trading platforms, and regulators to communicate equity trading information, facilitating commerce despite differences in country, spoken language, and currency.

HAProxy can now accept, validate, and route FIX protocol messages. It can also inspect tag values within the Logon message sent by the client, which you can use to make authorization and routing decisions using HAProxy’s powerful ACL syntax. Read more about HAProxy’s FIX protocol implementation in the blog post HAProxy Enterprise 2.3 and HAProxy 2.4 Support the Financial Information eXchange Protocol (FIX).
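Below is a sketch of what this can look like, based on the 2.4 converters fix_is_valid and fix_tag_value. The port, the TargetCompID value ACME, and the backend names are hypothetical, and the backends would need to be defined elsewhere:

```haproxy
frontend fe_fix
  mode tcp
  bind :11011
  # Wait up to 10s for the client's first message (the Logon)
  tcp-request inspect-delay 10s
  # Reject anything that is not a well-formed FIX message
  tcp-request content reject unless { req.payload(0,0),fix_is_valid }
  # Route on the TargetCompID tag found in the Logon message
  use_backend be_fix_acme if { req.payload(0,0),fix_tag_value(TargetCompID) -m str ACME }
  default_backend be_fix_default
```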

MQTT

MQTT is a lightweight messaging protocol typically used for communicating with Internet of Things (IoT) devices. Because it is so lightweight, it scales effectively, and it’s not uncommon to have thousands of IoT devices communicating with a single, centralized MQTT service. In previous versions, HAProxy supported MQTT only as an opaque TCP connection. Dave Doyle and Dylan O’Mahony presented how they used HAProxy at Bose to serve 5 million MQTT connections on Kubernetes.

HAProxy 2.4 improves its support for MQTT. Now, you can use the mqtt_is_valid converter to check whether the first message sent by the client (a CONNECT message) or by the server (a CONNACK message) is a valid MQTT packet. You can also read the fields in those initial messages to perform an authorization check and/or initialize session persistence to a server.

Below, we use the mqtt_is_valid converter to reject any TCP connections that send an invalid CONNECT message:

tcp-request content reject unless { req.payload(0,0),mqtt_is_valid }

The mqtt_field_value converter reads a field from a CONNECT or CONNACK message. In the example below, we use it on the stick on line to persist a client to a backend server based on the client_identifier field in the CONNECT message. We are using the Mosquitto open-source MQTT broker for the servers.

frontend fe_mqtt
  mode tcp
  bind :1993
  # Wait up to 10s for the client's CONNECT message before evaluating content rules
  tcp-request inspect-delay 10s
  # Reject connections that have an invalid MQTT packet
  tcp-request content reject unless { req.payload(0,0),mqtt_is_valid }
  default_backend be_mqtt

backend be_mqtt
  mode tcp
  # Create a stick table for session persistence
  stick-table type string len 32 size 100k expire 30m
  # Use ClientID / client_identifier as persistence key
  stick on req.payload(0,0),mqtt_field_value(connect,client_identifier)
  server mosquitto1 10.1.0.6:1883
  server mosquitto2 10.1.0.7:1883

Another way to use this is to outright reject a connection unless the client_identifier matches a certain value, as shown below:

backend be_mqtt
  mode tcp
  # Deny a request unless it matches
  # the client identifier "mosquitto_1234"
  tcp-request content reject unless { req.payload(0,0),mqtt_field_value(connect,client_identifier) mosquitto_1234 }
  server mosquitto1 10.1.0.6:1883
  server mosquitto2 10.1.0.7:1883

Load Balancing

This version adds DNS resolution over TCP, which allows much larger DNS responses to be used for DNS-based service discovery. It also improves support for circuit breaking.

DNS TCP Resolution

With a resolvers section in your configuration, you can declare which DNS nameservers HAProxy should query to resolve backend server hostnames, rather than specifying IP addresses. If configured to do so, HAProxy will resolve these hostnames continuously, which is quite useful when your backend application changes its IP address frequently, which is common in cloud-native environments. It also allows DNS-based service discovery.

Until now, HAProxy could resolve DNS over UDP only, which caps the maximum size of a DNS response. You can raise that limit to a degree by setting the accepted_payload_size directive in the resolvers section. However, more organizations now host many replicas of a microservice under a single DNS name, which produces a large DNS response. As a result, the 8192-byte maximum that HAProxy previously allowed for accepted_payload_size has become a bottleneck.

HAProxy 2.4 adds support for resolving hostnames over TCP. Because TCP is connection-oriented, this raises the cap on response size significantly: HAProxy will accept TCP responses as large as 65,535 bytes. In the example below, we prefix the nameserver’s address with tcp@:

resolvers mydns
  # recommended value for UDP is 4096
  # TCP can go up to 65535
  accepted_payload_size 65535
  nameserver dns1 tcp@<nameserver-ip>:53
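To put a TCP-capable resolvers section to work, a backend can fill its server slots from DNS, for example from SRV records. This sketch reuses the mydns section above. The service name and the number of slots are hypothetical:

```haproxy
backend be_app
  mode http
  # Create up to 20 server slots populated from the SRV records of a
  # hypothetical service name, resolved through the mydns section
  server-template app 20 _http._tcp.app.example.local resolvers mydns resolve-prefer ipv4 init-addr none check
```

With many replicas behind one name, a large SRV response is exactly the case where resolving over TCP helps.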

Circuit Breaking Improvements

In our blog post Circuit Breaking in HAProxy, we describe two ways to implement circuit breaking between your backend services. The advanced way uses stick tables, ACLs, and variables to achieve the desired effect. However, since there wasn’t a stick table counter for tracking server-side 5xx errors—the http_err_rate counter tracks client-side, HTTP 4xx errors only—we had to settle for the general-purpose counter, gpc0_rate, to track server-side errors and trigger circuit breaking when there were too many.

In this latest version, you can use the new http_fail_cnt and http_fail_rate counters to track server-side, HTTP 5xx errors. This clarifies the circuit breaking example, since you don’t need to use the general-purpose counter.

Here’s an updated snippet showing how to trigger the circuit breaker and return an HTTP 503 Service Unavailable response to all callers for 30 seconds when more than 50% of requests within 10 seconds have resulted in a server error:

backend serviceA
  # Create storage for tracking client info
  stick-table type string size 1 expire 30s store http_req_rate(10s),http_fail_rate(10s),gpc1

  # Is the circuit broken?
  acl circuit_open be_name,table_gpc1 gt 0

  # Reject request if circuit is broken
  http-request return status 503 content-type "application/json" string "{ \"message\": \"Circuit Breaker tripped\" }" if circuit_open

  # Begin tracking requests
  http-request track-sc0 be_name

  # Store the HTTP request rate and error rate in variables
  http-response set-var(res.req_rate) sc_http_req_rate(0)
  http-response set-var(res.err_rate) sc_http_fail_rate(0)

  # Check if error rate is greater than 50% using some math
  http-response sc-inc-gpc1(0) if { int(100),mul(res.err_rate),div(res.req_rate) gt 50 }

  server s1 192.168.0.10:80 check
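Here is a worked check of the last rule, using illustrative rates rather than measurements. The chain int(100),mul(res.err_rate),div(res.req_rate) computes 100 times the error rate divided by the request rate, using integer arithmetic. The breaker trips only when the result is strictly greater than 50:

```latex
\left\lfloor \frac{100 \cdot \mathrm{err\_rate}}{\mathrm{req\_rate}} \right\rfloor > 50
\qquad\text{e.g.}\qquad
\left\lfloor \frac{100 \cdot 6}{10} \right\rfloor = 60 > 50 \ (\text{trips}),
\qquad
\left\lfloor \frac{100 \cdot 5}{10} \right\rfloor = 50 \not> 50 \ (\text{does not trip})
```

Multiplying by 100 before dividing matters here. With integer division, computing err_rate/req_rate first would truncate every failure ratio below 100% to 0.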

SSL/TLS Improvements

This release adds dynamic SSL certificate storage and management for server-side certificates through the Runtime API. It also improves SSL/TLS connection reuse.

Dynamic SSL Certificate Storage (Server side)

Not only can HAProxy authenticate clients using SSL client certificate authentication, but it can also send its own client certificate to your backend servers so that those servers can authenticate it. However, updating HAProxy’s client certificate(s) had required reloading HAProxy after the change. Reloads are completely safe and will drop zero traffic, but it does inconveniently take some amount of time to load the certificates into memory, especially if you’re dealing with tens of thousands of unique client certificates—stress on the word unique because HAProxy will load a certificate that’s used in multiple places only once.

This release makes it possible to update HAProxy’s client certificates on the fly using the Runtime API, avoiding a reload altogether. The API commands are the same Dynamic SSL Certificate Storage commands introduced in HAProxy 2.2, but now extended to the server line in a backend. You can create, update, and delete SSL client certificates in memory.

Below, we fetch the list of SSL certificates to find the client certificate, then update it:

# Get loaded certificates
$ echo "show ssl cert" | socat /var/run/haproxy/api.sock -
# filename
/etc/haproxy/cert/haproxy-client.pem

# Update HAProxy's client certificate
$ echo -e -n "set ssl cert /etc/haproxy/cert/haproxy-client.pem <<\n$(cat /etc/haproxy/cert/haproxy-client.pem)\n\n" | socat /var/run/haproxy/api.sock -
Transaction created for certificate /etc/haproxy/cert/haproxy-client.pem!

# Commit the SSL transaction
$ echo "commit ssl cert /etc/haproxy/cert/haproxy-client.pem" | socat /var/run/haproxy/api.sock -
Committing /etc/haproxy/cert/haproxy-client.pem. Success!

You can now also use the ssl-load-extra-files and ssl-load-extra-del-ext global directives for the certificates referenced on server lines in your backends. This lets you store a certificate’s private key in a separate file from the certificate itself. Historically, you’ve had to combine the certificate and private key into a single PEM file.
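Here is a sketch of that layout. It assumes the client certificate is stored as haproxy-client.crt with its key beside it in haproxy-client.key, and it uses a hypothetical server address and CA file:

```haproxy
global
  # Look for each certificate's private key in a separate .key file
  ssl-load-extra-files key
  # Replace the certificate's extension rather than appending to it,
  # so haproxy-client.crt pairs with haproxy-client.key
  ssl-load-extra-del-ext

backend be_app
  mode http
  # Present HAProxy's client certificate to the server and verify the server's certificate
  server s1 192.168.0.10:443 ssl crt /etc/haproxy/cert/haproxy-client.crt verify required ca-file /etc/haproxy/cert/ca.pem
```

The extension replacement expects the certificate file to use the .crt extension.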

Connection Reuse Improvements

As you harness HAProxy’s configuration routing capabilities, you may be unaware of the magic that is happening behind the scenes when these rules are applied. One of those magic items involves connection reuse. Reusing a connection allows you to improve latency, conserve resources, and ultimately service more requests.

When a connection is handled over TLS, many connections need to be marked private (for example, when sending SNI) to prevent reusing the wrong information (e.g. wrong SNI). That prevents a connection from being reused in many cases. HAProxy 2.3 gave some leeway here, allowing you to reuse connections to backend servers when the SNI was hardcoded using a directive like sni str(example.local). HAProxy 2.4 can now reuse connections to backend servers even when the SNI is calculated dynamically, such as from the request’s Host header (e.g. sni req.hdr(host)).
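Here is a sketch of a backend that computes the SNI per request from the Host header. The server address and CA file are hypothetical. As described above, 2.4 no longer has to keep such connections private, so a later request that produces the same SNI value can reuse an idle connection:

```haproxy
backend be_app
  mode http
  # "safe" is the default reuse mode; it is shown here only for clarity
  http-reuse safe
  # SNI is derived from each request's Host header
  server s1 192.168.0.10:443 ssl verify required ca-file /etc/haproxy/cert/ca.pem sni req.hdr(host)
```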

Observability

This release adds a built-in OpenTracing filter, an improved Prometheus exporter, and SSL/TLS session and handshake statistics.

Built-in OpenTracing

HAProxy 2.3 introduced support for OpenTracing in HAProxy by leveraging the Stream Processing Offload Engine, which although it was a step forward, did have known limitations including the inability to attach spans to a trace that had already been created by another service or proxy. HAProxy 2.4 adds support for OpenTracing compiled directly into the core codebase where it does not have this limitation. You’ll find it in the addons directory of the HAProxy GitHub project.

To enable it, first you must install the opentracing-c-wrapper. Then, you can build with the USE_OT=1 flag specified in your make command, as shown below:

$ PKG_CONFIG_PATH=/opt/lib/pkgconfig \
    make -j $(nproc) \
    TARGET=linux-glibc \
    USE_LUA=1 \
    USE_OPENSSL=1 \
    USE_PCRE=1 \
    USE_SYSTEMD=1 \
    USE_OT=1

Note that the -j $(nproc) flag tells the make command to use all available cores / threads while compiling.
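With an OT-enabled build, you attach the filter to a proxy with a filter line. The sketch below uses a hypothetical id and configuration file path. The contents of that file, which describe the tracer and its scopes, are covered in the README in the addons/ot directory:

```haproxy
frontend fe_main
  mode http
  bind :80
  # Attach the built-in OpenTracing filter to this frontend
  filter opentracing id ot-main config /etc/haproxy/ot.cfg
  default_backend be_app
```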

Prometheus Exporter Improvements

This version brings several improvements to the native Prometheus exporter. First, the exporter code has been moved into a new directory, addons/promex. Second, instead of specifying EXTRA_OBJS when calling make, as was required before…

$ make TARGET=linux-glibc \
    USE_LUA=1 \
    USE_OPENSSL=1 \
    USE_PCRE=1 \
    USE_SYSTEMD=1 \
    EXTRA_OBJS="contrib/prometheus-exporter/service-prometheus.o"

…you can compile it in by adding USE_PROMEX=1:

$ make TARGET=linux-glibc \
    USE_LUA=1 \
    USE_OPENSSL=1 \
    USE_PCRE=1 \
    USE_SYSTEMD=1 \
    USE_PROMEX=1
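Once compiled in, the exporter is exposed as an HTTP service that you route to with http-request use-service. Here is a minimal sketch; the port and path are arbitrary choices, not requirements:

```haproxy
frontend prometheus
  mode http
  bind :8405
  # Serve the built-in exporter on /metrics
  http-request use-service prometheus-exporter if { path /metrics }
  no log
```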

Also, state values for frontends, backends, and servers (up, down, maint, etc.) have been changed from gauge values to labels, making it easier to group by these criteria. This is a breaking change from version 2.3.

New metrics have been added to report on listeners, stick tables, and backend/server weight information, which we list below:

- haproxy_process_uptime_seconds

- haproxy_process_recv_logs_total

- haproxy_process_build_info

- haproxy_listener_current_sessions

- haproxy_listener_max_sessions

- haproxy_listener_limit_sessions

- haproxy_listener_sessions_total

- haproxy_listener_bytes_in_total

- haproxy_listener_bytes_out_total

- haproxy_listener_requests_denied_total

- haproxy_listener_responses_denied_total

- haproxy_listener_request_errors_total

- haproxy_listener_status

- haproxy_listener_denied_connections_total

- haproxy_listener_denied_sessions_total

- haproxy_listener_failed_header_rewriting_total

- haproxy_listener_internal_errors_total

- haproxy_backend_uweight

- haproxy_server_uweight

- haproxy_sticktable_size

- haproxy_sticktable_used

SSL/TLS Statistics

HAProxy 2.3 allowed you to see additional statistics on the HAProxy Stats page by adding a directive named stats show-modules to the frontend that hosts the page:

frontend stats
  # The stats page requires HTTP mode
  mode http
  bind :8404
  stats enable
  stats uri /
  stats refresh 10s
  stats show-modules
  no log

This adds a new column called Extra modules to the page that shows HTTP/2-related statistics. HAProxy 2.4 adds more fields there for tracking SSL/TLS handshake and session statistics. You will find the following new fields, which are also displayed when you call the Runtime API’s show stat command, provided that you have at least one bind line that terminates SSL:

- ssl_sess: Total number of SSL sessions established
- ssl_reused_sess: Total number of SSL sessions reused
- ssl_failed_handshake: Total number of failed handshakes

Cache

Caching was introduced in HAProxy 1.8 and has continued to be improved. This release brings support for the Vary header via a new keyword process-vary, which is set to on or off. It defaults to being off, which means that a response containing a Vary header will simply not be cached. It will also automatically normalize the accept-encoding header to improve the use of the cache.

To complement this, a new directive max-secondary-entries allows you to control the maximum number of cached entries with the same primary key. For example, if you cache based on URL, but vary on the User-Agent, you may create a lot of slightly different cached entries for the same URL. This prevents the cache from being filled with duplicates of the same resource. It requires process-vary to be on and it defaults to 10.
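Here is a sketch of a cache section that enables Vary processing, together with a backend that uses it. The names, sizes, and server address are hypothetical:

```haproxy
cache mycache
  # Total cache size in megabytes
  total-max-size 100
  # Maximum number of seconds an object stays cached
  max-age 60
  # Cache responses that carry a Vary header instead of skipping them
  process-vary on
  # Keep at most 10 variants per primary key (the default)
  max-secondary-entries 10

backend be_app
  mode http
  http-request cache-use mycache
  http-response cache-store mycache
  server s1 192.168.0.10:80
```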

Configuration

The HAProxy configuration adds nestable configuration conditionals that allow you to include or exclude blocks of configuration depending on environment, feature, and version information. It also improves the configuration validator with a new suggestions tool, allows for modifying the default path used for accessing supplementary files, and adds new pseudo variables. Your defaults sections can now be named, allowing for improved logical grouping within the configuration.

Conditionals

Have you ever wanted to reuse an HAProxy configuration across multiple environments but needed to account for slight differences between them, such as to enable new HAProxy features depending on the version of HAProxy currently running? HAProxy 2.4 brings a set of nestable preprocessor-like directives that allow you to include or skip some blocks of configuration.

The following directives have been introduced to form conditional blocks:

- .if <condition> ... .endif
- .elif <condition>
- .else
- .diag
- .notice
- .warning
- .alert

The .if and .elif statements are followed by a function name like version_atleast to include the nested block of code if the function returns true.

.if version_atleast(2.4)
  # include configuration lines
.endif

Below we list the available functions that you can use as conditions:

- defined(<name>): returns true if an environment variable <name> exists, regardless of its contents
- feature(<name>): returns true if feature <name> is listed as present in the features list reported by haproxy -vv
- streq(<str1>,<str2>): returns true if the two strings are equal
- strneq(<str1>,<str2>): returns true if the two strings differ
- version_atleast(<ver>): returns true if the current HAProxy version is at least as recent as <ver>, otherwise false
- version_before(<ver>): returns true if the current HAProxy version is strictly older than <ver>, otherwise false

You can also nest multiple conditions. Below, we check whether the version of HAProxy is at least 2.4 and whether the OpenTracing feature is available. If so, we include the OpenTracing filter.

frontend fe_main
  .if version_atleast(2.4)
    .if feature(OT)
      # enable OpenTracing
      filter opentracing config tracing.conf
    .endif
  .endif

You’ll find the OpenTracing feature string, OT, and other feature strings by calling haproxy -vv.

Feature list : +EPOLL -KQUEUE +NETFILTER +PCRE -PCRE_JIT -PCRE2 -PCRE2_JIT +POLL -PRIVATE_CACHE +THREAD -PTHREAD_PSHARED +BACKTRACE -STATIC_PCRE -STATIC_PCRE2 +TPROXY +LINUX_TPROXY +LINUX_SPLICE +LIBCRYPT +CRYPT_H +GETADDRINFO +OPENSSL -LUA +FUTEX +ACCEPT4 -CLOSEFROM +ZLIB -SLZ +CPU_AFFINITY +TFO +NS +DL +RT -DEVICEATLAS -51DEGREES -WURFL +SYSTEMD -OBSOLETE_LINKER +PRCTL +THREAD_DUMP -EVPORTS +OT -QUIC -PROMEX -MEMORY_PROFILING

The .diag, .notice, .warning, and .alert directives, which you’ll place inside a conditional block, print a diagnostic message that you can use to notify in the logs when the block of configuration was inserted.

.if version_atleast(2.4)
  .diag "Bob Smith, 2021-05-07: replace 'redirect' with 'return' after switch to 2.4"
  # configuration snippet
.endif

The table below describes these directives:

- .diag "message": emit this message only when in diagnostic mode (-dD)
- .notice "message": emit this message at level NOTICE
- .warning "message": emit this message at level WARNING
- .alert "message": emit this message at level ALERT
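Here is a sketch that combines an environment check, a feature check, and a status message. SSL_ONLY is a hypothetical environment variable set by your deployment, and the certificate path is hypothetical:

```haproxy
frontend fe_main
  mode http
  bind :443 ssl crt /etc/haproxy/certs/site.pem
  # Also listen on plain HTTP unless SSL_ONLY is set to "yes"
  .if strneq("$SSL_ONLY",yes)
    bind :80
  .endif
  # Serve metrics only when the Prometheus exporter is compiled in
  .if feature(PROMEX)
    http-request use-service prometheus-exporter if { path /metrics }
  .else
    .warning "PROMEX not built in: fe_main will not serve /metrics"
  .endif
  default_backend be_app
```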

Validation

When you run HAProxy with the -c flag, it checks the configuration file for errors and then exits. This version improves the output, suggesting alternative syntax for errors it finds. Below we pipe an incorrect configuration to HAProxy:

$ printf "listen f\nbind :8000 tcut\n" | haproxy -c -f /dev/stdin

[ALERT] 070/101358 (25146) : parsing [/dev/stdin:2] : 'bind :8000' unknown keyword 'tcut'; did you mean 'tcp-ut' maybe ?
[ALERT] 070/101358 (25146) : Error(s) found in configuration file : /dev/stdin
[ALERT] 070/101358 (25146) : Fatal errors found in configuration.

You’ll find that suggestions have been enabled for many different directives within the HAProxy configuration to help point you in the right direction when encountering an error.

Default Path

By default, any file paths in your configuration—paths to map files, ACL files, etc.—that use a relative path are loaded from the current directory where the HAProxy process is started.

However, you may want to force all relative paths to start from a different location. A new global directive default-path allows you to specify the path from which you would like HAProxy to load additional files. It accepts one of the following options:

- current: Relative file paths are loaded from the directory the process was started in. This is the default.
- config: Relative file paths are loaded from the directory containing the configuration file.
- parent: Relative file paths are loaded from the parent of the directory containing the configuration file.
- origin <path>: Relative file paths are loaded from the designated path.
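As a sketch, a configuration that keeps its map files next to haproxy.cfg could use the config option. The map file hosts.map and the backend be_default are hypothetical:

```haproxy
global
  # Resolve relative paths against the directory holding the configuration file
  default-path config

frontend fe_main
  mode http
  bind :80
  # hosts.map is looked up next to the configuration file, not in the startup directory
  use_backend %[req.hdr(host),lower,map(hosts.map,be_default)]
```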

Pseudo Variables

Some pseudo-variables have been added, which can be helpful when troubleshooting.

- .FILE: the name of the configuration file currently being parsed
- .LINE: the line number of the configuration file currently being parsed, starting at one
- .SECTION: the name of the section currently being parsed, or its type if the section doesn’t have a name (e.g. “global”)

These variables are resolved at the location where they are parsed. For example, if a .LINE variable is used in a log-format directive located in a defaults section, its line number will be resolved before parsing and compiling the log-format directive. So, this same line number will be reused by subsequent proxy sections.
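As a sketch, assuming the pseudo-variables are expanded with the same ${...} syntax as environment variables, a response header can point back at the rule that set it. The header name is hypothetical:

```haproxy
frontend fe_main
  mode http
  bind :80
  # Expands at parse time to this file's name, this line's number,
  # and the section name (here "fe_main")
  http-response set-header X-Config-Origin "${.FILE}:${.LINE} ${.SECTION}"
  default_backend be_app
```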

Named Defaults

An HAProxy defaults section allows you to reuse a wide variety of settings in both frontend and backend sections. You can specify multiple defaults sections, and each frontend or backend inherits the settings of the closest defaults section that precedes it. Let’s assume that we had a defaults section for HTTP and another for TCP. Our hypothetical configuration may look roughly like this as pseudocode:

defaults
  <tcp settings here>

frontend fe_tcp
  ...
backend be_tcp
  ...

-------------------

defaults
  <http settings here>

frontend fe_http
  ...
backend be_http
  ...

HAProxy 2.4 introduces support for named defaults sections, which a frontend or backend can inherit explicitly with the from keyword. The example above can now be written like this:

defaults tcp-defaults
  ...

defaults http-defaults
  ...

frontend fe_tcp from tcp-defaults
  ...

frontend fe_http from http-defaults
  ...

backend be_tcp from tcp-defaults
  ...

backend be_http from http-defaults
  ...

You can also extend a named defaults section from another defaults section if you want to modify only a small portion of the settings, but keep the rest.

defaults tcp-defaults
  mode tcp
  timeout connect 5s
  timeout client 5s
  timeout server 5s

defaults http-defaults from tcp-defaults
  mode http

Here, the section http-defaults inherits all of the settings from the section tcp-defaults, but changes the mode from tcp to http.

Dynamic Server Timeouts

Timeout settings in HAProxy give you granular control over how long to wait at various points when relaying messages between a client and server. You can use them to control everything from the initial client connection, idle times for both the client and server, and even for setting timeouts on the length of time it takes a client to transfer a full HTTP request, which can protect against certain types of DoS attacks.

However, in some cases, you may require even more control. HAProxy 2.4 adds the ability to change the timeout server and timeout tunnel settings dynamically using the http-request set-timeout directive. You can use this to set custom timeouts on a per-host or per-URI basis, pull a timeout value from an HTTP header, or change timeouts using a Map file.

The example below has a default server timeout of five seconds but it will update it to 30 seconds if the path begins with /slow/.

backend be_main
  timeout server 5s
  http-request set-timeout server 30s if { path -m beg /slow/ }

For the next example, we first create a Map file named host.map that maps hostnames to a timeout value in milliseconds.

# host.map
www.example.com 3000
api.example.com 1000
websockets.example.com 60000

We apply the server timeout from the Map file based on the HTTP Host header. The map’s keys are strings and its values are integers, so we use the map_str_int converter:

frontend fe_main
  http-request set-timeout server req.hdr(host),map_str_int(host.map)

The new fetch methods be_server_timeout, be_tunnel_timeout, cur_server_timeout, and cur_tunnel_timeout help you determine whether a timeout was changed and what its current value is:

- be_server_timeout: Returns the configuration value in milliseconds for the server timeout of the current backend
- be_tunnel_timeout: Returns the configuration value in milliseconds for the tunnel timeout of the current backend
- cur_server_timeout: Returns the currently applied server timeout in milliseconds for the stream
- cur_tunnel_timeout: Returns the currently applied tunnel timeout in milliseconds for the stream
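As a debugging aid, you can expose both values in response headers. In the sketch below, the header names and server address are hypothetical. For a request under /slow/, the first header would show the configured 5000 ms and the second the applied 30000 ms:

```haproxy
backend be_main
  mode http
  timeout server 5s
  http-request set-timeout server 30s if { path -m beg /slow/ }
  # Configured value from this backend versus the value applied to this stream
  http-response set-header X-Server-Timeout-Configured %[be_server_timeout]
  http-response set-header X-Server-Timeout-Applied %[cur_server_timeout]
  server s1 192.168.0.10:80
```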

HTTP Protocol Upgrade

HAProxy allows you to set mode tcp in a frontend and route traffic to a backend that has mode http. This allows you to receive various types of traffic in the frontend and upgrade the connection to HTTP mode depending on the backend. HAProxy 2.4 makes it possible to do this all in the frontend section by using the new tcp-request content switch-mode directive. Currently, only TCP-to-HTTP upgrades are supported, not the other way around.

Here’s an example that uses the predefined ACL HTTP to check whether the traffic is valid HTTP and, if so, switches the mode to http:

frontend fe_main
  mode tcp
  tcp-request inspect-delay 5s
  tcp-request content switch-mode http if HTTP
  ...
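Here is a sketch of one port that accepts both HTTP and a non-HTTP protocol. It assumes that non-HTTP traffic should stay in TCP mode. Addresses, ports, and section names are hypothetical:

```haproxy
frontend fe_mixed
  mode tcp
  bind :8080
  tcp-request inspect-delay 5s
  # Upgrade to HTTP mode only when the payload parses as HTTP
  tcp-request content switch-mode http if HTTP
  use_backend be_http if HTTP
  # Anything else stays in TCP mode
  default_backend be_tcp

backend be_http
  mode http
  server web1 192.168.0.30:80

backend be_tcp
  mode tcp
  server raw1 192.168.0.31:9000
```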

Header Deletion with Pattern Matching

After the removal of rspdel in HAProxy 2.1 there was a need to be able to remove headers based on a matched pattern. This release accommodates that by adding an -m <method> argument to the http-request del-header, http-response del-header, and http-after-response del-header directives.

The method can be str for an exact string match, beg for a prefix match, end for a suffix match, sub for a substring match, and reg for a regular expression match. For example, if you wanted to remove any headers that begin with X-Forwarded from a client’s request, you would be able to do the following:

http-request del-header X-Forwarded -m beg
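The other methods follow the same pattern. Here is a sketch with hypothetical header names. The regular expression is written in lowercase, which is a cautious choice because header names may not keep the case the client sent:

```haproxy
frontend fe_main
  mode http
  bind :80
  # Remove request headers whose names match a regular expression
  http-request del-header ^x-debug- -m reg
  # Remove any response header whose name contains "powered"
  http-response del-header powered -m sub
  default_backend be_app
```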

HTTP Request Conditional Body Wait Time

Historically, if you needed to wait for a full request payload to perform analysis you would use the directive option http-buffer-request. This had some drawbacks. First, it was an on or off toggle for the entire frontend/backend and couldn’t be enabled on-demand, such as on a per-request basis. Also, it was only available on the request side and was not available for the response body.

The new http-request wait-for-body and http-response wait-for-body directives allow you to conditionally wait for and buffer a request or response body. These actions may be used as a replacement for option http-buffer-request.

In the snippet below, HAProxy waits at most one second for the body of a POST request, or until it has received at least 1k (1,024 bytes) of it:

http-request wait-for-body time 1s at-least 1k if METH_POST

Here is a similar example that shows how to wait for an HTTP response from the server before performing further analysis on it:

http-response wait-for-body time 1s at-least 10k
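Once the body is buffered, later rules can inspect it. This sketch pairs wait-for-body with the new json_query converter. The JSON field tenant and the header name are hypothetical:

```haproxy
frontend fe_api
  mode http
  bind :80
  # Buffer POST bodies for up to 1s, or until at least 1k has arrived
  http-request wait-for-body time 1s at-least 1k if METH_POST
  # Read a field from the buffered JSON body
  http-request set-var(txn.tenant) req.body,json_query('$.tenant') if METH_POST
  http-request set-header X-Tenant %[var(txn.tenant)] if { var(txn.tenant) -m found }
  default_backend be_app
```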

URI Normalization (Experimental)

Every day, attackers probe web applications, looking for vulnerabilities and testing evasion techniques that may let them slip past defined security policies. Some evasion techniques include using percent-encoded characters, adding dots (.) within a path, or appending extra slashes. These are only a small subset of the evasion techniques that attackers may employ. However, de-normalized URIs are used not only by attackers but also by legitimate applications. If you’re interested in the full scope of possibilities, you may want to check out this thread from the HAProxy mailing list.

By default, HAProxy does not attempt to normalize paths. There are a few reasons for this, one of which is that if it did, it would remove some visibility since the request logs would not show the URIs as they were sent. That could blind you to an ongoing attack. A new feature was introduced that attempts to solve this while remaining transparent and giving you control over which data is normalized.

A new directive, http-request normalize-uri, normalizes the request’s URI if a given condition is true.

The syntax is:

http-request normalize-uri <normalizer> [ { if | unless } <condition> ]

Set the normalizer parameter to one of the following:

- fragment-encode: Encodes “#” as “%23”. Example: /#foo -> /%23foo
- fragment-strip: Removes the URI’s “fragment” component. Example: /#foo -> /
- path-merge-slashes: Merges adjacent slashes within the “path” component into a single slash. Examples: // -> /, /foo//bar -> /foo/bar
- path-strip-dot: Removes “/./” segments within the “path” component. Examples: /. -> /, /./bar/ -> /bar/, /a/./a -> /a/a, /.well-known/ -> /.well-known/ (no change)
- path-strip-dotdot: Normalizes “/../” segments within the “path” component. Examples: /foo/../ -> /, /foo/../bar/ -> /bar/, /foo//../ -> /foo/
- percent-decode-unreserved: Decodes unreserved percent-encoded characters to their literal representation. Examples: /%61dmin -> /admin, /foo%3Fbar=baz -> /foo%3Fbar=baz (no change), /%%36%36 -> /%66 (decoded only once)
- percent-to-uppercase: Uppercases letters within percent-encoded sequences. Example: /%6f -> /%6F
- query-sort-by-name: Sorts the query string parameters by parameter name. Examples: /?c=3&a=1&b=2 -> /?a=1&b=2&c=3, /?aaa=3&a=1&aa=2 -> /?a=1&aa=2&aaa=3

Both percent-decode-unreserved and percent-to-uppercase support an additional parameter, strict, that will return an HTTP 400 when an invalid sequence is met. This feature is in experimental mode and you must include expose-experimental-directives in your configuration’s global section to use it.
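Here is a sketch of a normalization chain placed ahead of an access rule. The order shown is one reasonable choice, not a requirement. It decodes unreserved characters first, so that an encoded dot such as “%2E” becomes a plain dot before the dot-segment normalizers run. The /admin rule is hypothetical:

```haproxy
global
  # Required while normalize-uri is experimental
  expose-experimental-directives

frontend fe_main
  mode http
  bind :80
  # "strict" returns an HTTP 400 on invalid percent-encoding
  http-request normalize-uri percent-decode-unreserved strict
  http-request normalize-uri percent-to-uppercase
  http-request normalize-uri path-merge-slashes
  http-request normalize-uri path-strip-dot
  http-request normalize-uri path-strip-dotdot
  # This check now sees the normalized path, so /%61dmin or //admin is caught too
  http-request deny if { path -m beg /admin }
  default_backend be_app
```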

New Sample Fetches & Converters

This table lists fetches that are new in HAProxy 2.4:

Name Descriptionbaseq

Returns the concatenation of the first Host header and the path part of the request with the query-string, which starts at the first slash

bc_dst

This is the destination ip address of the connection on the server side, which is the server address HAProxy connected to.

bc_dst_port

Returns an integer value corresponding to the destination TCP port of the connection on the server side, which is the port HAproxy connected to.

bc_src

This is the source ip address of the connection on the server side, which is the server address haproxy connected from.

bc_src_port

Returns an integer value corresponding to the TCP source port of the connection on the server side, which is the port HAproxy connected from.

be_server_timeout

Returns the configuration value in milliseconds for the server timeout of the current backend

be_tunnel_timeout

Returns the configuration value in milliseconds for the tunnel timeout of the current backend

cur_server_timeout

Returns the currently applied server timeout in milliseconds for the stream

cur_tunnel_timeout

Returns the currently applied tunnel timeout in milliseconds for the stream

fe_client_timeout

Returns the configuration value in milliseconds for the client timeout of the current frontend

sc_http_fail_cnt(<ctr>[,<table>])

Returns the cumulative number of HTTP response failures from the currently tracked counters. This includes both response errors and 5xx status codes other than 501 and 505.

sc_http_fail_rate(<ctr>[,<table>])

Returns the average rate of HTTP response failures from the currently tracked counters, measured as the number of failures over the period configured in the table. This includes both response errors and 5xx status codes other than 501 and 505. See also src_http_fail_rate.

ssl_c_der

Returns the DER formatted certificate presented by the client when the incoming connection was made over an SSL/TLS transport layer
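The rule that sc_http_fail_cnt and sc_http_fail_rate use to decide what counts as a failure can be modeled as a small predicate. The Python sketch below is a hypothetical model of that classification, not HAProxy code; the response_error flag is an assumption standing in for whatever HAProxy classifies as a response error, since that cannot be inferred from a status code alone.

```python
from typing import Iterable, Optional, Tuple


def is_http_failure(status: Optional[int], response_error: bool = False) -> bool:
    """Would this response count as a failure? (model, not HAProxy code)"""
    if response_error:
        # Assumption: a response error counts whatever the status code.
        return True
    if status is None:
        return False
    # 5xx counts, except 501 (Not Implemented) and 505 (HTTP Version Not Supported).
    return 500 <= status <= 599 and status not in (501, 505)


def count_failures(responses: Iterable[Tuple[Optional[int], bool]]) -> int:
    """Cumulative count of failures, in the spirit of sc_http_fail_cnt."""
    return sum(1 for status, error in responses if is_http_failure(status, error))
```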

The following converters have been added:

json_query(<json_path>,[<output_type>])

Extracts a value from a JSON input using a JSON path. The json_query converter supports the JSON types string, boolean, and number.

xxh3

Hashes a binary input sample into a signed 64-bit quantity using the XXH3 64-bit variant of the XXhash hash function

ub64dec

This converter is the base64url variant of the b64dec converter. base64url encoding is the “URL and Filename Safe Alphabet” variant of base64 encoding. It is also the encoding used in the JWT (JSON Web Token) standard.

ub64enc

This converter is the base64url variant of the base64 converter.

url_enc

Takes a string provided as input and returns the encoded version as output
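The difference between base64 and base64url is easiest to see on real data. This Python sketch uses the standard base64 module; the function names mirror the ub64enc and ub64dec converters but are hypothetical. It strips the "=" padding when encoding because JWT segments are unpadded, which is an assumption about the output you want rather than a statement about HAProxy's exact ub64enc output. The test value eyJhbGciOiJIUzI1NiJ9 is a typical JWT header segment.

```python
import base64


def ub64enc(data: bytes) -> str:
    """base64url-encode without "=" padding, as JWT segments are written."""
    # urlsafe_b64encode uses "-" and "_" instead of "+" and "/".
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def ub64dec(text: str) -> bytes:
    """Decode base64url, restoring any "=" padding that was stripped."""
    padded = text + "=" * (-len(text) % 4)
    return base64.urlsafe_b64decode(padded)
```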

Runtime API

HAProxy 2.4 adds functionality to the Runtime API.

Help Filtering

The Runtime API is a powerful tool that allows you to make in-memory changes to HAProxy that take effect immediately without a reload. It has grown to support over 100 commands, which may make it difficult to find the exact command that you are searching for. This release attempts to improve on that by adding a filter parameter to the help command.

In the snippet below, we display help for any command that starts with add:

$ echo "help add" | socat /var/run/haproxy/api.sock -

The following commands are valid at this level:
  add acl [@<ver>] <acl> <pattern> : add an acl entry
  add map [@<ver>] <map> <key> <val> : add a map entry (payload supported instead of key/val)
  add server <bk>/<srv> : create a new server (EXPERIMENTAL)
  add ssl crt-list <list> <cert> [opts]* : add to crt-list file <list> a line <cert> or a payload
  help [<command>] : list matching or all commands
  prompt : toggle interactive mode with prompt
  quit : disconnect
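Every example in this section pipes a command into socat. If you would rather script the Runtime API, the following Python sketch does the same thing over the Unix socket: it connects, sends one newline-terminated command, closes its write side as socat does once echo finishes, and reads until HAProxy closes the connection. The socket path /var/run/haproxy/api.sock is the one used in this post; adjust it to match the stats socket line in your configuration. The function name runtime_api is hypothetical.

```python
import socket


def runtime_api(command: str,
                sock_path: str = "/var/run/haproxy/api.sock",
                timeout: float = 5.0) -> str:
    """Send one command, like: echo "<command>" | socat <sock_path> -"""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        sock.connect(sock_path)
        sock.sendall(command.encode("utf-8") + b"\n")
        sock.shutdown(socket.SHUT_WR)  # signal end of input, as socat does
        chunks = []
        # Outside interactive (prompt) mode the connection is closed after
        # the reply, so read until EOF.
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Usage: print(runtime_api("help add")) should print the same help text as the socat command above.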

Command Hints

If you enter an invalid Runtime API command, it will now attempt to find and print the closest match.

$ echo "show state" | socat /var/run/haproxy/api.sock -

Unknown command, but maybe one of the following ones is a better match:
  show stat [desc|json|no-maint|typed|up]* : report counters for each proxy and server
  show servers state [<backend>] : dump volatile server information (all or for a single backend)
  help : full commands list
  prompt : toggle interactive mode with prompt
  quit : disconnect
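HAProxy's matching algorithm is not described here, so the sketch below swaps in Python's standard difflib module to illustrate the general idea: rank the known commands by similarity to the mistyped one and print the closest. Its scores will not necessarily reproduce HAProxy's suggestions, and KNOWN_COMMANDS is a hypothetical, partial list taken from the help output above.

```python
import difflib
from typing import List, Sequence

# A few commands from the help output above; a real list would be much longer.
KNOWN_COMMANDS = [
    "show stat",
    "show servers state",
    "add acl",
    "add map",
    "help",
    "prompt",
    "quit",
]


def suggest(unknown: str, commands: Sequence[str] = KNOWN_COMMANDS,
            limit: int = 3) -> List[str]:
    """Return up to `limit` known commands most similar to `unknown`, best first."""
    # get_close_matches scores candidates with SequenceMatcher.ratio().
    return difflib.get_close_matches(unknown, commands, n=limit, cutoff=0.5)
```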

Server agent-port and check-addr

This release adds two new Runtime API commands:

set server <backend>/<server> agent-port <port>

Change the port used for agent checks.

set server <backend>/<server> check-addr <ip4 | ip6> [port <port>]

Change the IP address used for server health checks. Optionally, change the port used for server health checks.
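If you script these two commands, it helps to validate the arguments before sending them. The following Python sketch (hypothetical helper names) builds the command strings and uses the standard ipaddress module to accept either an IPv4 or an IPv6 address, as check-addr does; each resulting string can be piped to socat as in the other examples.

```python
import ipaddress
from typing import Optional


def _check_port(port: int) -> int:
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


def agent_port_cmd(backend: str, server: str, port: int) -> str:
    return f"set server {backend}/{server} agent-port {_check_port(port)}"


def check_addr_cmd(backend: str, server: str, addr: str,
                   port: Optional[int] = None) -> str:
    # ip_address() accepts IPv4 or IPv6 and raises ValueError otherwise.
    ip = ipaddress.ip_address(addr)
    cmd = f"set server {backend}/{server} check-addr {ip}"
    if port is not None:
        cmd += f" port {_check_port(port)}"  # the port part is optional
    return cmd
```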

Dynamic Server SSL/TLS Connection Settings

HAProxy 2.4 adds the set server ssl command, which allows you to activate SSL on outgoing connections to a server at runtime, without requiring a reload.

$ echo "set server be_main/srv1 ssl on" | socat /var/run/haproxy/api.sock -

ACL/Map File Atomic Updates

HAProxy has supported applying updates to ACL and Map file entries through its Runtime API for quite some time now. It allows you to dynamically add or remove entries from the running process. However, in some cases, you may want to replace the entire ACL or Map file and there hasn’t been a safe way to do this atomically. This feature was requested by the team working on the HAProxy Kubernetes Ingress Controller, so you can expect their Map file usage to be improved.

This release supports versioned ACL and Map files, allowing you to add a new version in parallel to the existing one and then perform an atomic replacement. To use this, you must first prepare a new version by calling the prepare command on the existing file name or ACL reference ID:

$ echo "prepare acl /etc/haproxy/acl/denylist.acl" | socat /var/run/haproxy/api.sock -

New version created: 1

Next, add your entries referencing the returned version number:

$ echo "add acl @1 /etc/haproxy/acl/denylist.acl 4.4.4.4" | socat /var/run/haproxy/api.sock -
$ echo "add acl @1 /etc/haproxy/acl/denylist.acl 5.5.5.5" | socat /var/run/haproxy/api.sock -
$ echo "add acl @1 /etc/haproxy/acl/denylist.acl 6.6.6.6" | socat /var/run/haproxy/api.sock -

Finally, commit your updates.

$ echo "commit acl @1 /etc/haproxy/acl/denylist.acl" | socat /var/run/haproxy/api.sock -
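Scripted, the prepare/add/commit sequence above comes down to parsing the version number from the prepare reply, then generating one add acl command per entry followed by a single commit. This Python sketch only builds the command strings (the helper names are hypothetical); each string would be sent over the Runtime API socket, for example with socat as shown above.

```python
import re
from typing import Iterable, List

_VERSION_REPLY = re.compile(r"New version created:\s*(\d+)")


def parse_prepared_version(reply: str) -> int:
    """Extract N from the 'New version created: N' reply to 'prepare acl'."""
    match = _VERSION_REPLY.search(reply)
    if match is None:
        raise ValueError(f"unexpected reply to prepare: {reply!r}")
    return int(match.group(1))


def versioned_acl_commands(acl: str, version: int, entries: Iterable[str]) -> List[str]:
    """Commands that fill version @N of an ACL, then commit it in one step."""
    commands = [f"add acl @{version} {acl} {entry}" for entry in entries]
    # Until the commit runs, traffic keeps matching the current version.
    commands.append(f"commit acl @{version} {acl}")
    return commands
```

A script would first send f"prepare acl {acl}", pass the reply to parse_prepared_version, then send the generated commands in order.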

Experimental Mode

A new experimental mode was introduced in the Runtime API that enables certain features that are still under development and may exhibit unexpected behavior. To enable it, send experimental-mode on before sending your command. Once used, the process is marked as tainted, which you can see with the show info command. The tainted flag persists for the lifetime of the HAProxy process.

Dynamic Servers (Experimental)

HAProxy 1.8 introduced the concept of dynamic servers through the server-template directive. This works by creating a pool of empty server slots that can be filled and activated on the fly through the Runtime API. This release introduces a new method for adding and removing servers dynamically through the Runtime API: the add server and del server commands. It does not yet support all of the various server options, but will be expanded over time. It also does not inherit options from a defined default-server directive.

This feature is under active development and requires you to activate experimental mode.

$ echo "experimental-mode on; add server be_app/app4 192.168.1.22:80" | socat /var/run/haproxy/api.sock -

New server registered.

$ echo "experimental-mode on; del server be_app/app4" | socat /var/run/haproxy/api.sock -

Server deleted.

Process-wide Variable Modification (Experimental)

An experimental Runtime API command, set var <name> <expression>, allows you to set or overwrite a process-wide variable <name> with the result of <expression>. This is useful for activating blue/green deployments. You can fetch the current value with get var <name>.

To use this command, you must first enable experimental mode with the new experimental-mode command.

Here’s an example that shows how you could use it to handle blue-green deployments. We set an initial value for the variable proc.myapp_version to blue, which routes all requests to the backend be_myapp_blue:

global
    set-var proc.myapp_version str(blue)

frontend fe_main
    mode http
    bind :::80
    bind :::443 ssl crt /etc/haproxy/certs/cert.pem alpn h2,http/1.1
    use_backend be_myapp_green if { var(proc.myapp_version) -m str green }
    use_backend be_myapp_blue if { var(proc.myapp_version) -m str blue }

Use the Runtime API’s get var command to get the current value of the variable:

$ echo "experimental-mode on; get var proc.myapp_version" | socat /var/run/haproxy/api.sock -

proc.myapp_version: type=str value=<blue>

Next, switch HAProxy to route traffic to the green version by calling set var:

$ echo "experimental-mode on; set var proc.myapp_version str(green)" | socat /var/run/haproxy/api.sock -

Validate the switch:

$ echo "experimental-mode on; get var proc.myapp_version" | socat /var/run/haproxy/api.sock -

proc.myapp_version: type=str value=<green>
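A deployment script can wrap the blue/green switch in two small helpers: one that builds the set var command and one that parses the get var reply to confirm the change. This Python sketch assumes the variable name proc.myapp_version and the reply format shown in the example above; the helper names are hypothetical.

```python
import re
from typing import Tuple

# Matches replies such as: proc.myapp_version: type=str value=<green>
_GET_VAR_REPLY = re.compile(r"^(?P<name>\S+): type=(?P<type>\w+) value=<(?P<value>.*)>$")


def set_color_cmd(color: str, var: str = "proc.myapp_version") -> str:
    """Build the command that routes traffic to the blue or green backend."""
    if color not in ("blue", "green"):
        raise ValueError(f"unknown color: {color!r}")
    # set var is experimental, so experimental mode is enabled on the same line.
    return f"experimental-mode on; set var {var} str({color})"


def parse_get_var(reply: str) -> Tuple[str, str, str]:
    """Split a 'get var' reply into (name, type, value)."""
    match = _GET_VAR_REPLY.match(reply.strip())
    if match is None:
        raise ValueError(f"unexpected reply: {reply!r}")
    return match.group("name"), match.group("type"), match.group("value")
```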

Lua has been extended to support multithreading. A new global directive, lua-load-per-thread, loads a Lua script as an independent Lua state in each thread; use it for Lua scripts that should run in multiple threads. The existing lua-load keyword is still available and should be used for Lua modules meant to cover the entire process, such as jobs registered with core.register_task.

Build

The following build changes were added:

- New ARMv8 CPU targets

- SLZ is now the default compression library and is enabled by default. To disable it, build with USE_SLZ= or use zlib instead with USE_ZLIB=1

- sched_setaffinity support was added for DragonFlyBSD

- DeviceAtlas gained PCRE2 support

- Support for defer-accept was added on FreeBSD

- crypt(3) is now supported on OpenBSD

- make clean now also deletes .o, .a, and .s files for the additional components in the addons, admin, and dev directories

Debugging

A new command line argument -dD has been introduced, which enables diagnostic mode. It prints extra warnings concerning suspicious configuration statements.

A new profiling.memory global directive, which you can set to on or off, enables per-function memory profiling. It keeps usage statistics for malloc/calloc/realloc/free calls anywhere in the process, which are reported through the Runtime API's show profiling command. That command supports a sorting criterion, byaddr, which makes it convenient to observe changes between subsequent dumps. Its memory argument helps diagnose suspected memory leaks.

A new Runtime API command, set profiling memory, has also been added; it accepts on or off and is meant to be used when abnormal memory usage is observed. The performance impact is typically around 1% but may be a bit higher on threaded servers. It requires USE_MEMORY_PROFILING to be set at compile time and is limited to certain operating systems; today, it is known to work on linux-glibc.

The show profiling Runtime API command has also been extended to support the additional keywords: all, status, tasks, and memory. It allows for specifying the maximum number of lines that you would like to have returned. It also has the ability to dump the stats collected by the scheduler. This helps to identify which functions are responsible for high latencies.

Reorganization

The directory structure has been reorganized a bit. Useful tools and contributions in the project’s contrib directory were moved into the addons and admin directories, while others were moved out of the tree and into independent GitHub projects under the HAProxy namespace.

Testing

Tim Düsterhus and Ilya Shipitsin led efforts to enable and expand the use of GitHub Actions CI for testing builds. Travis CI is used to build binaries for less common architectures, notably s390x, ppc64, and arm64.

Integration with the Varnish test suite was released with HAProxy 1.9 and aids in detecting regression bugs. The number of regression tests has grown significantly since then. This release adds 43 new tests, bringing the total number to 144. These tests are run with virtually every git push.

Miscellaneous

- The tune.chksize directive has been deprecated and is set for removal in HAProxy 2.5.

- Previously, idle frontend connections could keep an old process alive after a reload until a request arrived on them, their keep-alive timeout expired, or the hard-stop-after timer fired. Now they are closed gracefully, either immediately or right after the current response has been sent. As a result, old processes no longer linger as long.

- Idle backend connections were only closed on reload by the process exiting, which sometimes resulted in keeping a number of TIME_WAIT sockets consuming precious source ports. Now on exit they are actively killed so that they won’t go to the TIME_WAIT state.

- TCP log outputs will now automatically create a ring buffer.

- The memory allocator was reworked to be more dynamic and release memory faster, which should result in 2.4 using less memory than previous versions on varying loads.

- Work was done to significantly reduce the worst-case processing latency compared to previous versions, making 2.4 a perfect fit for timing-sensitive environments (as shown in the blog article)

- On startup, the CPU topology is inspected, and if a multi-socket CPU setup is found, HAProxy automatically sets the process affinity to the first node with active CPUs. This behavior is controlled by the numa-cpu-mapping global directive.

- Layer 7 retries now support 401 and 403 HTTP status codes

- http-check send now supports adding a Connection header.

- The global section now supports setting process-level variables:

set-var proc.current_state str(primary)

- A new predefined ACL, HTTP_2.0, has been added, which matches HTTP/2 requests.

- The server-state-file-name directive has a new argument, use-backend-name, that allows you to load the state file using the name of the backend. This only applies when the load-server-state-from-file directive is set to local.

- A new srvkey parameter has been added to the stick-table directive. It lets you specify how a server is identified. It accepts either name, which uses the server's currently defined name, or addr, which uses the server's current network address, including the port. addr is useful when you are using service discovery to generate the addresses.

- New log-format parameter, %HPO, was added, which allows logging of the request path without the query string.

- .gitattributes was updated to add the “cpp” userdiff driver for .c and .h files. This improves the output of git diff in specific cases, primarily when using git diff --word-diff

- The SPOA Python example has been improved

- XXHash was updated to version 0.8.0. A new XXH3 variant of hash functions shows a noticeable improvement in performance (especially on small data), and also brings 128-bit support, better inlining, and streaming capabilities. Performance comparison is available here.

- New configuration examples (quick-test.cfg and basic-config-edge.cfg) have been added to the examples directory.

- Properly color a backend that has a status of DOWN or going UP in orange

- The peers protocol wireshark dissector has been improved

- The version string has been updated from the legacy HA-Proxy to HAProxy

Contributors

We want to thank each and every contributor who was involved in this release. Contributors help in various forms such as discussing design choices, testing development releases and reporting detailed bugs, helping users on Discourse and the mailing list, managing issue trackers and CI, classifying Coverity reports, providing documentation fixes and keeping the documentation in good shape, operating some of the infrastructure components used by the project, reviewing patches, and contributing code.

The following list doesn’t do justice to all of the amazing people who offer their time to the project, but we wanted to give a special shout out to individuals who have contributed code, and their area of contribution.

- Florian Apolloner: BUG FIX
- Baptiste Assmann: BUG FIX, NEW FEATURE
- Émeric Brun: BUG FIX, CLEAN UP, NEW FEATURE
- David Carlier: BUG FIX, DOCUMENTATION, NEW FEATURE
- Daniel Corbett: BUG FIX, CLEAN UP, DOCUMENTATION
- Gilchrist Dadaglo: BUG FIX, DOCUMENTATION
- William Dauchy: BUG FIX, BUILD, CLEAN UP, DOCUMENTATION, NEW FEATURE
- Amaury Denoyelle: BUG FIX, BUILD, CLEAN UP, DOCUMENTATION, NEW FEATURE, REORGANIZATION
- Dragan Dosen: BUG FIX, CLEAN UP, NEW FEATURE
- Tim Düsterhus: BUG FIX, BUILD, CLEAN UP, DOCUMENTATION, NEW FEATURE, REGTESTS/CI
- Christopher Faulet: BUG FIX, BUILD, CLEAN UP, DOCUMENTATION, NEW FEATURE, REORGANIZATION, REGTESTS/CI
- Thierry Fournier: BUG FIX, NEW FEATURE
- Matthieu Guegan: BUILD
- Olivier Houchard: BUG FIX, BUILD, NEW FEATURE
- Bertrand Jacquin: BUG FIX, NEW FEATURE
- Yves Lafon: NEW FEATURE
- William Lallemand: BUG FIX, BUILD, CLEAN UP, DOCUMENTATION, NEW FEATURE, REGTESTS/CI
- Frédéric Lécaille: BUG FIX, BUILD, CLEAN UP, NEW FEATURE
- Moemen MHEDHBI: CLEAN UP, NEW FEATURE
- Maximilian Mader: BUG FIX, CLEAN UP, NEW FEATURE
- Jérôme Magnin: BUG FIX, CLEAN UP
- Thayne McCombs: BUG FIX, CLEAN UP, DOCUMENTATION, NEW FEATURE, REGTESTS/CI
- Joao Morais: DOCUMENTATION
- Adis Nezirovic: BUG FIX
- Julien Pivotto: DOCUMENTATION, NEW FEATURE
- Christian Ruppert: BUILD
- Éric Salama: BUG FIX, NEW FEATURE
- Phil Scherer: DOCUMENTATION
- Ilya Shipitsin: BUILD, CLEAN UP, DOCUMENTATION, REGTESTS/CI
- Willy Tarreau: BUG FIX, BUILD, OPTIMIZATION, CONTRIB, CLEAN UP, DOCUMENTATION, NEW FEATURE, REORGANIZATION, REGTESTS/CI
- Rémi Tricot Le Breton: BUG FIX, DOCUMENTATION, NEW FEATURE
- Jan Wagner: DOCUMENTATION
- Miroslav Zagorac: BUG FIX, CONTRIB, DOCUMENTATION, NEW FEATURE
- Maciej Zdeb: BUG FIX, DOCUMENTATION, NEW FEATURE
- Alex: DOCUMENTATION, NEW FEATURE
- varnav: DOCUMENTATION
