Unsupervised 3D Neural Rendering of Minecraft Worlds

source link: https://nvlabs.github.io/GANcraft/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Overview

What exactly is GANcraft trying to solve?

GANcraft aims at solving the world-to-world translation problem. Given a semantically labeled block world such as those from the popular game Minecraft, GANcraft is able to convert it to a new world which shares the same layout but with added photorealism. The new world can then be rendered from arbitrary viewpoints to produce images and videos that are both view-consistent and photorealistic. GANcraft simplifies the process of 3D modeling of complex landscape scenes, which will otherwise require years of expertise.

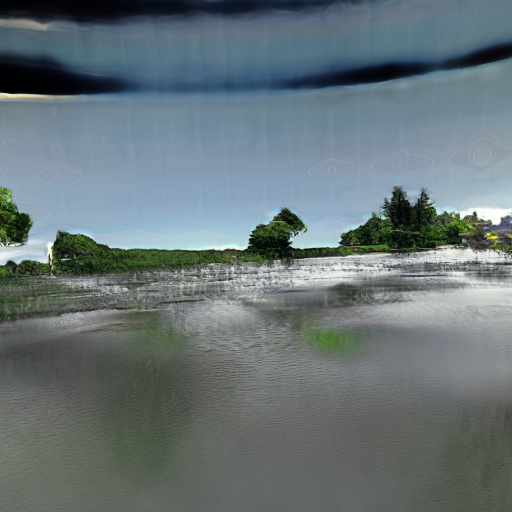

The "Why don't you just use im2im translation? " Question

As the ground truth photorealistic renderings for a user-created block world simply doesn't exist, we have to train models with indirect supervision. Some existing approaches are strong candidates. For example, one can use image-to-image translation (im2im) methods such as MUNIT and SPADE, originally trained on 2D data only, to convert per-frame segmentation masks projected from the block world, to realistic looking images. One can also use wc-vid2vid, a 3D-aware method, to generate view-consistent images through 2D inpainting and 3D warping while using the voxel surfaces as the 3D geometry. These models have to be trained on translating real segmentation maps to real images due to paired training data requirements, and then used on Minecraft to real translation. As yet another alternative, one can train a NeRF-W, which learns a 3D radiance field from a non-photometric consistent, but posed and 3D consistent image collection. This can be trained using predicted images from a im2im method (pseudo-ground truth, explained in the next section), which is the data closest to the requirements that we can get.- im2im methods such as MUNIT and SPADE does not preserve viewpoint consistency, as these methods have no knowledge of the 3D geometry, and each frame is generated independently.

- wc-vid2vid produces view-consistent video, but the image quality deterorates quickly with time due to error accumulation from blocky geometry and the train-test domain gap.

- NSVF-W (our implementation of NeRF-W with added NSVF-style voxel conditioning) produces view-consistent output as well, but the result looks dull and lacks fine detail.

Distribution Mismatch and Pseudo-ground truth

Assume that we have a suitable voxel-conditional neural rendering model which is capable of representing the photorealistic world. We still need a way to train it without any ground truth posed images. Adversarial training has achieved some success in small scale, unconditional neural rendering tasks when the posed images are not available. However, for GANcraft the problem is even more challenging. The block worlds from Minecraft usually have wildly different label distributions compared to the real world. For example, some scenes are completetly covered by snow or sand or water. There are also scenes that cross multiple biomes within a small area. Moreover, it is impossible to match the sampled camera distribution to that of internet photos when randomly sampling views from the neural rendering model.

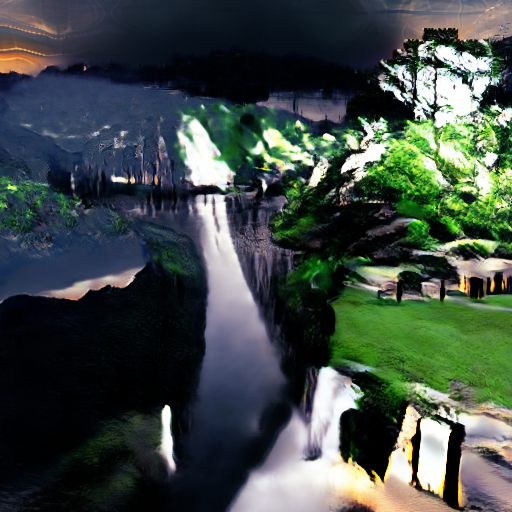

No pseudo-ground truth

W/ pseudo-ground truth

As shown in the first row, adversarial training using internet photos leads to unrealistic results, due to the complexity of the task. Producing and using pseudo-ground truths for training is one of the main contributions of our work, and significantly improves the result (second row).

Generating pseudo-ground truths

The pseudo-ground truths are the photorealistic images generated from segmentation masks using a pretrained SPADE model. As the segmentation masks are sampled from the block world, the pseudo-ground truths share the same labels and camera poses as the images generated from the same views. This not only reduces label and camera distribution mismatch, but also allows us to use stronger losses, such as the perceptual and L2 loss, for faster and more stable training.

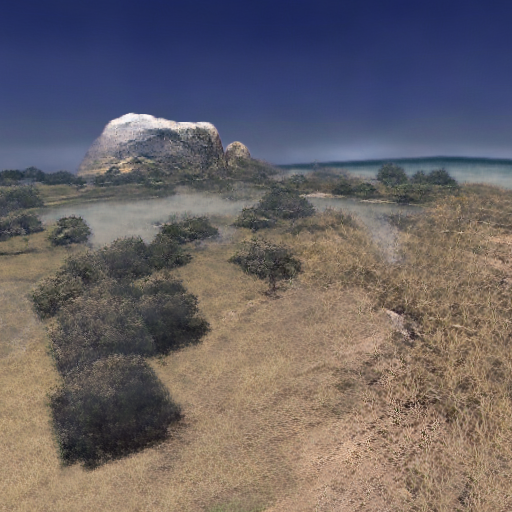

Hybird Voxel-conditional Neural Rendering

In GANcraft, we represent the photorealistic scene with a combination of 3D volumetric renderer and 2D image space renderer. We define a voxel-bounded neural radiance field: given a block world, we assign a learnable feature vector to every corner of the blocks, and use trilinear interpolation to define the location code at arbitrary locations within a voxel. A radiance field can then be defined implicitly using an MLP, which takes the location code, semantic label, and a shared style code as input and produces a point feature (similar to radiance) and its volume density. Given camera parameters, we render the radiance field to obtain a 2D feature map, which is converted to an image via a CNN.

The complete GANcraft architecture

The two-stage architecture significantly improves the image quality while reducing the computation and memory footprint, as the radiance field can be modeled with a simpler MLP, which is the computational bottleneck for implicit volume based methods. The proposed architecture is capable of handling very large worlds. In our experiments, we use voxel grids with a size of 512×512×256, which is equivalent to 65 acres or 32 soccer fields in the real world.Neural Sky Dome

Previous voxel-based neural rendering approaches cannot model sky that is infinitely far away. However, sky is an indispensable ingredient for photorealism. In GANcraft, we use an additional MLP to model sky. The MLP converts the camera ray direction to a feature vector which has the same dimension as the point features from the radiance field. This feature vector serves as the totally opaque, final sample on a ray, blending into the pixel feature according to the residual transmittance of the ray.

Generating Images with Diversified Appearance

The generation process of GANcraft is conditional on a style image. During training, we use the pseudo-ground truth as the style image, which helps explain away the inconsistency between the generated image and its corresponding pseudo-ground truth for the reconstruction loss. During evaluation, we can control the output style by providing GANcraft with different style images. In the example below, we linearly interpolate the style code across 6 different style images.

Interpolation between multiple styles

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK