A Peek at Percona Kubernetes Operator for Percona Server for MongoDB New Feature...

source link: https://www.percona.com/blog/2021/03/10/a-peek-at-percona-kubernetes-operator-for-percona-server-for-mongodb-new-features/

The latest 1.7.0 release of Percona Kubernetes Operator for Percona Server for MongoDB came out just recently and enables users to:

- Run multiple shards to scale MongoDB horizontally

- Deploy custom sidecar containers to extend the capabilities of the Operator and MongoDB in Kubernetes

- Automatically clean up Persistent Volume Claims (PVC) once a database cluster is removed

Today we will look into these new features, the use cases, and highlight some architectural and technical decisions we made when implementing them.

Sharding

The 1.6.0 release of our Operator introduced single shard support, which we covered in an earlier blog post that also explains why it makes sense. But horizontal scaling is not possible without support for multiple shards.

Adding a Shard

A new shard is just a new ReplicaSet which can be added under spec.replsets in cr.yaml:
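A minimal sketch of what this could look like, assuming an existing ReplicaSet named rs0 and a new one named rs1 (the names and sizes are illustrative, and all other ReplicaSet options are left out):

```yaml
# Fragment of cr.yaml: each entry under spec.replsets becomes one shard.
spec:
  sharding:
    enabled: true
  replsets:
  - name: rs0        # existing shard
    size: 3
  - name: rs1        # new shard (illustrative name)
    size: 3
```

Applying the updated cr.yaml is what prompts the Operator to create the new StatefulSet for rs1.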

Read more on how to configure sharding.

In the Kubernetes world, a MongoDB ReplicaSet is a StatefulSet with the number of pods specified in the spec.replsets[].size field.

Once pods are up and running, the Operator does the following:

- Initiates the ReplicaSet by connecting to the newly created pods running mongod

- Connects to mongos and adds the shard with the sh.addShard() command

Then the output of db.adminCommand({ listShards:1 }) will look like this:

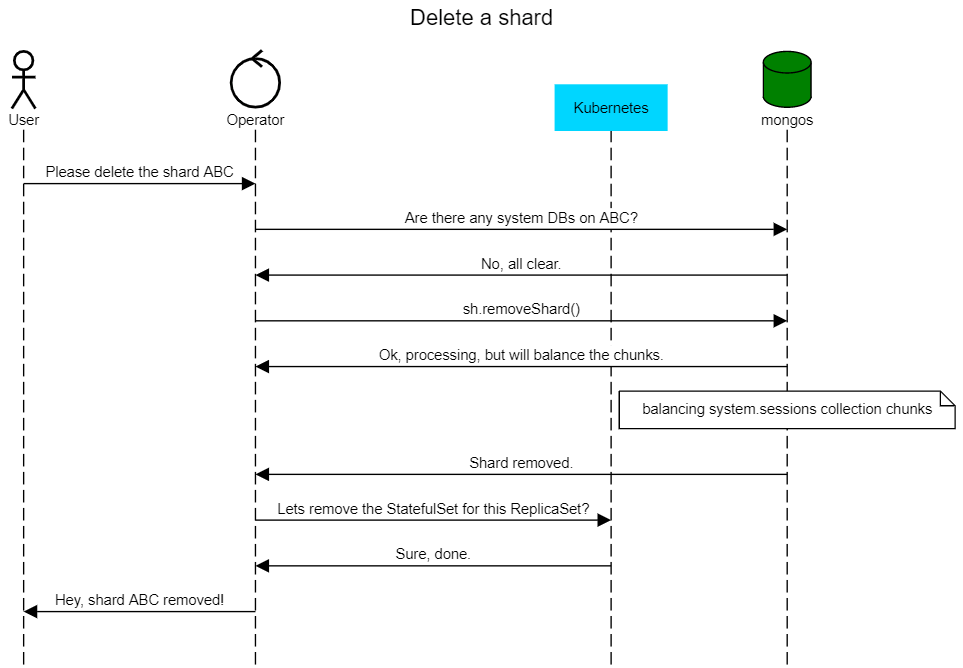

Deleting a Shard

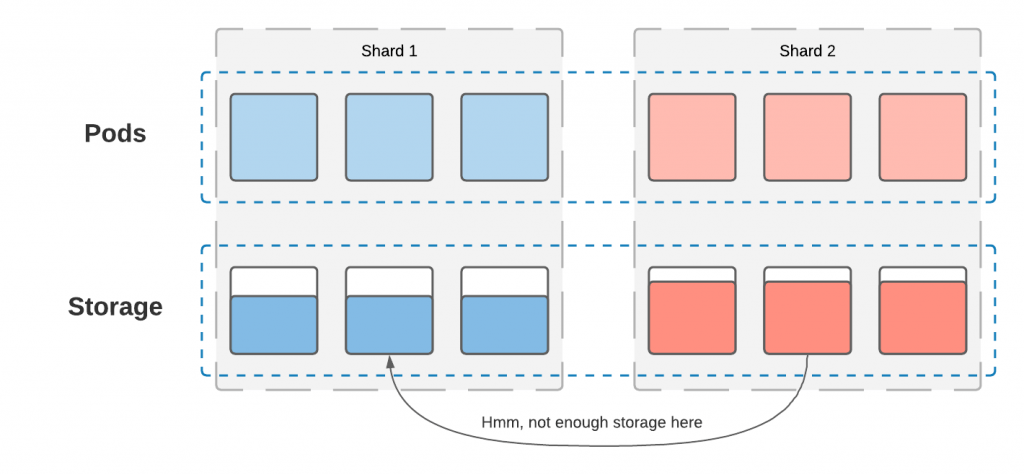

Percona Operators are built to simplify the deployment and management of the databases on Kubernetes. Our goal is to provide resilient infrastructure, but the operator does not manage the data itself. Deleting a shard requires moving the data to another shard before removal, but there are a couple of caveats:

- MongoDB does not always move the data automatically, for example when there are unsharded collections or jumbo chunks

- We hit the storage problem – what if another shard does not have enough disk space to hold the data?

There are two choices:

- Do not touch the data. The user moves the data manually, and then the Operator removes the empty shard.

- The Operator decides where to move the data and deals with storage issues by upscaling if necessary. Upscaling the storage can be tricky, as it requires certain capabilities from the Container Storage Interface (CSI) and the underlying storage infrastructure.

For now, we decided to pick option #1 and won’t touch the data, but in future releases, we would like to work with the community to introduce fully-automated shard removal.

When the user wants to remove the shard now, we first check if there are any non-system databases present on the ReplicaSet. If there are none, the shard can be removed:

Custom Sidecars

The sidecar container pattern allows users to extend the application without changing the main container image. Sidecars leverage the fact that all containers in a pod share storage and network resources.

Percona Operators have built-in support for Percona Monitoring and Management to gain monitoring insights for the databases on Kubernetes, but sometimes users may want to expose metrics to other monitoring systems. Let's see how mongodb_exporter can expose metrics when running as a sidecar alongside the ReplicaSet containers.

1. Create the monitoring user that the exporter will use to connect to MongoDB. Connect to mongod in the container and create the user:

2. Create the Kubernetes secret with this login and password. Encode both the username and password with base64:

Put these into the secret and apply:
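A sketch of such a Secret, assuming it is named mongoexp-secret and the monitoring user is called exporter (both names are illustrative, and the password value is a placeholder to be replaced with your own base64 string):

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mongoexp-secret                 # hypothetical name
type: Opaque
data:
  username: ZXhwb3J0ZXI=                # base64 of "exporter" (illustrative user)
  password: <base64-encoded password>   # placeholder, not a real value
```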

3. Add a sidecar for mongodb_exporter into cr.yaml and apply:
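One way this could be written is sketched below. It assumes a Secret named mongoexp-secret with username and password keys, an example exporter image, and hypothetical environment variable names (EXPORTER_USER, EXPORTER_PASS); adjust all of these to your setup. Because containers in a pod share the network namespace, the exporter reaches mongod on the loopback address.

```yaml
# Fragment of cr.yaml: sidecars are regular container specs
# attached to every pod of the ReplicaSet.
spec:
  replsets:
  - name: rs0
    size: 3
    sidecars:
    - name: mongodb-exporter
      image: bitnami/mongodb-exporter:latest   # assumed image, pin a version in practice
      env:
      - name: EXPORTER_USER                    # hypothetical variable name
        valueFrom:
          secretKeyRef:
            name: mongoexp-secret
            key: username
      - name: EXPORTER_PASS                    # hypothetical variable name
        valueFrom:
          secretKeyRef:
            name: mongoexp-secret
            key: password
      - name: MONGODB_URI                      # connection string read by mongodb_exporter
        value: "mongodb://$(EXPORTER_USER):$(EXPORTER_PASS)@127.0.0.1:27017"
      ports:
      - containerPort: 9216                    # mongodb_exporter's default metrics port
```

Kubernetes expands the $(VAR) references from the variables defined earlier in the same env list, so the credentials never appear in cr.yaml itself.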

All it takes now is to configure the monitoring system to fetch the metrics for each mongod Pod. For example, prometheus-operator will start fetching metrics once annotations are added to ReplicaSet pods:
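As an illustration, the widely used prometheus.io annotation convention could be applied as follows. This assumes the exporter listens on port 9216 and that your Prometheus scrape configuration is set up to honor these annotations; the annotations do nothing on their own.

```yaml
# Fragment of cr.yaml: annotations set here are added to the ReplicaSet pods.
spec:
  replsets:
  - name: rs0
    annotations:
      prometheus.io/scrape: "true"
      prometheus.io/port: "9216"   # assumed exporter port
```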

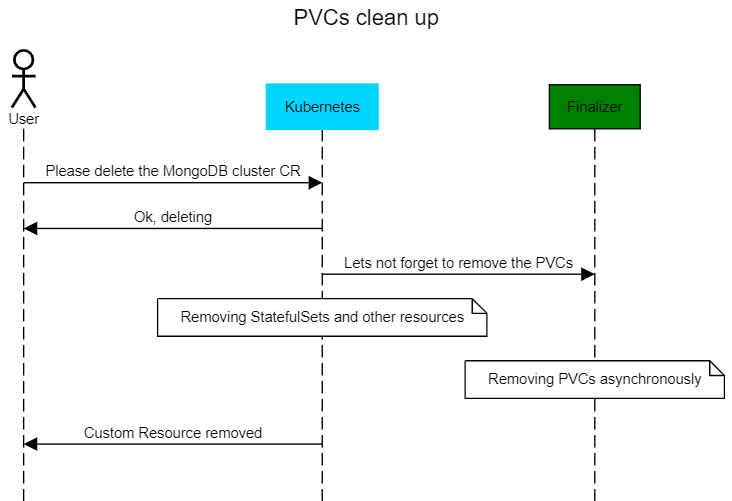

PVCs Clean Up

CI/CD pipelines commonly deploy MongoDB clusters on Kubernetes. When these clusters are terminated, their Persistent Volume Claims (PVCs) are left behind. We have now added automation that removes PVCs after cluster deletion. It relies on Kubernetes finalizers, which act as asynchronous pre-delete hooks. In our case, we attach the finalizer to the Custom Resource (CR) object that is created for the MongoDB cluster.

A user can enable the finalizer through cr.yaml in the metadata section:
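A minimal sketch, assuming the cluster is named my-cluster-name (an illustrative name) and using the delete-psmdb-pvc finalizer:

```yaml
# Fragment of cr.yaml: with this finalizer set, deleting the
# Custom Resource also removes the PVCs of the cluster.
metadata:
  name: my-cluster-name   # illustrative cluster name
  finalizers:
    - delete-psmdb-pvc
```

Leave the finalizer out for clusters whose data must outlive the Custom Resource, since the PVC removal happens as part of deletion.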

Conclusion

Percona is committed to providing production-grade database deployments on Kubernetes. Our Percona Kubernetes Operator for Percona Server for MongoDB is a feature-rich tool to deploy and manage your MongoDB clusters with ease. Our Operator is free and open source. Try it out by following the documentation, or help us make it better by contributing your code and ideas to our GitHub repository.
