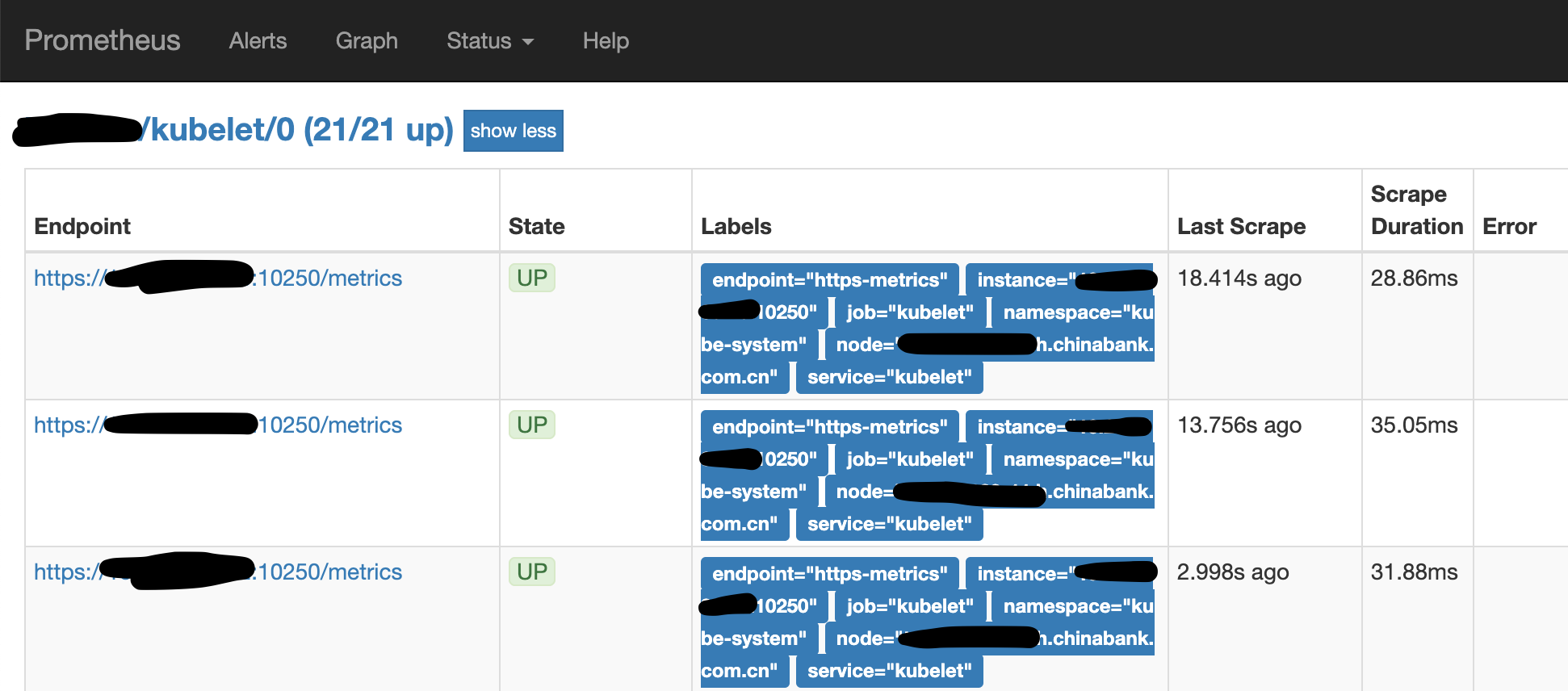

消失的Prometheus指标

source link: https://ieevee.com/tech/2019/10/29/cadvisor.html

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

消失的Prometheus指标

by 伊布

记一个线上故障的解决过程。

我们有一些GPU的机器,需要统计GPU的相关信息,数据是从Prometheus拿的,但有一天突然发现,部分GPU节点metrics的某些label是空的。

下面是正常节点的metrics。

container_accelerator_duty_cycle{acc_id="GPU-d8384090-81d2-eda5-ed02-8137eb037460",container_name="nvidia-device-plugin-ctr",endpoint="https-metrics",id="/kubepods/besteffort/podd917e00f-a779-11e9-b971-6805ca7f5b2a/38a5decf96e9f007fcb0059d79017ea3b3c29ff4c9a81b7fec86cf63c06baf53",image="sha256:7354b8a316796fba0463a68da79d79eb654d741241ca3c4f62a1ef24d8e11718",instance="10.0.0.1:10250",job="kubelet",make="nvidia",model="Tesla P40",name="k8s_nvidia-device-plugin-ctr_nvidia-device-plugin-daemonset-sn2cg_lambda_d917e00f-a779-11e9-b971-6805ca7f5b2a_1",namespace="ieevee",node="x1.ieevee.com",pod_name="nvidia-device-plugin-daemonset-sn2cg",service="kubelet"} 0

下面是异常节点的metrics。

container_accelerator_duty_cycle{acc_id="GPU-a7b535d0-d6ca-022c-5b23-1bff863646a4",container_name="",endpoint="https-metrics",id="/kubepods/besteffort/pod8bb25662-de9a-11e9-84e7-f8f21e04010c/cde3858becb05366e71f230e876204be586662f274dcb4a6e2b75ea404f2d5a9",instance="10.0.0.2:10250",job="kubelet",make="nvidia",model="Tesla V100-PCIE-16GB",name="",namespace="",pod_name=""}

可以看到,Prometheus取出来的数据,label中的container_name、name、namespace、pod_name都是空的。

container_accelerator_duty_cycle 这个指标是kubelet提供的(kubelet是Prometheus的一个target),可以通过https://10.0.0.2:10250/metrics查看metrics。

使用下面的命令查看metrics,对比从正常、异常节点的kubelet取出来的数据有什么不同。

curl -i -k -H "Authorization: Bearer ${token}" https://10.0.0.2:10250/metrics/cadvisor |grep container_accelerator_duty_cycle

正常节点:

container_accelerator_duty_cycle{acc_id="GPU-0e67d3a8-5c36-4232-46b1-14d4470adcb6",endpoint="https-metrics",id="/kubepods/besteffort/pod756bcd3a-e9a7-11e9-84e7-f8f21e04010c/9c6af6f329cf4d01462b0ba8c2f8bbb79269bc309434489228b4c97154f0f2d5",instance="10.0.0.1:10250",job="kubelet",make="nvidia",model="Tesla V100-PCIE-16GB",node="x1.ieevee.com",service="kubelet"}

异常节点:

container_accelerator_duty_cycle{acc_id="GPU-a7b535d0-d6ca-022c-5b23-1bff863646a4",container_name="",id="/kubepods/besteffort/pod8bb25662-de9a-11e9-84e7-f8f21e04010c/cde3858becb05366e71f230e876204be586662f274dcb4a6e2b75ea404f2d5a9",image="",make="nvidia",model="Tesla V100-PCIE-16GB",name="",namespace="",pod_name=""} 0

也就是说,从kubelet取出来的数据就已经有某些Label为空的问题了,问题跟Prometheus无关,接下来看看为什么kubelet会有问题。

kubelet与cadvisor

我们知道,kubelet很多指标,是通过cadvisor获取的,而cadvisor就直接运行在kubelet进程中,节点上并没有一个单独的cadvisor进程。不过cadvisor代码仍然是使用的是github.com/google/cadvisor,换句话说就是,cadvisor已经变成了一个SDK,供kubelet调用。

我们来简单分析下代码(以下基于1.11.1)。

kubelet在启动的时候,会初始化一个CAdvisorInterface。

func run(s *options.KubeletServer, kubeDeps *kubelet.Dependencies, stopCh <-chan struct{}) (err error) {

...

if kubeDeps.CAdvisorInterface == nil {

imageFsInfoProvider := cadvisor.NewImageFsInfoProvider(s.ContainerRuntime, s.RemoteRuntimeEndpoint)

kubeDeps.CAdvisorInterface, err = cadvisor.New(s.Address, uint(s.CAdvisorPort), imageFsInfoProvider, s.RootDirectory, cadvisor.UsingLegacyCadvisorStats(s.ContainerRuntime, s.RemoteRuntimeEndpoint))

if err != nil {

return err

}

}

初始化过程主要完成的就是启动一个cadvisor http server。

// New creates a cAdvisor and exports its API on the specified port if port > 0.

func New(address string, port uint, imageFsInfoProvider ImageFsInfoProvider, rootPath string, usingLegacyStats bool) (Interface, error) {

...

cadvisorClient := &cadvisorClient{

imageFsInfoProvider: imageFsInfoProvider,

rootPath: rootPath,

Manager: m,

}

err = cadvisorClient.exportHTTP(address, port)

if err != nil {

return nil, err

}

return cadvisorClient, nil

在exportHTTP函数中启动http server,并且向cadvisor http server注册了一个kubelet的回调函数containerLabels,kubelet在这个回调函数里,将自己需要的label加到cadvisor的metrics中去。

func (cc *cadvisorClient) exportHTTP(address string, port uint) error {

// Register the handlers regardless as this registers the prometheus

// collector properly.

mux := http.NewServeMux()

...

cadvisorhttp.RegisterPrometheusHandler(mux, cc, "/metrics", containerLabels)

先看看containerLabels做了什么。

func containerLabels(c *cadvisorapi.ContainerInfo) map[string]string {

// Prometheus requires that all metrics in the same family have the same labels,

// so we arrange to supply blank strings for missing labels

var name, image, podName, namespace, containerName string

if len(c.Aliases) > 0 {

name = c.Aliases[0]

}

image = c.Spec.Image

if v, ok := c.Spec.Labels[types.KubernetesPodNameLabel]; ok {

podName = v

}

if v, ok := c.Spec.Labels[types.KubernetesPodNamespaceLabel]; ok {

namespace = v

}

if v, ok := c.Spec.Labels[types.KubernetesContainerNameLabel]; ok {

containerName = v

}

set := map[string]string{

metrics.LabelID: c.Name,

metrics.LabelName: name,

metrics.LabelImage: image,

"pod_name": podName,

"namespace": namespace,

"container_name": containerName,

}

return set

}

我们看到了什么?pod_name、namespace、container_name,这些正好对应前面缺失的label:

container_accelerator_duty_cycle{acc_id="GPU-a7b535d0-d6ca-022c-5b23-1bff863646a4",container_name="",id="/kubepods/besteffort/pod8bb25662-de9a-11e9-84e7-f8f21e04010c/cde3858becb05366e71f230e876204be586662f274dcb4a6e2b75ea404f2d5a9",image="",make="nvidia",model="Tesla V100-PCIE-16GB",name="",namespace="",pod_name=""} 0

那么,cadvisor是什么时候调用containerLabels的呢?继续看RegisterPrometheusHandler。

func RegisterPrometheusHandler(mux httpmux.Mux, containerManager manager.Manager, prometheusEndpoint string, f metrics.ContainerLabelsFunc) {

r := prometheus.NewRegistry()

r.MustRegister(

metrics.NewPrometheusCollector(containerManager, f),

...

}

func NewPrometheusCollector(i infoProvider, f ContainerLabelsFunc) *PrometheusCollector {

if f == nil {

f = DefaultContainerLabels

}

c := &PrometheusCollector{

infoProvider: i,

containerLabelsFunc: f, //here 1

...

containerMetrics: []containerMetric{

{

name: "container_last_seen",

help: "Last time a container was seen by the exporter",

}, {

...

name: "container_accelerator_duty_cycle", //here 2

help: "Percent of time over the past sample period during which the accelerator was actively processing.",

valueType: prometheus.GaugeValue,

extraLabels: []string{"make", "model", "acc_id"},

getValues: func(s *info.ContainerStats) metricValues {

values := make(metricValues, 0, len(s.Accelerators))

for _, value := range s.Accelerators {

values = append(values, metricValue{

value: float64(value.DutyCycle),

labels: []string{value.Make, value.Model, value.ID},

})

}

return values

},

}, {

在NewPrometheusCollector中,我们看到了前面采集的container_accelerator_duty_cycle,以及make、model、acc_id这三个label;并且还将containerLabels挂到containerLabelsFunc上了。

这样,在用户API请求metrics的时候,cadvisor就可以通过调用kubelet的回调函数containerLabels,按kubelet的要求,将他需要的labels/values添加到metrics的labels中去了。

func (c *PrometheusCollector) collectContainersInfo(ch chan<- prometheus.Metric) {

..

for _, container := range containers {

values := make([]string, 0, len(rawLabels))

labels := make([]string, 0, len(rawLabels))

containerLabels := c.containerLabelsFunc(container)

for l := range rawLabels {

labels = append(labels, sanitizeLabelName(l))

values = append(values, containerLabels[l])

}

cadvisor与runtime

从前面的分析可以看到,丢失的labels,是kubelet“委托”cadvisor帮忙处理的,但是显然cadvisor处理出了点问题。是在哪里呢?

回到“委托函数”里,从下面的代码可以看到,containerLabels实际就是从ContainerInfo中读取Labels这个map的值,如果这个map没有相应的key,那么自然取到的就是空的了(初始值为空字符串)。

func containerLabels(c *cadvisorapi.ContainerInfo) map[string]string {

var name, image, podName, namespace, containerName string

...

if v, ok := c.Spec.Labels[types.KubernetesPodNameLabel]; ok {

podName = v

}

if v, ok := c.Spec.Labels[types.KubernetesPodNamespaceLabel]; ok {

namespace = v

}

if v, ok := c.Spec.Labels[types.KubernetesContainerNameLabel]; ok {

containerName = v

}

const (

KubernetesPodNameLabel = "io.kubernetes.pod.name"

KubernetesPodNamespaceLabel = "io.kubernetes.pod.namespace"

KubernetesPodUIDLabel = "io.kubernetes.pod.uid"

KubernetesContainerNameLabel = "io.kubernetes.container.name"

KubernetesContainerTypeLabel = "io.kubernetes.container.type"

)

所以问题简化为,c.Spec.Labels是什么时候处理的?

cadvisor与docker

还是以定位问题的思路来看。

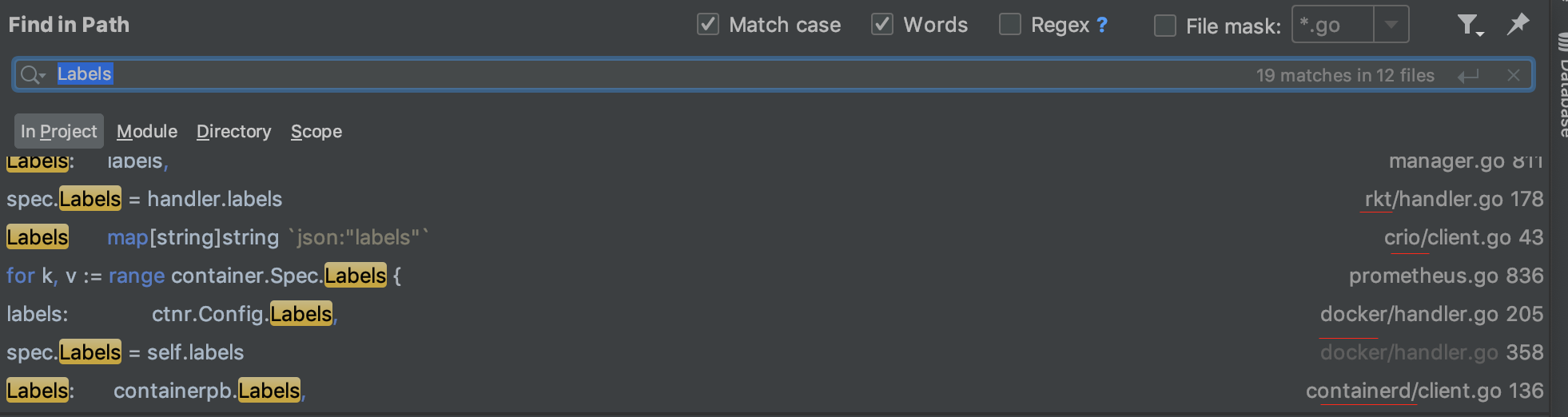

因为在此之前我没看过cadvisor的代码,也不了解cadvisor的架构,所以直接去看cadvisor代码里是怎么获取到Labels的。

可以看到,有docker、containerd、rkt、crio等等相关的实现,因为在我们集群上使用的是docker,所以,我们先来看下docker这里是怎么处理的。

func (self *dockerContainerHandler) GetSpec() (info.ContainerSpec, error) {

hasFilesystem := !self.ignoreMetrics.Has(container.DiskUsageMetrics)

spec, err := common.GetSpec(self.cgroupPaths, self.machineInfoFactory, self.needNet(), hasFilesystem)

spec.Labels = self.labels

spec.Envs = self.envs

spec.Image = self.image

spec.CreationTime = self.creationTime

return spec, err

}

显然,ContainerSpec就是containerLabels里的c.Spec.,spec.Labels = self.labels这行告诉我们,cadvisor是从dockerContainerHandler来获取Labels的。

那dockerContainerHandler又是从哪里获取Labels的呢?继续查找代码。

func newDockerContainerHandler(

client *docker.Client,

name string, ...)

...

// We assume that if Inspect fails then the container is not known to docker.

ctnr, err := client.ContainerInspect(context.Background(), id)

if err != nil {

return nil, fmt.Errorf("failed to inspect container %q: %v", id, err)

}

handler := &dockerContainerHandler{

...

labels: ctnr.Config.Labels,

ignoreMetrics: ignoreMetrics,

zfsParent: zfsParent,

}

ok,找到数据的真正来源了,其实就是 docker inspect 了容器,获取容器的ctnr.Config.Labels,然后一层层传出来,所以问题的原因,很可能是ctnr.Config.Labels并没有我们想要的pod_name、namespace、container_name,也就是types.KubernetesPodNameLabel等Label。

到异常所在GPU节点上,实际看看docker的信息。

# docker inspect b8f6b265835e

[

{

"Id": "b8f6b265835ea112000aec5fd426be66f922bb241f3e65c45997e7bc63bad003",

"Config": {

"Labels": {

"io.kubernetes.container.name": "xxx",

"io.kubernetes.docker.type": "container",

"io.kubernetes.pod.name": "xxx-67d8c96565-nmcwg",

"io.kubernetes.pod.namespace": "ieevee",

很遗憾,docker inspect有相应的Label。说不通哟,不应该获取不到的。

真正的原因

那么有没有可能,cadvisor跟docker的通路不畅,导致直接就没从docker取到任何信息?

我们理一下cadvisor启动到建立到docker通路的流程。

kubelet在创建cadvisorClient的时候,创建了container manager,之后会调用container manager的Start函数,在Start函数里,会注册docker、rkt、containerd、crio等等运行时。

所以,cadvisor实际上是Labels的搬运工,其数据也是调用docker、containerd等等的API来获取的。

// Start the container manager.

func (self *manager) Start() error {

err := docker.Register(self, self.fsInfo, self.ignoreMetrics)

if err != nil {

glog.V(5).Infof("Registration of the Docker container factory failed: %v.", err)

}

err = rkt.Register(self, self.fsInfo, self.ignoreMetrics)

...

err = containerd.Register(self, self.fsInfo, self.ignoreMetrics)

if err != nil {

glog.V(5).Infof("Registration of the containerd container factory failed: %v", err)

}

err = crio.Register(self, self.fsInfo, self.ignoreMetrics)

if err != nil {

glog.V(5).Infof("Registration of the crio container factory failed: %v", err)

}

大胆假设下,如果docker.Register注册失败,那么就别想从docker inspect到任何信息了。

查一下kubelet日志。

[root@10-0-0-2 /var/log]# grep "Registration of the Docker container factory" messages*

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784752 3217 manager.go:297] Registration of the Docker container factory failed: failed to validate Docker info: version string "dev" doesn't match expected regular expression: "(\d+)\.(\d+)\.(\d+)".

messages-20191013:Oct 9 10:10:11 10-0-0-2 kubelet: I1009 10:10:11.221103 751886 manager.go:297] Registration of the Docker container factory failed: failed to validate Docker info: version string "dev" doesn't match expected regular expression: "(\d+)\.(\d+)\.(\d+)".

O(∩_∩)O

果然,docker注册失败了。注册失败的原因很简单,这个docker 的版本是我们做过修改的,docker server的version是“dev”,不满足正则表达式"(\d+)\.(\d+)\.(\d+)"的要求,所以注册失败了,所以,也就inspect不到想要的label了。

虽然不能从docker取到,但是,可以从containerd里取到吗?

一样的,直接对日志grep “Registration of”。

[root@10-0-0-2 /var/log]# grep "Registration of" messages*

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784752 3217 manager.go:297] Registration of the Docker container factory failed: failed to validate Docker info: version string "dev" doesn't match expected regular expression: "(\d+)\.(\d+)\.(\d+)".

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784809 3217 manager.go:302] Registration of the rkt container factory failed: unable to communicate with Rkt api service: rkt: cannot tcp Dial rkt api service: dial tcp [::1]:15441: connect: connection refused

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784937 3217 manager.go:313] Registration of the containerd container factory failed: unable to create containerd client: containerd: cannot unix dial containerd api service: dial unix /run/containerd/containerd.sock: connect: no such file or directory

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.785134 3217 manager.go:318] Registration of the crio container factory failed: Get http://%2Fvar%2Frun%2Fcrio%2Fcrio.sock/info: dial unix /var/run/crio/crio.sock: connect: no such file or directory

可以看到,containerd也不行,没有文件socket,其他也都失败了。也就是说,cadvisor巧妇难为无米之炊,没有数据来源,当然也就没有办法添加kubelet想要的labels了。

很简单,把docker server的version按正则表达式生成就行了,例如“18.06.1”。

遇到故障,一定要有理有据,整个链路的来龙去脉要理清楚。

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK