Retrieving Recipes from Images: Baselines Strike Back

source link: https://sourcediving.com/retrieving-recipes-from-images-baselines-strike-back-9b638dec8cc8

TL;DR: if you have a complex system and no sensible way to compare its performance to something “reasonable and simple”, whatever that means, it might lead to problems. We find this to be the case for the image-to-recipe retrieval task, and show that simple baselines perform surprisingly well there.

1. Overview

In this blog post we will go through some of our work on understanding the problem of cross-modal recipe retrieval, which means, “give me a photo of your meal, and I will find you the right recipe for it in the database”. For more details, please see our paper.

It might look like a rather niche task that only the crazies like us, Cookpad R&D, are interested in, given that our company’s whole focus is making everyday cooking fun. However, it turns out that in the Machine Learning and Computer Vision community this is a legitimate research problem, and every year a number of papers trying to get better at solving it are published at top venues such as CVPR [1,2,3,4,5,6,7]¹.

In our own line of research, we focused on analyzing this body of academic literature, which led us to some rather interesting conjectures, as well as to the creation of a brand new model that outperforms all the existing methods despite being much simpler. In this blog post, we discuss the importance of strong baselines, and explain how our system achieved such performance.

2. On baselines

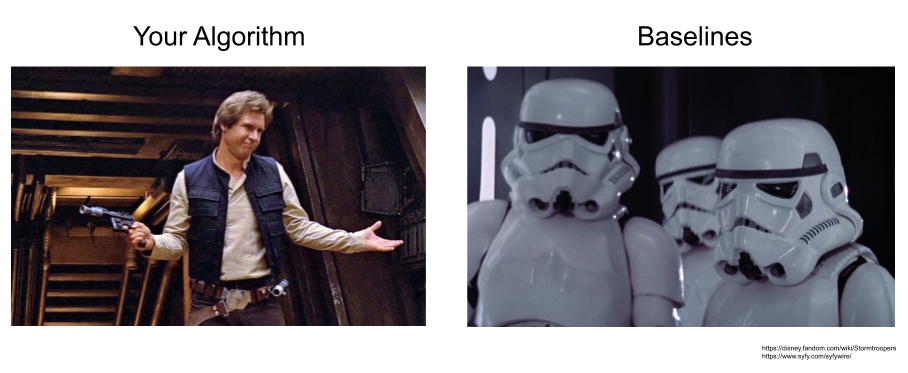

Ah, baselines! They are to ML researchers what Stormtroopers are to Han Solo: the guys that aren’t meant to be very good and are expected to be beaten up (in my far-fetched analogy, the algorithms, which can be assessed by some metric, correspond to the Star Wars characters, which can be measured by how skilful they are at fighting the enemy).

Imagine yourself developing some new algorithm to solve a problem. Then someone else comes by and says “your algorithm is useless and I don’t like it”. To counter that, you can then take some other algorithm developed previously (The Baseline), run it on your data and say to the not-nice-person “Ha! My algorithm performs better than the baseline! Data says so, statistically significant, end of conversation. May the force be with you.”

The research community as a whole could then eventually improve their methods via the process artfully named graduate student descent. In this process, the newer papers use the older results as baselines, steadily improving the algorithms in terms of performance on a given task.

But there is a problem with the student descent approach. Consider that you managed to beat that baseline enemy of yours comfortably with a fancy method. What does it say about your algorithm? Could you infer how good it is? Well, that depends on how good the baseline that it beat was!

Indeed, if your baseline is an extremely good algorithm already (“the Sith Lord”), your method must be absolutely revolutionary to beat it. If your baseline is weak though (“the battle droid”), then many methods could easily beat it, and the fact that your algorithm managed to beat such a baseline doesn’t say much at all. The perfect baseline to start with is like a Stormtrooper: common, easy to obtain, but not too trivial to beat up even though their aim is a bit off.

So how would you find out what kind of baseline you have? Here is one strategy: peer review. If other smart people looked at it and the baseline looked to them like a Stormtrooper, you count it as a Stormtrooper and move on.

But we had suspicions in the particular case of recipe retrieval baselines. We noticed that in the literature such baselines were beaten very easily and by a large margin, and the new models were beating those previous models by a large margin, and so on and so on. Could be just the speed of progress in ML these days. Or it could be that they all started with too easy of a baseline. So we went on a quest to build our own Stormtrooper (or is it StormCooker??) baseline and see how it fares.

3. Cutting Edge Recipe Finder: ACME

Here is an example of a modern cross-modal retrieval ML system, called ACME [6]. ACME was published in 2019 at CVPR, and achieved stellar performance compared to other methods. For reference, the original baseline finds the correct recipe out of a list of 1000 unseen recipes 14% of the time [1]. More advanced algorithms improved this metric to 25% [1,2,3], a few of the later ones hovered around 40% for a while [4,5], until ACME went just over 50% [6]. In other words, mix in your query recipe with another 1000 previously unseen recipes, give the system your image, and half of the time it will find you the exact match! Not bad at all.

How does it work? Well, all of these systems work the same way: they encode the image in some way, encode the text in some way, and align those encodings so that the matching texts and images are close according to some distance metric (e.g. Euclidean).
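To make the "encode, align, search by distance" idea concrete, here is a minimal sketch of the retrieval step and of the "how often is the exact match ranked first" metric. It assumes the encoders already exist and have produced aligned embeddings; the function names (`retrieve`, `recall_at_1`) and the tiny hand-made vectors are ours, purely for illustration, and are not taken from any of the cited systems.

```python
import math

def retrieve(query, candidates):
    """Return the index of the candidate embedding closest to the query."""
    return min(range(len(candidates)),
               key=lambda i: math.dist(query, candidates[i]))

def recall_at_1(image_embs, recipe_embs):
    """Fraction of images whose nearest recipe is the matching one.

    Assumes image i and recipe i form a matching pair.
    """
    hits = sum(retrieve(img, recipe_embs) == i
               for i, img in enumerate(image_embs))
    return hits / len(image_embs)

# Toy, hand-made embeddings living in one shared 2-D space.
image_embs = [[0.9, 0.1], [0.1, 0.8], [0.5, 0.5]]
recipe_embs = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.2]]

# Image 0 is slightly closer to recipe 2 than to its own recipe,
# so only two of the three queries are answered correctly.
print(recall_at_1(image_embs, recipe_embs))
```

In the real benchmark the candidate list holds 1000 unseen recipes rather than three, but the bookkeeping is the same.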

What differs between the algorithms is how the encoders work, and how the alignment works. ACME’s architecture looks like this:

In ACME, the image encoder is a Convolutional Neural Network, which is very standard. The text is processed using a combination of word2vec, skip-thoughts, ingredient embeddings, and a few different bi-directional LSTMs. The alignment is done with the help of GANs, adversarial alignment, a novel hard negative mining strategy, translation consistency losses and classification losses.

You may not have heard of some of the approaches mentioned in the previous paragraph, but the point is, there are many of them, they are all rather non-trivial, and they all interact with each other. It is a complex, well tuned system, which performs great but is hard to analyze.

4. Baselines Strike Back

But what if, just for the sake of argument, ACME’s performance is not as impressive as it looks? Maybe achieving this 50% accuracy is not that hard, and the only reason it hasn’t been done before is the weak baseline that the whole graduate student descent process started with?

We wouldn’t know. One reason why it is hard to build baselines for cross-modal retrieval is the very nature of the problem: it involves two modalities, images and text. How would you go about building a “simple” or a “trivial” model? You need to map one modality to the other in some way, and there doesn’t seem to be an obvious way to do so.

For comparison, let’s look at another problem, classifying images into cats and dogs, which does not have the problem of multiple modalities. Then trivial baselines exist. We encode the images in some way, e.g. using a model pretrained on ImageNet. Then, for a query image, we search through our training data to find the closest image to ours, get its training label (Cat or Dog) and use it as our prediction. Voila! There is your k-Nearest-Neighbours (kNN) method in all its glory at k=1.

Now granted it doesn’t work that well, and there are better methods, but it could be surprisingly competitive, serve as a baseline and give an indication of the ballpark of the performance.
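For the record, the cats-and-dogs baseline fits in a few lines. This is only a sketch: the 2-D "features" below are made up by hand and stand in for whatever a pretrained image model would produce, and `nearest_neighbour_label` is a name we picked for illustration.

```python
import math

def nearest_neighbour_label(query, train_features, train_labels):
    """1-NN: return the label of the training example closest to the query."""
    best = min(range(len(train_features)),
               key=lambda i: math.dist(query, train_features[i]))
    return train_labels[best]

# Hand-made stand-ins for pretrained image features.
train_features = [[0.9, 0.2], [0.8, 0.1], [0.1, 0.9]]
train_labels = ["cat", "cat", "dog"]

print(nearest_neighbour_label([0.2, 0.7], train_features, train_labels))  # dog
```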

Could we do it for cross-modal recipe retrieval though? It turns out we can! We developed a specialized nearest neighbours algorithm which we dubbed Cross-Modal k-Nearest-Neighbours (CkNN). How does it work? Assuming that you know how to search by images in the image database (easy!²), as well as how to search by text in the text database (even easier!³), the algorithm to find the correct recipe text in the long list of UnseenRecipes given a QueryImage works roughly like this:

- Given a QueryImage, find the ClosestTrainingImage in your training data.

- Get the MatchingRecipe corresponding to the ClosestTrainingImage

- Find the recipe closest to the MatchingRecipe among the UnseenRecipes

- Enjoy the rest of your day
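The steps above can be sketched roughly as follows. This is an illustration under simplifying assumptions, not our actual implementation: both searches use plain Euclidean distance over precomputed embeddings, and all names (`nearest`, `cknn_retrieve`) and vectors are made up. Note that the image and text embeddings never need to share a space; here they do not even have the same dimensionality.

```python
import math

def nearest(query, vectors):
    """Index of the vector closest to the query (Euclidean distance)."""
    return min(range(len(vectors)),
               key=lambda i: math.dist(query, vectors[i]))

def cknn_retrieve(query_image, train_images, train_recipes, unseen_recipes):
    """Return the index of the predicted recipe among unseen_recipes.

    train_images[i] and train_recipes[i] are assumed to be a matching pair.
    """
    # Step 1: image-to-image search within the training data.
    closest_training_image = nearest(query_image, train_images)
    # Step 2: look up the recipe paired with that training image.
    matching_recipe = train_recipes[closest_training_image]
    # Step 3: text-to-text search among the unseen recipes.
    return nearest(matching_recipe, unseen_recipes)

# Toy data: 2-D image embeddings, 3-D recipe embeddings.
train_images = [[1.0, 0.0], [0.0, 1.0]]             # apple tart photo, curry photo
train_recipes = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]]  # apple tart text, curry text
unseen_recipes = [[0.1, 0.1, 1.0], [1.0, 0.0, 0.1], [0.5, 0.5, 0.5]]

query_image = [0.8, 0.1]  # a photo that looks a lot like the apple tart
print(cknn_retrieve(query_image, train_images, train_recipes, unseen_recipes))  # 1
```

Because the two searches are independent, either one can be swapped for a different encoder or distance without touching the other.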

Example: consider the case when your QueryImage is an image of an apple pie, and you need to find the “Apple Pie. Ingredients: apples…” recipe among UnseenRecipes. You go to your training database and find the ClosestTrainingImage, which could be an image of an Apple Tart with the MatchingRecipe “Apple Tart. Ingredients: apples…”. So now we search by this text among the UnseenRecipes, and hope that what shows up in the results will be the “Apple Pie” recipe that we were looking for. It won’t work all the time, but you can see how it could work once in a while.

Not rocket science, is it? But suddenly, mixing up the modalities seems like a piece of cake. We can swap in and out different ways to search for an image and to search for text and see which ones are more efficient. We can even try out the encoders trained as part of the state-of-the-art research methods within our CkNN!

Or, we can create some dumb encoders ourselves using standard approaches. We can automatically extract frequent words from recipe titles and count them as “labels”. We can then train a standard CNN classifier to predict those labels from images, and a basic Average Word Embeddings classifier to predict the labels from recipe steps and ingredients. Forget about all the LSTMs and Skip-Thoughts: our approaches are the essence of all the PyTorch tutorials swarming all over Medium in abundance. We remove the last layer of our classifiers and use the resulting set of numbers as a representation of text and images, as many, many, many researchers, students and engineers have done before us.
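Two of the ingredients mentioned above, turning frequent title words into labels and averaging word embeddings, can be sketched like this. Training the classifiers and cutting off their last layer is left out, and everything here is an assumption made for illustration: the whitespace tokenisation, the function names, and the two-dimensional "word vectors".

```python
from collections import Counter

def frequent_title_words(titles, top_k):
    """Pick the top_k most frequent words across recipe titles."""
    counts = Counter(word for title in titles for word in title.lower().split())
    return [word for word, _ in counts.most_common(top_k)]

def labels_for(title, vocabulary):
    """Labels of a recipe: the vocabulary words that occur in its title."""
    words = set(title.lower().split())
    return [word for word in vocabulary if word in words]

def average_embedding(tokens, word_vectors, dim):
    """Mean of the known word vectors; a zero vector if none is known."""
    vectors = [word_vectors[t] for t in tokens if t in word_vectors]
    if not vectors:
        return [0.0] * dim
    return [sum(column) / len(vectors) for column in zip(*vectors)]

titles = ["Apple Pie", "Easy Apple Tart", "Chicken Curry", "Chicken Pie"]
vocabulary = frequent_title_words(titles, top_k=3)
print(sorted(vocabulary))                         # ['apple', 'chicken', 'pie']
print(sorted(labels_for("Apple Pie", vocabulary)))  # ['apple', 'pie']

# Made-up 2-D word vectors; "butter" is unknown and simply skipped.
word_vectors = {"apples": [1.0, 0.0], "flour": [0.0, 1.0]}
print(average_embedding(["apples", "flour", "butter"], word_vectors, dim=2))  # [0.5, 0.5]
```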

We don’t really expect it to work well, but it should do as a baseline, right? And what performance do we get if we use this approach on our original task? The answer is… 50%! Same as the most advanced method published (well, slightly worse actually but the difference is not statistically significant).

5. What does it all mean

Maybe we just found a magic bullet? Was the solution to all these cross-modal retrieval problems Nearest Neighbours all along? It turns out, no. If we replace our CkNN with something slightly more advanced but still basic in terms of modern ML research (e.g. triplet loss), we bump this metric up to 60%, way above the state of the art. No, our little CkNN is not a magic bullet. It is a Stormtrooper baseline! And it strikes back!
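For readers who have not met it, a triplet loss in its textbook margin-based form looks like the sketch below. It pushes a matching pair (say, an image and its recipe) closer together than a mismatched pair by at least some margin. This is the generic formulation, not necessarily the exact variant we trained with; the margin value and the vectors are arbitrary.

```python
import math

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Zero once the positive is closer to the anchor than the negative by `margin`."""
    return max(0.0,
               math.dist(anchor, positive) - math.dist(anchor, negative) + margin)

image = [0.0, 0.0]         # anchor: embedding of a query image
right_recipe = [0.3, 0.4]  # about 0.5 away from the anchor
wrong_recipe = [3.0, 4.0]  # about 5.0 away from the anchor

print(triplet_loss(image, right_recipe, wrong_recipe))  # already well separated: 0.0
print(triplet_loss(image, wrong_recipe, right_recipe))  # roles swapped: large penalty
```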

Do we think that the previous methods are to be abandoned? That there is something wrong with them? No. They didn’t need to fight Stormtroopers before, and so they understandably may need some improvements.

Now that we know which performance can be reached easily with simple approaches, we are certain that the bright academic minds working on it could understand why the previous models did not work as well and what modifications they need. But we are reluctant to say that the improvement in metrics could ever look as drastic as it looked before.

The moral of this story is that if complex systems look like they are too good, it could well be due to the fact that the original baselines were too easy to beat, not because the complex methods are that outstanding. Thus, check on your baselines! Or they will strike back, sooner or later.

6. References

[1] Amaia Salvador, Nicholas Hynes, Yusuf Aytar, Javier Marin, Ferda Ofli, Ingmar Weber, and Antonio Torralba. Learning cross-modal embeddings for cooking recipes and food images. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3068–3076, July 2017.

[2] Jing-jing Chen, Chong-Wah Ngo, and Tat-Seng Chua. Cross-modal recipe retrieval with rich food attributes. In Proceedings of the 25th ACM International Conference on Multimedia, MM ’17, pages 1771–1779, New York, NY, USA, 2017. ACM.

[3] Jing-Jing Chen, Chong-Wah Ngo, Fu-Li Feng, and Tat-Seng Chua. Deep understanding of cooking procedure for cross-modal recipe retrieval. In Proceedings of the 26th ACM International Conference on Multimedia, MM ’18, pages 1020–1028, New York, NY, USA, 2018. ACM.

[4] Micael Carvalho, Rémi Cadène, David Picard, Laure Soulier, Nicolas Thome, and Matthieu Cord. Cross-modal retrieval in the cooking context: Learning semantic text-image embeddings. In The 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, SIGIR ’18, pages 35–44, New York, NY, USA, 2018. ACM.

[5] Bin Zhu, Chong-Wah Ngo, Jingjing Chen, and Yanbin Hao. R2GAN: Cross-modal recipe retrieval with generative adversarial network. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

[6] Hao Wang, Doyen Sahoo, Chenghao Liu, Ee-peng Lim, and Steven C. H. Hoi. Learning cross-modal embeddings with adversarial networks for cooking recipes and food images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 11572–11581, 2019.

[7] Han Fu, Rui Wu, Chenghao Liu, and Jianling Sun. MCEN: Bridging cross-modal gap between cooking recipes and dish images with latent variable model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.
