Goroutine Scheduler Revealed: Never See Goroutines the Same Way Again

source link: https://blog.devtrovert.com/p/goroutine-scheduler-revealed-youll

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Goroutine Scheduler Revealed: Never See Goroutines the Same Way Again

You might have heard of the Goroutine Scheduler before, but how well do we really know how it works? How does it pair goroutines with threads?

Concurrency Series

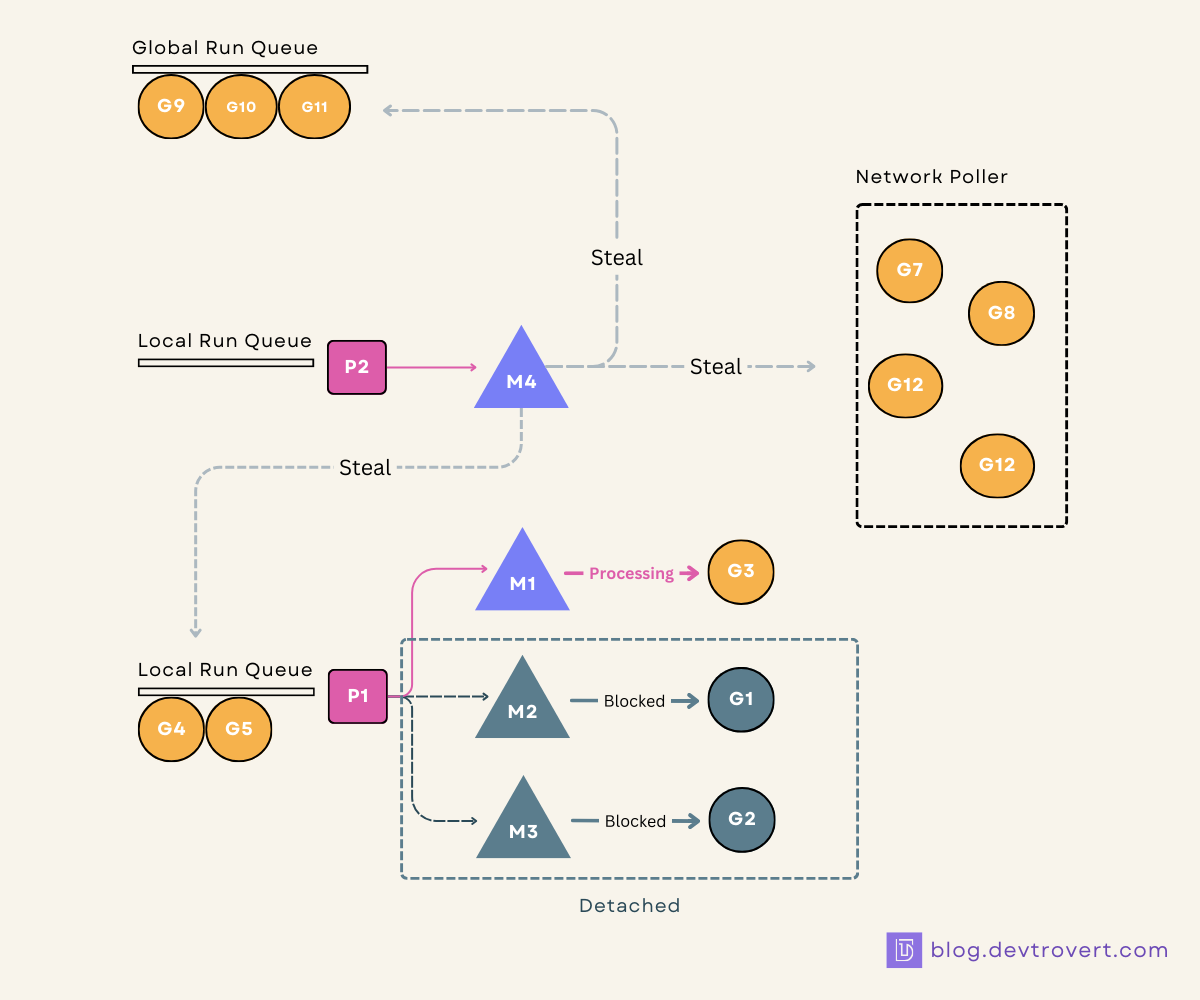

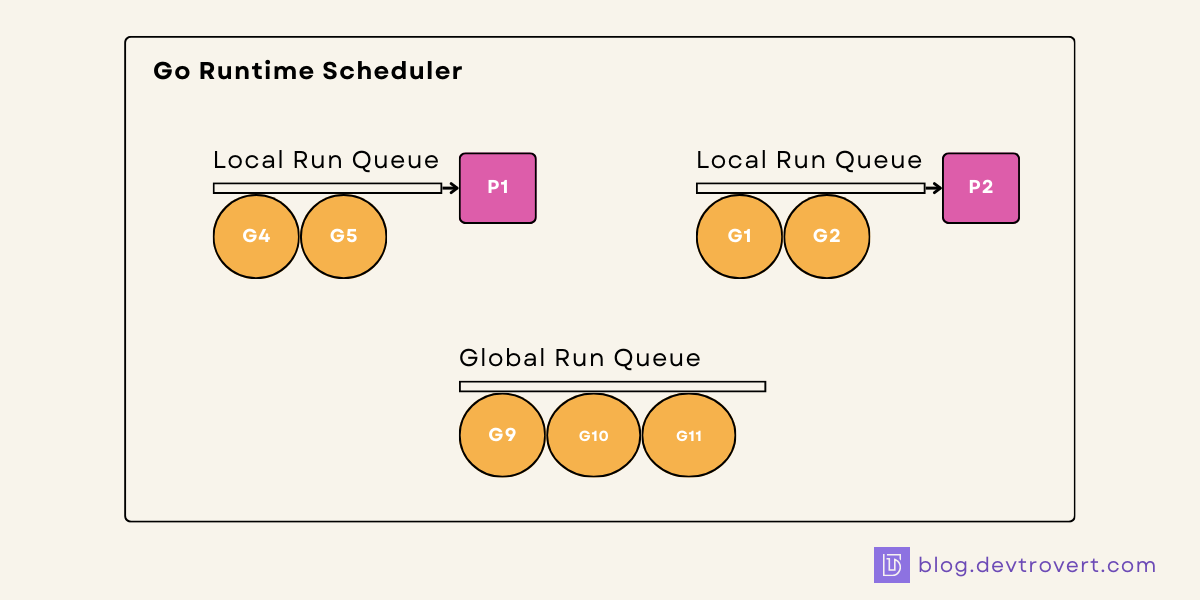

Don’t worry about understanding the image above right now, as we’re going to begin with the very basics.

Goroutines are distributed into threads, which Goroutine Scheduler handle behind the scene. From our previous talks, we know a few things about goroutines:

Goroutines in terms of raw execution speed, aren’t necessarily faster than threads since they need an actual thread to run on.

The real advantage of goroutines lies in areas like context switching, memory footprint, the cost of creation and teardown.

You might have heard of the Goroutine Scheduler before, but how well do we really know how it works? How does it pair goroutines with threads?

Now breaking down the scheduler’s operation one step at a time.

1. The Goroutine M:N Scheduler

The Go Team has truly simplified concurrency for us, just think about it: creating a goroutine is as easy as prefixing a function with the go keyword.

go doWork()But behind this easy step, there’s a deeper system at work.

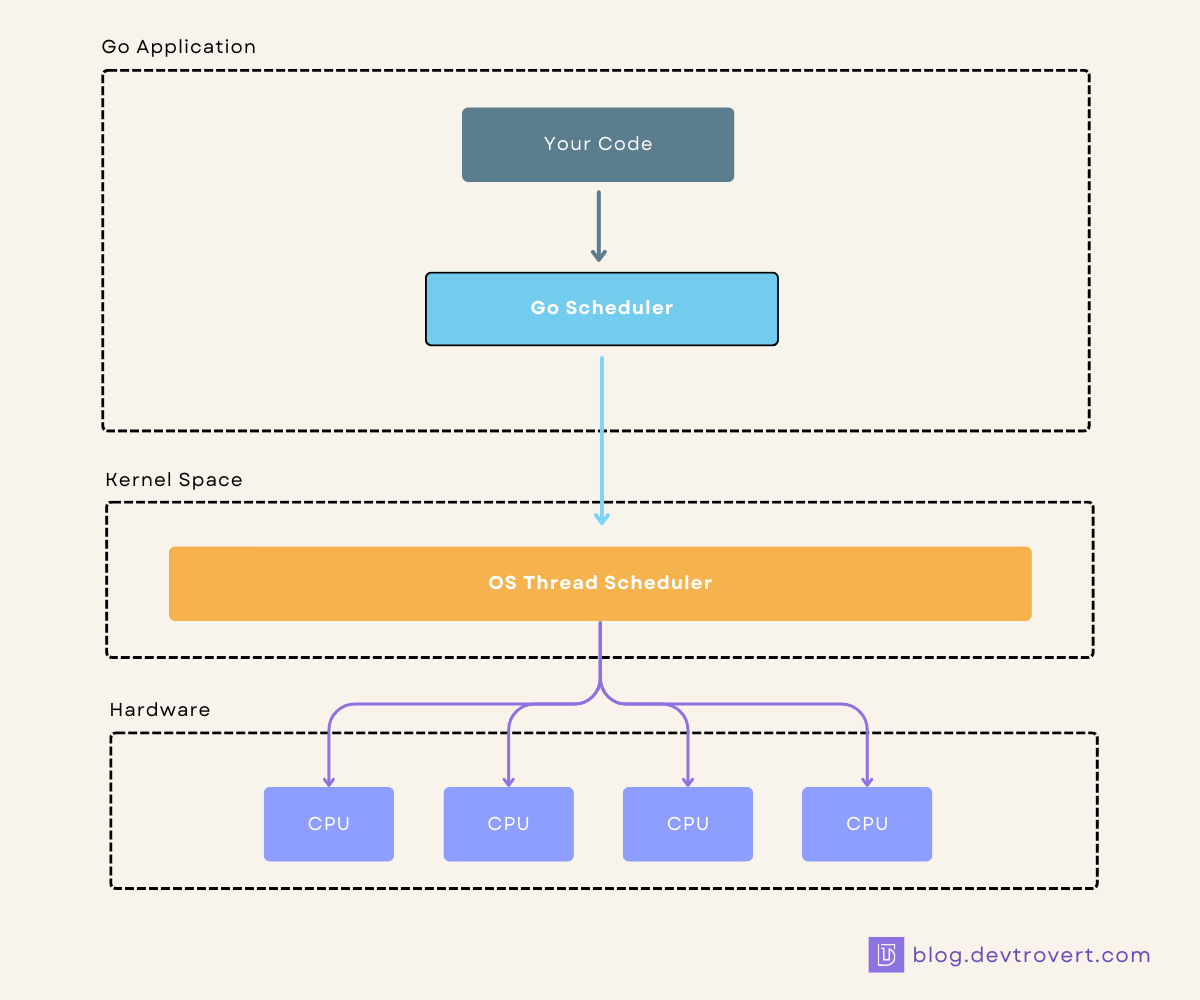

Right from the start, Go didn’t simply provide us with threads. Instead, there’s a helper in the middle, the Goroutine Scheduler which is a key part of the Go Runtime.

So what’s with the M:N label?

It signifies the Go Scheduler’s role in mapping M goroutines to N kernel threads, forming the M:N model. You can have more OS threads than cores, just as there can be more goroutines than OS threads.

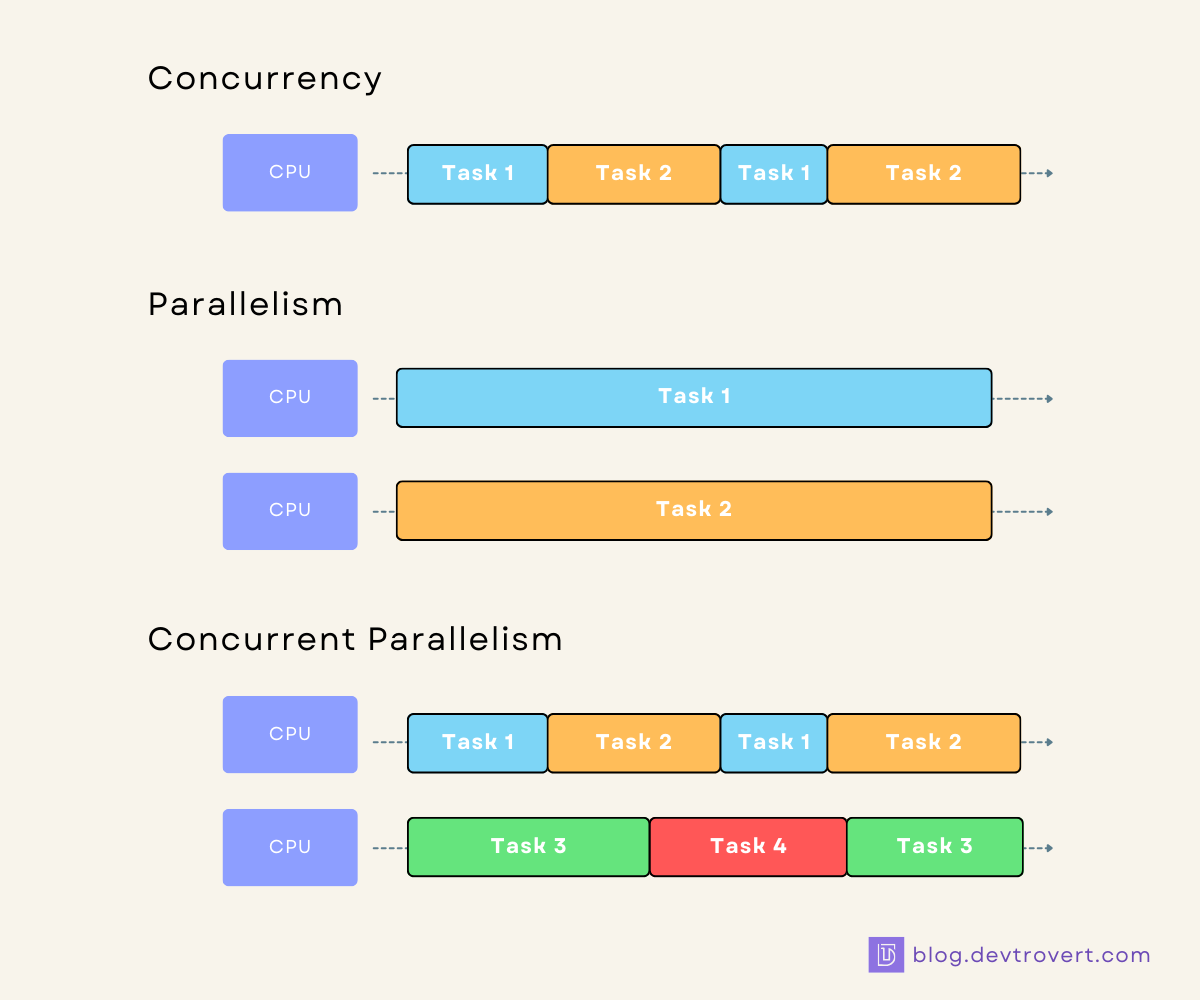

Before we dig deeper into the scheduler, let’s clear up two terms that often get mixed up: concurrency and parallelism.

Concurrency: This is about handling many tasks at once, they’re all moving, but not always at the same time.

Parallelism: This means many tasks are running at the exact same time, often using more than one CPU core.

Let’s see how Go Scheduler play with threads.

Get weekly insights on Go and System Design to enhance your reading routine:

2. PMG model

Before we untangle the inner workings, let’s break down what P, M, and G stand for.

G (goroutine)

Goroutine acts as Go’s smallest execution unit, akin to a lightweight thread.

Within Go’s runtime, this is represented by a struct{} named g. Once established, it finds its place in a logical processor P's local runnable queue (or Gs queue) and from there, P hands it over to an actual kernel thread (M).

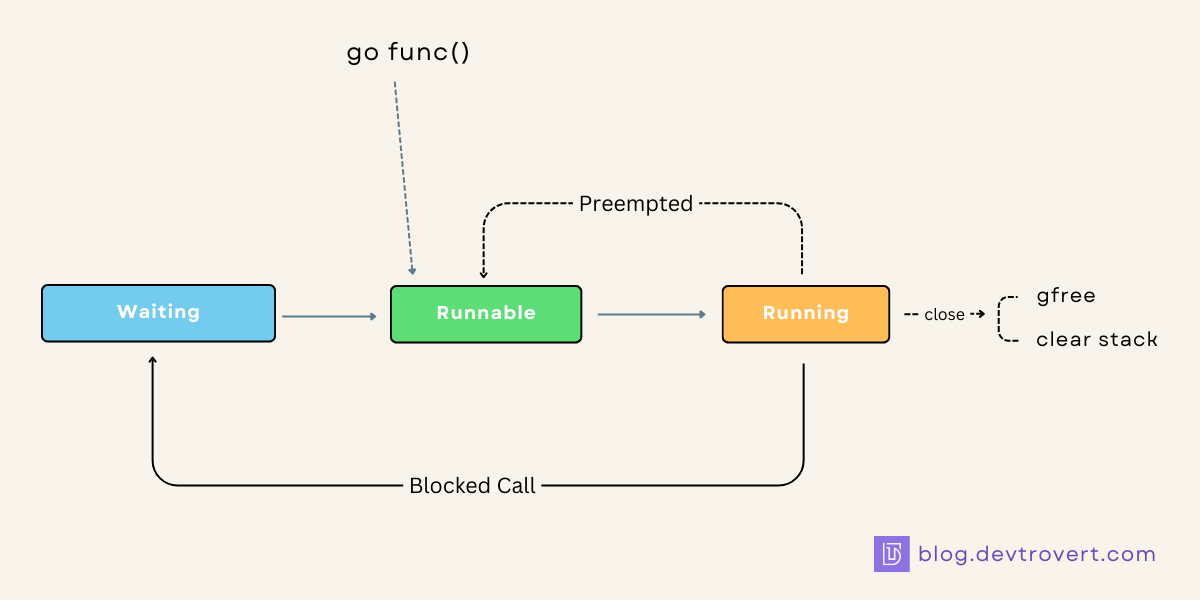

Goroutines generally exist in three main states:

Waiting: At this stage, the goroutine’s at a standstill, maybe it’s paused for an operation like a channel or lock, or perhaps it’s halted by a syscall.

Runnable: The goroutine is all set to run, but hasn’t started yet, it’s waiting for its turn to run on a thread (M).

Running: Now, the goroutine is actively executing on a thread (M). It continues until its task is done, unless the scheduler interrupts it or something else blocks its path

Goroutines are NOT used just once and then discarded.

Instead, when a new goroutine is initiated, Go’s runtime dips into the goroutine pool to select one, but if none are found, it creates a new one. This new goroutine then joins the runnable queue of a P.

P (Logical Processor)

Within the Go scheduler, when we mention “processor”, we’re referring to a logical entity, not a physical one.

By default, the number of P is set to the number of cores available, you can check or change the number of these processors using the runtime.GOMAXPROCS(int).

runtime.GOMAXPROCS(0) // get the current allowed number of logical processors

// Output: 8 (depends on your machine)If you think of changing this, it’s best to do it once at the start of your app, if you changing it at runtime, it will cause STW (stopTheWorld), everything paused until it resizes the processor.

Each P holds its own list of runnable goroutines, called the Local Run Queue, which can accommodate up to 256 goroutines.

If the P’s queue is reached max goroutines (256), there’s a shared queue called Global Run Queue, but we’ll get into that later.

“So, what does this number of ‘P’ really show?”

It indicates the number of goroutines that can operate concurrently — imagine them running side by side.

M (Machine Thread — OS Thread)

A typical Go program has the capacity to use up to 10,000 threads.

And yes, I’m talking about threads not goroutines. If you push beyond this limit, you risk crashing your Go application.

“When is a thread created?”

Think of this situation: a goroutine is in a runnable state and requires a thread.

What happens if all the threads are already blocked, perhaps due to system calls or non-preempted operations? In this case, the scheduler steps in and creates a new thread for that goroutine.

(One thing to note: if a thread is simply busy with expensive calculation or long running task, it’s not considered as being stuck or blocked)

If you want to change the default thread limit, you can use the runtime/debug.SetMaxThreads() function, this lets you set the maximum number of OS threads your Go program can use.

Also, it’s good to know that threads are reused since the creation or deletion of threads is resource-intensive.

3. How MPG works

Let’s understand how M, P, and G operate together step by step through bullet points.

I won’t dive into every tiny detail here, but I’ll delve deeper in upcoming stories. If this interests you, please subscribe.

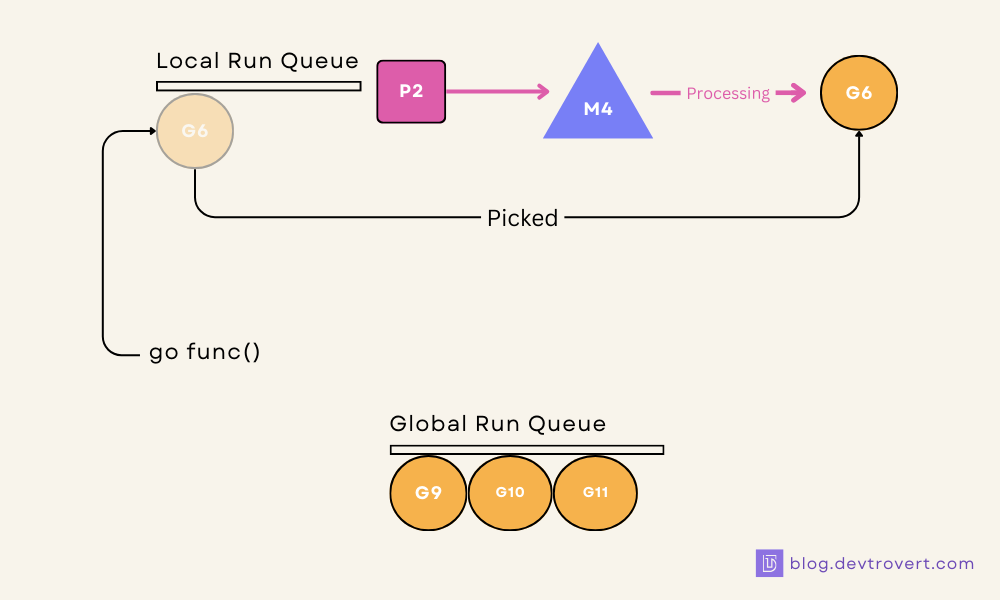

Initiate a goroutine: By using the

go func()command, the Go Runtime either creates a new goroutine or selects an existing one from the pool.Queue positioning: The goroutine looks for its place in a queue and if the local queues of all logical processors (P) are full, this goroutine is placed in the global queue.

Thread-Pairing: This is where M comes into play. It grabs a P and begins processing the goroutine from P’s local queue, as M engages with this goroutine, its associated P becomes occupied and unavailable for other Ms.

The act of stealing: If a P’s queue is depleted, M tries to “borrow” half of the runnable goroutines from another P’s queue. If unsuccessful, it then checks the global queue, followed by the network poller (look at the Stealing Process diagram section below).

Resource allocation: After an M selects a goroutine (G), it secures all necessary resources to run the G.

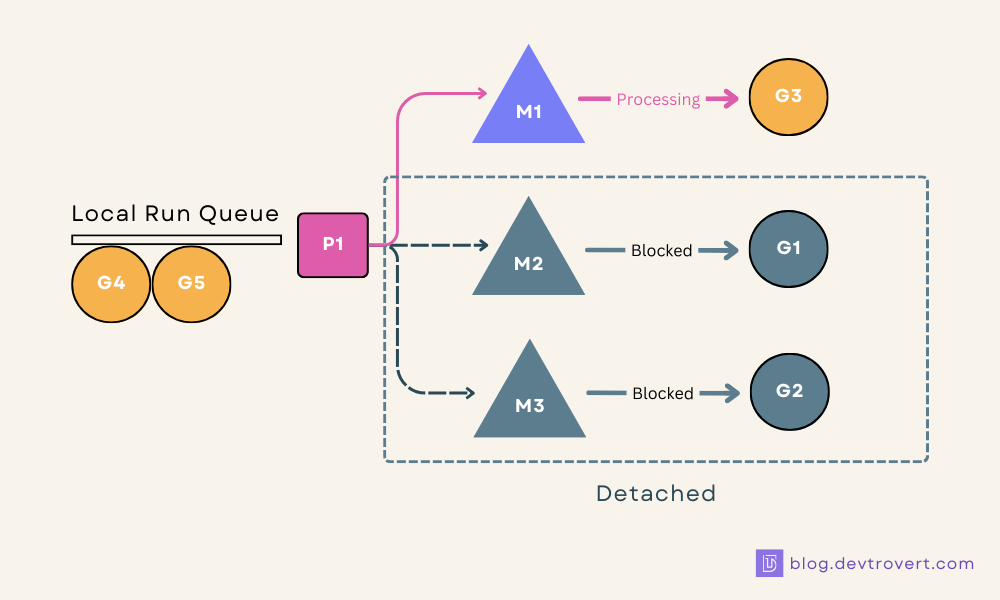

“How about a thread being blocked?”

If a goroutine starts a system call that takes time (like reading a file), the M waits.

But the scheduler doesn’t like who just sitting and waiting, it unlinks the halted M from its P and connects a different, runnable goroutine from the queue to a fresh or existing M, which then teams up with the P

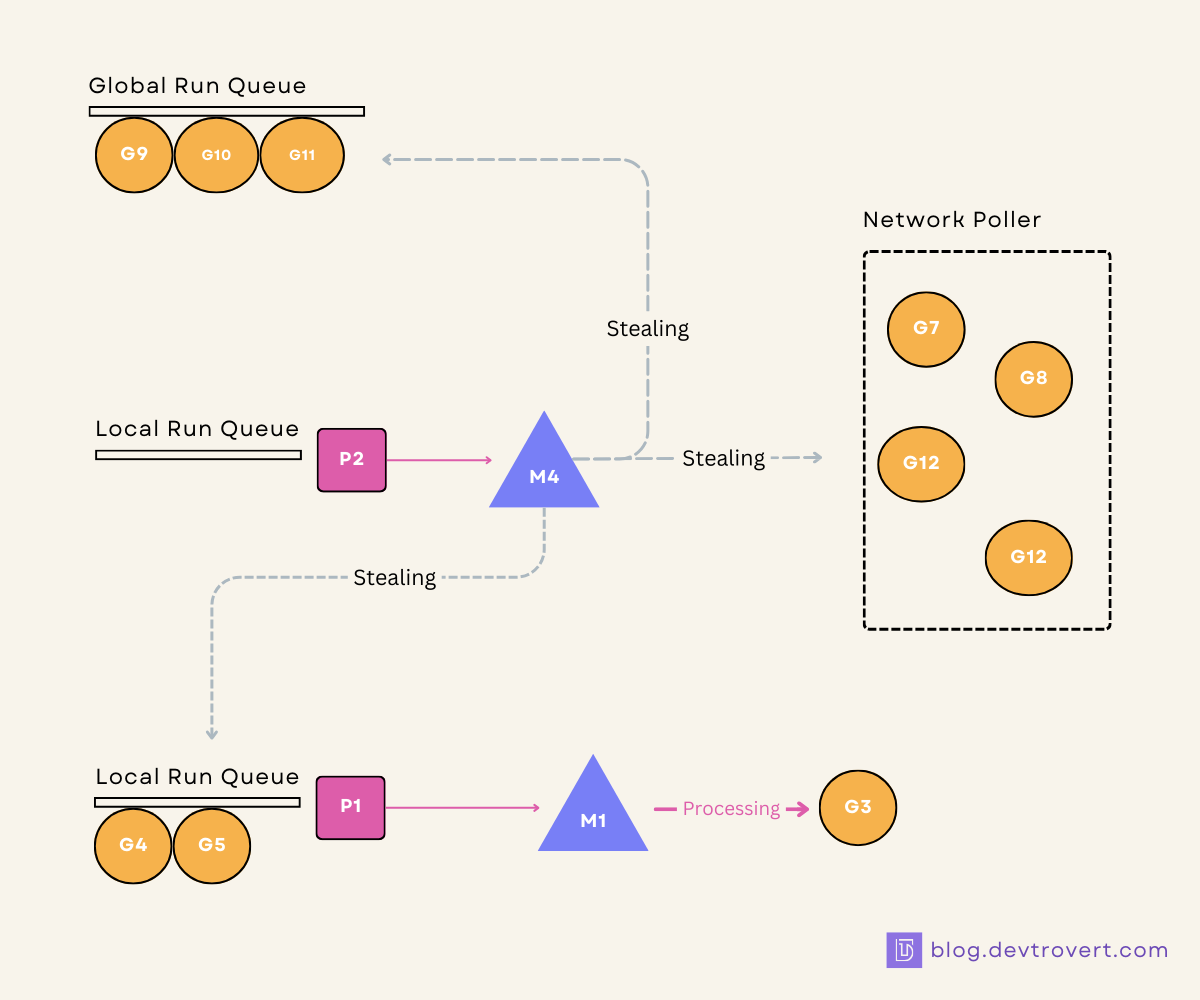

Stealing Process

When a thread (M) finishes its tasks and has nothing left to do, it doesn’t just sit around.

Instead, it actively seeks more work by looking at other processors and taking half of their tasks, let’s break this down:

Every 61 ticks, an M checks the global runnable queue to ensure fairness in execution. If a runnable goroutine is found in the global queue, stop.

That thread M now checks its local run queue, linked to its processor P, for any runnable goroutines to work on.

If the thread finds its queue empty, it then looks at the global queue to see if there are any tasks waiting there.

The thread then checks with the network poller for any network-related jobs.

If the thread still hasn’t found any tasks after checking the network poller, it goes into an active search mode, which we can think of as spinning state.

In this state, the thread attempts to “borrow” tasks from the queues of other processors.

After all these steps, if the thread still hasn’t found any work, it stops its active search.

Now, if new tasks come in and there’s a free processor without any searching threads, another thread can be prompted to start working.

The detail to note is that the global queue is actually checked twice: once every 61 ticks for fairness and again if the local queue is empty.

“If M is attached to its P, how can it take tasks from other processors? Does M change its P?”

The answer is no.

Even if M takes a task from another P’s queue, it runs that task using its original processor. So, while M takes on new tasks, it remains loyal to its processor.

“Why 61?”

When designing algorithms, especially hashing algorithms, prime numbers are often chosen because of their lack of divisors other than 1 and themselves.

This can reduce the chances of patterns or regularities from emerging, which can lead to “collisions” or other unwanted behaviors.

If too short, the system may waste resources frequently checking the global run queue. If too long, goroutines might wait excessively before execution.

Network Poller

We haven’t discussed much about this network poller, but it’s presented in the stealing process diagram.

Like Go Scheduler, the Network Poller is a component of the Go Runtime and responsible for handling network related calls (e.g. network I/O).

Let’s compare between 2 syscall types:

Network-related Syscalls: When a goroutine performs a network I/O operation, instead of blocking the thread, it gets registered with the network poller. The poller waits for the operation to complete asynchronously and once complete, the goroutine becomes runnable again and can continue its execution on a thread.

Other Syscalls: If they’re potentially blocking and aren’t handled by the network poller, they can cause the goroutine to offload its execution to an OS thread. Only that particular OS thread gets blocked, and the Go runtime scheduler can execute other goroutines on different threads.

In the subsequent section, we’ll delve even deeper into preemption and analyze each step the scheduler takes during its operation.

Get weekly insights on Go and System Design to enhance your reading routine:

Oh, I’m sharing insights on Twitter.

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK