source link: https://bernoff.com/blog/as-air-canada-just-learned-chatbots-owners-are-responsible-for-their-actions

As Air Canada just learned, chatbots’ owners are responsible for their actions

If an employee of a company told you something wrong that cost you money, you could sue the company for damages. It’s the same with a chatbot.

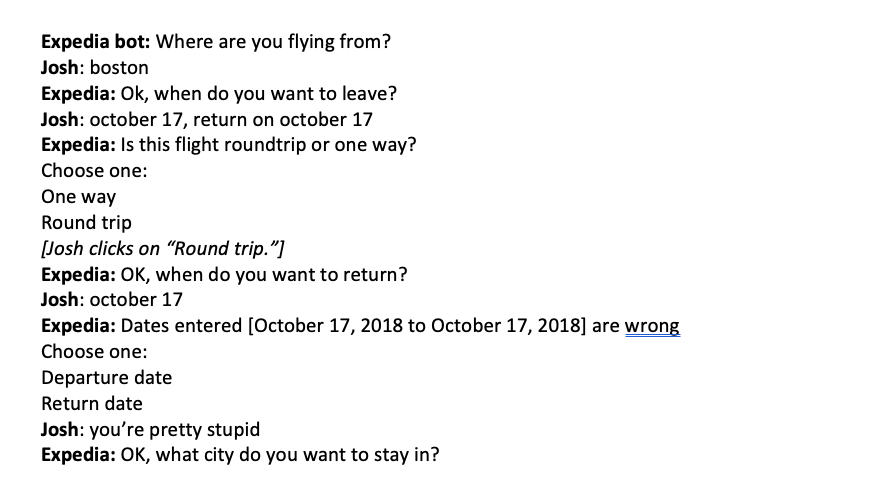

Here’s what happened. Air Canada features a customer service chatbot on its website. You’ve probably interacted with service chatbots like these on other companies’ sites — I researched and ghostwrote a whole book about them.

In this case, the Air Canada chatbot gave a customer named Jake Moffatt bad advice, in particular implying that by submitting a form, Moffatt could receive a bereavement fare discount after the travel was completed. This was false, and in fact contradicted text on the Air Canada site that says that the discount must be applied before ticketing.

As detailed in an article in The Guardian, Air Canada attempted to imply that the chatbot, not the airline, was responsible for its mistakes:

Moffatt then sued for the fare difference, prompting Air Canada to issue what the tribunal member Christopher Rivers called a “remarkable submission” in its defense.

Air Canada argued that despite the error, the chatbot was a “separate legal entity” and thus was responsible for its actions.

“While a chatbot has an interactive component, it is still just a part of Air Canada’s website. It should be obvious to Air Canada that it is responsible for all the information on its website,” wrote Rivers. “It makes no difference whether the information comes from a static page or a chatbot.”

While Air Canada argued correct information was available on its website, Rivers said the company did “not explain why the webpage titled ‘Bereavement Travel’ was inherently more trustworthy” than its chatbot.

“There is no reason why Mr Moffatt should know that one section of Air Canada’s webpage is accurate, and another is not,” he wrote.

Air Canada must pay Moffatt C$650.88, the equivalent of the difference between what Moffatt paid for his flight and a discounted bereavement fare – as well as C$36.14 in pre-judgment interest and C$125 in fees.

Who’s responsible for chatbot mistakes?

Increasingly, chatbots will be interacting with people on behalf of companies or other entities. And just as customer service people sometimes do, chatbots will make mistakes.

If you skimmed all the text on a web site and tried to get the gist of how things worked, you could certainly make a wrong assumption. Perhaps, in this case, the chatbot recognized the pattern that the airline offered some discounts and credits after the travel was done, and erroneously extended that to the bereavement fare.

But if chatbots make mistakes, their owners have to pay — as this judgment in Canada now confirms.

Where damages happen, disclaimers follow. It’s already happening. This text appears under every ChatGPT prompt:

ChatGPT can make mistakes. Consider checking important information.

We all know that generalized search engines sometimes surface false information. But when you’re interacting with a company’s chatbot, you have the expectation that it will not lie to you — just as you would have that expectation of an employee. If a company’s chatbot came with a disclaimer that it was sometimes wrong, would you ever risk interacting with it?

Truth and hallucinations are already a big challenge for AI. Before corporations can trust chatbots to deal with humans, especially in customer service, we’ll need to see advances that solve these sorts of problems.

One Comment

-

Thomas L Hayden says:

Just wondering what legal genius convinced Air Canada that this defense approach was the best one to take? Had AC just reimbursed Mr. Moffatt for his fare difference and made appropriate changes to their web site it would’ve cost them a lot less than what they must have paid for their feckless defense. And, they would have protected themselves against future similar suits.
