Service Mesh with Linkerd & Arm based Kubernetes Clusters - Infrastructure S...

source link: https://community.arm.com/arm-community-blogs/b/infrastructure-solutions-blog/posts/service-mesh-with-linkerd-and-arm-based-k8s


Managing Distributed Services with Linkerd and Arm-based Kubernetes Cluster

The rise of edge computing has brought new challenges to the world of cloud-native architecture. With applications running on both the edge and the cloud, managing and securing these distributed environments can be a complex task. Service meshes have emerged as a solution to this challenge, providing advanced traffic management, security, and observability features that are essential for effective application management in a distributed environment.

Linkerd provides an efficient service mesh solution to effectively manage and secure clusters at the edge or in the cloud. It works by injecting a lightweight proxy (sidecar) next to each service instance to handle communication between services. The proxy has a very low CPU and memory footprint, so it secures traffic while adding minimal latency overhead. Linkerd has been available on Arm64 since version 2.9, thanks to contributions and support from project maintainers and the community.

Linkerd provides a feature called multi-cluster, which enables seamless communication between services running in different Kubernetes clusters. These clusters can span multiple clouds, data centers, or edge locations. In a multi-cluster setup, Linkerd deploys a control plane in each cluster, allowing it to manage the services running within that cluster. The setup works by mirroring service information between clusters. The mirrored remote services behave as ordinary Kubernetes services, so all the observability, security, and routing features apply across these clusters. Let's look at a use case now.

Use case - Managing Distributed Services with Linkerd on Arm-based Kubernetes clusters

Here, we describe how to connect an application across a multi-cluster service mesh in a cloud-to-edge scenario. We deploy a Linkerd multi-cluster setup on Arm-based Kubernetes clusters in the cloud and at the edge. We also showcase features such as traffic management, security, real-time monitoring of applications, global observability, and traffic splitting in an edge-to-cloud scenario. The high-level flow of the use case is as follows:

- Deploy two Arm-based Kubernetes clusters – one at the edge/local and another one in the cloud (Amazon EKS with Graviton2 nodes)

- Linkerd multi-cluster setup and configuration on both the clusters

- Deploy services on both the edge and cloud clusters and establish communication

- Securing communication between services of both the clusters

- Showcase a failover scenario with Traffic splitting

Configurations:

Prerequisites:

- Two Kubernetes clusters:

  - A K3s/K3d cluster running on a Raspberry Pi or an Arm-powered laptop (M1 etc.)

  - An Amazon EKS cluster with AWS Graviton2-based worker nodes

- kubectl installed on your local machine to interact with the clusters

- Linkerd CLI installed on your local machine

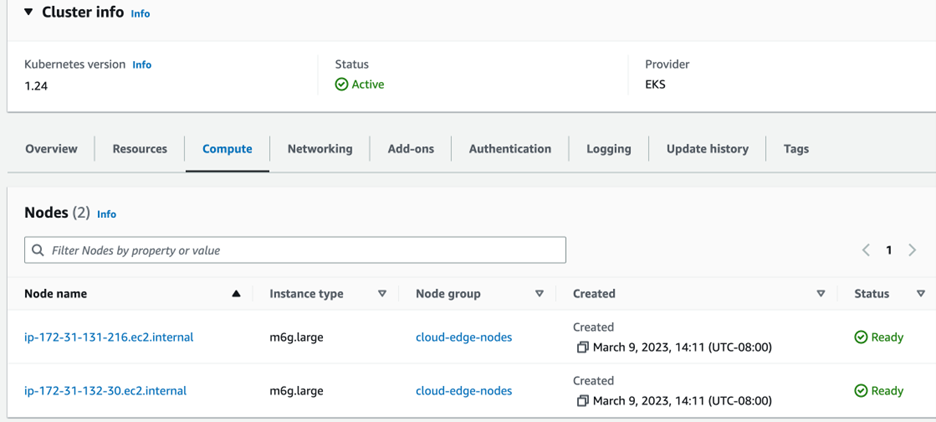

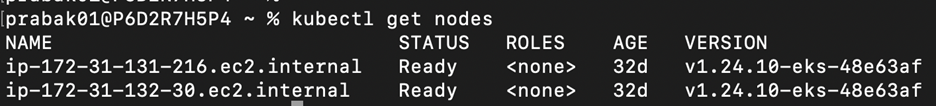

Make sure both clusters are accessible via kubectl. The accompanying figure shows the EKS cluster and the result of the kubectl command.
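Because the rest of the walkthrough addresses the clusters by context name, it can help to confirm that each context responds and that its nodes report an Arm architecture. The following is a minimal sketch, assuming the kubectl contexts are named `edge` and `cloud` as in the rest of this article. `node_arch` and `all_arm64` are hypothetical helper names, not part of kubectl.

```shell
# Print the CPU architecture reported by every node in a kubectl context.
# Assumption: the context name passed as $1 exists in your kubeconfig.
node_arch() {
  kubectl --context="$1" get nodes \
    -o jsonpath='{.items[*].status.nodeInfo.architecture}'
}

# Succeed only if the context is reachable, has at least one node,
# and every node reports arm64.
all_arm64() {
  local archs arch
  archs="$(node_arch "$1")" || return 1
  [ -n "$archs" ] || return 1
  for arch in $archs; do
    [ "$arch" = "arm64" ] || return 1
  done
}
```

For example, `all_arm64 edge && all_arm64 cloud && echo "both clusters are Arm64"` prints the message only when both checks pass.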

We’re now ready to install Linkerd on both clusters. In a multi-cluster setup, Linkerd requires a trust anchor that is shared by both clusters. It is used to encrypt the traffic between the clusters, so that the traffic stays protected even though it crosses the internet. Install the step CLI using the instructions given in this link. Use the following command to generate the root certificate for both of our clusters:

step certificate create root.linkerd.cluster.local root.crt root.key \
  --profile root-ca --no-password --insecure

This forms a common trust relationship between our clusters. Now, using this trust anchor, let’s generate the issuer certificate for our clusters:

step certificate create identity.linkerd.cluster.local issuer.crt issuer.key \
  --profile intermediate-ca --not-after 8760h --no-password --insecure \
  --ca root.crt --ca-key root.key
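Before installing anything, it may be worth checking that the issuer certificate really chains up to the shared trust anchor. The following is a small sketch that uses `openssl verify` rather than the step CLI. This is a substitution for illustration, not a step from the original walkthrough, and `verify_issuer` is a hypothetical helper name. The default file names are the ones generated above.

```shell
# Check that the issuer certificate is signed by the trust anchor.
# Assumption: openssl is on PATH; file names default to those used above.
verify_issuer() {
  local root="${1:-root.crt}" issuer="${2:-issuer.crt}"
  openssl verify -CAfile "$root" "$issuer"
}
```

Calling `verify_issuer` with no arguments checks `issuer.crt` against `root.crt` in the current directory and returns a non-zero status if the chain does not validate.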

Now, let’s install Linkerd on both of our clusters. For simplicity’s sake, we’ll call our EKS cluster cloud and our edge/local cluster edge. Run the following command to install the Linkerd CRDs on both clusters:

linkerd install --crds \
  | tee \
    >(kubectl --context=edge apply -f -) \
    >(kubectl --context=cloud apply -f -)

Now, install the Linkerd control plane in both the clusters

linkerd install \
  --identity-trust-anchors-file root.crt \
  --identity-issuer-certificate-file issuer.crt \
  --identity-issuer-key-file issuer.key \
  | tee \
    >(kubectl --context=edge apply -f -) \
    >(kubectl --context=cloud apply -f -)
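Once the manifests are applied, the control plane takes a short while to become ready. The following sketch waits on that using `linkerd check`, run once per context. The helper name `check_control_planes` is hypothetical, and the context names are the ones assumed throughout this article.

```shell
# Run Linkerd's built-in health checks against each context in turn,
# stopping at the first cluster that fails.
check_control_planes() {
  local ctx
  for ctx in "$@"; do
    echo "Checking control plane on cluster: ${ctx}"
    linkerd --context="${ctx}" check || return 1
  done
}
```

Usage would be `check_control_planes edge cloud`. A non-zero exit status means at least one cluster did not pass its checks.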

We’ll also install the Linkerd viz extension, which installs an on-cluster metrics stack and dashboard:

for ctx in edge cloud; do
  linkerd --context=${ctx} viz install | \
    kubectl --context=${ctx} apply -f - || break
done

After the installation, we now need to install the multi-cluster components

for ctx in edge cloud; do
  echo "Installing on cluster: ${ctx} ........."
  linkerd --context=${ctx} multicluster install | \
    kubectl --context=${ctx} apply -f - || break
  echo "-------------"
done

Each cluster also needs a working gateway component, which runs in the linkerd-multicluster namespace. It’s a simple container injected with the Linkerd proxy. Use the following command to make sure the gateway has rolled out on both clusters:

for ctx in edge cloud; do
  echo "Checking gateway on cluster: ${ctx} ........."
  kubectl --context=${ctx} -n linkerd-multicluster \
    rollout status deploy/linkerd-gateway || break
  echo "-------------"
done

Now that the Linkerd control plane is installed in both clusters, we can link the clusters and start mirroring services. To link the edge cluster to the cloud cluster, run the following command:

linkerd --context=cloud multicluster link --cluster-name cloud |
  kubectl --context=edge apply -f -

Run the following command to check that the edge cluster can reach the cloud cluster and its services:

linkerd --context=edge multicluster check

Once it’s done successfully, we’ll add a few services that we can mirror across both the clusters. Execute the following to add two deployments – frontend and podinfo - in both the clusters in ‘test’ namespace

for ctx in edge cloud; do
  echo "Adding test services on cluster: ${ctx} ........."
  kubectl --context=${ctx} apply \
    -n test -k "github.com/linkerd/website/multicluster/${ctx}/"
  kubectl --context=${ctx} -n test \
    rollout status deploy/podinfo || break
  echo "-------------"
done

On the edge cluster, run the following command to forward a local port to the frontend service:

kubectl --context=edge -n test port-forward svc/frontend 8080

Open http://localhost:8080 in a browser to see how the application looks on the edge cluster.
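The traffic split later in this article refers to a `podinfo-cloud` service on the edge cluster, but the article does not show how that service comes to exist. In Linkerd's multi-cluster model, a service is mirrored only once it carries the label that the link selects on. The following is a sketch of that step, assuming the default export label `mirror.linkerd.io/exported=true` and the `--cluster-name cloud` link created earlier. The helper names are hypothetical.

```shell
# Label a service in the source cluster so the service mirror copies it
# into the linked cluster as <service>-<cluster-name>.
# Assumption: the link uses the default selector mirror.linkerd.io/exported=true.
export_service() {
  local src_ctx="$1" ns="$2" svc="$3"
  kubectl --context="${src_ctx}" -n "${ns}" label svc "${svc}" \
    mirror.linkerd.io/exported=true
}

# Confirm that the mirrored service exists in the destination cluster.
mirrored_service_exists() {
  local dst_ctx="$1" ns="$2" mirrored="$3"
  kubectl --context="${dst_ctx}" -n "${ns}" get svc "${mirrored}" >/dev/null
}
```

With the names used in this article, that would be `export_service cloud test podinfo` followed by `mirrored_service_exists edge test podinfo-cloud`. The mirror may take a few seconds to appear.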

By default, requests between the clusters travel over the internet. With Linkerd, we can extend mTLS across both clusters so that this traffic is encrypted. Run the following command to verify:

linkerd --context=edge -n test viz tap deploy/frontend | \
  grep "$(kubectl --context=cloud -n linkerd-multicluster get svc linkerd-gateway \
    -o "custom-columns=GATEWAY_IP:.status.loadBalancer.ingress[*].ip")"

tls=true means that the requests are being encrypted.

Linkerd also supports failover in a multi-cluster setup. We can achieve this with a TrafficSplit, which lets us define weights for multiple services and split traffic between them. For our clusters, let’s split the podinfo service between edge and cloud. Run the following command to split the traffic between both services. New requests to podinfo will be forwarded to the mirrored podinfo-cloud service 50% of the time and to the local podinfo service the other 50%.

kubectl --context=edge apply -f - <<EOF
apiVersion: split.smi-spec.io/v1alpha1
kind: TrafficSplit
metadata:
  name: podinfo
  namespace: test
spec:
  service: podinfo
  backends:
  - service: podinfo
    weight: 50
  - service: podinfo-cloud
    weight: 50
EOF

Now, refreshing http://localhost:8080 a few times should show responses from both clusters.
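Refreshing by hand works, but a short loop makes the split easier to see. The following is a rough sketch that assumes the port-forward to the frontend is still running on localhost:8080 and that responses from the two clusters differ in their bodies. `sample_responses` is a hypothetical helper, and what exactly distinguishes the responses depends on how podinfo is configured in each cluster.

```shell
# Fetch a URL n times (default 10) and tally the distinct response bodies,
# so responses served by different backends show up as separate rows.
sample_responses() {
  local url="$1" n="${2:-10}" i
  for i in $(seq 1 "$n"); do
    # Flatten each body to a single line so it can be counted by uniq.
    curl -s "$url" | tr -d '\n'
    echo
  done | sort | uniq -c
}
```

For example, `sample_responses http://localhost:8080 20` prints one row per distinct body with a count in front. With a 50/50 split the counts will only be roughly balanced over a small sample.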

To see the metrics of the trafficsplit use the following command to launch a dashboard

linkerd viz dashboard

Open the following URL to see the dashboard: http://localhost:50750. We should see a response like the one below.

Conclusion

In distributed computing environments, managing and securing communication between services can be challenging. With a service mesh, we can address these challenges by providing visibility, security, resilience, scalability etc. for a variety of cloud native workloads running on Arm-based Kubernetes clusters either in the cloud or at the edge. Linkerd provides a lightweight and efficient service mesh that focusses on cross-cluster communications with no changes to the application.

Join us at Linkerd Day at KubeCon EU 2023 to learn more about use cases like this one!

Cheryl Hung, Sr Director of Ecosystem Development at Arm, will also give a talk at KubeCon about “Multi-Arch Infrastructure from the Ground up” - Wednesday, April 19, 2023. Come and join to hear about challenges developers face when choosing the best hardware solution for their price/performance needs to run workloads.
