Deploying the latest k8s 1.26.x release from binaries

source link: https://blog.51cto.com/flyfish225/5988774

Tags (space-separated): kubernetes series

Part 1: System environment initialization

1.1 System environment

OS:

AlmaLinux 8.7 x64

cat /etc/hosts

----

172.16.10.81 flyfish81

172.16.10.82 flyfish82

172.16.10.83 flyfish83

172.16.10.84 flyfish84

172.16.10.85 flyfish85

-----

This deployment uses the first three AlmaLinux 8 x64 hosts:

flyfish81 is deployed as the master

flyfish82 and flyfish83 serve as worker nodes

1.2 Downloading the tools

1. Download the Kubernetes 1.26.x binary package

GitHub binary download page: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.26.md

wget https://dl.k8s.io/v1.26.0/kubernetes-server-linux-amd64.tar.gz

2. Download the etcd binary package (includes etcdctl)

GitHub binary download page: https://github.com/etcd-io/etcd/releases

wget https://github.com/etcd-io/etcd/releases/download/v3.5.5/etcd-v3.5.5-linux-amd64.tar.gz

3. Download the docker-ce binary package

Binary download page: https://download.docker.com/linux/static/stable/x86_64/

Download a 20.10.x release here.

wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.22.tgz

4. Download cri-dockerd

Binary download page: https://github.com/Mirantis/cri-dockerd/releases/

wget https://ghproxy.com/https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd-0.2.6.amd64.tgz

5. Download the containerd binary package

GitHub download page: https://github.com/containerd/containerd/releases

For containerd, download the bundle that includes the CNI plugins.

wget https://github.com/containerd/containerd/releases/download/v1.6.6/cri-containerd-cni-1.6.6-linux-amd64.tar.gz

6. Download the cfssl binaries

GitHub binary download page: https://github.com/cloudflare/cfssl/releases

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

7. Download the CNI plugins

GitHub download page: https://github.com/containernetworking/plugins/releases

wget https://github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz

8. Download the crictl client binary

GitHub download page: https://github.com/kubernetes-sigs/cri-tools/releases

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.24.2/crictl-v1.24.2-linux-amd64.tar.gz

1.3 System initialization

# Install dependency packages

yum -y install wget jq psmisc vim net-tools nfs-utils telnet yum-utils device-mapper-persistent-data lvm2 git network-scripts tar curl

# Disable the firewall and SELinux

systemctl disable --now firewalld

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

# Disable swap

sed -ri 's/.*swap.*/#&/' /etc/fstab

swapoff -a && sysctl -w vm.swappiness=0

cat /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0

#

# Configure system file-handle limits

ulimit -SHn 65535

cat >> /etc/security/limits.conf <<EOF

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF
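A soft limit can never exceed its hard limit, so it is worth checking each soft/hard pair before relying on the file. The following is a minimal sketch of such a check; `check_limits` is a hypothetical helper name, and it only compares numeric values (entries such as `unlimited` are skipped).

```shell
#!/bin/sh
# Read limits.conf-style lines on stdin and report every domain/item pair
# whose numeric soft value is greater than its numeric hard value.
check_limits() {
    awk '
        $1 ~ /^#/ || NF < 4 { next }      # skip comments and incomplete lines
        $4 !~ /^[0-9]+$/    { next }      # skip non-numeric values
        $2 == "soft" { soft[$1 " " $3] = $4 }
        $2 == "hard" { hard[$1 " " $3] = $4 }
        END {
            for (k in soft)
                if (k in hard && soft[k] + 0 > hard[k] + 0)
                    print k ": soft " soft[k] " exceeds hard " hard[k]
        }
    '
}

# Usage sketch on a real host (prints nothing when all pairs are consistent):
#   check_limits < /etc/security/limits.conf
```

An empty result means no numeric soft value is above its hard counterpart.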

# Set up passwordless SSH trust between the hosts

yum install -y sshpass

ssh-keygen -f /root/.ssh/id_rsa -P ''

export IP="172.16.10.81 172.16.10.82 172.16.10.83"

export SSHPASS=flyfish225

for HOST in $IP;do

sshpass -e ssh-copy-id -o StrictHostKeyChecking=no $HOST

done

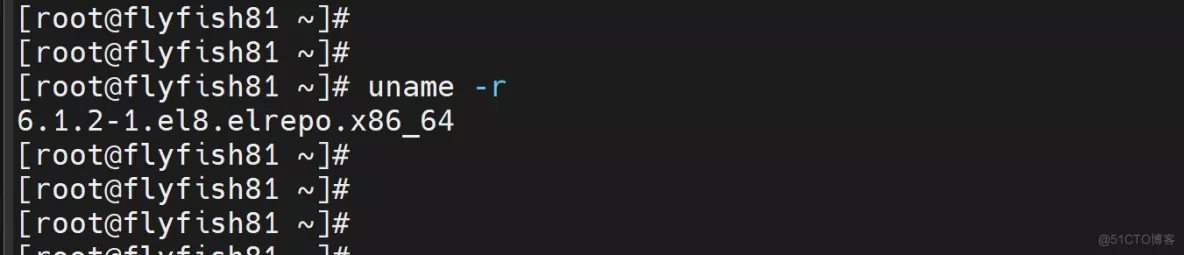

# Upgrade the system kernel

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

yum install https://www.elrepo.org/elrepo-release-8.el8.elrepo.noarch.rpm

Switch the repository to the Aliyun mirror

mv /etc/yum.repos.d/elrepo.repo /etc/yum.repos.d/elrepo.repo.bak

vim /etc/yum.repos.d/elrepo.repo

----

[elrepo-kernel]

name=elrepoyum

baseurl=https://mirrors.aliyun.com/elrepo/kernel/el8/x86_64/

enabled=1

gpgcheck=0

----

yum --enablerepo=elrepo-kernel install kernel-ml

# Use the kernel at index 0; index 0 is the number of the available kernel found earlier

grub2-set-default 0

# Generate the grub configuration file and reboot

grub2-mkconfig -o /boot/grub2/grub.cfg

reboot

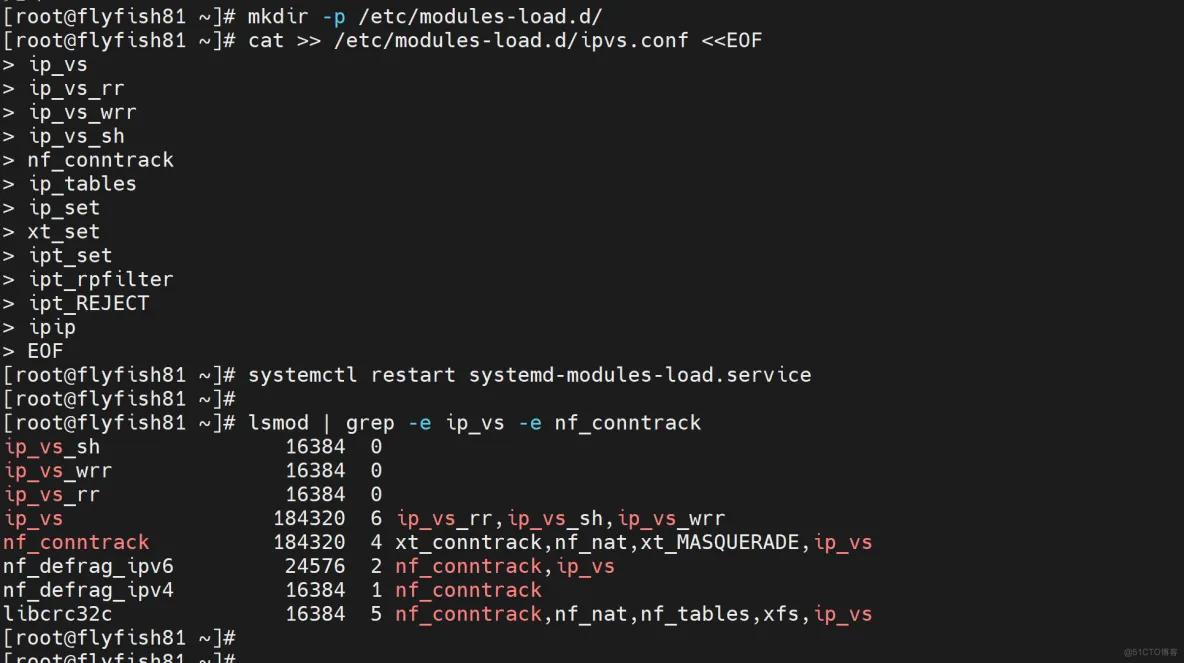

Enable IPVS

yum install ipvsadm ipset sysstat conntrack libseccomp -y

mkdir -p /etc/modules-load.d/

cat >> /etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

systemctl restart systemd-modules-load.service

lsmod | grep -e ip_vs -e nf_conntrack

ip_vs_sh 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs 180224 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 176128 1 ip_vs

nf_defrag_ipv6 24576 2 nf_conntrack,ip_vs

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 3 nf_conntrack,xfs,ip_vs

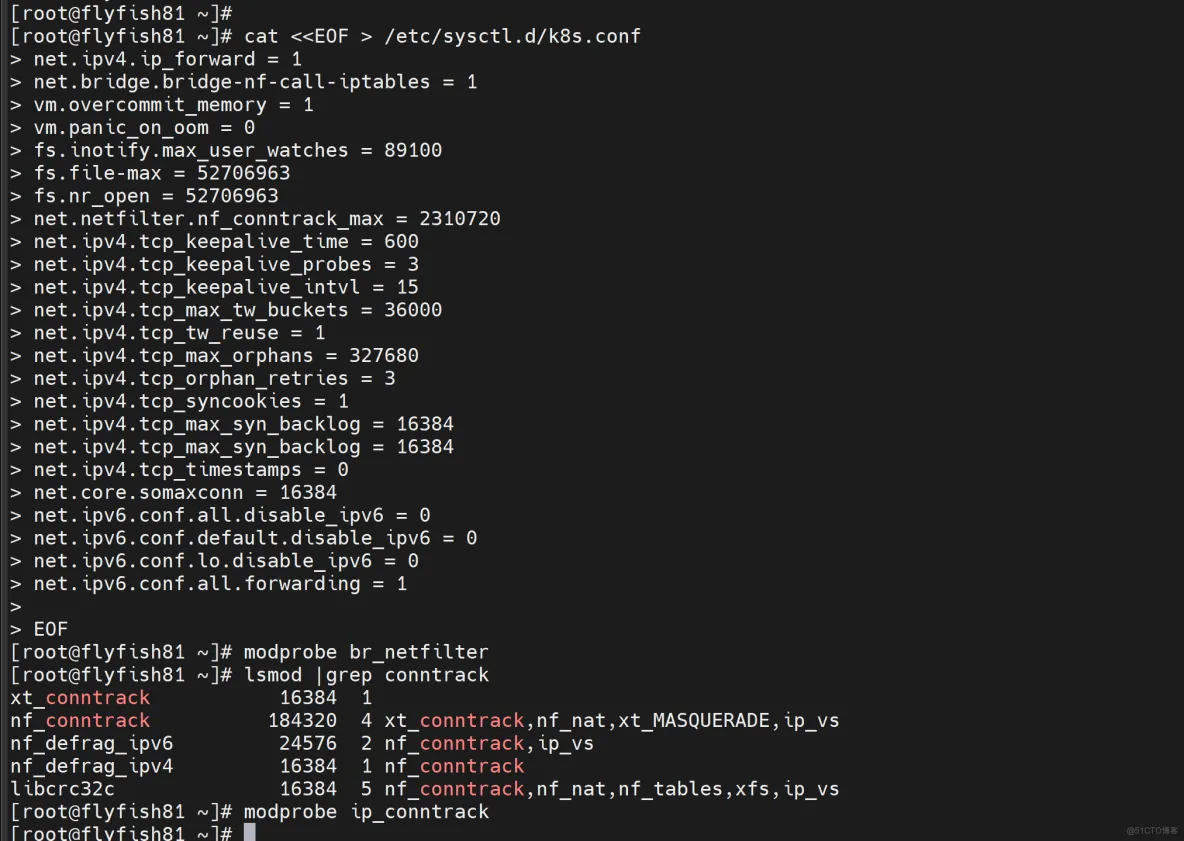

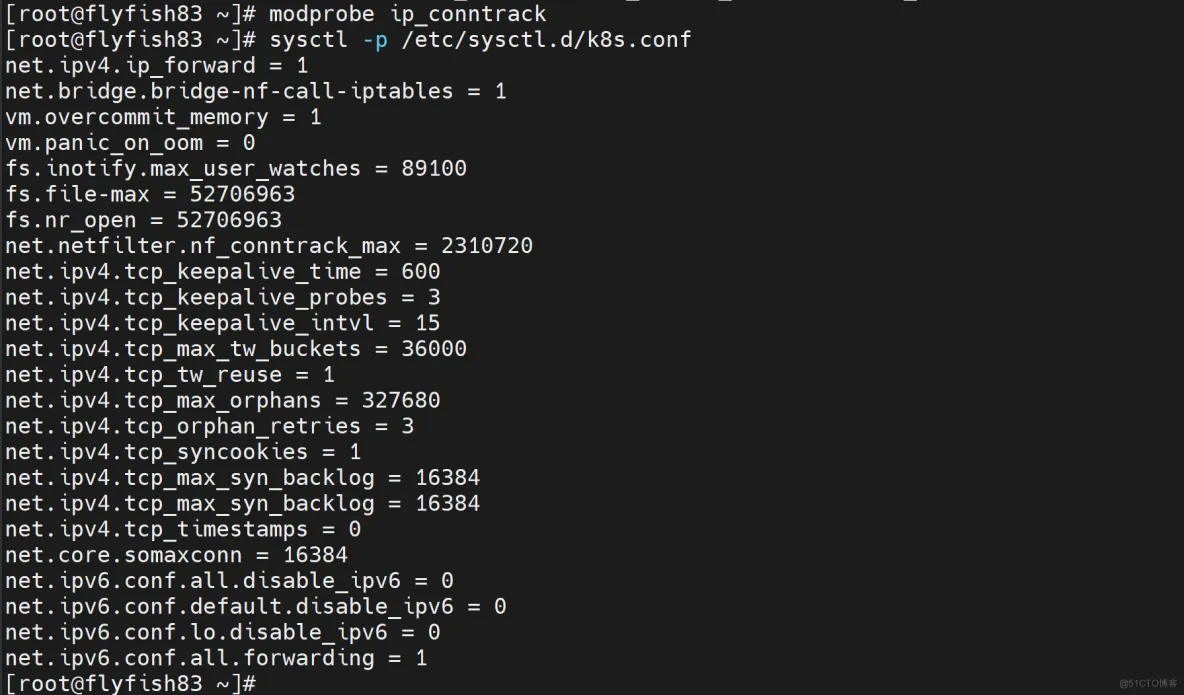

1.4 Tune kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_watches = 89100

fs.file-max = 52706963

fs.nr_open = 52706963

net.netfilter.nf_conntrack_max = 2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

net.ipv6.conf.all.forwarding = 1

EOF

modprobe br_netfilter

modprobe nf_conntrack

lsmod | grep conntrack

sysctl -p /etc/sysctl.d/k8s.conf

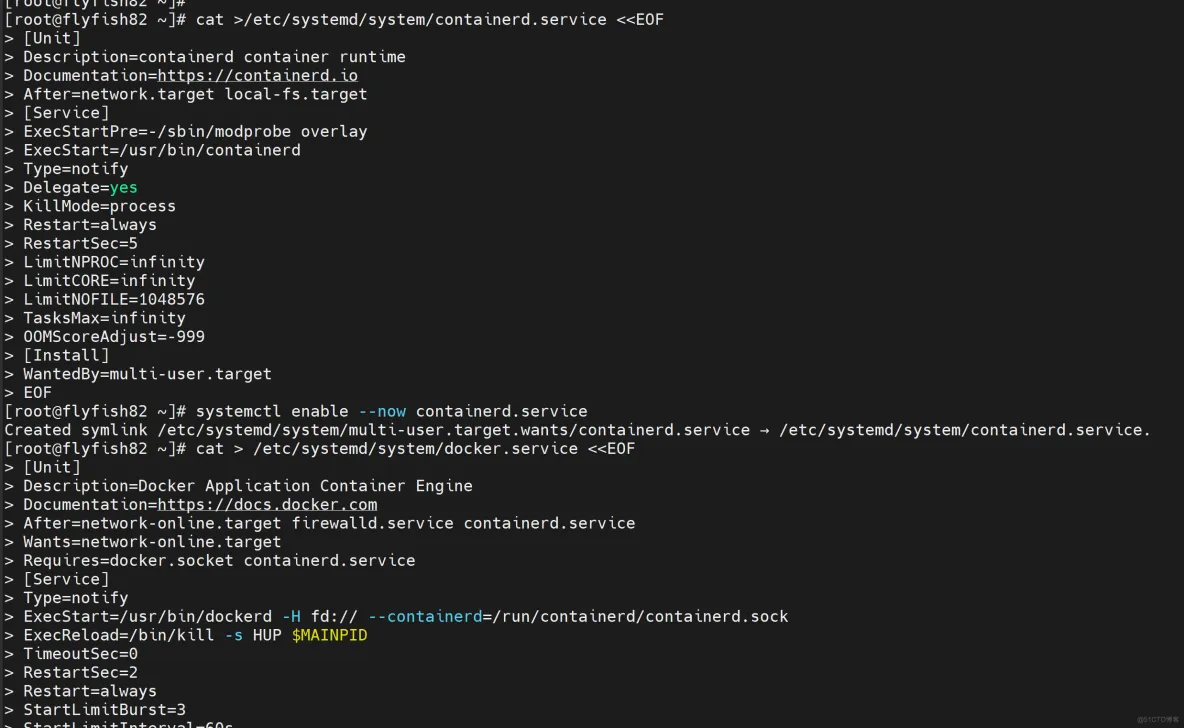

1.5 Install Docker on all nodes

Download URL: https://download.docker.com/linux/static/stable/x86_64/docker-20.10.22.tgz

Run the following on all nodes. This guide installs from binaries; installing with yum works just as well.

Install on nodes flyfish81, flyfish82 and flyfish83.

# Binary download page: https://download.docker.com/linux/static/stable/x86_64/

# wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.22.tgz

# Extract

tar xf docker-*.tgz

# Copy the binaries

cp docker/* /usr/bin/

# Create the containerd service file and start it

cat >/etc/systemd/system/containerd.service <<EOF

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=1048576

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

systemctl enable --now containerd.service

# Prepare the Docker service file

cat > /etc/systemd/system/docker.service <<EOF

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=docker.socket containerd.service

[Service]

Type=notify

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

OOMScoreAdjust=-500

[Install]

WantedBy=multi-user.target

EOF

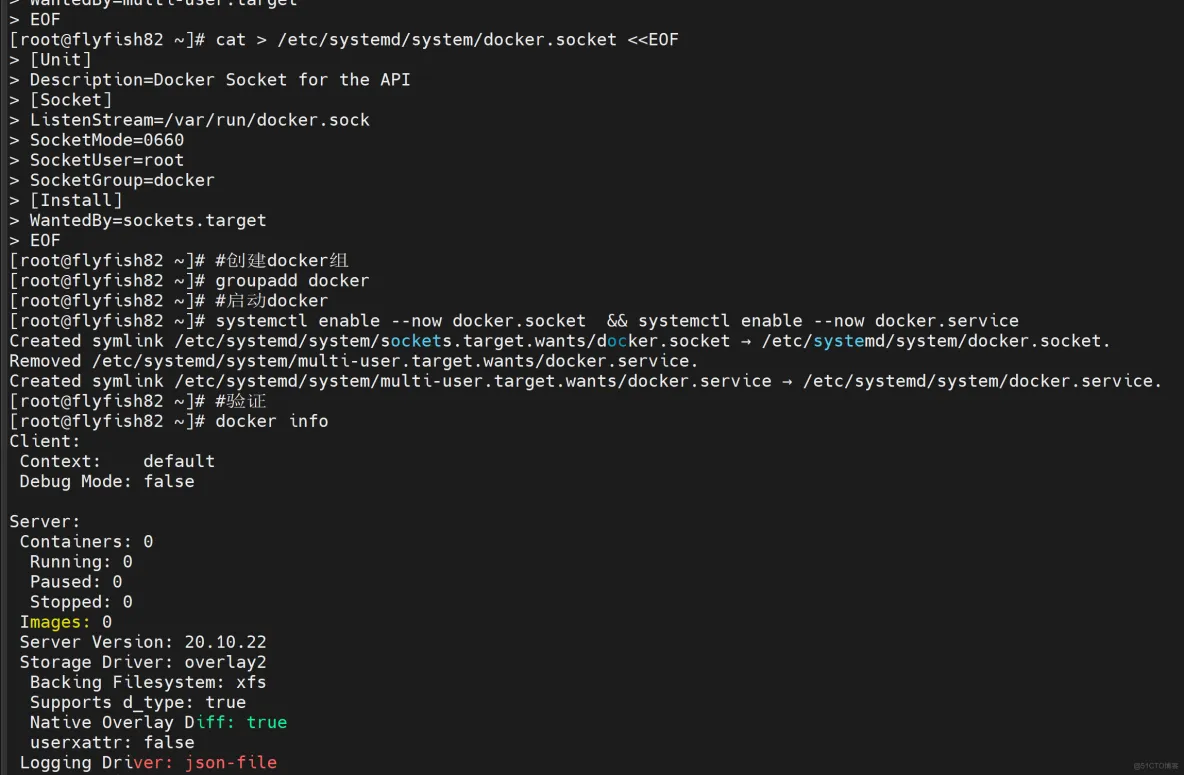

# Prepare the Docker socket file

cat > /etc/systemd/system/docker.socket <<EOF

[Unit]

Description=Docker Socket for the API

[Socket]

ListenStream=/var/run/docker.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

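# Create the docker group

groupadd docker

# Start Docker

systemctl enable --now docker.socket && systemctl enable --now docker.service

# Verify

docker info

# Configure the Docker daemon (systemd cgroup driver, registry mirrors, log rotation)

mkdir -p /etc/docker

cat >/etc/docker/daemon.json <<EOF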

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": [

"https://docker.mirrors.ustc.edu.cn",

"http://hub-mirror.c.163.com"

],

"max-concurrent-downloads": 10,

"log-driver": "json-file",

"log-level": "warn",

"log-opts": {

"max-size": "10m",

"max-file": "3"

},

"data-root": "/var/lib/docker"

}

EOF

systemctl restart docker

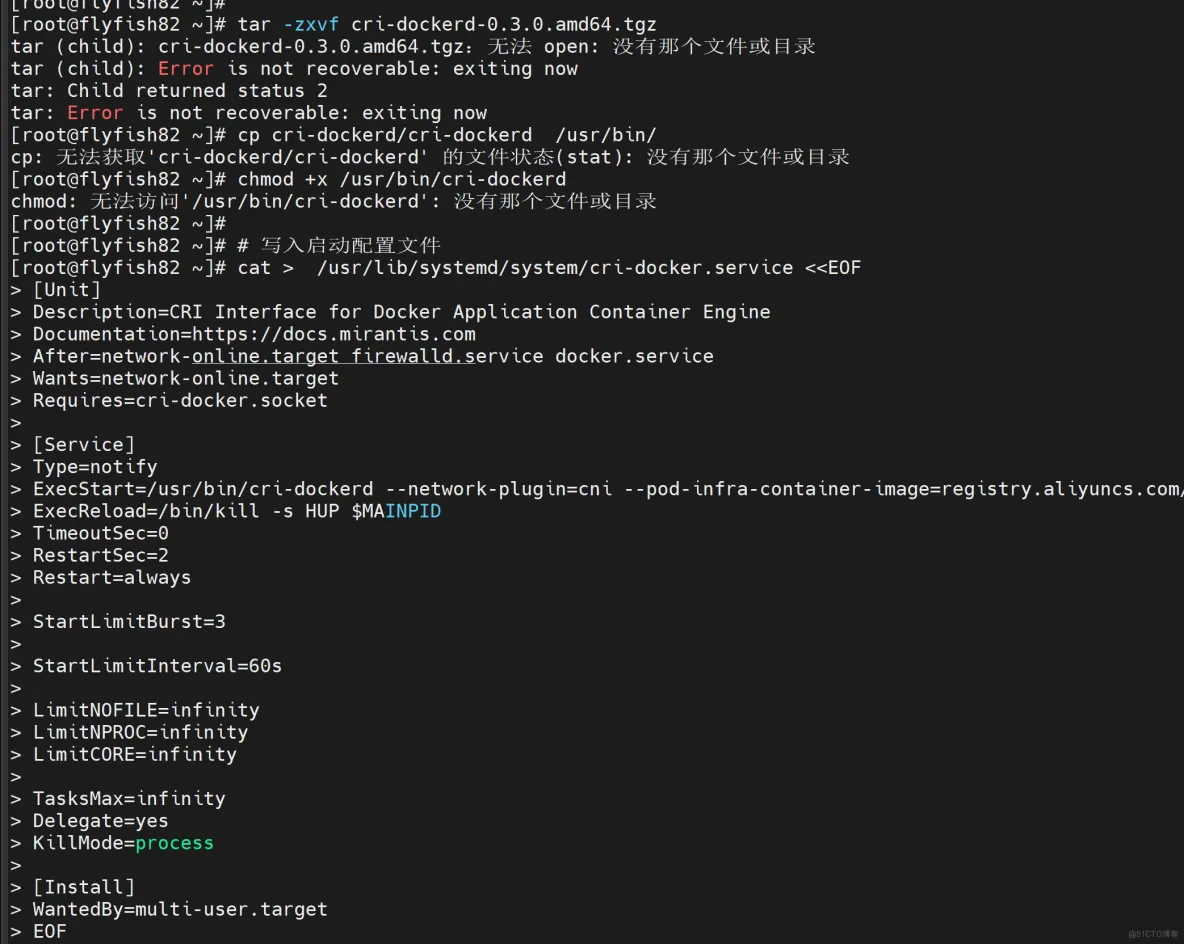

Install cri-dockerd

# Kubernetes 1.24 and later removed dockershim, so cri-dockerd is needed to keep using Docker

# Download cri-dockerd (same release as in section 1.2)

# wget https://ghproxy.com/https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd-0.2.6.amd64.tgz

# Extract cri-dockerd

tar -zxvf cri-dockerd-*.amd64.tgz

cp cri-dockerd/cri-dockerd /usr/bin/

chmod +x /usr/bin/cri-dockerd

# Write the service unit file

cat > /usr/lib/systemd/system/cri-docker.service <<EOF

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP \$MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

# Write the socket unit file

cat > /usr/lib/systemd/system/cri-docker.socket <<EOF

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

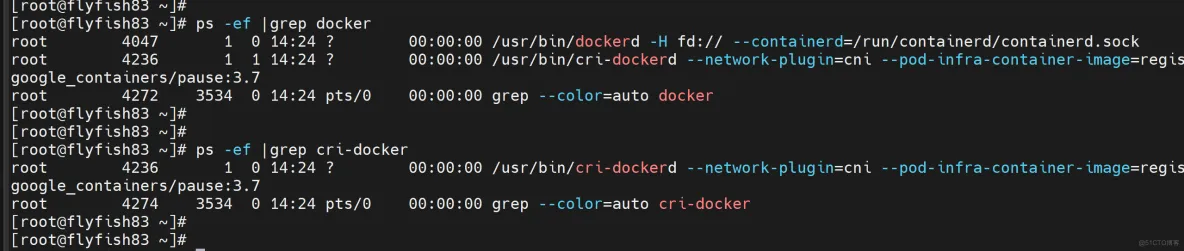

# Start cri-docker

systemctl daemon-reload ; systemctl enable cri-docker --now

Part 2: Deploy the etcd service

2.1 Set up the signing certificates

Download:

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

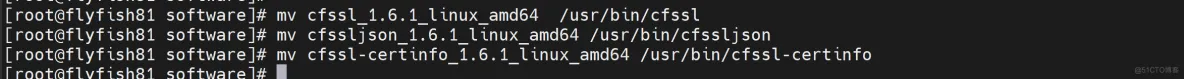

mv cfssl_1.6.1_linux_amd64 /usr/bin/cfssl

mv cfssljson_1.6.1_linux_amd64 /usr/bin/cfssljson

mv cfssl-certinfo_1.6.1_linux_amd64 /usr/bin/cfssl-certinfo

chmod +x /usr/bin/cfssl*

mkdir -p ~/TLS/{etcd,k8s}

cd ~/TLS/etcd

Self-signed CA:

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

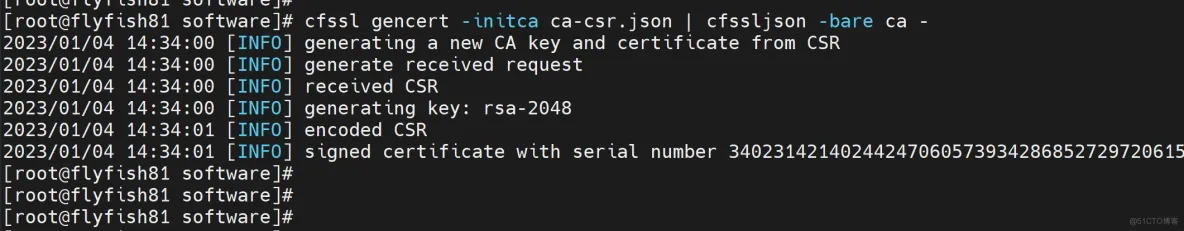

Generate the certificate:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

This produces the files ca.pem and ca-key.pem.

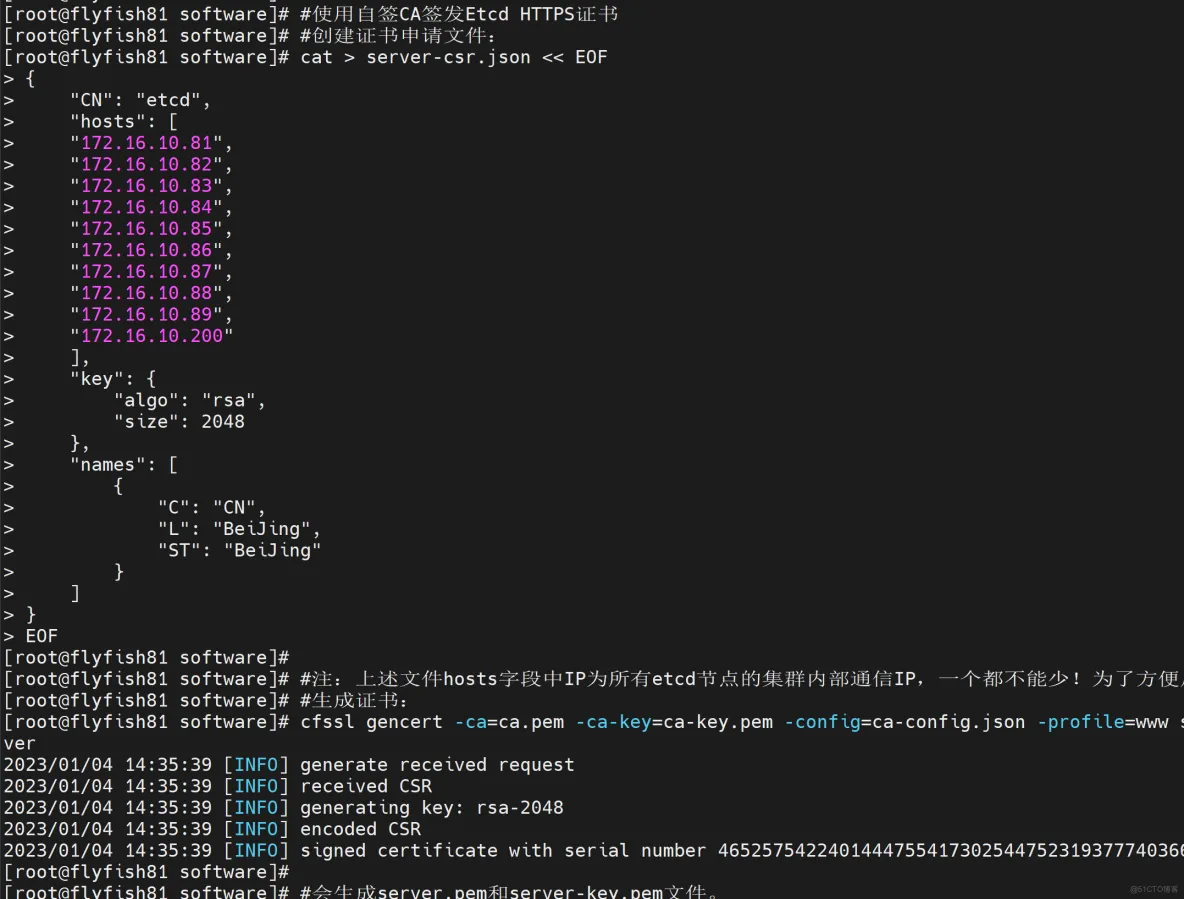

# Use the self-signed CA to issue the etcd HTTPS certificate

# Create the certificate signing request file:

cat > server-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"172.16.10.81",

"172.16.10.82",

"172.16.10.83",

"172.16.10.84",

"172.16.10.85",

"172.16.10.86",

"172.16.10.87",

"172.16.10.88",

"172.16.10.89",

"172.16.10.200"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

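# Note: the IPs in the hosts field above are the internal cluster communication IPs of all etcd nodes; none may be left out. To make later scale-out easier, you can add a few reserved IPs.

# Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

# This produces the files server.pem and server-key.pem.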

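1. What etcd is:

etcd is a distributed key-value store, and Kubernetes uses it to store its data, so an etcd database has to be prepared first. To avoid a single point of failure, etcd should be deployed as a cluster. This guide builds a 3-member cluster, which tolerates the failure of 1 machine; you can also build a 5-member cluster, which tolerates the failure of 2 machines.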
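The tolerance figures follow from etcd's majority-quorum rule: a cluster of n members needs floor(n/2)+1 members to agree, so it survives floor((n-1)/2) failures. A minimal sketch of that arithmetic (the function names are illustrative and not part of etcd):

```shell
#!/bin/sh
# Quorum size for a cluster of N members: a strict majority.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of member failures the cluster survives while keeping quorum.
etcd_fault_tolerance() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

Note that an even member count adds no tolerance: 4 members still survive only 1 failure, which is why odd sizes are the usual choice.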

Download page: https://github.com/etcd-io/etcd/releases

The following steps are performed on node flyfish81. To keep things simple, all files generated on flyfish81 are copied to flyfish82 and flyfish83 afterwards.

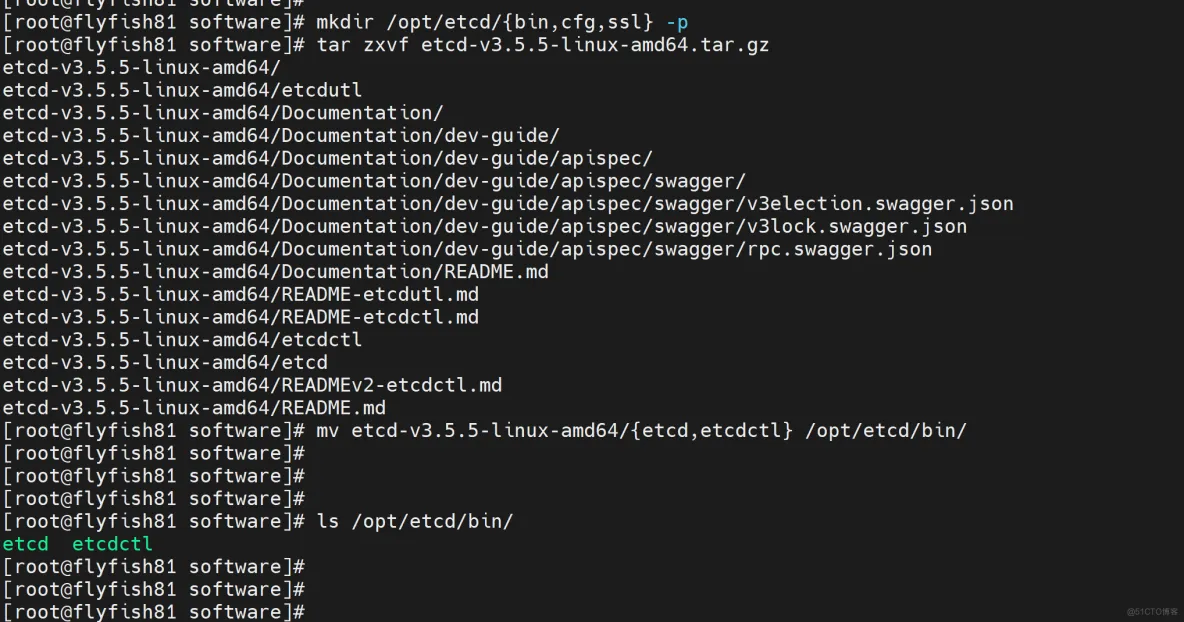

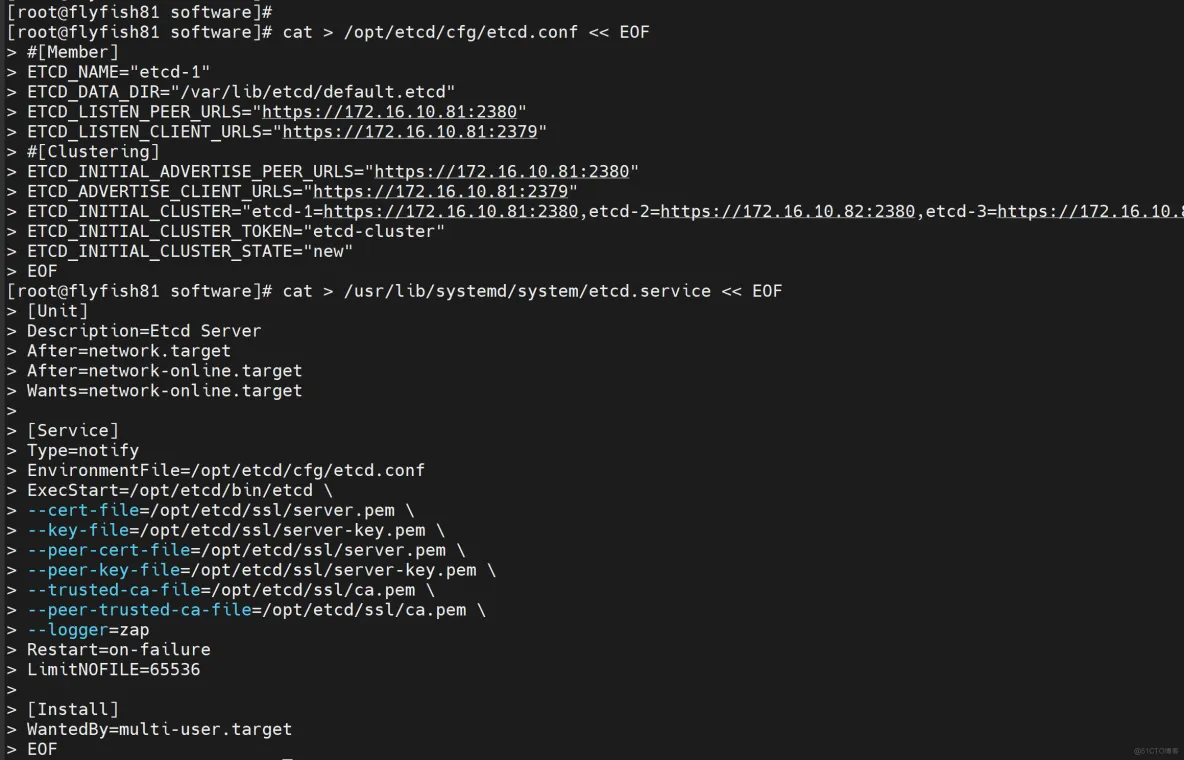

2. Install and configure etcd

mkdir /opt/etcd/{bin,cfg,ssl} -p

tar zxvf etcd-v3.5.5-linux-amd64.tar.gz

mv etcd-v3.5.5-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

# etcd configuration file for flyfish81

cat > /opt/etcd/cfg/etcd.conf << EOF

#[Member]

ETCD_NAME="etcd-1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.10.81:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.10.81:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.10.81:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.10.81:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://172.16.10.81:2380,etcd-2=https://172.16.10.82:2380,etcd-3=https://172.16.10.83:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

---

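ETCD_NAME: member name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new means a new cluster, existing means joining an existing cluster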
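The per-node etcd.conf files differ only in the member name and the node's own IP, so they can be rendered from one template instead of being edited by hand. The sketch below assumes the three members and addresses used in this guide; `render_etcd_conf` is a hypothetical helper, not an etcd tool.

```shell
#!/bin/sh
# Hypothetical helper: print an etcd.conf for one member to stdout.
# $1 = member name (e.g. etcd-2), $2 = that member's IP address.
render_etcd_conf() {
    name=$1
    ip=$2
    cluster="etcd-1=https://172.16.10.81:2380,etcd-2=https://172.16.10.82:2380,etcd-3=https://172.16.10.83:2380"
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example: preview the file for the second member.
render_etcd_conf etcd-2 172.16.10.82
```

On the target host the output would be redirected to /opt/etcd/cfg/etcd.conf.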

3. Manage etcd with systemd

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/opt/etcd/cfg/etcd.conf

ExecStart=/opt/etcd/bin/etcd \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

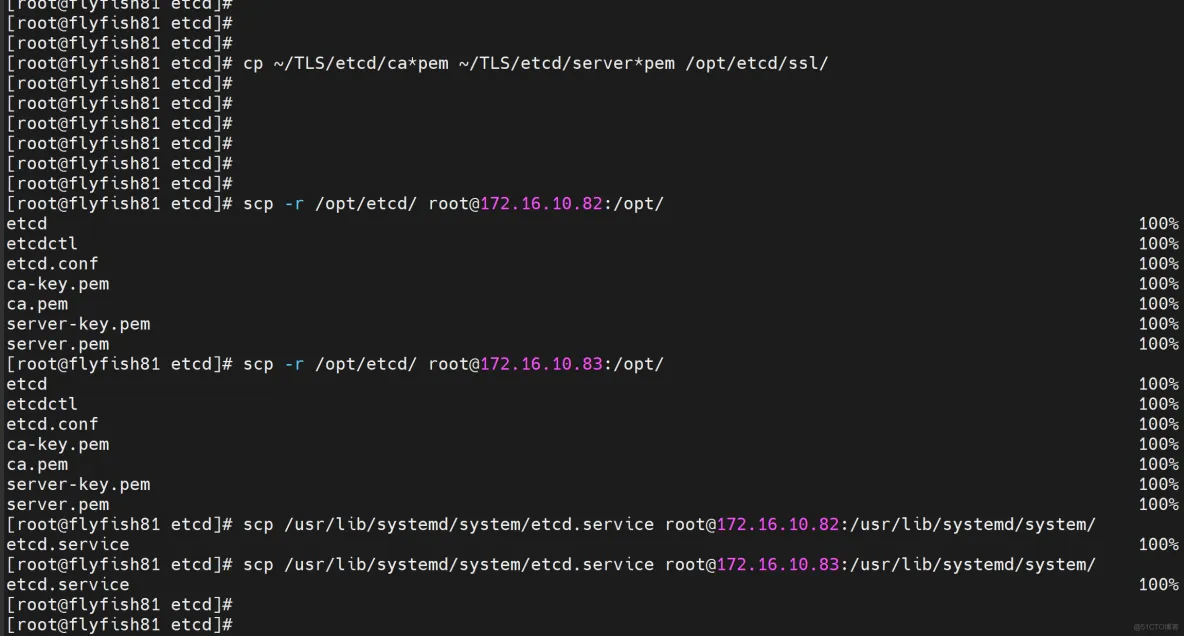

2.2 Install etcd

# Copy the certificates generated earlier

# Copy them to the paths referenced in the configuration:

cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

5. Sync to all hosts

scp -r /opt/etcd/ root@flyfish82:/opt/

scp -r /opt/etcd/ root@flyfish83:/opt/

scp /usr/lib/systemd/system/etcd.service root@flyfish82:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@flyfish83:/usr/lib/systemd/system/

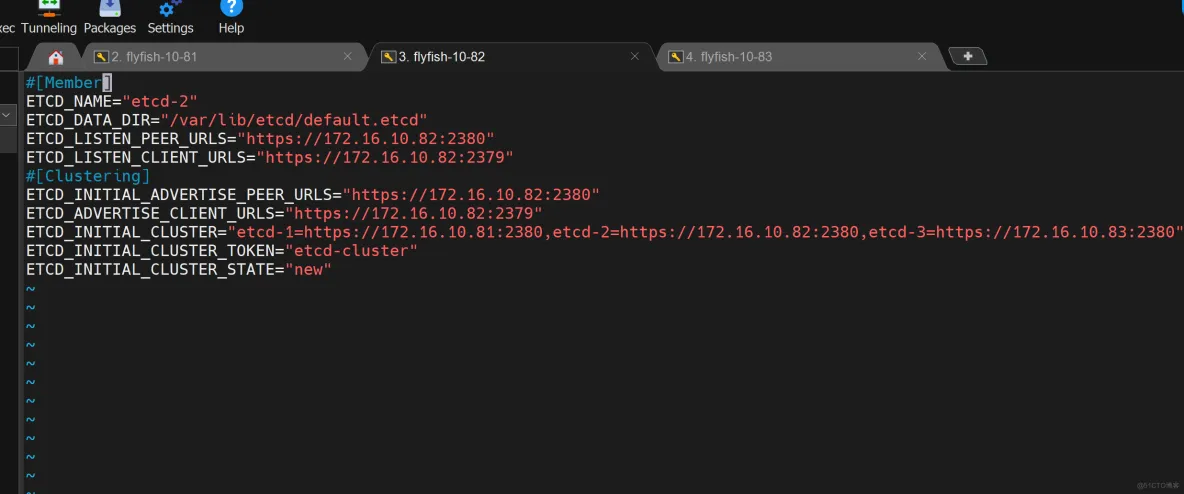

flyfish82 etcd

vim /opt/etcd/cfg/etcd.conf

-----

#[Member]

ETCD_NAME="etcd-2"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.10.82:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.10.82:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.10.82:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.10.82:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://172.16.10.81:2380,etcd-2=https://172.16.10.82:2380,etcd-3=https://172.16.10.83:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

----

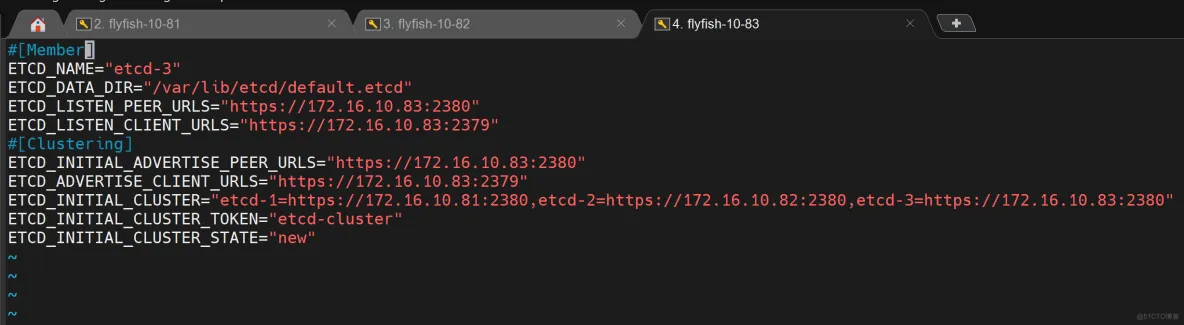

flyfish83 etcd

vim /opt/etcd/cfg/etcd.conf

----

#[Member]

ETCD_NAME="etcd-3"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.16.10.83:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.10.83:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.10.83:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.10.83:2379"

ETCD_INITIAL_CLUSTER="etcd-1=https://172.16.10.81:2380,etcd-2=https://172.16.10.82:2380,etcd-3=https://172.16.10.83:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

-----

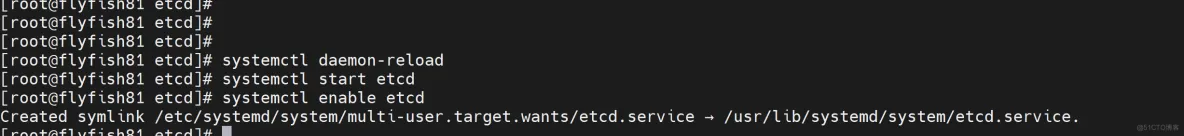

Start etcd (on all three nodes):

systemctl daemon-reload

systemctl start etcd

systemctl enable etcd

Verify:

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://172.16.10.81:2379,https://172.16.10.82:2379,https://172.16.10.83:2379" endpoint health --write-out=table

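Part 3: Deploy k8s 1.26.x

3.1 Download the latest k8s 1.26.x release

1. Download the binaries from GitHub

Download page:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.26.md

Note: the page lists many packages. Downloading the server package is enough; it contains the binaries for both the master and the worker nodes.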

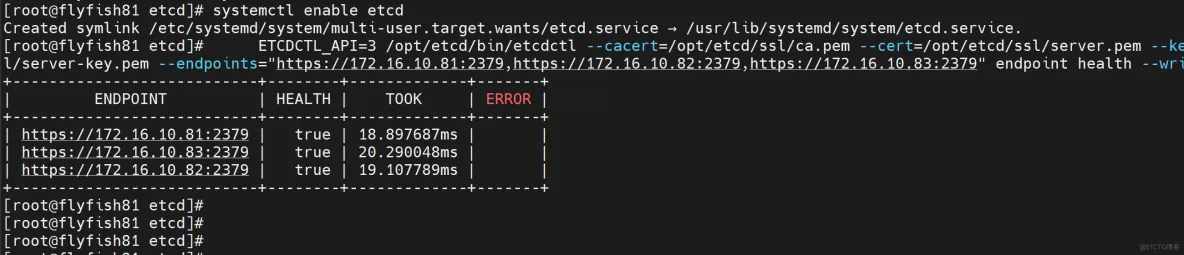

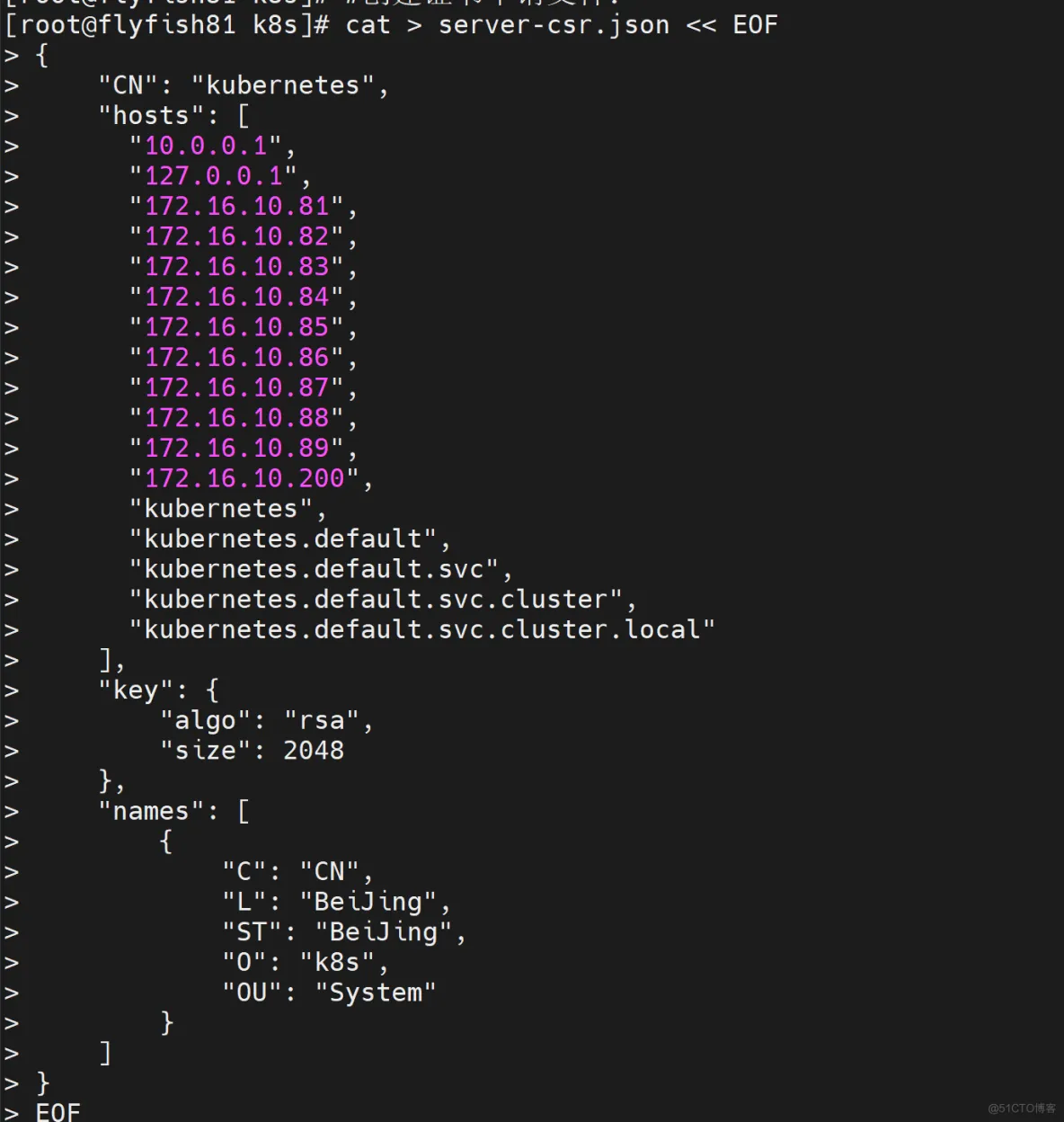

3.2 Generate the k8s 1.26.x certificates

# Create the kube-apiserver certificate

cd ~/TLS/k8s

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

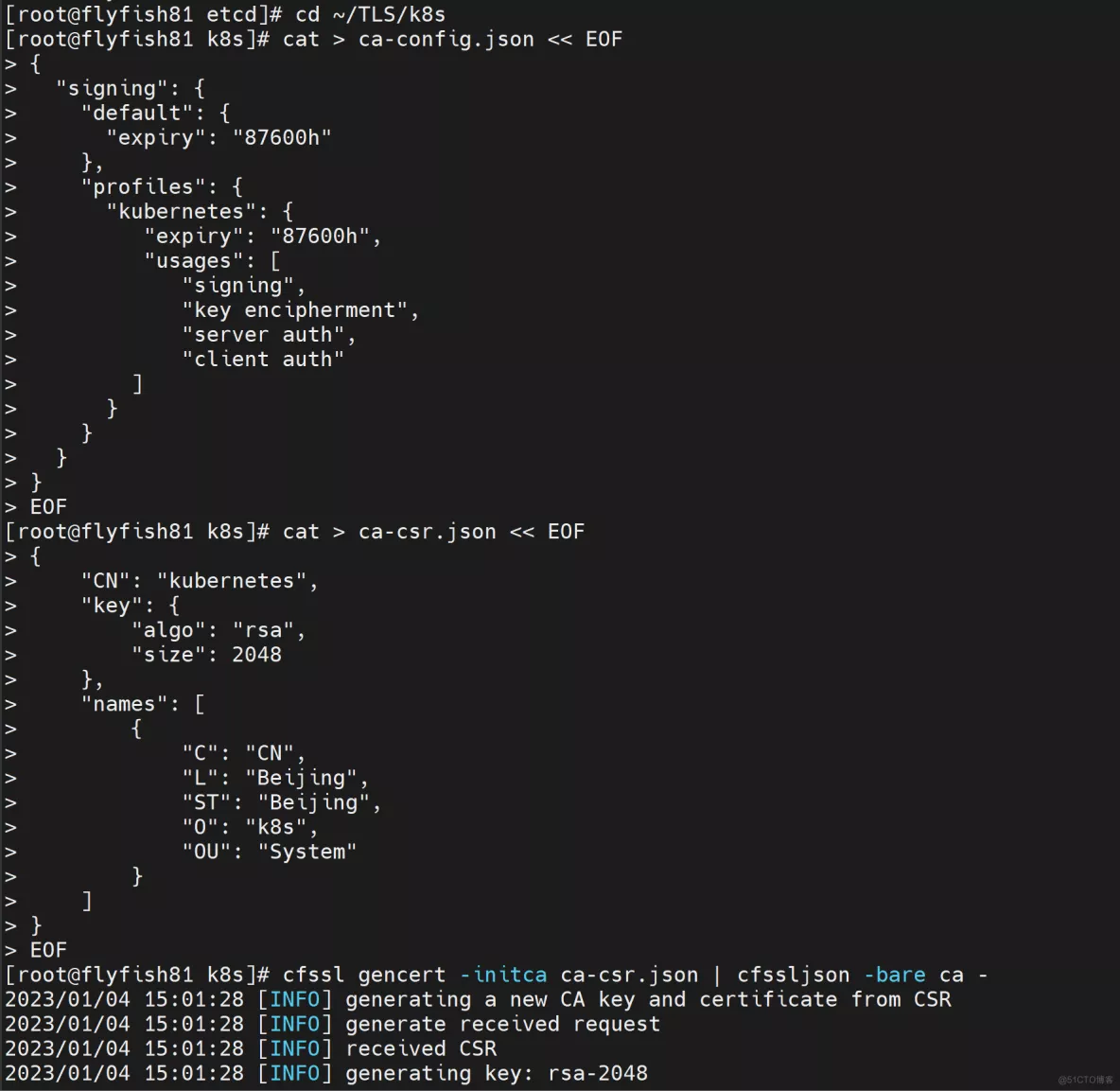

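Generate the certificate:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

This produces the files ca.pem and ca-key.pem.

# Use the self-signed CA to issue the kube-apiserver HTTPS certificate

# Create the certificate signing request file: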

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"172.16.10.81",

"172.16.10.82",

"172.16.10.83",

"172.16.10.84",

"172.16.10.85",

"172.16.10.86",

"172.16.10.87",

"172.16.10.88",

"172.16.10.89",

"172.16.10.200",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

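# Note: the IPs in the hosts field above are the IPs of all masters, load balancers and VIPs; none may be left out. To make later scale-out easier, you can add a few reserved IPs.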

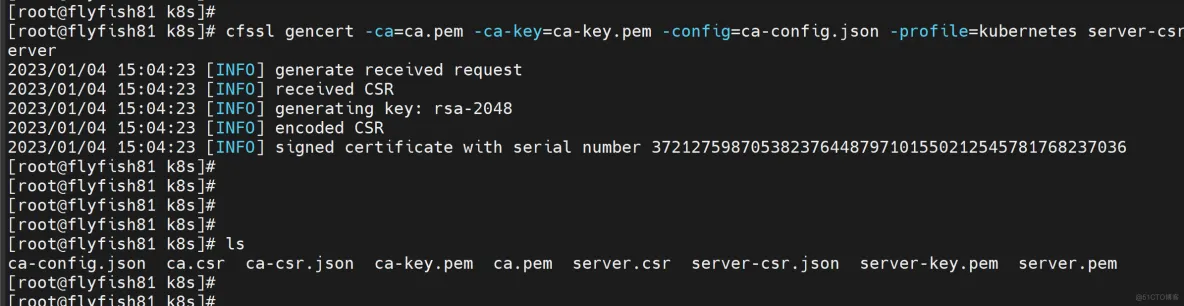

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

# This produces the files server.pem and server-key.pem.

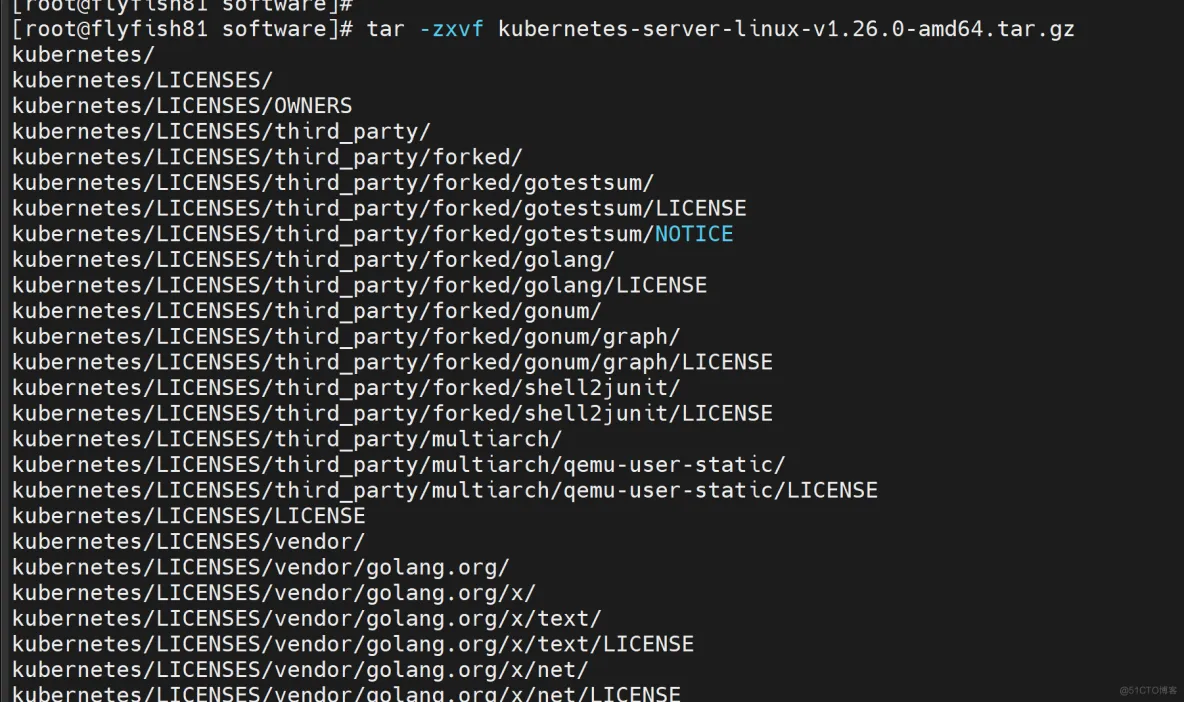

3.3 Install k8s 1.26.x

# Deploy k8s 1.26.0

# Extract the binary package

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

tar -zxvf kubernetes-server-linux-amd64.tar.gz

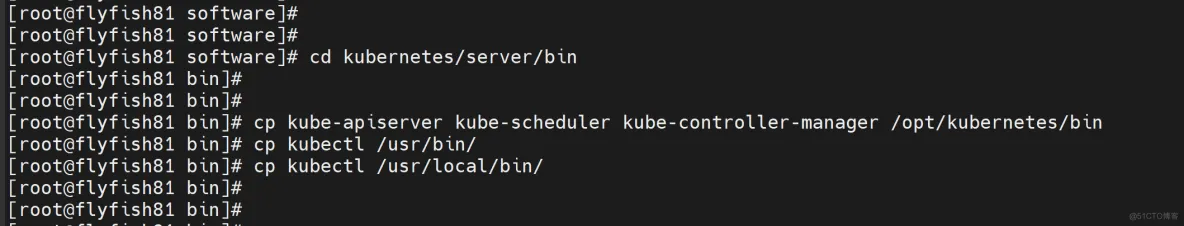

cd kubernetes/server/bin

cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin

cp kubectl /usr/bin/

cp kubectl /usr/local/bin/

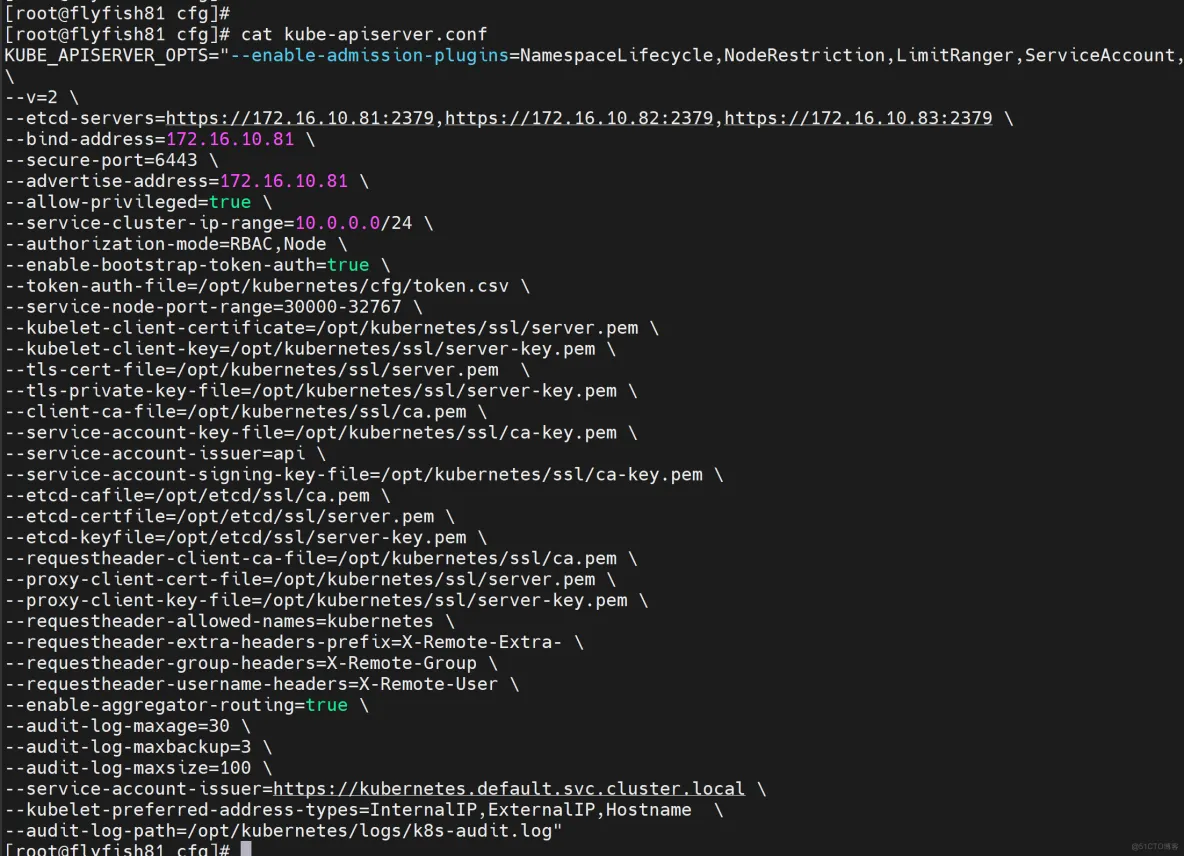

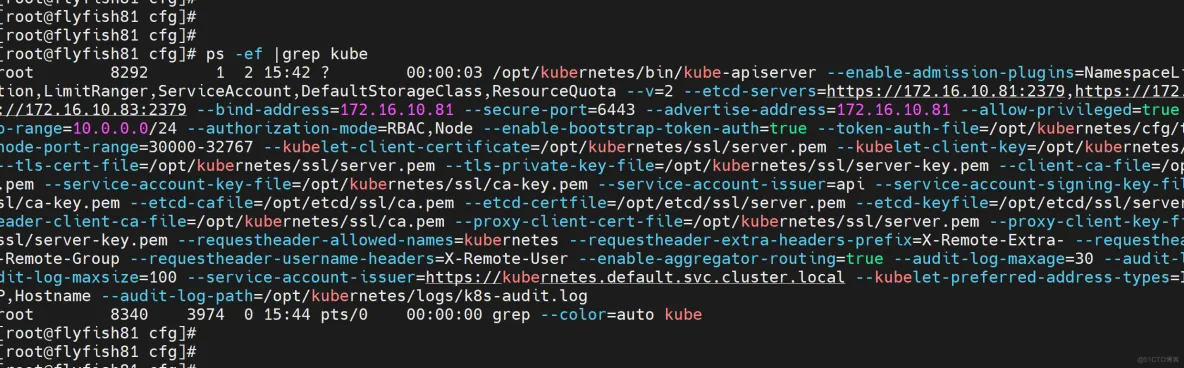

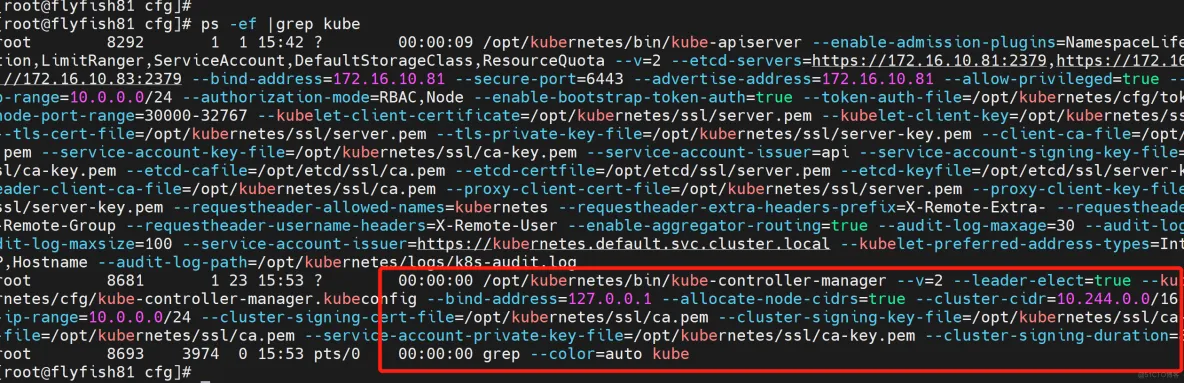

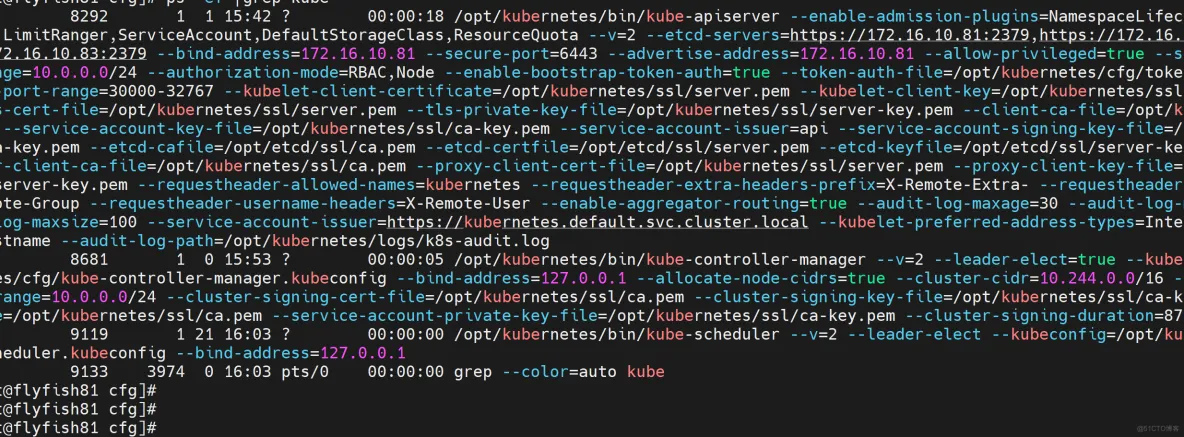

3.3.1 Deploy kube-apiserver

# Deploy kube-apiserver

# Create the configuration file

vim /opt/kubernetes/cfg/kube-apiserver.conf

-----

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--v=2 \

--etcd-servers=https://172.16.10.81:2379,https://172.16.10.82:2379,https://172.16.10.83:2379 \

--bind-address=172.16.10.81 \

--secure-port=6443 \

--advertise-address=172.16.10.81 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-32767 \

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--service-account-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \

--requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \

--proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \

--proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \

--requestheader-allowed-names=kubernetes \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

------

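Note: where a configuration file is written through an EOF heredoc and its lines end in \\, the first backslash is the escape character and the second is the line-continuation character; the escape is needed so that the continuation backslash is preserved in the file the heredoc writes.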
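The escaping rule can be checked with a small, self-contained demonstration. In an unquoted heredoc, `\\` is written as a single backslash and `\$` keeps the dollar sign literal, which is what systemd needs in order to see `$MAINPID` or `$KUBE_APISERVER_OPTS`. The file and variable names below (`demo_file`, `DEMO_OPTS`) are made up for the illustration.

```shell
#!/bin/sh
# Write a small options file through an unquoted heredoc.
# "\\" becomes one literal backslash; "\$" keeps the dollar sign literal.
demo_file=$(mktemp)
cat > "$demo_file" <<EOF
DEMO_OPTS="--v=2 \\
--bind-address=127.0.0.1"
ExecReload=/bin/kill -s HUP \$MAINPID
EOF

# Show what was actually written.
cat "$demo_file"
```

Without the escapes, the shell would join the continued lines and expand the variable (usually to an empty string) before the file is written.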

• --v: log verbosity level

• --etcd-servers: etcd cluster endpoints

• --bind-address: listen address

• --secure-port: HTTPS secure port

• --advertise-address: address advertised to the cluster

• --allow-privileged: allow privileged containers

• --service-cluster-ip-range: virtual IP range for Services

• --enable-admission-plugins: admission control plugins

• --authorization-mode: authorization modes; enables RBAC authorization and Node authorization (node self-management)

• --enable-bootstrap-token-auth: enable the TLS bootstrap mechanism

• --token-auth-file: bootstrap token file

• --service-node-port-range: default port range allocated to NodePort Services

• --kubelet-client-xxx: client certificate the apiserver uses to access the kubelet

• --tls-xxx-file: apiserver HTTPS certificate

• Flags required since version 1.20: --service-account-issuer, --service-account-signing-key-file

• --etcd-xxxfile: certificates for connecting to the etcd cluster

• --audit-log-xxx: audit log settings

• Aggregation layer settings: --requestheader-client-ca-file, --proxy-client-cert-file, --proxy-client-key-file, --requestheader-allowed-names, --requestheader-extra-headers-prefix, --requestheader-group-headers, --requestheader-username-headers, --enable-aggregator-routing
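The `10.0.0.1` entry in the hosts list of server-csr.json corresponds to the first address of `--service-cluster-ip-range`, which Kubernetes assigns to the built-in `kubernetes` Service. A small sketch that derives this address from the CIDR; `first_service_ip` is a hypothetical helper and assumes the CIDR is written with its network address, as 10.0.0.0/24 is here.

```shell
#!/bin/sh
# Print the first usable address of an IPv4 CIDR given as NETWORK/PREFIX.
# The body runs in a subshell so the IFS change does not leak to the caller.
first_service_ip() (
    net=${1%/*}                     # strip the "/prefix" part
    IFS=.
    set -- $net                     # split the dotted quad into $1..$4
    n=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 + 1 ))
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
)

first_service_ip 10.0.0.0/24
```

If the Service range is changed, the certificate's hosts list has to include the new first address as well.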

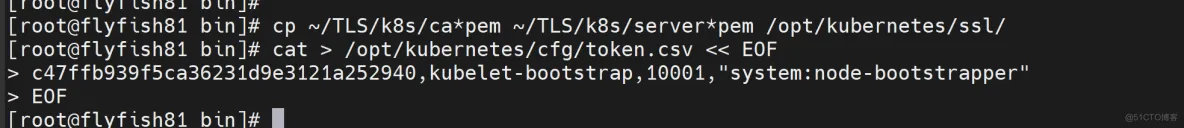

# Copy the certificates generated earlier

# Copy them to the paths referenced in the configuration:

cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

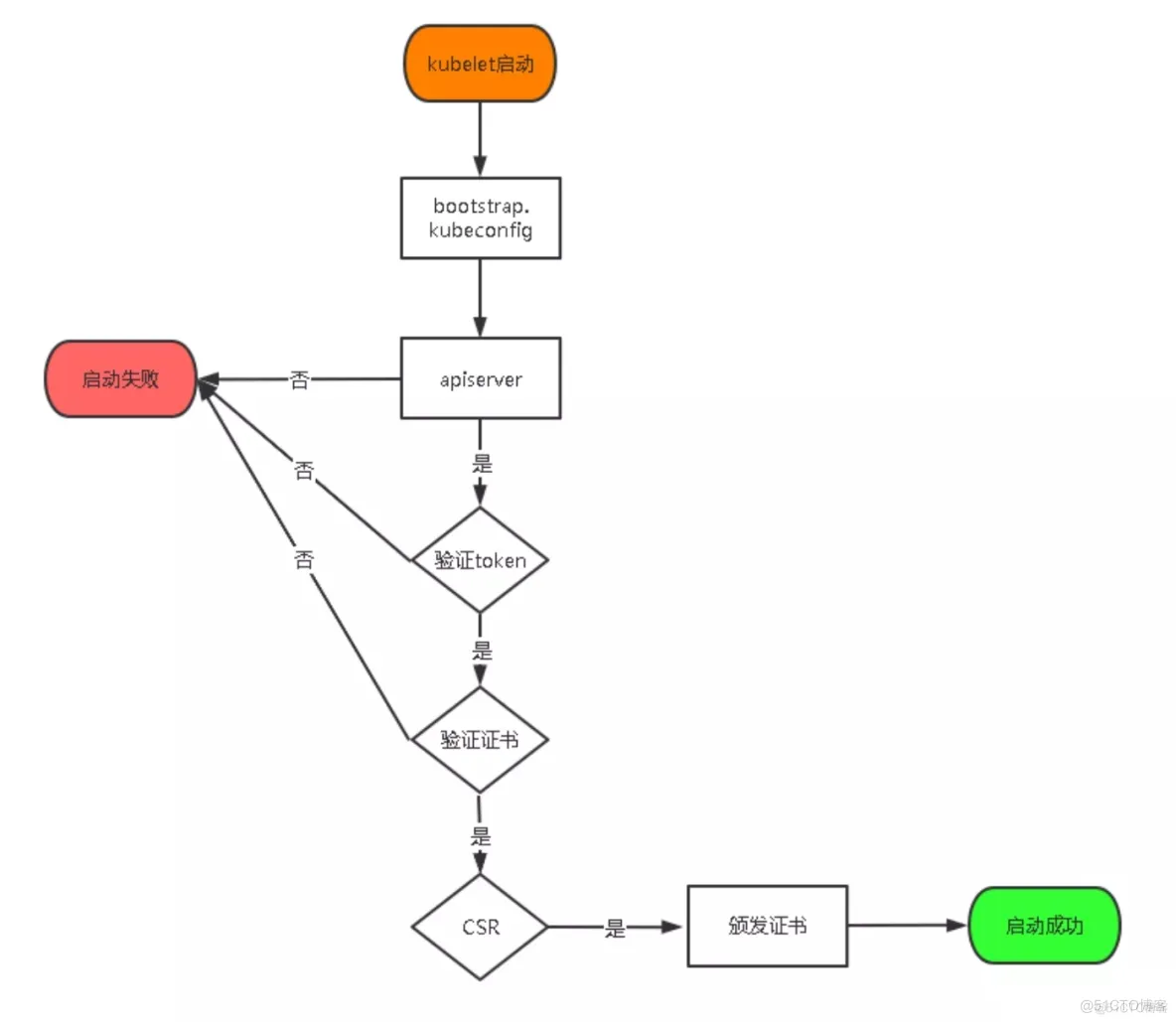

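# Enable the TLS bootstrapping mechanism

TLS bootstrapping: once the master's apiserver enables TLS authentication, the kubelet and kube-proxy on each node must present a valid CA-signed certificate to communicate with kube-apiserver. With many nodes, issuing these client certificates by hand is a lot of work and makes scaling the cluster more complex. To simplify the process, Kubernetes introduced TLS bootstrapping to issue client certificates automatically: the kubelet requests a certificate from the apiserver as a low-privilege user, and the certificate is signed dynamically (by kube-controller-manager, using the cluster CA). This approach is strongly recommended on nodes. It is currently used mainly for the kubelet; kube-proxy still uses a certificate that we issue centrally.

Create the token file referenced in the configuration above: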

cat > /opt/kubernetes/cfg/token.csv << EOF

c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"

EOF

Format: token, user name, UID, user group

You can also generate your own token and substitute it:

head -c 16 /dev/urandom | od -An -t x | tr -d ' '
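Generating the token and formatting the token.csv line can be combined, as in the sketch below. The helper names are hypothetical; the user name, UID and group are the ones used in this guide. The same token value must later go into bootstrap.kubeconfig.

```shell
#!/bin/sh
# Generate a random 32-hex-character bootstrap token (16 random bytes).
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print a token.csv line in the format token,user,UID,"group".
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}

token=$(new_bootstrap_token)
token_csv_line "$token"
```

On the master, the printed line would be written to /opt/kubernetes/cfg/token.csv.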

# Manage the apiserver with systemd

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

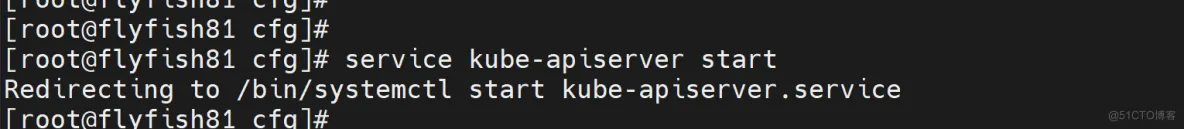

# Start the service and enable it at boot

systemctl daemon-reload

systemctl start kube-apiserver

systemctl enable kube-apiserver

3.3.2 Deploy kube-controller-manager

# Deploy kube-controller-manager

# 1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS=" \\

--v=2 \\

--leader-elect=true \\

--kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/16 \\

--service-cluster-ip-range=10.0.0.0/24 \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--cluster-signing-duration=87600h0m0s"

EOF

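•--kubeconfig: configuration file for connecting to the apiserver

•--leader-elect: automatic leader election when several instances of this component run (HA)

•--cluster-signing-cert-file/--cluster-signing-key-file: the CA that automatically issues kubelet certificates; must match the one used by the apiserver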

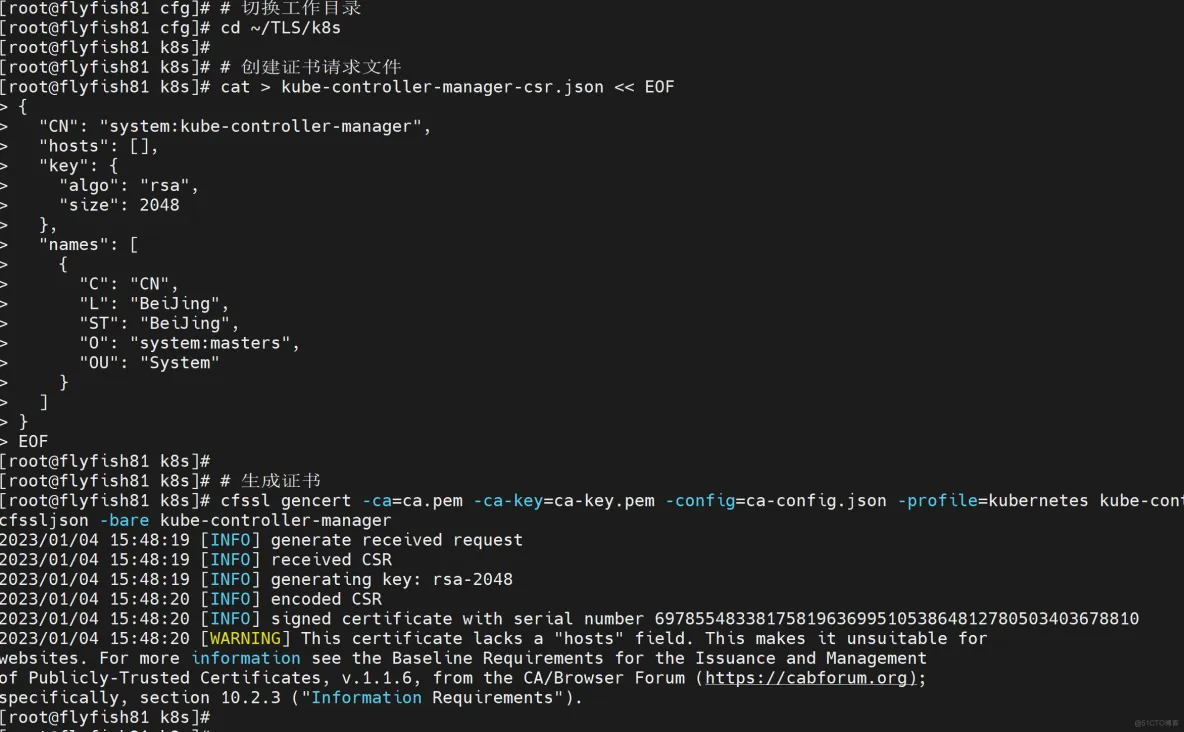

2. Generate the kubeconfig file

Generate the kube-controller-manager certificate:

# Change to the working directory

cd ~/TLS/k8s

# Create the certificate signing request file

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

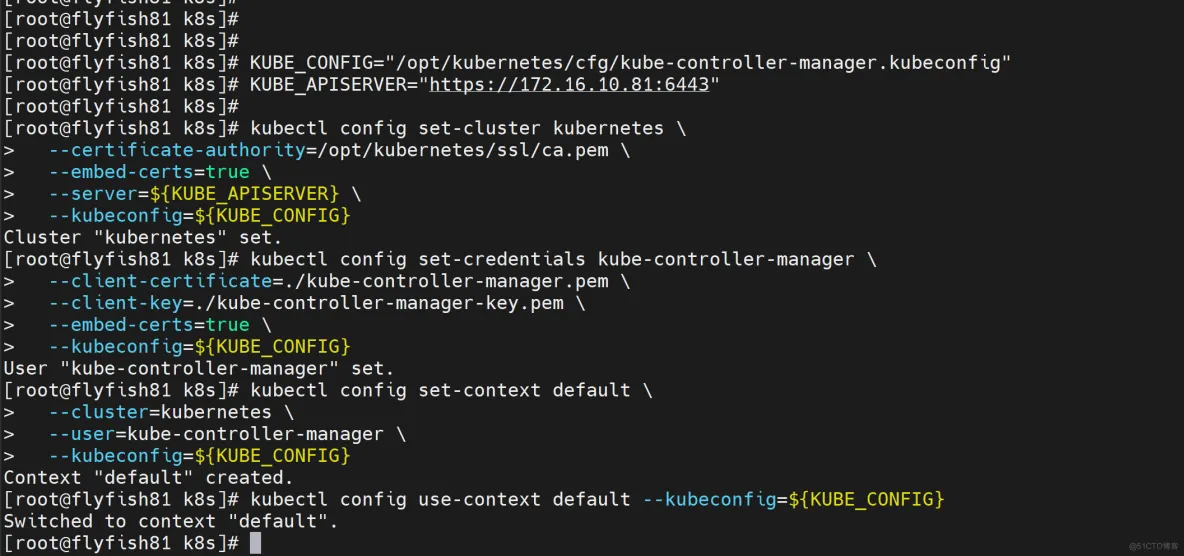

Generate the kubeconfig file (the following are shell commands; run them directly in a terminal):

KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"

KUBE_APISERVER="https://172.16.10.81:6443"

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-controller-manager \

--client-certificate=./kube-controller-manager.pem \

--client-key=./kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-controller-manager \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

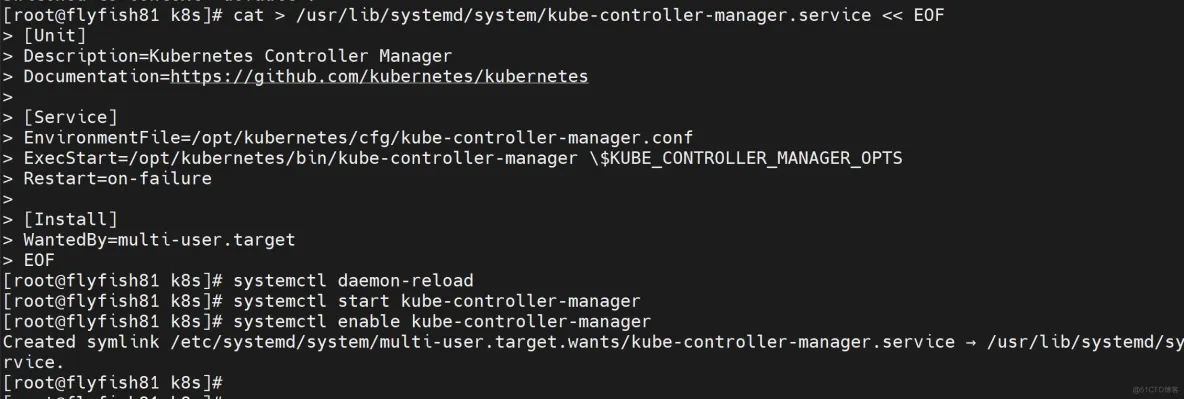

# Manage controller-manager with systemd

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

# Start the service and enable it at boot

systemctl daemon-reload

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

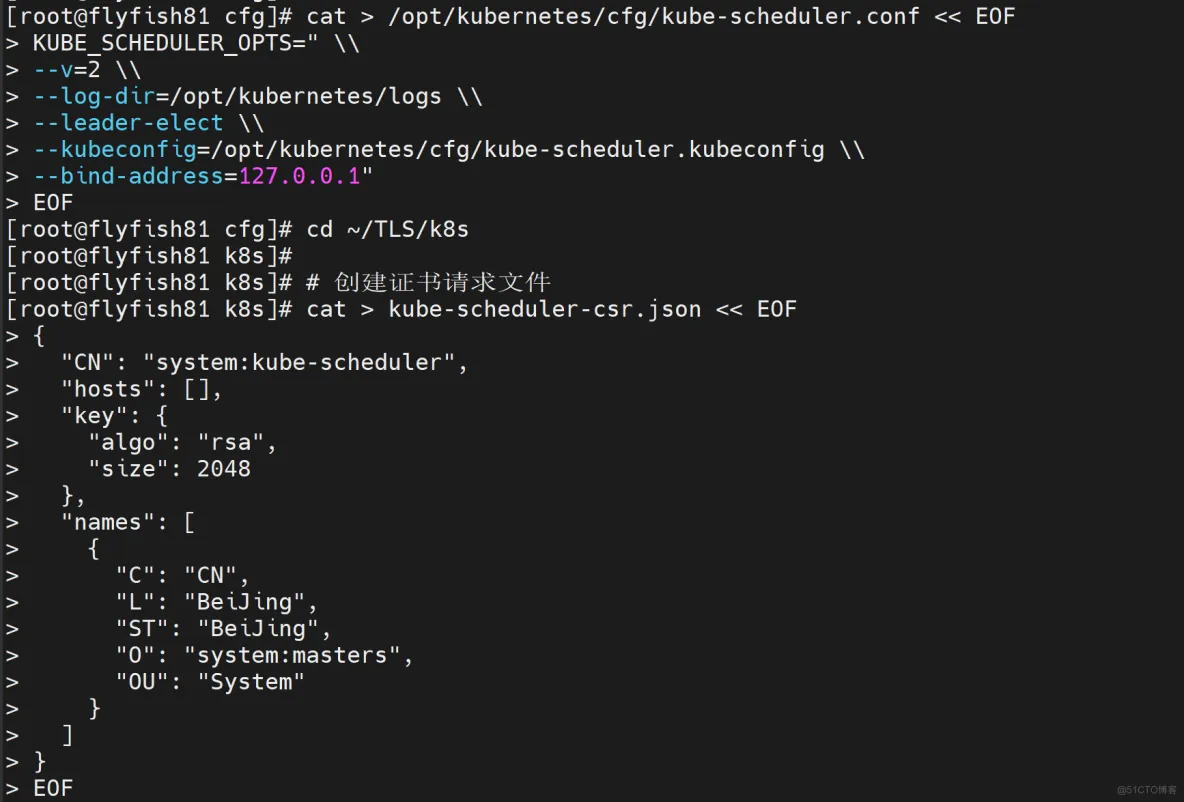

3.3.3 Deploy kube-scheduler

Deploy kube-scheduler

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS=" \\

--v=2 \\

--leader-elect \\

--kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \\

--bind-address=127.0.0.1"

EOF

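•--kubeconfig: configuration file for connecting to the apiserver

•--leader-elect: automatic leader election when several instances of this component run (HA)

# Generate the kubeconfig file

Generate the kube-scheduler certificate:

# Change to the working directory

cd ~/TLS/k8s

# Create the certificate signing request file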

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

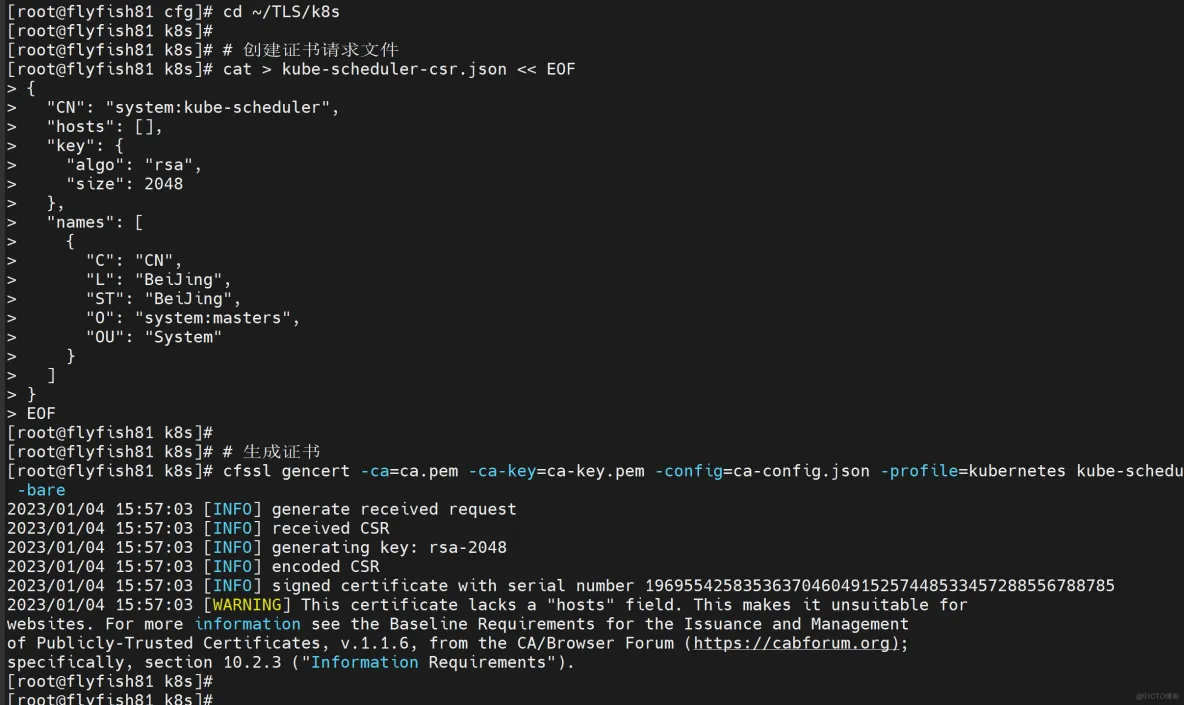

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

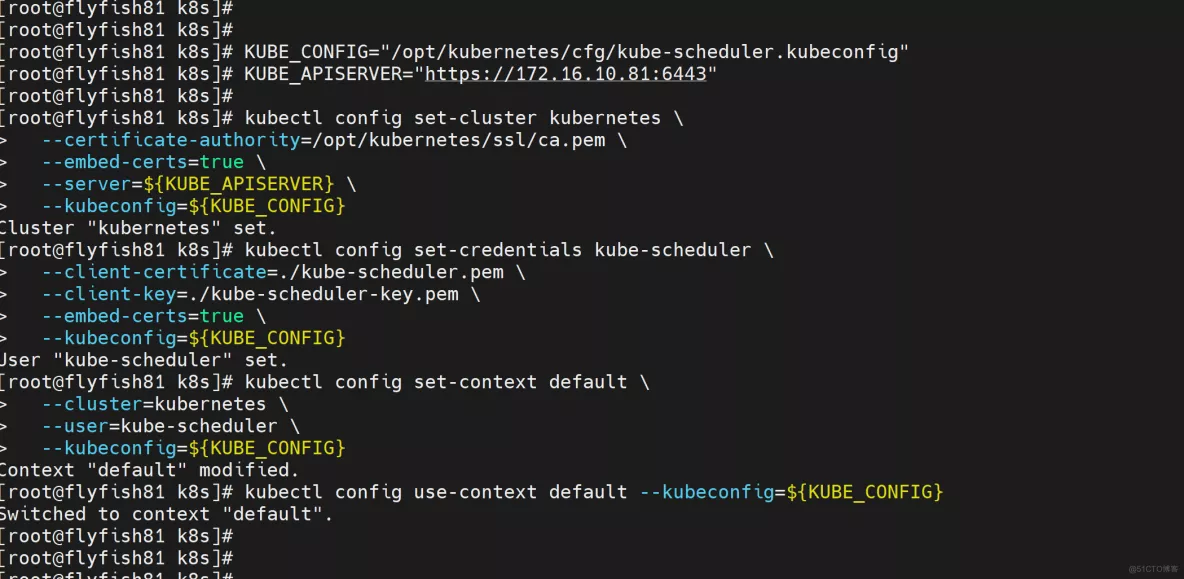

Generate the kubeconfig file:

KUBE_CONFIG="/opt/kubernetes/cfg/kube-scheduler.kubeconfig"

KUBE_APISERVER="https://172.16.10.81:6443"

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-scheduler \

--client-certificate=./kube-scheduler.pem \

--client-key=./kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-scheduler \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

3. Manage the scheduler with systemd

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

Start the service and enable it at boot

systemctl daemon-reload

systemctl start kube-scheduler

systemctl enable kube-scheduler

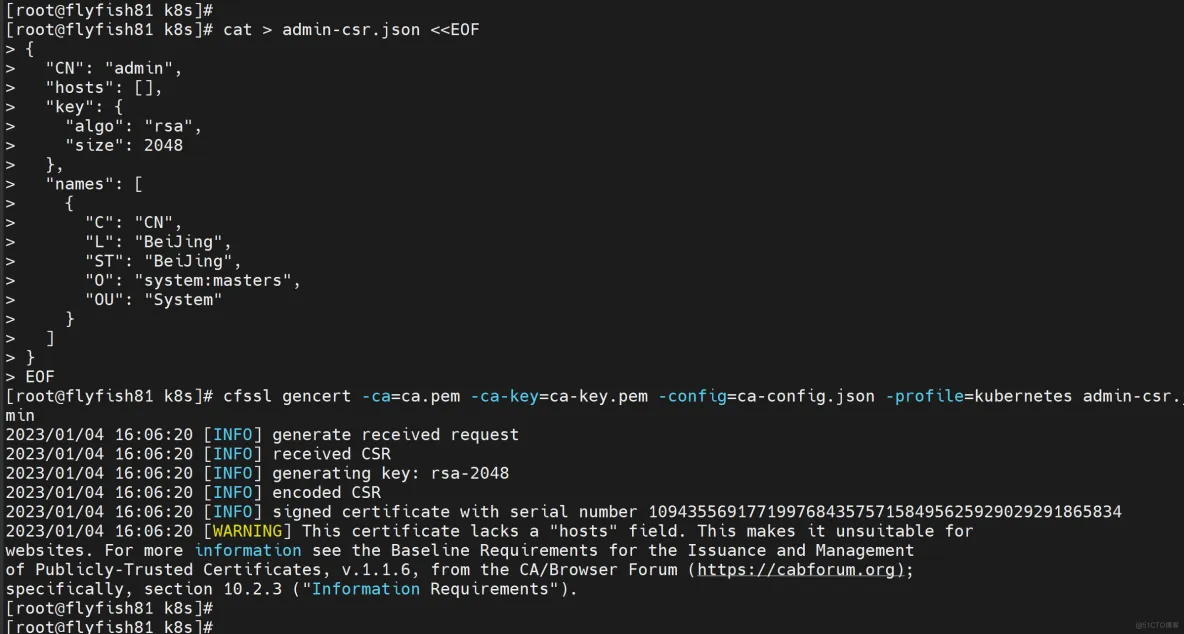

3.3.4 Check the cluster status

# Check the cluster status

# Generate the certificate kubectl uses to connect to the cluster:

cd /root/TLS/k8s/

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

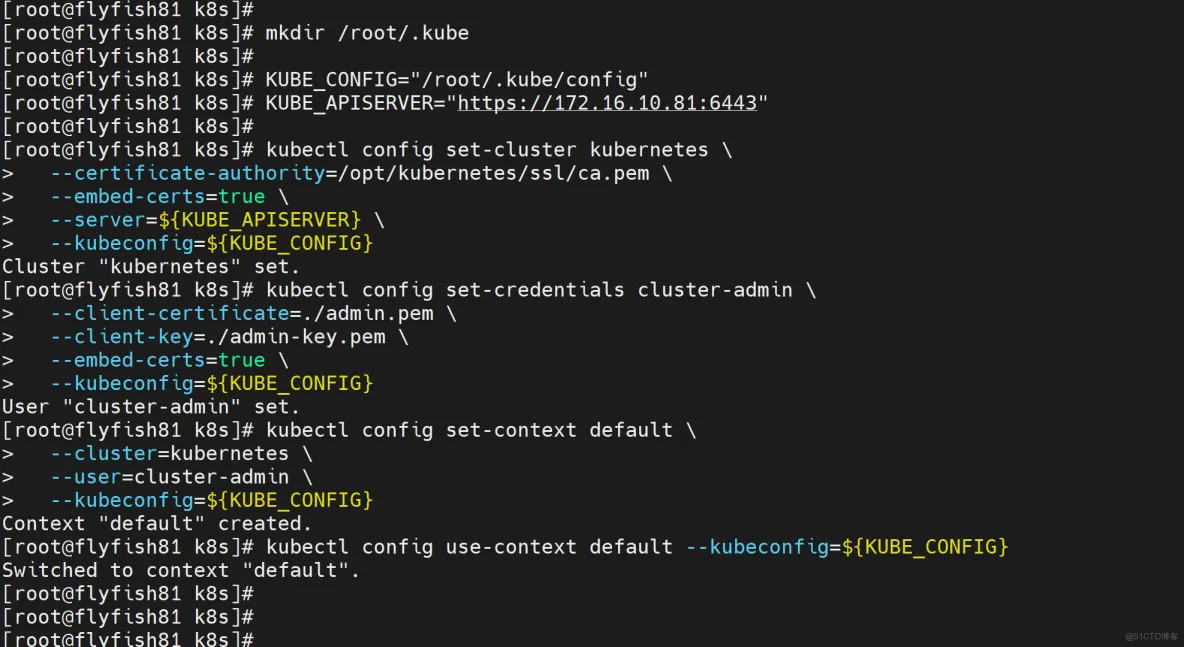

Generate the kubeconfig file:

mkdir /root/.kube

KUBE_CONFIG="/root/.kube/config"

KUBE_APISERVER="https://172.16.10.81:6443"

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials cluster-admin \

--client-certificate=./admin.pem \

--client-key=./admin-key.pem \

--embed-certs=true \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user=cluster-admin \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

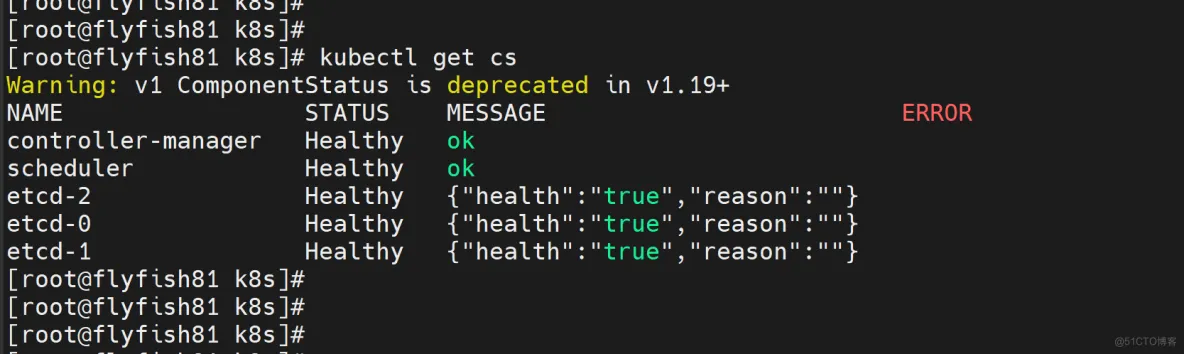

Use kubectl to check the current status of the cluster components:

kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

Output like the above shows that the master components are running normally.

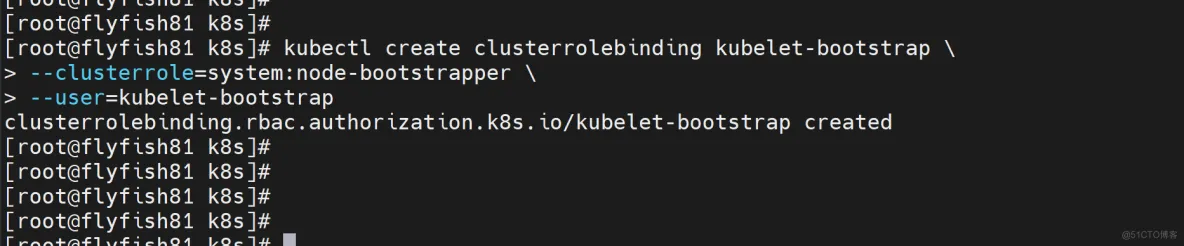

Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

Part 4: Deploy the worker nodes

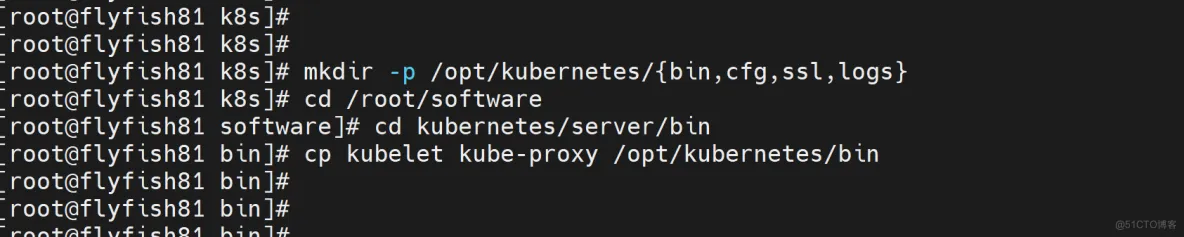

4.1 Create the working directories and copy the binaries

Create the working directories on all worker nodes:

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy from the master node:

cd /root/software

cd kubernetes/server/bin

cp kubelet kube-proxy /opt/kubernetes/bin # local copy

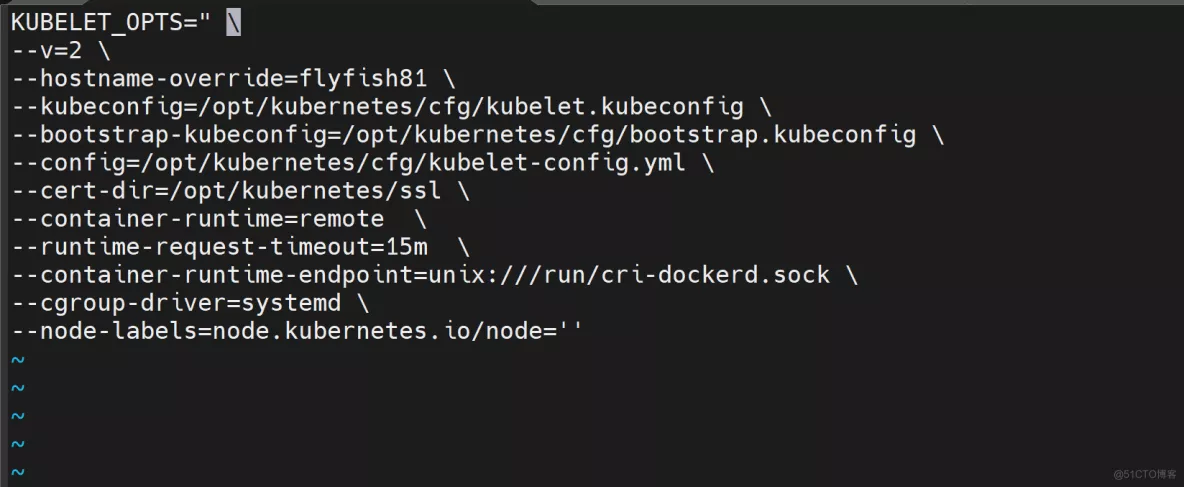

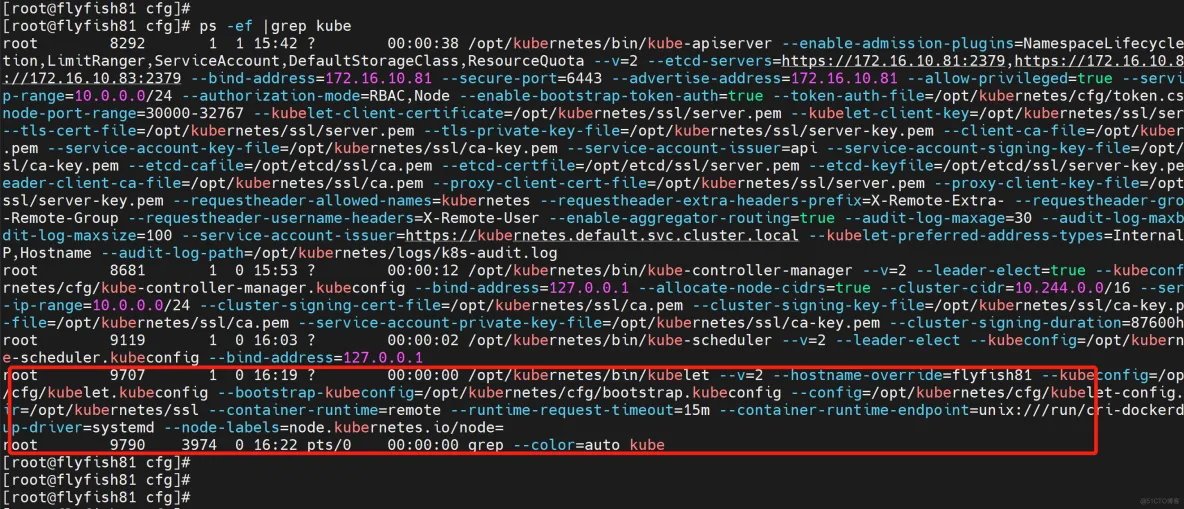

4.2 Deploy the kubelet

1. Create the configuration file

vim /opt/kubernetes/cfg/kubelet.conf

------

KUBELET_OPTS=" \

--v=2 \

--hostname-override=flyfish81 \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet-config.yml \

--cert-dir=/opt/kubernetes/ssl \

--container-runtime=remote \

--runtime-request-timeout=15m \

--container-runtime-endpoint=unix:///run/cri-dockerd.sock \

--cgroup-driver=systemd \

--node-labels=node.kubernetes.io/node=''"

------

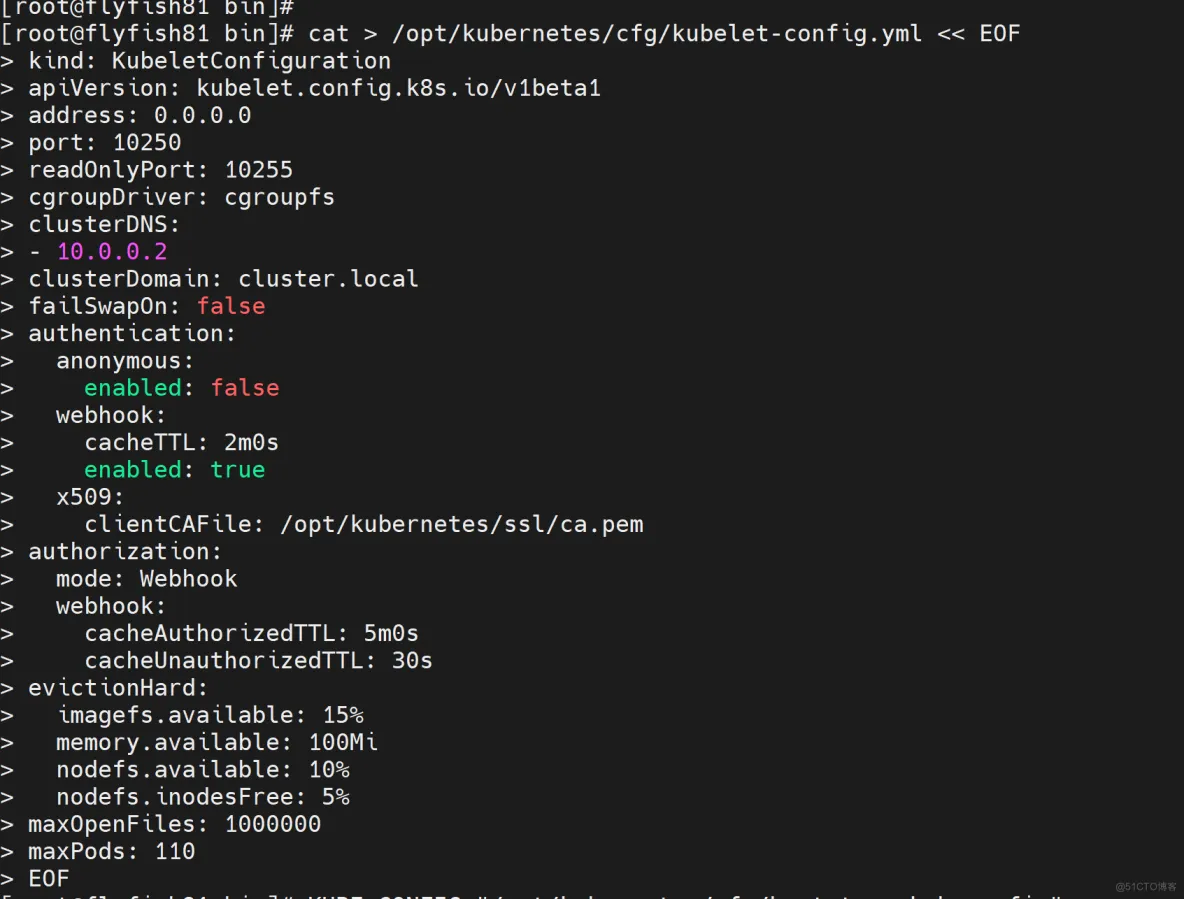

# Write the parameters file

cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

cgroupDriver: systemd

clusterDNS:

- 10.0.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /opt/kubernetes/ssl/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

evictionHard:

imagefs.available: 15%

memory.available: 100Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 110

EOF

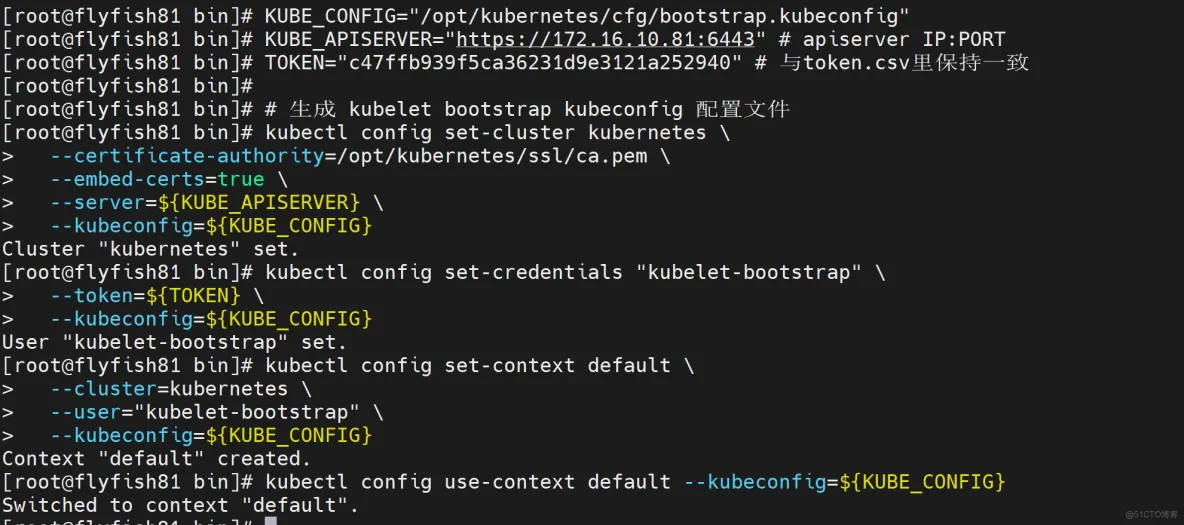

# Generate the bootstrap kubeconfig the kubelet uses when it first joins the cluster

KUBE_CONFIG="/opt/kubernetes/cfg/bootstrap.kubeconfig"

KUBE_APISERVER="https://172.16.10.81:6443" # apiserver IP:PORT

TOKEN="c47ffb939f5ca36231d9e3121a252940" # must match the token in token.csv

# Generate the kubelet bootstrap kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials "kubelet-bootstrap" \

--token=${TOKEN} \

--kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

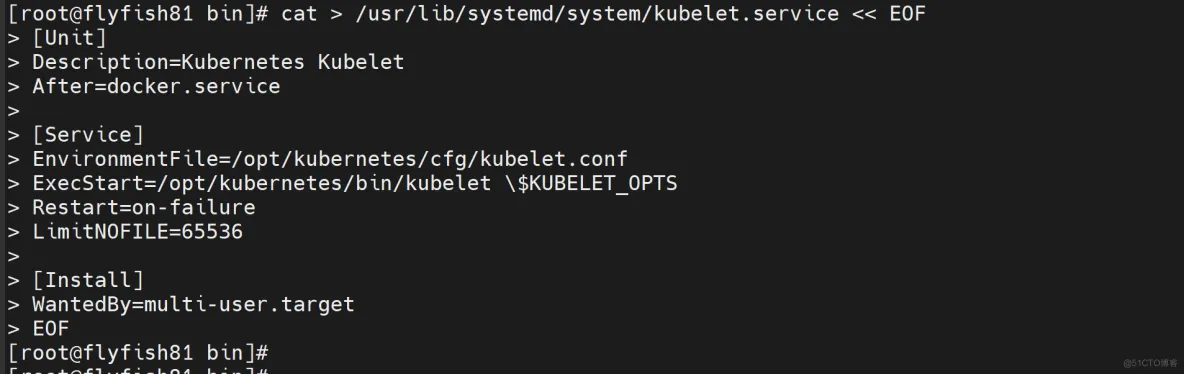

Manage the kubelet with systemd

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

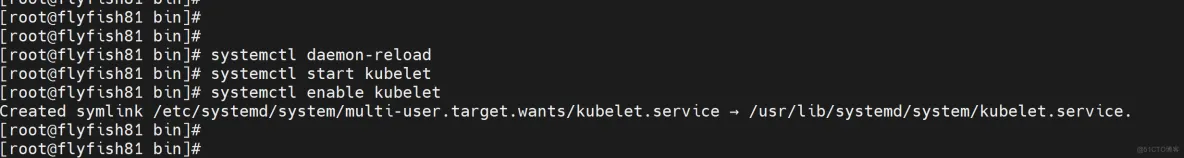

Start the service and enable it at boot

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

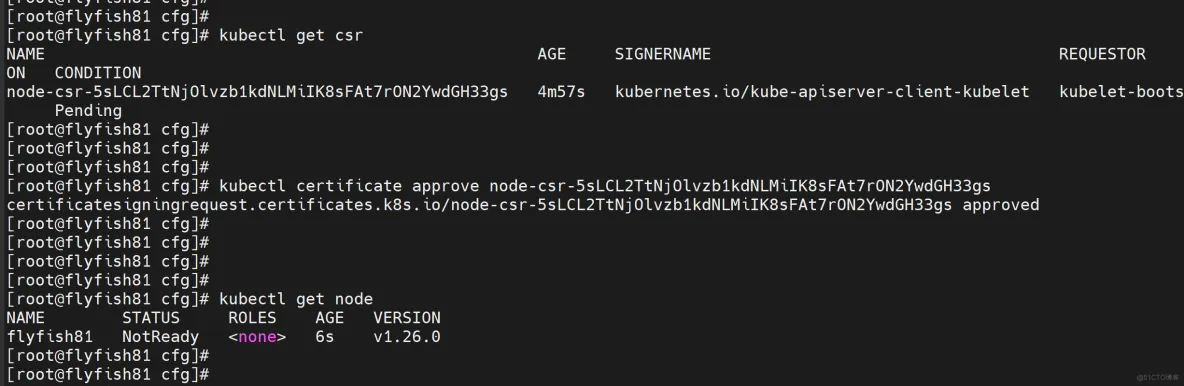

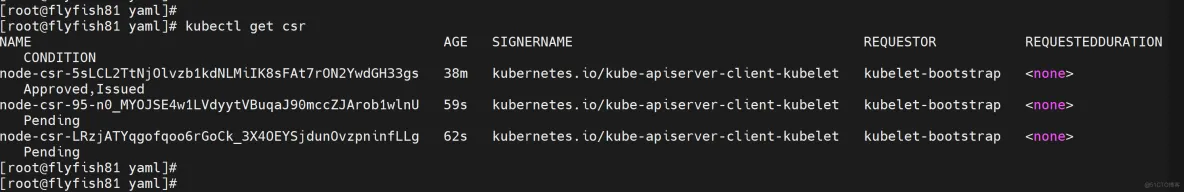

Approve the kubelet certificate request and join the node to the cluster

# List the kubelet certificate requests

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-5sLCL2TtNjOlvzb1kdNLMiIK8sFAt7rON2YwdGH33gs 4m57s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap <none> Pending

# Approve the request

kubectl certificate approve node-csr-5sLCL2TtNjOlvzb1kdNLMiIK8sFAt7rON2YwdGH33gs
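With several workers, copying each request name by hand becomes tedious. The sketch below filters the table printed by `kubectl get csr` for requests whose CONDITION column is `Pending`; `pending_csr_names` is a hypothetical helper and assumes the column layout shown above.

```shell
#!/bin/sh
# Read `kubectl get csr` table output on stdin and print the names of
# requests whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage sketch on the master (requires a working kubectl; -r is GNU xargs):
#   kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```

Approving everything that is pending is only reasonable while you know exactly which nodes are joining; otherwise review the list first.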

# List the nodes

kubectl get node

NAME STATUS ROLES AGE VERSION

flyfish81 NotReady <none> 6s v1.26.0

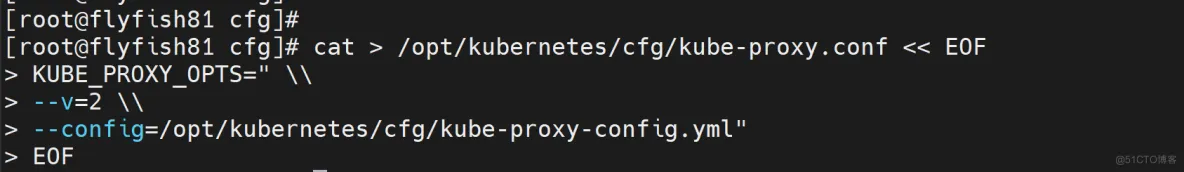

4.3 Deploy kube-proxy

1. Create the configuration file

cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF

KUBE_PROXY_OPTS=" \\

--v=2 \\

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

EOF

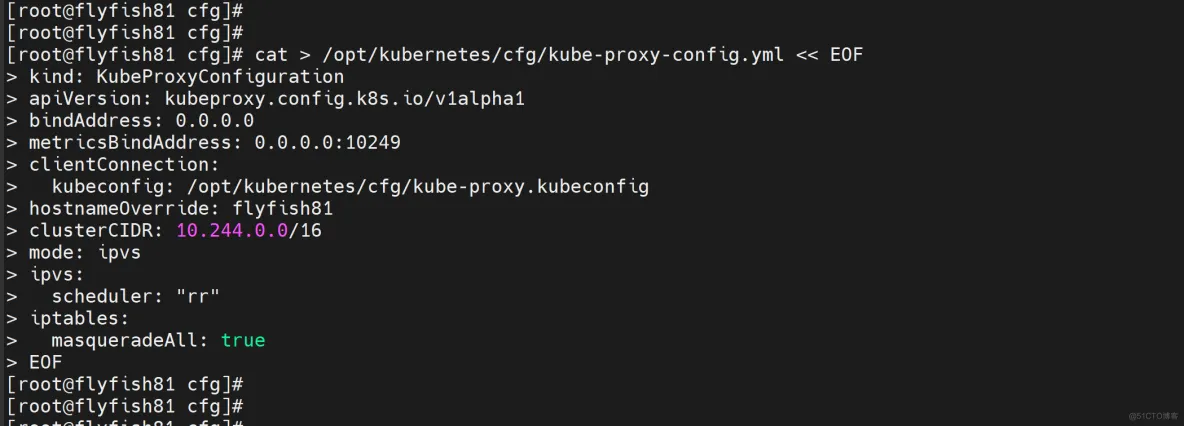

2. Write the parameters file

cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

metricsBindAddress: 0.0.0.0:10249

clientConnection:

kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig

hostnameOverride: flyfish81

clusterCIDR: 10.244.0.0/16

mode: ipvs

ipvs:

scheduler: "rr"

iptables:

masqueradeAll: true

EOF
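The `hostnameOverride` value is specific to each node, so when this file is reused on flyfish82 or flyfish83 that one line has to change. A minimal sketch of the substitution, assuming the file layout shown above; `set_hostname_override` is a hypothetical helper.

```shell
#!/bin/sh
# Rewrite the hostnameOverride line of a kube-proxy config read from stdin.
# $1 = node name to write (e.g. flyfish82).
set_hostname_override() {
    sed "s/^hostnameOverride:.*/hostnameOverride: $1/"
}

# Example with a two-line excerpt of the config.
printf 'mode: ipvs\nhostnameOverride: flyfish81\n' | set_hostname_override flyfish82
```

The `--hostname-override` flag in kubelet.conf needs the same per-node value.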

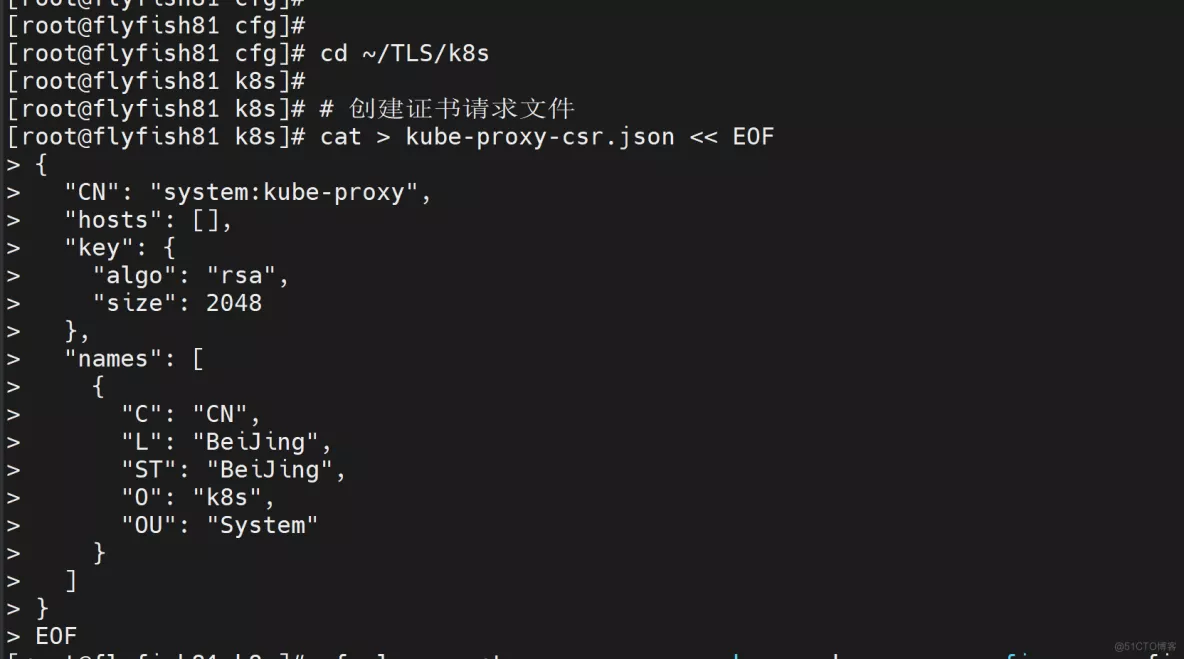

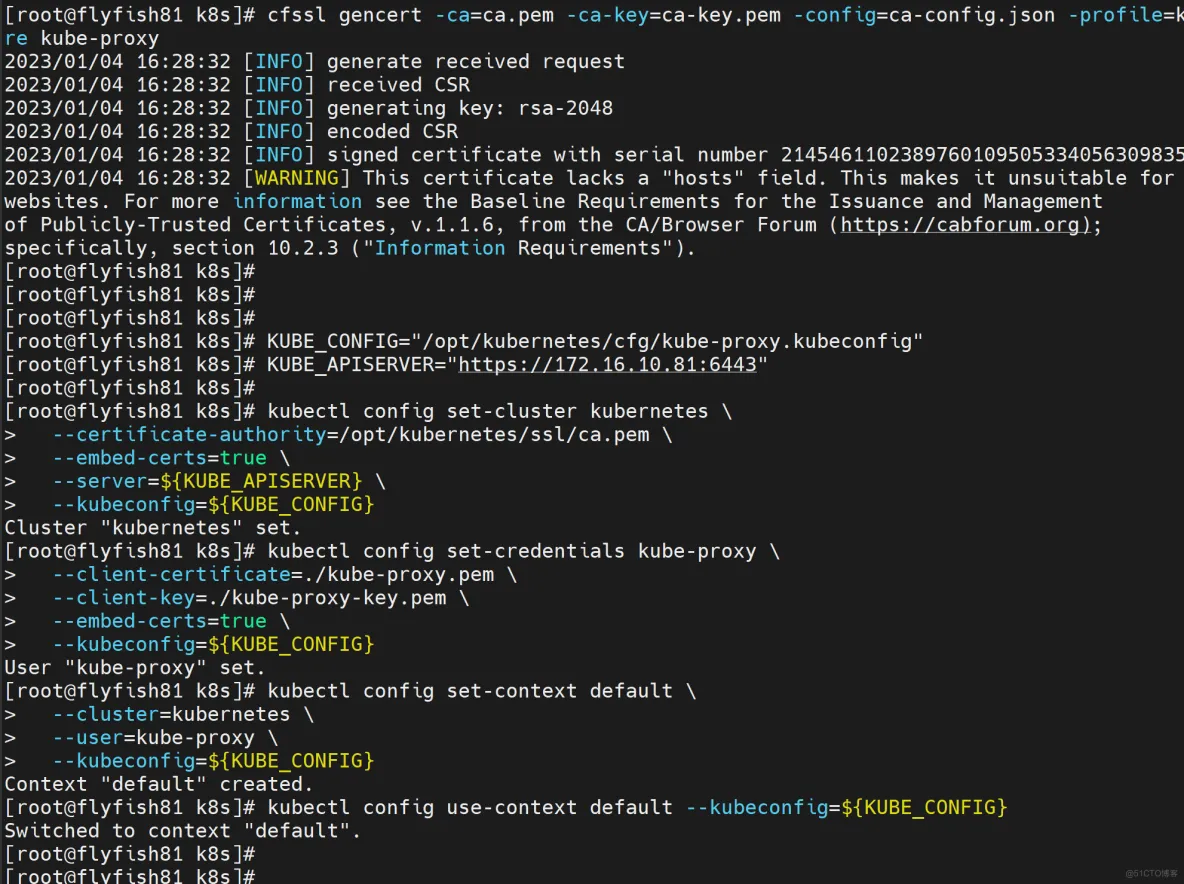

# Generate the kube-proxy.kubeconfig file

# Change to the working directory

cd ~/TLS/k8s

# Create the certificate signing request file

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

# Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

Generate the kubeconfig file:

KUBE_CONFIG="/opt/kubernetes/cfg/kube-proxy.kubeconfig"

KUBE_APISERVER="https://172.16.10.81:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-proxy \
  --client-certificate=./kube-proxy.pem \
  --client-key=./kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

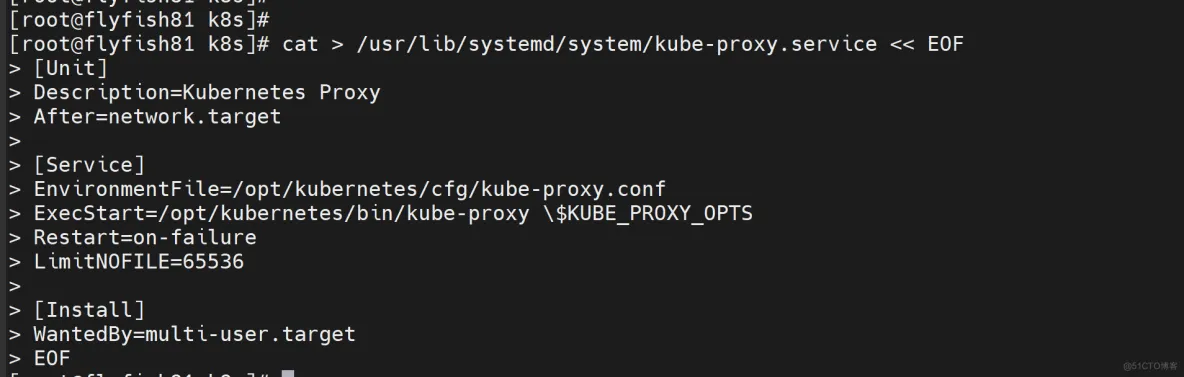

Manage kube-proxy with systemd

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

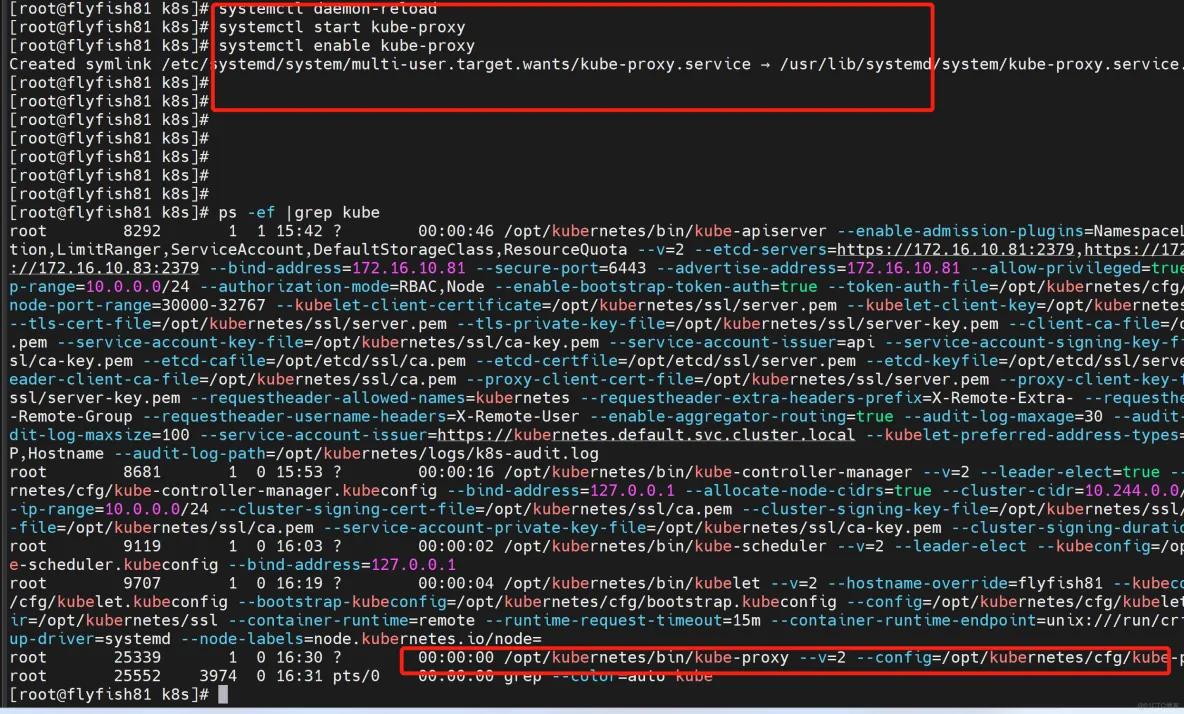

Start the service and enable it at boot

systemctl daemon-reload

systemctl start kube-proxy

systemctl enable kube-proxy

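5: Deploy the Calico network

Many network add-ons are available, and only one of them needs to be deployed. Calico is recommended here.

Calico is a pure Layer 3 data center networking solution that supports a wide range of platforms, including Kubernetes and OpenStack.

On each compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that handles data forwarding. Each vRouter uses BGP to advertise the routes of the workloads running on it to the rest of the Calico network.

In addition, the Calico project implements Kubernetes network policy, which provides ACL functionality.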

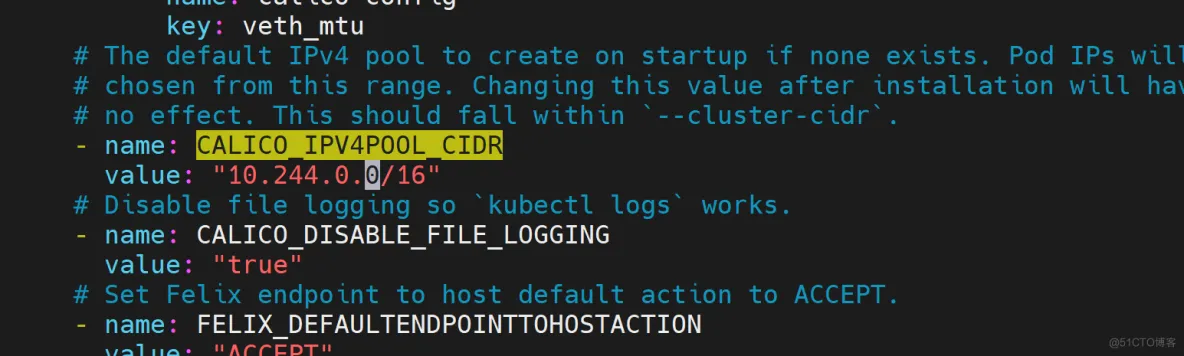

1. Download Calico

wget https://docs.projectcalico.org/manifests/calico.yaml --no-check-certificate

vim calico.yaml

...

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

...

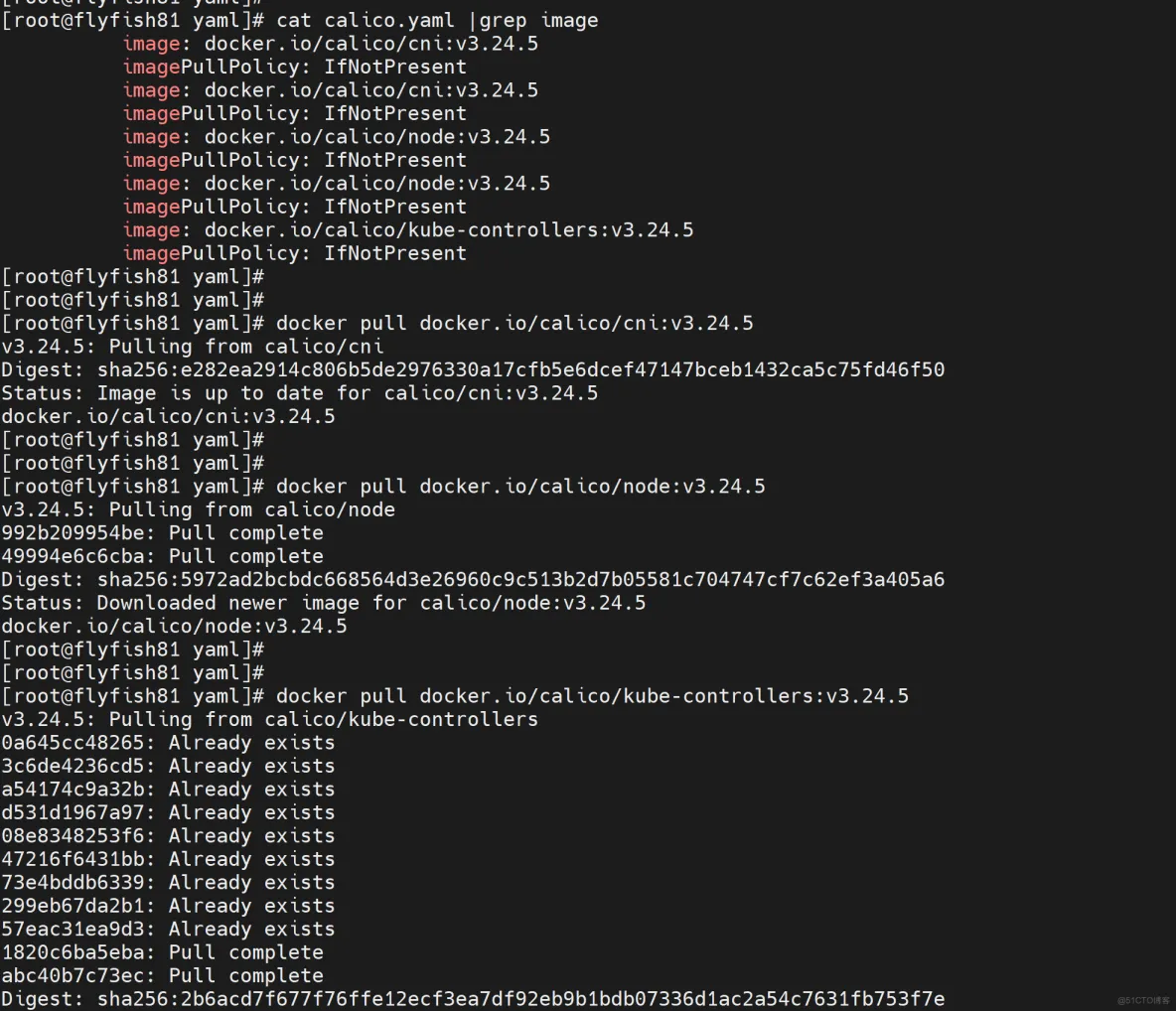
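The pool CIDR set here should match the `clusterCIDR` value (10.244.0.0/16) in kube-proxy-config.yml. As an alternative to editing by hand in vim, the sketch below makes the same change with sed. It assumes the downloaded manifest ships the two lines commented out with the default value `192.168.0.0/16`; if your copy of calico.yaml differs, the patterns will not match and the file is left unchanged. The helper name `set_calico_pool_cidr` is hypothetical.

```shell
# set_calico_pool_cidr (hypothetical helper): uncomments CALICO_IPV4POOL_CIDR
# in a Calico manifest and sets its value.
#   $1 = path to the manifest, $2 = pod network CIDR
# Assumption: the manifest contains the commented-out default
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
set_calico_pool_cidr() {
  manifest=$1
  cidr=$2
  # Dropping the leading "# " keeps the YAML indentation of both lines aligned.
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "s|#   value: \"192.168.0.0/16\"|  value: \"${cidr}\"|" \
    "$manifest"
}

# Usage (not executed here):
#   set_calico_pool_cidr calico.yaml 10.244.0.0/16
#   grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```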

cat calico.yaml |grep image

docker pull docker.io/calico/cni:v3.24.5

docker pull docker.io/calico/node:v3.24.5

docker pull docker.io/calico/kube-controllers:v3.24.5
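The image names above are read off the `grep image` output and typed in by hand. A small sketch, with the hypothetical helper `manifest_images`, that derives the unique image list from the manifest so the pulled tags always match the file:

```shell
# manifest_images (hypothetical helper): prints the unique image references
# found on "image:" lines of the manifest given as the first argument.
manifest_images() {
  # Scan every field so both "image: x" and "- image: x" lines are handled.
  awk '{ for (i = 1; i < NF; i++) if ($i == "image:") print $(i + 1) }' "$1" | sort -u
}

# Usage (not executed here):
#   manifest_images calico.yaml | xargs -r -n1 docker pull
```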

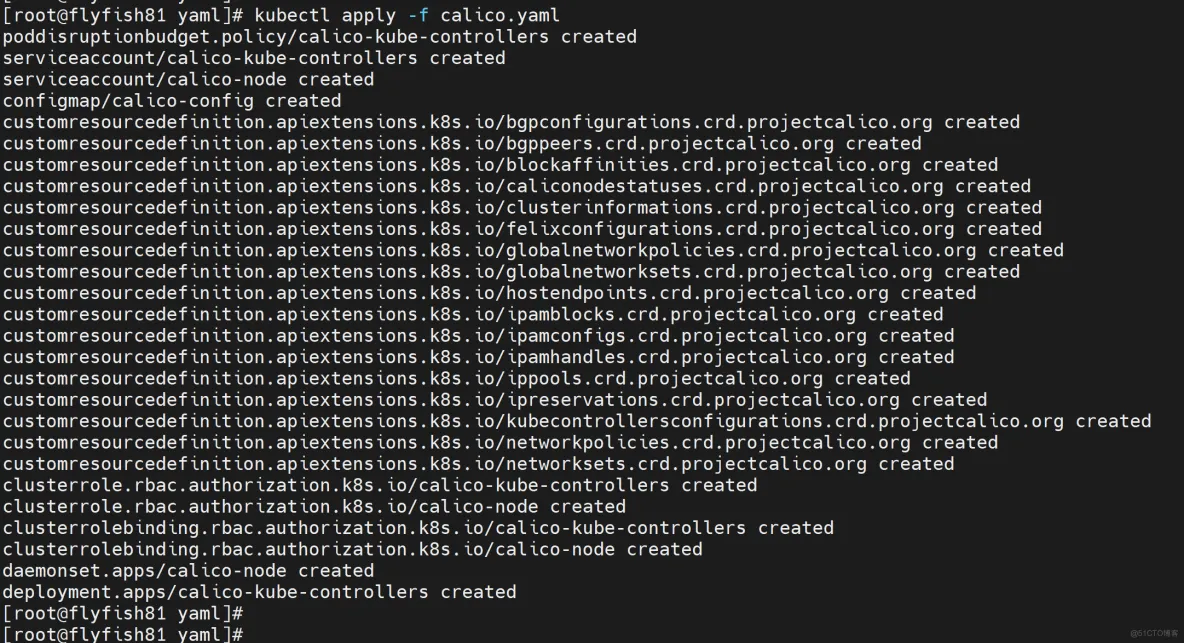

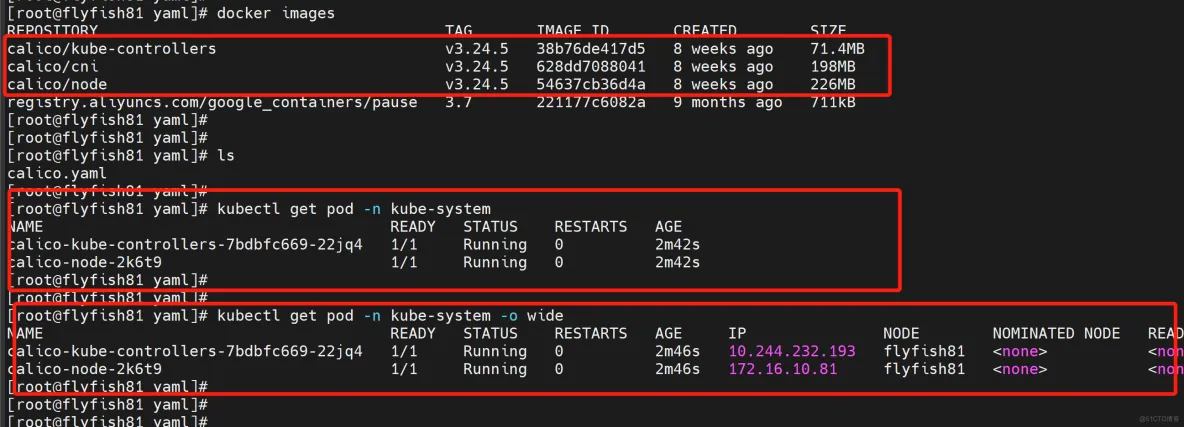

kubectl apply -f calico.yaml

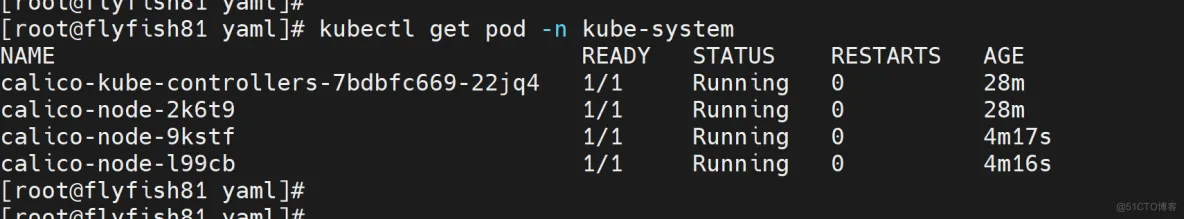

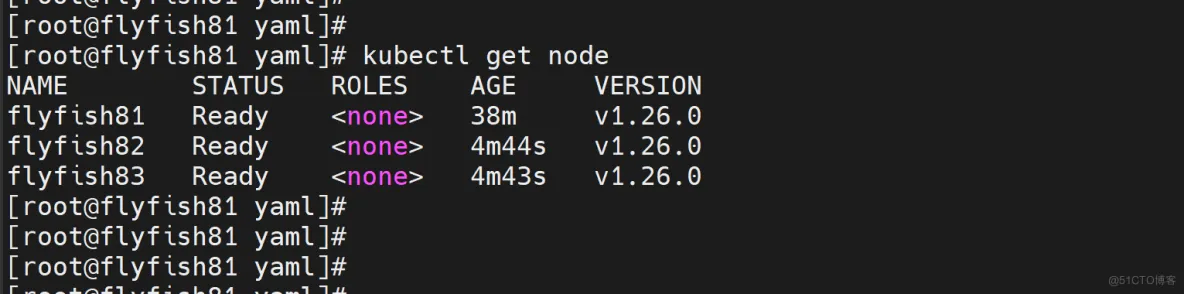

kubectl get pod -n kube-system

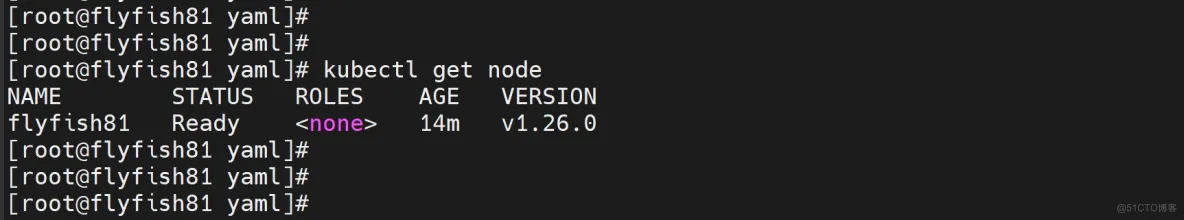

kubectl get node

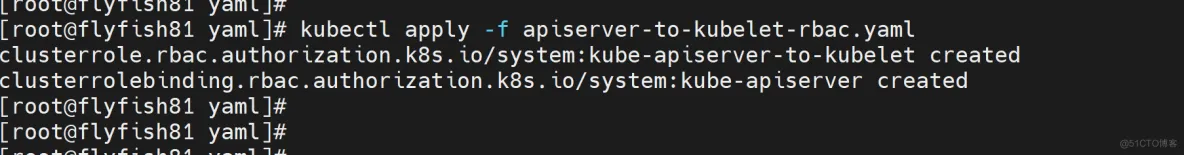

Authorize the apiserver to access the kubelet

Use case: commands such as kubectl logs

cat > apiserver-to-kubelet-rbac.yaml << EOF

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml

6: Add new worker nodes

6.1 Sync the configuration files

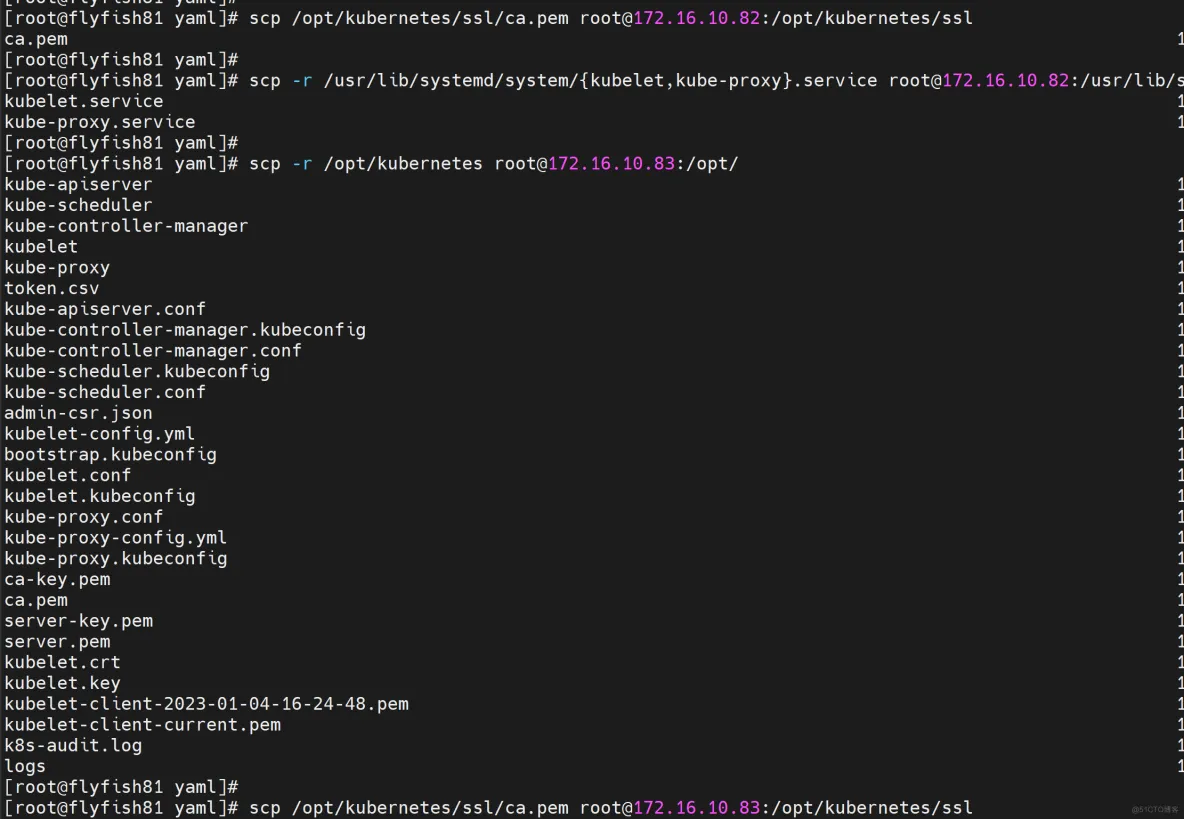

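1. Copy the files from the already deployed Node to the new nodes

On the Master node, copy the files the Worker Node needs to the new nodes 172.16.10.82 and 172.16.10.83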

scp -r /opt/kubernetes [email protected]:/opt/

scp /opt/kubernetes/ssl/ca.pem [email protected]:/opt/kubernetes/ssl

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service [email protected]:/usr/lib/systemd/system

scp -r /opt/kubernetes [email protected]:/opt/

scp /opt/kubernetes/ssl/ca.pem [email protected]:/opt/kubernetes/ssl

scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service [email protected]:/usr/lib/systemd/system

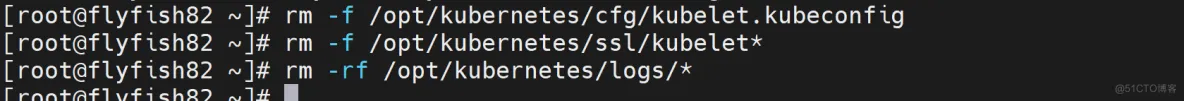

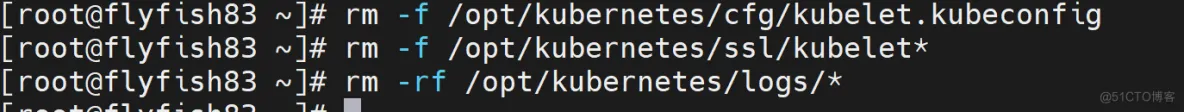

Delete the kubelet certificates and kubeconfig file

rm -rf /opt/kubernetes/cfg/kubelet.kubeconfig

rm -rf /opt/kubernetes/ssl/kubelet*

rm -rf /opt/kubernetes/logs/*

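Note: these files are generated automatically once the certificate request is approved and differ on every Node, so they must be deleted on each new node.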

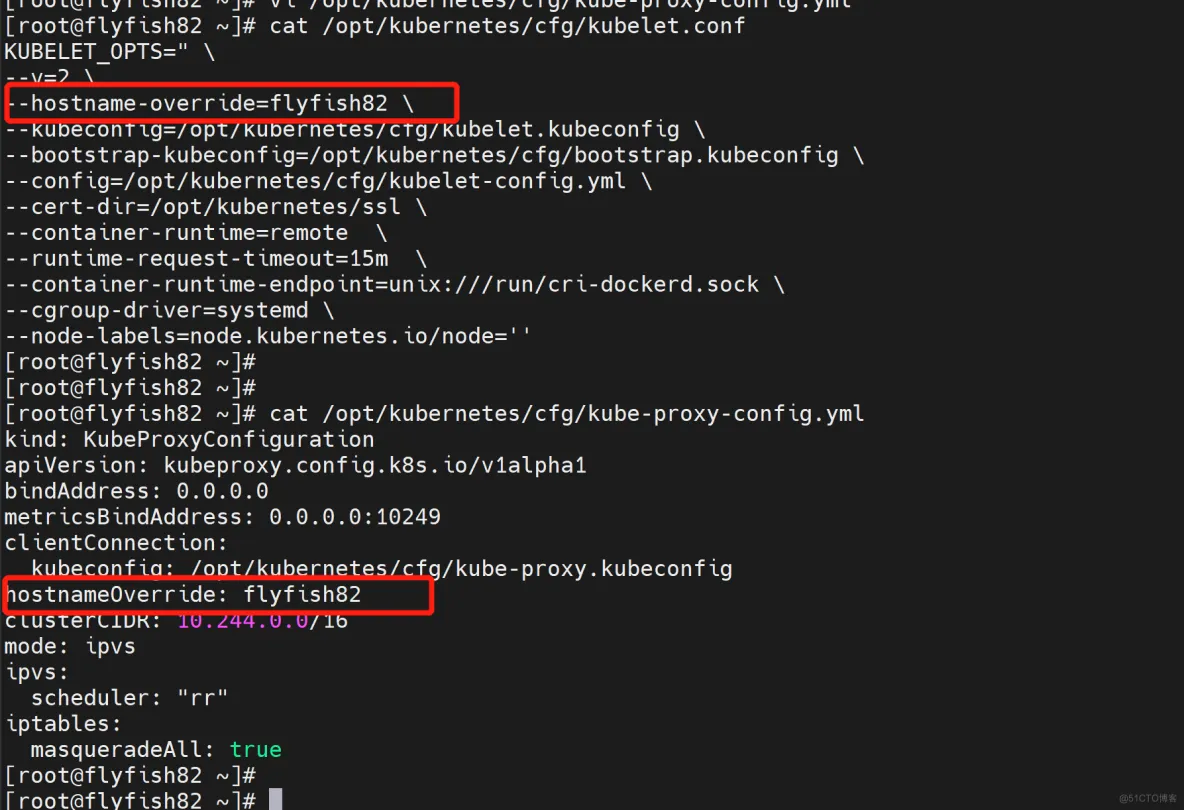

Change the hostname [set it to this node's hostname]

flyfish82:

vi /opt/kubernetes/cfg/kubelet.conf

--hostname-override=flyfish82

vi /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: flyfish82

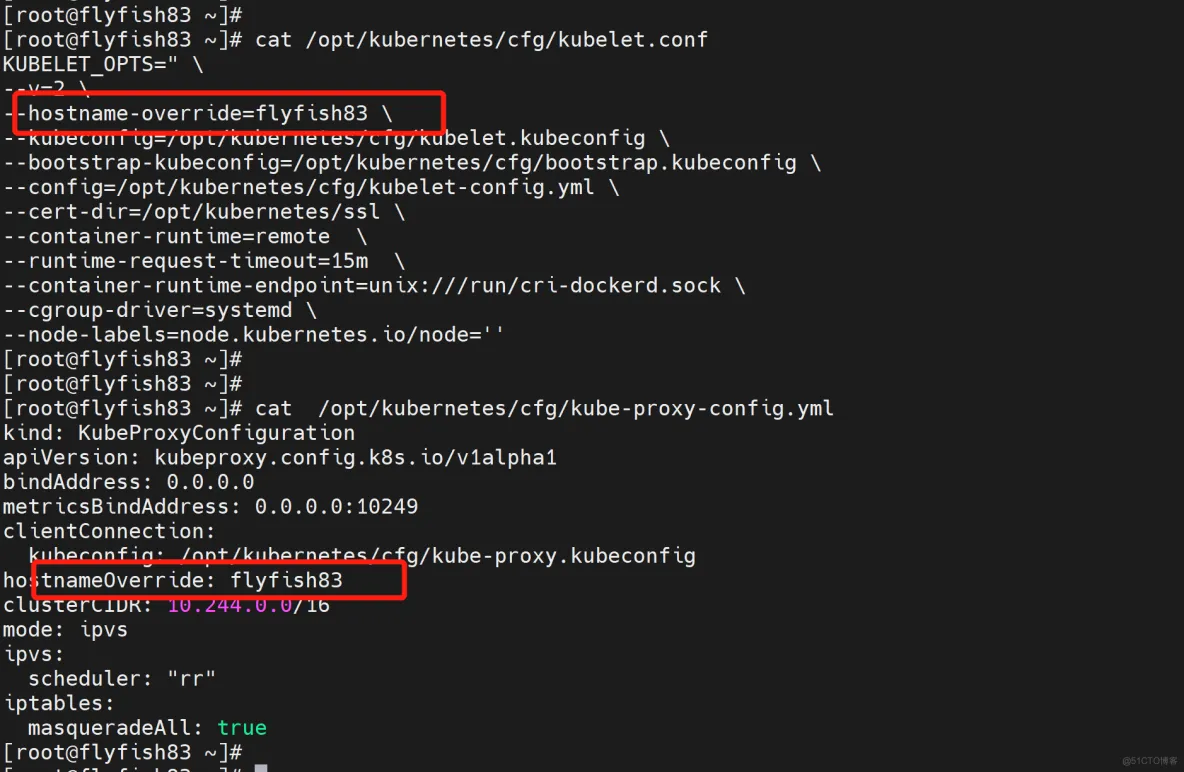

Change the hostname [set it to this node's hostname]

flyfish83:

vi /opt/kubernetes/cfg/kubelet.conf

--hostname-override=flyfish83

vi /opt/kubernetes/cfg/kube-proxy-config.yml

hostnameOverride: flyfish83

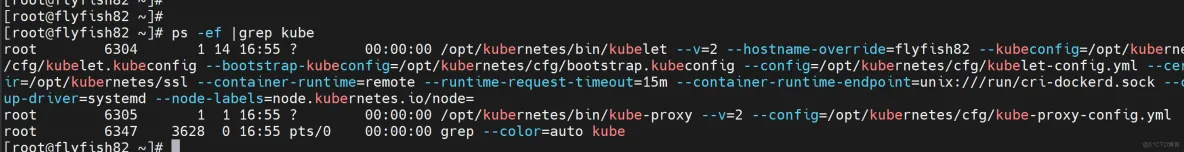

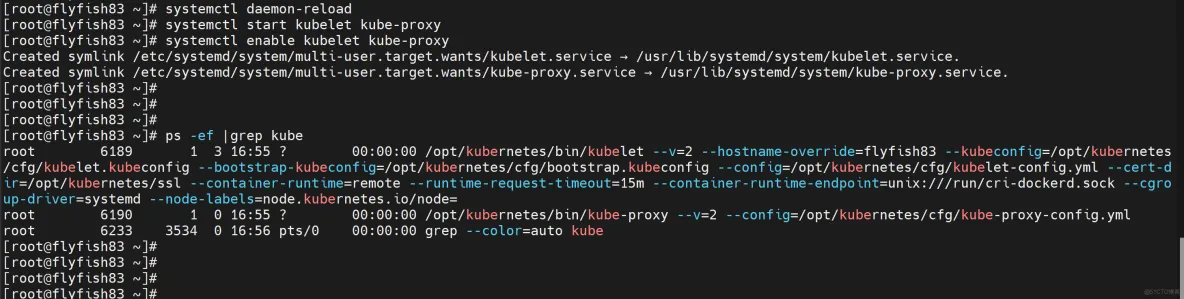
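The two hostname edits can also be scripted instead of made in vi. This is a minimal sketch, assuming the copied files still contain a `--hostname-override=` flag in kubelet.conf and a top-level `hostnameOverride:` key in kube-proxy-config.yml, as in the configuration shown earlier. The helper name `set_node_hostname` is hypothetical.

```shell
# set_node_hostname (hypothetical helper): rewrites the node name in the
# kubelet and kube-proxy configuration files.
#   $1 = configuration directory, $2 = hostname of the new node
set_node_hostname() {
  cfg_dir=$1
  node_name=$2
  # Replace only the flag value; anything after the next space is kept.
  sed -i "s|--hostname-override=[^ ]*|--hostname-override=${node_name}|" \
    "${cfg_dir}/kubelet.conf"
  # Replace the whole top-level hostnameOverride line.
  sed -i "s|^hostnameOverride:.*|hostnameOverride: ${node_name}|" \
    "${cfg_dir}/kube-proxy-config.yml"
}

# Usage on flyfish82 (not executed here):
#   set_node_hostname /opt/kubernetes/cfg flyfish82
```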

Start the services and enable them at boot

systemctl daemon-reload

systemctl start kubelet kube-proxy

systemctl enable kubelet kube-proxy

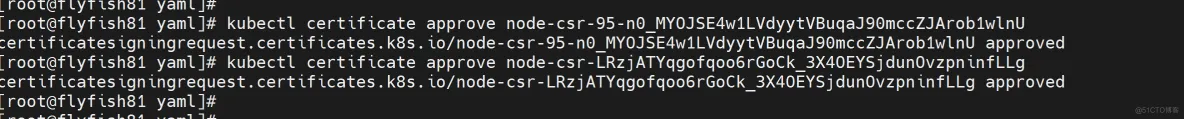

On the Master, approve the kubelet certificate requests from the new Nodes

kubectl get csr

# Approve the requests

kubectl certificate approve node-csr-95-n0_MYOJSE4w1LVdyytVBuqaJ90mccZJArob1wlnU

kubectl certificate approve node-csr-LRzjATYqgofqoo6rGoCk_3X4OEYSjdunOvzpninfLLg

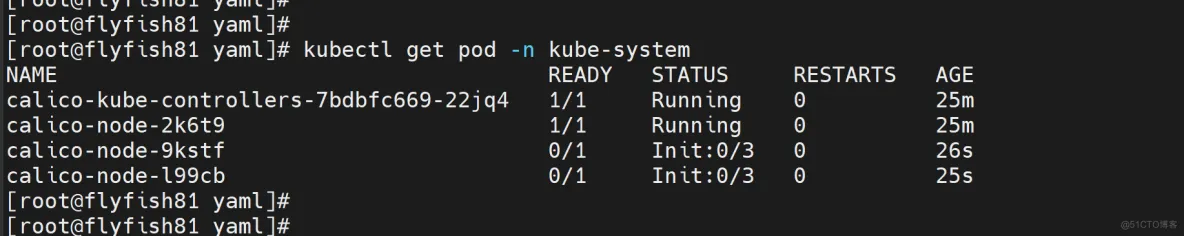

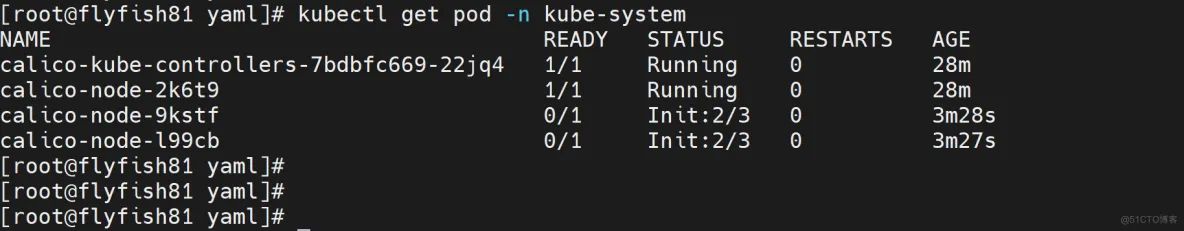

kubectl get pod -n kube-system

kubectl get node

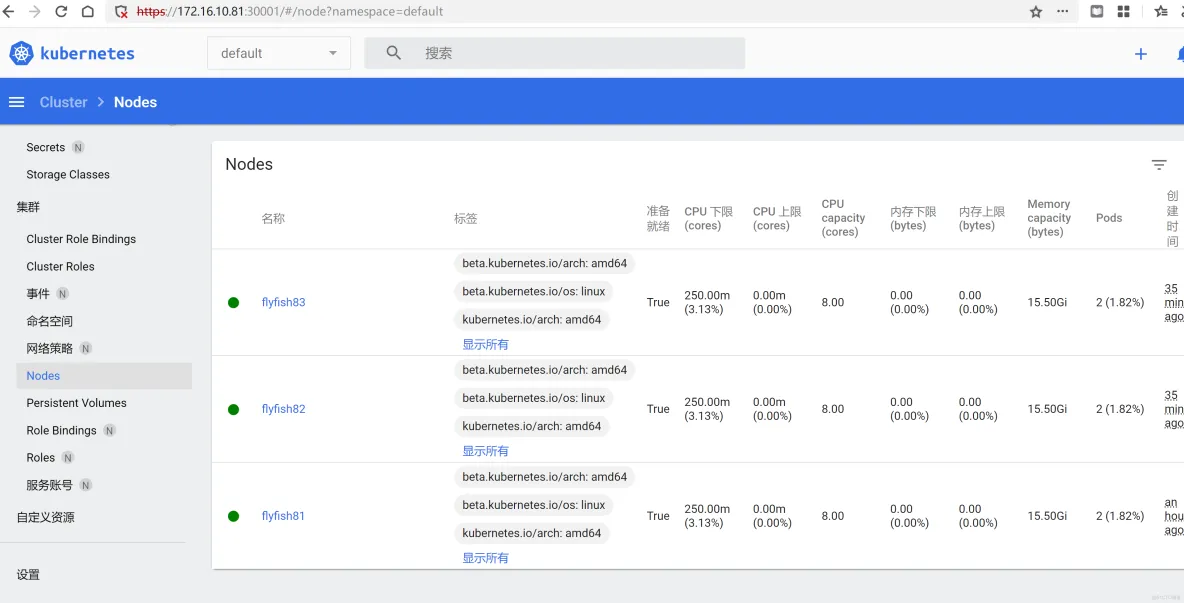

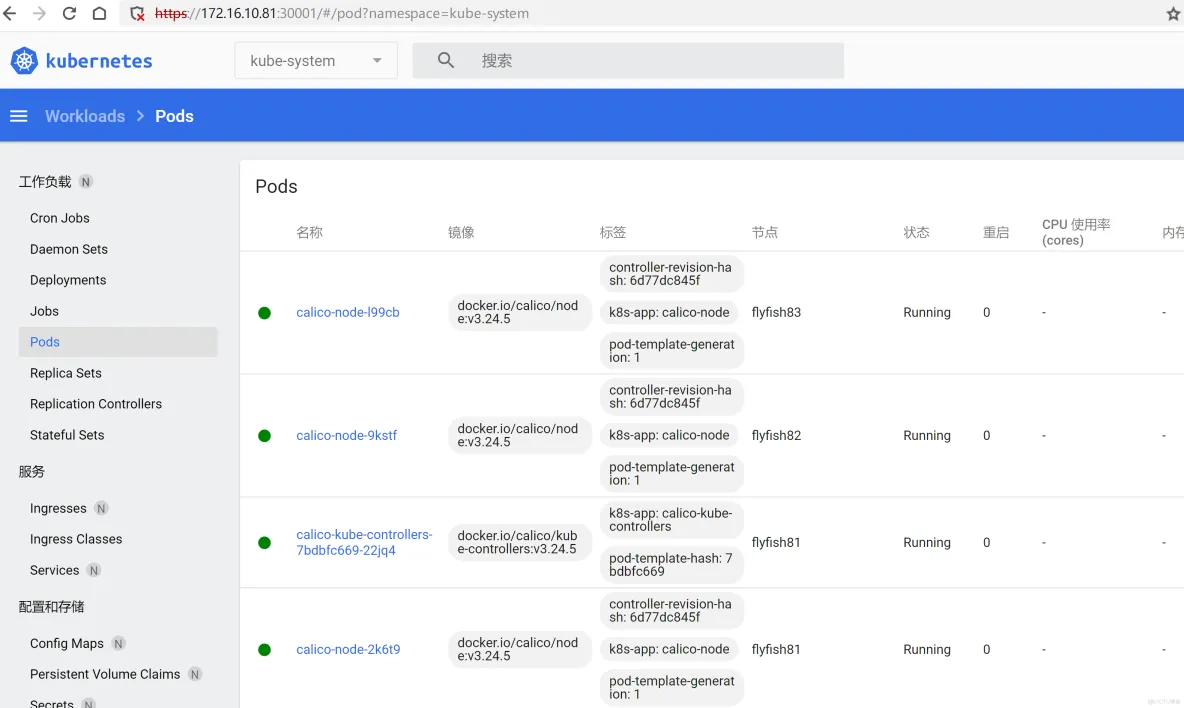

7: Deploy Dashboard and CoreDNS

7.1 Deploy Dashboard

GitHub:

https://github.com/kubernetes/dashboard/releases/tag/v2.7.0

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

The latest version at the time of writing is v2.7.0

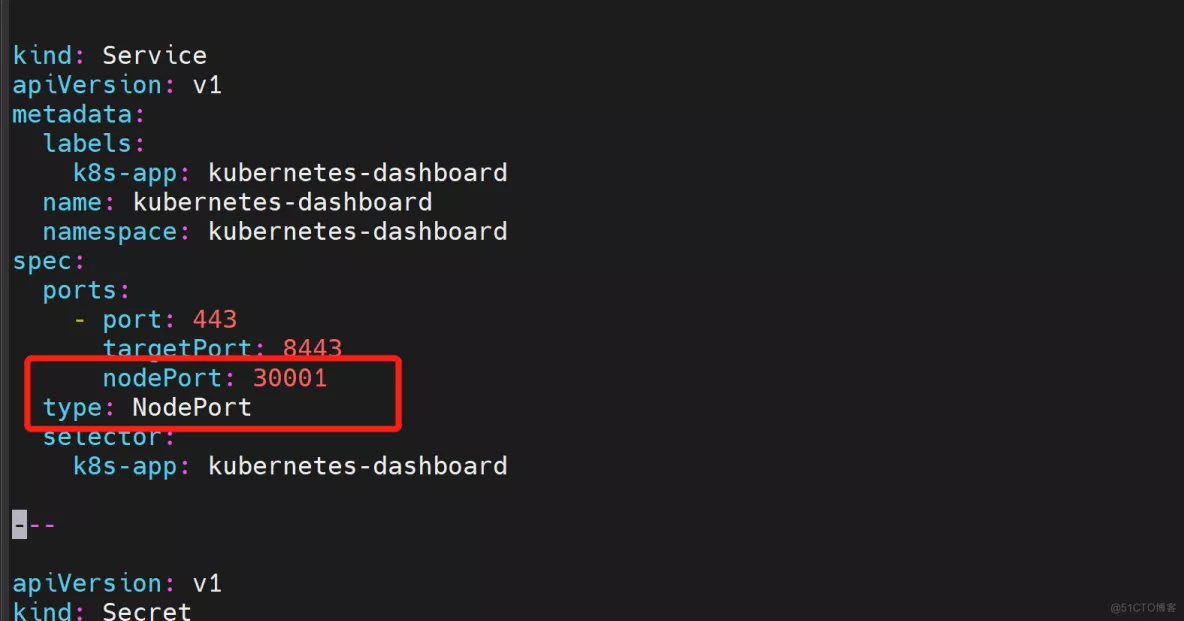

vim recommended.yaml

----

spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  type: NodePort
  selector:
    k8s-app: kubernetes-dashboard

----

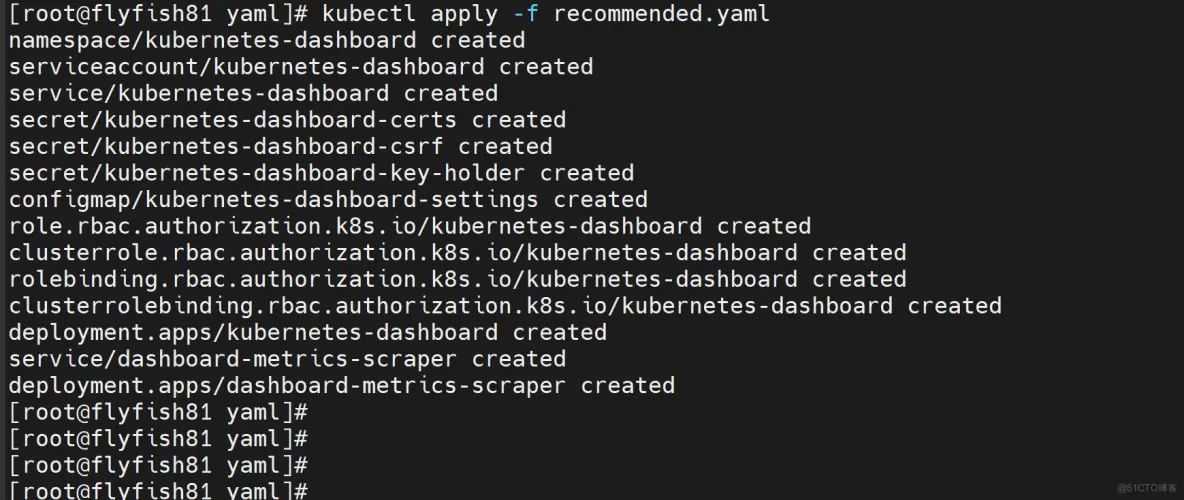

kubectl apply -f recommended.yaml

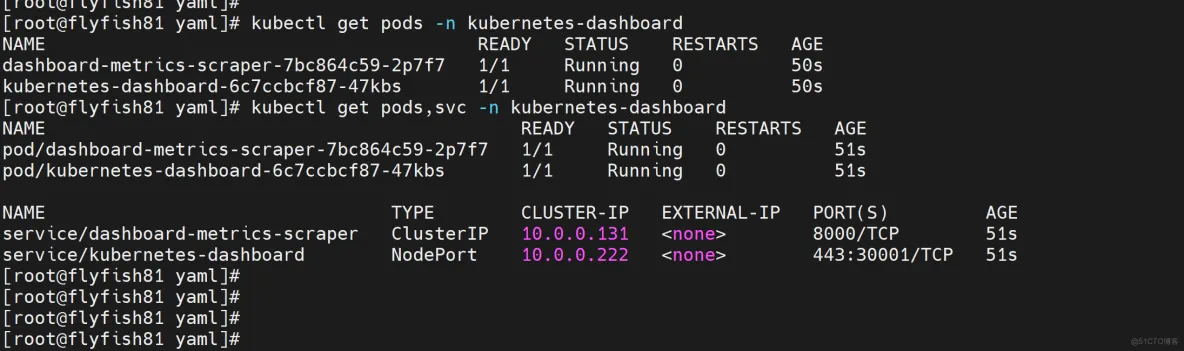

kubectl get pods -n kubernetes-dashboard

kubectl get pods,svc -n kubernetes-dashboard

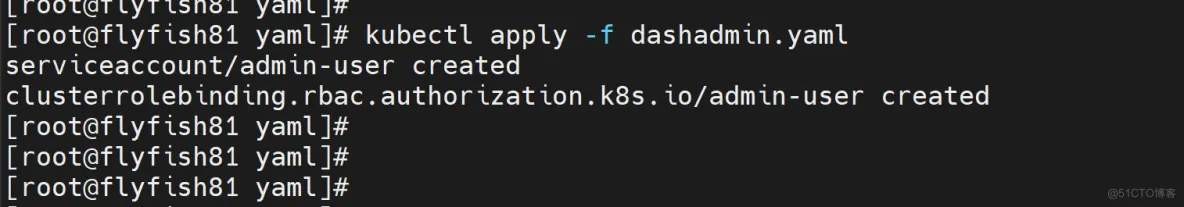

Create a service account and bind it to the built-in cluster-admin cluster role:

vim dashadmin.yaml

-----

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

-----

kubectl apply -f dashadmin.yaml

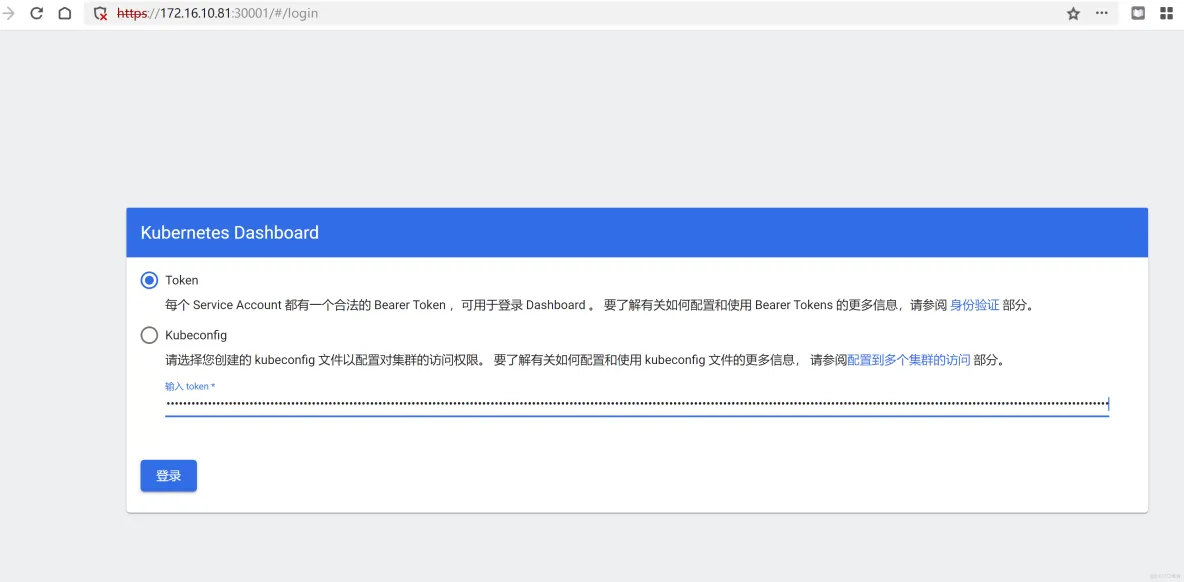

Create a login token for the user

kubectl -n kubernetes-dashboard create token admin-user

----

eyJhbGciOiJSUzI1NiIsImtpZCI6ImZHMnMzSjJtaHVTR0djRlpZQmtEX283LXN4VkxQZ2xPekR2aHAyaDNSXzQifQ.eyJhdWQiOlsiYXBpIiwiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjcyODI4MjU3LCJpYXQiOjE2NzI4MjQ2NTcsImlzcyI6ImFwaSIsImt1YmVybmV0ZXMuaW8iOnsibmFtZXNwYWNlIjoia3ViZXJuZXRlcy1kYXNoYm9hcmQiLCJzZXJ2aWNlYWNjb3VudCI6eyJuYW1lIjoiYWRtaW4tdXNlciIsInVpZCI6IjU5ZmFmZDA3LTk3NzctNGQ1ZC1iNjMxLWYyMTg4MDUwNWIyNyJ9fSwibmJmIjoxNjcyODI0NjU3LCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.gH-HAItASsHJGawQqYZzTZ6CUq0nQ23JF-fbpuHsdlu25HSCCO-ORWSFH2uO5cYE9TwvnbOf8lATbadCeIZ9SqKLKNy86LQe-G_xWUDmaZ2S1DvkPDiHrCf5U5X6Xq2_lAXoPpZTvevFAKaPMYAWqETK5t_Ul1SNqn2fI9pnmE4lliXwdA4oegEKa0td-1H1IYMked131eckiXiSnx1Xy5aoRGSo8fAZwkd7pUBK8Wd5AlWoLi82_65Gzhl37U--jWNgomyI3mHEzmiH4HLDZCxmB7pTXjGeq40vReshyXQjCWS6_F5sFaIgtbJcuF_RzvihI7GhC0_3E1dVnik1IQ

----
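The token is a JWT, and its middle segment is a base64url-encoded JSON payload. Decoding the sample token above gives `iat` 1672824657 and `exp` 1672828257, a lifetime of 3600 seconds, so a new token has to be created once it expires. A minimal sketch for inspecting a token, with the hypothetical helper name `jwt_payload`, assuming GNU `base64` is available:

```shell
# jwt_payload (hypothetical helper): prints the decoded JSON payload (the
# second dot-separated segment) of the JWT passed as the first argument.
jwt_payload() {
  # Take segment 2 and map the base64url alphabet back to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing "=" padding; restore it before decoding.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Usage (not executed here):
#   jwt_payload "$(kubectl -n kubernetes-dashboard create token admin-user)"
```

The helper only decodes the payload and does not verify the signature, so it is suitable for inspection and nothing more.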

Open the web UI

https://172.16.10.81:30001

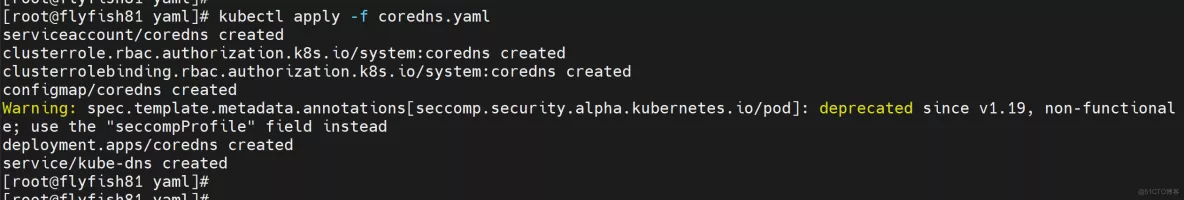

7.2 Deploy CoreDNS

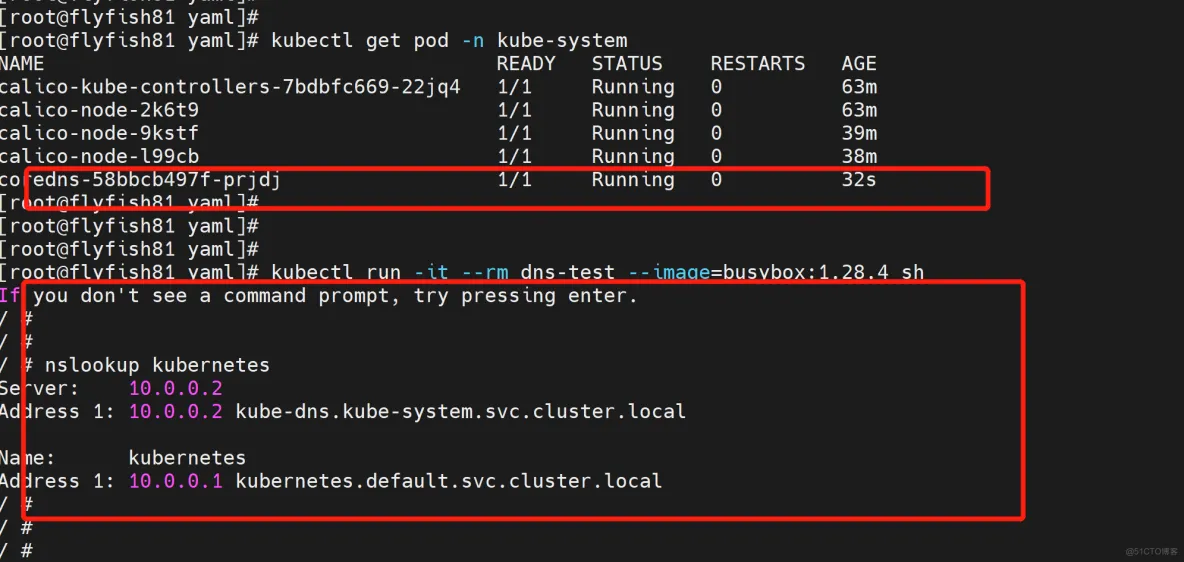

kubectl apply -f coredns.yaml

Test:

kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

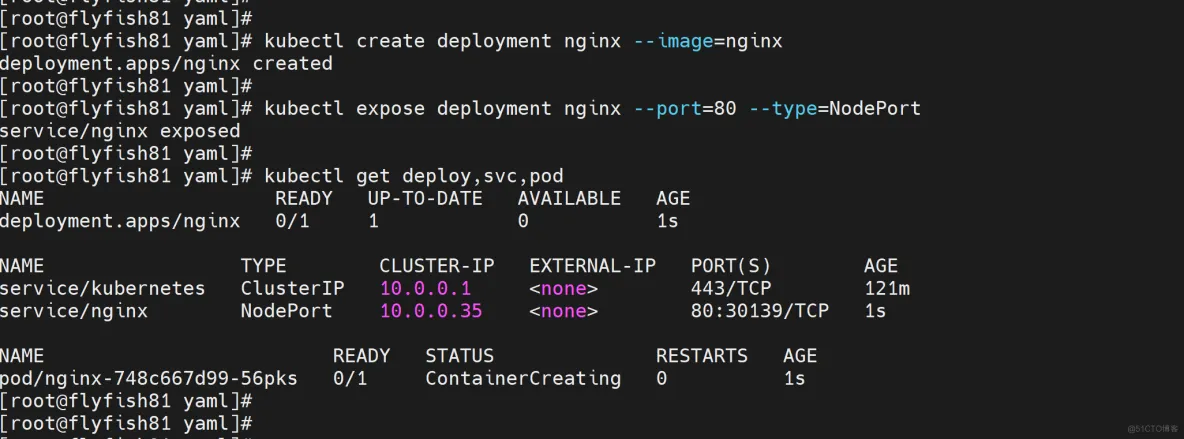

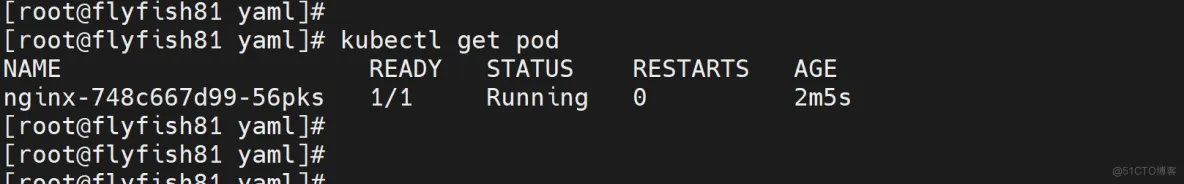

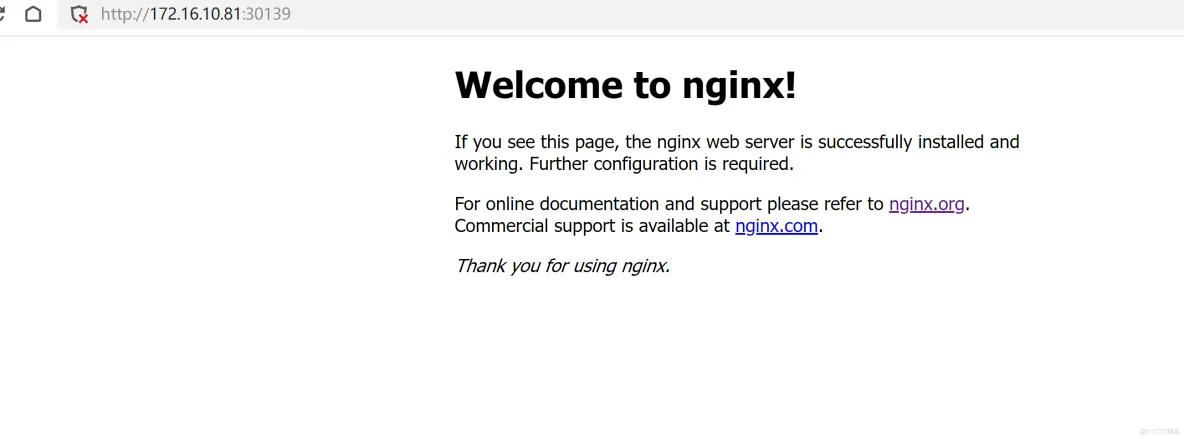

Create an nginx pod as a test:

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get deploy,svc,pod
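Since `kubectl expose` assigns the NodePort automatically, it has to be read back from the service before the deployment can be reached from outside the cluster. A minimal sketch, assuming the default column layout of `kubectl get svc` (PORT(S) in the fifth column). The helper name `node_port` is hypothetical.

```shell
# node_port (hypothetical helper): reads `kubectl get svc` table output on
# stdin and prints the NodePort of the service named in the first argument.
node_port() {
  # PORT(S) is column 5 and looks like "80:31234/TCP"; split on ":" and "/".
  awk -v svc="$1" '$1 == svc { split($5, parts, "[:/]"); print parts[2] }'
}

# Usage on the master (not executed here):
#   port=$(kubectl get svc | node_port nginx)
#   curl -I "http://172.16.10.81:${port}"
```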
