Meta’s AI-powered audio codec promises 10x compression over MP3

source link: https://arstechnica.com/information-technology/2022/11/metas-ai-powered-audio-codec-promises-10x-compression-over-mp3/

Can you hear me now?

Technique could allow high-quality calls and music on low-quality connections.

Benj Edwards - 11/1/2022, 9:18 PM

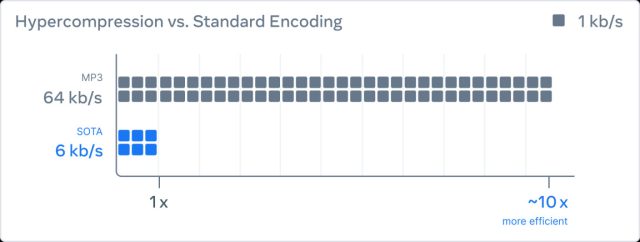

Last week, Meta announced an AI-powered audio compression method called "EnCodec" that can reportedly compress audio to about one-tenth the size of a 64 kbps MP3 with no loss in quality. Meta says this technique could dramatically improve the sound quality of speech on low-bandwidth connections, such as phone calls in areas with spotty service. The technique also works for music.
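To put the headline ratio in concrete terms, here is a rough back-of-the-envelope sketch. It assumes constant-bitrate streams, ignores container overhead, and uses a 3-minute clip chosen purely for illustration; the 6 kbps figure is the EnCodec operating point that the paper compares against MP3 at 64 kbps. The helper name `size_bytes` is hypothetical.

```python
def size_bytes(bitrate_kbps: float, seconds: float) -> float:
    """Approximate size of a constant-bitrate stream in bytes.

    kilobits per second -> bits per second -> total bits -> bytes.
    """
    return bitrate_kbps * 1000 * seconds / 8


CLIP_SECONDS = 180  # assumed 3-minute clip, for illustration only

mp3_size = size_bytes(64, CLIP_SECONDS)      # MP3 at 64 kbps
encodec_size = size_bytes(6, CLIP_SECONDS)   # EnCodec at 6 kbps
ratio = mp3_size / encodec_size

print(f"MP3 @ 64 kbps:    {mp3_size / 1000:.0f} kB")
print(f"EnCodec @ 6 kbps: {encodec_size / 1000:.0f} kB")
print(f"Size ratio:       {ratio:.2f}x")
```

Under these assumptions the clip shrinks from about 1,440 kB to about 135 kB, which is where the "roughly 10x" framing comes from.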

Meta debuted the technology on October 25 in a paper titled "High Fidelity Neural Audio Compression," authored by Meta AI researchers Alexandre Défossez, Jade Copet, Gabriel Synnaeve, and Yossi Adi. Meta also summarized the research on its blog devoted to EnCodec.

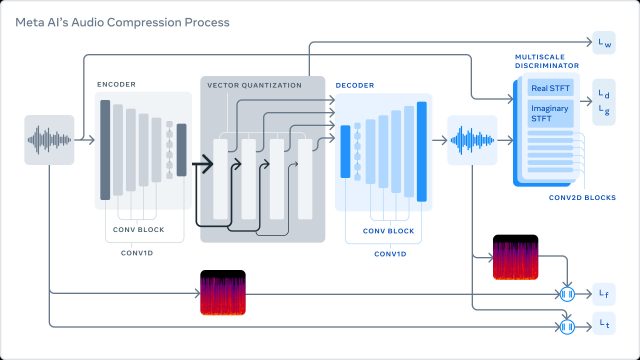

Meta describes its method as a three-part system trained to compress audio to a desired target size. First, the encoder transforms uncompressed data into a lower frame rate "latent space" representation. The "quantizer" then compresses the representation to the target size while keeping track of the most important information that will later be used to rebuild the original signal. (This compressed signal is what gets sent through a network or saved to disk.) Finally, the decoder turns the compressed data back into audio in real time using a neural network on a single CPU.
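The quantizer step can be illustrated with a toy residual vector quantizer, the technique the paper names as RVQ. This is only a sketch under loud assumptions: the two tiny codebooks are hand-picked rather than learned, the "latent" is a made-up 2-D vector, and the function names are hypothetical. It is not Meta's implementation. Each stage picks the codeword nearest to whatever error the previous stages left behind, and only the chosen indices would be transmitted or stored.

```python
from typing import List, Sequence

Vector = Sequence[float]
Codebook = Sequence[Vector]


def nearest(codebook: Codebook, v: Vector) -> int:
    """Index of the codeword with the smallest squared distance to v."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - x) ** 2 for c, x in zip(codebook[i], v)),
    )


def rvq_encode(codebooks: Sequence[Codebook], v: Vector) -> List[int]:
    """Quantize v in stages; each stage encodes the remaining residual."""
    residual = list(v)
    indices = []
    for codebook in codebooks:
        i = nearest(codebook, residual)
        indices.append(i)
        residual = [r - c for r, c in zip(residual, codebook[i])]
    return indices


def rvq_decode(codebooks: Sequence[Codebook], indices: Sequence[int]) -> List[float]:
    """Rebuild the approximation by summing the chosen codewords."""
    out = [0.0] * len(codebooks[0][0])
    for codebook, i in zip(codebooks, indices):
        out = [o + c for o, c in zip(out, codebook[i])]
    return out


# Hand-picked toy codebooks (assumption): one coarse stage, one fine stage.
coarse = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
fine = [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, 0.0]]
codebooks = [coarse, fine]

latent = [0.9, 0.2]                      # made-up latent vector
codes = rvq_encode(codebooks, latent)    # what would be sent or saved
approx = rvq_decode(codebooks, codes)    # what the decoder starts from
print(codes, approx)
```

With four entries per codebook, each stage costs 2 bits, so this toy vector is stored in 4 bits. Adding or dropping stages is one way such a scheme can trade bitrate against reconstruction error.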

Meta's use of discriminators proves key to creating a method for compressing the audio as much as possible without losing key elements of a signal that make it distinctive and recognizable:

"The key to lossy compression is to identify changes that will not be perceivable by humans, as perfect reconstruction is impossible at low bit rates. To do so, we use discriminators to improve the perceptual quality of the generated samples. This creates a cat-and-mouse game where the discriminator’s job is to differentiate between real samples and reconstructed samples. The compression model attempts to generate samples to fool the discriminators by pushing the reconstructed samples to be more perceptually similar to the original samples."
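The quoted passage does not say which loss function drives this cat-and-mouse game. As a minimal sketch, assuming a hinge-style adversarial loss (a common choice in GAN training, not something the article confirms), the two opposing objectives might look like this. The function names and the example scores are hypothetical; a "score" stands for the discriminator's output, where higher means "looks real".

```python
from typing import Sequence


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def discriminator_loss(real_scores: Sequence[float],
                       fake_scores: Sequence[float]) -> float:
    """Hinge loss for the discriminator.

    It is penalized when real audio scores below +1
    or reconstructed audio scores above -1.
    """
    real_term = mean([max(0.0, 1.0 - s) for s in real_scores])
    fake_term = mean([max(0.0, 1.0 + s) for s in fake_scores])
    return real_term + fake_term


def codec_adversarial_loss(fake_scores: Sequence[float]) -> float:
    """Hinge loss for the compression model.

    It is penalized when its reconstructions score below +1,
    i.e. when the discriminator is not fooled.
    """
    return mean([max(0.0, 1.0 - s) for s in fake_scores])


# Made-up discriminator scores for two real and two reconstructed clips.
real = [2.0, 0.5]
fake = [-1.5, 0.0]

print(discriminator_loss(real, fake))   # discriminator's objective
print(codec_adversarial_loss(fake))     # codec's opposing objective
```

The two losses pull in opposite directions on the reconstructed samples: the discriminator wants their scores low, while the compression model wants them high, which is the tension the quote describes.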

It's worth noting that using a neural network for audio compression and decompression is far from new—especially for speech compression—but Meta's researchers claim they are the first group to apply the technology to 48 kHz stereo audio (slightly better than CD's 44.1 kHz sampling rate), which is typical for music files distributed on the Internet.

As for applications, Meta says this AI-powered "hypercompression of audio" could support "faster, better-quality calls" in bad network conditions. And, of course, being Meta, the researchers also mention EnCodec's metaverse implications, saying the technology could eventually deliver "rich metaverse experiences without requiring major bandwidth improvements."

Beyond that, maybe we'll also get really small music audio files out of it someday. For now, Meta's new tech remains in the research phase, but it points toward a future where high-quality audio can use less bandwidth, which would be great news for mobile broadband providers with overburdened networks from streaming media.

Promoted Comments

Astralock wrote: "So what is the difference between this and Opus, other than one is a standard and the other by Meta?"

Opus is two different codecs, chosen between based on the input. There is a voice codec (SILK) used for voice data that models the range of sounds that human speech contains and then essentially transmits which voice sounds are used. Then there is a music codec (CELT), which is based on a modified discrete cosine transform, similar to Vorbis, AAC, or WMA. The Fourier-transformed data is then compared to a model of human auditory perception, and any inaudible detail is discarded (or rather, quantized coarsely).

This codec is organized somewhat differently. Looking quickly at their paper, it looks like a convolutional neural network that uses vector quantization on the network output. Training uses a Fourier transform, but unless I'm misunderstanding something, it doesn't look like the codec itself does any frequency analysis at all aside from what the network has learned.

brendanlong wrote: "Why are they comparing this to an ancient audio compression format (MP3) instead of something modern like Opus? I can't even tell if this is good or not."

They do compare to Opus:

Quote: "Results for EnCodec working at 6 kbps, EnCodec with Residual Vector Quantization (RVQ) at 6 kbps, and Opus at 6 kbps, and MP3 at 64 kbps are reported in Table 4. EnCodec is significantly outperforms Opus at 6kbps and is comparable to MP3 at 64kbps, while EnCodec at 12kpbs achieve comparable performance to EnCodec at 24kbps."

https://arxiv.org/pdf/2210.13438.pdf

That said, it is also a lot slower. Opus is designed to be very low complexity so that it can be used on low-power mobile devices running at ~25-30 MHz, whereas this is only a little faster than real time on a 4600 MHz Coffee Lake CPU. The comparison is therefore a little unfair to Opus, which is intentionally doing orders of magnitude less processing for the sake of efficiency.
