How IT leaders can embrace responsible AI

source link: https://siliconangle.com/2022/09/11/leaders-can-embrace-responsible-ai/

When artificial intelligence augments or even replaces human decisions, it amplifies good and bad outcomes alike.

There are numerous risks and potential harms created by AI systems, including bias and discrimination, financial or reputational loss, lack of transparency and explainability, or invasions of security and privacy. Responsible AI enables the right outcomes by resolving dilemmas rooted in delivering value versus tolerating risks.

Responsible AI must be part of an organization’s wider AI strategy. Here are the steps that chief information officers and other information technology leaders, in partnership with data and analytics leadership, can take to move their organization toward a vision of responsible AI.

Define responsible AI

Responsible AI is an umbrella term for the aspects of making appropriate business and ethical choices when adopting AI. It encompasses decisions around business and societal value, risk, trust, transparency, fairness, bias mitigation, explainability, accountability, safety, privacy, regulatory compliance and more.

Before organizations design their AI strategy, they must define what responsible AI means within the context of their organization’s environment. There are many facets of responsible AI, but Gartner finds five principles to be the most common across different organizations.

These principles define responsible AI as that which is:

- Human-centric and socially beneficial, serving human goals and supporting ethical and more efficient automation while relying on a human touch and common sense.

- Fair so that individuals or groups are not systematically disadvantaged through AI-driven decisions, while addressing dissolution, isolation and polarization among users.

- Transparent and explainable to build trust, confidence and understanding in AI systems.

- Secure and safe to protect the interests and privacy of organizations and people while they interact with AI systems across different jurisdictions.

- Accountable to create channels for recourse and establish rights for individuals.

Understand how responsible AI benefits the business

A key component of a responsible AI journey entails making the case for changes to key stakeholders. Having a clear strategy for responsible AI and communicating the business benefits to executive leadership will help IT reach the goals that it set out to achieve with AI. This requires understanding how responsible AI can benefit the business.

Responsible AI supports the business by helping tackle uncertainties to maintain trust in AI. For example, responsible AI helps organizations proactively stay ahead of the regulatory curve, benefiting the enterprise’s credibility with customers, partners and other key stakeholders.

Across the business, responsible AI generates value by increasing AI adoption while tracking how risk exposure shifts. This enhances employee, customer and societal value through increased safety, reliability and sustainability, helping to make AI more human-centric and inclusive.

Finally, a responsible AI program supports the development and execution teams. Using methods and techniques that minimize sampling bias, respect user privacy and are explainable can help ensure individual fairness, as well as fairness in representation, accuracy and errors across groups. AI developers can make models secure and resilient through thorough cycles of testing, retesting and updating against attacks and vulnerabilities, backed by stress testing and validation.
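As one illustration of what checking fairness in representation, accuracy and errors can look like in practice, here is a minimal Python sketch. It is not a Gartner method. The function names (`group_metrics`, `selection_rate_gap`), the sample records and the choice of metrics are assumptions made for this example, and a real program would choose metrics suited to its own context.

```python
from collections import defaultdict


def group_metrics(records):
    """Compute per-group selection rate, accuracy and false positive rate.

    `records` is an iterable of (group, y_true, y_pred) tuples with
    binary labels (0 or 1). The layout is a hypothetical one chosen
    for this sketch.
    """
    counts = defaultdict(
        lambda: {"n": 0, "selected": 0, "correct": 0, "fp": 0, "neg": 0}
    )
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["selected"] += y_pred               # positive decisions
        c["correct"] += int(y_true == y_pred)
        if y_true == 0:
            c["neg"] += 1
            c["fp"] += y_pred                 # positive decision on a true negative

    metrics = {}
    for group, c in counts.items():
        metrics[group] = {
            "selection_rate": c["selected"] / c["n"],
            "accuracy": c["correct"] / c["n"],
            # Undefined when a group has no true negatives.
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
        }
    return metrics


def selection_rate_gap(metrics):
    """Largest difference in selection rate between any two groups."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Made-up decisions for two groups, for illustration only.
records = [
    ("A", 1, 1), ("A", 0, 1), ("A", 0, 0), ("A", 1, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 0),
]
metrics = group_metrics(records)
gap = selection_rate_gap(metrics)

for group, m in sorted(metrics.items()):
    print(group, m)
print("selection rate gap:", gap)
```

In this made-up data both groups see the same accuracy, yet group A receives positive decisions far more often and bears all of the false positives. That is why the sketch reports several metrics side by side rather than a single score.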

Create a responsible AI roadmap

Once the business benefits are well-understood, craft a responsible AI roadmap by identifying gaps in the current strategy.

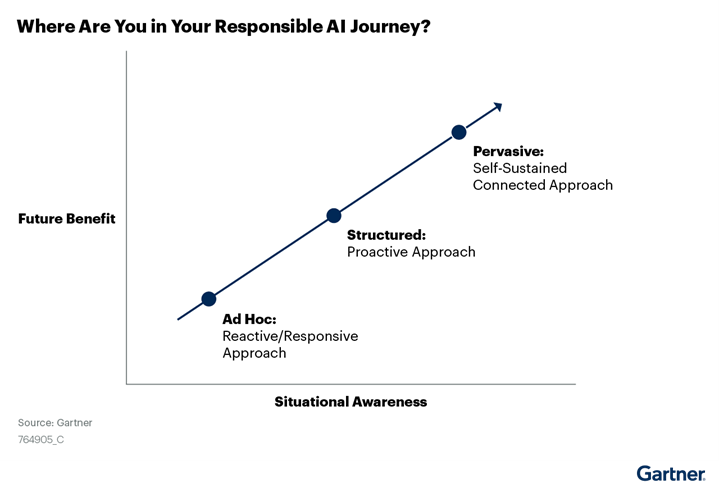

Organizations usually start with an ad hoc approach, which is reactive in nature and addresses the challenges posed by AI systems as they arise. This acts as a temporary fix and often becomes increasingly difficult to sustain over time, as organizations must play catch-up with existing regulations to ensure compliance. Although this approach may work for smaller organizations, it may not be viable as systems become more intertwined and complex.

The next evolutionary step would be a proactive approach, where IT evaluates and accepts structured risk. However, to get the most benefit out of AI, organizations must embrace a pervasive approach that examines current risks against future or deferred benefits or risks.

Once organizations assess their current state of responsible AI, IT leaders can start crafting their goals and vision. Start with a foundational path, revisiting the strategy and vision for AI and working to shift from an ad hoc to a structured approach. Plan for the development and execution of responsible AI around existing resources and focus on communicating business value to key stakeholders. Lay the foundations for AI trust and enhance adoption across the organization.

After the foundational path is achieved, organizations can embark on the stability path. Focus on consistently becoming more proactive and increase testing and validation capabilities to ensure strong security and privacy.

Finally, organizations that have evolved along their responsible AI journey can begin on the transformational path, becoming thought leaders that support AI for good. The transformational stage is for organizations that want to create a self-sustaining approach to dealing with AI, where conversations and actions are focused on human centricity and sustainability.

Any organization embarking on an AI initiative must follow this structured approach toward understanding, utilizing and implementing responsible AI practices contextualized to their business. The journey toward responsible AI will be an evolving one as new challenges arise, but it becomes increasingly important as AI becomes more pervasive across business and society.

Farhan Choudhary is a principal analyst at Gartner Inc. researching the operationalization of machine learning and AI models, hiring and upskilling, and techniques to operationalize and achieve success with data science, machine learning and AI initiatives. He wrote this article for SiliconANGLE. Gartner analysts will provide additional insights on AI and other key topics for CIOs at Gartner IT Symposium/Xpo 2022, taking place Oct. 17-20 in Orlando, Florida.

Image: Gartner
