Kubernetes Standardized Deployment Guide

source link: https://blog.51cto.com/wang/5381909


Reviewer: Big Data Operations Team

Importance: Medium

Urgency: Medium

Prepared by: 王昱翔

Submission date: December 28, 2020

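I. Using Kubernetes

1.1 Kubernetes overview and architecture

1.1.1 Kubernetes overview:

Kubernetes, abbreviated K8s (the "8" stands for the eight letters "ubernete"), is an open-source system for managing containerized applications across multiple hosts in a cloud platform. Its goal is to make deploying containerized applications simple and powerful. It provides mechanisms for deploying, planning, updating, and maintaining applications.

Kubernetes is a container orchestration engine open-sourced by Google. It supports automated deployment, large-scale scaling, and management of containerized applications. In production, an application is usually deployed as multiple instances so that requests can be load-balanced across them.

In Kubernetes, we can create multiple containers, each running one application instance. The built-in load-balancing mechanism then manages, discovers, and exposes this group of instances, so operators do not have to configure these details by hand.

1.1.2 Kubernetes features:

Portable: supports public, private, hybrid, and multi-cloud environments

Extensible: modular, pluggable, mountable, and composable

Automated: automatic deployment, restart, replication, and scaling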

1.2 Kubernetes architecture

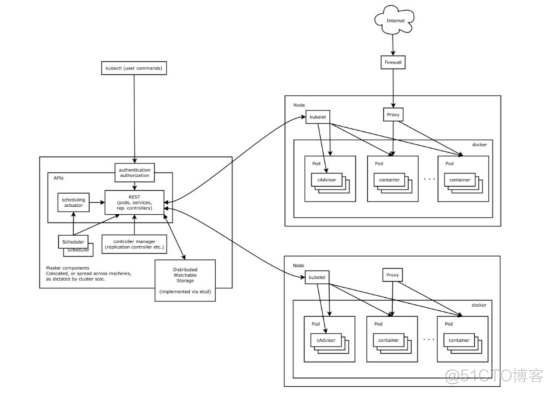

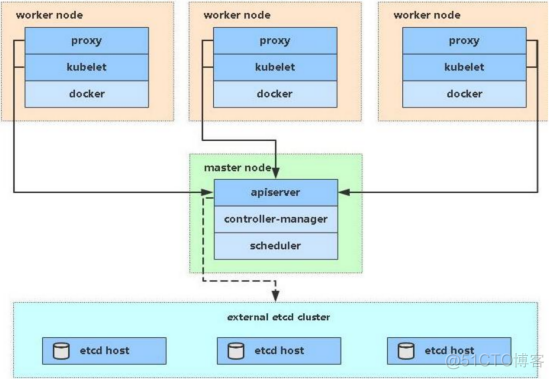

Kubernetes集群包含有节点代理kubelet和Master组件(APIs, scheduler, etc),一切都基于分布式的存储系统。下面这张图是Kubernetes的架构图。

1.3 kubernetes 组件特点

在这张系统架构图中,我们把服务分为运行在工作节点上的服务和组成集群级别控制板的服务。

Kubernetes节点有运行应用容器必备的服务,而这些都是受Master的控制。

每次个节点上当然都要运行Docker。Docker来负责所有具体的映像下载和容器运行。

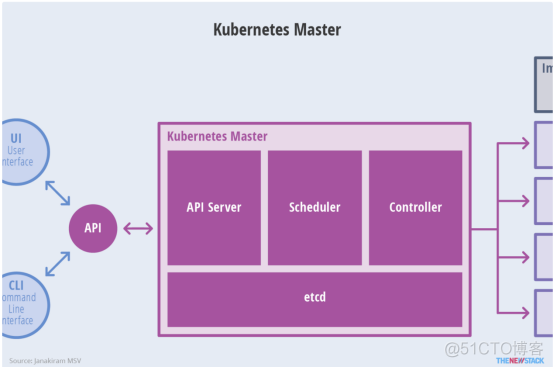

Kubernetes主要由以下几个核心组件组成:

etcd保存了整个集群的状态;

apiserver提供了资源操作的唯一入口,并提供认证、授权、访问控制、API注册和发现等机制;

controller manager负责维护集群的状态,比如故障检测、自动扩展、滚动更新等;

scheduler负责资源的调度,按照预定的调度策略将Pod调度到相应的机器上;

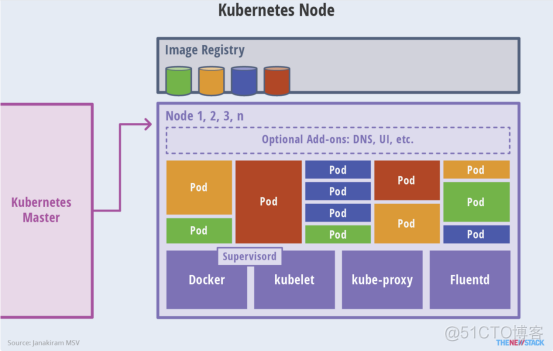

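kubelet maintains the container lifecycle and also manages volumes (CSI) and networking (CNI);

Container runtime handles image management and actually runs Pods and containers (CRI);

kube-proxy provides in-cluster service discovery and load balancing for Services;

In addition to the core components, there are several recommended add-ons:

kube-dns provides DNS for the whole cluster

Ingress Controller provides external access to services

Heapster provides resource monitoring

Dashboard provides a GUI

Federation provides clusters spanning availability zones

Fluentd-elasticsearch provides cluster log collection, storage, and querying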

II. Deploying Kubernetes

2.1 System environment initialization

2.1.1 System overview

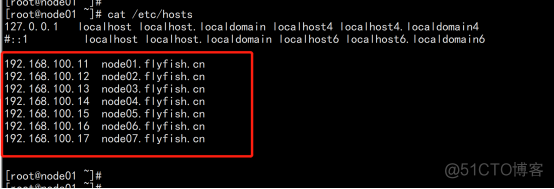

OS: CentOS 7.9 x64

Deployment: three masters and three slave (worker) nodes

Kubernetes version: k8s-v1.18.0

Hostname configuration (cat /etc/hosts):

    192.168.100.11 node01.flyfish.cn
    192.168.100.12 node02.flyfish.cn
    192.168.100.13 node03.flyfish.cn
    192.168.100.14 node04.flyfish.cn
    192.168.100.15 node05.flyfish.cn
    192.168.100.16 node06.flyfish.cn
    192.168.100.17 node07.flyfish.cn
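
The hosts list above follows a regular pattern, so it can be generated instead of typed. The following is a minimal sketch that assumes the `nodeNN.flyfish.cn` naming and the consecutive IPs shown above. `gen_hosts` is a hypothetical helper and not part of the original procedure.

```sh
# Hypothetical helper: print /etc/hosts entries for consecutive node IPs,
# using the nodeNN.<domain> naming scheme (NN zero-padded to two digits).
gen_hosts() {
  local prefix=$1 first=$2 count=$3 domain=$4 i
  i=1
  while [ "$i" -le "$count" ]; do
    # e.g. "192.168.100.11 node01.flyfish.cn"
    printf '%s.%d node%02d.%s\n' "$prefix" $((first + i - 1)) "$i" "$domain"
    i=$((i + 1))
  done
}

# Preview the seven entries listed above; append them with: ... >> /etc/hosts
gen_hosts 192.168.100 11 7 flyfish.cn
```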

2.2 System environment initialization

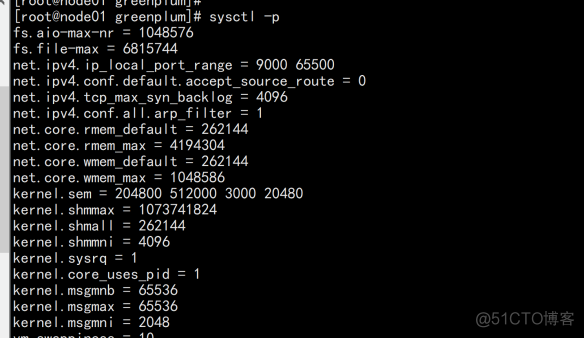

2.2.1 Kernel parameter tuning

    cat >> /etc/sysctl.conf << EOF
    fs.aio-max-nr = 1048576
    fs.file-max = 6815744
    net.ipv4.ip_local_port_range = 9000 65500
    net.ipv4.conf.default.accept_source_route = 0
    net.ipv4.tcp_max_syn_backlog = 4096
    net.ipv4.conf.all.arp_filter = 1
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048586
    kernel.sem = 204800 512000 3000 20480
    kernel.shmmax = 1073741824
    kernel.shmall = 262144
    kernel.shmmni = 4096
    kernel.sysrq = 1
    kernel.core_uses_pid = 1
    kernel.msgmnb = 65536
    kernel.msgmax = 65536
    kernel.msgmni = 2048
    vm.swappiness = 10
    vm.overcommit_memory = 2
    vm.overcommit_ratio = 95
    vm.zone_reclaim_mode = 0
    vm.dirty_expire_centisecs = 500
    vm.dirty_writeback_centisecs = 100
    vm.dirty_background_ratio = 3
    vm.dirty_ratio = 10
    #64g-
    #vm.dirty_background_ratio = 3
    #vm.dirty_ratio = 10
    #64g+
    #vm.dirty_background_ratio = 0
    #vm.dirty_ratio = 0
    #vm.dirty_background_bytes = 1610612736
    #vm.dirty_bytes = 4294967296
    EOF
    sysctl -p

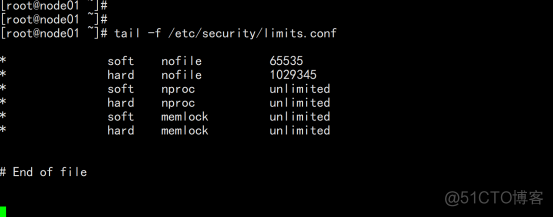

2.1.2 System file handle and process limits:

    cat >> /etc/security/limits.conf << EOF
    * soft nproc unlimited
    * hard nproc unlimited
    * soft nofile 524288
    * hard nofile 524288
    * soft stack unlimited
    * hard stack unlimited
    * hard memlock unlimited
    * soft memlock unlimited
    EOF
    tail /etc/security/limits.conf

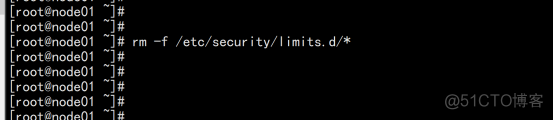

    rm -f /etc/security/limits.d/*

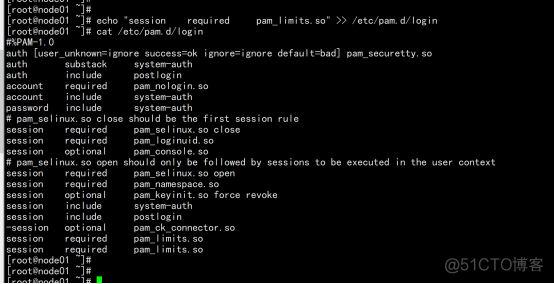

2.1.3 System startup tuning

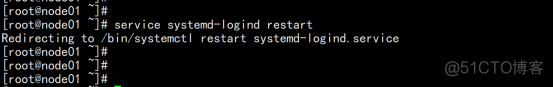

    echo "session required pam_limits.so" >> /etc/pam.d/login
    cat /etc/pam.d/login
    echo "RemoveIPC=no" >> /etc/systemd/logind.conf
    systemctl restart systemd-logind

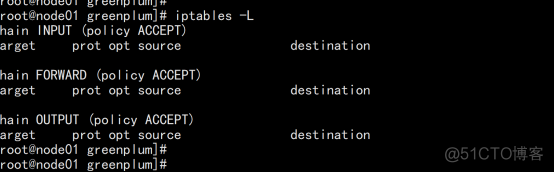

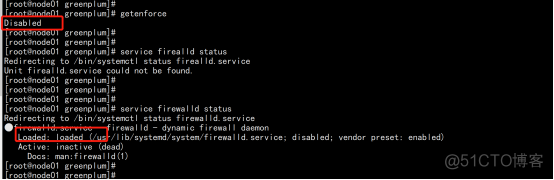

2.1.4 Disable firewalld, iptables, and SELinux

    # disable SELinux permanently (edits only the SELINUX= line) and for the current boot
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
    setenforce 0
    systemctl stop firewalld.service
    systemctl disable firewalld.service
    systemctl status firewalld.service
    systemctl set-default multi-user.target

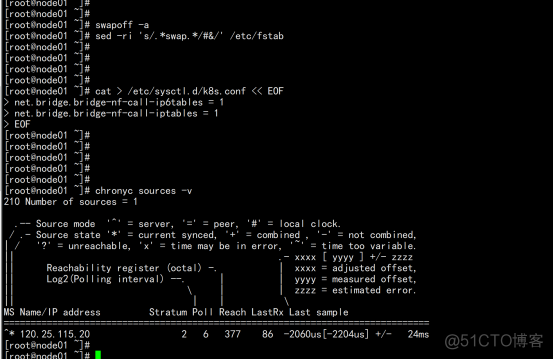

2.1.5 Disable swap

    # turn swap off now, and comment out swap entries in /etc/fstab so it stays off after reboot
    swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
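
You can confirm that swap is really off by reading /proc/swaps, which lists one active swap device per line after a header line. The following is a minimal sketch. `active_swap_count` is a hypothetical helper name, and the optional file argument exists only so the logic can be tried on a copy of the file.

```sh
# Sketch: count active swap devices. /proc/swaps (or a copy passed as $1)
# has one header line followed by one line per active swap device.
active_swap_count() {
  local swaps_file=${1:-/proc/swaps}
  # skip the header and count the remaining non-empty lines
  awk 'NR > 1 && NF > 0' "$swaps_file" | wc -l
}

# Usage after swapoff -a (kubelet refuses to start with swap on when failSwapOn is true, the default):
#   [ "$(active_swap_count)" -eq 0 ] && echo "swap is off"
```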

2.1.6 Disable transparent huge pages

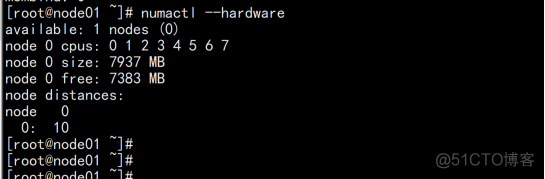

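    yum install -y numactl
    # edit /etc/default/grub and set the kernel command line as follows:
    vim /etc/default/grub
    GRUB_CMDLINE_LINUX="crashkernel=auto rhgb quiet numa=off transparent_hugepage=never elevator=deadline"
    # regenerate the GRUB configuration; the new parameters take effect after a reboot
    grub2-mkconfig -o /etc/grub2.cfg
    # after rebooting, check the NUMA state
    numastat
    numactl --show
    numactl --hardware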
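
After the reboot, you can read the active THP mode back from sysfs. The kernel marks the current setting in brackets. The following sketch assumes the standard `/sys/kernel/mm/transparent_hugepage/enabled` path. `thp_mode` is a hypothetical helper.

```sh
# Sketch: print the active transparent huge page mode. The kernel brackets
# the active value, e.g. "always madvise [never]".
thp_mode() {
  local f=${1:-/sys/kernel/mm/transparent_hugepage/enabled}
  sed -n 's/.*\[\(.*\)\].*/\1/p' "$f"
}

# Usage after rebooting with transparent_hugepage=never:
#   [ "$(thp_mode)" = never ] && echo "THP disabled"
#   grep -o 'transparent_hugepage=[a-z]*' /proc/cmdline
```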

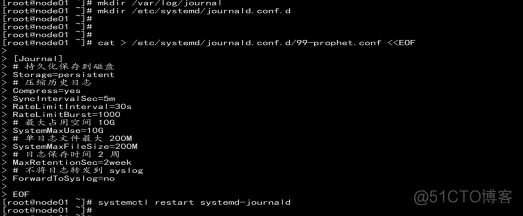

2.1.7 Configure rsyslogd and systemd journald

    # directory for persistent journal storage
    mkdir /var/log/journal
    mkdir /etc/systemd/journald.conf.d
    cat > /etc/systemd/journald.conf.d/99-prophet.conf << EOF
    [Journal]
    # persist logs to disk
    Storage=persistent
    # compress archived logs
    Compress=yes
    SyncIntervalSec=5m
    RateLimitInterval=30s
    RateLimitBurst=1000
    # cap total disk usage at 10G
    SystemMaxUse=10G
    # cap each journal file at 200M
    SystemMaxFileSize=200M
    # keep logs for 2 weeks
    MaxRetentionSec=2week
    # do not forward logs to syslog
    ForwardToSyslog=no
    EOF
    systemctl restart systemd-journald

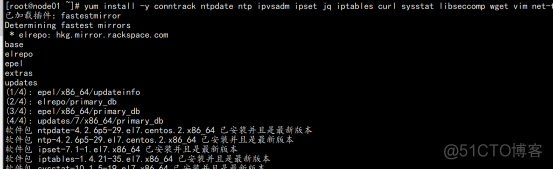

2.1.8 Install dependency packages

    yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

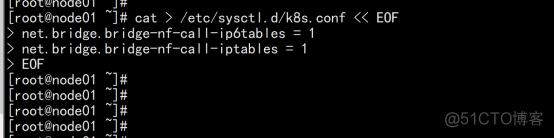

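2.1.9 Pass bridged IPv4 traffic to the iptables chains:

    # the net.bridge.* keys exist only once the br_netfilter module is loaded
    modprobe br_netfilter
    # pass bridged IPv4 traffic to the iptables chains
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    # apply
    sysctl --system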

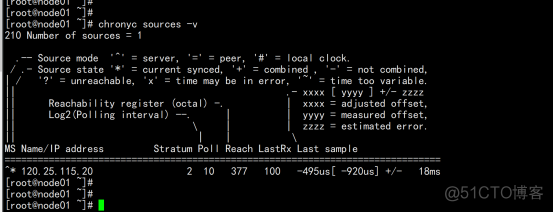

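2.1.10 Time synchronization

    # time synchronization with chrony
    yum install -y chrony
    # in /etc/chrony.conf, use the Aliyun NTP server:
    #   server ntp1.aliyun.com
    systemctl enable chronyd
    systemctl restart chronyd

2.2 Deploying k8s with kubeadm

2.2.1 Deployment roles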

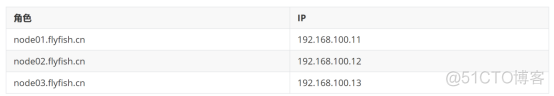

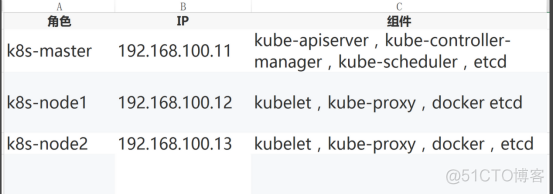

2.2.2 Role assignment

    node01.flyfish.cn ---> apiserver/controller-manager/scheduler/etcd
    node02.flyfish.cn ---> docker/kubelet/kube-proxy/etcd
    node03.flyfish.cn ---> docker/kubelet/kube-proxy/etcd

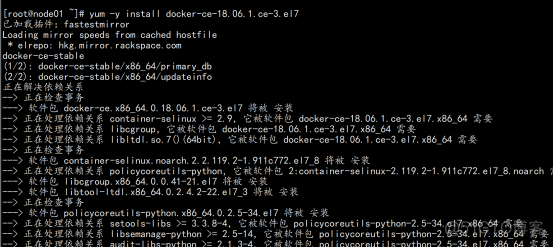

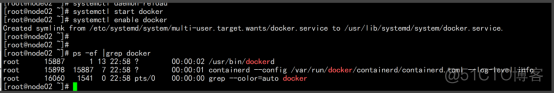

2.2.3 Install Docker on all nodes

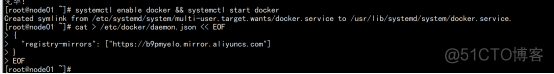

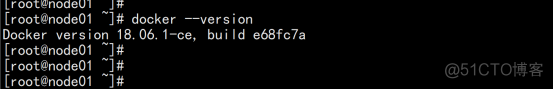

    $ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
    $ yum -y install docker-ce-18.06.1.ce-3.el7
    $ systemctl enable docker && systemctl start docker
    $ docker --version
    Docker version 18.06.1-ce, build e68fc7a

2.2.4 Add the Aliyun YUM repository

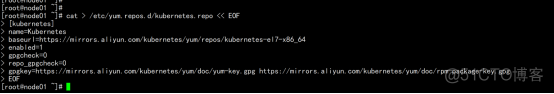

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

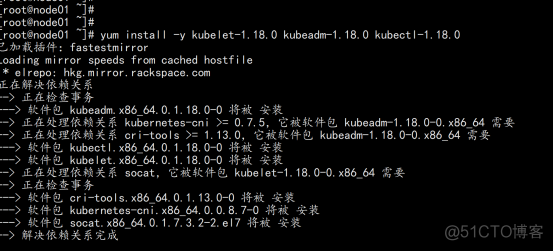

2.2.5 Install kubeadm, kubelet, and kubectl

Because versions change frequently, a specific version is pinned here:

    yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
    systemctl enable kubelet

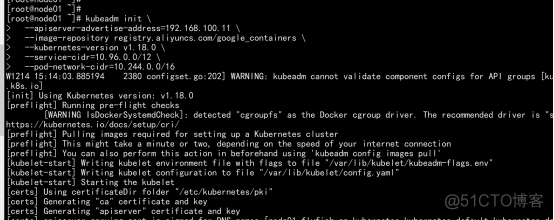

2.2.6 Deploy the Kubernetes Master

Run on 192.168.100.11 (the Master):

    kubeadm init \
      --apiserver-advertise-address=192.168.100.11 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.18.0 \
      --service-cidr=10.96.0.0/12 \
      --pod-network-cidr=10.244.0.0/16

The default image registry, k8s.gcr.io, is not reachable from mainland China, so the Aliyun mirror registry is specified instead.

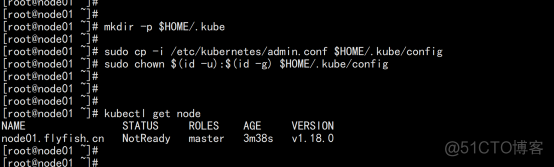

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.100.11:6443 --token nl7pab.2590lw4iemtzn604 \
        --discovery-token-ca-cert-hash sha256:47bd4c128500dab38b51a79818b2c393ebbabf00d773c0dbc9b91902559cb210
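
The --service-cidr and --pod-network-cidr ranges passed to kubeadm init must not overlap each other or the node network (192.168.100.0/24 here). The following is a pure-shell sketch of that check. `ip2int` and `cidr_overlap` are hypothetical helpers and handle IPv4 only.

```sh
# Hypothetical helpers (IPv4 only): convert a dotted quad to an integer and
# test whether two CIDR ranges share any address.
ip2int() {
  local ip=$1 a b c d
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds (exit status 0) when the two CIDRs overlap.
cidr_overlap() {
  local net1=${1%/*} len1=${1#*/} net2=${2%/*} len2=${2#*/} len mask
  # two ranges overlap exactly when they agree on the bits of the shorter prefix
  len=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( $(ip2int "$net1") & mask )) -eq $(( $(ip2int "$net2") & mask )) ]
}

# Service CIDR, Pod CIDR, and node network used in this deployment
for pair in "10.96.0.0/12 10.244.0.0/16" "10.96.0.0/12 192.168.100.0/24" "10.244.0.0/16 192.168.100.0/24"; do
  if cidr_overlap $pair; then echo "OVERLAP: $pair"; else echo "ok: $pair"; fi
done
```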

2.2.7 Set up the kubectl tool:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

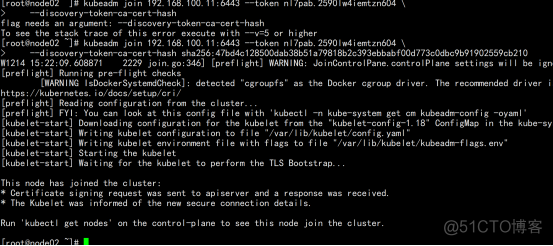

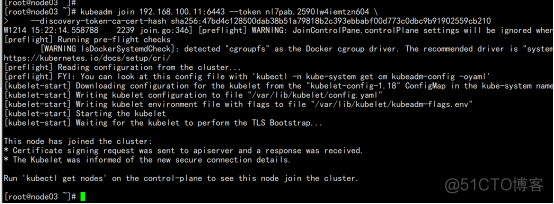

2.2.8 Join the worker nodes to the master

Run on each worker node (as root):

    kubeadm join 192.168.100.11:6443 --token nl7pab.2590lw4iemtzn604 \
        --discovery-token-ca-cert-hash sha256:47bd4c128500dab38b51a79818b2c393ebbabf00d773c0dbc9b91902559cb210
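
The join token printed by kubeadm init is temporary (24 hours by default). If it has expired, run `kubeadm token create --print-join-command` on the master to print a new join command. You can also recompute the CA hash from the cluster CA with the openssl pipeline in the kubeadm documentation. The sketch below wraps that pipeline. `ca_cert_hash` is a hypothetical name, and the sketch assumes an RSA CA key, which kubeadm generates by default.

```sh
# Sketch: recompute the --discovery-token-ca-cert-hash value (without the
# "sha256:" prefix) from the SHA-256 of the CA's DER-encoded public key.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the master:
#   echo "sha256:$(ca_cert_hash)"
```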

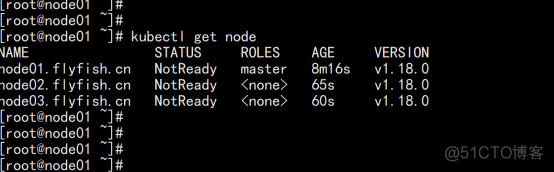

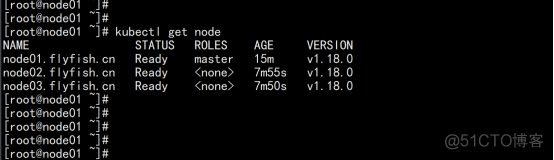

2.2.9 Check node status

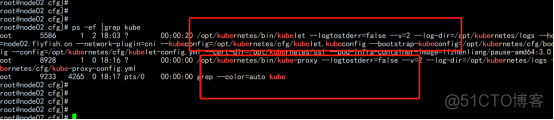

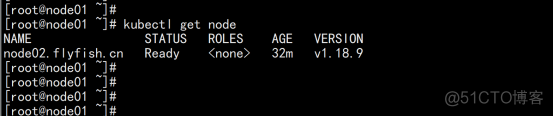

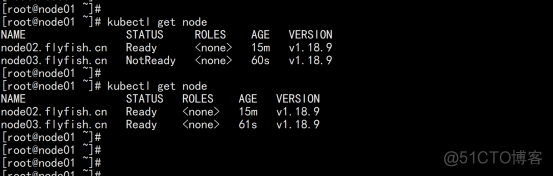

    kubectl get node

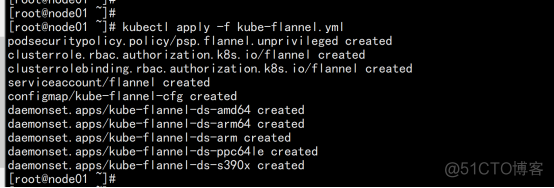

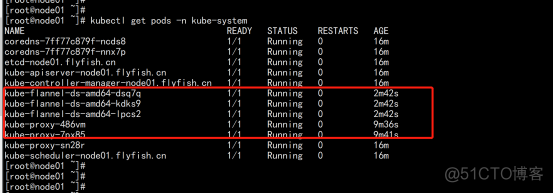

2.2.10 Deploy the flannel network plugin

    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    kubectl apply -f kube-flannel.yml

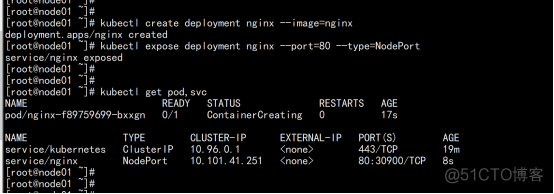

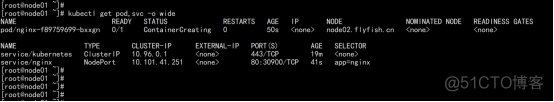

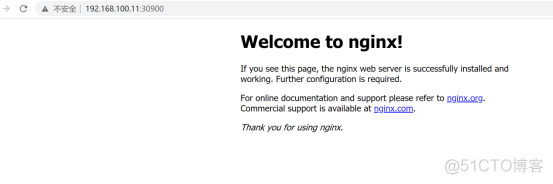

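2.2.11 Test the Kubernetes cluster

Create a pod in the Kubernetes cluster and verify that it runs correctly:

    $ kubectl create deployment nginx --image=nginx
    $ kubectl expose deployment nginx --port=80 --type=NodePort
    $ kubectl get pod,svc

2.3 Deploying a k8s cluster from binary packages

2.3.1 Deployment roles:

2.3.2 Operating system initialization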

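    # disable the firewall
    systemctl stop firewalld
    systemctl disable firewalld

    # disable SELinux
    sed -i 's/enforcing/disabled/' /etc/selinux/config  # permanent
    setenforce 0  # current boot only

    # disable swap
    swapoff -a  # current boot only
    sed -ri 's/.*swap.*/#&/' /etc/fstab  # permanent

    # set the hostname according to the plan
    hostnamectl set-hostname <hostname>

    # add hosts entries on the master
    cat >> /etc/hosts << EOF
    192.168.100.11 node01.flyfish.cn
    192.168.100.12 node02.flyfish.cn
    192.168.100.13 node03.flyfish.cn
    EOF

    # pass bridged IPv4 traffic to the iptables chains (requires br_netfilter)
    modprobe br_netfilter
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sysctl --system  # apply

    # time synchronization: install chrony and set "server ntp1.aliyun.com" in /etc/chrony.conf
    yum install -y chrony
    systemctl enable chronyd
    systemctl restart chronyd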

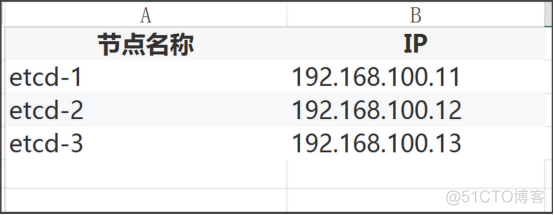

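2.3.2 Deploy the etcd cluster

About etcd: etcd is a distributed key-value store, and Kubernetes uses it for data storage, so an etcd database is prepared first. To avoid a single point of failure, etcd should be deployed as a cluster. Here three machines form the cluster, which tolerates the failure of one machine. You can also use five machines, which tolerates the failure of two.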
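
The failure tolerance quoted above follows from etcd's majority quorum. With n members, a write needs floor(n/2)+1 of them, so floor((n-1)/2) members may fail. The following is a small arithmetic sketch; the helper names are illustrative.

```sh
# Sketch of majority-quorum arithmetic for an n-member etcd cluster.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
etcd_fault_tolerance() { echo $(( ($1 - 1) / 2 )); }

for n in 1 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

Note that 4 members tolerate no more failures than 3, which is why odd cluster sizes are used.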

2.3.3 Self-signed certificates (preparing the cfssl certificate tool)

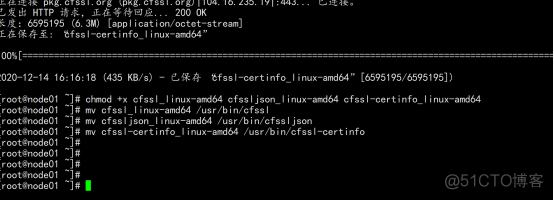

cfssl is an open-source certificate management tool that generates certificates from JSON files and is easier to use than openssl. Run the following on any one server; the Master node is used here.

    wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
    wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
    chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
    mv cfssl_linux-amd64 /usr/bin/cfssl
    mv cfssljson_linux-amd64 /usr/bin/cfssljson
    mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

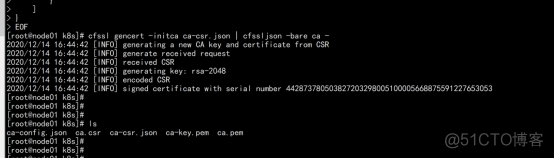

2.3.4 Generate the etcd certificates

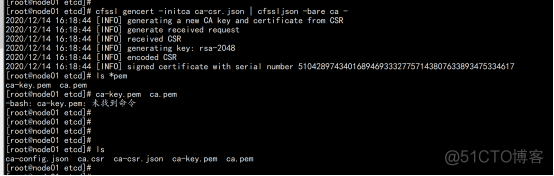

1. Self-signed certificate authority (CA)

Create the working directory:

    mkdir -p ~/TLS/{etcd,k8s}
    cd ~/TLS/etcd

Create the self-signed CA:

    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF
    cat > ca-csr.json << EOF
    {
      "CN": "etcd CA",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing"
        }
      ]
    }
    EOF
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

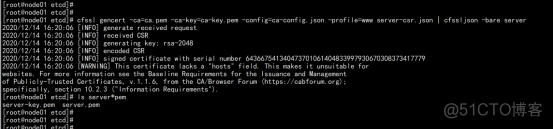

2. Issue the etcd HTTPS certificate with the self-signed CA

Create the certificate signing request file:

    cat > server-csr.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        "192.168.100.11",
        "192.168.100.12",
        "192.168.100.13",
        "192.168.100.14",
        "192.168.100.15",
        "192.168.100.16",
        "192.168.100.17",
        "192.168.100.100"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing"
        }
      ]
    }
    EOF

Generate the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
    ls server*pem
    server-key.pem  server.pem

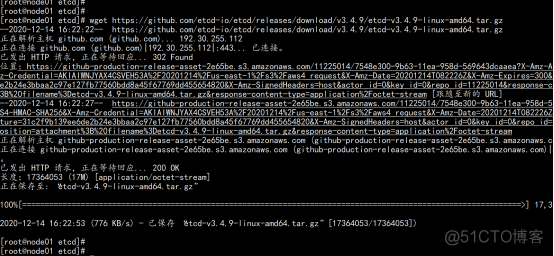

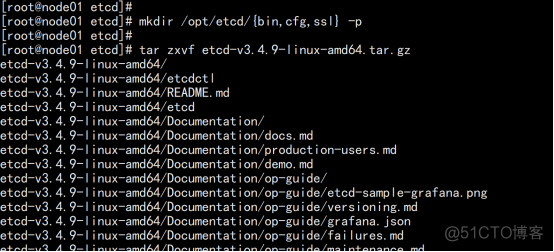

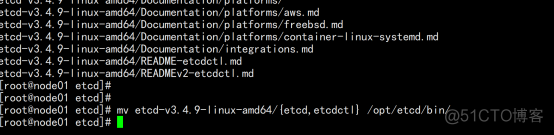

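2.3.5 Download and install etcd

Download: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

The following steps are performed on node 1. To keep things simple, all files generated on node 1 are later copied to nodes 2 and 3.

Create the working directory and extract the binaries:

    mkdir /opt/etcd/{bin,cfg,ssl} -p
    tar zxvf etcd-v3.4.9-linux-amd64.tar.gz
    mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/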

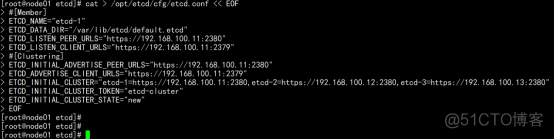

2.3.6 Create the etcd configuration file

    cat > /opt/etcd/cfg/etcd.conf << EOF
    #[Member]
    ETCD_NAME="etcd-1"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.100.11:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.11:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
    EOF

ETCD_NAME: node name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) communication

ETCD_LISTEN_CLIENT_URLS: listen address for client access

ETCD_INITIAL_ADVERTISE_PEER_URLS: advertised peer address

ETCD_ADVERTISE_CLIENT_URLS: advertised client address

ETCD_INITIAL_CLUSTER: addresses of the cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new for a new cluster, existing to join an existing cluster

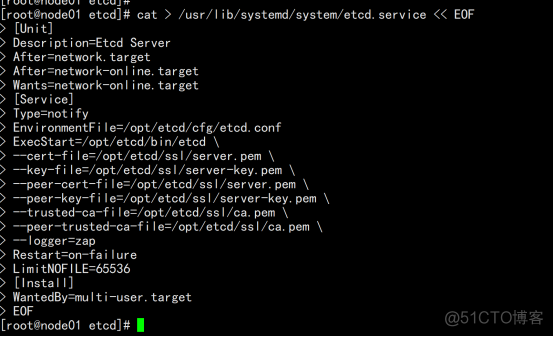

2.3.7 Manage etcd with systemd

    cat > /usr/lib/systemd/system/etcd.service << EOF
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/opt/etcd/cfg/etcd.conf
    ExecStart=/opt/etcd/bin/etcd \
    --cert-file=/opt/etcd/ssl/server.pem \
    --key-file=/opt/etcd/ssl/server-key.pem \
    --peer-cert-file=/opt/etcd/ssl/server.pem \
    --peer-key-file=/opt/etcd/ssl/server-key.pem \
    --trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --logger=zap
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

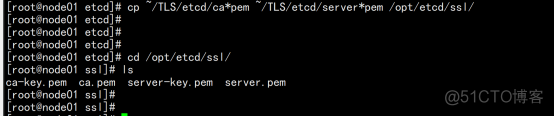

Copy the certificates generated earlier to the paths used in the configuration:

    cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

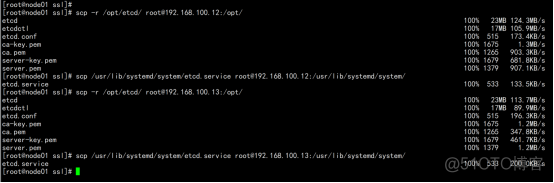

Sync to nodes 2 and 3:

    scp -r /opt/etcd/ root@<node2-IP>:/opt/
    scp /usr/lib/systemd/system/etcd.service root@<node2-IP>:/usr/lib/systemd/system/
    scp -r /opt/etcd/ root@<node3-IP>:/opt/
    scp /usr/lib/systemd/system/etcd.service root@<node3-IP>:/usr/lib/systemd/system/

Then, on nodes 2 and 3, edit etcd.conf to set the node name and the current server's IP:

    vi /opt/etcd/cfg/etcd.conf
    #[Member]
    ETCD_NAME="etcd-1"   # change this: etcd-2 on node 2, etcd-3 on node 3
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://192.168.100.11:2380"   # change to this server's IP
    ETCD_LISTEN_CLIENT_URLS="https://192.168.100.11:2379"   # change to this server's IP
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.100.11:2380"   # change to this server's IP
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.100.11:2379"   # change to this server's IP
    ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"
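
Instead of hand-editing four lines on each node, you can render the per-node file from the member name and IP. The following is a minimal sketch that assumes the three-member layout above (etcd-1 to etcd-3 on 192.168.100.11 to .13). `gen_etcd_conf` is a hypothetical helper that reproduces the fields of the etcd.conf above.

```sh
# Hypothetical helper: print etcd.conf for one member of the 3-node cluster.
ETCD_CLUSTER="etcd-1=https://192.168.100.11:2380,etcd-2=https://192.168.100.12:2380,etcd-3=https://192.168.100.13:2380"

gen_etcd_conf() {
  local name=$1 ip=$2
  # only the member name and the four URL fields differ between nodes
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${ETCD_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

On node 2, for example, you would run `gen_etcd_conf etcd-2 192.168.100.12 > /opt/etcd/cfg/etcd.conf`.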

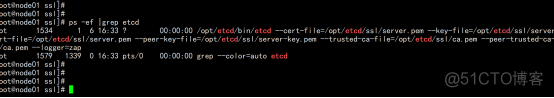

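2.3.8 Start etcd

Start etcd on all nodes. The first member waits for its peers before it reports ready, so start the three nodes at about the same time:

    systemctl daemon-reload
    systemctl start etcd
    systemctl enable etcd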

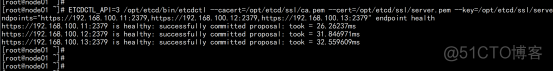

2.3.9 Test etcd

    ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
      --cacert=/opt/etcd/ssl/ca.pem \
      --cert=/opt/etcd/ssl/server.pem \
      --key=/opt/etcd/ssl/server-key.pem \
      --endpoints="https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379" \
      endpoint health
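
To use the health check in a script, you can test the output for the expected number of healthy endpoints. The sketch below assumes only that each healthy endpoint produces a line containing "is healthy". `all_endpoints_healthy` is a hypothetical helper.

```sh
# Sketch: succeed only if exactly the expected number of endpoints report
# "is healthy" in etcdctl endpoint health output read from stdin.
all_endpoints_healthy() {
  local expected=$1 healthy
  # grep -c prints 0 (and exits 1) when nothing matches
  healthy=$(grep -c ' is healthy' || true)
  [ "$healthy" -eq "$expected" ]
}

# Usage (stderr merged because failing endpoints may be reported there):
#   ETCDCTL_API=3 /opt/etcd/bin/etcdctl ... endpoint health 2>&1 \
#     | all_endpoints_healthy 3 && echo "etcd cluster healthy"
```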

2.3.10 Deploy the k8s Master Node

Generate the kube-apiserver certificates.

1. Self-signed certificate authority (CA)

Create the CA files in the k8s working directory:

    cd /root/TLS/k8s/
    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF
    cat > ca-csr.json << EOF
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

Generate the certificate:

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    ls *pem
    ca-key.pem  ca.pem

2. Issue the kube-apiserver HTTPS certificate with the self-signed CA

Create the certificate signing request file:

    cat > server-csr.json << EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "192.168.100.11",
        "192.168.100.12",
        "192.168.100.13",
        "192.168.100.14",
        "192.168.100.15",
        "192.168.100.16",
        "192.168.100.17",
        "192.168.100.100",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

Note: the hosts field above must list every Master, load balancer (LB), and VIP address, with none missing. To ease later expansion, you can add a few reserved IPs.
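
The 10.0.0.1 entry in the hosts list is not arbitrary. The apiserver assigns the first address of --service-cluster-ip-range (10.0.0.0/24 in this deployment) to the built-in `kubernetes` Service, and Pods reach the apiserver through it, so the certificate must cover that address. The following sketch derives such addresses from a CIDR. `nth_ip_in_cidr` is a hypothetical helper and handles IPv4 only.

```sh
# Sketch (IPv4 only): print the n-th address of a CIDR, counting from the
# network address (n=1 is the first usable address).
nth_ip_in_cidr() {
  local cidr=$1 n=$2 ip a b c d len mask
  ip=${cidr%/*}; len=${cidr#*/}
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  # clear the host bits, then add the offset
  ip=$(( (((a << 24) | (b << 16) | (c << 8) | d) & mask) + n ))
  echo "$(( (ip >> 24) & 255 )).$(( (ip >> 16) & 255 )).$(( (ip >> 8) & 255 )).$(( ip & 255 ))"
}

nth_ip_in_cidr 10.0.0.0/24 1    # ClusterIP of the "kubernetes" Service
nth_ip_in_cidr 10.0.0.0/24 2    # the clusterDNS address chosen in kubelet-config.yml
```

The same arithmetic applies to the 10.96.0.0/12 range used by kubeadm, where the `kubernetes` Service gets 10.96.0.1.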

Generate the certificate:

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
    ls server*pem
    server-key.pem  server.pem

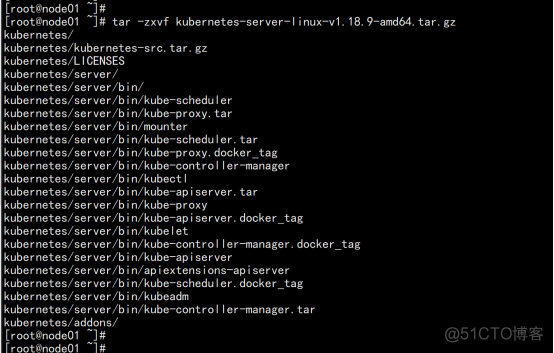

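2.3.11 Download and install k8s

Download the binaries from GitHub: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183

Note: the page lists many packages. The server package alone is enough, because it contains the binaries for both the Master and the Worker Nodes.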

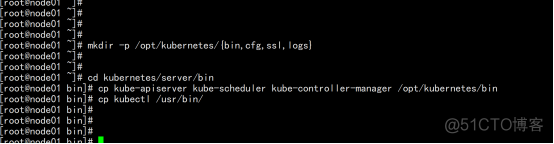

Extract the binaries:

    mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
    tar zxvf kubernetes-server-linux-amd64.tar.gz
    cd kubernetes/server/bin
    cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
    cp kubectl /usr/bin/

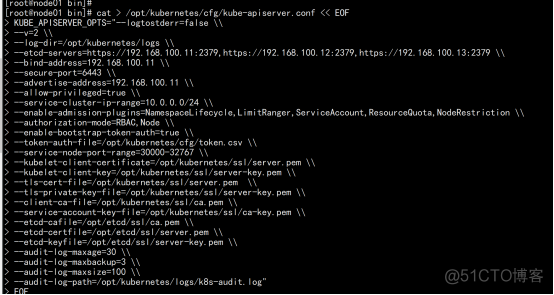

2.3.12 Deploy the apiserver

Deploy kube-apiserver. First, create the configuration file:

    cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
    KUBE_APISERVER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --etcd-servers=https://192.168.100.11:2379,https://192.168.100.12:2379,https://192.168.100.13:2379 \\
    --bind-address=192.168.100.11 \\
    --secure-port=6443 \\
    --advertise-address=192.168.100.11 \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --enable-bootstrap-token-auth=true \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=30000-32767 \\
    --kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
    --kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
    --audit-log-maxage=30 \\
    --audit-log-maxbackup=3 \\
    --audit-log-maxsize=100 \\
    --audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
    EOF

Copy the certificates generated earlier to the paths used in the configuration:

    cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

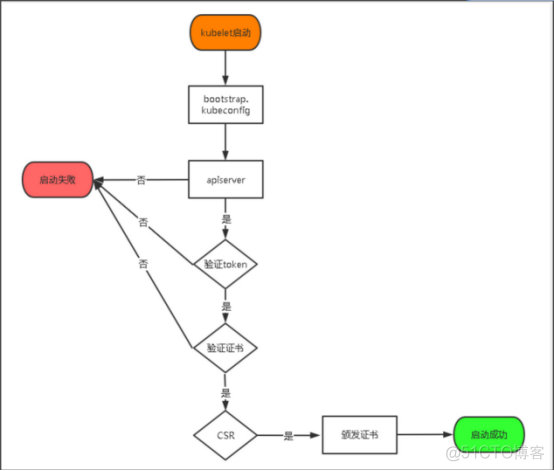

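Enable the TLS bootstrapping mechanism

TLS bootstrapping: once TLS authentication is enabled on the Master apiserver, the kubelet and kube-proxy on each Node must present a valid certificate signed by the CA to communicate with kube-apiserver. With many Nodes, issuing these client certificates takes a lot of work and makes the cluster harder to scale. To simplify this, Kubernetes introduced the TLS bootstrapping mechanism to issue client certificates automatically. The kubelet requests a certificate from the apiserver as a low-privilege user, and the apiserver signs the kubelet's certificate dynamically. This approach is strongly recommended on Nodes. It is currently used mainly for the kubelet; for kube-proxy we still issue a single certificate ourselves.

TLS bootstrapping workflow: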

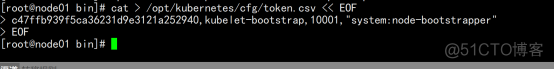

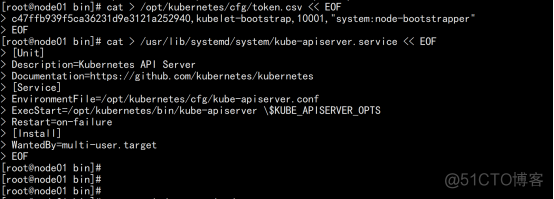

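Create the token file referenced in the configuration above:

    cat > /opt/kubernetes/cfg/token.csv << EOF
    c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
    EOF

The fields are: token, user name, user UID, and user group.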
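
A token copied from a document should not be reused in a real cluster. The following sketch generates a fresh random token of the same shape (32 hex characters). The same value must then be used as TOKEN in boot.sh when bootstrap.kubeconfig is created. `gen_bootstrap_token` is a hypothetical helper based on /dev/urandom and od.

```sh
# Sketch: 16 random bytes rendered as 32 lowercase hex characters.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

TOKEN=$(gen_bootstrap_token)
# line in the token.csv format: token,user,uid,"group"
echo "${TOKEN},kubelet-bootstrap,10001,\"system:node-bootstrapper\""
```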

Manage the apiserver with systemd:

    cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

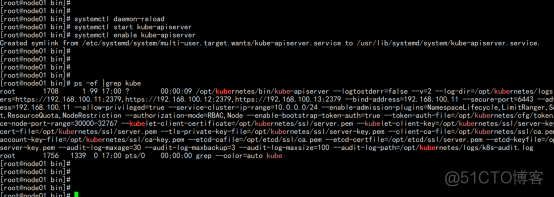

Start it and enable it at boot:

    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl enable kube-apiserver

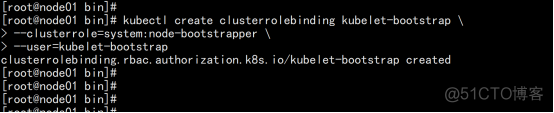

Authorize the kubelet-bootstrap user to request certificates:

    kubectl create clusterrolebinding kubelet-bootstrap \
      --clusterrole=system:node-bootstrapper \
      --user=kubelet-bootstrap

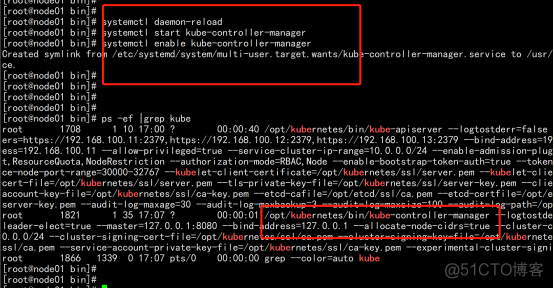

2.3.13 Deploy kube-controller-manager

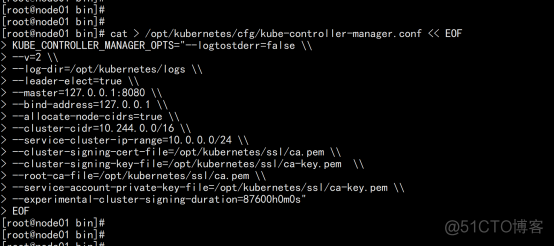

Create the configuration file:

    cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --leader-elect=true \\
    --master=127.0.0.1:8080 \\
    --bind-address=127.0.0.1 \\
    --allocate-node-cidrs=true \\
    --cluster-cidr=10.244.0.0/16 \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --experimental-cluster-signing-duration=87600h0m0s"
    EOF

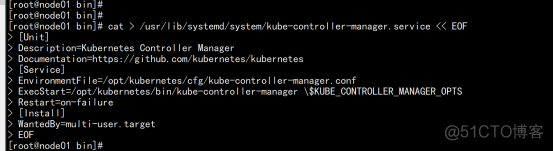

Manage controller-manager with systemd:

    cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

Start it and enable it at boot:

    systemctl daemon-reload
    systemctl start kube-controller-manager
    systemctl enable kube-controller-manager

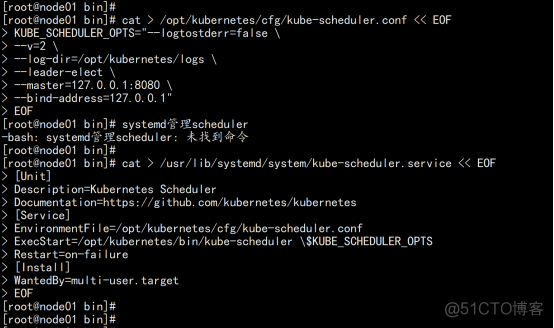

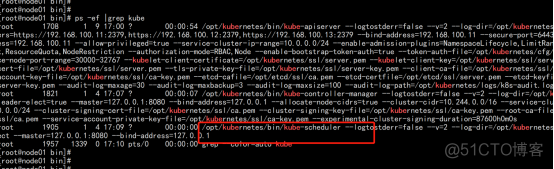

2.3.14 Deploy kube-scheduler

Create the configuration file:

    cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
    KUBE_SCHEDULER_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --leader-elect \
    --master=127.0.0.1:8080 \
    --bind-address=127.0.0.1"
    EOF

Manage the scheduler with systemd:

    cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

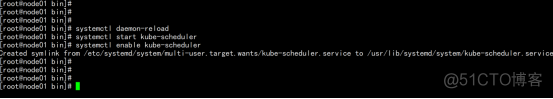

Start it and enable it at boot:

    systemctl daemon-reload
    systemctl start kube-scheduler
    systemctl enable kube-scheduler

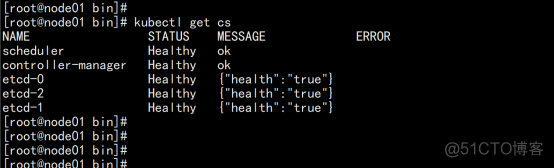

Check the cluster status:

    kubectl get cs

2.3.15 Deploy the worker nodes:

2.3.15.1 Deploy Docker

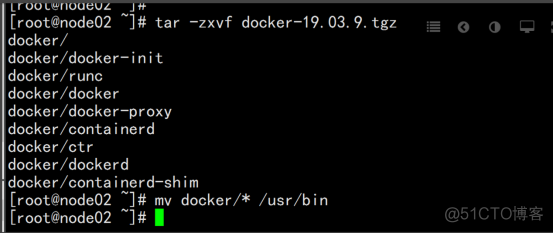

Deploy Docker on node02.flyfish.cn. Download: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

Perform the following on all worker nodes (node02.flyfish and node03.flyfish). A binary installation is used here; installing with yum works just as well.

Extract the binaries:

    tar zxvf docker-19.03.9.tgz
    mv docker/* /usr/bin

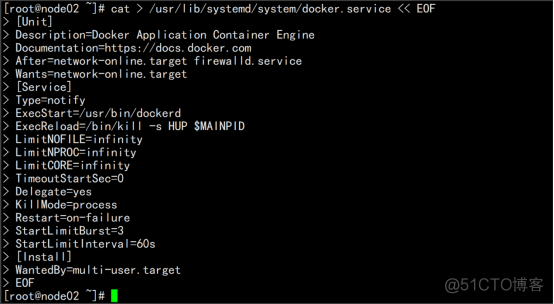

Manage Docker with systemd:

    cat > /usr/lib/systemd/system/docker.service << EOF
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target

    [Service]
    Type=notify
    ExecStart=/usr/bin/dockerd
    ExecReload=/bin/kill -s HUP \$MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s

    [Install]
    WantedBy=multi-user.target
    EOF

`\$MAINPID` is escaped so that the shell writes it literally into the unit file instead of expanding it to an empty string while processing the heredoc.

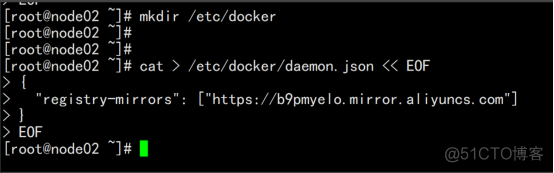

Create the configuration file (registry-mirrors points to the Aliyun image accelerator):

    mkdir /etc/docker
    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
    }
    EOF

Start it and enable it at boot:

    systemctl daemon-reload
    systemctl start docker
    systemctl enable docker

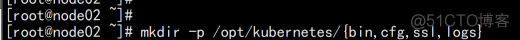

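2.3.15.2 Set up the k8s directories

The following steps are still performed on the Master Node, which also acts as a Worker Node.

Create the working directory and copy the binaries. Create the working directory on all worker nodes:

    mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}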

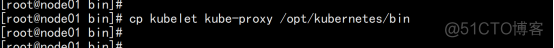

Copy from the master node:

    cd /root/kubernetes/server/bin
    cp kubelet kube-proxy /opt/kubernetes/bin   # local copy

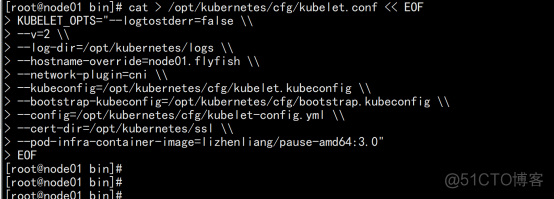

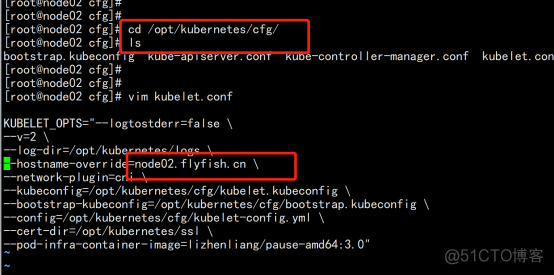

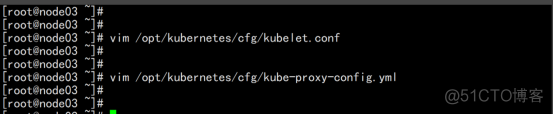

2.3.15.3 Deploy kubelet

Deploy kubelet. First, create the configuration file:

    cat > /opt/kubernetes/cfg/kubelet.conf << EOF
    KUBELET_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --hostname-override=node01.flyfish \\
    --network-plugin=cni \\
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
    --config=/opt/kubernetes/cfg/kubelet-config.yml \\
    --cert-dir=/opt/kubernetes/ssl \\
    --pod-infra-container-image=lizhenliang/pause-amd64:3.0"
    EOF

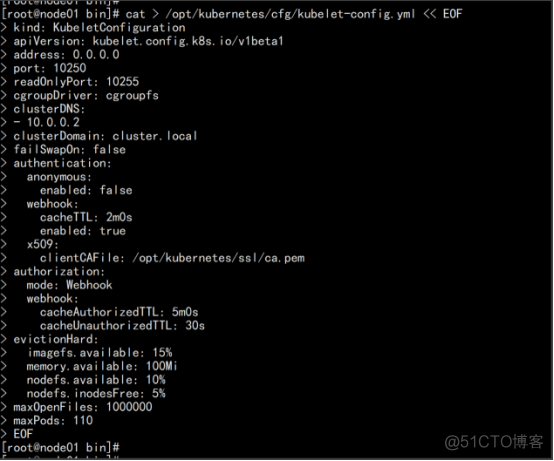

Configure the parameter file:

    cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS:
    - 10.0.0.2
    clusterDomain: cluster.local
    failSwapOn: false
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 2m0s
        enabled: true
      x509:
        clientCAFile: /opt/kubernetes/ssl/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    maxOpenFiles: 1000000
    maxPods: 110
    EOF

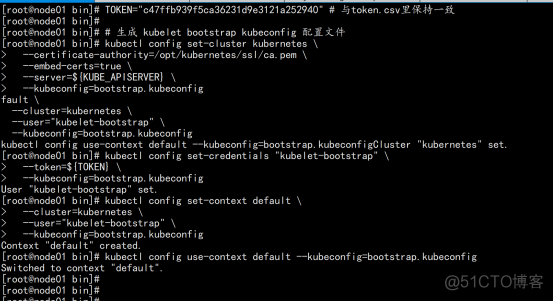

On the server (master) node, generate the bootstrap.kubeconfig file. Write a boot.sh script with the following content:

    KUBE_APISERVER="https://192.168.100.11:6443"  # apiserver IP:PORT
    TOKEN="c47ffb939f5ca36231d9e3121a252940"      # must match token.csv

    # generate the kubelet bootstrap kubeconfig file
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=bootstrap.kubeconfig
    kubectl config set-credentials "kubelet-bootstrap" \
      --token=${TOKEN} \
      --kubeconfig=bootstrap.kubeconfig
    kubectl config set-context default \
      --cluster=kubernetes \
      --user="kubelet-bootstrap" \
      --kubeconfig=bootstrap.kubeconfig
    kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Then run it:

    . ./boot.sh

Copy the result to the configuration directory:

    cp bootstrap.kubeconfig /opt/kubernetes/cfg

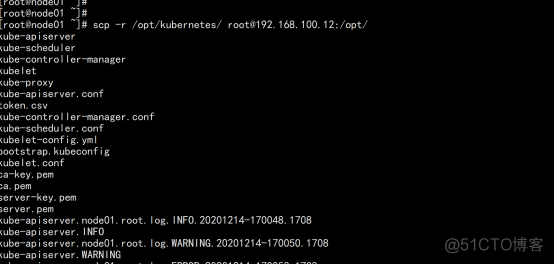

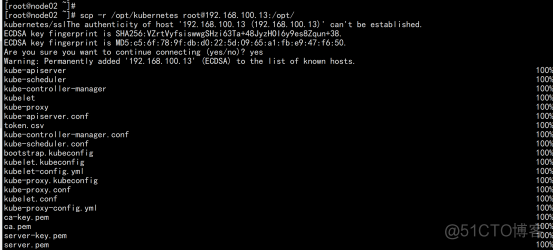

Sync the k8s installation directory to node02.flyfish.cn:

    scp -r /opt/kubernetes/ root@<node02-IP>:/opt/

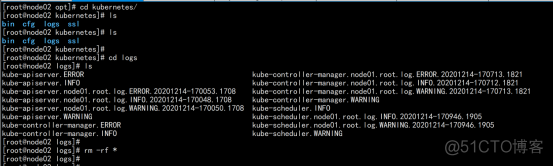

Delete the copied logs (on node02.flyfish.cn):

    cd /opt/kubernetes/logs/
    rm -rf *

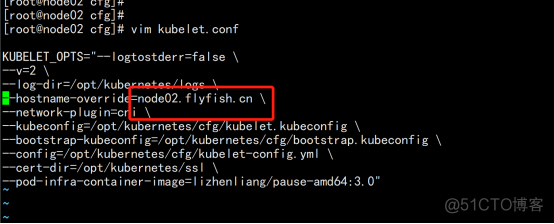

Edit kubelet.conf:

    cd /opt/kubernetes/cfg/
    vim kubelet.conf
    --hostname-override=node02.flyfish.cn

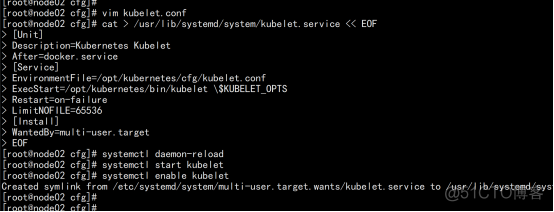

On node02.flyfish.cn, manage kubelet with systemd:

    cat > /usr/lib/systemd/system/kubelet.service << EOF
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
    ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

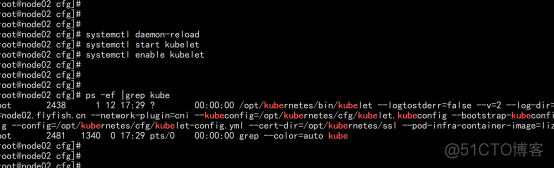

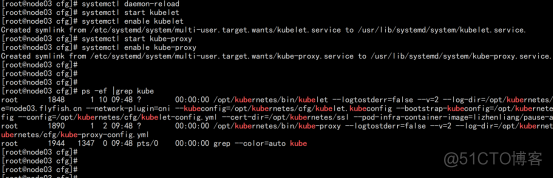

Start kubelet and enable it at boot:

    systemctl daemon-reload
    systemctl start kubelet
    systemctl enable kubelet

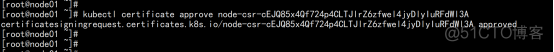

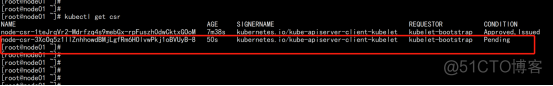

On node01.flyfish.cn, approve the certificate request:

    kubectl get csr

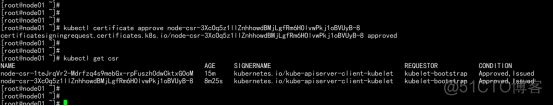

    kubectl certificate approve node-csr-cEJQ85x4Qf724p4CLTJlrZ6zfwel4jyDlyIuRFdWl3A

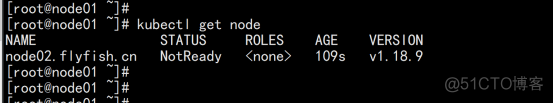

Check the nodes:

    kubectl get node

Note: because the network plugin has not been deployed yet, the nodes are not ready (NotReady).

2.3.15.4 Deploy kube-proxy

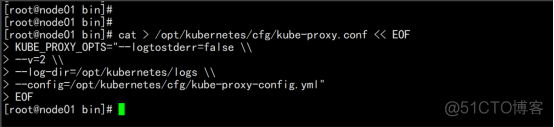

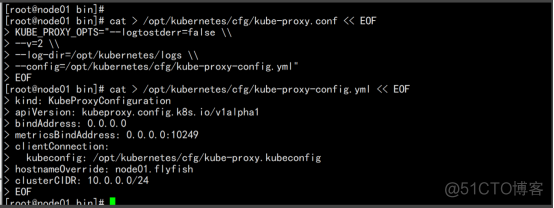

Deploy kube-proxy. First, create the configuration file:

    cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
    KUBE_PROXY_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --config=/opt/kubernetes/cfg/kube-proxy-config.yml"
    EOF

Configure the parameter file:

    cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
    kind: KubeProxyConfiguration
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    metricsBindAddress: 0.0.0.0:10249
    clientConnection:
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
    hostnameOverride: node01.flyfish
    clusterCIDR: 10.244.0.0/16
    mode: ipvs
    ipvs:
      scheduler: "rr"
    iptables:
      masqueradeAll: true
    EOF

Note: clusterCIDR is the Pod network CIDR, so it must match --cluster-cidr=10.244.0.0/16 of kube-controller-manager, not the Service range 10.0.0.0/24.
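
mode: ipvs only takes effect if the IPVS kernel modules are available; otherwise kube-proxy may fall back to iptables mode. The following sketch lists the modules that are not yet loaded. The module list is an assumption: ip_vs, ip_vs_rr for the rr scheduler above, ip_vs_wrr, ip_vs_sh, and nf_conntrack_ipv4 on the CentOS 7 3.10 kernel. `missing_ipvs_modules` is a hypothetical helper.

```sh
# Sketch: print each IPVS-related module that does not appear in
# /proc/modules (or in a copy of it passed as $1).
missing_ipvs_modules() {
  local modules_file=${1:-/proc/modules} m
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
    # each loaded module is one line starting with "<name> "
    grep -q "^${m} " "$modules_file" || echo "$m"
  done
}

# Usage: load whatever is missing, then confirm with lsmod | grep ip_vs
#   missing_ipvs_modules | xargs -r -n1 modprobe --
```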

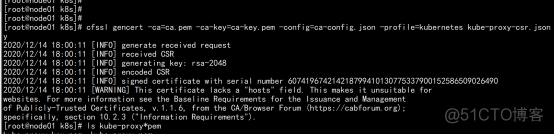

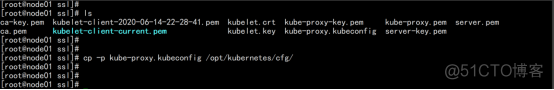

    # switch to the working directory
    cd /root/TLS/k8s

    # create the certificate signing request file
    cat > kube-proxy-csr.json << EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF

    # generate the certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
    ls kube-proxy*pem
    kube-proxy-key.pem  kube-proxy.pem
    cp -p kube-proxy-key.pem kube-proxy.pem /opt/kubernetes/ssl/

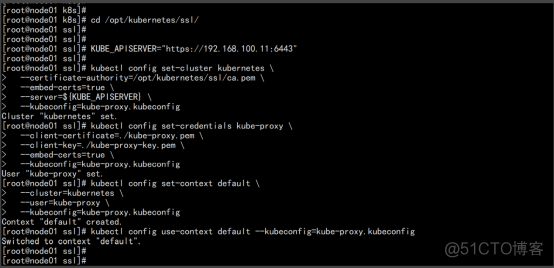

Generate the kubeconfig file. In /opt/kubernetes/ssl/, create kubeconfig.sh with the following content:

    KUBE_APISERVER="https://192.168.100.11:6443"
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-credentials kube-proxy \
      --client-certificate=./kube-proxy.pem \
      --client-key=./kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=kube-proxy.kubeconfig
    kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Then run it and copy the result:

    cd /opt/kubernetes/ssl/
    . ./kubeconfig.sh
    cp -p kube-proxy.kubeconfig /opt/kubernetes/cfg/

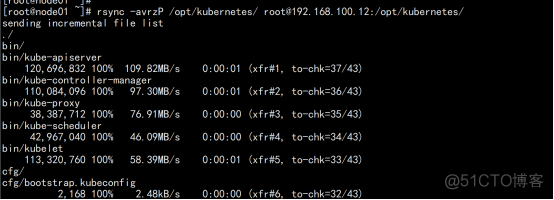

Sync the /opt/kubernetes directory to node02.flyfish.cn:

    rsync -avrzP /opt/kubernetes/ root@<node02-IP>:/opt/kubernetes/

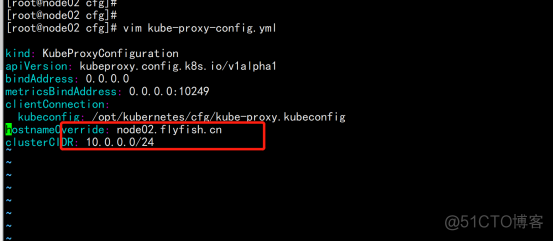

On node02.flyfish.cn, edit the files:

    cd /opt/kubernetes/cfg
    vim kubelet.conf
    --hostname-override=node02.flyfish.cn
    vim kube-proxy-config.yml
    hostnameOverride: node02.flyfish.cn

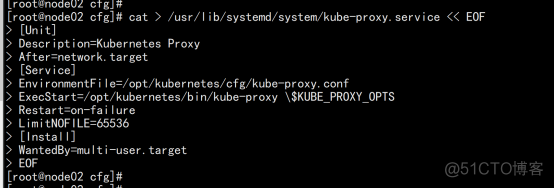

Manage kube-proxy with systemd:

    cat > /usr/lib/systemd/system/kube-proxy.service << EOF
    [Unit]
    Description=Kubernetes Proxy
    After=network.target

    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
    ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
    Restart=on-failure
    LimitNOFILE=65536

    [Install]
    WantedBy=multi-user.target
    EOF

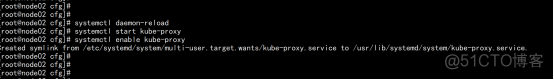

Start it and enable it at boot:

    systemctl daemon-reload
    systemctl start kube-proxy
    systemctl enable kube-proxy

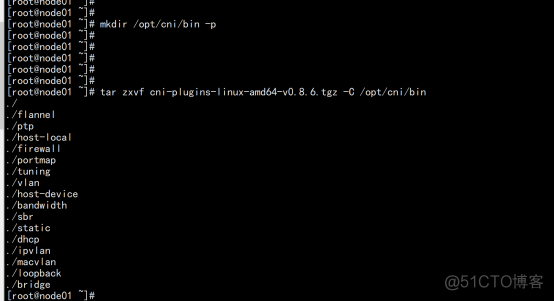

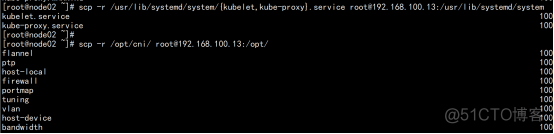

2.3.15.5 Deploy CNI

Deploy the CNI network. First prepare the CNI binaries.

Download: https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

Extract the package into the default working directory, then copy it to the worker node:

    mkdir /opt/cni/bin -p
    tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin
    scp -r /opt/cni/ root@<node02-IP>:/opt/

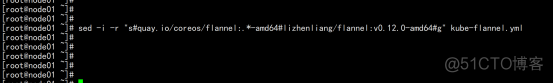

Deploy the CNI network:

    wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml

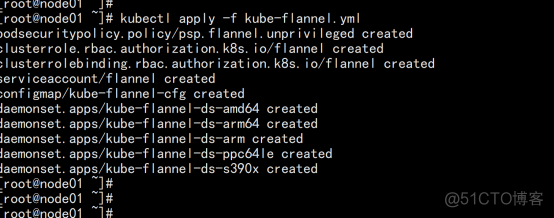

    kubectl apply -f kube-flannel.yml
    kubectl get pods -n kube-system

Once the network plugin is deployed, the Nodes become Ready:

    kubectl get node

2.3.16 Add a worker node

2.3.16.1 Deploy Docker first

Same as above (see 2.3.15.1).

2.3.16.2 Add the node

Add a worker node by copying the directories from node02.flyfish.cn:

    scp -r /opt/kubernetes root@<node03-IP>:/opt/
    scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@<node03-IP>:/usr/lib/systemd/system
    scp -r /opt/cni/ root@<node03-IP>:/opt/
    scp /opt/kubernetes/ssl/ca.pem root@<node03-IP>:/opt/kubernetes/ssl

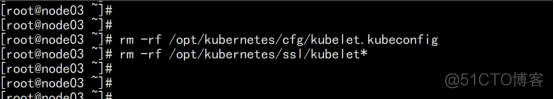

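2.3.16.3 Delete the generated files

On the new node, delete the kubelet certificate and kubeconfig files. They are generated automatically when a node's certificate request is approved, so they differ on every node:

    rm -rf /opt/kubernetes/cfg/kubelet.kubeconfig
    rm -rf /opt/kubernetes/ssl/kubelet*

2.3.16.4 Change the hostname

    vim /opt/kubernetes/cfg/kubelet.conf
    --hostname-override=node03.flyfish.cn
    vim /opt/kubernetes/cfg/kube-proxy-config.yml
    hostnameOverride: node03.flyfish.cn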
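
The two vim edits can also be done non-interactively with sed. The following is a minimal sketch that assumes kubelet.conf and kube-proxy-config.yml have exactly the layout generated earlier in this section. `set_node_name` is a hypothetical helper, and `sed -i -E` assumes GNU sed as shipped with CentOS 7.

```sh
# Hypothetical helper: set the node name in kubelet.conf and
# kube-proxy-config.yml (directory defaults to /opt/kubernetes/cfg).
set_node_name() {
  local name=$1 cfg_dir=${2:-/opt/kubernetes/cfg}
  # replace the value after --hostname-override= up to the next space
  sed -i -E "s/(--hostname-override=)[^ ]*/\1${name}/" "$cfg_dir/kubelet.conf"
  # replace the whole hostnameOverride: line in the YAML file
  sed -i -E "s/^(hostnameOverride:).*/\1 ${name}/" "$cfg_dir/kube-proxy-config.yml"
}

# Usage on node03:
#   set_node_name node03.flyfish.cn
```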

2.3.16.5 Start and enable at boot

    systemctl daemon-reload
    systemctl start kubelet
    systemctl enable kubelet
    systemctl start kube-proxy
    systemctl enable kube-proxy

2.3.16.6 Approve the node

On node01.flyfish.cn:

    kubectl get csr
    kubectl certificate approve node-csr-3XcOq5z1llZnhhowdBMjLgfRm6HOlvwPkj1oBVUyB-8
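
When several nodes join at once, you can pick the pending requests out of the `kubectl get csr` table instead of copying each name by hand. The sketch below assumes the CONDITION column is the last column of that output. `pending_csrs` is a hypothetical helper. Review the list before approving it, because approving a CSR grants a node identity.

```sh
# Sketch: print the NAME of every CSR whose last column (CONDITION) is
# "Pending", reading kubectl get csr table output from stdin.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master:
#   kubectl get csr | pending_csrs
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```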

2.3.16.7 Check the new node

    kubectl get node

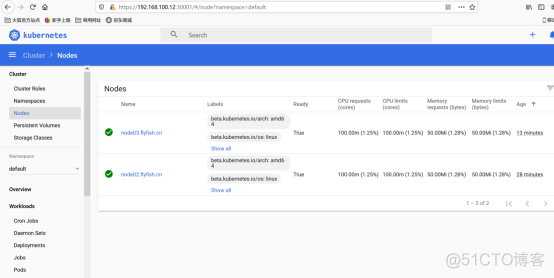

2.3.17 Deploy Dashboard and CoreDNS

2.3.17.1 Deploy Dashboard

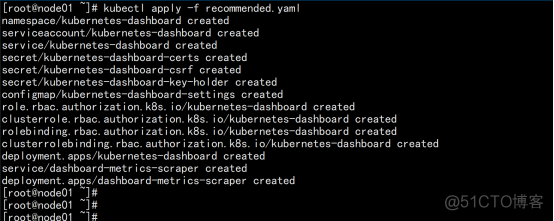

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

By default, Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally:

    vim recommended.yaml

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 30001
      type: NodePort
      selector:
        k8s-app: kubernetes-dashboard

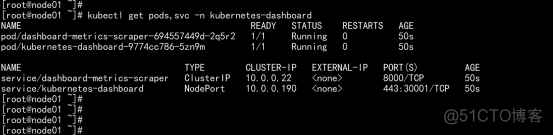

    kubectl apply -f recommended.yaml
    kubectl get pods,svc -n kubernetes-dashboard

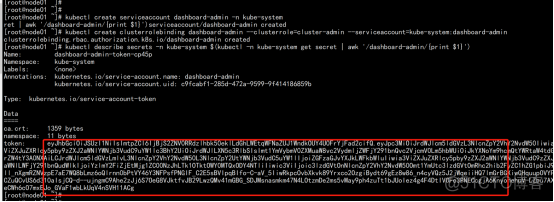

Create a service account, bind it to the default cluster-admin cluster role, and print its token:

    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

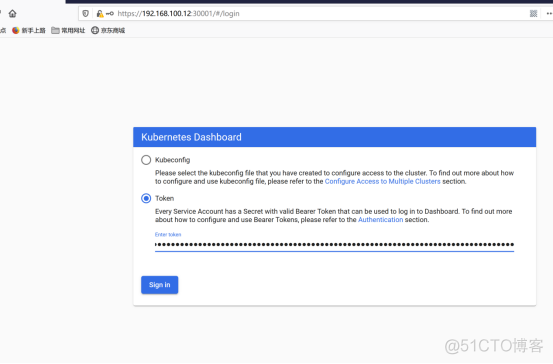

Open Firefox and go to:

https://192.168.100.12:30001

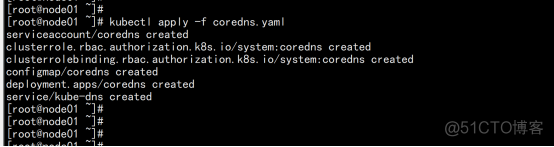

2.3.17.2 Deploy CoreDNS

CoreDNS resolves Service names inside the cluster.

    kubectl apply -f coredns.yaml

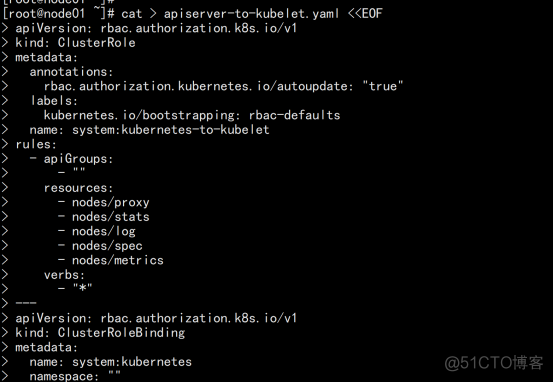

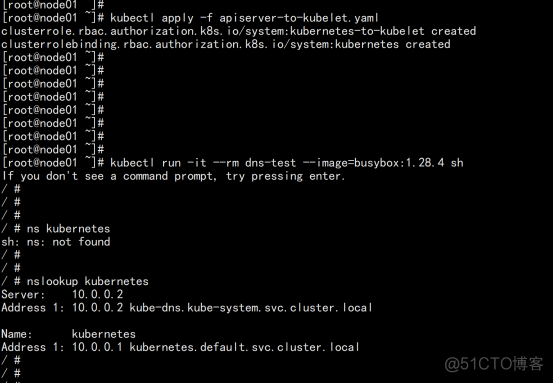

DNS resolution test:

    kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

Problem when entering the container: the apiserver is not allowed to access the kubelet, because the kubernetes user has not been granted the required RBAC permissions:

    error: unable to upgrade connection: Forbidden (user=kubernetes, verb=create, resource=nodes, subresource=proxy)

Create the RBAC rules that allow the apiserver to access the kubelet:

    cat > apiserver-to-kubelet.yaml << EOF
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:kubernetes-to-kubelet
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes/proxy
          - nodes/stats
          - nodes/log
          - nodes/spec
          - nodes/metrics
        verbs:
          - "*"
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:kubernetes
      namespace: ""
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:kubernetes-to-kubelet
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: User
        name: kubernetes
    EOF
    kubectl apply -f apiserver-to-kubelet.yaml

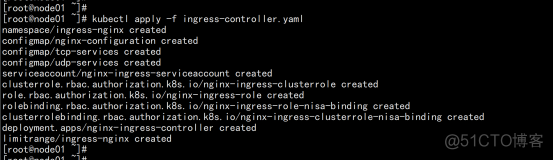

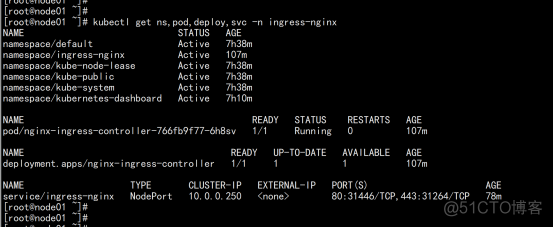

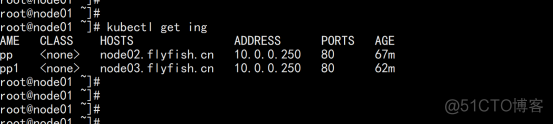

III: Ingress deployment

3.1 Ingress configuration

```bash
kubectl apply -f ingress-nginx.yaml
kubectl apply -f service-nodeport.yaml
kubectl get pod -n ingress-nginx
```

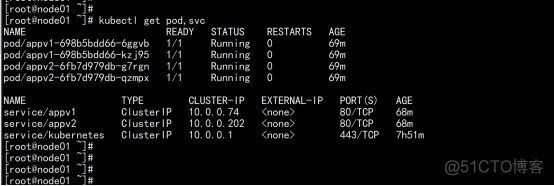

3.2 Ingress test

Deploy two HTTP applications, each as a Deployment:

```yaml
## Two HTTP applications deployed as Deployments
apiVersion: apps/v1
kind: Deployment
metadata:
  name: appv1
  labels:
    app: v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v1
  template:
    metadata:
      labels:
        app: v1
    spec:
      containers:
        - name: nginx
          image: wangyanglinux/myapp:v1
          ports:
            - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: appv2
  labels:
    app: v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v2
  template:
    metadata:
      labels:
        app: v2
    spec:
      containers:
        - name: nginx
          image: wangyanglinux/myapp:v2
          ports:
            - containerPort: 80
```

Then create a Service for each application:

```yaml
### One Service per application
apiVersion: v1
kind: Service
metadata:
  name: appv1
spec:
  selector:
    app: v1
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: appv2
spec:
  selector:
    app: v2
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

Deploy the Ingress rules that expose the applications externally by host name:

```yaml
#### Ingress access rules
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: app
spec:
  rules:
    - host: node02.flyfish.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: appv1
              servicePort: 80
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: app1
spec:
  rules:
    - host: node03.flyfish.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: appv2
              servicePort: 80
```
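The following minimal sketch (Python 3, hypothetical names) models how the ingress controller picks a backend: it matches the request's `Host` header against each rule, then chooses the longest matching path prefix. It is a simplification (no wildcard hosts, no path-type semantics), and the rule set below, which routes node02 to `appv1` and node03 to `appv2`, is hypothetical.

```python
def pick_backend(rules, host, path):
    """Return (serviceName, servicePort) for a request, or None.

    Simplified model: exact host match (port stripped, case-insensitive),
    then the longest matching path prefix wins. None means the request
    falls through to the controller's default backend (a 404 in ingress-nginx).
    """
    host = host.split(":")[0].lower()
    best = None
    for rule in rules:
        if rule["host"] != host:
            continue
        for p in rule["paths"]:
            prefix = p["path"]
            if path.startswith(prefix) and (best is None or len(prefix) > len(best[0])):
                best = (prefix, p["serviceName"], p["servicePort"])
    return None if best is None else (best[1], best[2])


# Hypothetical rule set mirroring the two Ingress objects.
RULES = [
    {"host": "node02.flyfish.cn",
     "paths": [{"path": "/", "serviceName": "appv1", "servicePort": 80}]},
    {"host": "node03.flyfish.cn",
     "paths": [{"path": "/", "serviceName": "appv2", "servicePort": 80}]},
]
```

To exercise the real rules from outside the cluster, send requests carrying the corresponding Host header to the ingress controller's NodePort, for example `curl -H "Host: node02.flyfish.cn" http://<node-ip>:<nodeport>/`.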

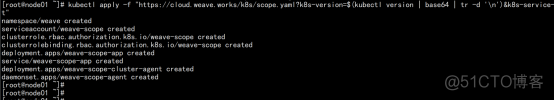

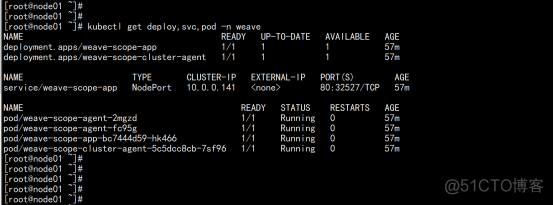

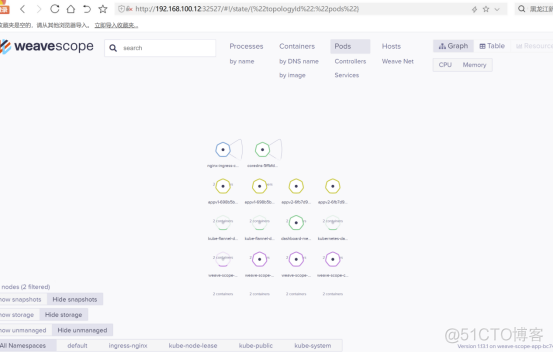

IV: Deploying the Weave Scope monitoring tool

4.1 Installation

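Weave Scope is a visual monitoring tool for Docker and Kubernetes. It provides a complete top-down view of the cluster infrastructure and applications, so you can monitor and troubleshoot distributed containerized applications in real time. Install it with its UI exposed through a NodePort Service:

```bash
kubectl apply -f "https://cloud.weave.works/k8s/scope.yaml?k8s-version=$(kubectl version | base64 | tr -d '\n')&k8s-service-type=NodePort"
```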
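The one-liner embeds the base64-encoded output of `kubectl version` in the query string so the server can tailor the manifest to the cluster version. The sketch below (Python 3, hypothetical function names) rebuilds that URL for inspection. Unlike the shell version, it percent-escapes the `+`, `/` and `=` characters of base64, which is the safer form for a query string.

```python
import base64
from urllib.parse import parse_qs, urlencode, urlparse

# Manifest endpoint taken from the command above.
SCOPE_BASE = "https://cloud.weave.works/k8s/scope.yaml"


def scope_manifest_url(kubectl_version_output: str,
                       service_type: str = "NodePort") -> str:
    """Rebuild the URL that the shell one-liner passes to `kubectl apply -f`."""
    # `base64 | tr -d '\n'` is plain base64 without line wrapping.
    encoded = base64.b64encode(kubectl_version_output.encode()).decode()
    # urlencode percent-escapes the '+', '/' and '=' of the base64 alphabet.
    query = urlencode({"k8s-version": encoded, "k8s-service-type": service_type})
    return f"{SCOPE_BASE}?{query}"


def decoded_version(url: str) -> str:
    """Inverse operation: recover the version text embedded in the URL."""
    query = parse_qs(urlparse(url).query)
    return base64.b64decode(query["k8s-version"][0]).decode()
```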

```bash
kubectl get deploy,svc,pod -n weave
```

4.2 Open the monitoring UI in a browser

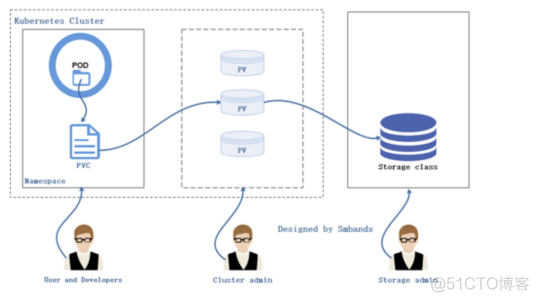

V: Deploying an NFS StorageClass on Kubernetes

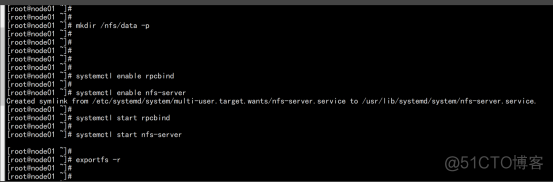

5.1 Configure the NFS server

Install and start the NFS server, and export `/nfs/data/` to all clients:

```bash
yum install -y nfs-utils
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
mkdir -p /nfs/data
systemctl enable rpcbind
systemctl enable nfs-server
systemctl start rpcbind
systemctl start nfs-server
exportfs -r
```
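The export line combines a path, a client specification (`*` means any host) and an option list. The sketch below (Python 3, hypothetical names) parses such a line and records what each option used here means according to `exports(5)`; it handles only the simple `path client(options)` form shown above.

```python
def parse_exports_line(line):
    """Split one /etc/exports entry into (path, {client: [options]})."""
    line = line.split("#", 1)[0].strip()  # drop a trailing comment
    path, *clients = line.split()
    result = {}
    for entry in clients:
        host, _, opts = entry.partition("(")
        result[host] = opts.rstrip(")").split(",") if opts else []
    return path, result


# Meaning of the options used above (see exports(5)).
OPTION_MEANING = {
    "rw": "clients may read and write",
    "sync": "the server commits writes to stable storage before replying",
    "no_root_squash": "root on the client keeps root privileges on the share",
    "insecure": "requests from client source ports above 1023 are accepted",
}
```

`no_root_squash` matters for the provisioner later on: it creates and removes directories on the share as root.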

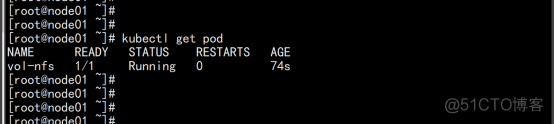

Test mounting NFS directly in a Pod (run on the master node). Create `nginx.yaml` under `/opt`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vol-nfs
  namespace: default
spec:
  volumes:
    - name: html
      nfs:
        path: /nfs/data          # 1000G
        server: 192.168.100.11   # your own NFS server address
  containers:
    - name: myapp
      image: nginx
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
```

Apply it, then write an index page into the export; it is served by nginx in the Pod:

```bash
kubectl apply -f nginx.yaml
cd /nfs/data/
echo " 11111" >> index.html
```

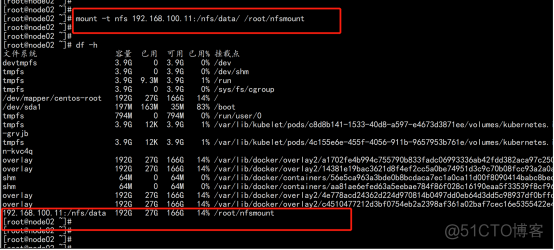

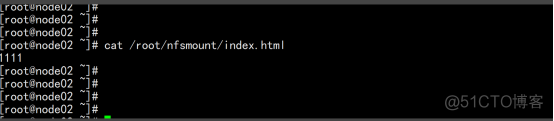

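Install the client tools and list the server's exports (run on a worker node, e.g. node02.flyfish.cn):

```bash
yum install -y nfs-utils
showmount -e 192.168.100.11
```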

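Create a mount point and mount the server's `/nfs/data/` on the client's `/root/nfsmount`, so both directories show the same content (run on the worker node):

```bash
mkdir /root/nfsmount
mount -t nfs 192.168.100.11:/nfs/data/ /root/nfsmount
```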

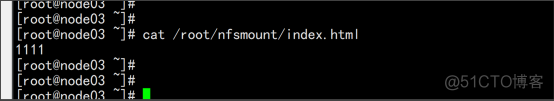

5.2 Set up dynamic provisioning with a StorageClass

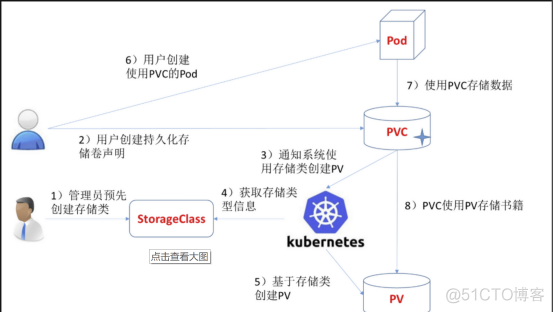

Create `nfs-rbac.yaml` with the provisioner's ServiceAccount, RBAC rules and Deployment:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get", "create", "list", "watch", "update"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: nfs-client-provisioner
          image: lizhenliang/nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: storage.pri/nfs
            - name: NFS_SERVER
              value: 192.168.100.11
            - name: NFS_PATH
              value: /nfs/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.100.11
            path: /nfs/data
```

```bash
kubectl apply -f nfs-rbac.yaml
kubectl get pod
```

#扩展"reclaim policy"有三种方式:Retain、Recycle、Deleted。 Retain #保护被PVC释放的PV及其上数据,并将PV状态改成"released",不将被其它PVC绑定。集群管理员手动通过如下步骤释放存储资源: 手动删除PV,但与其相关的后端存储资源如(AWS EBS, GCE PD, Azure Disk, or Cinder volume)仍然存在。 手动清空后端存储volume上的数据。 手动删除后端存储volume,或者重复使用后端volume,为其创建新的PV。 Delete 删除被PVC释放的PV及其后端存储volume。对于动态PV其"reclaim policy"继承自其"storage class", 默认是Delete。集群管理员负责将"storage class"的"reclaim policy"设置成用户期望的形式,否则需要用 户手动为创建后的动态PV编辑"reclaim policy" Recycle 保留PV,但清空其上数据,已废弃 |

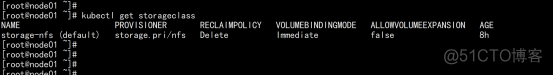

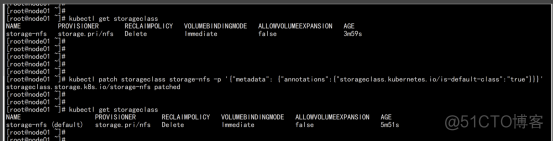

```bash
kubectl get storageclass
```

Make `storage-nfs` the default StorageClass:

```bash
kubectl patch storageclass storage-nfs -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```
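The patch only sets the annotation `storageclass.kubernetes.io/is-default-class: "true"`. As a small sketch (Python 3, hypothetical function names), the code below builds that patch body and lists which classes are marked as default in the output of `kubectl get storageclass -o json`; normally exactly one class should be.

```python
import json

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def default_storage_classes(sc_list_json: str) -> list:
    """Names of StorageClasses marked as default in `kubectl get sc -o json`."""
    items = json.loads(sc_list_json).get("items", [])
    return [
        sc["metadata"]["name"]
        for sc in items
        if (sc["metadata"].get("annotations") or {}).get(DEFAULT_ANNOTATION) == "true"
    ]


def default_class_patch(is_default: bool = True) -> str:
    """Merge-patch body for `kubectl patch storageclass <name> -p <body>`."""
    value = "true" if is_default else "false"
    return json.dumps({"metadata": {"annotations": {DEFAULT_ANNOTATION: value}}})
```

To move the default to another class, patch the old class with `default_class_patch(False)` and the new one with `default_class_patch(True)`.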

5.3 Verify NFS dynamic provisioning

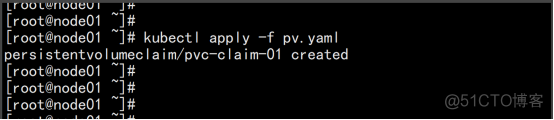

Create a PVC in `pvc.yaml`:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-claim-01
  # annotations:
  #   volume.beta.kubernetes.io/storage-class: "storage-nfs"
spec:
  storageClassName: storage-nfs   # must match the StorageClass name exactly
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```

```bash
kubectl apply -f pvc.yaml
```
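The request `storage: 1Mi` uses a binary suffix (Mi = 2^20 bytes), whereas `M` would mean 10^6 bytes. The simplified parser below (Python 3, hypothetical name; it does not handle the milli suffix `m`) converts such quantities to bytes. Since an NFS directory has no size quota, the nfs-client provisioner most likely does not enforce the requested size, so the value mainly matters for PVC and PV matching.

```python
from decimal import Decimal

# Binary (power-of-two) and decimal (power-of-ten) quantity suffixes.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def quantity_to_bytes(quantity: str) -> int:
    """Convert a storage quantity such as '1Mi' or '20Gi' to bytes (simplified)."""
    # Two-letter binary suffixes are checked before one-letter decimal ones.
    for table in (BINARY, DECIMAL):
        for suffix, factor in table.items():
            if quantity.endswith(suffix):
                return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))
```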

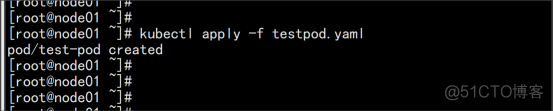

Use the PVC in a test Pod (`testpod.yaml`):

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: busybox
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: pvc-claim-01
```

```bash
kubectl apply -f testpod.yaml
```
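When the Pod completes, the file `SUCCESS` should appear in a new sub-directory of the export on the NFS server. The sketch below (Python 3, hypothetical function name) lists such directories. It assumes the provisioner creates one directory per PV directly under `/nfs/data`, named `<namespace>-<pvcName>-<pvName>`, which is the nfs-client provisioner's usual convention but is not shown in this document.

```python
from pathlib import Path


def find_success_markers(export_root: str = "/nfs/data") -> list:
    """Names of provisioned directories under the NFS export that contain SUCCESS."""
    root = Path(export_root)
    # One sub-directory per provisioned PV, directly under the export root.
    return sorted(marker.parent.name for marker in root.glob("*/SUCCESS"))


if __name__ == "__main__":
    for name in find_success_markers():
        print(name)
```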

5.4 Configure metrics-server

5.4.1 Deploy metrics-server v0.3.6

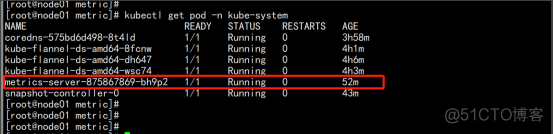

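Download `components.yaml`:

```bash
wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml
```

Download the `k8s.gcr.io/metrics-server-amd64:v0.3.6` image and import it on the hosts. `docker load -i` expects an image tarball (as written by `docker save`), not an image name, so the file name below is a placeholder:

```bash
docker load -i metrics-server-amd64-v0.3.6.tar   # placeholder name of the exported image tarball
kubectl apply -f components.yaml
kubectl get pod -n kube-system
```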

5.4.2 metrics-server errors

Running `kubectl top node` fails with:

```
Error from server (ServiceUnavailable): the server is currently unable to handle the request (get pods.metrics.k8s.io)
```

Reference: https://blog.csdn.net/zorsea/article/details/105037533

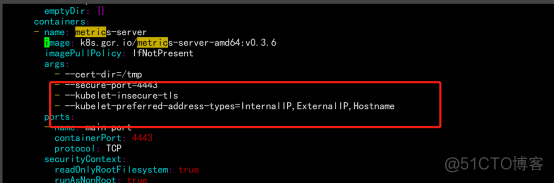

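Edit `components.yaml` and append these arguments to the metrics-server container's existing `args` list:

```yaml
args:
  # ...existing arguments...
  - --kubelet-insecure-tls
  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
```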
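If you prefer to patch the manifest programmatically, the sketch below (Python 3, hypothetical function name) appends the two flags to the `metrics-server` container's `args` without duplicating them, operating on the Deployment already loaded as a dict. `--kubelet-insecure-tls` skips verification of the kubelets' serving certificates, and `--kubelet-preferred-address-types` makes metrics-server contact kubelets by IP first rather than by a host name that may not resolve inside the Pod.

```python
EXTRA_ARGS = [
    "--kubelet-insecure-tls",
    "--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname",
]


def add_metrics_server_args(deployment: dict, extra=EXTRA_ARGS) -> dict:
    """Append missing flags to the metrics-server container (idempotent)."""
    for container in deployment["spec"]["template"]["spec"]["containers"]:
        if container["name"] == "metrics-server":
            args = container.setdefault("args", [])
            for flag in extra:
                if flag not in args:
                    args.append(flag)
    return deployment
```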

5.4.3 Generate the certificate and authorization

Generate a client certificate for metrics-server. In `TLS/k8s/`, create `metrics-server-csr.json`:

```json
{
  "CN": "system:metrics-server",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}
```

Sign it with the cluster CA, then copy the resulting `metrics-server.pem` and `metrics-server-key.pem` to `/opt/kubernetes/ssl/`:

```bash
cd TLS/k8s/
cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem -ca-key=/opt/kubernetes/ssl/ca-key.pem -config=ca-config.json -profile=kubernetes metrics-server-csr.json | cfssljson -bare metrics-server
cp metrics-server.pem metrics-server-key.pem /opt/kubernetes/ssl/
```

Configure RBAC authorization for metrics-server:

```bash
cat > auth-metrics-server.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:auth-metrics-server-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-metrics-server-reader
subjects:
  - kind: User
    name: system:metrics-server
    namespace: kube-system
EOF
kubectl apply -f auth-metrics-server.yaml
```

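Add the metrics-server client certificate to the kube-apiserver configuration file in `/opt/kubernetes/cfg/`, then restart kube-apiserver so the flags take effect:

```
--proxy-client-cert-file=/opt/kubernetes/ssl/metrics-server.pem
--proxy-client-key-file=/opt/kubernetes/ssl/metrics-server-key.pem
```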

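Bind the `system:anonymous` user to `cluster-admin` (this gives unauthenticated requests full administrative rights over the cluster):

```bash
kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous
```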

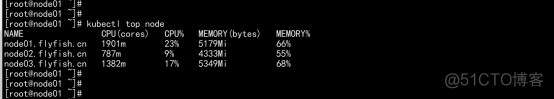

```bash
kubectl top node
```

VI: Deploying and configuring KubeSphere

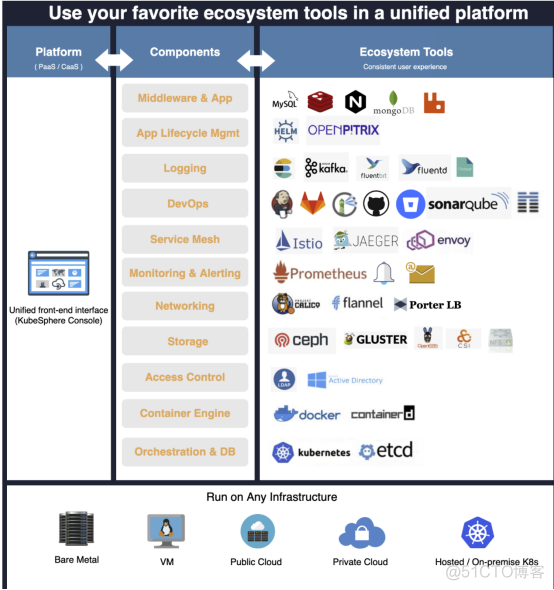

6.1 KubeSphere 3.0 architecture

6.2 Prerequisites

1. The Kubernetes cluster version must be 1.15.x, 1.16.x, 1.17.x or 1.18.x.

4. A StorageClass must exist (installed in the previous section).
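A quick way to check the version requirement is to read `serverVersion.gitVersion` from `kubectl version -o json`. The helper below (Python 3, hypothetical name) is a minimal sketch that accepts only the 1.15 to 1.18 minor versions listed above.

```python
import re

SUPPORTED_MINORS = {15, 16, 17, 18}  # KubeSphere 3.0 requirement listed above


def kubesphere30_supports(git_version: str) -> bool:
    """True if a gitVersion such as 'v1.18.0' is in the supported range."""
    match = re.match(r"v?(\d+)\.(\d+)\.", git_version)
    if not match:
        raise ValueError(f"unrecognised version string: {git_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    return major == 1 and minor in SUPPORTED_MINORS
```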

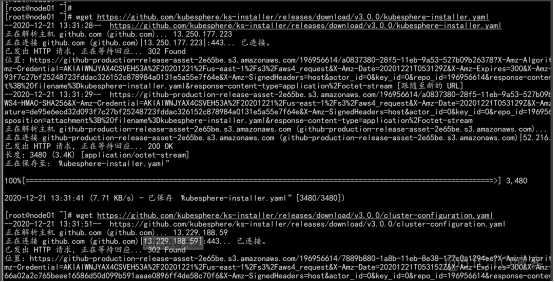

Download the installation YAML files (see https://kubesphere.com.cn/docs/quick-start/minimal-kubesphere-on-k8s/):

```bash
wget https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yaml
wget https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml
```

Edit `cluster-configuration.yaml`:

```yaml
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.0.0
spec:
  persistence:
    storageClass: ""        # If there is not a default StorageClass in your cluster, you need to specify an existing StorageClass here.
  authentication:
    jwtSecret: ""           # Keep the jwtSecret consistent with the host cluster. Retrieve it by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the host cluster.
  etcd:
    monitoring: true        # Whether to enable etcd monitoring dashboard installation. You have to create a secret for etcd before you enable it.
    endpointIps: 192.168.100.11  # etcd cluster EndpointIps, it can be a bunch of IPs here.
    port: 2379              # etcd port
    tlsEnable: true
  common:
    mysqlVolumeSize: 20Gi   # MySQL PVC size.
    minioVolumeSize: 20Gi   # Minio PVC size.
    etcdVolumeSize: 20Gi    # etcd PVC size.
    openldapVolumeSize: 2Gi # openldap PVC size.
    redisVolumSize: 2Gi     # Redis PVC size.
    es:                     # Storage backend for logging, events and auditing.
      # elasticsearchMasterReplicas: 1   # total number of master nodes, it's not allowed to use even number
      # elasticsearchDataReplicas: 1     # total number of data nodes.
      elasticsearchMasterVolumeSize: 4Gi # Volume size of Elasticsearch master nodes.
      elasticsearchDataVolumeSize: 20Gi  # Volume size of Elasticsearch data nodes.
      logMaxAge: 7                       # Log retention time in built-in Elasticsearch, it is 7 days by default.
      elkPrefix: logstash                # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
  console:
    enableMultiLogin: true  # enable/disable multiple sign-on, it allows an account to be used by different users at the same time.
    port: 30880
  alerting:                 # (CPU: 0.3 Core, Memory: 300 MiB) Whether to install KubeSphere alerting system. It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: true
  auditing:                 # Whether to install KubeSphere audit log system. It provides a security-relevant chronological set of records, recording the sequence of activities happened in platform, initiated by different tenants.
    enabled: true
  devops:                   # (CPU: 0.47 Core, Memory: 8.6 G) Whether to install KubeSphere DevOps System. It provides out-of-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
    enabled: true
    jenkinsMemoryLim: 2Gi       # Jenkins memory limit.
    jenkinsMemoryReq: 1500Mi    # Jenkins memory request.
    jenkinsVolumeSize: 8Gi      # Jenkins volume size.
    jenkinsJavaOpts_Xms: 512m   # The following three fields are JVM parameters.
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:                   # Whether to install KubeSphere events system. It provides a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: true
    ruler:
      enabled: true
      replicas: 2
  logging:                  # (CPU: 57 m, Memory: 2.76 G) Whether to install KubeSphere logging system. Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: true
    logsidecarReplicas: 2
  metrics_server:           # (CPU: 56 m, Memory: 44.35 MiB) Whether to install metrics-server. It enables HPA (Horizontal Pod Autoscaler).
    enabled: false
  monitoring:
    # prometheusReplicas: 1          # Prometheus replicas are responsible for monitoring different segments of data source and provide high availability as well.
    prometheusMemoryRequest: 400Mi   # Prometheus request memory.
    prometheusVolumeSize: 20Gi       # Prometheus PVC size.
    # alertmanagerReplicas: 1        # AlertManager Replicas.
  multicluster:
    clusterRole: none       # host | member | none  # You can install a solo cluster, or specify it as the role of host or member cluster.
  networkpolicy:            # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
    # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
    enabled: true
  notification:             # Email Notification support for the legacy alerting system, should be enabled/disabled together with the above alerting option.
    enabled: true
  openpitrix:               # (2 Core, 3.6 G) Whether to install KubeSphere Application Store. It provides an application store for Helm-based applications, and offer application lifecycle management.
    enabled: true
  servicemesh:              # (0.3 Core, 300 MiB) Whether to install KubeSphere Service Mesh (Istio-based). It provides fine-grained traffic management, observability and tracing, and offer visualization for traffic topology.
    enabled: true
```

```bash
kubectl apply -f kubesphere-installer.yaml
kubectl apply -f cluster-configuration.yaml
```

Follow the installation progress:

```bash
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```

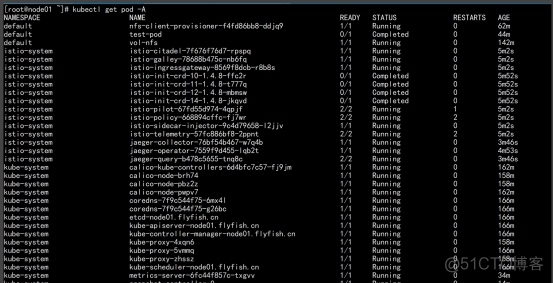

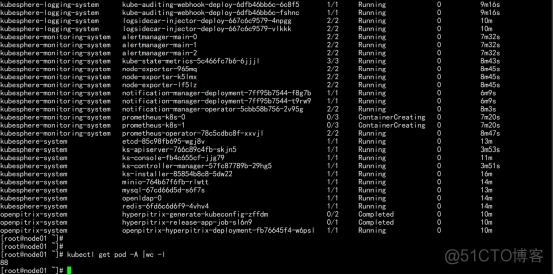

```bash
kubectl get pod -A
```

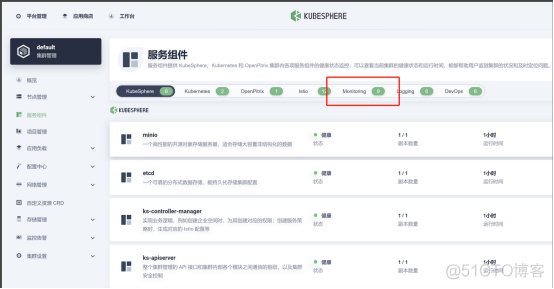

6.3 Troubleshooting Prometheus monitoring

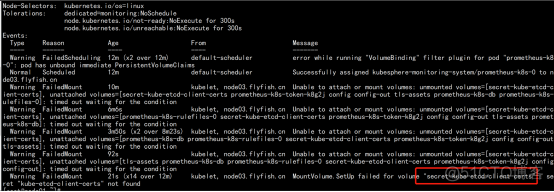

The `prometheus-k8s` Pods stay stuck in `ContainerCreating`:

```
kubesphere-monitoring-system   prometheus-k8s-0   0/3   ContainerCreating   0   7m20s
kubesphere-monitoring-system   prometheus-k8s-1   0/3   ContainerCreating   0   7m20s
```

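Describing the Pod shows that the `kube-etcd-client-certs` secret cannot be found. It is required because etcd monitoring is enabled in `cluster-configuration.yaml`:

```bash
kubectl describe pod prometheus-k8s-0 -n kubesphere-monitoring-system
```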

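Create the secret from the etcd CA certificate plus a client certificate and its private key. The certificate and key paths below assume the etcd certificate pair `server.pem`/`server-key.pem` in `/opt/etcd/ssl/`; adjust them to a pair that etcd accepts for client authentication:

```bash
kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
  --from-file=etcd-client-ca.crt=/opt/etcd/ssl/ca.pem \
  --from-file=etcd-client.crt=/opt/etcd/ssl/server.pem \
  --from-file=etcd-client.key=/opt/etcd/ssl/server-key.pem
```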

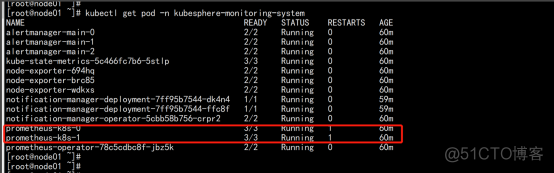

```bash
kubectl get pod -n kubesphere-monitoring-system
```

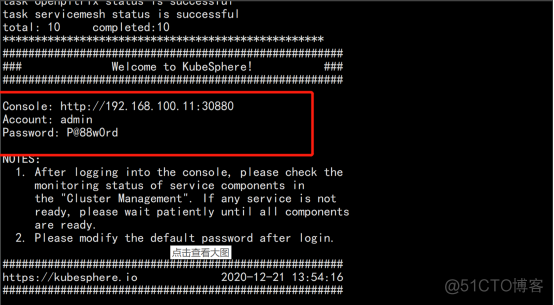

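Follow the installer log again; when installation finishes it prints the console address and login account, and you can then open that page in the browser:

```bash
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```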
