
source link: https://finance.yahoo.com/news/deepminds-ai-perform-over-600-121528485.html


DeepMind's new AI can perform over 600 tasks, from playing games to controlling robots

The ultimate achievement to some in the AI industry is creating a system with artificial general intelligence (AGI), or the ability to understand and learn any task that a human can. Long relegated to the domain of science fiction, it's been suggested that AGI would bring about systems with the ability to reason, plan, learn, represent knowledge, and communicate in natural language.

Not every expert is convinced that AGI is a realistic goal -- or even possible. But it could be argued that DeepMind, the Alphabet-backed research lab, took a step toward it this week with the release of an AI system called Gato.

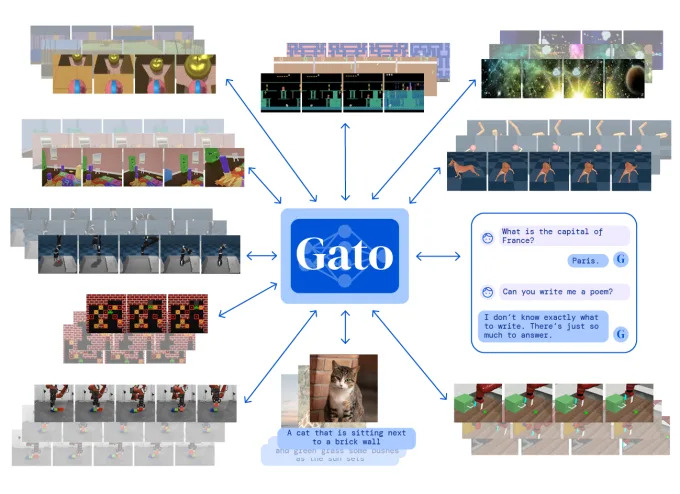

Gato is what DeepMind describes as a "general-purpose" system, a system that can be taught to perform many different types of tasks. Researchers at DeepMind trained Gato to complete 604 of them, to be exact, including captioning images, engaging in dialogue, stacking blocks with a real robot arm, and playing Atari games.

Jack Hessel, a research scientist at the Allen Institute for AI, points out that a single AI system that can solve many tasks isn't new. For example, Google recently began using a system in Google Search called multitask unified model, or MUM, which can handle text, images, and videos to perform tasks from finding interlingual variations in the spelling of a word to relating a search query to an image. But what is potentially newer, here, Hessel says, is the diversity of the tasks that are tackled and the training method.

DeepMind's Gato architecture.

"We've seen evidence previously that single models can handle surprisingly diverse sets of inputs," Hessel told TechCrunch via email. "In my view, the core question when it comes to multitask learning ... is whether the tasks complement each other or not. You could envision a more boring case if the model implicitly separates the tasks before solving them, e.g., 'If I detect task A as an input, I will use subnetwork A. If I instead detect task B, I will use different subnetwork B.' For that null hypothesis, similar performance could be attained by training A and B separately, which is underwhelming. In contrast, if training A and B jointly leads to improvements for either (or both!), then things are more exciting."
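Hessel's "boring case" can be made concrete with a toy sketch. The Python below is purely illustrative and is not Gato's architecture: `subnetwork_a`, `subnetwork_b`, and `routed_model` are hypothetical names, and the arithmetic inside each function is a stand-in for an independently trained model. It shows a "multitask" model that only detects the task and dispatches, so its outputs match those of the separate models exactly and joint training adds nothing.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for two models trained separately on tasks A and B.
def subnetwork_a(x: float) -> float:
    return 2.0 * x  # placeholder for "solves task A"


def subnetwork_b(x: float) -> float:
    return x + 10.0  # placeholder for "solves task B"


SUBNETWORKS: Dict[str, Callable[[float], float]] = {
    "A": subnetwork_a,
    "B": subnetwork_b,
}


def routed_model(task: str, x: float) -> float:
    """The null hypothesis: detect the task, then hand off to its own subnetwork."""
    return SUBNETWORKS[task](x)


# The routed model is indistinguishable from running the separate models,
# which is why this outcome would be "underwhelming" in Hessel's framing.
same_as_separate = all(
    routed_model(task, 3.0) == net(3.0) for task, net in SUBNETWORKS.items()
)
```

The more exciting case Hessel describes is the opposite one, where parameters are shared across tasks and training on A measurably improves performance on B (or vice versa), something a pure dispatcher like this cannot exhibit.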
