LoRA: Low-Rank Adaptation of Large Language Models (A Brief Read)

source link: https://finisky.github.io/lora/



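Previously we discussed Adapters and Prompting, both of which are lightweight training methods (so-called lightweight fine-tuning). Today we look at another lightweight way to train large language models:

LoRA: Low-Rank Adaptation of Large Language Models.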

First, the problem with fine-tuning large language models:

An important paradigm of natural language processing consists of large-scale pretraining on general domain data and adaptation to particular tasks or domains. As we pre-train larger models, full fine-tuning, which retrains all model parameters, becomes less feasible. Using GPT-3 175B as an example – deploying independent instances of fine-tuned models, each with 175B parameters, is prohibitively expensive.

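Problems with Existing Approaches

Several approaches already address the cost of fine-tuning large language models, such as partial fine-tuning, adapters, and prompting. These methods have the following problems:

- Adapters introduce extra inference latency (because they add layers)

- Prefix-tuning is relatively hard to train

- Model performance falls short of full fine-tuning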

Adapters Introduce Inference Latency

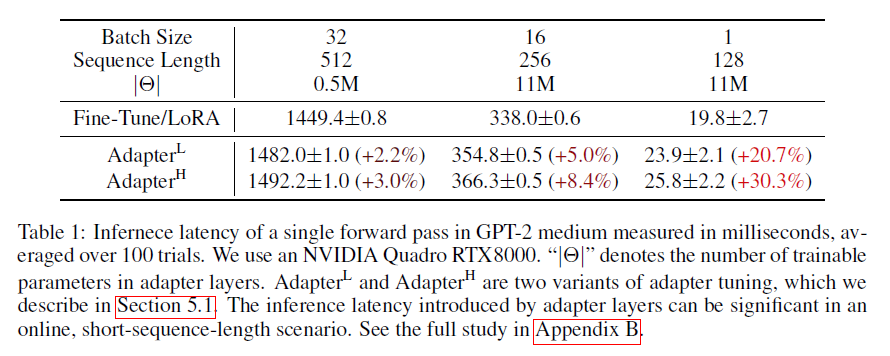

Clearly, adding layers to a model increases inference time:

While one can reduce the overall latency by pruning layers or exploiting multi-task settings, there is no direct ways to bypass the extra compute in adapter layers.

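As the figure above shows, in an online setting with batch size 1 and short sequence lengths, the relative change in inference latency is more pronounced. Personally, though, I think the difference in absolute latency is small. :-)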

Prefix-Tuning Is Hard to Train

Compared with prefix-tuning, which is hard to train, LoRA is easier to train:

We observe that prefix tuning is difficult to optimize and that its performance changes non-monotonically in trainable parameters, confirming similar observations in the original paper.

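Model Performance Falls Short of Full Fine-Tuning

Reserving part of the sequence for adaptation shortens the sequence length available for the downstream task, which hurts model performance to some extent: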

More fundamentally, reserving a part of the sequence length for adaptation necessarily reduces the sequence length available to process a downstream task, which we suspect makes tuning the prompt less performant compared to other methods.

First, the motivation behind LoRA:

We take inspiration from Li et al. (2018a); Aghajanyan et al. (2020) which show that the learned over-parametrized models in fact reside on a low intrinsic dimension.

We hypothesize that the change in weights during model adaptation also has a low “intrinsic rank”, leading to our proposed Low-Rank Adaptation (LoRA) approach.

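Although the model has a huge number of parameters, it mainly relies on a low intrinsic dimension. Adaptation should rely on it as well, which is why the authors propose Low-Rank Adaptation (LoRA).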

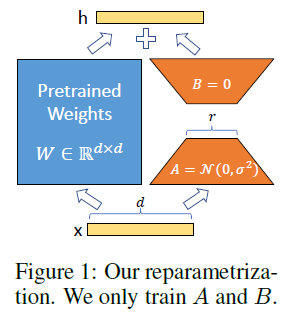

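The idea behind LoRA is simple: add a bypass alongside the original pretrained language model (PLM) that projects down and then back up, in order to model the so-called intrinsic rank. During training, the PLM parameters are frozen and only the down-projection matrix A and the up-projection matrix B are trained. The model's input and output dimensions stay the same, and the output of BA is added to that of the PLM weights. A is initialized from a random Gaussian and B is initialized to zero, so the bypass BA is still the zero matrix when training starts.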
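The bypass described above can be sketched in a few lines of NumPy. This is only a minimal illustration under assumed toy sizes, not the paper's implementation: the function name `lora_forward`, the dimensions, and the Gaussian scale are all made up for the example, and the constant scaling factor that the paper applies to the bypass is left out for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, r = 8, 6, 2  # toy sizes (assumed); in practice r is far smaller than d and k

W0 = rng.normal(size=(d, k))             # frozen pretrained weight, never updated
A = rng.normal(scale=0.01, size=(r, k))  # down-projection, random Gaussian init
B = np.zeros((d, r))                     # up-projection, zero init


def lora_forward(x, W0, A, B):
    """Frozen path plus low-rank bypass: W0 x + B (A x)."""
    return W0 @ x + B @ (A @ x)


x = rng.normal(size=(k,))
h_start = lora_forward(x, W0, A, B)  # output at the start of training
frozen_out = W0 @ x                  # output of the pretrained layer alone
```

Because B starts at zero, `h_start` matches `frozen_out`, so training begins exactly from the pretrained model's behavior.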

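Concretely, let the pretrained weight matrix be $W_0 \in \mathbb{R}^{d \times k}$. Its update can be written as:

$$W_0 + \Delta W = W_0 + BA, \quad B \in \mathbb{R}^{d \times r}, \quad A \in \mathbb{R}^{r \times k}$$

where the rank $r \ll \min(d, k)$.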
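To get a feel for what a small r buys, here is a rough parameter count for a single weight matrix. The helper names and the concrete sizes (d = k = 12288, r = 4) are assumptions chosen for illustration; the formulas follow directly from the shapes of the update matrix, B, and A.

```python
def full_update_params(d, k):
    """Trainable entries when the whole d x k update matrix is learned."""
    return d * k


def lora_update_params(d, k, r):
    """Trainable entries in B (d x r) plus A (r x k)."""
    return r * (d + k)


d = k = 12288  # assumed example size for one square weight matrix
r = 4          # assumed LoRA rank

full = full_update_params(d, k)
lora = lora_update_params(d, k, r)
ratio = full // lora

print(full, lora, ratio)  # 150994944 98304 1536
```

With these assumed sizes, the low-rank update trains roughly 1/1536 of the parameters of a full update to the same matrix.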

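The idea is somewhat similar to a residual connection, with the bypass update used to approximate full fine-tuning. Full fine-tuning can also be viewed as a special case of LoRA, reached when r is raised to the rank of the pretrained weight matrix (at most min(d, k)):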

This means that when applying LoRA to all weight matrices and training all biases, we roughly recover the expressiveness of full fine-tuning by setting the LoRA rank r to the rank of the pre-trained weight matrices.

In other words, as we increase the number of trainable parameters, training LoRA roughly converges to training the original model, while adapter-based methods converges to an MLP and prefix-based methods to a model that cannot take long input sequences.
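One way to see why a large enough r recovers an arbitrary update is through the compact SVD. The derivation below is a standard linear-algebra argument added for illustration, not something taken from the paper; the symbols $\rho$, $U$, $\Sigma$, and $V$ are introduced here.

```latex
% Any update \Delta W \in \mathbb{R}^{d \times k} of rank \rho has a compact SVD
\Delta W = U \Sigma V^{\top},
\qquad U \in \mathbb{R}^{d \times \rho},\;
\Sigma \in \mathbb{R}^{\rho \times \rho},\;
V \in \mathbb{R}^{k \times \rho}.

% Choosing r = \rho and
B = U \Sigma \in \mathbb{R}^{d \times r},
\qquad A = V^{\top} \in \mathbb{R}^{r \times k}

% gives exactly
BA = U \Sigma V^{\top} = \Delta W .

% Since \rho \le \min(d, k), setting r = \min(d, k) lets BA represent any \Delta W.
```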

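LoRA also introduces almost no additional inference latency: before deployment one only needs to compute $W = W_0 + BA$ once and use the merged matrix in place of $W_0$.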
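The merge can be checked numerically. The sketch below uses assumed toy sizes and random matrices standing in for a trained A and B; it only demonstrates that a single merged matrix gives the same output as running the frozen path and the bypass separately.

```python
import numpy as np

rng = np.random.default_rng(1)
d, k, r = 8, 6, 2  # toy sizes (assumed)

W0 = rng.normal(size=(d, k))  # frozen pretrained weight
A = rng.normal(size=(r, k))   # stands in for a trained down-projection
B = rng.normal(size=(d, r))   # stands in for a trained (nonzero) up-projection

# Merge once before deployment; the result has the same shape as W0.
W_merged = W0 + B @ A

x = rng.normal(size=(k,))
y_bypass = W0 @ x + B @ (A @ x)  # two paths, as during training
y_merged = W_merged @ x          # one matmul, as at inference time
```

Since `W_merged` has the same shape as `W0`, the deployed layer does the same amount of work as the original one, which is where the "almost no extra latency" claim comes from.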

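Combining LoRA with the Transformer is also simple: a bypass is added only to the weight matrices used in the QKV attention computation, and the MLP modules are left untouched: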

We limit our study to only adapting the attention weights for downstream tasks and freeze the MLP modules (so they are not trained in downstream tasks) both for simplicity and parameter-efficiency.

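To summarize: building on the intrinsic low-rank property of large models, LoRA adds bypass matrices to approximate full fine-tuning, making it a simple and effective way to achieve lightweight fine-tuning.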
