The Scoop: Inside the Longest Atlassian Outage of All Time

source link: https://newsletter.pragmaticengineer.com/p/scoop-atlassian?s=r

Hundreds of companies have no access to JIRA, Confluence and OpsGenie. What can engineering teams learn from the poor handling of this outage?

👋 Hi, this is Gergely with a bonus, free issue of the Pragmatic Engineer Newsletter. If you’re not a full subscriber yet, you missed the deep-dive on Amazon’s engineering culture, one on Retaining software engineers and EMs, and a few others. Subscribe to get this newsletter every week 👇

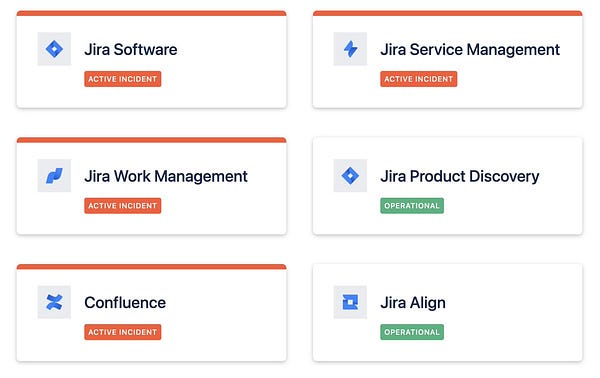

We are in the middle of the longest outage Atlassian has ever had. Close to 400 companies and anywhere from 50,000 to 800,000 users have had no access to JIRA, Confluence, OpsGenie, JIRA Status page, and other Atlassian Cloud services.

The outage is on its 9th day, having started on Monday, 4th of April. Atlassian estimates many impacted customers will be unable to access their services for another two weeks. At the time of writing, 45% of companies have seen their access restored. The impacted companies I talked with ranged from 150 seats to 4,000 seats.

For most of this outage, Atlassian has gone silent in communications across their main channels such as Twitter or the community forums. It took until Day 9 for executives at the company to acknowledge the outage.

While the company stayed silent, outage news started trending in niche communities like Hacker News and Reddit. In these forums, people tried to guess the causes of the outage, wondered why there was full radio silence, and many took to mocking the company for how it was handling the situation.

Atlassian did no better at communicating with customers during this time. Impacted companies received templated emails and no answers to their questions. After I tweeted about this outage, several Atlassian customers turned to me to vent about the situation, hoping I could offer more details. Customers said the company’s statements made it seem as if they had received support when, in fact, they had not. Several customers hoped I could help get the attention of the company, which had not given them any details beyond telling them to wait weeks until their data is restored.

Finally, I managed to get the attention many impacted Atlassian customers hoped for. Eight days into the outage, Atlassian issued the first statement from an executive. This statement was from Atlassian CTO Sri Viswanath and was also sent as a response to one of my tweets sharing a customer complaint.

In this issue, we cover:

What happened? A timeline of events.

The cause of the outage. What we know so far.

What Atlassian customers are saying. How did they observe the outage? What business impact did it mean for them? Will they stay Atlassian customers?

The impact of the outage on Atlassian’s business. The outage comes at a critical time when Atlassian starts to retire its Server product - which was immune to this outage - in favor of onboarding customers to its Cloud offering, which was advertised as offering higher reliability. Will customers trust the Atlassian Cloud after this lengthy incident? Which competitors benefitted from Atlassian's fumbling and why?

Learnings from this outage. What can engineering teams take from this incident? Both as customers to Atlassian, or as teams offering Cloud products to customers.

My take. I have been following this outage for a while and offer my summary.

What Happened

Day 1 - Monday, 4th of April

JIRA, Confluence, OpsGenie, and other Atlassian sites stop working at some companies.

Day 2 - Tuesday, 5th of April

Atlassian notices the incident and starts tracking it on their status page. They post several updates this day, confirming they are working on a fix. They close the day by saying “We will provide more detail as we progress through resolution”.

During this time, Atlassian staff and customers turned their attention to Atlassian’s flagship annual event, Team 22. The event was held in Las Vegas, and many company employees, much of the leadership team, and many Atlassian partners traveled to attend in person. In most years, product announcements at this event would have dominated the whole week for Atlassian news.

All while Atlassian focused on Team 22, customers were getting frustrated. Many of them tried to contact Atlassian, but most heard nothing back. Some customers took their frustration to Twitter. A thread from one impacted customer quickly drew in responses from other affected customers.

Day 3 - Wednesday, 6th April

Atlassian posts the same updates every few hours, without sharing any relevant information. The update reads:

“We are continuing work in the verification stage on a subset of instances. Once reenabled, support will update accounts via opened incident tickets. Restoration of customer sites remains our first priority and we are coordinating with teams globally to ensure that work continues 24/7 until all instances are restored.”

Customers get no direct communication. Some take to social media to complain.

The post “The majority of Atlassian cloud services have been down for a subset of users for over 24 hours” is trending on the sysadmin subreddit. A Reddit user comments:

“Big Seattle tech company here. Won't say who I work for but I guarantee you've heard of us.

Our Atlassian products have been down since 0200 PST on the fifth - in other words, for about 29 hours now.

I've never seen a product outage last this long. The latest update says it may take several days to restore our stuff.“

Day 4 - Thursday, 7th April

The Atlassian Twitter account acknowledges the issue and offers some light details. These tweets would be the last communication from this official account before it goes silent for 5 days straight.

The Atlassian status page posts the exact same update every few hours:

“We continue to work on partial restoration to a cohort of customers. The plan to take a controlled and hands-on approach as we gather feedback from customers to ensure the integrity of this first round of restorations remains the same from our last update.”

Days 5-7 - Friday 8th April - Sunday 10th April

No real updates. Atlassian posts the same message to their status page again and again and again…

“The team is continuing the restoration process through the weekend and working toward recovery. We are continuously improving the process based on customer feedback and applying those learnings as we bring more customers online.”

On Sunday, 10th April I post about the outage on Twitter. Unhappy and impacted Atlassian customers start messaging me almost immediately with complaints.

The news on Atlassian’s outage also trends on Hacker News and on Reddit over the weekend. On Reddit, the highest-voted comment is questioning whether people will keep using Atlassian if forced to move to the cloud:

“Well. This is a big red flag in the decision making process of continuing to use Atlassians products, after all they basically force you to move to their cloud within the next two years.”

Day 8 - Monday, 11th April

No real updates from Atlassian beyond copy-pasting the same message.

News of the outage is trending on Hacker News. The highest-voted comment is someone claiming to be an ex-Atlassian employee and commenting that engineering practices internally used to be subpar:

“This does not suprise me at all. (...) at Atlassian, their incident process and monitoring is a joke. More than half of the incidents are customer detected.

Most of engineering practices at Atlassian focus on only the happy path, almost no one considers what can go wrong. Every system is so interconnected, and there are more SPOF than the employees.“

Day 9 - Tuesday, 12th April

Atlassian sends mass communication to customers. Several impacted customers receive the same message:

"We were unable to confirm a more firm ETA until now due to the complexity of the rebuild process for your site. While we are beginning to bring some customers back online, we estimate the rebuilding effort to last for up to 2 more weeks."

Atlassian also updates its Status Page, claiming 35% of the customers have been restored.

For the first time since the incident started, Atlassian issues a statement. They claim hundreds of engineers are working on the issue. In their statement they also claim:

“We’re communicating directly with each customer.”

A customer messages me saying this last statement is not true, as their company is receiving only canned responses, and no specifics, despite questions. I respond to the company, highlighting customers’ report that they have no direct communications, despite being paying customers:

The response is the first time an Atlassian executive acknowledges the issues. Replying to me, Atlassian CTO Sri Viswanath shares a statement the company publishes at that time, which starts with the apology impacted customers have been waiting for:

“Let me start by saying that this incident and our response time are not up to our standard, and I apologize on behalf of Atlassian.”

Head of Engineering Stephen Deasy publishes a Q&A on the active incident in the Atlassian Community.

The cause of the outage

For the past week, everyone has been guessing about the cause of the outage. The most common suspicion, coming from several sources like The Stack, was that the outage was tied to the retirement of the legacy Insight plugin. A script was supposed to delete only the customer data belonging to this plugin, but it accidentally deleted all customer data for anyone using the plugin. Up to Day 9, Atlassian would neither confirm nor deny these speculations.

On Day 9, Atlassian confirmed that this was, indeed, the main cause in their official update. From this report:

“The script we used provided both the "mark for deletion" capability used in normal day-to-day operations (where recoverability is desirable), and the "permanently delete" capability that is required to permanently remove data when required for compliance reasons. The script was executed with the wrong execution mode and the wrong list of IDs. The result was that sites for approximately 400 customers were improperly deleted.”

So why is restoring from backup taking weeks? On their “How Atlassian Does Resilience” page, Atlassian confirms they can restore deleted data in a matter of hours:

“Atlassian tests backups for restoration on a quarterly basis, with any issues identified from these tests raised as Jira tickets to ensure that any issues are tracked until remedied.”

There is a problem, though:

Atlassian can, indeed, restore all data to a checkpoint in a matter of hours.

However, if they did this, while the impacted ~400 companies would get back all their data, everyone else would lose all data committed since that point.

So now each customer’s data needs to be selectively restored. Atlassian has no tools to do this in bulk.

They also confirm this is the root of the problem in the update:

“What we have not (yet) automated is restoring a large subset of customers into our existing (and currently in use) environment without affecting any of our other customers.”
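The difference between rolling everything back to a checkpoint and restoring only the deleted tenants can be sketched in a few lines. This is a toy in-memory model, not Atlassian's tooling: the dictionaries, tenant names, and both function names are hypothetical and exist only to illustrate the trade-off described above.

```python
from copy import deepcopy


def full_restore(live, snapshot):
    """Roll the whole environment back to the checkpoint (hypothetical helper)."""
    return deepcopy(snapshot)


def selective_restore(live, snapshot, tenant_ids):
    """Restore only the listed tenants and leave all other live data untouched."""
    restored = deepcopy(live)
    for tenant_id in tenant_ids:
        if tenant_id not in snapshot:
            raise KeyError(f"no backup found for tenant {tenant_id!r}")
        restored[tenant_id] = deepcopy(snapshot[tenant_id])
    return restored


# Checkpoint taken before the deletion (illustrative data only).
snapshot = {"acme": ["ticket-1"], "globex": ["ticket-7"]}
# Live state afterwards: "acme" was deleted, "globex" kept writing new data.
live = {"globex": ["ticket-7", "ticket-8"]}

after_full = full_restore(live, snapshot)
after_selective = selective_restore(live, snapshot, ["acme"])
```

In this model the full restore brings "acme" back but silently drops "ticket-8" for "globex", while the selective restore brings "acme" back and keeps everything "globex" wrote after the checkpoint.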

For the first several days of the outage, they restored customer data with manual steps. They are now automating this process. However, even with the automation, restoration is slow and can only be done in small batches:

“Currently, we are restoring customers in batches of up to 60 tenants at a time. End-to-end, it takes between 4 and 5 elapsed days to hand a site back to a customer. Our teams have now developed the capability to run multiple batches in parallel, which has helped to reduce our overall restore time.”
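To get a feel for why parallel batches matter, here is a rough back-of-the-envelope sketch. It assumes roughly 400 tenants, batches of 60, and the upper figure of 5 days per batch from the quote. The number of parallel batches is not stated by Atlassian, so the values tried below are purely hypothetical, and the model ignores any overlap or pipelining between batches.

```python
import math


def estimate_restore_days(tenants, batch_size, days_per_batch, parallel_batches):
    """Return (number of batches, total elapsed days) under a simple wave model."""
    batches = math.ceil(tenants / batch_size)
    # Batches that run at the same time form one "wave"; waves run back to back.
    waves = math.ceil(batches / parallel_batches)
    return batches, waves * days_per_batch


# parallel_batches values are assumptions for illustration, not reported figures.
for parallel in (1, 2, 4):
    batches, days = estimate_restore_days(400, 60, 5, parallel)
    print(f"{parallel} parallel: {batches} batches, about {days} days")
```

Under these assumptions, running one batch at a time would take about 35 days, while four parallel batches would bring that down to about 10 days, which is in the same range as the "up to 2 more weeks" estimate customers received.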

What Atlassian customers are saying

Customers have zero access to their Atlassian products and data. The experience for them looks like this: whenever they try to access JIRA, Confluence or OpsGenie, they see this page:

Atlassian seems to be unable to grant even partial access to the data customers have or give any snapshots. I talked with customers who explicitly requested snapshots of key documents they urgently need: Atlassian was not able to provide even this ahead of a full restoration. A reminder to never store anything mission-critical in Confluence, JIRA, or any other SaaS without a backup that is independent of the SaaS vendor.

Customers could not even report the problem to Atlassian early on because to report, you need access to JIRA. In Days 1-4, customers struggled to get in contact with Atlassian.

This is because to report an issue to them, you need to create a JIRA ticket. To create a JIRA ticket, you need to enter your customer domain. When customers entered their domain, it did not exist, as it had been deleted, so the Atlassian system rejected the ticket as not coming from a customer. Customers had to email or call Atlassian and have Atlassian open a separate ticket for them, through which they could communicate. That is, if they heard anything back at all, which was the next major complaint.

The biggest complaint from all customers has been the poor communication from Atlassian. These companies lost all access to key systems, were paying customers, and yet, they could not talk to a human. Up to Day 7, many of them got no communication on their JIRA ticket, despite asking questions. Even on Day 9, many still only got the bulk emails about the 2 weeks to restore that every impacted customer got sent.

Customers shared:

“We were not impressed with their comms either, they definitely botched it.” - engineering manager at a 2,000 person company which was impacted.

“Atlassian communication was poor. Atlassian was giving the same lame excuses to our internal support team as what was circulated online.” - software engineer at a 1,000 person company which was impacted.

The impact of the outage has been very large for those relying on OpsGenie. OpsGenie is the “PagerDuty for Atlassian” incident management system. Every company impacted by this outage got locked out of this tool.

While JIRA and Confluence not working were things many companies were able to work around, OpsGenie is a critical piece of infrastructure for all customers. Three out of three customers I talked to have onboarded to competitor PagerDuty, so they can keep their systems running securely.

The impact across customers has been large, as a whole. Many companies did not have backups of critical documents on Confluence. None of those I talked with had JIRA backups. Several companies use the Service Management tool for their helpdesk: meaning their IT helpdesk is out of service during this outage.

Company planning has been delayed, and projects had to be re-planned or postponed. The impact of this outage goes well beyond just engineering, as many companies used JIRA and Confluence to collaborate with other business functions.

Most companies fell back to tools provided by either Google or Microsoft to work around Atlassian products. Google Workspace customers started to use Sheets and Docs to coordinate work. Microsoft customers fell back on SharePoint and O365.

I asked customers if they would offboard Atlassian as a result of the outage. Most of them said they won’t leave the Atlassian stack, as long as they don’t lose data. This is because moving is complex and they don’t see how a move would mitigate the risk of a cloud provider going down. However, all customers said they will invest in having a backup plan in case a SaaS they rely on goes down.

The customers who confirmed they are moving are those onboarding to PagerDuty. They see PagerDuty as a more reliable offering and were all alarmed that Atlassian did not prioritize restoring OpsGenie ahead of other services.

What compensation can customers expect? Customers have not received details on compensation for the outage. Atlassian compensates using credits, which are discounts in pricing. These are issued based on what uptime their service has over the past month. Most customers impacted in the outage have a 73% uptime for the past 30 days, as we speak, and this is going down with every passing day. Atlassian’s credit compensation works like this:

99-99.9%: 10% discount

95-99%: 25% discount

Below 95%: 50% discount

As it stands, customers are eligible for a 50% discount on their next monthly bill. Call me surprised if Atlassian does not offer something far more generous, given these customers are at an unprecedented zero 9’s availability for the rolling 30 days’ window.
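The arithmetic behind the uptime and credit figures can be sketched as follows. The tier boundaries come from the list above, but how Atlassian treats values that sit exactly on a boundary is not stated, so the inclusive lower bounds here are an assumption, as are both function names.

```python
def uptime_percent(days_down, window_days=30):
    """Uptime over a rolling window, given the number of full days of downtime."""
    return 100.0 * (window_days - days_down) / window_days


def credit_discount_percent(uptime):
    """Map an uptime percentage to a discount tier (boundary handling is assumed)."""
    if uptime >= 99.9:
        return 0   # above the listed tiers: no credit
    if uptime >= 99.0:
        return 10
    if uptime >= 95.0:
        return 25
    return 50


eight_days_down = uptime_percent(8)
discount = credit_discount_percent(eight_days_down)
print(f"uptime {eight_days_down:.1f}%, discount {discount}%")
```

Eight full days of downtime in a 30-day window gives about 73.3% uptime, which lands in the lowest tier with a 50% discount. Every additional day of downtime lowers the uptime by a further 3.3 percentage points but cannot change the tier.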

The impact of the outage on Atlassian’s business

Atlassian claims the roughly 400 impacted companies were “only” 0.18% of its customer base. They did not share the number of seats impacted. I estimate seats are between 50,000 and 800,000, based on the fact that I have not talked with any impacted customers with fewer than 150 seats. The majority of impacted customers I talked with had 1,000-2,000 seats, and one of them had 4,000 seats.

The biggest impact of this outage is not lost revenue: it is reputational damage, which might hurt longer-term Cloud sales efforts. The scary thing about how the outage played out is that it could have been any company that lost all Atlassian Cloud access for weeks. I am sure the company will take steps to prevent this from happening again. However, trust is easy to lose and hard to gain.

Unfortunately for the company, Atlassian has a history of repeat incidents of the worst kind. In 2015, their HipChat product suffered a security breach, which drove away customers like Coinbase at the time. Only two years later, in 2017, HipChat suffered yet another security breach. This repeat offense was the reason Uber suspended their HipChat usage, effective immediately.

The irony of the outage is how Atlassian was pushing customers to its Cloud offering, highlighting reliability as a selling point. They discontinued selling Server licenses and will stop support for the product in February 2024.

This outage, combined with the history of repeat offenses, will raise questions for enterprises currently on the Server product about how much they can trust Atlassian on the Cloud. If forced to move, will they choose another vendor instead?

Another scenario is that Atlassian might be forced to backtrack on ending Server license sales and extend support for the product by another few years. This approach would give customers time to gain reassurance that Atlassian can operate its Cloud product without massive downtime like in this outage. I personally see this option as one that might have to be on the table, if Atlassian does not want to lose large customers that hesitate to onboard following this incident.

Atlassian’s competitors are sure to win from this fumble, even if they are not immune to similar problems. However, unlike Atlassian, they don’t yet have an incident where all communications were shut down for close to a week, as customers scrambled to get hold of their vendor but were met with silence.

One company I would personally recommend is Linear. 90% of all tech startups I have invested in use them and are extremely happy with how well the tool works: the speed, the workflow, and how delightful the tool is. I have not been paid to write this and have no financial affiliation with Linear - however, I know one of the cofounders from Uber.

Linear already offered to help customers waiting on Atlassian to restore and not charge them for the first year:

Google and Microsoft could both benefit in the medium term from this outage. Customers overwhelmingly fell back to using Google Docs/Sheets and Microsoft SharePoint/O365 to work around the lack of Atlassian tools. None of these tools are sophisticated enough to match JIRA or Confluence. However, should Google or Microsoft offer a similar tool - or acquire a company - it would have a very strong case to convince businesses to move over to their platform.

Google and Microsoft have no similarly poorly handled outages in their past, which is a selling point they might be able to use with impacted customers. Of course, how feasible such an approach would be remains an open question.

What is sure is that all competing vendors will reference this Atlassian outage - and showcase how they would respond in a similar situation - in their sales pitches for years to come.

Learnings from this outage

There are many learnings from this outage that any engineering team can take away. Don’t wait for an outage like this to hit your teams: prepare ahead instead.

Incident Handling:

Have a runbook for disaster recovery and black swan events. Expect the unexpected, and plan for how you will respond, assess, and communicate.

Follow your own disaster recovery runbook. Atlassian published their disaster recovery runbook for Confluence, and yet did not follow it. Their runbook states that every runbook has communication and escalation guidelines. Either the company did not have communication guidelines, or they did not follow these. A bad look, either way.

Communicate directly and transparently. Atlassian did none of this until Day 9. This lack of communication eroded a huge amount of trust, not just among impacted customers but among anyone aware of the outage. Atlassian might have assumed it was safe to not say anything, but this is the worst choice to make. Take note of how transparently GitLab and Cloudflare communicate during outages - both of them publicly traded companies, just like Atlassian.

Speak your customer’s language. Atlassian status updates were vague and lacked all technical details. However, their customers were not business people. They were Heads of IT and CTOs who made the choice to buy Atlassian products… and could now not answer what the problem with the system was. By dumbing down messaging, Atlassian put their biggest sponsors - the technical people! - in an impossible situation to defend the company. If the company sees customer churn, I would largely attribute it to this mistake.

Have an executive take public ownership of the outage. It took until Day 9 for a C-level to acknowledge this outage. Again, at companies which developers trust, this happens almost immediately. Executives not issuing a statement signals the issue is too small for them to care about it. I wrote about how at Amazon, executives joining outage calls is common.

Reach out directly to customers, and talk to them. Customers did not feel heard during this outage and had no human talk to them. They were left with automated messages. During a black swan event, mobilize people to talk directly to customers - you can do this without impacting the mitigation effort.

Avoid status updates that say nothing. The majority of status updates on the incident page were copy-pasting the same update. Atlassian clearly did this to provide updates every few hours… but these were not updates. They added to the feeling that the company did not have the outage under control.

Avoid radio silence. Up to Day 9, Atlassian stayed on radio silence. Avoid this approach at all costs.

Avoiding the incident:

Have a rollback plan for all migrations and deprecations. In the Migrations Done Well issue, we covered practices for migrations. Use the same principles for deprecations.

Do dry-runs of migrations and deprecations. As per the issue Migrations Done Well.

Do not delete data from production. Instead, mark data to be deleted, or use tenancies to avoid data loss.
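The last two points, dry runs and marking data for deletion instead of removing it, can be combined in one small sketch. This is a minimal illustration under stated assumptions, not how Atlassian's script works: `Site`, `SiteStore`, and the `expected_count` guard are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Site:
    site_id: str
    deleted_at: Optional[datetime] = None  # None means the site is live


@dataclass
class SiteStore:
    sites: dict = field(default_factory=dict)

    def mark_for_deletion(self, site_ids, expected_count, dry_run=True):
        """Soft-delete sites. Defaults to a dry run that changes nothing."""
        unknown = [s for s in site_ids if s not in self.sites]
        if unknown:
            raise ValueError(f"unknown site IDs: {unknown}")
        # Guard against running with the wrong list of IDs.
        if len(site_ids) != expected_count:
            raise ValueError(
                f"expected {expected_count} sites, got {len(site_ids)}"
            )
        if dry_run:
            return list(site_ids)  # report what would be marked, touch nothing
        now = datetime.now(timezone.utc)
        for site_id in site_ids:
            self.sites[site_id].deleted_at = now
        return list(site_ids)

    def restore(self, site_id):
        """Undo a soft delete; possible because the data was never removed."""
        self.sites[site_id].deleted_at = None


store = SiteStore({"a": Site("a"), "b": Site("b")})
preview = store.mark_for_deletion(["a"], expected_count=1)  # dry run by default
still_live_after_preview = store.sites["a"].deleted_at is None
store.mark_for_deletion(["a"], expected_count=1, dry_run=False)
marked = store.sites["a"].deleted_at is not None
store.restore("a")
```

Making the dry run the default and requiring the caller to state how many sites they expect to touch are design choices meant to make the destructive path the one that takes extra effort.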

My take

Atlassian is a tech company, built by engineers, building products for tech professionals. It wrote one of the most referenced books on Incident Handling. And yet, the company did not follow the guidelines it wrote about.

Now, while some people might feel outraged about this fact, just this week I wrote about how Big Tech can be messy from the inside and we should not hold a company to impossibly high expectations:

“You join a well-known tech company. You’ve heard only great things about the engineering culture, and after spending lots of time reading through their engineering blog, you are certain this is a place where the engineering bar - its standards - is high, and everyone seems to work on high-impact projects. Yet when you join, reality totally fails to live up to your expectations.”

What I found disappointing in this handling was the radio silence for days, coupled with how zero Atlassian executives took ownership of the incident in public. The company has two CEOs and a CTO, and none of them communicated anything externally until day 9 of the outage.

One of Atlassian’s company values is Don’t #@!% the customer. Why was this ignored? From the outside, it seemed that Atlassian leadership ignored this value for the first 9 days of the outage, opting for passive behaviour and close to zero communications to customers who were #@!%’d.

Why should a customer put its trust in Atlassian when its leadership doesn’t acknowledge when something goes terribly wrong for its customers? It’s not like it’s a small number of customers, even: it’s hundreds of companies and potentially hundreds of thousands of its users at those companies.

Outages happened, happen, and will happen. The root cause is less important in this case.

What is important is how companies respond when things go wrong, and how quickly they do this. And speed is where the company failed first and foremost.

Atlassian did not respond to this incident with the nimbleness that a well-run tech company would. The company will have ample time to find out the reasons for this poor response - once all customers regain access in the next few weeks.

Given the publicity and severity of the incident, I personally expect that following the mitigation of this outage, Atlassian will have an “allergic reaction response” and make a swarm of internal changes and investments to significantly improve their incident handling for the future.

Still, in the meantime, every engineering team and executive should ask themselves these questions:

What if we lost all JIRA, Confluence and Atlassian Cloud services for weeks - are we prepared? What about other SaaS providers we use? What happens if those services go down for weeks? What is our Plan B?

What are the learnings we should take away from this incident? What if we did a partial delete? Do we have partial restore runbooks? Do they work? Do we exercise them?

What is one piece of improvement that I will implement in my team, going forward?

What criteria do I use to choose my vendors: functionality, promised SLAs, or actual SLAs? How do large outages shape my vendor selection process?

If you enjoyed this article, you might enjoy my weekly newsletter. It’s the #1 technology newsletter on Substack. Subscribe here 👇

Pragmatic Engineer Jobs

Check out jobs with great engineering culture for senior software engineers and engineering managers. These jobs score at least 10/12 on The Pragmatic Engineer Test. See all positions here or post your own.

Senior/Lead Engineer at TriumphPay. $150-250K + equity. Remote (US).

Senior Full Stack Engineer - Javascript at Clevertech. $60-160K. Remote (Global).

Senior Full-Stack Developer at Commit. $115-140K. Remote (Canada).

Staff Software Engineer at Steadily. $250-300K + equity. Remote (US, Canada) / Austin (TX).

Software Engineer at Anrok. Remote (US).

Senior Ruby on Rails Engineer at Aha!. Remote (US).

Senior Software Engineer at Clarisights. €80-140K + equity. Remote (EU).

Full Stack Engineer at Assemble. $145-205K + equity. Remote (US).

Senior Developer at OpsLevel. $122-166K + equity. Remote (US, Canada).

Senior Mobile Engineer at Bitrise. $100-240K + equity. Remote (US).

Senior Frontend Engineer at Hurtigruten. £70-95K. London, Remote (EU).

Mobile Engineer at Treecard. $120-180K + equity. Remote (US).

Engineering Manager at Clipboard Health. Remote (Global).

Senior Backend Engineer (PHP) at Insider. Remote (Global).

Senior Software Engineer at Intro. $150-225K + equity. Los Angeles, California.

Senior Software Engineer at OpenTable. Berlin.

Software Engineer at Gem. San Francisco.

Other openings:

Engineering Manager at Basecamp. $207K. Remote (Global).

Senior Full Stack Engineer at Good Dog. $150-170K. Remote (US), NYC.

Senior Full-Stack Developer at Commit. $80-175K. Remote (Canada).

Software Engineer at TrueWealth. €100-130K. Zürich.

Senior iOS Engineer at Castor. €60-100K + equity. Remote (EU) / Amsterdam.

Senior Backend Engineer at Akeero. €75-85K + equity. Remote (EU).
