AMD Ryzen 5 5600 vs. Intel Core i5-12400F | TechSpot

source link: https://www.techspot.com/review/2448-amd-ryzen-5600-vs-intel-core-i5-12400f/


With the launch of the Ryzen 5 5600, AMD's finally brought down the price of Zen 3 to just $200. In dropping the X, AMD also knocked 200 MHz off the boost clock. As things stand today, the 5600X is priced at $230 and the newer non-X version comes in at a $200 MSRP, a 13% discount.

On the Intel front, the Core i5-12400F currently retails for $180, so it's cheaper than the 5600, but we'll take a look at motherboard pricing towards the end of this GPU scaling benchmark test.

Now, because the 5600 is an unlocked CPU, it should be able to achieve 5600X-like performance via overclocking, and we're only talking about a 5% difference in clock frequency out of the box anyway. That said, we won't be exploring overclocking in this review.

The idea here is to compare the Ryzen 5 5600 and Core i5-12400F across a range of games at 1080p and 1440p using four tiers of GPU. To keep things simple, we've gone with the GeForce RTX 3060, RTX 3070, RTX 3080 12GB and RTX 3090 Ti.

Both CPUs have been tested in their stock configuration, with two exceptions: XMP has been loaded and Resizable BAR has been enabled on both platforms. Furthermore, the same 32GB of DDR4-3200 CL14 dual rank memory has been used for both platforms, and the exact same graphics cards were used. Let's now get into the results...

Benchmarks

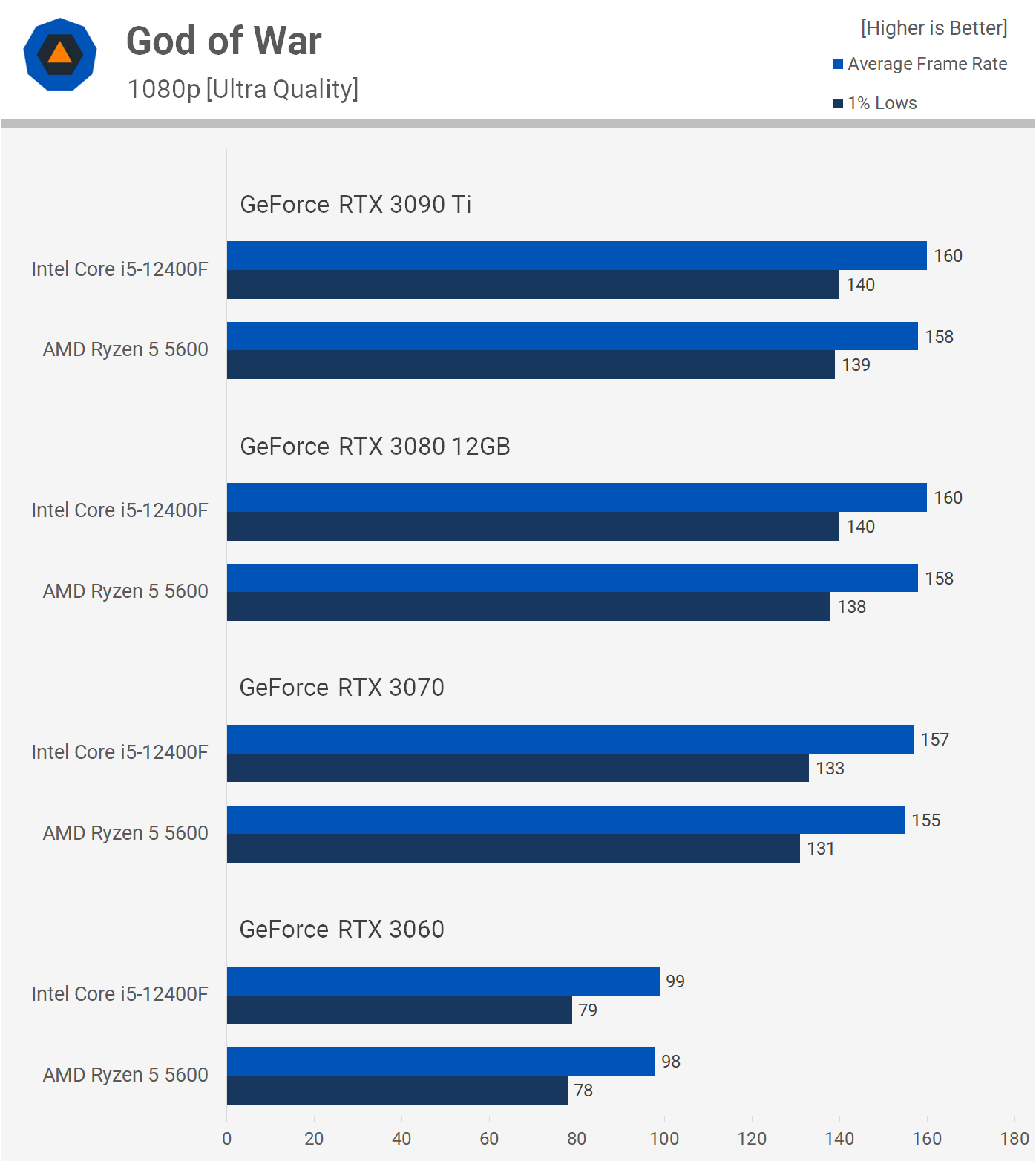

Starting with God of War at 1080p, we find that both CPUs enable the same level of performance with all four GeForce GPUs. The game has a 160 fps frame cap and this was reached using the RTX 3070 and up, while the RTX 3060 was good for around 100 fps, and this was with the highest quality settings enabled, so this is a well optimized title that looks amazing.

Jumping up to 1440p sees God of War become heavily GPU bound, so while we can't quite hit the 160 fps cap with the RTX 3080 12GB, there's still no real difference in performance between these two budget CPUs.

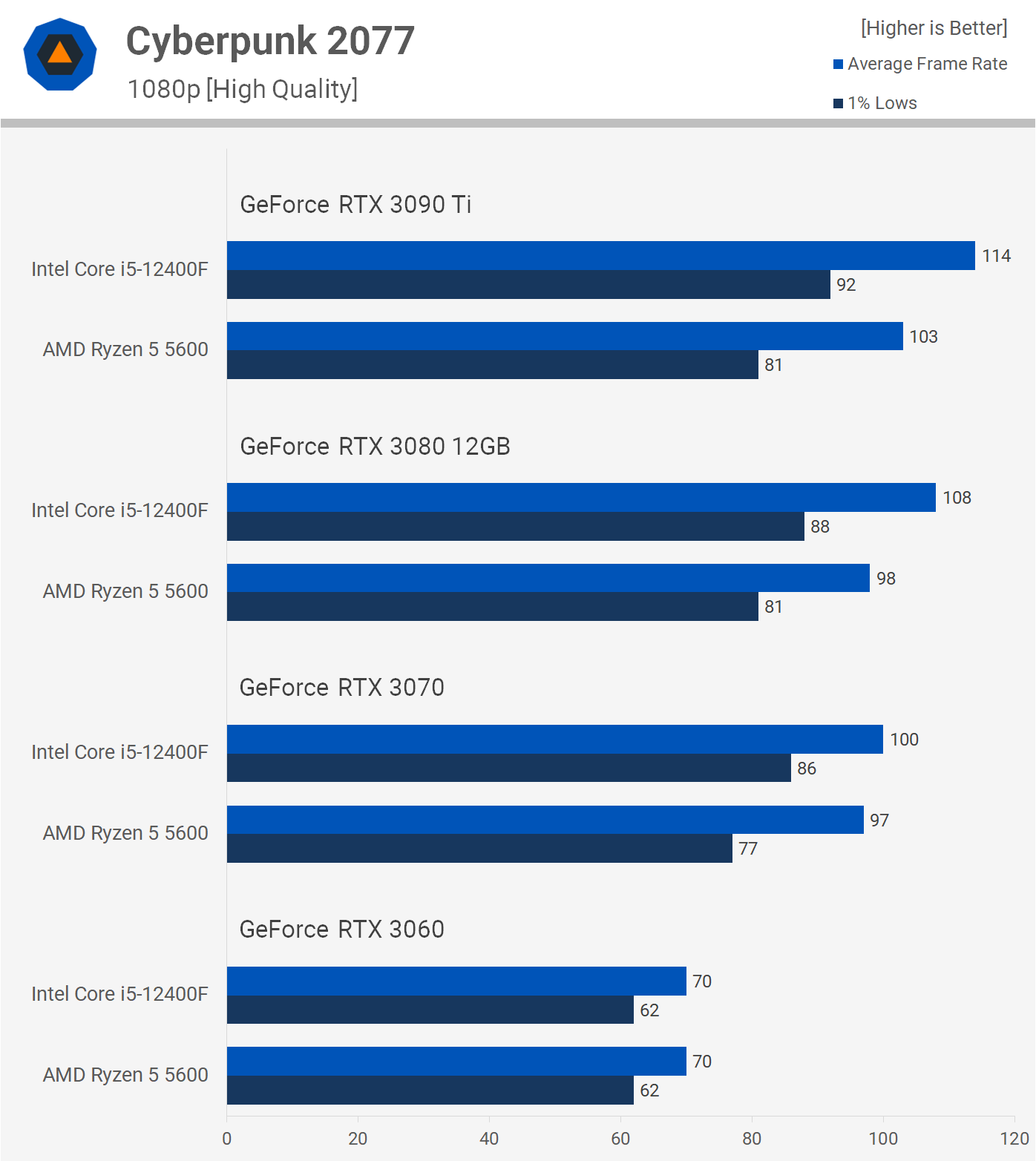

Next we have Cyberpunk 2077. The Core i5 does enjoy a slight performance advantage in this title, beating the R5 5600 by up to an 11% margin at 1080p, seen when using the RTX 3090 Ti and RTX 3080 12GB.

The margin for the average frame rate was reduced to just 3% with the 3070, but the Intel CPU was 12% faster when looking at the 1% lows. Then as we drop down to the 3060 the game becomes heavily GPU limited and now both CPUs are restricted to the same level of performance.

Increasing the resolution increases the GPU bottleneck and now we're looking at comparable performance right up to the RTX 3080 with identical performance seen with the 3070 and 3060. The 12400F did stretch its legs a little with the RTX 3090 Ti, offering up to 8% greater performance as it pushed up to 105 fps.

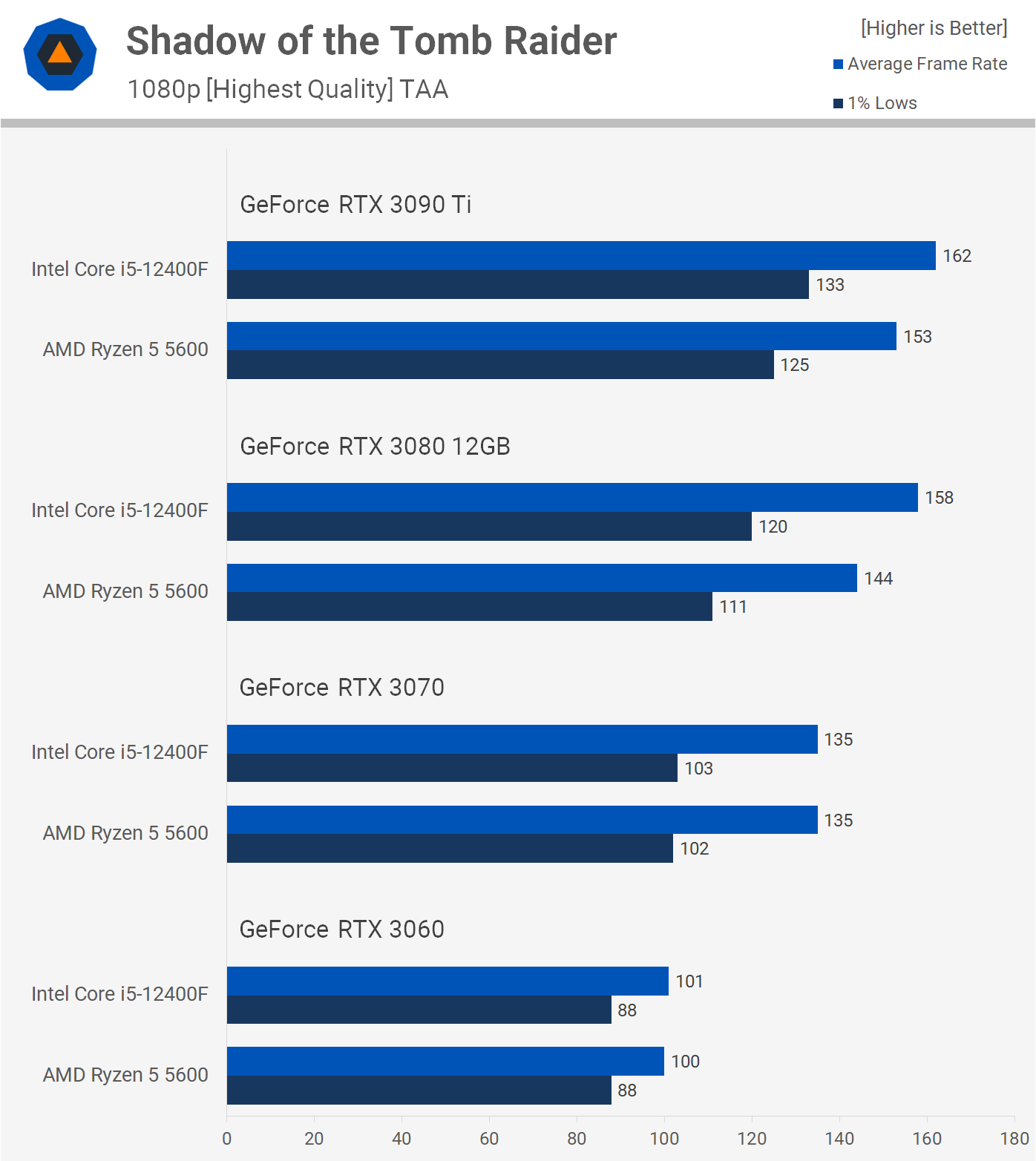

Shadow of the Tomb Raider shows up to a 10% performance advantage for the 12400F, seen with the RTX 3080. Oddly, the margin is reduced to 6% with the RTX 3090 Ti, but I do wonder if we're running into an Ampere architectural bottleneck at this low resolution. Either way, the Intel CPU was faster with these high-end GPUs, whereas we saw the exact same performance using the RTX 3070 and RTX 3060.

Of course, increasing the resolution does increase the GPU load and therefore shift performance more in the GPU bound direction, so frame rates are identical using the RTX 3080, 3070 and 3060. The 12400F was up to 7% faster with the RTX 3090 Ti, seen when looking at the 1% lows, but you can expect no difference between these two CPUs at 4K, even with the 3090 Ti.

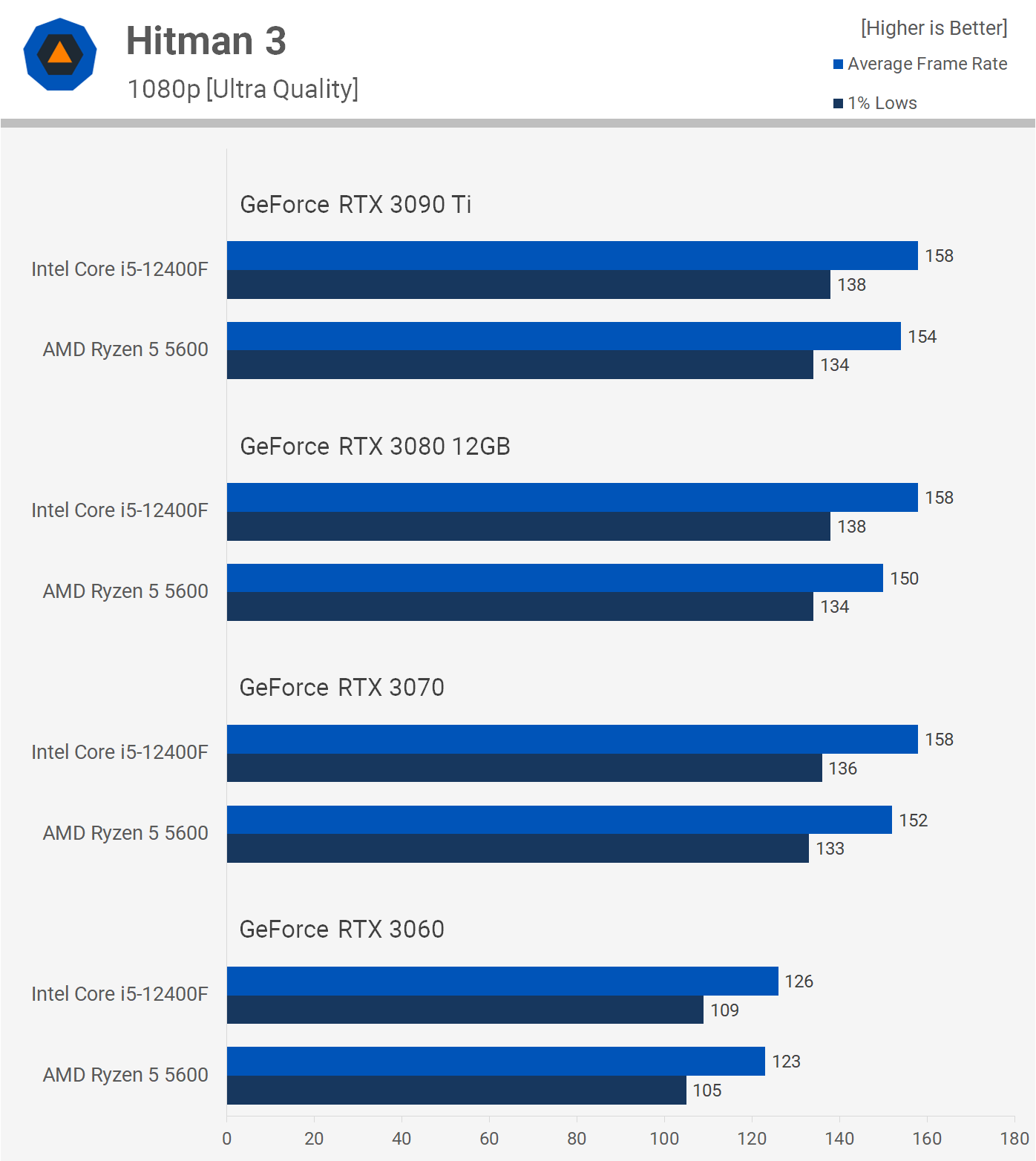

Hitman 3 runs into a hard CPU bottleneck at 1080p with even the RTX 3070. The Zen 3 architecture is limited to around 154 fps and Alder Lake 158 fps. Interestingly, even with the RTX 3060 we find that Alder Lake is a few frames faster and this was consistently seen in our Hitman 3 testing.

Moving to 1440p does increase the GPU bottleneck and now we're seeing virtually no difference between these two CPUs, even with the RTX 3090 Ti.
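For readers who want to turn fps figures like these into the percentage margins quoted throughout this review, here is a minimal Python sketch. It uses only the approximate 1080p Hitman 3 ceilings mentioned above (154 fps and 158 fps); the function name `percent_faster` is our own, chosen for illustration.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster A is than B, as a percentage of B's frame rate."""
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Approximate CPU-limited ceilings quoted in the text for Hitman 3 at 1080p.
alder_lake_fps = 158.0
zen3_fps = 154.0

margin = percent_faster(alder_lake_fps, zen3_fps)
print(f"12400F lead in Hitman 3 at 1080p: {margin:.1f}%")
```

A gap of four frames at this frame rate works out to well under 5%, which is why we describe Alder Lake as only "a few frames faster" here.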

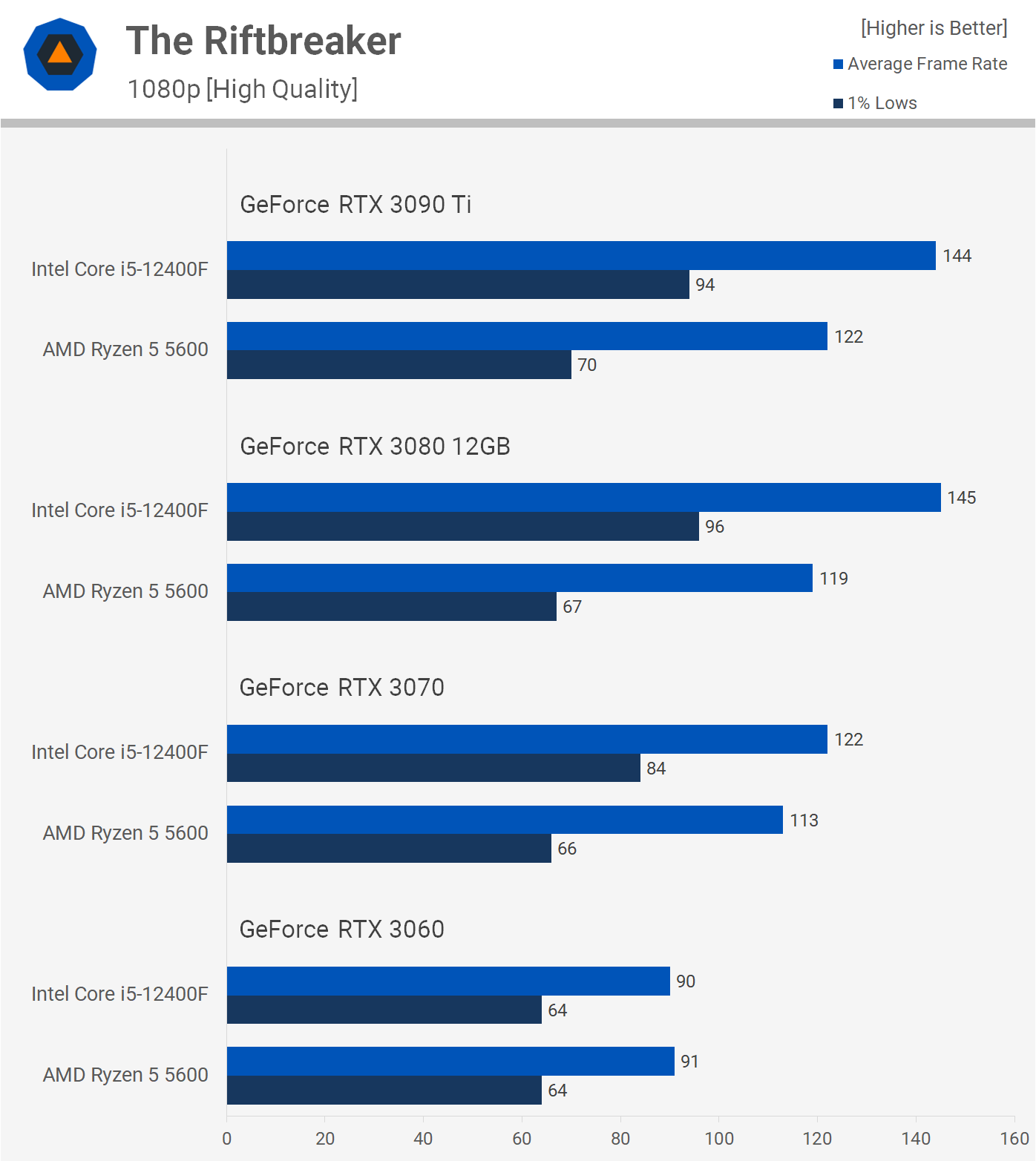

The Riftbreaker has proven to be a horrible title for AMD. We're seeing a pretty strong CPU bottleneck with the Ryzen 5 5600 here, even with the RTX 3070, where the 12400F was up to 27% faster, seen when looking at the 1% low data. That margin grew with the 3080 and 3090 Ti and the Intel CPU was up to 34% faster which is a massive performance margin.

Even at 1440p, the 12400F enjoyed a commanding lead, though this was only seen with the RTX 3080 and 3090 Ti. Still, it was up to 33% faster, which is a significant margin.

Moving on to Horizon Zero Dawn, we find when using the RTX 3060 and 3070 that the game is heavily GPU limited, delivering the same performance using either the Core i5 or Ryzen 5 processor. Then with the faster 3080 and 3090 Ti, the 5600 is 9-10% faster than the Core i5 competition, so a reasonable performance advantage for AMD.

That margin shrank to 5% at 1440p with the RTX 3090 Ti, while we see virtually no difference with the 3080, 3070 and 3060.

Next we have F1 2021, where the 12400F provided stronger performance with the higher end GPUs. For example, the RTX 3090 Ti saw a 16% improvement to 1% lows with the Core i5 part and a 7% boost to the average frame rate.

That margin was reduced to 10% for the 1% lows with the RTX 3080 and just 4% for the average frame rate. Then we're looking at basically identical performance with the RTX 3070 and 3060.

Jumping up to 1440p reduced the margins and it's only the RTX 3090 Ti data where we see a reasonable disparity in performance. The average frame rate was similar, but the 12400F was 14% faster when looking at the 1% lows.

In Rainbow Six Extraction both CPUs are competitive, delivering basically the same gaming experience. By the time we reached the RTX 3080 the game was entirely CPU bound, hitting 300 fps. As you'd expect the 1440p data is very similar, though this time we're entirely GPU bound.

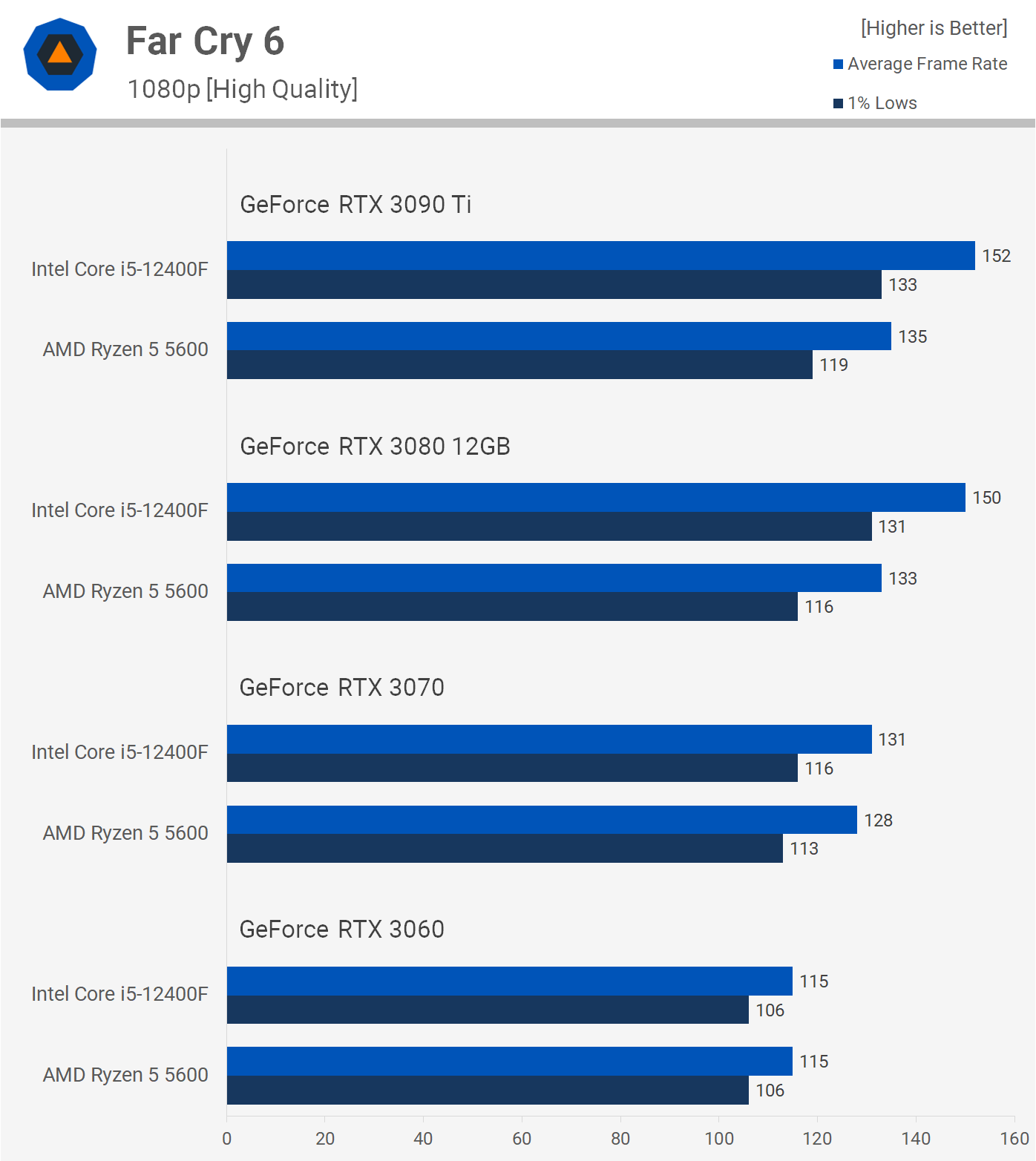

Far Cry 6 heavily favors the Intel i5-12400F, which delivered up to 13-14% more performance with the RTX 3080 and 3090 Ti. When using the slower GPUs there's little to no performance difference.

Increasing the resolution to 1440p reduces the margin with the RTX 3080 and 3090 Ti to 7%-8%, and of course, we're looking at no change in performance using the RTX 3070 and 3060.

9 Game Average

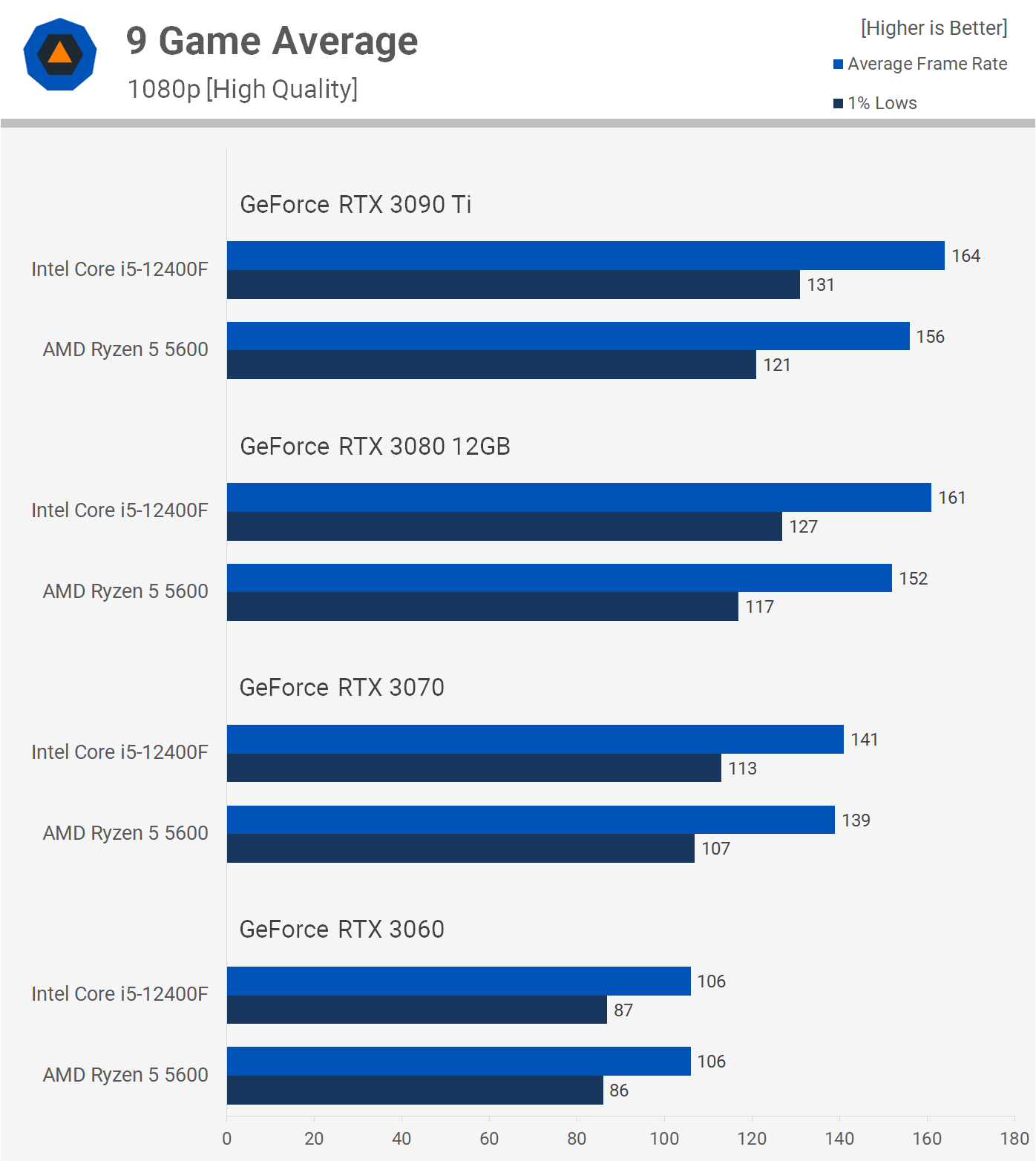

Here's a look at average frame rates, calculated using the geomean. We'll start with the 1080p results, where with the RTX 3090 Ti the 12400F was 8% faster when comparing 1% lows and 5% faster for the average frame rate. Not huge margins, but the Alder Lake CPU was faster overall. We're also looking at similar margins with the RTX 3080, so for high frame rate gaming the 12400F does look to be the better option.

Dropping down to the RTX 3070 saw the margins reduced, especially for the average frame rate and for the 1% lows the 12400F was 6% faster. Certainly not a big difference, but for those using lower quality settings in an effort to maximize frame rates, maybe for competitive gaming, the 12400F will be the way to go.

With the RTX 3060 performance is identical. That said, if you're going to game with an RTX 3060 or RX 6600 XT at 1080p using medium quality settings, a gap will open up in Intel's favor, though only a very small one.

Moving to 1440p shows there isn't a big difference between these two CPUs when dipping below 140 fps, and at around 100 fps there is no difference. The 12400F doesn't jump ahead by a notable margin until you start pushing up over 140 fps, and even then it was only up to 9% faster with the RTX 3090 Ti.
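The averages in this section are geometric means rather than simple arithmetic averages. As a rough sketch of how that calculation works, the Python below computes a geomean over per-game frame rates. The fps lists are hypothetical placeholders, not our measured data, and the names `geomean`, `fps_cpu_a` and `fps_cpu_b` are assumptions made for the example.

```python
import math


def geomean(values):
    """Geometric mean of positive numbers, computed in log space."""
    values = list(values)
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))


# HYPOTHETICAL per-game average fps, for illustration only.
# These are NOT the measurements from this review.
fps_cpu_a = [160.0, 105.0, 300.0]
fps_cpu_b = [160.0, 96.0, 300.0]

geo_a = geomean(fps_cpu_a)
geo_b = geomean(fps_cpu_b)
lead_percent = (geo_a / geo_b - 1.0) * 100.0

# The arithmetic mean is shown only for comparison with the geomean.
arith_a = sum(fps_cpu_a) / len(fps_cpu_a)

print(f"CPU A geomean: {geo_a:.1f} fps (arithmetic mean: {arith_a:.1f} fps)")
print(f"CPU A lead over CPU B by geomean: {lead_percent:.1f}%")
```

The geomean is pulled less toward very high frame rate titles than an arithmetic mean would be, so a single 300 fps game doesn't dominate the overall result.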

Versus

That's how the Ryzen 5 5600 and Core i5-12400F compare across 9 games at 1080p and 1440p using four tiers of GeForce GPUs. As you might expect, they're evenly matched for the most part, though the Intel CPU is a tad faster, and it's worth noting that of the 9 games tested, AMD hit the lead in just Horizon Zero Dawn.

The 12400F dominated on games that lean heavily on the primary thread and that doesn't just mean games like Far Cry 6. The Riftbreaker, for example, heavily utilizes modern CPUs, spreading the load across many cores, but like most games, it still heavily relies on single core performance as the primary thread is hit the hardest, and this can give the 12400F a substantial performance advantage.

Typically, a best case result for the Ryzen 5 5600 will be the same or similar performance, like what was seen in Rainbow Six Extraction and God of War. Of course, how much of a performance difference you see will depend on the game and the quality settings used. If you're going to be using the highest quality settings your GPU can handle with a 60-90 fps target, then there's going to be no noticeable difference between these CPUs, and that will likely be the case well into the future.

However, if you're more interested in high refresh rate gaming -- 144+ fps -- then the 12400F will often prove to be the better option, delivering up to 33% stronger performance, seen in The Riftbreaker in the most extreme of cases.

In short, for casual gamers it won't matter which one of these CPUs you go with. For competitive gamers, we suggest the Core i5-12400F... but before we go too deep into recommendations, let's check out pricing.

As noted earlier, the Ryzen 5 5600 goes for $200, while the Core i5-12400F is slightly more affordable at $180. The other factor to consider is the motherboard price.

The best value B660 board in my opinion is the MSI B660M-A Pro, which currently costs $150 for the WiFi version and $140 for the non-WiFi model. The equivalent AMD B550 board would be the B550-A Pro, which also costs $150, though that board doesn't come with a WiFi option. That means that if you're looking at pairing either of these processors with a decent spec board, pricing is about the same at ~$150.

If you want the absolute cheapest motherboard available for the corresponding platforms, on the B660 front we'd buy the MSI B660M-A Pro for $140. For AMD B550, however, you could go as low as the Gigabyte B550M DS3H for $100. It's certainly not a great board, but it works. The MSI B550M Pro-VDH WiFi is also decent at $120. Those small savings mean going Intel or AMD at this price segment will cost you roughly the same.
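To make the platform math explicit, here is a small Python sketch that adds each CPU's price to the board prices quoted above. All dollar figures come from this article and will date quickly; the variable and function names are our own, and RAM, cooler and other costs are assumed to be equal and left out.

```python
# Prices in USD as quoted in this article at the time of writing.
cpu_price = {"Core i5-12400F": 180, "Ryzen 5 5600": 200}

board_price = {
    "Core i5-12400F": {
        "MSI B660M-A Pro": 140,
        "MSI B660M-A Pro WiFi": 150,
    },
    "Ryzen 5 5600": {
        "Gigabyte B550M DS3H": 100,
        "MSI B550M Pro-VDH WiFi": 120,
        "B550-A Pro": 150,
    },
}


def platform_totals(cpu):
    """Return {board name: CPU price + board price} for the given CPU."""
    return {board: cpu_price[cpu] + price
            for board, price in board_price[cpu].items()}


# Cheapest CPU + board combination per platform.
cheapest = {cpu: min(platform_totals(cpu).values()) for cpu in cpu_price}

for cpu in cpu_price:
    ordered = sorted(platform_totals(cpu).items(), key=lambda item: item[1])
    for board, total in ordered:
        print(f"{cpu} + {board}: ${total}")
    print(f"  cheapest {cpu} platform: ${cheapest[cpu]}")
```

With the cheapest boards the AMD combination comes out $20 lower, and with the ~$150 boards the Intel combination comes out $20 lower, which is why we treat platform cost as a wash.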

In that scenario, for those upgrading their platform or building a new PC, the Core i5-12400F with a decent B660 board seems like the way to go. The most ideal path for the Ryzen 5 5600 would be if you already have a solid AM4 board, meaning the 5600 would be a drop-in CPU upgrade.

The advantage of Intel's LGA 1700 platform is that it will support another CPU generation, so if you were to buy the 12400F now, it's conceivable that upgrading to a Raptor Lake Core i7 in the future would be of benefit, provided you got a decent B660 board now. The R5 5600's AM4 platform is limited to the Zen 3 CPUs we already have, plus the upcoming Ryzen 7 5800X3D.
