A Starting Point To Automate Infrastructure

source link: https://dzone.com/articles/a-starting-point-to-automate-infrastructure

Using infrastructure as code to automate your company's frameworks is the surest and quickest way to get the most out of a cloud implementation.

Why automate IT infrastructure? In fact, automation is the best solution not only for IT infrastructure but for many things in software development. Why?

Manual processes are slow, highly vulnerable to human error, not scalable, and hard to create, update, and keep to a standard. I could cite many other reasons to run away from manual processes. They are the opposite of what agile methodologies and DevOps culture propose. For infrastructure specifically, we can affirm that without automation it is impossible to get the best out of the resources of any cloud service. The next lines explain why.

No matter how hard cloud and hosting companies try to improve the usability of their web consoles, it will always be very hard to remember every action performed to create a complex infrastructure and then replicate or change it. Do not wait for a catastrophic day to start doing what is right.

Combining tools, techniques, and practices enables companies to build a mature technology culture that makes them more competitive. Automated infrastructure can provide on-demand cost optimization, security analysis, best practices, functional tests, versioned source code, and whatever else is needed. There are no more excuses for keeping processes slow and susceptible to errors.

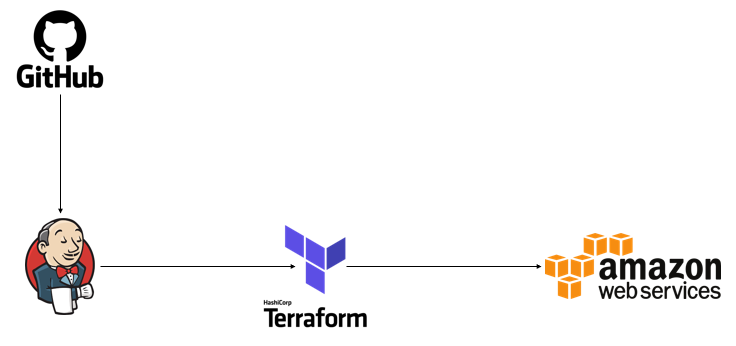

It is no harder to write declarative code than to navigate between many pages and remember every step when you have to do it all again. That is chaos. I prepared some examples of automation using Terraform, Docker, Ansible, and Jenkins to create resources in AWS. It is only an example. I put all the source code on GitHub, and you can use it as inspiration for doing the same with other tools and on other cloud platforms such as GCP and Azure. Instead of Jenkins, it could be a custom application built specifically for this work, with better usability. You can evolve it into much more robust solutions using artificial intelligence, monitoring, and other things.

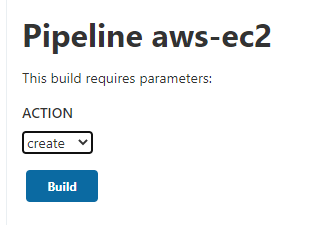

For this demonstration, I started by creating some Terraform scripts to provision resources on AWS: EC2, S3, EKS, and others. I did not want to run them manually every time I need a new resource, so I created jobs in Jenkins. My goal is to press a single button to provision new infrastructure.

Download the example code here.

To run this demo, the requirements are: Docker installed, an S3 bucket for saving the Terraform state, a key pair for connecting to the EC2 instance, and a user with an access key and a secret access key. In this other article, I teach how to create these keys.
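Before starting, it can help to confirm these prerequisites from a shell. The following is a minimal sketch, not part of the original example code: the helper names `have_command` and `missing_vars` are hypothetical, and it assumes the access key pair is exported through the standard AWS environment variables `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`.

```shell
#!/bin/sh
# Hypothetical preflight helpers (not from the article's repository).

# Succeeds if the given program is available on PATH.
have_command() {
    command -v "$1" >/dev/null 2>&1
}

# Prints the name of every listed environment variable that is unset or empty.
missing_vars() {
    for name in "$@"; do
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}

# Example usage:
#   have_command docker || echo "Docker is not installed" >&2
#   missing_vars AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY
```

The S3 bucket and the EC2 key pair are not checked here, since verifying them would require calls to AWS itself.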

Every Terraform script contains this code snippet, which tells Terraform where to save its state.

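    terraform {
      # Backend blocks live inside the top-level terraform block.
      # Store the Terraform state in an S3 bucket.
      backend "s3" {
        bucket = "tutorial-automation-with-jenkins"
        key    = "ec2/ec2"
        region = "us-east-1"
      }
    }

With the scripts ready, I run the following Docker command to create the volume that will persist the Jenkins data.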

    docker volume create jenkins-vol

Now let's run the command to start Jenkins.

    docker container run -d -p 8080:8080 -v jenkins-vol:/var/jenkins_home --name jenkins-lab jenkins/jenkins:lts

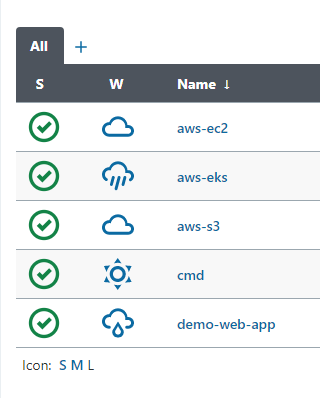
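On first start, Jenkins asks for an initial administrator password, which the official image writes to `/var/jenkins_home/secrets/initialAdminPassword` inside the container. Below is a small sketch for reading it from the `jenkins-lab` container started above; the function name `jenkins_initial_password` is hypothetical and not part of the example code.

```shell
#!/bin/sh
# Hypothetical helper: print the setup password of a running Jenkins container.
# Assumes the container uses the default JENKINS_HOME of /var/jenkins_home.
jenkins_initial_password() {
    container="${1:-jenkins-lab}"
    docker container exec "$container" \
        cat /var/jenkins_home/secrets/initialAdminPassword
}

# Example usage:
#   jenkins_initial_password jenkins-lab
```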

In this Jenkins instance, I created five jobs to automate the creation of some infrastructure resources on AWS: an EC2 instance, an S3 bucket, an EKS cluster, a WordPress installation, and a cmd job that lets me inspect the Jenkins folders when I need to. Feel free to contribute by adding new resources.

The following figure summarizes all that was done in this example.

Obviously, it's just a starting point. We can evolve this model by adding other tools, separating responsibilities, and making the project more mature.

Every job that I created takes only one parameter, create or destroy, but the jobs could be fully parameterized. Only in the demo-web-app job, where I install WordPress using Ansible, do I pass the public IP generated by the aws-ec2 job as a parameter. Could these two jobs be one? Yes. You can evolve it!
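One way a job could turn its single parameter into a Terraform invocation is sketched below. This is an assumption about how such a job might be wired, not the author's pipeline: the function name `terraform_command` and the `ACTION` variable are hypothetical, while `terraform apply`, `terraform destroy`, and the `-auto-approve` flag are standard Terraform CLI.

```shell
#!/bin/sh
# Hypothetical helper: map a job's action parameter to a Terraform command line.
terraform_command() {
    case "$1" in
        create)  echo "terraform apply -auto-approve" ;;
        destroy) echo "terraform destroy -auto-approve" ;;
        *)       echo "unknown action: $1" >&2; return 1 ;;
    esac
}

# Example usage inside a job step, where ACTION is the job parameter:
#   cmd=$(terraform_command "$ACTION") && $cmd
```

Rejecting any value other than the two known actions keeps a typo in the parameter from running an unintended command.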

All credentials are saved in Jenkins Credentials; nothing is stored in the repositories. The tutorial.pem file is generated dynamically, and its value is obtained during the build. When we run any of these jobs, a complex infrastructure is completely ready within a few minutes through a single action. If we need to repeat that ten more times, it will be just as simple.
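Generating the key file at build time could look roughly like this. It is a sketch under assumptions, not the author's job definition: it supposes the credential store exposes the private key in an environment variable, here given the hypothetical name `SSH_KEY_CONTENT`, and the function name `write_key_file` is also hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: write a private key from the environment to a file
# that only the current user can read, since SSH rejects keys that are
# readable by other users.
write_key_file() {
    key_path="$1"
    # umask 077 in a subshell so a new file is never created world-readable.
    ( umask 077 && printf '%s\n' "$SSH_KEY_CONTENT" > "$key_path" )
    # Also tighten permissions in case the file already existed.
    chmod 600 "$key_path"
}

# Example usage:
#   write_key_file tutorial.pem
```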

    PLAY RECAP *********************************************************************
    54.167.124.23 : ok=16 changed=14 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    [Pipeline] }
    [Pipeline] // withCredentials
    [Pipeline] }
    [Pipeline] // stage
    [Pipeline] }
    [Pipeline] // node
    [Pipeline] End of Pipeline
    Finished: SUCCESS

It may seem like an old argument, but many companies and system administrators who have surrendered to the cloud are still trying to use it like an old infrastructure system, without knowing its main benefits or taking full advantage of them.

If you know AWS or another cloud provider, have looked at the sample code in this article, and still have not worked with infrastructure as code, you have now seen how much easier it makes life. I invite you to fork this example code or contribute to it by adding other platforms and other resources.
