BirdNET – The easiest way to identify birds by sound.

source link: https://birdnet.cornell.edu/

What is BirdNET?

How can computers learn to recognize birds from sounds? The Cornell Lab of Ornithology and the Chemnitz University of Technology are trying to find an answer to this question. Our research is mainly focused on the detection and classification of avian sounds using machine learning – we want to assist experts and citizen scientists in their work of monitoring and protecting our birds. BirdNET is a research platform that aims to recognize birds by sound at scale. We support various hardware and operating systems such as Arduino microcontrollers, the Raspberry Pi, smartphones, web browsers, workstation PCs, and even cloud services. BirdNET is a citizen science platform as well as analysis software for extremely large collections of audio. BirdNET aims to provide innovative tools for conservationists, biologists, and birders alike.

This page features some of our public demonstrations, including a live stream demo, a demo for the analysis of audio recordings, an Android and iOS app, and a visualization of the app's submissions. All demos are based on an artificial neural network we call BirdNET. We are constantly improving the features and performance of our demos – please make sure to check back with us regularly.

We are currently featuring 984 of the most common species of North America and Europe. We will add more species and more regions in the near future. Click here for the list of supported species.

Have any questions or want to use BirdNET to analyze a large data collection?

Please let us know (we speak English and German): [email protected]

Learn more about BirdNET:

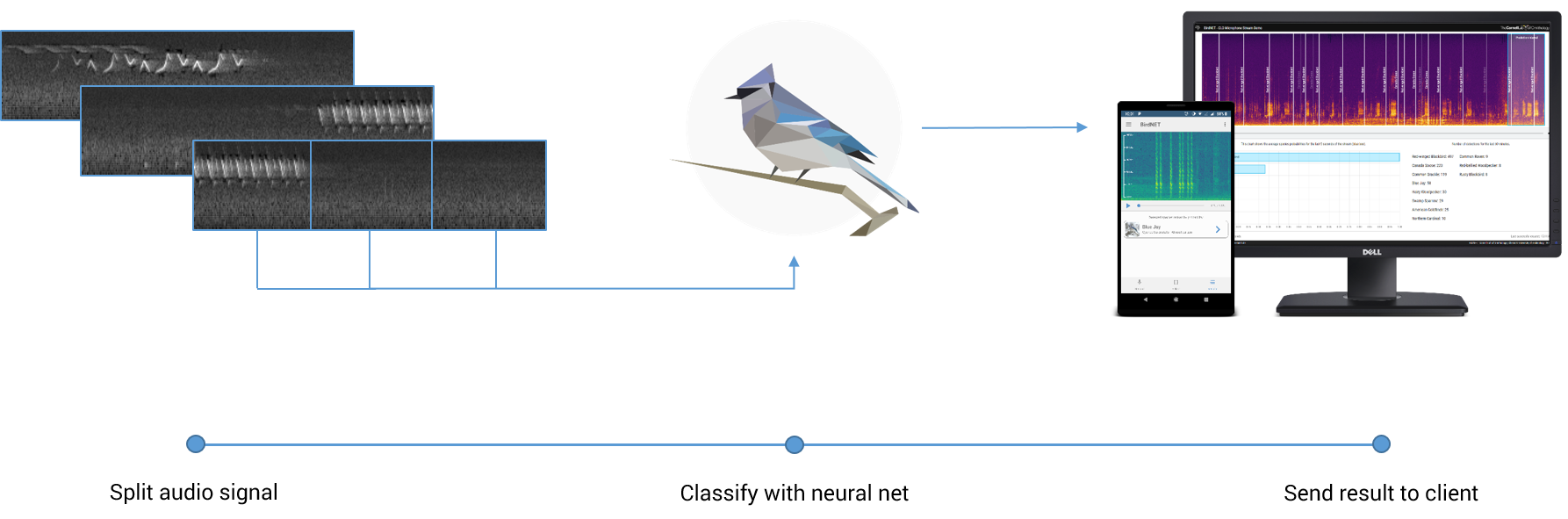

How it works:

Live Stream Demo

The live stream demo processes a live audio stream from a microphone outside the Cornell Lab of Ornithology, located in the Sapsucker Woods sanctuary in Ithaca, New York. This demo features an artificial neural network trained on the 180 most common species of the Sapsucker Woods area. Our system splits the audio stream into segments, converts those segments into spectrograms (visual representations of the audio signal) and passes the spectrograms through a convolutional neural network, all in near real time. The web page accumulates the species probabilities of the last five seconds into one prediction. If the probability for one species reaches 15% or higher, you can see a marker indicating an estimated position of the corresponding sound in the scrolling spectrogram of the live stream. This demo is intended for large screens.
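
The page says the live demo pools the species probabilities of the last five seconds and shows a marker once a species reaches 15%. The Python sketch below illustrates that pooling step only. It assumes one-second segments and plain averaging as the accumulation rule, since the page specifies neither; `RollingPredictor` and the species scores are hypothetical, and the neural network itself is not shown.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

# Stated on the page: a five-second window and a 15% threshold.
# Assumed here (not stated): one-second segments and plain averaging.
WINDOW_SECONDS = 5.0
SEGMENT_SECONDS = 1.0
THRESHOLD = 0.15


class RollingPredictor:
    """Hypothetical helper that pools per-segment species scores over a sliding window."""

    def __init__(self, window_seconds: float = WINDOW_SECONDS,
                 segment_seconds: float = SEGMENT_SECONDS,
                 threshold: float = THRESHOLD) -> None:
        # Number of segments that fit in the window, e.g. 5 one-second segments.
        self.size = max(1, round(window_seconds / segment_seconds))
        # The deque drops the oldest segment automatically once it is full.
        self.window: Deque[Dict[str, float]] = deque(maxlen=self.size)
        self.threshold = threshold

    def update(self, segment_scores: Dict[str, float]) -> List[Tuple[str, float]]:
        """Add one segment's scores; return (species, averaged score) pairs
        at or above the threshold, highest first."""
        self.window.append(segment_scores)
        totals: Dict[str, float] = {}
        for scores in self.window:
            for species, p in scores.items():
                totals[species] = totals.get(species, 0.0) + p
        # A species missing from a segment counts as 0 for that segment.
        n = len(self.window)
        hits = [(s, t / n) for s, t in totals.items() if t / n >= self.threshold]
        return sorted(hits, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    predictor = RollingPredictor()
    print(predictor.update({"Blue Jay": 0.6, "American Crow": 0.05}))
```

Averaging over a fixed-length window smooths out single noisy segments: a call detected once at 0.6 stays above 15% for a few seconds and then fades out as silent segments push it out of the window.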

Analysis of Audio Recordings

Reliable identification of bird species in recorded audio files would be a transformative tool for researchers, conservation biologists, and birders. This demo provides a web interface for the upload and analysis of audio recordings. Based on an artificial neural network featuring almost 1,000 of the most common species of North America and Europe, this demo shows the most probable species for every second of the recording. Please note: We need to transfer the audio recordings to our servers in order to process the files. This demo is intended for large screens.
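
The recording demo reports the most probable species for every second of an uploaded file. The Python sketch below shows that per-second loop under stated assumptions: the recording is already decoded into a list of samples, and `classify` is a hypothetical stand-in for the neural network that returns species-to-probability scores. The spectrogram conversion and the model itself are not shown.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical stand-in for the neural network: maps one audio segment
# to a dictionary of species -> probability.
Classifier = Callable[[Sequence[float]], Dict[str, float]]


def split_into_segments(samples: Sequence[float], sample_rate: int,
                        segment_seconds: float = 1.0) -> List[Sequence[float]]:
    """Cut a recording into consecutive segments; the last one may be shorter."""
    step = max(1, int(sample_rate * segment_seconds))
    return [samples[i:i + step] for i in range(0, len(samples), step)]


def top_species_per_segment(samples: Sequence[float], sample_rate: int,
                            classify: Classifier,
                            segment_seconds: float = 1.0
                            ) -> List[Tuple[float, str, float]]:
    """Return (start time in seconds, most probable species, score) per segment."""
    results: List[Tuple[float, str, float]] = []
    segments = split_into_segments(samples, sample_rate, segment_seconds)
    for index, segment in enumerate(segments):
        scores = classify(segment)
        if not scores:
            continue  # the classifier returned nothing for this segment
        species, score = max(scores.items(), key=lambda item: item[1])
        results.append((index * segment_seconds, species, score))
    return results


if __name__ == "__main__":
    # Toy classifier for demonstration only: "loud" vs. "quiet" by peak amplitude.
    def toy_classify(segment: Sequence[float]) -> Dict[str, float]:
        peak = max(abs(x) for x in segment)
        return {"loud": peak, "quiet": 1.0 - peak}

    audio = [0.0] * 4 + [1.0] * 4 + [0.0] * 2  # 2.5 s at a toy rate of 4 Hz
    for start, species, score in top_species_per_segment(audio, 4, toy_classify):
        print(f"{start:.1f}s  {species}  {score:.2f}")
```

Passing the classifier in as a function keeps the segmentation logic independent of any particular model, so the same loop works with a real network or with a toy scorer like the one above.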

Smartphone App

This app lets you record audio with the internal microphone of your Android or iOS device; an artificial neural network then tells you the most probable bird species present in your recording. We use the native sound recording feature of smartphones and tablets as well as the GPS service to make predictions based on location and date. Give it a try! Please note: We need to transfer the audio recordings to our servers in order to process the files. Recording quality may vary depending on your device. External microphones will probably increase the recording quality.
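
The app uses GPS position and date to make its predictions, but the page does not say how. One simple way to picture it is a post-filter that keeps only species plausible for the place and time. The Python sketch below assumes a hypothetical `OCCURRENCE` table keyed by region code and ISO calendar week; the region codes, species sets, and the fallback of keeping all scores when no data exist are illustrative choices, not BirdNET's actual method.

```python
from datetime import date
from typing import Dict, Set, Tuple

# Hypothetical occurrence table: species considered plausible for a
# (region code, ISO calendar week) pair. Entries are illustrative only.
OCCURRENCE: Dict[Tuple[str, int], Set[str]] = {
    ("NY", 20): {"Wood Thrush", "Blue Jay"},  # mid-May
    ("NY", 2): {"Blue Jay"},                  # mid-January
}


def filter_by_location_and_date(scores: Dict[str, float], region: str,
                                when: date) -> Dict[str, float]:
    """Keep only species listed as plausible for this region and week."""
    week = when.isocalendar()[1]  # ISO week number, 1 to 53
    allowed = OCCURRENCE.get((region, week))
    if allowed is None:
        # No data for this place and time: return the scores unfiltered.
        return dict(scores)
    return {species: p for species, p in scores.items() if species in allowed}


if __name__ == "__main__":
    raw = {"Wood Thrush": 0.7, "Blue Jay": 0.2, "Snowy Owl": 0.1}
    print(filter_by_location_and_date(raw, "NY", date(2021, 5, 20)))
```

Falling back to the unfiltered scores avoids hiding every detection in places the table does not cover; a stricter design might drop unknown combinations instead.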

Follow this link to download the app for Android.

Follow this link to download the app for iOS.

Note: We consider our app a prototype and by no means a final product. If you encounter any instabilities or have any questions regarding the functionality, please let us know. We will add new features in the near future; you will receive all updates automatically.

About us:

Cornell Lab of Ornithology

Dedicated to advancing the understanding and protection of the natural world, the Cornell Lab joins with people from all walks of life to make new scientific discoveries, share insights, and galvanize conservation action. Our Johnson Center for Birds and Biodiversity in Ithaca, New York, is a global center for the study and protection of birds and biodiversity, and the hub for millions of citizen-science observations pouring in from around the world.

Chemnitz University of Technology

Chemnitz University of Technology is a public university in Chemnitz, Germany. With over 11,000 students, it is the third largest university in Saxony. It was founded in 1836 as Königliche Gewerbeschule (Royal Mercantile College) and was elevated to a Technische Hochschule, a university of technology, in 1963. With approximately 1,500 employees in science, engineering and management, TU Chemnitz counts among the most important employers in the region.

Meet the team:

Stefan Kahl

I am a postdoc within the K. Lisa Yang Center for Conservation Bioacoustics at the Cornell Lab of Ornithology and the Chemnitz University of Technology. My work includes the development of AI applications using convolutional neural networks for bioacoustics, environmental monitoring, and the design of mobile human-computer interaction. I am the main developer of BirdNET and our demonstrators.

Ashakur Rahaman

I am a research analyst within the K. Lisa Yang Center for Conservation Bioacoustics at the Cornell Lab of Ornithology and the community manager of the BirdNET app. I am actively involved in environmental conservation through scientific inquiries and public engagement. Understanding the relationship between natural sounds and the effects of anthropogenic factors on the communication space of animals is my passion.

Connor Wood

My primary interest as a postdoc within the K. Lisa Yang Center for Conservation Bioacoustics at the Cornell Lab of Ornithology is understanding how wildlife populations and ecological communities respond to environmental change, and thus contributing to their conservation. I use audio data collected during large-scale monitoring projects to study North American bird communities.

Amir Dadkhah

I am a software developer and computer scientist at the Chemnitz University of Technology with a focus on applied computer science and human-centered design. I am the main developer of the iOS version of the BirdNET app.

Shyam Madhusudhana

As a postdoc within the K. Lisa Yang Center for Conservation Bioacoustics at the Cornell Lab of Ornithology, my current research involves developing solutions for automatic source separation in continuous ambient audio streams and the development of acoustic deep-learning techniques for unsupervised multi-class classification in the big-data realm. I have been actively involved with IEEE’s Oceanic Engineering Society (OES) and, currently, I serve as the coordinator of Technology Committees.

Holger Klinck

I joined the Cornell Lab of Ornithology in December 2015 and took over the directorship of the K. Lisa Yang Center for Conservation Bioacoustics (formerly known as Bioacoustics Research Program) in August 2016. I am also a Faculty Fellow with the Atkinson Center for a Sustainable Future at Cornell University. In addition, I hold an Adjunct Assistant Professor position at Oregon State University (OSU).

Related publications:

Wood, C. M., Kahl, S., Chaon, P., Peery, M. Z., & Klinck, H. (2021). Survey coverage, recording duration and community composition affect observed species richness in passive acoustic surveys. Methods in Ecology and Evolution. [PDF]

Kahl, S., Wood, C. M., Eibl, M., & Klinck, H. (2021). BirdNET: A deep learning solution for avian diversity monitoring. Ecological Informatics, 61, 101236. [Source]

Kahl, S., Clapp, M., Hopping, W., Goëau, H., Glotin, H., Planqué, R., … & Joly, A. (2020). Overview of BirdCLEF 2020: Bird Sound Recognition in Complex Acoustic Environments. In CLEF 2020 (Working Notes). [PDF]

Joly, A., Goëau, H., Kahl, S., Deneu, B., Servajean, M., Cole, E., … & Lorieul, T. (2020). Overview of LifeCLEF 2020: A System-Oriented Evaluation of Automated Species Identification and Species Distribution Prediction. In CLEF 2020 (Working Notes). [PDF]

Kahl, S. (2020). Identifying Birds by Sound: Large-scale Acoustic Event Recognition for Avian Activity Monitoring. Dissertation. Chemnitz University of Technology, Chemnitz, Germany. [PDF]

Kahl, S., Stöter, F. R., Goëau, H., Glotin, H., Planqué, R., Vellinga, W. P., & Joly, A. (2019). Overview of BirdCLEF 2019: Large-scale Bird Recognition in Soundscapes. In CLEF 2019 (Working Notes). [PDF]

Joly, A., Goëau, H., Botella, C., Kahl, S., Servajean, M., Glotin, H., … & Müller, H. (2019). Overview of LifeCLEF 2019: Identification of Amazonian plants, South & North American birds, and niche prediction. In International Conference of the Cross-Language Evaluation Forum for European Languages (pp. 387-401). Springer, Cham. [PDF]

Joly, A., Goëau, H., Botella, C., Kahl, S., Poupard, M., Servajean, M., … & Schlüter, J. (2019). LifeCLEF 2019: Biodiversity Identification and Prediction Challenges. In European Conference on Information Retrieval (pp. 275-282). Springer, Cham. [PDF]

Kahl, S., Wilhelm-Stein, T., Klinck, H., Kowerko, D., & Eibl, M. (2018). Recognizing Birds from Sound – The 2018 BirdCLEF Baseline System. arXiv preprint arXiv:1804.07177. [PDF]

Goëau, H., Kahl, S., Glotin, H., Planqué, R., Vellinga, W. P., & Joly, A. (2018). Overview of BirdCLEF 2018: monospecies vs. soundscape bird identification. In CLEF 2018 (Working Notes). [PDF]

Kahl, S., Wilhelm-Stein, T., Klinck, H., Kowerko, D., & Eibl, M. (2018). A Baseline for Large-Scale Bird Species Identification in Field Recordings. In CLEF 2018 (Working Notes). [PDF]

Kahl, S., Wilhelm-Stein, T., Hussein, H., Klinck, H., Kowerko, D., Ritter, M., & Eibl, M. (2017). Large-Scale Bird Sound Classification using Convolutional Neural Networks. In CLEF 2017 (Working Notes). [PDF]

About:

This is a demo page intended to demonstrate the joint efforts of the Cornell Lab of Ornithology and the Chemnitz University of Technology. All demos on this page are free to use and we will keep them updated regularly. If you have trouble accessing the demos or have any questions, please do not hesitate to contact us.

If you are interested in this project and want to contribute or collaborate, please get in touch. Let’s provide researchers, conservation biologists, and birders with transformative tools of acoustic monitoring.
