Drain Kubernetes Nodes... Wisely - Percona Database Performance Blog

source link: https://www.percona.com/blog/2021/01/20/drain-kubernetes-nodes-wisely/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

What is Node Draining?

What is Node Draining?

Anyone who ever worked with containers knows how ephemeral they are. In Kubernetes, not only can containers and pods be replaced, but the nodes as well. Nodes in Kubernetes are VMs, servers, and other entities with computational power where pods and containers run.

Node draining is the mechanism that allows users to gracefully move all containers from one node to the other ones. There are multiple use cases:

- Server maintenance

- Autoscaling of the k8s cluster – nodes are added and removed dynamically

- Preemptable or spot instances that can be terminated at any time

Why Drain?

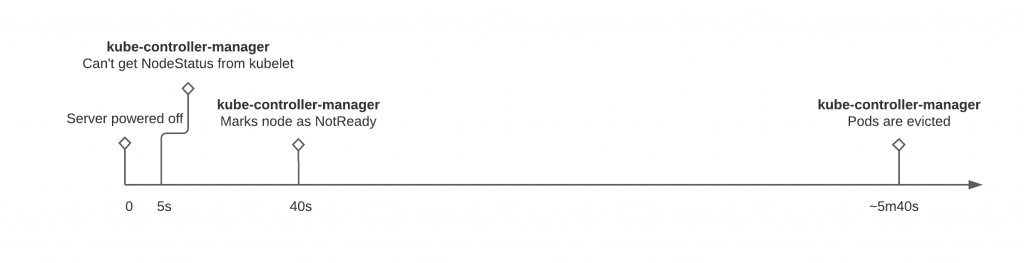

Kubernetes can automatically detect node failure and reschedule the pods to other nodes. The only problem here is the time between the node going down and the pod being rescheduled. Here’s how it goes without draining:

- Node goes down – someone pressed the power button on the server.

- kube-controller-manager, the service which runs on masters, cannot get the NodeStatus from the kubelet on the node. By default it tries to get the status every 5 seconds and it is controlled by --node-monitor-period parameter of the controller.

- Another important parameter of the kube-controller-manager is --node-monitor-grace-period, which defaults to 40s. It controls how fast the node will be marked as NotReady by the master.

- So after ~40 seconds kubectl get nodes shows one of the nodes as NotReady, but the pods are still there and shown as running. This leads us to --pod-eviction-timeout, which is 5 minutes by default (!). It means that after the node is marked as NotReady, only after 5 minutes Kubernetes starts to evict the Pods.

So if someone shuts down the server, then only after almost six minutes (with default settings), Kubernetes starts to reschedule the pods to other nodes. This timing is also valid for managed k8s clusters, like GKE.

These defaults might seem to be too high, but this is done to prevent frequent pods flapping, which might impact your application and infrastructure in a far more negative way.

Okay, Draining How?

As mentioned before – draining is the graceful method to move the pods to another node. Let’s see how draining works and what pitfalls are there.

Basics

kubectl drain {NODE_NAME} command most likely will not work. There are at least two flags that need to be set explicitly:

- --ignore-daemonsets – it is not possible to evict pods that run under a DaemonSet. This flag ignores these pods.

- --delete-emptydir-data – is an acknowledgment of the fact that data from EmptyDir ephemeral storage will be gone once pods are evicted.

Once the drain command is executed the following happens:

- The node is cordoned. It means that no new pods can be placed on this node. In the Kubernetes world, it is a Taint node.kubernetes.io/unschedulable:NoSchedule placed on the node that most of the pods tolerate.

- Pods, except the ones that belong to DaemonSets, are evicted and hopefully scheduled on another node.

Pods are evicted and now the server can be powered off. Wrong.

DaemonSets

If for some reason your application or service uses a DaemonSet primitive, the pod was not drained from the node. It means that it still can perform its function and even receive the traffic from the load balancer or the service.

The best way to ensure that it is not happening – delete the node from the Kubernetes itself.

- Stop the kubelet on the node.

- Delete the node from the cluster with kubectl delete {NODE_NAME}

If kubelet is not stopped, the node will appear again after the deletion.

Pods are evicted, node is deleted, and now the server can be powered off. Wrong again.

Load Balancer

Here is quite a standard setup:

The external load balancer sends the traffic to all Kubernetes nodes. Kube-proxy and Container Network Interface internals are dealing with routing the traffic to the correct pod.

There are various ways to configure the load balancer, but as you see it might be still sending the traffic to the node. Make sure that the node is removed from the load balancer before powering it off. For example, AWS node termination handler does not remove the node from the Load Balancer, which causes a short packet loss in the event of node termination.

Conclusion

Microservices and Kubernetes shifted the paradigm of systems availability. SRE teams are focused on resilience more than on stability. Nodes, containers, and load balancers can fail, but they are ready for it. Kubernetes is an orchestration and automation tool that helps here a lot, but there are still pitfalls that must be taken care of to meet SLAs.

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK