source link: https://vanwilgenburg.wordpress.com/2016/09/02/finding-the-link-between-heart-rate-and-running-pace-with-spark-ml-fitting-a-linear-regression-model/

Finding the link between heart rate and running pace with Spark ML – Fitting a linear regression model

Besides crafting software I’m an avid runner and cyclist, firstly for my health and secondly because of all the cool gadgets that are available. Recently I started a Coursera course on Machine Learning, and with that knowledge I combined the output of my running watch with Spark ML. In this article I discuss how to load GPS and heart rate data into a linear regression model and ultimately get a formula with heart rate as input and running pace as output.

A few weeks ago I started a Machine Learning course on Coursera, where I learned some things about gradient descent to solve linear regression problems. For me personally it was a bit too low level (but very interesting nonetheless). To get all the nitty-gritty details I suggest following the course on Coursera. When you have a bit of a background in derivatives and know that the sigma character denotes a sum, you can try the lecture notes of lesson one. But even without reading them you can still finish this article and get the gist.

Also note that this article is written for Spark 1.6.2. I’m aware that version 2.0 has been available for a while, but since it takes some time to figure everything out I haven’t had a chance yet to rewrite it for Spark 2.x. I guess the changes won’t be dramatic and the concepts will be the same.

The data

My data sources are the .gpx files exported by Strava [https://www.strava.com]. You can export to .gpx by clicking the wrench icon (and then export) on your activity in Strava. I wrote some code to parse the XML, with heart rate and speed as the output fields. Since I could dedicate a whole article to this, I’ll skip it for readability and assume our input is a tuple (heartRate in bpm, speed in km/h, lat, lon). Check the scala.xml.XML class for parsing; the speed is calculated from two consecutive data points.
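The article does not show how the speed is derived from two data points, so the following is only a minimal sketch of one common approach: the haversine great-circle distance divided by the elapsed time. The object and method names (GpsSpeed, haversineKm, speedKmh) are hypothetical, and the Earth is assumed to be a sphere with a mean radius of 6371 km.

```scala
// Hypothetical helper, not the author's code: speed between two GPS fixes.
object GpsSpeed {
  // Assumption: spherical Earth with the mean radius in kilometres.
  val EarthRadiusKm = 6371.0

  /** Great-circle (haversine) distance in km between two points given in degrees. */
  def haversineKm(lat1: Double, lon1: Double, lat2: Double, lon2: Double): Double = {
    val dLat = math.toRadians(lat2 - lat1)
    val dLon = math.toRadians(lon2 - lon1)
    val a = math.pow(math.sin(dLat / 2), 2) +
      math.cos(math.toRadians(lat1)) * math.cos(math.toRadians(lat2)) *
        math.pow(math.sin(dLon / 2), 2)
    2 * EarthRadiusKm * math.asin(math.sqrt(a))
  }

  /** Speed in km/h between two points that were recorded `seconds` apart. */
  def speedKmh(lat1: Double, lon1: Double,
               lat2: Double, lon2: Double,
               seconds: Double): Double = {
    require(seconds > 0, "the two points must have different timestamps")
    haversineKm(lat1, lon1, lat2, lon2) / (seconds / 3600.0)
  }
}
```

Applying a function like this to each pair of consecutive track points would yield the speed field of the tuple.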

The result will be an RDD which we will use later.

    val rows: RDD[(Int, Double, Double, Double)] = ???

Filtering heart rates

The next step is to determine the proper range of heart rates we want to work with. Below a certain low point the activity doesn’t count as aerobic (and it also happens to give unpredictable results). On the maximum side pick your anaerobic threshold. Above this threshold your heart rate will drift up while you keep the same speed (source is somewhere in this very well written book). In short, we work with this range because within it the correlation between pace and heart rate is very high; outside this range it’s considerably lower.

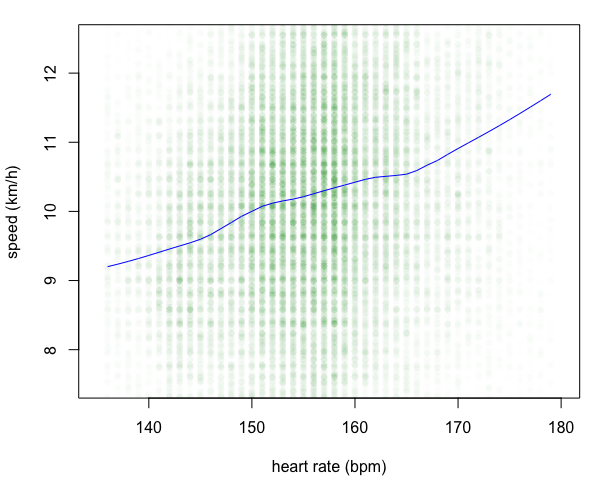

    val filteredByHrAndSpeed = rows.filter(f => f._1 > 128 && f._1 < 180)

Let’s plot the output data in a scatterplot with a line (smoothed with the lowess algorithm) in R so you can see what kind of answer we’re looking for:

There are so many dots that I decided to plot them almost transparent to get a better result. As you can see the line is roughly linear, but of course we want to let Spark figure out what the real line is. R is fine for small data sets (like the 65K points used in this example), but larger things are better left to Spark.

Another nice thing to note is that much of my running is done around a heart rate of 153 (about 75% of my maximum heart rate).

DataFrames

Since Spark 2 the RDD-based MLlib APIs are in maintenance mode, so I’ll do it the right way and use DataFrames. A DataFrame is a relatively new way of organizing data in Spark. It’s still marked as experimental, but it’s gaining so much momentum I’m pretty sure it’s here to stay.

DataFrames are a faster data structure and much more memory efficient. Besides that, their interoperability (between Java, Python and Scala) is much better. I’m still figuring out the mechanics, but Dean Wampler enthused me when he started to talk about CompactRow at Scala Days Berlin this year (slide 58).

Linear Regression

When you draw a straight line through your data points you want to validate how much sense this line makes. A method to do this is to minimize the average error (difference between line and actual point). Large errors are more serious, so the error is squared. This method is called ordinary least squares (CS229 page 4). The function to calculate the error is usually called the cost or loss function.
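Written out for a line of the form speed = A*heartRate + B, the squared-error cost described above commonly looks like the formula below. This is a sketch in the notation of the Coursera course rather than something taken from Spark: m is the number of data points, x_i the heart rate and y_i the measured speed of point i, and the factor 1/2 is only a convention that makes the derivative tidier.

```latex
J(A, B) = \frac{1}{2m} \sum_{i=1}^{m} \left( A \cdot x_i + B - y_i \right)^2
```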

This is actually a very simple cost function. More advanced methods exist (like ridge, Lasso or elastic net, which add a penalty on the size of the parameters) and are omnipresent in self-respecting machine learning libraries.

With a cost function you’re only half way. You want to find the optimal parameters for this cost function (in our case the A and B in the formula speed = A*heartRate + B).

It is often not possible to try all the values of the cost function. You want to take small steps and make them smaller when you’re reaching your target (you know you’re reaching it when the derivative is nearing 0). This algorithm is called (batch) gradient descent. There is also an algorithm called stochastic gradient descent; with this algorithm you start tuning the parameters as soon as you have processed your first data point (instead of all the points, as with batch).

Note that this will lead to an almost perfect solution, which in most cases is good enough.
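To make the idea of batch gradient descent concrete, here is a minimal sketch in plain Scala for a line y = a*x + b. It illustrates the technique only and is not what Spark runs internally. The object name, the fixed learning rate and the fixed number of iterations are all assumptions chosen for the example.

```scala
// Illustrative sketch of batch gradient descent for y = a * x + b.
// Names and parameters are hypothetical; Spark's own optimizers differ.
object GradientDescentSketch {
  def fit(xs: Array[Double], ys: Array[Double],
          learningRate: Double, iterations: Int): (Double, Double) = {
    require(xs.length == ys.length && xs.nonEmpty, "need matching, non-empty data")
    val m = xs.length
    var a = 0.0
    var b = 0.0
    for (_ <- 0 until iterations) {
      // Errors of the current line over ALL points (this is the "batch" part).
      val errors = xs.indices.map(i => a * xs(i) + b - ys(i))
      // Partial derivatives of the cost (1 / 2m) * sum(error^2) with respect to a and b.
      val gradA = xs.indices.map(i => errors(i) * xs(i)).sum / m
      val gradB = errors.sum / m
      // Step against the gradient; the steps shrink as the gradient nears 0.
      a -= learningRate * gradA
      b -= learningRate * gradB
    }
    (a, b)
  }
}
```

With a fixed learning rate the steps still get smaller near the target, because each step is proportional to the derivative. A learning rate that is too large makes the parameters diverge instead, so it has to be tuned to the scale of the data.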

When your data set isn’t too big you can get the perfect solution; you need some nifty matrix calculations for this.
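For a single input variable those matrix calculations reduce to a well-known closed form: the slope is the covariance of x and y divided by the variance of x, and the intercept follows from the means. The sketch below shows that special case in plain Scala; the object name is hypothetical and the code is not taken from Spark.

```scala
// Illustrative closed-form least squares for one feature: y = a * x + b.
object ClosedFormSketch {
  def fit(xs: Array[Double], ys: Array[Double]): (Double, Double) = {
    require(xs.length == ys.length && xs.length >= 2, "need at least two matching points")
    val meanX = xs.sum / xs.length
    val meanY = ys.sum / ys.length
    // Sum of products of deviations (proportional to the covariance of x and y).
    val covXY = xs.zip(ys).map { case (x, y) => (x - meanX) * (y - meanY) }.sum
    // Sum of squared deviations of x (proportional to the variance of x).
    val varX = xs.map(x => (x - meanX) * (x - meanX)).sum
    require(varX > 0, "all x values are identical, so the slope is undefined")
    val a = covXY / varX
    val b = meanY - a * meanX
    (a, b)
  }
}
```

Unlike gradient descent there is no learning rate or iteration count to choose, but all the data has to be summarized in one pass, and with many features the general version requires solving a matrix equation.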

Loading data into DataFrames

Now that we know something about linear regression we can prepare our data for the next step.

First we have to define a schema, map our source data to Row objects and let sqlContext create a DataFrame from these objects.

The schema uses the default field names ‘label’ and ‘features’, used in the LinearRegression object.

Each filtered tuple is mapped to a Row in an RDD, with the speed as the label and the heart rate as the only feature.

The RDD and schema are passed as parameters to sqlContext.createDataFrame, which returns a DataFrame.

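    import org.apache.spark.rdd.RDD
    import org.apache.spark.sql.{DataFrame, Dataset, Row, SQLContext}
    import org.apache.spark.sql.types.{DoubleType, StructField, StructType}
    import org.apache.spark.mllib.linalg.{Vectors, VectorUDT}

    // sc is the existing SparkContext
    val sqlContext: SQLContext = new SQLContext(sc)

    // 'label' and 'features' are the default column names LinearRegression looks for
    val schema = StructType(
      StructField("label", DoubleType, false) ::
      StructField("features", new VectorUDT, false) ::
      Nil)

    // label = speed (p._2), features = one-element vector with the heart rate (p._1)
    val input: RDD[Row] = filteredByHrAndSpeed.map(p => Row(p._2, Vectors.dense(p._1)))
    val df: DataFrame = sqlContext.createDataFrame(input, schema)

Creating and fitting the model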

The final step is to create a model and ‘fit’ it (produce a result in layman’s terms).

    import org.apache.spark.ml.regression.LinearRegression

    val lr = new LinearRegression()
      .setMaxIter(100)
      .setElasticNetParam(0.8)
      .setTol(0.000000000001)

    // fit on the DataFrame created above
    val lrModel = lr.fit(df)

MaxIter is the maximum number of iterations. More iterations deliver a better result, but will take longer. Before MaxIter is reached the result is checked against Tol (for tolerance). Sometimes the difference between two iterations is smaller than the tolerance, in which case we’re happy with the result and can stop processing. The final parameter is ElasticNetParam, which sets the mix between L1 (Lasso) and L2 (ridge) regularization. It only has an effect when RegParam is larger than 0, and since RegParam is left at its default of 0 here, fiddling around with it doesn’t change the quality of the result.

Interpreting the results

In this model we have two variables:

    println(s"Coefficients: ${lrModel.coefficients} Intercept: ${lrModel.intercept}")

The coefficient is the ‘direction’ (slope) of the line (the A in x*A + B).

Intercept is the B in x*A + B.

Now you have a formula that gives you the speed at a given heart rate.
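As a small sketch of how the formula could be used, the code below turns a heart rate into a speed and then into a running pace in minutes per kilometre. The object name and the values for A and B are made up for illustration; they are not the results of the fitted model.

```scala
// Hypothetical helper for applying speed = A * heartRate + B.
object PaceSketch {
  /** Predicted speed in km/h for a heart rate in bpm. */
  def speedKmh(heartRate: Double, a: Double, b: Double): Double =
    a * heartRate + b

  /** Converts a speed in km/h to a pace in minutes per kilometre. */
  def paceMinPerKm(speed: Double): Double = {
    require(speed > 0, "pace is only defined for a positive speed")
    60.0 / speed
  }

  def main(args: Array[String]): Unit = {
    // Made-up coefficient and intercept, NOT the output of lrModel.
    val a = 0.05
    val b = 2.5
    val speed = speedKmh(150.0, a, b)
    println(f"speed: $speed%.2f km/h, pace: ${paceMinPerKm(speed)}%.2f min/km")
  }
}
```

Keep in mind that the model was fitted on heart rates between 128 and 180, so predictions outside that range are extrapolations.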

Conclusion

The library is still marked as experimental so please let me know if anything stops working and I’ll update the article.

I’m not sure if there really is a linear relation between heart rate and pace, quadratic seems more logical, but that might be something for next time.

I hope to find the time to test things with Spark 2.x; it will probably make things a bit easier.
