Timeouts and Retries In Spring Cloud Gateway

source link: https://piotrminkowski.wordpress.com/2020/02/23/timeouts-and-retries-in-spring-cloud-gateway/

In this article I’m going to describe two features of Spring Cloud Gateway: retries based on the GatewayFilter pattern and timeouts based on a global configuration. In previous articles in this series I described rate limiting based on Redis and a circuit breaker built with Resilience4J. For more details about those two features, refer to those earlier blog posts.

Example

We use the same repository as in the two previous articles about Spring Cloud Gateway. The repository address is https://github.com/piomin/sample-spring-cloud-gateway.git. The test class dedicated to the current article is GatewayRetryTest.

Implementation and testing

As you probably know, most operations in Spring Cloud Gateway are implemented using the filter pattern, where each filter is an implementation of the GatewayFilter interface. A filter can modify incoming requests and outgoing responses before or after the downstream request is sent.

As in the examples described in my two previous articles about Spring Cloud Gateway, we will build a JUnit test class. It leverages Testcontainers MockServer to run a mock that exposes REST endpoints.

Before running the test we need to prepare a sample route containing the Retry filter. When defining this type of GatewayFilter we may set multiple parameters. Typically you will use the following three of them:

- retries – the number of retries that should be attempted for a single incoming request. The default value of this property is 3.
- statuses – the list of HTTP status codes that should be retried, represented by using the org.springframework.http.HttpStatus enum name.
- backoff – the policy used for calculating timeouts between subsequent retry attempts. By default this property is disabled.

Let’s start with the simplest scenario – using the default parameter values. In that case we just need to set the name of the GatewayFilter for the route – Retry.

    @ClassRule
    public static MockServerContainer mockServer = new MockServerContainer();

    @BeforeClass
    public static void init() {
        // Route /account/** to the MockServer container and strip the /account prefix
        System.setProperty("spring.cloud.gateway.routes[0].id", "account-service");
        System.setProperty("spring.cloud.gateway.routes[0].uri", "http://192.168.99.100:" + mockServer.getServerPort());
        System.setProperty("spring.cloud.gateway.routes[0].predicates[0]", "Path=/account/**");
        System.setProperty("spring.cloud.gateway.routes[0].filters[0]", "RewritePath=/account/(?<path>.*), /$\\{path}");
        // Enable the Retry filter with its default settings
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].name", "Retry");

        MockServerClient client = new MockServerClient(mockServer.getContainerIpAddress(), mockServer.getServerPort());
        // The first three calls to /1 fail with HTTP 500
        client.when(HttpRequest.request().withPath("/1"), Times.exactly(3))
            .respond(response()
                .withStatusCode(500)
                .withBody("{\"errorCode\":\"5.01\"}")
                .withHeader("Content-Type", "application/json"));
        // Every later call to /1 succeeds
        client.when(HttpRequest.request().withPath("/1"))
            .respond(response()
                .withBody("{\"id\":1,\"number\":\"1234567891\"}")
                .withHeader("Content-Type", "application/json"));
        // OTHER RULES
    }

Our test method is very simple. It just uses Spring Boot’s TestRestTemplate to perform a single call to the test endpoint.

    @Autowired
    TestRestTemplate template;

    @Test
    public void testAccountService() {
        LOGGER.info("Sending /1...");
        ResponseEntity<Account> r = template.exchange("/account/{id}", HttpMethod.GET, null, Account.class, 1);
        LOGGER.info("Received: status->{}, payload->{}", r.getStatusCodeValue(), r.getBody());
        Assert.assertEquals(200, r.getStatusCodeValue());
    }

Before running the test we will change the logging level for Spring Cloud Gateway logs to see additional information about the retry process.

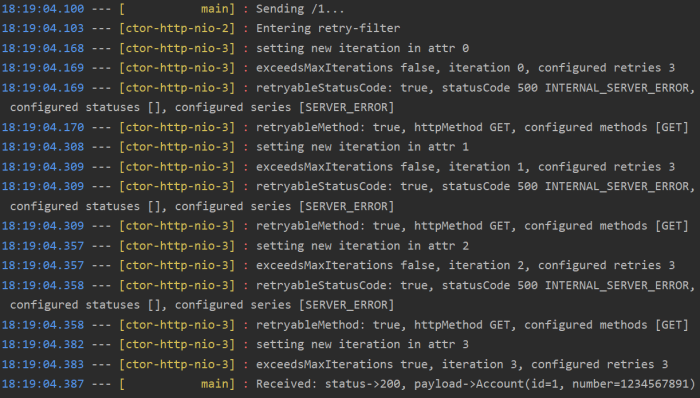

    logging.level.org.springframework.cloud.gateway.filter.factory: TRACE

Since we didn’t set any backoff policy, the subsequent attempts are made without any delay. As you can see in the picture below, the default number of retries is 3, and the filter retries all HTTP 5XX codes (SERVER_ERROR).

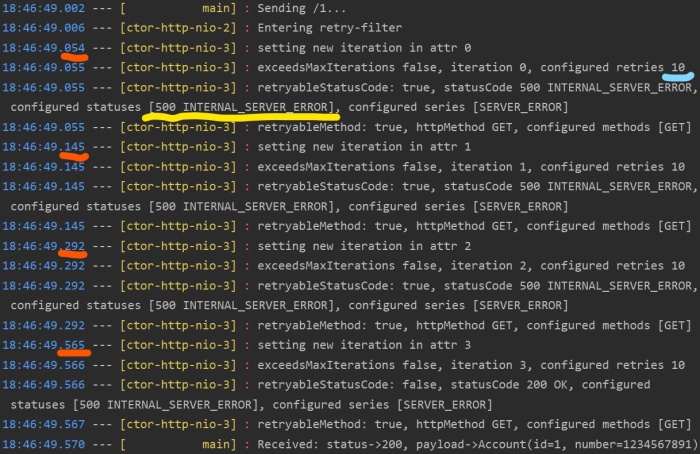
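The default behaviour described above can be modelled in plain Java without the gateway. This is a minimal sketch under stated assumptions, not the filter's actual implementation: `FakeUpstream` and `callWithRetries` are hypothetical names, and the only rule modelled is "retry while the status is 5xx, at most `retries` times". The fake upstream mirrors the mock endpoint from the test: three HTTP 500 responses, then success.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

public class RetrySketch {

    /** Hypothetical stand-in for the mock endpoint: replays canned status codes, then answers 200. */
    static final class FakeUpstream {
        private final Deque<Integer> canned;
        int calls = 0;

        FakeUpstream(List<Integer> statuses) {
            this.canned = new ArrayDeque<>(statuses);
        }

        int call() {
            calls++;
            return canned.isEmpty() ? 200 : canned.poll();
        }
    }

    /** Calls the upstream once, then retries up to {@code retries} times while the status is 5xx. */
    static int callWithRetries(FakeUpstream upstream, int retries) {
        int status = upstream.call();
        for (int attempt = 1; attempt <= retries && status >= 500 && status <= 599; attempt++) {
            status = upstream.call();
        }
        return status;
    }

    public static void main(String[] args) {
        // Same shape as the mock rules: three failures, then a successful response
        FakeUpstream upstream = new FakeUpstream(List.of(500, 500, 500));
        int status = callWithRetries(upstream, 3);
        System.out.println("status=" + status + ", upstream calls=" + upstream.calls);
    }
}
```

Running `main` prints `status=200, upstream calls=4`: the original request plus three retries, which is why the test can assert HTTP 200 even though the mock fails three times.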

Now, let’s provide a slightly more advanced configuration. We can change the number of retries and set an exact HTTP status code to retry instead of a whole series of codes. In our case the retried status code is HTTP 500, since that is what our mock endpoint returns. We can also enable a backoff policy that starts at 50ms and grows to a maximum of 500ms. The factor is 2, which means that the backoff is calculated using the formula prevBackoff * factor. The formula becomes slightly different when you set the property basedOnPreviousValue to false – firstBackoff * (factor ^ n). Here’s the appropriate configuration for our current test.

    @ClassRule
    public static MockServerContainer mockServer = new MockServerContainer();

    @BeforeClass
    public static void init() {
        System.setProperty("spring.cloud.gateway.routes[0].id", "account-service");
        System.setProperty("spring.cloud.gateway.routes[0].uri", "http://192.168.99.100:" + mockServer.getServerPort());
        System.setProperty("spring.cloud.gateway.routes[0].predicates[0]", "Path=/account/**");
        System.setProperty("spring.cloud.gateway.routes[0].filters[0]", "RewritePath=/account/(?<path>.*), /$\\{path}");
        // Retry filter: up to 10 retries, only for HTTP 500, with exponential backoff
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].name", "Retry");
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].args.retries", "10");
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].args.statuses", "INTERNAL_SERVER_ERROR");
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].args.backoff.firstBackoff", "50ms");
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].args.backoff.maxBackoff", "500ms");
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].args.backoff.factor", "2");
        System.setProperty("spring.cloud.gateway.routes[0].filters[1].args.backoff.basedOnPreviousValue", "true");

        MockServerClient client = new MockServerClient(mockServer.getContainerIpAddress(), mockServer.getServerPort());
        // The first three calls to /1 fail with HTTP 500
        client.when(HttpRequest.request().withPath("/1"), Times.exactly(3))
            .respond(response()
                .withStatusCode(500)
                .withBody("{\"errorCode\":\"5.01\"}")
                .withHeader("Content-Type", "application/json"));
        // Every later call to /1 succeeds
        client.when(HttpRequest.request().withPath("/1"))
            .respond(response()
                .withBody("{\"id\":1,\"number\":\"1234567891\"}")
                .withHeader("Content-Type", "application/json"));
        // OTHER RULES
    }

If you run the same test one more time with the new configuration, the logs look a little different. I have highlighted the most important differences in the picture below. As you can see, the number of retries is now 10, and only HTTP 500 is retried.
After setting a backoff policy, the first retry attempt is performed after 50ms, the second after 100ms, the third after 200ms, and so on.
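To make the two backoff formulas concrete, here is a small plain-Java sketch that computes the delay before each retry. It is an illustrative model only, not the gateway's code: the class and method names are hypothetical, jitter is ignored, and it assumes that n is 0 for the first retry so that the first delay equals firstBackoff, which matches the 50ms, 100ms, 200ms sequence described above.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

public class BackoffSketch {

    /** Returns the delay before each of the first {@code retries} retry attempts. */
    static List<Duration> schedule(Duration first, Duration max, int factor,
                                   boolean basedOnPreviousValue, int retries) {
        List<Duration> delays = new ArrayList<>();
        Duration previous = null;
        for (int n = 0; n < retries; n++) {
            Duration next;
            if (basedOnPreviousValue) {
                // prevBackoff * factor, starting from firstBackoff
                next = (previous == null) ? first : previous.multipliedBy(factor);
            } else {
                // firstBackoff * (factor ^ n), with n = 0 for the first retry (assumption)
                next = first.multipliedBy((long) Math.pow(factor, n));
            }
            if (next.compareTo(max) > 0) {
                next = max; // never wait longer than maxBackoff
            }
            delays.add(next);
            previous = next;
        }
        return delays;
    }

    public static void main(String[] args) {
        Duration first = Duration.ofMillis(50);
        Duration max = Duration.ofMillis(500);
        for (Duration d : schedule(first, max, 2, true, 6)) {
            System.out.print(d.toMillis() + "ms ");
        }
        System.out.println();
    }
}
```

With the values from the test configuration this prints `50ms 100ms 200ms 400ms 500ms 500ms`. In this simplified model both formulas produce the same sequence, because without jitter the previous delay is always exactly firstBackoff multiplied by a power of the factor.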

We have already analysed the retry mechanism in Spring Cloud Gateway. Timeouts are another important aspect of request routing. With Spring Cloud Gateway we can easily set global read and connect timeouts. Alternatively, we can define them for each route separately. Let’s add the following property to our test route definition. It sets the global response timeout to 100ms. Our test route now contains the already tested Retry filter together with the newly added global read timeout of 100ms.

    System.setProperty("spring.cloud.gateway.httpclient.response-timeout", "100ms");

Alternatively, we may set the timeout for a single route. If we prefer that solution, here is the line we should add to our sample test.

    System.setProperty("spring.cloud.gateway.routes[1].metadata.response-timeout", "100");

Then we define another test endpoint, available under the path /2, that responds with a 200ms delay. Our current test method is pretty similar to the previous one, except that we expect HTTP 504 as the result.

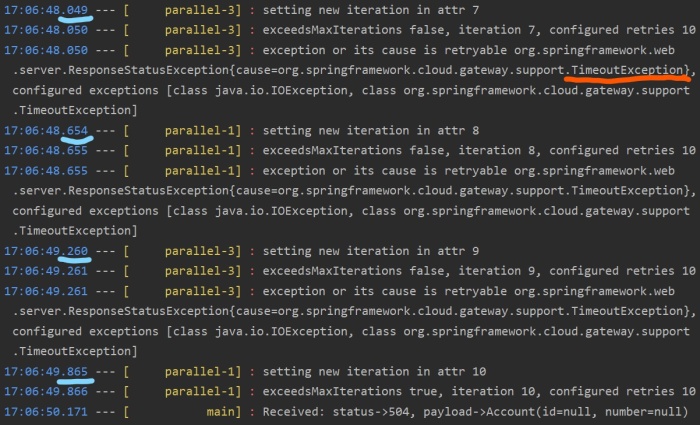

    @Test
    public void testAccountServiceFail() {
        LOGGER.info("Sending /2...");
        ResponseEntity<Account> r = template.exchange("/account/{id}", HttpMethod.GET, null, Account.class, 2);
        LOGGER.info("Received: status->{}, payload->{}", r.getStatusCodeValue(), r.getBody());
        Assert.assertEquals(504, r.getStatusCodeValue());
    }

Let’s run our test. The result is visible in the picture below. I have also highlighted the most important parts of the logs. After several failed retry attempts, the delay between subsequent attempts reaches the maximum backoff time – 500ms. Since each attempt is cut off by the 100ms response timeout, the visible interval between retry attempts is around 600ms. Moreover, the Retry filter by default handles IOException and TimeoutException, which is visible in the logs (exceptions parameter).
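The roughly 600ms interval can be checked with simple arithmetic on a virtual clock. The sketch below is a simplified model under stated assumptions, not a measurement: every attempt is assumed to run into the 100ms response timeout, the filter is assumed to wait the current backoff before the next attempt, and network and processing overhead are ignored. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class RetryTimelineSketch {

    /** Start time (ms, on a virtual clock) of every attempt against an upstream that always times out. */
    static List<Long> attemptStartTimes(long responseTimeoutMs, long firstBackoffMs,
                                        long maxBackoffMs, int factor, int retries) {
        List<Long> starts = new ArrayList<>();
        long clock = 0;
        long backoff = firstBackoffMs;
        starts.add(clock); // the original request
        for (int retry = 1; retry <= retries; retry++) {
            clock += responseTimeoutMs; // the attempt is abandoned when the timeout fires
            clock += backoff;           // then the filter waits before retrying
            starts.add(clock);
            backoff = Math.min(backoff * factor, maxBackoffMs);
        }
        return starts;
    }

    public static void main(String[] args) {
        // Values from the test: 100ms timeout, backoff 50ms..500ms, factor 2, 10 retries
        List<Long> starts = attemptStartTimes(100, 50, 500, 2, 10);
        for (int i = 1; i < starts.size(); i++) {
            System.out.println("retry " + i + " starts " + (starts.get(i) - starts.get(i - 1))
                    + "ms after the previous attempt");
        }
    }
}
```

In this model the intervals are 150ms, 200ms, 300ms and 500ms for the first four retries, and 600ms for every retry after that, once the backoff has reached its 500ms cap.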

Summary

This article is the last in the series about traffic management in Spring Cloud Gateway. I have already described the following patterns: rate limiting, circuit breaker, fallback, failure retries and timeout handling. That is only a part of Spring Cloud Gateway’s features. I hope that my articles help you build an API gateway for your microservices in an optimal way.
