Surround Sound (5.1 and 7.1) in libwebrtc and Chrome

source link: http://webrtcbydralex.com/index.php/2020/04/08/surround-sound-5-1-and-7-1-in-libwebrtc-and-chrome/



While scalability is often the first excuse some people give for not using WebRTC (a persistent claim, even though that myth was debunked long ago and many times over since), “quality” is certainly the second. WebRTC cannot do <put a resolution here>, it’s just good enough for webcams, it cannot use more than 2.5 Mbps, …. While all of those claims are false, there is one that was correct: the default audio codecs in WebRTC implementations, especially in browsers, are not as good at carrying spatial information as their streaming or gaming counterparts… until recently!

(My deepest apologies to @voluntas ^o^)

INTRODUCTION TO SURROUND SOUND

The first surround sound format (quadraphonic sound) came out in 1969. We are in 2020, and WebRTC supports stereo Opus at best? Let’s not even compare it to current audio standards like the AAC 5.1 you get by default on Apple TV or Netflix, or Dolby Atmos in movie theatres. If you want a crash course on the past 40 years of audio (and want to feel old like I did), this site does a good job of presenting the different technologies historically, in simple words.

Wait, did I say Netflix? It’s that VOD thingy over the internet, right? So it is possible to get 5.1 over the internet, then? Well, yes, but there are nuances.

SURROUND SOUND OVER THE INTERNET

First, if you use native apps, you can do whatever you want. If you use a native iOS app to send to an Apple TV, for example, the HTML5 specifications do not apply to you; you are only limited by what the Apple TV lets you do. Replace Apple TV with Roku, Android TV, etc., and the sentence is still true.

However, if you want interoperability with the web, you are limited to 3 options:

– What the video tag can support directly (decoder only)

– Media Source Extensions, MSE (still tied to the video tag, decoder only)

– WebRTC

Take AAC as an example: it is supported by the video tag and by Media Source Extensions, but not by WebRTC. For AV1, support was added to browsers more than a year ago, and to WebRTC only now. It is much easier to make a real-time decoder, which everyone wants, than a real-time encoder, which only WebRTC will use. You also need an RTP payload specification for any codec you want to use in WebRTC, whereas the definition of the codec itself is enough for streaming through MSE. That explains the lag in implementation and adoption of codecs between the media stack and the WebRTC stack (questions like “does Chrome support codec N?” are thus difficult to answer with a simple yes or no).

REAL TIME SURROUND SOUND IN WEBRTC

When it comes to WebRTC, H.264 made it into the list of mandatory-to-implement codecs. If you were to add AAC support, you would have all you need to support the entire MPEG-4 format, which has an existing RTP payload format [3] and a separate, backward-compatible extension for surround audio [4]. So why is it not here already? Even RTMP supported AAC, which is not surprising since AAC was released in 1997.

The adoption of H.264 in the WebRTC standard (I should say RTCWEB) was facilitated by Cisco’s release of OpenH264 in 2014. Using the fact that H.264 licensing costs are capped, and that Cisco was already paying the maximum, Cisco de facto made software H.264 implementations free (hardware implementation license costs are shouldered by the hardware manufacturer) for anybody using the Cisco-provided precompiled binaries. (It’s slightly more complicated: MP4 would require High Profile, which OpenH264 does not provide, but this is enough for the sake of this discussion.)

Very quickly, the AAC licensor removed the corresponding option, de facto preventing AAC from getting the same treatment. Eventually, the standards committee decided to go for the more open Opus, whose stereo form (the only one standardised for RTP media transport) would be mandatory to implement.

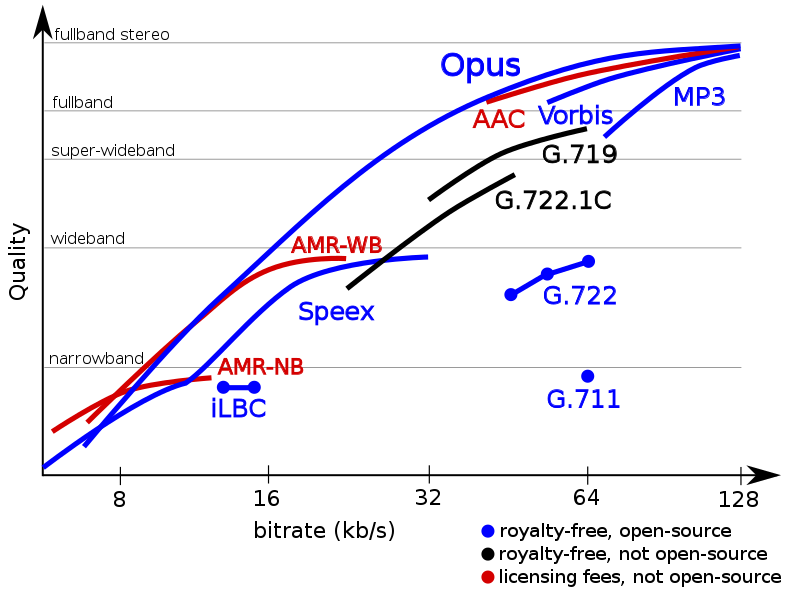

On paper, Opus has a lot to offer. It’s free as in beer (royalty-free) and free as in freedom (open-source implementation, and a standard from 2012 [5], updated in 2017 [6]). It has better quality than other default codecs for almost any given usage (see above). It even has an RTP payload specification, so it can be used directly in WebRTC. What’s not to like?

The devil is always in the details.

The original codec specification details all of the 256 channels Opus can handle. It defines how some channels can be standalone (mono) and others coupled (stereo), and how one can, for example, reconstruct the usual 5.1 surround audio by combining a set of stereo and mono streams.
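A quick count shows how the pieces fit. With $N$ streams in total, of which $C$ are coupled (stereo), each coupled stream decodes to two channels and each of the remaining $N - C$ streams decodes to one:

$$
\text{decoded channels} = 2C + (N - C) = N + C
$$

For 5.1, libwebrtc’s configuration uses $N = 4$ and $C = 2$, giving $4 + 2 = 6$ channels. For 7.1, the standard Ogg (Vorbis-order) mapping for 8 channels uses $N = 5$ and $C = 3$, giving $5 + 3 = 8$.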

The Ogg container specification for Opus describes how to handle up to 5.1 and 7.1 surround sound with Opus. That is a dramatic reduction from the 256 channels of the codec spec, but a practical one, aligned with the best sound acquisition/capture and audio processing systems of the time.
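The two surround entries of that Ogg mapping fit in a small table. The sketch below is illustrative: the type and function names are hypothetical, not libwebrtc or libopus APIs. The stream counts and mappings are those of the Vorbis channel mapping used by the Ogg encapsulation, with output channels in Vorbis order (front left, center, front right, [side left, side right,] rear left, rear right, LFE).

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical type, not a libwebrtc or libopus API: one surround entry of the
// Vorbis channel mapping used when Opus is stored in an Ogg container.
struct VorbisLayout {
  int channels;                       // output channels
  int streams;                        // total Opus streams
  int coupled_streams;                // how many of those streams are stereo
  std::vector<int> mapping;           // output channel -> decoded channel index
  std::vector<std::string> speakers;  // output channels, in Vorbis order
};

// Returns the 5.1 (6 channels) or 7.1 (8 channels) layout, nullptr otherwise.
const VorbisLayout* FindSurroundLayout(int channels) {
  static const std::vector<VorbisLayout> kLayouts = {
      {6, 4, 2, {0, 4, 1, 2, 3, 5}, {"FL", "FC", "FR", "RL", "RR", "LFE"}},
      {8, 5, 3, {0, 6, 1, 2, 3, 4, 5, 7},
       {"FL", "FC", "FR", "SL", "SR", "RL", "RR", "LFE"}},
  };
  for (const VorbisLayout& layout : kLayouts) {
    if (layout.channels == channels) {
      // One mapping entry and one speaker name per output channel.
      assert(static_cast<int>(layout.mapping.size()) == layout.channels);
      assert(layout.speakers.size() == layout.mapping.size());
      return &layout;
    }
  }
  return nullptr;
}
```

In both rows, the center and LFE channels point at the last decoded indices, which belong to mono streams, while the left/right pairs come from coupled streams.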

The Opus RTP payload specification, though, only defines stereo at best. While that fit the original use case of WebRTC (1-1, p2p web calls), as the technology evolved toward uses like Chromecast and Stadia, surround sound became mandatory to be on par with industry standards. For millicast.com, the leading real-time streaming platform, the interest was twofold: how to transcode incoming RTMP streams carrying AAC 5.1 into a WebRTC stream without losing spatial information (i.e. without downmixing the audio from 6 channels to 2), and how to support surround sound end-to-end over WebRTC with minimal latency.
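For the transcoding case, keeping the spatial information means moving each decoded AAC channel into the slot the Opus surround mapping expects, rather than downmixing. A minimal sketch, not Millicast’s actual pipeline, assuming the AAC decoder outputs interleaved 16-bit PCM in the AAC channel configuration 6 order (C, L, R, Ls, Rs, LFE) and that the Opus encoder expects the Vorbis 5.1 order (FL, FC, FR, RL, RR, LFE). Some decoders already reorder their output, so check yours. The function and constant names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed input order (AAC channelConfiguration 6): C, L, R, Ls, Rs, LFE.
// Assumed output order (Vorbis 5.1):               FL, FC, FR, RL, RR, LFE.
// Entry i gives the AAC channel that feeds Vorbis channel i.
constexpr std::array<int, 6> kAacIndexForVorbisChannel = {1, 0, 2, 3, 4, 5};

// Reorders interleaved 5.1 frames. Nothing is mixed or dropped, so all six
// channels keep their spatial position.
std::vector<std::int16_t> AacToVorbis51(const std::vector<std::int16_t>& aac) {
  assert(aac.size() % 6 == 0);  // whole frames only
  std::vector<std::int16_t> vorbis(aac.size());
  for (std::size_t frame = 0; frame < aac.size(); frame += 6) {
    for (std::size_t ch = 0; ch < 6; ++ch) {
      vorbis[frame + ch] = aac[frame + kAacIndexForVorbisChannel[ch]];
    }
  }
  return vorbis;
}
```

Compared with a stereo downmix, this costs one copy per sample and leaves the spatial decisions to the playback side.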

SURROUND SOUND IN LIBWEBRTC / CHROME

Google then started implementing Opus surround sound in 2017, in plain sight. By April 2019 it was in libwebrtc, and by May 2019 in Chrome (around Chrome 78). That’s good to know, but I know what’s coming next: how did they do it, and how can I use it?

Since there is no Opus RTP payload specification for more than 2 channels, Google used the next best thing: the Ogg container specification. In it, especially in section 5.1, you can see that any multichannel configuration is built from a collection of mono and stereo streams, advertised as a total number of streams and a number of coupled (stereo) streams, with a mapping that gives the spatial location of each channel.
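Those three values can be checked mechanically. The sketch below parses a `channel_mapping` string and applies the constraints of the Ogg Opus channel mapping (at least one stream, no more coupled streams than streams, streams plus coupled streams at most 255, and every mapping entry either pointing at a decoded channel or equal to 255 for silence). The type and function names are hypothetical, not libwebrtc APIs.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical type: the Ogg-style parameters of an Opus multistream layout.
struct MultistreamLayout {
  int num_streams;                   // total number of streams (N)
  int coupled_streams;               // number of stereo streams (M)
  std::vector<int> channel_mapping;  // one decoded-channel index per output channel
};

// Parses a comma-separated list such as "0,4,1,2,3,5".
std::vector<int> ParseChannelMapping(const std::string& text) {
  std::vector<int> mapping;
  std::stringstream stream(text);
  std::string item;
  while (std::getline(stream, item, ',')) {
    mapping.push_back(std::stoi(item));
  }
  return mapping;
}

// N >= 1, 0 <= M <= N, N + M <= 255, and each mapping entry is either one of
// the N + M decoded channels or 255 (silence).
bool IsValidLayout(const MultistreamLayout& layout) {
  const int n = layout.num_streams;
  const int m = layout.coupled_streams;
  if (n < 1 || m < 0 || m > n || n + m > 255) return false;
  if (layout.channel_mapping.empty()) return false;
  for (int index : layout.channel_mapping) {
    if (index != 255 && (index < 0 || index >= n + m)) return false;
  }
  return true;
}
```

With the 5.1 values (4 streams, 2 coupled, mapping `0,4,1,2,3,5`) there are 6 decoded channels, so every mapping entry from 0 to 5 is accepted.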

The implementation in Chrome/libwebrtc uses the “multiopus” codec name to refer to those two (5.1 and 7.1) configurations of Opus, and plain “opus” for the mandatory-to-implement stereo mode.

```cpp
class AudioEncoderMultiChannelOpusImpl final : public AudioEncoder {
 public:
  // Static interface for use by BuiltinAudioEncoderFactory.
  static constexpr const char* GetPayloadName() { return "multiopus"; }
  // ... (rest of the class omitted)
};
```

The default encoder and decoder factories are provided with “multiopus” support, but they are explicitly told not to advertise it. This means the default createOffer and createAnswer APIs will NOT list those codecs, even though they are supported. You have to know they are there and manage them manually at the signalling level.
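Managing it at the signalling level essentially means writing the SDP attribute lines yourself. Below is a minimal sketch that builds the `a=rtpmap` and `a=fmtp` lines from the name, clock rate, channel count and parameters libwebrtc uses for its 5.1 format. The helper names are hypothetical, the payload type is an arbitrary free dynamic value picked by the application, and the same payload type must also be listed on the `m=audio` line.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical helper: "a=rtpmap:<pt> <codec>/<clock rate>/<channels>".
std::string RtpmapLine(int payload_type, const std::string& codec,
                       int clockrate_hz, int channels) {
  return "a=rtpmap:" + std::to_string(payload_type) + " " + codec + "/" +
         std::to_string(clockrate_hz) + "/" + std::to_string(channels);
}

// Hypothetical helper: "a=fmtp:<pt> name=value;name=value;...".
// std::map iterates in name order, so the output line is deterministic.
std::string FmtpLine(int payload_type,
                     const std::map<std::string, std::string>& params) {
  std::string line = "a=fmtp:" + std::to_string(payload_type) + " ";
  bool first = true;
  for (const auto& param : params) {
    if (!first) line += ";";
    line += param.first + "=" + param.second;
    first = false;
  }
  return line;
}
```

For payload type 100, this yields `a=rtpmap:100 multiopus/48000/6` and `a=fmtp:100 channel_mapping=0,4,1,2,3,5;coupled_streams=2;minptime=10;num_streams=4;useinbandfec=1`. Both peers have to agree on these values, since neither side will propose them on its own.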

```cpp
rtc::scoped_refptr<webrtc::AudioEncoderFactory> CreateWebrtcAudioEncoderFactory() {
  // L16 and multichannel Opus are supported, but wrapped in
  // NotAdvertisedEncoder so they are not listed in offers and answers.
  return webrtc::CreateAudioEncoderFactory<
      webrtc::AudioEncoderOpus, webrtc::AudioEncoderIsac,
      webrtc::AudioEncoderG722, webrtc::AudioEncoderG711,
      NotAdvertisedEncoder<webrtc::AudioEncoderL16>,
      NotAdvertisedEncoder<webrtc::AudioEncoderMultiChannelOpus>>();
}
```

There are two SDP formats (5.1 and 7.1). They map directly onto the Ogg container specification and are easy to extract from the implementation. Here is the 5.1 one:

```cpp
AudioCodecInfo surround_5_1_opus_info{48000, 6,
                                      /* default_bitrate_bps= */ 128000};
surround_5_1_opus_info.allow_comfort_noise = false;
surround_5_1_opus_info.supports_network_adaption = false;
SdpAudioFormat opus_format({"multiopus",
                            48000,  /* sampling rate in Hz */
                            6,      /* number of channels: 5+1 */
                            {{"minptime", "10"},
                             {"useinbandfec", "1"},
                             {"channel_mapping", "0,4,1,2,3,5"},
                             {"num_streams", "4"},        /* total number of streams */
                             {"coupled_streams", "2"}}});  /* number of stereo streams */
specs->push_back({std::move(opus_format), surround_5_1_opus_info});
```
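To read the `channel_mapping` of that 5.1 format, resolve each output channel to the stream that feeds it. The sketch follows the Ogg Opus rules: decoded indices below twice the number of coupled streams belong to stereo streams (even index = left, odd = right), the following ones belong to mono streams, and 255 means silence. The speaker names assume the Vorbis 5.1 order (front left, center, front right, rear left, rear right, LFE), and the function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <string>

// Names what feeds a decoded channel index, given the number of coupled streams.
std::string DescribeSource(int decoded_index, int coupled_streams) {
  if (decoded_index == 255) return "silence";
  if (decoded_index < 2 * coupled_streams) {
    return "stream " + std::to_string(decoded_index / 2) +
           (decoded_index % 2 == 0 ? " (left)" : " (right)");
  }
  return "stream " + std::to_string(decoded_index - coupled_streams) + " (mono)";
}

// The 5.1 parameters from the libwebrtc format, with output channels assumed
// to be in Vorbis order.
constexpr std::array<const char*, 6> kSpeakers51 = {"FL", "FC", "FR", "RL", "RR", "LFE"};
constexpr std::array<int, 6> kChannelMapping51 = {0, 4, 1, 2, 3, 5};
constexpr int kCoupledStreams51 = 2;

// Returns e.g. "FC <- stream 2 (mono)" for output channel 1.
std::string Trace51(int output_channel) {
  assert(output_channel >= 0 && output_channel < 6);
  return std::string(kSpeakers51[output_channel]) + " <- " +
         DescribeSource(kChannelMapping51[output_channel], kCoupledStreams51);
}
```

Read this way, the mapping says that two stereo streams carry the front and rear left/right pairs, while the center and the LFE each get their own mono stream.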

DEMO TIME

These demos were prepared by the CoSMo and Janus teams, collectively known as the “WebRTC A-Team” (the ones you go see if you have a problem and no one else can help. If you can find them, maybe you can hire… the A-Team [0]).

First, a loopback demo with CoSMo’s Medooze server. This web app loads the Fraunhofer AAC 5.1 demo file from the official website (here), plays it in the browser using the normal media stack, transcodes it into a WebRTC stream with multiopus, sends it through a Medooze SFU, and plays it back in Chrome using the WebRTC stack. You get both players side by side, so you can visually gauge the latency induced by the Medooze SFU during transcoding. [here]

Then, not ONE but TWO quick demos have been made available by our Meetecho team (what?!):

The first demo uses the EchoTest plugin and takes the official Fraunhofer AAC 5.1 video to inject the surround streams.

The second demo, using the VideoRoom plugin, always publishes a surround stream from the same video. If you want to see how that behaves with a regular participant, you can join as a normal participant using this client.

They also illustrate two ways of handling the media: using either a single peer connection, or separate peer connections.

Happy Hacking.

Extra reading and references for the technical minded

[1] – https://www.digitaltrends.com/home-theater/ultimate-surround-sound-guide-different-formats-explained/

[2] – https://en.wikipedia.org/wiki/Comparison_of_audio_coding_formats

[3] – https://tools.ietf.org/html/rfc6416

[4] – https://tools.ietf.org/html/rfc5691

[5] – https://tools.ietf.org/html/rfc6716

[6] – https://tools.ietf.org/html/rfc8251
