Rethinking Web Performance with Service Workers

source link: https://medium.baqend.com/the-technology-behind-fast-websites-2638196fa60a



30 Man-Years of Research in a 30-Minute Read

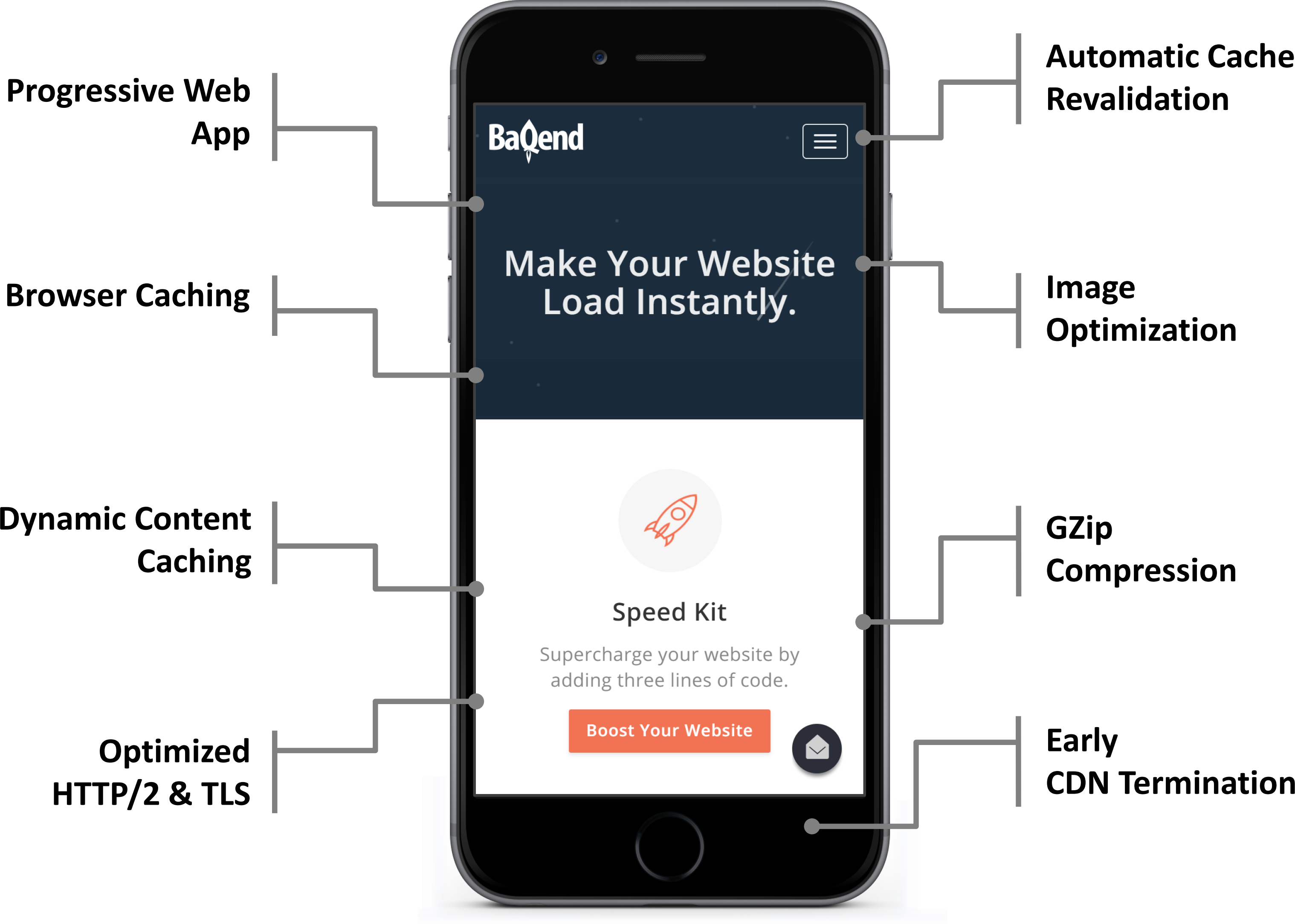

This article surveys the current state of the art in page speed optimization through Service Worker technology. It contains the gist of more than 30 man-years of research that went into Speed Kit, an easy-to-use web performance plugin to accelerate websites.

TL;DR

Users leave when page loads take too long. But even though page speed is critical for business success, slow page loads are still common for modern websites. Why is that and what can you do about it?

In the first part of this article, we motivate our quest for fast page load times and identify the 3 main web performance bottlenecks: frontend, backend, and network. Addressing all of these challenges, we summarize the current top-10 web performance best practices for the client side (frontend), the server side (backend), and data transfer (network). We go into more detail on caching as the go-to solution for accelerating static assets and discuss why dynamic data is typically considered uncacheable. We then present Baqend’s research-backed system design that makes caching dynamic data feasible through a novel cache coherence mechanism based on Bloom filters (see VLDB paper): Baqend combines the latency benefits of HTTP caching with Δ-atomicity and linearizable read consistency. Next, we explain how Baqend’s unique caching scheme can be used to accelerate any website through Speed Kit, an easy-to-use web performance plugin. Finally, we show you how to generate your own performance report to uncover potential for further optimization.

(For a structured overview of the specific questions answered in this article, consult the table of contents at the bottom.)

Let us start with a motivation for optimizing web performance.

Why is page speed important?

49% of users expect websites to load in 2 seconds or less, according to a survey by Akamai. But these expectations are not met in practice: The median top-500 e-commerce website, for example, has a page load time of 9.3 seconds (see source).

And there are many more studies that relate web performance to user behaviour. For example, Amazon found that 100 ms of additional loading time decreases sales revenue by 1%. With Amazon’s current revenue, the impact is over 1 billion USD per year. Similarly, Google measured a 20% drop in traffic when comparing user reactions to 30 search results instead of 10: The decrease in engagement was caused by 500 ms of additional latency for the search query. Conversely, GQ saw traffic go up permanently by 80% after improving page load time from 7 to 2 seconds.

Given that even small page speed improvements have a tremendous impact on business success, it really boggles the mind that so many websites are not fast at all. Throughout the rest of this article, we will cover how to measure when websites feel slow, discuss why many are slow, and explain what can be done about it.

When does a website feel fast?

Typical web performance measures relate to the Time To First Byte (TTFB), DOMContentLoaded, or other technical aspects of the page load. While those metrics help engineers perform diagnostics or low-level optimizations, they correspond only loosely to the customers’ experience: Users perceive a website as slow or fast depending on when the first relevant content is displayed. Another indicator is how long it takes until they can enter data, click on the navigation bar, or interact with the website in some other way. While these aspects of web performance are easy to grasp intuitively, user-perceived page speed is not as easy to measure objectively.

In the illustration above, you can see how a website is loaded on a client device. The dashed line indicates the visual completeness (y-axis) from a blank screen (0) to the fully-rendered page (1). The example also illustrates two of the more popular measures for user-perceived page speed:

- First Meaningful Paint (FMP): The First Meaningful Paint is the point in time at which the user gets to see important information for the first time, e.g. headline and text in a blog or search bar and product overview in a webshop. Consequently, the time until the FMP is a reasonable measure for whether or not the user perceives the website as fast. It is usually measured in seconds or milliseconds. To make it objectively measurable, the FMP is typically defined as the moment at which the viewport experiences the greatest visual change. To identify the exact moment at which this happens, measurement tools resort to video analysis.

- Speed Index (SI): The Speed Index is the average time at which the visible parts of the page are displayed. Like the time to FMP, the Speed Index is determined through video analysis and is typically measured in seconds or milliseconds. The Speed Index corresponds to the area above the dashed line in the illustration above; a small SI corresponds to a fast website.
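The area above the completeness curve can be computed directly from a filmstrip of (time, visual completeness) samples. The following is a minimal sketch, assuming completeness stays constant between two consecutive frames; the frame values are made up, and real measurement tools derive completeness from video frames in more elaborate ways.

```python
def speed_index(frames: list[tuple[float, float]]) -> float:
    """Approximate the Speed Index from (time_ms, visual_completeness) samples.

    Visual completeness is a fraction in [0, 1] and is assumed to stay constant
    between two consecutive video frames. The result is the area above the
    completeness curve, in the same unit as the timestamps.
    """
    ordered = sorted(frames)
    area = 0.0
    for (t0, vc0), (t1, _) in zip(ordered, ordered[1:]):
        area += (t1 - t0) * (1.0 - vc0)  # time spent at this level, weighted by what is still missing
    return area


# Hypothetical filmstrip: blank until 1000 ms, 75% complete until 3000 ms, then done.
filmstrip = [(0, 0.0), (1000, 0.75), (3000, 1.0)]
print(speed_index(filmstrip))  # 1000 * 1.0 + 2000 * 0.25 = 1500.0
```

A page that paints most of its content early scores better than one that stays blank until the end, even if both finish at the same time: the filmstrip `[(0, 0.0), (3000, 1.0)]` yields 3000.0.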

Both FMP and SI are widely acknowledged as good numerical representations of user-perceived page speed; this distinguishes them from other measures that capture hidden components of the loading process such as the time to first byte. While the SI is more fine-grained in what it captures, the FMP has the advantage of being robust to outliers, e.g. asynchronous ads popping up after some time.

How fast is fast enough?

As described above, most users expect loading times below two seconds. However, cognitive science suggests that people start losing focus after waiting for just a single second:

Above the magic threshold of 1 second, users divert their attention to something else. Depending on the task at hand, individual users might wait for a few seconds; statistically speaking, though, practically any task will be abandoned when the page load takes 10 seconds or longer. According to the HTTP Archive, which regularly measures the Alexa top 1M domains, many websites even take longer than 10 seconds to load.

For any online business, page speed is therefore a make-or-break feature.

High bandwidth is not enough — we need to reduce latency.

Intuitively, a fast Internet connection should already guarantee fast page loads. Surprisingly, though, this is not the case. If your website feels sluggish using a 5 Mbps connection, upgrading to 10 or even 100 Mbps won’t solve the problem:

If you observe page load time under increasing bandwidth (blue bars), you’ll notice that there is no improvement beyond 5 Mbps. However, if you are able to decrease access latency (orange bars), you will see a proportional decrease in page load time. Or put differently:

2× Bandwidth ≈ Same Load Time

½ Latency ≈ ½ Load Time
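A toy model makes this asymmetry visible: if a page load involves a fixed number of sequential round trips, the latency term stays put no matter how much bandwidth is added. The round-trip count and page size below are illustrative assumptions, and the model ignores effects such as TCP slow start and parallel connections.

```python
def load_time_s(rtt_ms: float, bandwidth_mbps: float,
                round_trips: int = 30, page_mb: float = 2.0) -> float:
    """Toy model: time waiting on sequential round trips plus raw transfer time."""
    latency_s = round_trips * rtt_ms / 1000     # waiting for responses
    transfer_s = page_mb * 8 / bandwidth_mbps   # pushing the bytes (MB -> Mbit)
    return latency_s + transfer_s


for bw in (5, 10, 20, 50, 100):
    print(f"{bw:>3} Mbps, 100 ms RTT: {load_time_s(100, bw):.2f} s")
print(f" 50 Mbps,  50 ms RTT: {load_time_s(50, 50):.2f} s")
```

Beyond a modest bandwidth, each doubling shaves off less and less, while halving the round-trip time cuts the dominant latency term in half.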

In the following, we will discuss the different bottlenecks for web performance to explain why latency is the key performance factor.

What makes websites slow?

When opening a website, the browser sends a data request to the web server. Depending on the distance between client and server, the time messages spend in transit can already cause a significant delay on its own. On receiving the client’s request, the server assembles the requested data (which may also take some time) and then sends it back.

Say, for example, a user from the US is visiting a website hosted in Europe. To make the website load fast, the following bottlenecks have to be resolved:

- Client-side rendering (details below): Even when the individual resources arrive fast, the browser still needs time to render the page. The time until content is finally displayed is often dominated by dependencies between individual resources, because they can block the rendering process.

- Server-side processing (details below): To assemble the requested data, the server has to perform some work such as rendering HTML templates or executing database queries — this requires time to complete. When there are many users active at the same time, the server may further be under a high load or even overloaded which will cause additional waiting time.

- Network latency (details below): For an average website, around 100 resources need to be transferred over a high-latency network connection. Thus, it can take a while until the browser has enough data to show something meaningful.
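Propagation delay alone puts a floor under these numbers. A quick calculation, assuming signals in optical fiber travel at roughly 200,000 km/s and an assumed path length of 6,000 km between the US and Europe (the count of ten dependent round trips is an arbitrary example):

```python
FIBER_KM_PER_S = 200_000  # light in optical fiber covers roughly 200,000 km/s (about 2/3 of c)


def min_rtt_ms(distance_km: float) -> float:
    """Physical lower bound for one round trip; real routes add detours, hops, and queuing."""
    return 2 * distance_km * 1000 / FIBER_KM_PER_S


rtt = min_rtt_ms(6_000)  # assumed US-to-Europe path length
print(rtt)               # 60.0
print(10 * rtt)          # 600.0 ms for ten dependent round trips, before any server work
```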

In summary, the three main bottlenecks of web performance are (1) the frontend, (2) the backend, and (3) the network. In this part of the article, we will take a closer look at each of these three bottlenecks and discuss possible optimizations. Later in this article, we will describe how we implemented a performance plugin, Speed Kit, that optimizes these bottlenecks automatically.

1.) How to optimize frontend performance?

The key principle of frontend optimization is to make the browser’s life as easy as possible. To this end, dispensable resources should be removed and the remaining ones should be compacted as much as possible. Further, critical resources should be fetched not only as quickly as possible, but also in the right order to enable fluent processing. Lastly, content should be optimized for client devices before data is sent over the network.

Minimize Page Weight

One of the more obvious ways to accelerate page loads is to reduce the number of bytes that need to be transferred over the network. A good first step is removing nonessential artifacts such as unused HTML, comments, and dispensable scripts or stylesheets. To make the remaining resources as small as possible, minification tools can further remove unnecessary characters before upload to production. Finally, GZip compression should be enabled for all text resources, thus reducing their effective size before sending them over the network. Likewise, image compression can also improve bandwidth efficiency.
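To get a feel for why compression pays off for text resources, here is a small sketch using Python’s standard `gzip` module on made-up, repetitive markup. Servers apply the same idea and label the response with `Content-Encoding: gzip` when the request’s `Accept-Encoding` header allows it.

```python
import gzip

# Made-up but typical markup: product tiles repeat the same tags and class names.
html = ("<div class='product'><span class='price'>19.99</span></div>\n" * 200).encode("utf-8")
compressed = gzip.compress(html)

# Repetitive text shrinks to a small fraction of its size; decompression is lossless.
print(len(html), len(compressed))
```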

Optimize the Critical Rendering Path

The critical rendering path (CRP) is the sequence of actions the browser has to perform in order to start rendering the page. For ideal performance, this sequence should be kept as short as possible. To this end, the resources needed to display the above-the-fold content (i.e. the immediately visible area) should be minimized, for example by inlining the critical CSS. Also, loading scripts asynchronously can greatly reduce the number of critical resources.
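One way to reason about the length of the critical rendering path is to model render-blocking resources as a dependency graph and count the longest chain, since each link costs at least one round trip. The page structure below is hypothetical, and the model ignores parsing and execution time.

```python
from functools import lru_cache

# Hypothetical page. Each render-blocking resource lists the resource that references it.
blocking_deps = {
    "index.html": [],
    "styles.css": ["index.html"],   # <link rel="stylesheet"> in the HTML
    "fonts.css": ["styles.css"],    # pulled in via @import, so only discovered after styles.css
    "app.js": ["index.html"],       # synchronous <script> in the <head>
}


def critical_path_length(deps: dict[str, list[str]]) -> int:
    """Longest chain of render-blocking fetches, i.e. sequential round trips before first render."""

    @lru_cache(maxsize=None)
    def depth(name: str) -> int:
        return 1 + max((depth(parent) for parent in deps[name]), default=0)

    return max(depth(name) for name in deps)


print(critical_path_length(blocking_deps))                                 # 3
# Inlining both stylesheets removes them from the chain; app.js still blocks.
print(critical_path_length({"index.html": [], "app.js": ["index.html"]}))  # 2
# Loading app.js with async as well leaves only the HTML on the critical path.
print(critical_path_length({"index.html": []}))                            # 1
```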

Serve Responsive Images

Another optimization to reduce the amount of loaded data is resizing images at the server or CDN before they are sent to the client: Instead of transferring a high-resolution image which is then scaled down in the browser anyway, every client receives a picture that is already pixel-perfect for the given screen dimensions. When images are encoded in WebP or Progressive JPEG, the browser can even start displaying them before they are fully loaded, thus further reducing perceived waiting time.
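A minimal sketch of how a server or CDN might pick an image variant: choose the smallest available width that covers the layout width times the device pixel ratio. The available widths and this selection rule are assumptions for illustration; browsers make a similar choice on the client side via the `srcset` attribute.

```python
def pick_width(available: list[int], css_px: int, dpr: float) -> int:
    """Smallest variant that covers css_px * dpr device pixels, else the largest one."""
    needed = css_px * dpr
    ordered = sorted(available)
    for width in ordered:
        if width >= needed:
            return width
    return ordered[-1]  # nothing is large enough: send the biggest variant


widths = [320, 640, 1280, 2560]      # hypothetical pre-rendered variants
print(pick_width(widths, 360, 2.0))  # phone, 360 CSS px at 2x -> needs 720 -> 1280
print(pick_width(widths, 300, 1.0))  # old low-density screen -> 320
```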

Leverage Browser Caching

The browser cache is located within the user’s device and therefore provides not just fast but practically instantaneous access to cached data. To bound staleness, every data item is only cached for a specified Time To Live (TTL), after which it is implicitly removed. Static assets such as images or scripts can be invalidated at deployment time with cache busters (e.g. a version hash in the file name). However, cache busters only work for linked assets: The HTML file itself is typically only cached for a very short time in order to guarantee freshness (micro-caching, see below), which requires frequent and slow revalidations. Similarly, dynamic data (e.g. a shopping cart or a news ticker) is typically not put in the browser cache at all, because it is hard to keep the cached copies in sync with the base data (see below). By using ETag and Last-Modified headers, it is possible to avoid reloading expired resources over the network when they are actually still up-to-date: If the cached version and the original version are identical, the server responds with 304 Not Modified, which carries a new (extended) TTL but omits the resource itself.
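The two mechanisms mentioned here can be sketched in a few lines: a content hash in the file name (cache busting) and an `ETag` comparison that lets the server answer a revalidation with `304 Not Modified` instead of the full body. The function names, the hash length, and the `max-age=60` value are illustrative assumptions; real servers also handle weak ETags, multiple `If-None-Match` values, and `Last-Modified`.

```python
import hashlib
from typing import Optional


def busted_name(filename: str, content: bytes) -> str:
    """Embed a content hash in the file name, so each new version gets a new URL."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    stem, dot, ext = filename.rpartition(".")
    return f"{stem}.{digest}.{ext}" if dot else f"{filename}.{digest}"


def make_etag(content: bytes) -> str:
    return '"' + hashlib.sha256(content).hexdigest()[:16] + '"'


def respond(content: bytes, if_none_match: Optional[str]) -> tuple[int, dict, bytes]:
    """Answer a (conditional) GET; simplified to a single strong ETag comparison."""
    etag = make_etag(content)
    headers = {"ETag": etag, "Cache-Control": "max-age=60"}
    if if_none_match == etag:
        return 304, headers, b""  # copy still valid: new TTL, no body
    return 200, headers, content


script = b"console.log('v1');"
print(busted_name("app.js", script))                 # app.<8 hex chars>.js

status, headers, body = respond(script, None)        # first request: full response
print(status, len(body))                             # 200 18
status, _, body = respond(script, headers["ETag"])   # revalidation after the TTL ran out
print(status, len(body))                             # 304 0
```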

To learn more about client-side optimization, read our dedicated article on frontend technologies or watch our Code.Talks presentation on web performance (video).

2.) How to tune backend performance?

For ideal page load times, the backend has to produce a response for every incoming request as fast as possible. However, the even more difficult part is to provide these low response times in the presence of many concurrent users, network outages, machine failures, or other error scenarios.

Server Stack Efficiency

The Time To First Byte (TTFB) is the time from sending the first request until receiving the first data, measured at the client. It represents a lower bound for (and usually correlates with) your page load time: the smaller, the better. On the server side, a minimal TTFB can be facilitated through efficient code and by processing requests in parallel instead of sequentially. In a distributed backend, it is further recommended to optimize database calls for low latency and minimize shared state to avoid coordination where possible.
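The benefit of processing independent work in parallel can be illustrated with `asyncio`: three hypothetical queries of 100 ms each take about 300 ms when awaited one after another, but about 100 ms when issued concurrently. The sleeps are stand-ins for real database calls.

```python
import asyncio
import time


async def query(name: str, latency_s: float = 0.1) -> str:
    """Stand-in for an independent database call; the sleep models I/O latency."""
    await asyncio.sleep(latency_s)
    return name


async def sequential() -> list[str]:
    return [await query(n) for n in ("user", "cart", "recommendations")]


async def parallel() -> list[str]:
    return list(await asyncio.gather(*(query(n) for n in ("user", "cart", "recommendations"))))


for handler in (sequential, parallel):
    start = time.perf_counter()
    asyncio.run(handler())
    print(f"{handler.__name__}: {time.perf_counter() - start:.2f} s")  # roughly 0.30 vs 0.10
```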

Scale horizontally

In order to cope with high load, a system needs to be designed in such a way that the load it can sustain grows with the number of machines in the cluster. In practice, a sharded database is often used in combination with (mostly) stateless application servers. To make sure that client requests are actually spread evenly across all machines, effective load balancing has to be implemented.
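A minimal sketch of both ingredients, assuming a fixed set of hypothetical shard and server names: a stable hash decides which shard owns a key, and a round-robin balancer spreads requests over stateless application servers.

```python
import hashlib
import itertools

SHARDS = ["db-0", "db-1", "db-2"]     # hypothetical database shards
APP_SERVERS = ["app-a", "app-b"]      # stateless: any server can handle any request

_next_server = itertools.cycle(APP_SERVERS)


def shard_for(key: str) -> str:
    """Map a record key to a shard with a stable hash (Python's hash() is randomized per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return SHARDS[int.from_bytes(digest[:8], "big") % len(SHARDS)]


def route(key: str) -> tuple[str, str]:
    """Round-robin over app servers; the data lives on the shard that owns the key."""
    return next(_next_server), shard_for(key)


for key in ("user:1", "user:2", "user:3"):
    print(key, route(key))
```

Simple modulo hashing remaps most keys whenever a shard is added or removed; consistent hashing is the usual remedy when the cluster size changes.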

Ensure high availability

In order to shield against machine outages or network partitions, data is often distributed to several places. While this increases availability, it also opens the door for potential inconsistencies between the different copies. From the CAP Theorem, we know that every distributed storage system is subject to an availability-consistency trade-off: Under certain network partitions, you have to either sacrifice consistency (e.g. respond with stale data) or sacrifice availability (e.g. return an error). Against this background, choosing the right system for a given application is very complex. On the server side, databases can be configured with automatic failover to recover from errors on their own. On the client side (or at the CDN), failures can be compensated for or hidden through other means, for example by serving (possibly) stale content from caches when the backend is unavailable (stale-on-error).
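The stale-on-error idea can be sketched as a cache that keeps expired entries around and only falls back to them when the backend cannot be reached. This is an illustrative sketch with a hypothetical `origin` function; in HTTP, the same behaviour can be requested from caches with the `stale-if-error` Cache-Control extension (RFC 5861).

```python
import time
from typing import Callable, Optional


class StaleOnErrorCache:
    """TTL cache that keeps expired entries as a fallback for when the backend fails."""

    def __init__(self, fetch: Callable[[str], bytes], ttl_s: float):
        self.fetch = fetch
        self.ttl_s = ttl_s
        self.entries: dict[str, tuple[bytes, float]] = {}  # url -> (body, stored_at)

    def get(self, url: str, now: Optional[float] = None) -> bytes:
        now = time.monotonic() if now is None else now
        cached = self.entries.get(url)
        if cached is not None and now - cached[1] < self.ttl_s:
            return cached[0]                  # fresh hit
        try:
            body = self.fetch(url)            # miss or expired: ask the backend
        except ConnectionError:
            if cached is not None:
                return cached[0]              # backend unreachable: serve the stale copy
            raise                             # nothing to fall back on
        self.entries[url] = (body, now)
        return body


backend_up = True


def origin(url: str) -> bytes:
    if not backend_up:
        raise ConnectionError("origin unreachable")
    return b"<h1>Home</h1>"


cache = StaleOnErrorCache(origin, ttl_s=60)
print(cache.get("/", now=0))     # fetched from the origin
backend_up = False
print(cache.get("/", now=120))   # expired and origin down: stale copy instead of an error
```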

For more details on technologies used at the server side, see our related articles on Backend-as-a-Service (BaaS) technology, NoSQL databases, real-time databases, and distributed stream processing.

3.) How to improve network performance?

With about 100 requests for an average website, networking often becomes a limiting factor for page load times. To reduce networking overhead, it is not only necessary to accelerate every individual access operation, but also to optimize all the protocols that are involved, especially HTTP.

Reduce Latency

As argued above, latency is the key factor in improving page speed. To keep it minimal, state-of-the-art approaches employ geo-replication or caching, for example through Content Delivery Networks (CDNs). However, maintaining consistency between cached copies (cache coherence) is critical: If you do not keep track of outdated copies, users will see outdated content. To guarantee fresh data for the users, caching is usually employed for static data only. Baqend’s unique caching scheme (see below), in contrast, allows caching everything: not only static assets, but also query results or other information that changes unpredictably.

Optimize the Protocol Stack

The Internet is organized in layers: For example, when HTTP traffic is sent over the wire, individual requests are chopped into encrypted TLS records, which are split into TCP segments, each of which is carried in an IP packet, and so forth. While each of these layers is intended to abstract from the complexities of the layers below, understanding how each of them works and how they depend on one another is critical to avoid bottlenecks. For example, you should align TLS record size with the TCP congestion window to avoid latency hiccups for encrypted HTTP traffic, and you should use persistent TCP connections wherever possible to max out bandwidth (cf. TCP slow-start). TLS can also be optimized, for example by OCSP stapling, stateless session resumption, or terminating TLS connections in a nearby CDN node instead of the faraway origin server.
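To see why persistent connections matter, consider TCP slow start: a fresh connection starts with a small congestion window that roughly doubles every round trip. The sketch below assumes an initial window of 10 segments (RFC 6928), a 1460-byte segment payload, no packet loss, and no receive-window limit.

```python
MSS = 1460        # assumed TCP payload bytes per segment on a typical Ethernet path
INIT_CWND = 10    # initial congestion window in segments (RFC 6928)


def slow_start_round_trips(total_bytes: int, cwnd: int = INIT_CWND) -> int:
    """Round trips needed to deliver total_bytes if the window doubles every round trip."""
    sent, rounds = 0, 0
    while sent < total_bytes:
        sent += cwnd * MSS   # one full window per round trip
        cwnd *= 2            # slow start: exponential growth while no loss occurs
        rounds += 1
    return rounds


print(slow_start_round_trips(14_600))   # 1
print(slow_start_round_trips(100_000))  # 3
```

Under these assumptions, any response larger than about 14 KB needs more than one round trip on a cold connection; a persistent connection that has already grown its window avoids paying this ramp-up again.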

Leverage HTTP/2

HTTP/2 is the new standard for communication on the web. It introduces multiple significant performance improvements over its predecessor HTTP/1.1 in order to boost loading times. Some optimizations come out of the box with the new protocol version, e.g. multiplexing for increased concurrency or header compression to get the most out of the available bandwidth. Other features like server push or stream prioritization require some effort on the client or server side. Some HTTP/1.1 best practices are HTTP/2 anti-patterns (video) and should therefore be avoided: Domain sharding, for instance, was an effective way to overcome the limited concurrency of HTTP/1.1 by loading resources over different connections in parallel. With HTTP/2, using a single connection is actually much faster.
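A rough model illustrates why domain sharding helped under HTTP/1.1 and becomes unnecessary with HTTP/2. It assumes every request costs exactly one round trip, that browsers open at most 6 HTTP/1.1 connections per host (the common default in current browsers), and that HTTP/2 multiplexes all requests over one connection; bandwidth, server time, and the extra handshakes for each sharded domain are ignored.

```python
import math


def request_waves(num_requests: int, hosts: int = 1,
                  conns_per_host: int = 6, http2: bool = False) -> int:
    """Sequential request rounds, assuming every request costs one round trip."""
    if http2:
        return 1 if num_requests else 0   # all requests multiplexed on one connection
    return math.ceil(num_requests / (hosts * conns_per_host))


print(request_waves(100))               # 17: HTTP/1.1, one host
print(request_waves(100, hosts=3))      # 6: HTTP/1.1 sharded across three domains
print(request_waves(100, http2=True))   # 1: HTTP/2 multiplexing
```

In practice, each extra shard also adds DNS, TCP, and TLS setup, which is why sharding becomes a net cost once multiplexing is available.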

To learn more on HTTP/2 tuning and network performance in general, have a look at our Heise article (German) or our HTTP/2 & Networking tutorial (150 slides).

In the first part of this article, we found that latency is critical for fast page loads. In the second part, we then summarized current best practices to avoid bottlenecks in the frontend, the backend, and the network. In this part, we focus on caching as the pivotal mechanism for reducing latency and explain how Baqend’s unique caching scheme enables caching dynamic data under strong consistency guarantees.

How does web caching make websites fast?

Even if you bring down processing time to almost 0 in both backend and frontend, network latency can still be prohibitive for fast page loads. Caching is the only way to effectively reduce the distance between clients and the data they need to access.

Caching brings data closer to the clients

In traditional web caching, copies of requested data items are stored in different places all over the world, for example in the clients’ devices (browser cache) or a globally distributed Content Delivery Network (CDN). Thus, a client request does not have to travel all the way to the original web server, but instead only to the nearest cache.

Accessing data from nearby caches instead of the original web server has two immediate benefits:

- Low Latency: Since requests can be answered by a nearby web cache (e.g. your browser cache or a CDN edge node), the client does not have to wait as long for responses.

- Less Processing: Since requests are only forwarded to the web server on cache misses (i.e. when the data is not available in the CDN node), load on the original web server is effectively reduced.
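Both effects can be quantified with a simple expected-value calculation. The hit ratio and latencies below are made-up example numbers, not measurements.

```python
def expected_latency_ms(hit_ratio: float, cache_ms: float, origin_ms: float) -> float:
    """Mean latency when hits come from a nearby cache and misses go to the origin."""
    return hit_ratio * cache_ms + (1 - hit_ratio) * origin_ms


def origin_requests(total_requests: int, hit_ratio: float) -> int:
    """Only cache misses reach the origin server."""
    return round(total_requests * (1 - hit_ratio))


print(expected_latency_ms(0.75, cache_ms=20, origin_ms=200))  # 65.0 instead of 200 without a cache
print(origin_requests(1_000, 0.75))                           # 250 of 1,000 requests hit the origin
```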

Challenge: dealing with staleness

The problem with caching is that you have to keep your copy in-sync with the original data: Whenever the base data changes, the corresponding caches have to be invalidated (i.e. cleared or marked as outdated) — or else users will see stale data. To bound the possible staleness, all cached resources expire after a certain amount of time and are then implicitly invalidated. This timeframe is called the Time To Live (TTL) in the HTTP standard. Since static resources change infrequently or not at all, they are typically cached with long TTLs.
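The TTL usually travels in the `max-age` directive of the `Cache-Control` header. Below is a simplified sketch of the freshness check a cache performs, assuming only `max-age` is considered; real HTTP caches also take `s-maxage`, `Expires`, and heuristics into account (see RFC 9111).

```python
import re
from typing import Optional

_MAX_AGE = re.compile(r"(?:^|,)\s*max-age\s*=\s*(\d+)", re.IGNORECASE)


def max_age_s(cache_control: str) -> Optional[int]:
    """Extract the TTL from a Cache-Control header value, if present."""
    match = _MAX_AGE.search(cache_control)
    return int(match.group(1)) if match else None


def is_fresh(cache_control: str, age_s: float) -> bool:
    """A stored response may be reused without asking the server while its age is below the TTL."""
    ttl = max_age_s(cache_control)
    return ttl is not None and age_s < ttl


print(is_fresh("public, max-age=31536000", age_s=86_400))  # True: static asset cached for a year
print(is_fresh("max-age=10", age_s=30))                     # False: micro-cached HTML has expired
```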

Dynamic data: playing hard to cache

For dynamic data such as user comments, product recommendations, social feeds, or database queries, however, long TTLs correspond to a high probability of data staleness. Therefore, it is considered state of the art to either assign small TTLs (micro-caching, see below) for dynamic data or to not cache dynamic data at all.
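How quickly long TTLs become risky can be estimated under a simple assumption: if a data item is updated as a Poisson process with rate λ, a copy of age t is stale with probability 1 - exp(-λ·t). The sketch below also averages this over reads spread uniformly across the TTL; the update rate is a hypothetical example.

```python
import math


def p_stale_at_age(updates_per_s: float, age_s: float) -> float:
    """P(at least one update since the copy was cached), assuming Poisson-distributed updates."""
    return 1 - math.exp(-updates_per_s * age_s)


def p_stale_read(updates_per_s: float, ttl_s: float) -> float:
    """Average staleness probability for reads spread uniformly over the TTL window."""
    x = updates_per_s * ttl_s
    if x == 0:
        return 0.0
    return 1 - (1 - math.exp(-x)) / x


rate = 1 / 60  # hypothetical item that changes about once per minute
for ttl in (1, 60, 3600):
    print(f"TTL {ttl:>4} s: {p_stale_read(rate, ttl):.1%} of reads see stale data")
```

For an item updated about once a minute, a 1-second micro-cache serves stale data to fewer than 1% of reads, while a 1-hour TTL does so for more than 95% of them.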

How to cache dynamic content without staleness?

Traditional web caching brings data closer to the clients, but only works for static resources. Baqend is unique in its ability to cache anything, even dynamic data such as user profiles, counters, or database query results. The concrete algorithms behind this are no secret sauce: they are all published research. Here is the simple idea: First, we monitor all updates and invalidate stale caches where possible (e.g. in the CDN), both in real time. Second, we tell our clients which caches have become stale, so that they can avoid stale caches that our servers cannot invalidate (e.g. the browser caches in the clients’ devices).

Detect stale data in the backend

To make this possible, Baqend keeps track of every issued response and invalidates the corresponding caches upon change. In more detail, we continuously monitor all cached resources and remove them from the caches whenever they are updated. For invalidation-based caches (e.g. CDNs like Akamai or Fastly), this is no problem as they provide an interface to remove specific data items from the cache.

Bypass stale data in the client

The situation is more involved for expiration-based caches (e.g. the browser cache), because they keep data until the respective TTL expires: Data items cannot be removed. To invalidate changed information regardless, we therefore use a trick: We let the client know which currently cached resources have become stale and only let it use the fresh ones. This information is transmitted initially on connection, in fixed periods, and on-demand when staleness needs to be avoided for the next read operation.
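The server-side bookkeeping described in the last two paragraphs can be sketched as follows: remember the latest expiration time of every response issued, purge invalidation-based caches on each write, and keep the URL on the stale list until every copy in expiration-based caches must have expired. This is a simplified illustration, not Baqend’s actual implementation; `purge_cdn` stands in for a hypothetical hook into a CDN purge API.

```python
from typing import Callable


class StalenessTracker:
    """Sketch: which URLs might still be stale in caches the server cannot purge."""

    def __init__(self, purge_cdn: Callable[[str], None]):
        self.purge_cdn = purge_cdn                 # hypothetical CDN purge hook
        self.expires_at: dict[str, float] = {}     # url -> latest expiry of any issued copy
        self.stale_until: dict[str, float] = {}    # url -> time when no stale copy can survive

    def on_response(self, url: str, ttl_s: float, now: float) -> None:
        self.expires_at[url] = max(self.expires_at.get(url, 0.0), now + ttl_s)

    def on_write(self, url: str, now: float) -> None:
        self.purge_cdn(url)                        # invalidation-based caches: remove directly
        expiry = self.expires_at.get(url, 0.0)
        if expiry > now:                           # expiration-based caches may still hold it
            self.stale_until[url] = expiry

    def stale_urls(self, now: float) -> set[str]:
        # Forget entries whose old copies have expired everywhere by now.
        self.stale_until = {u: t for u, t in self.stale_until.items() if t > now}
        return set(self.stale_until)


purged = []
tracker = StalenessTracker(purge_cdn=purged.append)
tracker.on_response("/comments/7", ttl_s=3600, now=0)  # copy may live in browsers until t=3600
tracker.on_write("/comments/7", now=100)               # the author edits the comment
print(purged)                                          # ['/comments/7']
print(tracker.stale_urls(now=200))                     # {'/comments/7'}: sent to clients
print(tracker.stale_urls(now=4000))                    # set(): all old copies have expired
```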

Freshness guaranteed

Since the full list of stale URLs can get prohibitively long, we use a compressed representation based on Bloom filters. An entry in the Bloom filter indicates that the content was changed in the near past and that the content might be stale. In such cases, the client bypasses all expiration-based caches and fetches the content from the nearest CDN edge server — since our CDN (Fastly) is invalidation-based (and specialized in fast invalidations through bimodal multicast), the client knows it only contains fresh data. When the Bloom filter does not contain a specific resource, on the other hand, the browser cache is guaranteed to be up-to-date and can be used safely.
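A Bloom filter with the properties described here (false positives possible, false negatives impossible) fits in a few lines. The sketch below uses double hashing over SHA-256; the filter size, the number of hash functions, and the `choose_source` helper are illustrative assumptions rather than the actual Cache Sketch parameters.

```python
import hashlib


class BloomFilter:
    """Bit array with k hash positions per key: no false negatives, tunable false positives."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 4):
        self.m = num_bits
        self.k = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key: str) -> list[int]:
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # odd step for double hashing
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, key: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))


def choose_source(url: str, stale: BloomFilter, browser_cache: dict) -> str:
    """Reuse the local copy only if the filter does not flag the URL as possibly stale."""
    if url in browser_cache and not stale.might_contain(url):
        return "browser cache"
    return "CDN"  # flagged or not cached locally: ask the invalidation-based CDN


stale = BloomFilter()
stale.add("/comments/7")                                    # changed recently
browser_cache = {"/comments/7": b"old comment", "/logo.png": b"..."}
print(choose_source("/comments/7", stale, browser_cache))  # CDN
print(choose_source("/logo.png", stale, browser_cache))    # browser cache, barring a rare false positive
```

In practice, the number of bits m is chosen from the expected number of stale entries n and a target false-positive rate, and the optimal number of hash functions is about (m/n)·ln 2. A false positive only costs an unnecessary trip to the CDN, never a stale read.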

Zero-latency loads

By using the Bloom filter to control freshness, data can be taken from any expiration-based cache without sacrificing consistency. The most significant performance boost comes from using the browser cache: It is located within the user’s device and therefore provides not just fast but practically instantaneous access to cached data. Using the browser cache further saves traffic and bandwidth, since data is immediately available and does not have to go over the network.

Example

Let’s illustrate this scheme with a concrete example:

The above animation shows Baqend caching in action where two clients respectively read and write the same user comment:

- Initial read: The reading client (left) loads a website that contains a user comment. When the read is completed, the user comment is cached in the accessed CDN edge node as well as the reading client’s browser cache.

- Update: The user comment is updated by the author. On receiving the write operation, the Baqend server implicitly updates the Bloom filter to reflect that the user comment has been changed.

- CDN invalidation: While the CDN is automatically invalidated by the server, the browser cache on the client’s device still contains the outdated version of the user comment.

- Refresh Bloom filter: The client loads a new Bloom filter from the server; the new Bloom filter reflects the updated comment. This happens upon page load as well as in configurable intervals.

- Check Bloom filter: The user refreshes the website. Before loading the user comment from the browser cache, though, the browser checks whether the version in the browser cache is still up-to-date: It is not!

- Bypass browser cache: Since the Bloom filter contains the user comment, the browser knows that the locally available version is outdated. Instead of using the browser cache, it therefore accesses the nearest CDN node and receives the current version (HTTP revalidation request).

Using the Bloom filter for staleness checks effectively solves the cache coherence problem for web caching: Since the client can check whether a given cache is up-to-date, there is no risk of seeing stale data. As a result, reads are blazingly fast and consistent at the same time. To learn about the nitty-gritty details of this approach, check out our Cache Sketch research paper.

What consistency guarantees does Baqend provide?

With traditional web caching, maximum staleness depends on the specified TTL, because the browser cache and other purely expiration-based caches will serve any data item until it expires. With Baqend’s caching scheme, in contrast, clients will never see data that is older than the Bloom filter they use for the staleness check. As illustrated below, expiration-based caches (left side) are invalidated through the Bloom filter, while the invalidation-based caches (right side) are actively kept up-to-date by the backend.

Δ-Atomicity

With Baqend, staleness is bounded not only by the TTL, but also by the interval in which the Bloom filter is refreshed. All the client has to do is make sure that the Bloom filter is never older than the tolerable staleness. For example, in order to avoid staleness over 30 seconds, it is enough to refresh the Bloom filter roughly twice a minute, even if some TTLs are as long as 30 days. In the distributed systems community, this is called Δ-atomicity.
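The client-side half of Δ-atomicity boils down to one rule: never use a filter older than Δ. A minimal sketch, assuming a hypothetical `fetch_filter` call and using a plain set in place of the Bloom filter:

```python
from typing import Callable


class FilterRefresher:
    """Keep the client's staleness filter younger than the tolerated staleness delta."""

    def __init__(self, fetch_filter: Callable[[], set], delta_s: float):
        self.fetch_filter = fetch_filter   # hypothetical download of a fresh filter
        self.delta_s = delta_s
        self.filter: set = set()
        self.fetched_at = float("-inf")    # forces a download on first use

    def current(self, now: float) -> set:
        if now - self.fetched_at >= self.delta_s:
            self.filter = self.fetch_filter()
            self.fetched_at = now
        return self.filter


def max_staleness_s(ttl_s: float, filter_age_s: float) -> float:
    """Staleness is capped by whichever is smaller: the TTL or the filter's age."""
    return min(ttl_s, filter_age_s)


fetch_log = []


def fetch_filter() -> set:
    fetch_log.append("download")
    return {"/comments/7"}


refresher = FilterRefresher(fetch_filter, delta_s=30)
for t in (0, 10, 31):
    refresher.current(now=t)
print(len(fetch_log))                        # 2: downloads at t=0 and t=31
print(max_staleness_s(30 * 24 * 3600, 30))   # 30
```

Ignoring network transit time, staleness is then capped at 30 seconds even for resources with a 30-day TTL.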

Linearizability

By frequently refreshing the Bloom filter, the client can minimize staleness. However, it can also send every read as a revalidation request to the original backend to enforce strong consistency: By bypassing all caches, the client thus eliminates all sources of data staleness apart from the network transit time itself.

What optimizations does Baqend apply besides caching?

We have seen that caching optimizes latency of HTTP requests by putting copies of the data near the client. But as mentioned earlier, there are many more network-level optimizations beyond the mere proximity benefit of caching.

Protocol Stack Optimization

Baqend implements several network-related optimizations that clients implicitly use.

As one of the most significant features, each of Baqend’s CDN nodes maintains a pool of persistent TLS connections to our backend, so that a client only has to establish a TLS connection with the nearest CDN edge node. Since the initial TLS handshake takes at least two round trips, establishing a secure connection with a CDN node in close proximity can thus provide a substantial latency benefit over going all the way to the original web server. By employing OCSP stapling, stateless session resumption, dynamic record sizing, and several TCP tweaks, Baqend ensures that the handshake takes at most two round trips to the nearest CDN node.
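The benefit of terminating connections nearby follows from simple arithmetic: a new connection needs one round trip for the TCP handshake plus the TLS handshake round trips before the first request can go out. The round-trip times below are assumed example values for a nearby edge node and a distant origin.

```python
def connection_setup_ms(rtt_ms: float, tls_round_trips: int = 2) -> float:
    """One round trip for the TCP handshake plus the TLS handshake round trips."""
    return (1 + tls_round_trips) * rtt_ms


EDGE_RTT_MS, ORIGIN_RTT_MS = 10, 100   # assumed round-trip times
print(connection_setup_ms(EDGE_RTT_MS), connection_setup_ms(ORIGIN_RTT_MS))  # 30 300
print(connection_setup_ms(ORIGIN_RTT_MS, tls_round_trips=1))                 # 200, e.g. a 1-RTT handshake
```

Because the edge node reuses its pooled connection to the backend, the client only pays the short setup to the edge.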

HTTP/2

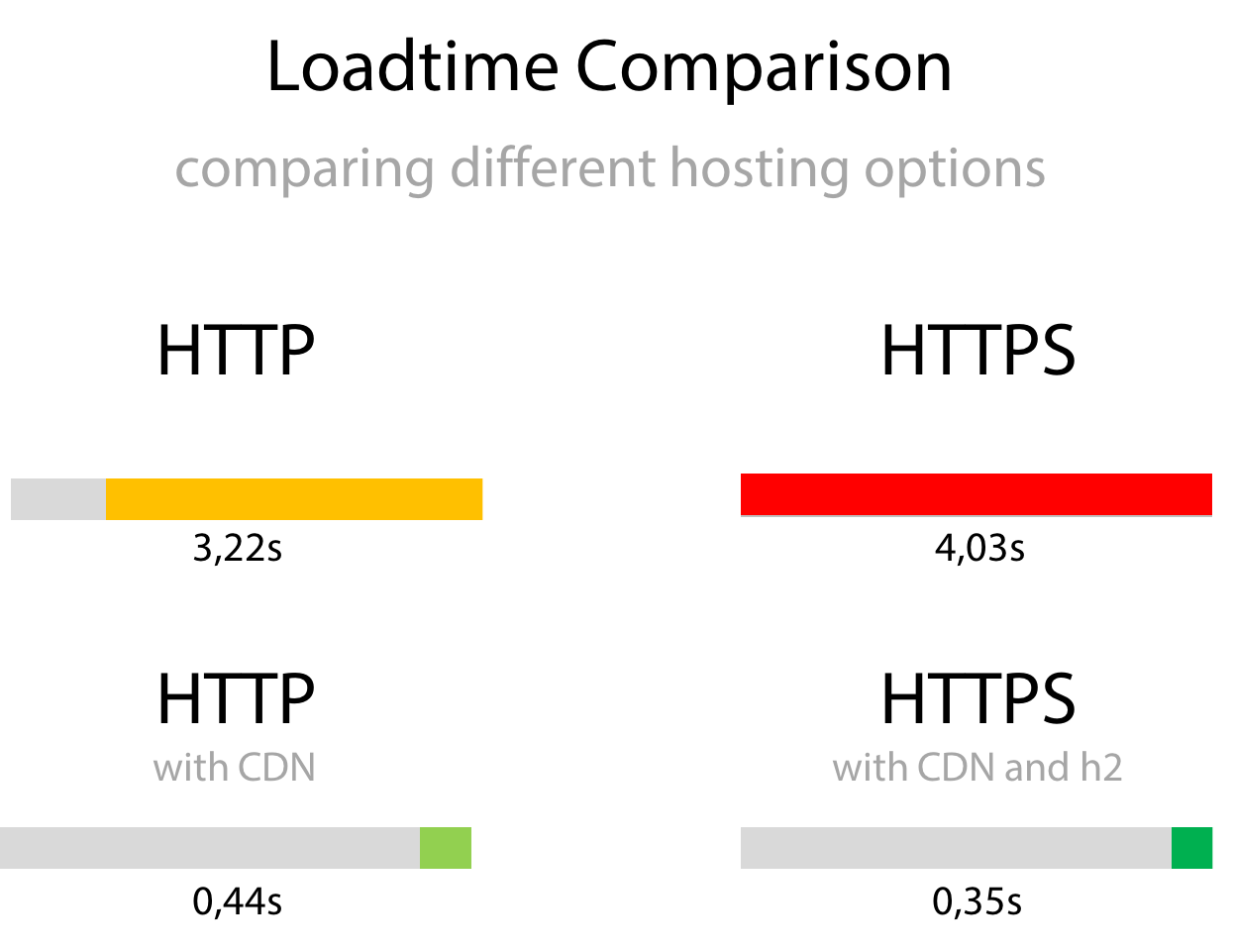

Another important optimization that Baqend uses by default is HTTP/2. For a typical website and in particular combined with caching, H2 can improve performance drastically as shown below:

Baqend (bottom) is not only faster than an uncached hosting solution (top). Perhaps more surprisingly, the TLS-secured Baqend website (bottom right) is faster than the non-TLS version (left). But even beyond TLS optimization, HTTP/2 introduces three significant improvements over HTTP/1.1:

- Multiplexing many requests over a single TCP connection avoids HTTP-level head-of-line blocking.

- Server Push allows you to send data before the user requests it.

- Header Compression saves bandwidth, e.g. for repeatedly sent cookies.

And there is a lot more that Baqend optimizes with respect to the basic web protocols involved in loading a page (DNS, IP, TCP, TLS, HTTP). For details, check out our HTTP/2 & Networking tutorial (150 slides) which contains the gist of what we learned from building Baqend.

Image Optimization

Baqend is not only able to minimize latency and fine-tune network communication, but can also optimize the content itself for you:

Most notably, Baqend can transcode images to the most efficient formats (WebP and Progressive JPEG) and even rescale them to fit the requesting client’s screen: To minimize page size, a user with a high-resolution display will receive high-resolution images, while a user with an old mobile phone will receive a smaller version that is natively scaled to the smaller screen dimensions. For text-based content, Speed Kit similarly ensures that GZip compression is correctly applied to resources. While imperceptible for the user, these optimizations lead to significant load time improvements, especially when bandwidth is limited (e.g. on mobile connections).

Summary

In a nutshell, Baqend applies state-of-the-art optimizations transparently for all clients:

Most importantly, Baqend reduces latency by caching not only static resources, but caching everything. Further, Baqend performs transparent network optimizations to enable latencies near the physical optimum.

Next, we are going to discuss how modern browser standards can be leveraged to apply the above technology to any existing website.

So far in this article, we have established the importance of faster page loads (see part I), discussed state-of-the-art performance best practices (see part II), and described how Baqend provides many optimizations out-of-the-box and adds dynamic data caching on top (see part III). Next, we describe how Baqend’s unique benefits can be made available to any website using Baqend’s performance plugin: Speed Kit. To find out how fast your website currently is and by how much Speed Kit would improve it, skip ahead to the final part of this article.

How can Speed Kit accelerate your existing website?

To boost content delivery, Speed Kit intercepts requests made by the browser and reroutes them: Instead of loading content from the original domain, Speed Kit delivers data from Baqend.

To activate Speed Kit, you simply include a code snippet, which we generate for you, in your website. Whenever a user visits your website, the snippet then installs (i.e. launches) the Speed Kit Service Worker, a script that the browser runs in the background on its own thread, concurrently with the page’s main thread. As soon as the Service Worker is active, all HTTP requests matching your speedup policies (see next paragraph) are rerouted to Baqend. If we have a cached copy of the requested resource (media, text, etc.), it is served superfast; if the resource is requested for the very first time from our caches, it is served as fast as the origin allows, but will be cached from there on.

To learn more about how Service Workers revolutionize browser performance, check out our talk on Service Workers and web performance.

Opt-in acceleration: You define what will be cached

Many resources are easily cacheable through Speed Kit (e.g. images, stylesheets, etc.), while some are not (e.g. ads). Through speedup policies, you can define regular expressions as well as domain whitelists and blacklists to tell Speed Kit exactly which requests should be accelerated and which ones should be left untouched: Speed Kit will not interfere with a request unless explicitly configured to.
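Opt-in request matching of this kind can be sketched with host whitelists and regular expressions. The policy format below is hypothetical and only meant to illustrate the decision logic; it is not Speed Kit’s actual configuration schema.

```python
import re
from urllib.parse import urlsplit

# Hypothetical policy format for illustration only.
POLICY = {
    "whitelist_hosts": {"www.example.com", "cdn.example.com"},
    "blacklist_patterns": [re.compile(r"^/checkout/"), re.compile(r"\.php$")],
    "include_patterns": [re.compile(r"\.(css|js|png|jpe?g|webp|svg)$"), re.compile(r"^/$")],
}


def should_accelerate(url: str, policy: dict = POLICY) -> bool:
    """Opt-in: only whitelisted hosts, only paths matching an include rule and no blacklist rule."""
    parts = urlsplit(url)
    if parts.hostname not in policy["whitelist_hosts"]:
        return False                    # e.g. third-party ads stay untouched
    path = parts.path or "/"
    if any(p.search(path) for p in policy["blacklist_patterns"]):
        return False
    return any(p.search(path) for p in policy["include_patterns"])


for url in ("https://www.example.com/img/hero.webp",
            "https://ads.example.net/banner.js",
            "https://www.example.com/checkout/cart.js"):
    print(url, should_accelerate(url))
```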

End-to-end example: a page load with Speed Kit

Here is what happens in detail, when a user visits a Speed Kit page:

- Compatibility check: The Speed Kit JavaScript snippet is executed and checks whether Service Workers are available in the browser. If not, the page is simply loaded without Speed Kit.

- Service Worker initialization: If the user visits the website for the first time, the browser starts loading the page as usual and initializes the Service Worker in the background. Speed Kit then becomes active during the load and serves requests from that point on. Initialization is required only on the first load: For returning visitors whose browsers already have Speed Kit’s Service Worker installed, Speed Kit serves every request right away, starting with the HTML itself.

- Request interception: Any request issued by the browser is automatically proxied through the Service Worker.

(a) Normal operation: By checking your configuration, Speed Kit determines whether it should accelerate the request or simply forward it to the browser’s network stack.

(b) Offline mode: If there is no network connection, Speed Kit serves everything from the cache.

- Bloom filter lookup: For handled requests, Speed Kit consults the Bloom filter to confirm freshness of the local Service Worker cache. (Exception: In offline mode, the local cache is used regardless, because no other option is available.)

- Accelerated response: Depending on the Bloom filter lookup, the response is served from the nearest cache that holds an up-to-date copy:

(a) Local cache: If the requested response is fresh according to the Bloom filter and also available in the local cache, Speed Kit returns the cached copy instantaneously.

(b) CDN cache: Upon a local cache miss (or when the Bloom filter flags the resource as potentially stale), Speed Kit issues the request over the HTTP/2 connection to Baqend’s CDN.

(c) Original server: If there is a cache miss in the CDN, the response is fetched from Baqend’s servers or, if it is not available there, ultimately from the original server. In the process, the metadata (URL, content type, etc.) is stored in Baqend to allow sophisticated query-based refreshing of cached data in the future.
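The lookup order above can be condensed into a short decision function. This is a minimal sketch under several assumptions:

- The Bloom filter holds the URLs of resources that changed recently and may therefore be stale in browser caches, so "not in the filter" means "fresh".
- CDN entries are purged on change.
- Plain `Map`s and a callback stand in for the Service Worker cache, Baqend’s CDN, and the origin.

The hashing scheme (seeded FNV-1a) is an illustrative choice. A false positive only costs an unnecessary trip to the CDN; it never causes a stale read.

```typescript
/** Minimal Bloom filter over URL strings (seeded FNV-1a hashes). */
class BloomFilter {
  private readonly bits: Uint8Array;
  private readonly m: number;
  private readonly k: number;

  constructor(m: number, k: number) {
    this.m = m; // number of bits
    this.k = k; // number of hash functions
    this.bits = new Uint8Array(m);
  }

  private index(key: string, seed: number): number {
    let h = (2166136261 ^ seed) >>> 0; // FNV offset basis, varied per seed
    for (let i = 0; i < key.length; i++) {
      h ^= key.charCodeAt(i);
      h = Math.imul(h, 16777619) >>> 0; // FNV prime
    }
    return h % this.m;
  }

  add(key: string): void {
    for (let s = 0; s < this.k; s++) this.bits[this.index(key, s)] = 1;
  }

  /** False means "definitely not in the set"; true may be a false positive. */
  mightContain(key: string): boolean {
    for (let s = 0; s < this.k; s++) {
      if (this.bits[this.index(key, s)] === 0) return false;
    }
    return true;
  }
}

type Tier = "local" | "cdn" | "origin";

// Stand-ins for the Service Worker cache, Baqend's CDN, and the origin server.
interface Tiers {
  local: Map<string, string>;
  cdn: Map<string, string>;
  origin: (url: string) => string;
}

function serve(
  url: string,
  online: boolean,
  staleUrls: BloomFilter, // assumed to hold URLs that may be stale
  tiers: Tiers,
): { tier: Tier; body: string } | undefined {
  const cached = tiers.local.get(url);
  // Offline mode: use the local copy regardless of freshness.
  if (!online) return cached === undefined ? undefined : { tier: "local", body: cached };
  // Not in the filter, so not recently changed: the local copy is fresh.
  if (cached !== undefined && !staleUrls.mightContain(url)) {
    return { tier: "local", body: cached };
  }
  // Local miss or possibly stale: ask the CDN (assumed purged on change).
  const edge = tiers.cdn.get(url);
  if (edge !== undefined) {
    tiers.local.set(url, edge);
    return { tier: "cdn", body: edge };
  }
  // CDN miss: fetch from the origin and populate both caches on the way back.
  const body = tiers.origin(url);
  tiers.cdn.set(url, body);
  tiers.local.set(url, body);
  return { tier: "origin", body };
}
```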

Makes any website a Progressive Web App (PWA)

With Speed Kit, your website becomes a full-fledged Progressive Web App. Users will not only experience faster page loads through Speed Kit’s request acceleration, but also less downtime through the built-in offline mode:

In offline mode, Speed Kit displays cached content instead of showing an error message. For a Speed Kit user, the website thus appears blazingly fast even in situations where a traditional website would not be usable at all. In addition, mobile users can add any Speed Kit website to their home screens, just like native mobile apps. Through web push (coming soon), Speed Kit further allows sending push notifications from the server to your users.

No vendor lock-in

Since Speed Kit is an opt-in solution, you can also opt out at any time. If you are not satisfied with Speed Kit’s performance boost, just click the button (really, there’s a button) and your website will instantly be back to its old default.

How does Speed Kit avoid stale content?

To make sure that data is always up to date, Speed Kit’s caches have to be refreshed whenever your content changes. Speed Kit does this periodically by default, but we also provide a convenient refresh API that allows you to update all caches on demand in realtime (including the browser cache on the user’s device).

In more detail, cache synchronization (refresh) can be done in two different ways:

- Realtime: By programmatically calling an API hook or by manually clicking a button in the Speed Kit dashboard, you can trigger immediate synchronization. Thus, you can make sure that changes in your original content are immediately reflected in Speed Kit’s cached copy.

- Periodic: But even without any action on your part, Speed Kit automatically synchronizes itself at regular intervals (see illustration above for default values).

Through its refresh mechanisms, Speed Kit always stays up to date and in sync with your website. Both refresh mechanisms work on simple URLs as well as complex query conditions (e.g. all URLs matching a specific regular expression and having content type “script”). This radically simplifies staying in sync, because your application logic does not need to identify the exact place where the change occurred: Speed Kit can figure it out. Also, invalidations never happen unnecessarily: Caches are only purged if the content actually changed according to a byte-by-byte comparison in Baqend.
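Query-based refreshing with byte-level change detection might look roughly like the sketch below. The query shape, the in-memory store, and the synchronous `fetchOrigin` callback are hypothetical simplifications. A real system would fetch asynchronously, purge the CDN, and add the changed URLs to the Bloom filter.

```typescript
interface CachedResource {
  url: string;
  contentType: string; // e.g. "script", "style", "image"
  body: Uint8Array; // bytes currently served from the caches
}

// Hypothetical refresh query: URL pattern plus optional content type.
interface RefreshQuery {
  urlPattern: RegExp;
  contentType?: string;
}

function bytesEqual(a: Uint8Array, b: Uint8Array): boolean {
  if (a.length !== b.length) return false;
  for (let i = 0; i < a.length; i++) {
    if (a[i] !== b[i]) return false;
  }
  return true;
}

/**
 * Re-fetches every cached resource matching `query` from the origin and
 * updates only those whose bytes actually changed. Returns the changed URLs,
 * which a real system would purge from the CDN and add to the Bloom filter.
 */
function refresh(
  store: Map<string, CachedResource>,
  query: RefreshQuery,
  fetchOrigin: (url: string) => Uint8Array,
): string[] {
  const changed: string[] = [];
  for (const resource of store.values()) {
    if (!query.urlPattern.test(resource.url)) continue;
    if (query.contentType !== undefined && resource.contentType !== query.contentType) continue;
    const latest = fetchOrigin(resource.url);
    if (bytesEqual(latest, resource.body)) continue; // unchanged: no invalidation
    resource.body = latest;
    changed.push(resource.url);
  }
  return changed;
}
```

The two refresh modes would differ only in when they call `refresh`. A periodic refresh runs it on a timer; a realtime refresh calls it from a deployment hook or a dashboard button.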

How does Speed Kit accelerate personalized content?

Some websites embed personalized or segmented content in their HTML pages, for example shopping carts, custom ads, or personal user greetings. Normally, there is no point in caching those HTML pages, since they are essentially unique for every user. With Speed Kit, however, there is a way to do exactly that through the concept of Dynamic Blocks:

The basic idea is to load and display personalized content in two steps:

- Load generic page: The initial request for an anonymous version of the page (e.g. with an empty shopping cart) can be accelerated with Speed Kit, because it is the same for all users.

- Inject personalized data: The user-specific data is loaded from the original backend concurrently with the generic version. As soon as it arrives, the personalized content replaces the generic placeholder.

With this approach, the browser can fetch linked assets much faster and can thus start rendering early, even before the personalized content is available. Dynamic Blocks thereby mitigate the problems caused by a backend with a high time to first byte (TTFB). All that this requires of you as a developer is to identify the dynamic blocks through query selectors.
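The injection step can be sketched as follows. The function name and the structural `BlockRoot` type are hypothetical. In a browser, `document` would be the already rendered generic page, and the personalized HTML could be parsed with `new DOMParser().parseFromString(html, "text/html")`.

```typescript
// Minimal structural DOM types so the sketch also runs outside a browser;
// in a page, `document` and a DOMParser result satisfy `BlockRoot`.
interface BlockElement {
  innerHTML: string;
}
interface BlockRoot {
  querySelector(selector: string): BlockElement | null;
}

/**
 * Hypothetical Dynamic Blocks merge: for each configured selector, replace
 * the generic placeholder in the rendered page with the personalized block
 * from the origin's response. Returns the selectors that were replaced.
 */
function injectDynamicBlocks(page: BlockRoot, personalized: BlockRoot, selectors: string[]): string[] {
  const replaced: string[] = [];
  for (const selector of selectors) {
    const placeholder = page.querySelector(selector);
    const block = personalized.querySelector(selector);
    if (placeholder === null || block === null) continue; // keep the generic content
    placeholder.innerHTML = block.innerHTML;
    replaced.push(selector);
  }
  return replaced;
}
```

Blocks missing from the personalized response keep their generic content. A failed or partial backend response therefore degrades to the anonymous page instead of breaking it.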

With Dynamic Blocks, your website essentially behaves as though the personalized information (e.g. a shopping cart) were loaded through Ajax requests. Accordingly, you typically do not need Dynamic Blocks when you are building a single-page app (e.g. based on React or Angular): You simply blacklist the personalized Ajax/REST API requests.

How does Speed Kit accelerate third-party content?

In practice, page load time is often governed by third-party dependencies. Typical examples are social media integrations (e.g. Facebook), tracking providers (e.g. Google Analytics), JavaScript library CDNs (e.g. CDN.js), image hosters (e.g. eBay product images), service APIs (e.g. Google Maps), and ad networks.

For example: Google Analytics

The problem is that each external domain requires its own TCP and TLS handshakes, and every new connection starts out with low bandwidth (TCP slow start). The usual performance best practices fail here, because third-party domains are beyond the site owner’s control. Even Google’s own recommendation tool (PageSpeed Insights) warns that Google’s own tracking script (Google Analytics) slows down websites: Its TTL of only 2 hours is simply too short to be effective. The only option left is to copy external resources (e.g. the Google Analytics script) to your own server. While this may indeed be faster, the copies can become inconsistent with the originals, and the scripts frequently break. As a result, web developers have come to accept potentially slow dependencies as an inevitable fact.
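To put the handshake cost into numbers, here is a back-of-the-envelope estimate of the round trips a brand-new HTTPS connection needs before the first request can be sent:

- one for DNS (approximated as a full round trip, skipped if cached);
- one for the TCP handshake;
- two for a full TLS 1.2 handshake (one for TLS 1.3).

It deliberately ignores TCP slow start and TLS session resumption, so it is a rough lower bound rather than a measurement.

```typescript
/**
 * Back-of-the-envelope setup cost (in ms) of a brand-new HTTPS connection
 * before the first request can be sent. DNS is approximated as one RTT;
 * TCP slow start and TLS session resumption are ignored.
 */
function connectionSetupMs(rttMs: number, opts: { dnsCached: boolean; tls13: boolean }): number {
  const dnsRoundTrips = opts.dnsCached ? 0 : 1;
  const tcpRoundTrips = 1; // SYN / SYN-ACK
  const tlsRoundTrips = opts.tls13 ? 1 : 2; // full handshake
  return (dnsRoundTrips + tcpRoundTrips + tlsRoundTrips) * rttMs;
}
```

With a 100 ms round-trip time, a new third-party connection over TLS 1.2 thus costs about 400 ms before the request is even sent. A request over an already established HTTP/2 connection skips this setup entirely.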

Caching the uncacheable

Using the new Service Worker standard, Speed Kit does what was impossible until recently: It rewrites requests to slow third-party domains within the client, so that they are fetched through the established and fast HTTP/2 connection to Baqend.

This not only saves networking overhead by eliminating third-party connections but also allows the same caching mechanisms to be applied to all external resources. As the cached resources are also kept up to date by Speed Kit’s refresh mechanism, this optimization introduces no additional staleness.
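Client-side rerouting of a third-party request essentially means rewriting its URL inside the Service Worker. The proxy origin and the `url` query parameter below are hypothetical placeholders, not Baqend’s actual endpoint. `credentials: "omit"` is a standard Fetch option that keeps the user’s cookies out of the proxied request.

```typescript
// Hypothetical proxy endpoint reached over the existing HTTP/2 connection.
const PROXY_ORIGIN = "https://my-app.speedkit-proxy.example";

interface ProxiedRequest {
  url: string;
  init: { credentials: "omit"; mode: "cors" }; // usable as a fetch() RequestInit
}

/**
 * Rewrites a request for an accelerated host (first- or third-party) so that
 * it is fetched through the proxy; returns null for hosts left untouched.
 */
function rewriteRequest(requestUrl: string, acceleratedHosts: ReadonlySet<string>): ProxiedRequest | null {
  const { hostname } = new URL(requestUrl);
  if (!acceleratedHosts.has(hostname)) return null;
  const proxied = new URL("/proxy", PROXY_ORIGIN);
  proxied.searchParams.set("url", requestUrl); // original URL, percent-encoded
  return { url: proxied.toString(), init: { credentials: "omit", mode: "cors" } };
}
```

Inside the worker’s `fetch` event handler, a non-null result would be passed to `fetch(rewritten.url, rewritten.init)`, and the resulting promise would be handed to `event.respondWith`. A `null` result means the browser handles the request normally.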

Transparent content optimization

As a bonus, the accelerated third-party content itself is also optimized. If you run a price comparison search engine, for example, Speed Kit can cache the images from Amazon’s image API and transparently compress and resize them to fit your page layout and the individual users’ screen sizes. This feature also comes out of the box, without any configuration or integration overhead for the site owner.
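One simple way to pick a resized variant per device is to choose the smallest pre-generated width that covers the rendered size in device pixels. The breakpoint list is a hypothetical assumption. The article does not say how Speed Kit selects image sizes.

```typescript
// Hypothetical widths for which resized variants of each image are generated.
const VARIANT_WIDTHS = [320, 640, 960, 1280, 1920];

/**
 * Picks the smallest variant that covers the image's rendered width in
 * device pixels, without ever upscaling beyond the source image.
 */
function pickVariantWidth(cssWidth: number, devicePixelRatio: number, sourceWidth: number): number {
  const needed = Math.ceil(cssWidth * devicePixelRatio);
  const fitting = VARIANT_WIDTHS.find((w) => w >= needed);
  const chosen = fitting ?? VARIANT_WIDTHS[VARIANT_WIDTHS.length - 1]; // largest if none fits
  return Math.min(chosen, sourceWidth);
}
```

For example, a 300 px wide slot on a screen with a device pixel ratio of 2 needs 600 device pixels, so the 640 px variant is delivered instead of the full-size original.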

What distinguishes Speed Kit from CDNs, micro-caching, AMP etc.?

Speed Kit has some similarities to other approaches for tackling web performance, but provides many benefits on top.

Speed Kit vs. CDN

Unlike content delivery networks such as Akamai, Fastly, or CloudFlare, Speed Kit caches dynamic data and therefore accelerates content that is out of reach for all other caching solutions.

Speed Kit also accelerates third-party dependencies, which are out of reach for state-of-the-art CDN caching. At the same time, integration is much simpler with Speed Kit, since it relies on a script instead of taking over your DNS. You also don’t need to code countless invalidation hooks into your backend, because Speed Kit’s periodic refreshes handle cache invalidation automatically. With GDPR around the corner, Speed Kit has another great advantage over CDNs: It does not see any personally identifiable information such as your users’ cookies. Therefore, you can add Speed Kit without compromising GDPR compliance.

Speed Kit vs. Micro-Caching

Many web architectures employ micro-caching to increase scalability, for example by putting a cache server like Varnish in front of the actual web server. By design, though, micro-caching uses short TTLs to avoid staleness. But short TTLs also cause frequent cache misses and thus slow responses for users.

In contrast, Speed Kit uses longer TTLs, because the TTL does not determine worst-case staleness. When a 5-minute refresh interval is scheduled, for instance, Speed Kit makes sure that no cached item is more than 5 minutes out of date. If the resource changes, Speed Kit detects the change through the periodic refresh and invalidates the cached copy. On the other hand, if no change is detected during refresh, the data item remains in the cache until it expires, which might be after 5 minutes, 5 hours, or 5 days. Internally, Speed Kit learns from your workload: Through time series prediction, the delivered TTLs get closer to the real inter-update times the longer your application runs.
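This paragraph contains two ideas: staleness is bounded by the refresh interval, and TTLs are learned from update history. Both can be sketched as below. The exponentially weighted moving average (EWMA) is a deliberately simple stand-in chosen for illustration. The article does not specify which time-series model Speed Kit actually uses.

```typescript
/**
 * Worst-case staleness of a cached copy: a change is caught by whichever
 * comes first, the next scheduled refresh or the TTL expiry.
 */
function worstCaseStalenessMs(ttlMs: number, refreshIntervalMs: number): number {
  return Math.min(ttlMs, refreshIntervalMs);
}

/**
 * Simple TTL estimate: EWMA of the observed intervals between updates
 * (timestamps in ms, ascending). Falls back to a default without history.
 */
function estimateTtlMs(updateTimesMs: number[], alpha = 0.25, fallbackMs = 60_000): number {
  if (updateTimesMs.length < 2) return fallbackMs;
  let estimate = updateTimesMs[1] - updateTimesMs[0];
  for (let i = 2; i < updateTimesMs.length; i++) {
    const interval = updateTimesMs[i] - updateTimesMs[i - 1];
    estimate = alpha * interval + (1 - alpha) * estimate; // recent intervals weigh more
  }
  return estimate;
}
```

With a 5-day TTL and a 5-minute refresh interval, `worstCaseStalenessMs` yields 5 minutes. A micro-cache without refresh is bounded only by its TTL, which is why it has to keep that TTL short.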

Speed Kit vs. AMP & Instant Articles

Google’s Accelerated Mobile Pages (AMP) and Facebook’s Instant Articles (IA) can be used to create fast websites. However, AMP and IA don’t accelerate your existing website like Speed Kit does. Instead, Google and Facebook force you to rebuild your page on their platforms, using only those features that cannot hurt web performance.

For example, AMP enforces the following limitations on your website:

- Restricted HTML: You can only use a stripped-down version of HTML.

- Restricted JavaScript: No custom JavaScript allowed (except in iframes).

- Restricted CSS: All styles must be inlined and below 50 KB overall.

- No repaints: You can’t resize DOM elements (only static sizes allowed).

- No desktop: Your website is only available to mobile users.

- Google styling: Your website has to include a Google bar at the top.

- Stale-while-revalidate: Users may see outdated content.

As a second reason why AMP pages feel fast, Google uses a trick that has nothing to do with the technology itself: Whenever you look at a Google result on your mobile device, the browser is already fetching the AMP pages featured at the top. Since they are loaded regardless of whether you click the link, AMP pages can be rendered instantly. However, this only works when the user is visiting through a Google search result. For a normal page load, AMP is relatively slow.

Instant Articles are technically similar to Google’s AMP. One of the most significant distinctions, however, is that Facebook’s approach is even more restrictive: Instant Articles are Facebook-only. Since they are only accessible to Facebook users, Instant Articles cannot be used to create public websites.

Through their featured positions, both AMP and IA appear to be attractive for publishing. It should be noted, however, that both are designed to boost their respective ecosystems, not your business: While you are still creating the content, users don’t recognize your brand, as they get their news “on Google” or “from Facebook”. As a result, your leads may drop, you lose traffic, you cannot create rich user experiences, and you may even hurt your e-commerce sales performance.

In contrast to AMP and IA, Speed Kit works for your existing website and has no limitations regarding what HTML, CSS, or JavaScript constructs are permitted. Speed Kit is compatible with any frontend framework and web server, working for both mobile and desktop users. Also, Speed Kit websites are never stale, since caches are refreshed in realtime — thus, you don’t have to wait for Google’s or Facebook’s CDN to reflect changes in your website. As the most important advantage, though, Speed Kit is simply faster than AMP.

At Baqend, we think that web performance should be brought forward through technological advance, be it with Speed Kit or simply through solid web engineering. To learn how you can achieve the benefits of AMP and IA without locking yourself into Google’s and Facebook’s ecosystem, watch our Code.Talks presentation on web performance (video).

Summary

In summary, we built Speed Kit to provide the following features:

In this final part of our article, we show how you can benchmark and optimize your own website with minimal time and effort.

How fast is your website?

To produce detailed performance reports and sophisticated optimization hints, we have developed our own performance measurement tool based on the open-source tool WebPagetest: Baqend’s Page Speed Analyzer measures your web performance and gives you suggestions where there is room for improvement.

The Page Speed Analyzer compares your current website (left) against the same website accelerated by Speed Kit (right). To this end, the analyzer runs a series of tests against your page and the accelerated version. Finally, it gives you a detailed performance report of your current website and a measurement of the performance edge Speed Kit provided during the test.

To produce objective results, the analyzer uses Puppeteer and several instances of WebPagetest, an open-source tool for measuring web performance. By using an actual browser (Chrome) to load the page under test, WebPagetest generates realistic test results. In our analyzer, you can specify where the test should be executed from (EU vs. US) and which version of your site should be loaded (mobile vs. desktop). To provide you with the maximum level of detail regarding your website’s performance, our analyzer also makes available the waterfall diagrams generated by WebPagetest.

Quantifiable Metrics

Among others, the Page Speed Analyzer collects the following metrics:

- Speed Index & First Meaningful Paint: Represent how quickly the page rendered the user-visible content (see above).

- Domains: Number of unique hosts referenced.

- Resources: Number of HTTP resources loaded.

- Response Size: Number of compressed response bytes for resources.

- Time To First Byte (TTFB): Represents the time between connecting to the server and receiving the first content.

- DOMContentLoaded: Represents the time after which the initial HTML document has been completely loaded and parsed, without waiting for external resources.

- FullyLoaded: Represents the time until all resources are loaded, including activity triggered by JavaScript. (It is measured as the point after Document Complete that is followed by 2 seconds without any network activity.)

- Last Visual Change: Represents the time after which the final website is visible (no change thereafter).
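Two of these metrics are simple to compute once the raw measurements exist. The sketch below assumes two inputs: visual-completeness samples (e.g. from video frames) starting at navigation start, and a list of request start and end times. It approximates Speed Index as the area above the visual-progress curve, SI = ∫ (1 − VC(t)) dt. It reads FullyLoaded as the end of the last network activity before the first 2-second quiet period after Document Complete. WebPagetest’s own implementation may differ in detail.

```typescript
interface VisualSample {
  timeMs: number; // first sample at navigation start (t = 0)
  completeness: number; // visual completeness in [0, 1]
}

/**
 * Speed Index = ∫ (1 − VC(t)) dt, approximated as a step function over
 * samples sorted by time, with the last sample at 100% completeness.
 */
function speedIndex(samples: VisualSample[]): number {
  let area = 0;
  for (let i = 0; i + 1 < samples.length; i++) {
    const dt = samples[i + 1].timeMs - samples[i].timeMs;
    area += dt * (1 - samples[i].completeness);
  }
  return area;
}

interface RequestTiming {
  startMs: number;
  endMs: number;
}

/**
 * FullyLoaded: end of the last network activity before the first quiet
 * period of `quietMs` (default 2 s) after Document Complete.
 */
function fullyLoaded(docCompleteMs: number, requests: RequestTiming[], quietMs = 2000): number {
  let lastActivity = docCompleteMs;
  const byStart = [...requests].sort((a, b) => a.startMs - b.startMs);
  for (const r of byStart) {
    if (r.startMs - lastActivity >= quietMs) break; // quiet window found
    lastActivity = Math.max(lastActivity, r.endMs);
  }
  return lastActivity;
}
```

For a page that is 50% visually complete after 1 s and fully complete after 2 s, the Speed Index is 1000 · 1 + 1000 · 0.5 = 1500 ms. A lower value means content appeared earlier.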

Video

In addition to the metrics listed above, the Page Speed Analyzer takes a performance video of both website versions to visualize the rendering process. Through this side-by-side comparison, you can literally see the effect that Speed Kit has.

Continuous Monitoring

If you are using Speed Kit already, the analyzer shows you what Speed Kit is currently doing for your performance: On the left, you see how your website would perform after removing Speed Kit; on the right, you see a test of your current website. The analyzer also gives you hints if your Speed Kit configuration can be tuned or if you missed a setting. Thus, you can use the analyzer to validate Speed Kit’s worth to your online business, and you can also try out new configurations to improve your existing Speed Kit setup.

What systems are supported?

Speed Kit works for any site — and it is easy to integrate into your current tech stack! The generic approach for custom websites is described in the next section.

For additional convenience, there are also native plugins for different content management and web hosting platforms. You can activate Speed Kit for your WordPress website within minutes by using our WordPress plugin. For web hosters, we recommend our Plesk plugin, which is available for Plesk 17.5.3 and higher.

How do you activate Speed Kit for your website?

For Plesk and WordPress websites, we recommend the native plugins. However, Speed Kit is also easy to use for custom websites.

To let you try out Speed Kit in production without any costs at all, Speed Kit provides an extensive free tier. To activate Speed Kit for your website, follow these steps:

- Register a Speed Kit app: Create a Baqend account and choose app type Speed Kit.

- Follow the instructions: You will be guided through the installation process by our setup wizard. In essence, you will do the following:

(a) Specify the domain to accelerate.

(b) Include a code snippet into your website (we will generate it for you).

(c) Adjust refresh policies to make sure our caching infrastructure is always synchronized with your original content.

(d) Optionally: Confine Speed Kit to parts of your website (e.g. “/blog”) to try it in a limited scope first.

Questions? If you require help or have a question, simply drop us a line via Gitter or email!
