What Is a Householder Matrix?

source link: https://nhigham.com/2020/09/15/what-is-a-householder-matrix/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

What Is a Householder Matrix?

A Householder matrix is an orthogonal matrix of the form

It is easily verified that is

- orthogonal (

),

- symmetric (

),

- involutory (

that is,

is a square root of the identity matrix),

where the last property follows from the first two.

A Householder matrix is a rank- perturbation of the identity matrix and so all but one of its eigenvalues are

. The eigensystem can be fully described as follows.

has an eigenvalue

with eigenvector

, since

.

has

eigenvalues

with eigenvectors any set of

linearly independent vectors orthogonal to

, which can be taken to be mutually orthogonal:

for every such

.

has trace

and determinant

, as can be derived directly or deduced from the facts that the trace is the sum of the eigenvalues and the determinant is the product of the eigenvalues.

For , a Householder matrix can be written as

Simple examples of Householder matrices are obtained by choosing , for which

. For

we obtain the matrices

Note that the matrix is

times a Hadamard matrix.

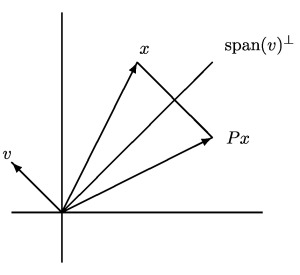

Applying to a vector

gives

This equation shows that reflects

about the hyperplane

, as illustrated in the following diagram, which explains why

is sometimes called a Householder reflector. Another way of expressing this property is to write

, where

is orthogonal to

. Then

, so the component of

in the direction

has been reversed. If we take

, the

th unit vector, then

, which has

in the

position. In this case premultiplying a vector by

flips the sign of the

th component.

Transforming a Vector

Householder matrices are powerful tools for introducing zeros into vectors. Suppose we are given vectors and

and wish to find a Householder matrix

such that

. Since

is orthogonal, we require that

, and we exclude the trivial case

. Now

and this last equation has the form for some

. But

is independent of the scaling of

, so we can set

. Now with

we have

and, since ,

Therefore

as required. Most often we choose to be zero in all but its first component.

Square Roots

What can we say about square roots of a Householder matrix, that is, matrices such that

?

We note first that the eigenvalues of are the square roots of those of

and so

of them will be

and one will be

. This means that

cannot be real, as the nonreal eigenvalues of a real matrix must appear in complex conjugate pairs.

Write , where

is normalized so that

. It is natural to look for a square root of the form

. Setting

leads to the quadratic equation

, and hence

. As expected, these two square roots are complex even though

is real. As an example,

gives the following square root of the matrix above corresponding to

with

:

A good way to understand all the square roots is to diagonalize , which can be done by a similarity transformation with a Householder matrix! Normalizing

again, let

and

. Then from the construction above we know that

. Hence

Then and so

gives

square roots on taking all possible combinations of signs on the diagonal for

. Because

has repeated eigenvalues these are not the only square roots. The infinitely many others are obtained by taking non-diagonal square roots of

, which are of the form

, where

is any non-diagonal square root of the

identity matrix, which in particular could be a Householder matrix!

Block Householder Matrix

It is possible to define an block Householder matrix in terms of a given

, where

, as

Here, “” denotes the Moore–Penrose pseudoinverse. For

,

clearly reduces to a standard Householder matrix. It can be shown that

(this is most easily proved using the SVD), and so

where is the orthogonal projector onto the range of

(that is,

,

, and

). Hence, like a standard Householder matrix,

is symmetric, orthogonal, and involutory. Furthermore, premultiplication of a matrix by

has the effect of reversing the component in the range of

.

As an example, here is the block Householder matrix corresponding to :

One can show (using the SVD again) that the eigenvalues of are

repeated

times and

repeated

times, where

. Hence

and

.

Schreiber and Parlett (1988) note the representation for ,

where and

are orthogonal and

is symmetric positive definite. This formula neatly generalizes the formula for a standard Householder matrix for

given above, and a similar formula holds for odd

.

Schreiber and Parlett also show how given (

) one can construct a block Householder matrix

such that

The polar decomposition plays a key role in the theory and algorithms for such .

Rectangular Householder Matrix

We can define a rectangular Householder matrix as follows. Let ,

,

, and

Then , that is,

has orthonormal columns, if

Of course, is just the first

columns of the Householder matrix built from the vector

.

Historical Note

The earliest appearance of Householder matrices is in the book by Turnbull and Aitken (1932). These authors show that if (

) then a unitary matrix of the form

(in their notation) can be constructed so that

. They use this result to prove the existence of the Schur decomposition. The first systematic use of Householder matrices for computational purposes was by Householder (1958) who used them to construct the QR factorization.

References

This is a minimal set of references, which contain further useful references within.

- Massimiliano Fasi and Nicholas J. Higham, Generating Extreme-Scale Matrices with Specified Singular Values or Condition Numbers, MIMS EPrint 2020.8, Manchester Institute for Mathematical Sciences, The University of Manchester, UK, March 2020. (For the use of rectangular Householder matrices.)

- Nicholas J. Higham, Accuracy and Stability of Numerical Algorithms, second edition, Society for Industrial and Applied Mathematics, Philadelphia, PA, USA, 2002. (Chapter 19.)

- Nicholas J. Higham, Functions of Matrices: Theory and Computation, Society for Industrial and Applied Mathematics, Philadelphia, PA, USA, 2008. (Sections 1.5 and 1.6 for the theory of matrix square roots.)

- Robert S. Schreiber and Beresford N. Parlett, Block Reflectors: Theory and Computation, SIAM J. Numer. Anal. 25(1), 189–205, 1988.

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK