Miguel de Icaza

source link: https://tirania.org/blog/


AppStore Reviews Should be Stricter

Since the AppStore launched, developers have complained about the review process as too strict. Applications are mostly rejected either for not meeting requirements, not having enough functionality or circumventing Apple’s business model.

Yet, the AppStore reviews are too lax and they should be much stricter.

Let me explain why I think so, what I believe some new rules need to be, and how the AppStore can be improved.

Prioritizing the Needs of the Many

Apple states that they have 28 million registered developers, but I believe that only a fraction of those are actively developing applications on a daily basis. That number is closer to 5 million developers.

I understand deeply why developers are frustrated with the AppStore review process - I have suffered my fair share of AppStore rejections: both by missing simple issues and by trying to push the limits of what was allowed. I founded Xamarin, a company that built tools for mobile developers, and had a chance to become intimately familiar with the rejections that our own customers got.

Yet, there are 1.5 billion active Apple devices, devices that people trust to keep their data secure and private. The overriding concern should be the 1.5 billion active users, and not the 0.33% (or 1.86% if you are feeling generous).

People have placed their trust in Apple and Google to keep their devices safe. I wrote about this previously. While it is an industry sport to make fun of Google, I respect the work that Google puts into securing and managing my data - so much that I have trusted them with my email, photographs and documents for more than 15 years.

I trust both companies because of their public track record, and because of conversations that I have had with friends working at both companies about their processes, their practices and the principles that they care about (keeping up with Information Security is a part-time hobby of mine).

Today’s AppStore Policies are Insufficient

AppStore policies, and their automated and human reviews have helped nurture and curate the applications that are available. But with a target market as large and rich as iOS and Android these ecosystems have become a juicy target for scammers, swindlers, gangsters, nation states and hackers.

While some developers are upset with the AppStore rejections, profiteers have figured out that they can make a fortune while abiding by the existing rules. These rules allow behaviors that are either in poor taste or explicitly manipulate the psyche of the user.

First, let me share my perspective as a parent.

I have kids aged 10, 7 and 4, and my eldest had access to an iPad since she was a year old, and I have experienced first hand how angering some applications on the AppStore can be to a small human.

It breaks my heart every time they burst out crying because something in these virtual worlds was designed to nag them, or is frustrating or incomprehensible to them. We sometimes teach them how to deal with those problems, but this is not always possible. Try explaining to a 3 year old why they have to watch a 30-second ad in the middle of a dinosaur game to continue playing, or teach them that at arbitrary points during the game tapping on the screen will not dismiss an ad, but will instead take them to download another app, or direct them to another web site.

This is infuriating.

Another problem happens when they play games defective by design. By this I mean that these games have had functionality or capabilities removed that can be solved by purchasing virtual items (coins, bucks, costumes, pets and so on).

I get to watch my kids display a full spectrum of negative experiences when they deal with these games.

We now have a rule at home “No free games or games with In-App Purchases”. While this works for “Can I get a new game?”, it does not work for the existing games that they play, and those that they play with their friends.

Like any good rule, there are exceptions, and I have allowed the kids to buy a handful of games with in-app purchases from reputable sources. They have to pay for those from their allowance.

These dark patterns are not limited to applications for kids. Read the end of this post for a list of negative scenarios that my followers encountered; they will ring familiar.

Closing the AppStore Loopholes

Applications using these practices should be banned:

Those that use Dark Patterns to get users to purchase applications or subscriptions: These are things like “Free one week trial”, and then they start charging a high fee per week. Even if this activity is forbidden, some apps that do this get published.

Defective-by-design: there are too many games out there that can not be enjoyed unless you spend money in their applications. They get the kids hooked, and then I have to deal with whiny 4, 7 and 10 year olds who want to spend their money on virtual currencies to level up.

Apps loaded with ads: I understand that using ads to monetize your application is one way of supporting the development, but there needs to be a threshold on how many ads are on the screen, and shown by time, as these apps can be incredibly frustrating to use. And not all apps offer a “Pay to remove the ad”, I suspect because the pay-to-remove is not as profitable as showing ads non-stop.

Watch an ad to continue: another nasty problem is defective-by-design games and applications that, rather than requesting money directly, steer kids towards watching ads (sometimes “watch an ad for 30 seconds”) to get something or achieve something. They are driving ad revenue by forcing kids to watch garbage.

Install chains: there are networks of ill-behaved applications that trick kids into installing other applications that are part of the same network. It starts with an innocent looking app, and before the day is over, you have 30 new scammy apps installed on your machine.

Notification Abuse: these are applications that send advertisements or promotional offers to your device. From discount offers, timed offers and product offerings. It used to be that Apple banned these practices on their AppReview guidelines, but I never saw those enforced and resorted to turning off notifications. These days these promotions are allowed. I would like them to be banned, have the ability to report them as spam, and infringers to have their notification rights suspended.

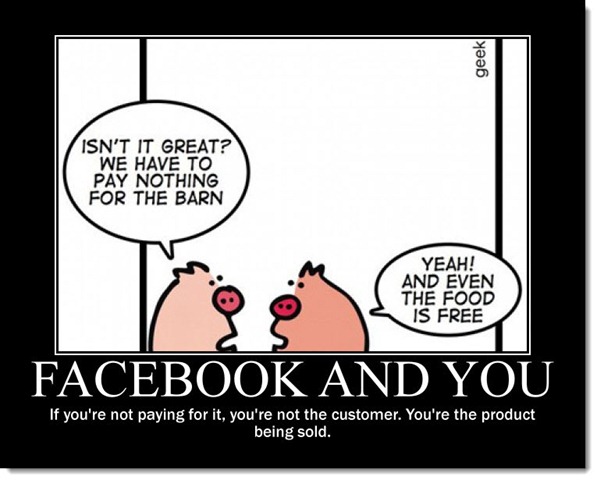

Ban on Selling your Data to Third Parties: ban applications that sell your data to third parties. Sometimes the data collection is explicit (for example using the Facebook app), but sometimes, unknowingly, an application uses a third party SDK that does its dirty work behind the scenes. Third party SDKs should be registered with Apple, and applications should disclose which third party SDKs are in use. If one of those third party SDKs is found to abuse the rules or to be stealing data, all applications that rely on the SDK can be remotely deactivated. While this was recently in the news, it is by no means a new practice: it has been happening for years.

One grayer area is applications that are designed to be addictive to increase engagement (some games, Facebook and Twitter), as they are a major problem for our psyches and for our society. Sadly, this is likely beyond the scope of what the AppStore Review team can do. One option is to pass legislation that would cover this (Shutdown Laws are one example).

Changes in the AppStore UI

It is not only apps for children that have this problem. I find myself thinking twice before downloading applications with "In App Purchases". That label has become a red flag: one that sends the message "scammy behavior ahead".

I would rather pay for an app than get a free app with In-App Purchases. This is unfair to the many creators that can only monetize their work via In-App Purchases.

This could be addressed either by offering a free trial period for the app (managed by the AppStore), or by listing explicitly that there is an “Unlock by paying” option to distinguish these from “Offers In-App Purchases”, which is a catch-all expression for legitimate, scammy and nasty sales alike.

My list of wishes:

Offer Trial Periods for applications: this would send a clear message that this is a paid application, but you can try to use it. And by offering this directly by the AppStore, developers would not have to deal with the In-App purchase workflow, bringing joy to developers and users alike.

Explicit Labels: Rather than using the catch-all “Offers In-App Purchases”, show the nature of the purchase: “Unlock Features by Paying”, “Offers Subscriptions”, “Buy virtual services” and “Sells virtual coins/items”

Better Filtering: Today, it is not possible to filter searches to those that are paid apps (which tend to be less slimy than those with In-App Purchases)

Disclose the class of In-App Purchases available on each app that offers it up-front: I should not have to scroll and hunt for the information and mentally attempt to understand what the item description is to make a purchase

Report Abuse: Human reviewers and automated reviews are not able to spot every violation of the existing rules or my proposed additional rules. Users should be able to report applications that break the rules and developers should be aware that their application can be removed from circulation for breaking the rules or scammy behavior.

Some Bad Practices

Check some of the bad practices in this compilation

Posted on 24 Sep 2020

Some Bad App Practices

Some bad app patterns as some followers described them

So many dark patterns in games. I remember downloading Tiny Tower back in the day and being blown away that you would be told to stop playing and come back in an hour to play more. We made a house rule that we don't play games with gems, they're all optimized for addiction/profit

— Tom Finnigan (@tomfinnigan) August 27, 2020

My daughter downloaded a free quiz game, that started charging a subscription of $60 per WEEK.

— Cam Foale (@kibibu) August 28, 2020

Legit apps do some shit stuff. Grab (car hire) recently threw up a screen as I was booking a car and I thought I was dismissing it, but what I was doing was enrolling in an insurance scheme for an extra $.30 per ride going forward and no idea how to turn it off.

— Alan Graham: he’s just this guy, you know? (@agraham999) August 27, 2020

Top-rated Sudoku app on the App Store had you accept a privacy policy which contained language that they tracked your location while playing. Sudoku!

— Mihai Chereji (@croncobaurul) August 28, 2020

You can read more in the replies to my request a few weeks ago:

Have you downloaded an app and had an unsavory experience? Scammy, swindley, dark patterny? Share with me your bad experiences.

— Miguel de Icaza (@migueldeicaza) August 27, 2020

Posted on 23 Sep 2020

Apple versus Epic Games: Why I’m Willing to Pay a Premium for Security, Privacy, and Peace of Mind

In the legal battle over the App Store’s policies, fees, and review processes, Epic Games wants to see a return to the good old days – where software developers retained full control over their systems and were only limited by their imaginations. Yet those days are long gone.

Granted, in the early 90s, I hailed the Internet as humanity’s purest innovation. After all, it had enabled a group of global developers to collaboratively build the Linux operating system from the ground up. In my X years of experience as a developer, nothing has come close to the good will, success, and optimistic mood of those days.

Upon reflection, everything started to change the day I received my first spam message. It stood out not only because it was the first piece of unsolicited email I received, but also because it was a particularly nasty piece of spam. The advertiser was selling thousands of email addresses for the purposes of marketing and sales. Without question, I knew that someone would buy that list, and that I would soon be on the receiving end of thousands of unwanted pieces of mail. Just a few months later, my inbox was filled with garbage. Since then, the Internet has become increasingly hostile – from firewalls, proxies, and sandboxes to high-profile exploits and attacks.

This was hardly a new phenomenon. Before the Internet, the disk operating system (DOS) platform was an open system where everyone was free to build and innovate. But soon folks with bad intentions destroyed what was a beautiful world of creation and problem solving, and turned it into a place riddled with viruses, trojans, and spyware.

Like most of you alive at the time, I found myself using anti-virus software. In fact, I even wrote a commercial product in Mexico that performed the dual task of scanning viruses and providing a Unix-like permission system for DOS (probably around 1990). Of course, it was possible to circumvent these systems, considering DOS code had full access to the system.

In 1993, Microsoft introduced a family of operating systems that came to be known as Windows NT. Though it was supposed to be secure from the ground up, they decided to leave a few things open due to compatibility concerns with the old world of Windows 95 and DOS. Not only were there bad faith actors in the space, developers had made significant mistakes. Perhaps not surprisingly, users began to routinely reinstall their operating systems following the gradual decays that arose from improper changes to their operating systems.

Fast-forward to 2006 when Windows Vista entered the scene – attempting to resolve a class of attacks and flaws. The solution took many users by surprise. It’s more apt to say that it was heavily criticized and regarded as a joke in some circles. For many, the old way of doing things had been working just fine and all the additional security got in the way. While users hated the fact that software no longer worked out of the box, it was an important step towards securing systems.

With the benefit of hindsight, I look back at the early days of DOS and the Internet as a utopia, where good intentions, innovation, and progress were the norm. Now swindlers, scammers, hackers, gangsters, and state actors routinely abuse open systems to the point that they have become a liability for every user.

In response, Apple introduced iOS – an operating system that was purpose-built to be secure. This avoided backwards compatibility problems and having to deal with users who saw unwanted changes to their environment. In a word, Apple managed to avoid the criticism and pushback that had derailed Windows Vista.

It’s worth pointing out that Apple wasn’t the first to introduce a locked-down system that didn’t degrade. Nintendo, Sony, and Microsoft consoles restricted the software that could be modified on their host operating systems and ran with limited capabilities. This resulted in fewer support calls, reduced frustration, and limited piracy.

One of Apple’s most touted virtues is that the company creates secure devices that respect users’ privacy. In fact, they have even gone to court against the US government over security. iOS remains the most secure consumer operating system. This has been made possible through multiple layers of security that address different threats. (By referring to Apple’s detailed platform security, you can get a clear sense of just how comprehensive it is.)

Offering a window into the development process, security experts need to evaluate systems from end-to-end and explore how the system can be tampered with, attacked, or hacked, and then devise both defense mechanisms and plans for when things will inevitably go wrong.

Consider the iPhone. The hardware, operating system, and applications were designed with everything a security professional loves in mind. Even so, modern systems are too large and too complex to be bullet-proof. Researchers, practitioners, hobbyists, and businesses all look for security holes in these systems – some with the goal of further protecting the system, others for educational purposes, and still others for profit or to achieve nefarious goals.

Whereas hobbyists leverage these flaws to unlock their devices and get full control over their systems, dictatorships purchase exploits in the black market to use against their enemies and gain access to compromising data, or to track the whereabouts of their targets.

This is where the next layer of security comes in. When a flaw is identified – whether by researchers, automated systems, telemetry, or crashes – software developers design a fix for the problem and roll out the platform update. The benefits of keeping software updated extend beyond a few additional emoji characters; many software updates come with security fixes. Quite simply, updating your phone keeps you more secure. However, it’s worth emphasizing that this only works against known attacks.

The App Store review process helps in some ways; namely, it can:

Force applications to follow a set of guidelines aimed at protecting privacy and the integrity of the system, and at meeting the bar for unsuspecting users

Reduce applications with minimal functionality – yielding less junk for users to deal with and smaller attack surfaces

Require a baseline of quality, which discourages quick hacks

Prevent applications from using brittle, undocumented, or unsupported capabilities

Still, the App Store review process is not flawless. Some developers have worked around these restrictions by: (1) distributing hidden payloads, (2) temporarily disabling features while their app was being tested on Apple’s campus, (3) using time triggers, or (4) remotely controlling features to evade reviewers.

As a case in point, we need look no further than Epic Games. They deceptively submitted a “hot fix,” which is a practice used to fix a critical problem such as a crash. Under the covers, they added a new purchasing system that could be remotely activated at the time of their choosing. It will come as no surprise that they activated it after they cleared the App Store’s review process.

Unlike a personal computer, the applications you run on your smartphone are isolated from the operating system and even from each other to prevent interference. Since apps run under a “sandbox” that limits what they can do, you do not need to reinstall your iPhone from scratch every few months because things no longer work.

Like the systems we described above, the sandbox is not perfect. In theory, a bad actor could include an exploit for an unknown security hole in their application, slip it past Apple, and then, once it is used in the wild, wake up the dormant code that hijacks your system.

Anticipating this, Apple has an additional technical and legal mitigation system in place. The former allows Apple to remotely disable and deactivate ill-behaved applications, in cases where an active exploit is being used to harm users. The legal mitigation is a contract that is entered into between Apple and the software developer, which can be used to bring bad actors to court.

Securing a device is an ongoing arms race, where defenders and attackers are constantly trying to outdo the other side, and there is no single solution that can solve the problem. The battles have recently moved and are now being waged at the edges of the App Store’s guidelines.

In the same way that security measures have evolved, we need to tighten the App Store’s guidelines, including the behaviors that are being used for the purposes of monetization and to exploit children. (I plan to cover these issues in-depth in a future post.) For now, let me just say that, as a parent, there are few things that would make me happier than more stringent App Store rules governing what applications can do. In the end, I value my iOS devices because I know that I can trust them with my information because security is paramount to Apple.

Coming full-circle, Epic Games is pushing for the App Store to be a free-for-all environment, reminiscent of DOS. Unlike Apple, Epic does not have an established track record of caring about privacy and security (in fact, their privacy policy explicitly allows them to sell your data for marketing purposes). Not only does the company market its wares to kids, they recently had to backtrack on some of their most questionable scams – i.e., loot boxes – when the European Union regulated them. Ultimately, Epic has a fiduciary responsibility to their investors to grow their revenue, and their growth puts them on a war path with Apple.

In the battle over the security and privacy of my phone, I am happy to pay a premium knowing that my information is safe and sound, and that it is not going to be sold to the highest bidder.

Posted on 28 Aug 2020

Yak Shaving - Swift Edition

At the TensorFlow summit last year, I caught up with Chris Lattner who was at the time working on Swift for TensorFlow - we ended up talking about concurrency and what he had in mind for Swift.

I recognized some of the actor ideas to be similar to those from the Pony language which I had learned about just a year before on a trip to Microsoft Research in the UK. Of course, I pointed out that Pony had some capabilities that languages like C# and Swift lacked and that anyone could just poke at data that did not belong to them without doing too much work and the whole thing would fall apart.

For example, if you build something like this in C#:

    class Chart {
        float [] points;
        public float [] Points { get { return points; } }
    }

Then anyone with a reference to Chart can go and poke at the internals of the points array that you have surfaced. For example, this simple Plot implementation accidentally modifies the contents:

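    void Plot (Chart myChart)
    {
        // This code accidentally modifies the data in myChart:
        // Points returns a reference to the internal array, not a copy
        var p = myChart.Points;
        for (int i = 0; i < p.Length; i++) {
            // The post-increment writes back into the shared array
            Plot (0, p [i]++);
        }
    }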

This sort of problem is avoidable, but comes at a considerable development cost. For instance, in .NET you can find plenty of ad-hoc collections and interfaces whose sole purpose is to prevent data tampering/corruption. If those are consistently and properly used, they can prevent the above scenario from happening.

This is where Chris politely pointed out to me that I had not quite understood Swift - in fact, Swift supports a copy-on-write model for its collections out of the box - meaning that the above problem is just not present in Swift as I had wrongly assumed.
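
A minimal sketch of that point, assuming a hypothetical Swift counterpart to the C# `Chart` example above (the names `Chart`, `currentPoints` and `plot` are made up for illustration). Because Swift arrays are value types with copy-on-write storage, the caller ends up mutating its own copy rather than the chart's data:

```swift
// Hypothetical Swift counterpart of the C# Chart class.
final class Chart {
    private var points: [Float]

    init(points: [Float]) {
        self.points = points
    }

    // Returning the array hands the caller its own logical copy.
    var currentPoints: [Float] { points }
}

// Mirrors the C# Plot method: it increments every value it reads.
func plot(_ chart: Chart) -> [Float] {
    var p = chart.currentPoints   // storage is shared until the first write
    for i in p.indices {
        p[i] += 1                 // the write affects only the local copy
    }
    return p
}

let chart = Chart(points: [1, 2, 3])
let plotted = plot(chart)
// plotted holds the incremented values; chart.currentPoints is unchanged.
```

The copy is only paid for when a write actually happens, so handing out the array stays cheap for callers that only read it.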

It is interesting that I had read the Swift specification some three or four times, and I was collaborating with Steve on our Swift-to-.NET binding tool and yet, I had completely missed the significance of this design decision in Swift.

This subtle design decision was eye opening.

It was then that I decided to gain some real hands-on experience in Swift. And what better way to learn Swift than to start with a small, fun project for a couple of evenings.

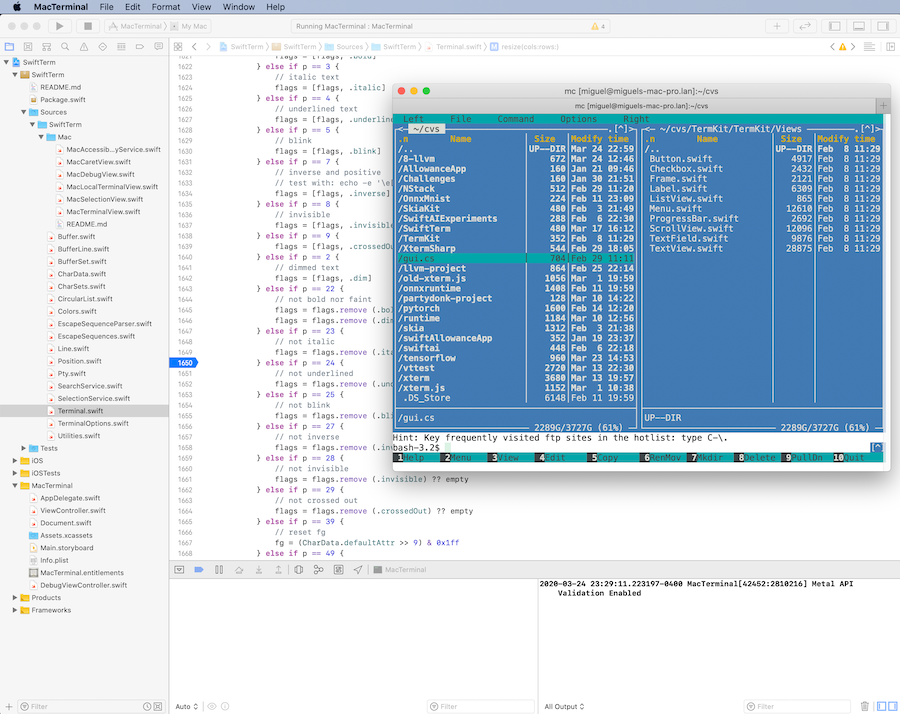

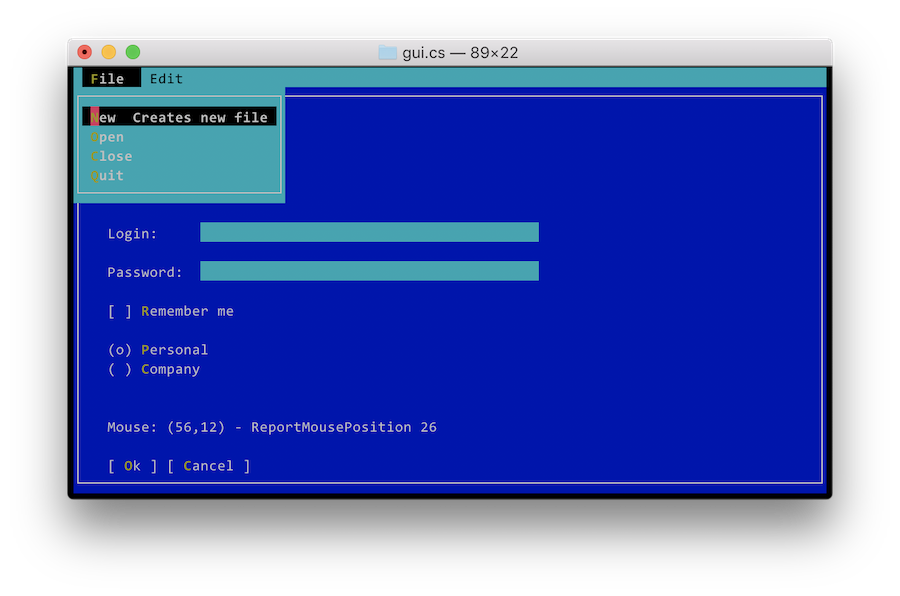

Rather than building a mobile app, which would have been 90% mobile design and user interaction, and little Swift, I decided to port my gui.cs console UI toolkit from C# to Swift and called it TermKit.

Both gui.cs and TermKit borrow extensively from Apple’s UIKit design - it is a design that I have enjoyed. It notably avoids auto layout, and instead uses a simpler layout system that I quite love and had a lot of fun implementing (You can read a description of how to use it in the C# version).

This journey was filled with a number of very pleasant capabilities in Swift that helped me find some long-term bugs in my C# libraries. I remain firmly a fan of compiled languages, and the more checking, the better.

Dear reader, I wish I had kept a log of those so I could share them all with you, but that is now code that I wrote a year ago, and I did not take copious notes. Suffice it to say that I ended up with a warm and cozy feeling - knowing that the compiler was looking out for me.

There is plenty to love about Swift technically, and I will not enumerate all of those features, other people have done that. But I want to point out a few interesting bits that I had missed because I was not a practitioner of the language, and was more of an armchair observer of the language.

The requirement that constructors fully initialize all the fields in a type before calling the base constructor is a requirement that took me a while to digest. My mental model was that calling the superclass to initialize itself should be done before any of my own values are set - this is what C# does. Yet, this prevents a bug where the base constructor can call a virtual method that you override, and might not be ready to handle. So eventually I just learned to embrace and love this capability.
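
The rule can be sketched with a small example. This is an illustration under assumed names (`Base`, `Derived`, `describe`, `label` and `seen` are hypothetical), showing a base initializer that calls an overridable method, and why the subclass field is already valid by the time that call happens:

```swift
class Base {
    init() {
        // Calls a method that a subclass may override.
        describe()
    }

    func describe() {}
}

final class Derived: Base {
    let label: String
    private(set) var seen: String?

    override init() {
        // Own fields are set first. Moving this line below super.init()
        // is rejected by the compiler, because label would be uninitialized.
        label = "ready"
        super.init()
    }

    override func describe() {
        // Runs during Base.init(), and label already has its value.
        seen = label
    }
}

let derived = Derived()
// derived.seen now holds the label that was set before super.init() ran.
```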

Another thing that I truly enjoyed was the ability of creating a typealias, which once defined is visible as a new type. A capability that I have wanted in C# since 2001 and have yet to get.
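
A short sketch of how this looks in practice, with hypothetical names (`Rune`, `Line` and `width` are made up for illustration). Note that the alias gives an existing type a new name that can be used wherever the declaration is visible; it stays interchangeable with the underlying type:

```swift
// Hypothetical aliases in the spirit of a console toolkit.
typealias Rune = Unicode.Scalar
typealias Line = [Rune]

// The alias can be used in signatures like any other type name.
func width(of line: Line) -> Int {
    return line.count
}

let line: Line = Array("hello".unicodeScalars)
let lineWidth = width(of: line)

// A value of the underlying type is accepted without conversion.
let raw: [Unicode.Scalar] = line
```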

I have a love/hate relationship with Swift protocols and extensions. I love them because they are incredibly powerful, and I hate them, because it has been so hard to surface those to .NET, but in practice they are a pleasure to use.

What won my heart is just how simple it is to import C code into Swift - to bring the type definitions from a header file, and call into the C code transparently from Swift. This really is a gift of the gods to humankind.

I truly enjoyed having the Character data type in Swift which allowed my console UI toolkit to correctly support Unicode on the console for modern terminals.

Even gui.cs with my port of Go’s Unicode libraries to C# suffers from being limited to Go-style Runes and not having support for emoji (or as the nerd-o-sphere calls it “extended grapheme clusters”).
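
The difference between code points and extended grapheme clusters can be sketched in a few lines. The strings below are illustrative choices, written with escapes so the code points are explicit:

```swift
// "e" followed by a combining acute accent: two code points, one visible character.
let accented = "e\u{301}"

// A family emoji built from three emoji joined by zero-width joiners (U+200D).
let family = "\u{1F468}\u{200D}\u{1F469}\u{200D}\u{1F467}"

// String.count counts Character values (extended grapheme clusters),
// while unicodeScalars exposes the rune-like code points underneath.
let accentedCounts = (characters: accented.count, scalars: accented.unicodeScalars.count)
let familyCounts = (characters: family.count, scalars: family.unicodeScalars.count)
// accentedCounts: 1 character, 2 scalars
// familyCounts:   1 character, 5 scalars
```

A toolkit that lays out text by code point would treat the family emoji as five separate items, while one that works with `Character` treats it as one.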

Beyond the pedestrian controls like buttons, entry lines and checkboxes, there are two useful controls that I wanted to develop. An xterm terminal emulator, and a multi-line text editor.

In the C# version of my console toolkit my multi-line text editor was a quick hack. A List<T> holds all the lines in the buffer, and each line contains the runes to display. Inserting characters is easy, and inserting lines is easy and you can get this done in a couple of hours in the evening (which is the sort of time I can devote to these fun explorations). Of course, the problem is cutting regions of text across lines, and inserting text that spans multiple lines. Because what looked like a brilliant coup of simple design turns out to be ugly, repetitive and error-prone code that takes forever to debug - I did not enjoy writing that code in the end.
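
To make the trade-off concrete, here is a rough sketch of the list-of-lines design, written in Swift rather than C# and not taken from gui.cs (`LineBuffer`, `insert` and `text` are hypothetical names). Even this single operation, inserting text that may span lines, has to split the current line, splice in new lines and reattach the tail:

```swift
struct LineBuffer {
    // One array of characters per line; an empty buffer is a single empty line.
    var lines: [[Character]] = [[]]

    // Inserts text, which may contain newlines, at the given line and column.
    mutating func insert(_ text: String, line: Int, column: Int) {
        let newline: Character = "\n"
        let pieces: [[Character]] = text
            .split(separator: newline, omittingEmptySubsequences: false)
            .map { Array($0) }

        // Detach everything after the insertion point on the current line.
        let tail = Array(lines[line][column...])
        lines[line].removeSubrange(column...)

        // The first piece continues the current line; the rest become new lines.
        lines[line].append(contentsOf: pieces[0])
        if pieces.count > 1 {
            lines.insert(contentsOf: pieces[1...], at: line + 1)
        }

        // Reattach the detached tail to the last inserted line.
        lines[line + pieces.count - 1].append(contentsOf: tail)
    }

    // Joins the lines back into a single string.
    var text: String {
        return lines.map { String($0) }.joined(separator: "\n")
    }
}

var buffer = LineBuffer()
buffer.insert("hello world", line: 0, column: 0)
buffer.insert("A\nB\n", line: 0, column: 5)
```

Deleting a region that crosses lines needs the mirror image of this bookkeeping, which is where the repetition and the off-by-one bugs tend to creep in.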

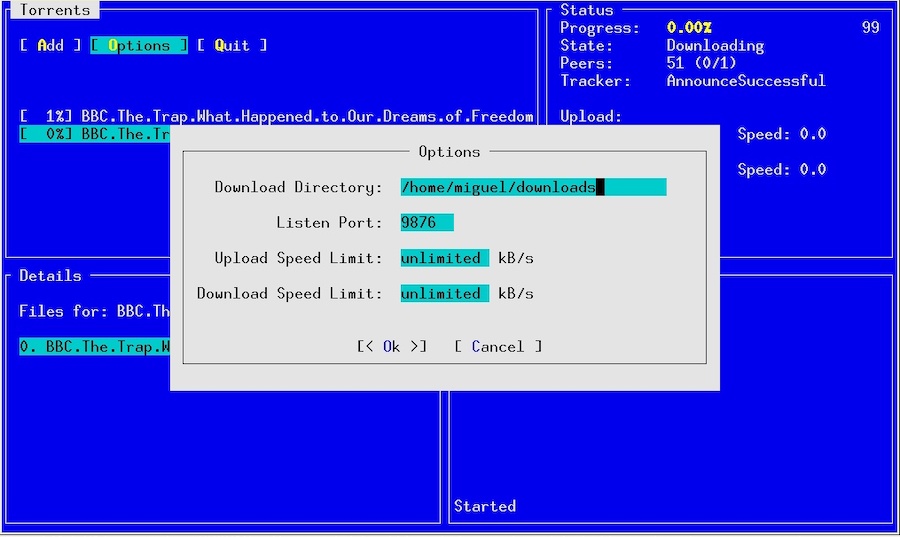

For my Swift port, I decided that I needed something better. Of course, in the era of web scale, you gotta have a web scale data structure. I was about to implement a Swift version of the Rope data structure, when someone pointed me to a blog post from the Visual Studio Code team titled “Text Buffer Reimplementation”. I read it avidly, found their arguments convincing, and in the end, if it is good enough for Visual Studio Code, it should be good enough for the gander.

During my vacation last summer, I decided to port the TypeScript implementation of the Text Buffer to Swift, and named it TextBufferKit. Once again, porting this code from TypeScript to Swift turned out to be a great learning experience for me.

By the time I was done with this and was ready to hook it up to TermKit, I got busy, and also started to learn SwiftUI, and started to doubt whether it made sense to continue work on a UIKit-based model, or if I should restart and do a SwiftUI version. So while I pondered this decision, I did what every other respected yak shaver would do, I proceeded to my xterm terminal emulator work.

Since about 2009 or so, I wanted to have a reusable terminal emulator control for .NET. In particular, I wanted one to embed into MonoDevelop, so a year or two ago, I looked for a terminal emulator that I could port to .NET - I needed something that was licensed under the MIT license, so it could be used in a wide range of situations, and was modern enough. After surveying the space, I found “xterm.js” fit the bill, so I ported it to .NET and modified it to suit my requirements. The result is XtermSharp - a terminal emulator engine that can have multiple UIs and hook up multiple backends.

For Swift, I took the XtermSharp code, and ported it over to Swift, and ended up with SwiftTerm. It is now in quite a decent shape, with only a few bugs left.

I have yet to build a TermKit UI for SwiftTerm, but in my quest for the perfect shaved yak, now I need to figure out if I should implement SwiftUI on top of TermKit, or if I should repurpose TermKit completely from the ground up to be SwiftUI driven.

Stay tuned!

Posted on 24 Mar 2020

Scripting Applications with WebAssembly

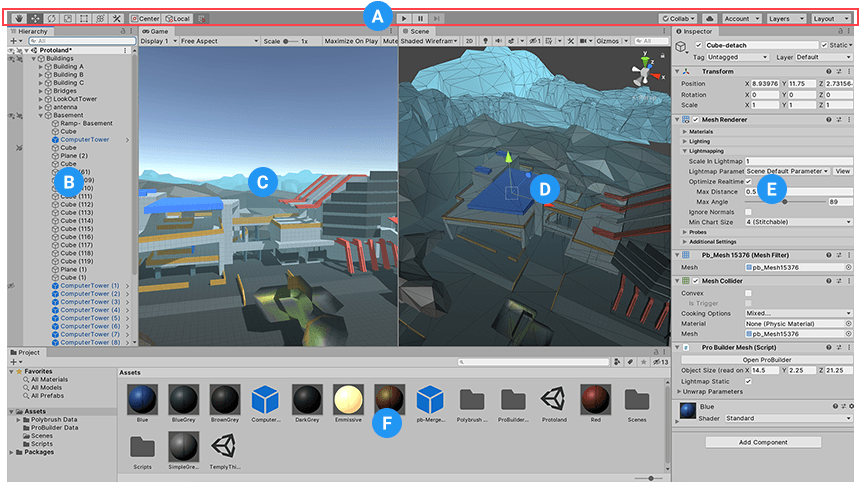

The Unity3D engine features a capability where developers can edit the code for their program, hit “play” and observe the changes right away, without a visible compilation step. C# code is compiled and executed immediately inside the Unity editor in a seamless way.

This is a scenario where scripted code runs with full trust within the original application. The desired outcome is to not crash the host, be able to reload new versions of the code over and over, and not really about providing a security boundary.

This capability was built into Unity using .NET Application Domains: a code isolation technology that was originally built in .NET that allowed code to be loaded, executed and discarded after it was no longer needed.

Other developers used Application Domains as a security boundary in conjunction with other security technologies in .NET. But this combination turned out to have some holes, and Application Domains, once popular among .NET developers, fell from grace.

With .NET Core, Application domains are no longer supported, and alternative options for code-reloading have been created (dynamic loading of code can be achieved these days with AssemblyLoadContext).

Unity was ahead of the industry in terms of code hot reloading, but other folks have used embedded runtimes to provide this sort of capability over the years, JavaScript being one of the most popular ones.

Recently, I have been fascinated by WebAssembly as a way to solve this particular scenario, and to solve it very well (some folks are also using WebAssembly to isolate sensitive code).

WebAssembly was popularized by the Web crowd, and it offers a number of capabilities for scripting applications that neither JavaScript, Application Domains nor other scripting languages provide very well.

Outside of the Web browser domain, WebAssembly checks all of the boxes in my book:

- Provides great code isolation and memory isolation

- Easily discard unused code and data

- Wide reach: in addition to being available on the Web there are runtimes suitable for almost every scenario: fast JIT compilation, optimizing compilers, static compilation and assorted interpreters. One of my favorites is Wasmer

- Custom operations can be surfaced to WebAssembly to connect the embedded code with the host.

- Many languages can target this runtime. C, C++, C#, F#, Go, Rust and Swift among others.

WebAssembly is low-level enough that it does not come with a garbage collector of its own, which means that the runtime itself will not pause to garbage collect your code like .NET/Mono or JavaScript would. Whether there are any pauses depends entirely on the language that you run inside WebAssembly. If you run C, Rust or Swift code, there would be no time taken by a garbage collector, but if you run .NET or Go code there would be.

Going back to the Unity scenario: the fascinating feature for me, is that IDEs/Editors/Tools could leverage WebAssembly to host their favorite language for scripting during the development stage, but for the final build of a product (like Unity, Godot, Rhino3D, Unreal Engine and really any other application that offers scripting capabilities) they could bundle the native code without having to take a WebAssembly dependency.

For the sake of argument, imagine the Godot game engine. Today Godot has support for GDScript and .NET. But it could be extended to support Swift for scripting, and use WebAssembly during development to hot-reload the code, but compile the Swift code directly to native code for the final build of a game.

The reason I listed the game engines here is that users of those products are as happy with the garbage collector taking some time to tidy up their heap as they are with a parent calling them to dinner just as they are swarming an enemy base during a 2-hour campaign.

WebAssembly is an incredibly exciting space, and every day it seems like it opens possibilities that we could only dream of before.

Posted on 02 Mar 2020

Blog: Revisiting the gui.cs framework

12 years ago, I wrote a small UI Library to build console applications in Unix using C#. I enjoyed writing a blog post that hyped this tiny library as a platform for Rich Internet Applications (“RIA”). The young among you might not know this, but back in 2010, “RIA” platforms were all the rage, like Bitcoin was two years ago.

The blog post was written in a tongue-in-cheek style, but linked to actual screenshots of this toy library, which revealed the joke:

This was the day that I realized that some folks did not read the whole blog post or click on the screenshot links, as I received three pieces of email about it.

The first was from an executive at Adobe asking why we were competing, rather than partnering on this RIA framework. Back in 2010, Adobe was famous for building the Flash and Flex platforms, two of the leading RIA systems in the industry. The second was from a journalist trying to find out more details about this new web framework; he was interested in getting on the phone to discuss the details of the announcement. The third was from an industry analyst who wanted to understand what this announcement did for the strategic placement of my employer in their five-dimensional industry tracking mega-deltoid.

This tiny library was part of my curses binding for Mono at a time when I dreamed of writing and bringing a complete terminal stack to .NET in my copious spare time. Little did I know that I was about to run out of time: in a little less than a month, I would start Moonlight, the open source clone of Microsoft Silverlight, and that would consume my time for a couple of years.

Back to the Future

While Silverlight might have died, my desire to have a UI toolkit for console applications with .NET did not. Some fourteen months ago, I decided to work again on gui.cs; this is a screenshot of the result:

In many ways the world had changed. You can now expect a fairly modern version of curses to be available across all Unices and Unix systems have proper terminfo databases installed.

Because I am a hopeless romantic, I called this new incarnation of the UI toolkit gui.cs. This time around, I have updated it to modern .NET idioms and modern .NET build systems, embraced the UIKit design for some of the internals of the framework, and used Azure DevOps to run my continuous builds and manage my releases to NuGet.

In addition, the toolkit is no longer tied to Unix, but contains drivers for the Windows console, the .NET System.Console (a less powerful version of the Windows console) and the ncurses library.

You can find the result on GitHub at https://github.com/migueldeicaza/gui.cs and you can install it on your favorite operating system by installing the Terminal.Gui NuGet package.

I have published both conceptual and API documentation for folks to get started with. Hopefully I will beat my previous record of two users.

The original layout system for gui.cs was based on absolute positioning - not bad for a quick hack. But this time around I wanted something simpler to use. Sadly, UIKit is not a good source of inspiration for simple-to-use layout systems, so I came up with a novel system for widget layout, one that I am quite fond of. This new system introduces two data types: Pos for specifying positions and Dim for specifying dimensions.

As a developer, you assign Pos values to X, Y and Dim values to Width and Height. The system comes with a range of ways of specifying positions and dimensions, including referencing properties from other views. So you can specify the layout in a way similar to specifying formulas in a spreadsheet.
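
To make the spreadsheet analogy concrete, here is a minimal sketch in Python of positions that are stored as formulas and only evaluated at layout time. It is not the gui.cs API: the `Pos` and `View` classes and the helpers `at`, `percent`, `right_of` and `layout` are hypothetical stand-ins written for this illustration, and only the horizontal axis is modeled.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Pos:
    """A coordinate stored as a formula; it is only evaluated at layout time."""
    resolve: Callable[[int], int]  # container size -> absolute coordinate

    def __add__(self, offset: int) -> "Pos":
        return Pos(lambda size: self.resolve(size) + offset)


def at(n: int) -> Pos:
    """Absolute position, like a constant typed into a spreadsheet cell."""
    return Pos(lambda size: n)


def percent(p: int) -> Pos:
    """Position at p percent of the container size."""
    return Pos(lambda size: size * p // 100)


@dataclass
class View:
    name: str
    x: Pos
    width: int


def right_of(view: View) -> Pos:
    """Position just past another view; reads view.x lazily, like a cell reference."""
    return Pos(lambda size: view.x.resolve(size) + view.width)


def layout(views: List[View], container_width: int) -> Dict[str, int]:
    """Evaluate every formula against the current container width."""
    return {v.name: v.x.resolve(container_width) for v in views}


label = View("label", at(2), width=10)
entry = View("entry", right_of(label) + 1, width=20)
button = View("button", percent(50), width=8)

print(layout([label, entry, button], 80))
label.x = at(5)  # moving the label also moves the entry that references it
print(layout([label, entry, button], 80))
```

Because `right_of` looks up the other view's position every time it is evaluated, changing one view's formula automatically shifts everything that refers to it, much like recalculating dependent cells.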

There is a one-hour-long presentation introducing various tools for console programming with .NET. The section dealing just with gui.cs starts at minute 29:28, and you can also get a copy of the slides.

Posted on 22 Apr 2019

First Election of the .NET Foundation

Last year, I wrote about structural changes that we made to the .NET Foundation.

Out of 715 applications to become members of the foundation, 477 have been accepted.

Jon has posted the results of our first election. From Microsoft, neither Scott Hunter nor I ran for the board of directors, and only Beth Massi remains. So we went from having a majority of Microsoft employees on the board to only having Beth Massi, with six fresh directors joining: Iris Classon, Ben Adams, Jon Skeet, Phil Haack, Sara Chipps and Oren Novotny.

I am stepping down very happy knowing that I achieved my main goal, to turn the .NET Foundation into a more diverse and member-driven foundation.

Congratulations and good luck .NET Board of 2019!

Posted on 29 Mar 2019

.NET Foundation Changes

Today we announced a major change to the .NET Foundation, in which we fundamentally changed the way that the foundation operates. The new foundation draws inspiration from the Gnome Foundation and the F# Foundation.

We are making the following changes:

The Board of Directors of the Foundation will now be elected by the .NET Foundation membership, and they will be in charge of steering the direction of the foundation. The Board of Directors will be elected annually via direct vote from the members of the Foundation, with just one permanent member from Microsoft.

Anyone contributing to projects in the .NET Foundation can become a voting member of the Foundation. The main benefit is that you get to vote for who should represent you in the board of directors. To become a member, we will judge contributions to the projects in the foundation, which can either be code contributions, documentation, evangelism or other activities that advance .NET and its ecosystem.

Membership fee: we are adding a membership fee that will give the .NET Foundation independence from Microsoft when it comes to how it chooses to promote .NET and the ecosystem around it. We realize that not everyone can pay this fee, so this fee can be waived. But those that contribute to the Foundation will help us fund activities that will expand .NET.

We intend to have elections every year, so individuals will campaign on what they intend to bring to the board.

There is a limit on the number of members on the board representing a single company, which prevents the board from being stacked up by contributors from a single company, and will encourage our community to vote for board members with diverse backgrounds, strengthening the views of the board.

Companies do not vote. Only contributors to the .NET ecosystem can vote; they may be affiliated with a company, but the companies themselves have no vote. Our corporate sponsors are sponsors that care as much as we do about the growth and health of our ecosystem.

These changes are very close to my heart, and it took a lot of work to make them happen and to make sure that Microsoft the company was comfortable with giving up control over the .NET Foundation.

I want to thank Jon Galloway, the Executive Director of the current .NET Foundation, for helping make this a reality.

Going from the idea to the execution took a long time. Martin Woodward did some of the early footwork to get various people at Microsoft comfortable with the idea. Then Jon took over, and had to continue this process to get everyone on board and get everyone to accept that our little baby was ready to graduate, go to college and start its own independent life.

I want to thank my peers in the board of directors that supported this move, Scott Hunter, Oren Novotny, Rachel Reese as well as the entire supporting crew that helped us make this happen, Beth Massi, Jay Schmelzer and the various heroes in the Microsoft legal department that crossed all the t’s and dotted all the i’s.

See you on the campaign trail!

Posted on 04 Dec 2018

Startup Improvements in Xamarin.Forms on Android

With Xamarin.Forms 3.0, in addition to the new feature work that we did, we have been doing some general optimizations across the board, from compile times to startup times, and wanted to share some recent results on the net effect on one of our larger sample apps.

These are the results when doing a cold start for the SmartHotel360 application on Android when compiled for 32 bits (armeabi-v7a) on a Google Pixel (1st gen).

| | Release | Release/AOT | Release/AOT+LLVM |
|---|---|---|---|
| Forms 2.5.0 | 6.59s | 1.66s | 1.61s |
| Forms 3.0.0 | 3.52s | 1.41s | 1.38s |

This is independent of the work that we are doing to improve Android's startup speed, which brings additional benefits today and will bring more in the future.

One of the areas that we are investing in for Android is removing any dynamic code execution at startup to integrate with the Java runtime. Instead, all of this is being statically computed, similar to what we are doing on Mac and iOS, where we completely eliminated reflection and code generation from startup.

Posted on 18 May 2018

How we doubled Mono’s Float Speed

My friend Aras recently wrote the same ray tracer in various languages, including C++, C# and the upcoming Unity Burst compiler. While it is natural to expect C# to be slower than C++, what was interesting to me was that Mono was so much slower than .NET Core.

The numbers that he posted did not look good:

- C# (.NET Core): Mac 17.5 Mray/s

- C# (Unity, Mono): Mac 4.6 Mray/s

- C# (Unity, IL2CPP): Mac 17.1 Mray/s

I decided to look at what was going on, and document possible areas for improvement.

As a result of this benchmark, and looking into this problem, we identified three areas of improvement:

- First, we need better defaults in Mono, as users will not tune their parameters

- Second, we need to better inform the world about the LLVM code optimizing backend in Mono

- Third, we tuned some of the parameters in Mono.

The baseline for this test was to run Aras’ ray tracer on my machine; since we have different hardware, I could not use his numbers to compare. The results on my iMac at home were as follows for Mono and .NET Core:

| Runtime | Results, MRay/sec |
|---|---|
| .NET Core 2.1.4, debug build, dotnet run | 3.6 |
| .NET Core 2.1.4, release build, dotnet run -c Release | 21.7 |
| Vanilla Mono, mono Maths.exe | 6.6 |
| Vanilla Mono, with LLVM and float32 | 15.5 |

During the process of researching this problem, we found a couple of problems, which once we fixed, produced the following results:

| Runtime | Results, MRay/sec |
|---|---|
| Mono with LLVM and float32 | 15.5 |
| Improved Mono with LLVM, float32 and fixed inline | 29.6 |

Aggregated:

Just by using LLVM and float32, you can get roughly a 2.3x performance improvement in your floating point code. And with the tuning that we added to Mono as a result of this exercise, you can get 4.4x over running plain Mono - these will be the defaults in future versions of Mono.

This blog post explains our findings.

32 and 64 bit Floats

Aras is using 32-bit floats for most of his math (the float type in C#, or System.Single in .NET terms). In Mono, decades ago, we made the mistake of performing all 32-bit float computations as 64-bit floats while still storing the data in 32-bit locations.
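
To illustrate what "compute in 64 bits, store in 32 bits" means for results, here is a small Python sketch. It is an emulation, not Mono code: Python floats are 64-bit doubles, and the helper `to_f32` (a name introduced here) rounds a value to the nearest 32-bit float using the standard `struct` module. The two functions evaluate the same expression, once rounding after every operation and once rounding only when the final value is stored.

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (a 64-bit double) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mul_sub_f32(a: float, b: float, c: float) -> float:
    """a * b - c with every intermediate result rounded to 32 bits."""
    return to_f32(to_f32(a * b) - c)


def mul_sub_f64_then_store(a: float, b: float, c: float) -> float:
    """a * b - c computed in 64 bits, rounded to 32 bits only when stored."""
    return to_f32(a * b - c)


a = to_f32(1 + 2**-12)  # exactly representable as a 32-bit float
c = to_f32(1 + 2**-11)  # exactly representable as a 32-bit float

# The exact product a * a is 1 + 2**-11 + 2**-24, which needs more than 24 bits.
print(mul_sub_f32(a, a, c))             # low bit of the product is rounded away first
print(mul_sub_f64_then_store(a, a, c))  # low bit survives until the final store
```

With these inputs the two strategies disagree: the all-32-bit path loses the lowest bit of the product before the subtraction, while the 64-bit path keeps it. The extra precision is real, but every operation pays for widening and narrowing the values.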

My memory at this point is not as good as it used to be, and I do not quite recall why we made this decision.

My best guess is that it was a decision rooted in the trends and ideas of the time.

Around this time there was a positive aura around extended precision computations for floats. For example the Intel x87 processors use 80-bit precision for their floating point computations, even when the operands are doubles, giving users better results.

Another theme around that time was that the Gnumeric spreadsheet, one of my previous projects, had implemented better statistical functions than Excel had, and this was well received in many communities that could use the more correct and higher precision results.

In the early days of Mono, most mathematical operations available across all platforms only took doubles as inputs. C99, POSIX and ISO had all introduced 32-bit versions, but they were not generally available across the industry in those early days (for example, sinf is the float version of sin, fabsf of fabs and so on).

In short, the early 2000’s were a time of optimism.

Applications did pay a heavier price for the extra computation time, but Mono was mostly used for Linux desktop applications, serving HTTP pages and some server processes, so floating point performance was never an issue we faced day to day. It was only noticeable in some scientific benchmarks, and those were rarely the use case for .NET usage in the 2003 era.

Nowadays, games, 3D applications, image processing, VR, AR and machine learning have made floating point operations far more common in modern applications. When it rains, it pours, and this is no exception. Floats are no longer your friendly data type that you sprinkle in a few places in your code, here and there. They come in an avalanche and there is no place to hide. There are so many of them, and they won’t stop coming at you.

The “float32” runtime flag

So a couple of years ago we decided to add support for performing 32-bit float operations with 32-bit operations, just like everyone else. We call this runtime feature “float32”. In Mono, you enable it by passing the -O=float32 option to the runtime, and for Xamarin applications, you change this setting in the project preferences.

This new flag has been well received by our mobile users, as the majority of mobile devices are still not very powerful and they would rather process data faster than have the extra precision. Our guidance for our mobile users has been to turn on both the LLVM optimizing compiler and the float32 flag.

While we have had the flag for some years, we have not made this the default, to reduce surprises for our users. But we find ourselves facing scenarios where the current 64-bit behavior already surprises our users; for example, see this bug report filed by a Unity user.

We are now going to change the default in Mono to be float32; you can track the progress here: https://github.com/mono/mono/issues/6985.

In the meantime, I went back to my friend Aras’ project. He has been using some new APIs that were introduced in .NET Core. While .NET Core always performed 32-bit float operations as 32-bit floats, the System.Math API still forced some conversions from float to double in the course of doing business. For example, if you wanted to compute the sine function of a float, your only choice was to call Math.Sin (double) and pay the price of the float to double conversion.

To address this, .NET Core has introduced a new System.MathF type, which contains single precision floating point math operations, and we have just brought [System.MathF](https://github.com/mono/mono/pull/7941) to Mono.

Moving from 64-bit floats to 32-bit floats certainly improves performance, as you can see in the table below:

| Runtime and Options | Mrays/second |
|---|---|
| Mono with System.Math | 6.6 |
| Mono with System.Math, using -O=float32 | 8.1 |
| Mono with System.MathF | 6.5 |
| Mono with System.MathF, using -O=float32 | 8.2 |

So using float32 really improves things for this test, while MathF had only a small effect.

Tuning LLVM

During the course of this research, we discovered that while Mono’s Fast JIT compiler had support for float32, we had not added this support to the LLVM backend. This meant that Mono with LLVM was still performing the expensive float to double conversions.

So Zoltan added support for float32 to our LLVM code generation engine.

Then he noticed that our inliner was using the same heuristics for the Fast JIT as it was using for LLVM. With the Fast JIT, you want to strike a balance between JIT speed and execution speed, so we limit just how much we inline to reduce the work of the JIT engine.

But when you opt into using LLVM with Mono, you want to get the fastest code possible, so we adjusted the setting accordingly. Today you can change this setting via the MONO_INLINELIMIT environment variable, but this really should be baked into the defaults.

With the tuned LLVM setting, these are the results:

| Runtime and Options | Mrays/second |
|---|---|
| Mono with System.Math, --llvm -O=float32 | 16.0 |
| Mono with System.Math, --llvm -O=float32, fixed heuristics | 29.1 |
| Mono with System.MathF, --llvm -O=float32, fixed heuristics | 29.6 |

Next Steps

The effort required to bring some of these improvements was relatively low. We had some on and off discussions on Slack which led to these improvements. I even managed to spend a few hours one evening to bring System.MathF to Mono.

Aras’ ray tracer code was an ideal subject to study: it was self-contained, and it was a real application rather than a synthetic benchmark. We want to find more software like this that we can use to review the kind of bitcode that we generate and make sure that we are giving LLVM the best data that we can so LLVM can do its job.

We are also considering upgrading the LLVM that we use, to leverage any new optimizations that have been added.

SideBar

The extra precision has some nice side effects. For example, recently, while reading the pull requests for the Godot engine, I saw that they were busily discussing making floating point precision for the engine configurable at compile time (https://github.com/godotengine/godot/pull/17134).

I asked Juan why anyone would want to do this; I thought that games were content with 32-bit floating point operations.

Juan explained to me that while floats work great in general, once you “move away” from the center, say you navigate 100 kilometers out of the center of your game, the math errors start to accumulate and you end up with some interesting visual glitches. There are various mitigation strategies that can be used, and higher precision is just one possibility, one that comes with a performance cost.

Shortly after our conversation, this blog post showed up on my Twitter timeline showing this problem:

http://pharr.org/matt/blog/2018/03/02/rendering-in-camera-space.html

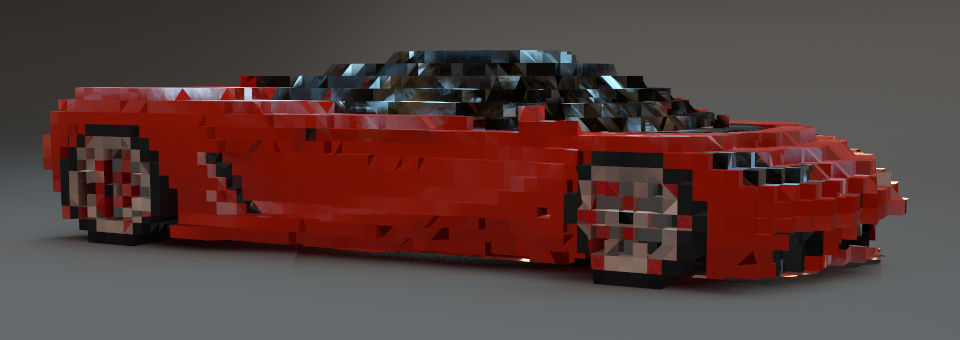

A few images show the problem. First, we have a sports car model from the pbrt-v3-scenes distribution. Both the camera and the scene are near the origin and everything looks good.

(Cool sports car model courtesy Yasutoshi Mori.)

Next, we’ve translated both the camera and the scene 200,000 units from the origin in x, y, and z. We can see that the car model is getting fairly chunky; this is entirely due to insufficient floating-point precision.

(Thanks again to Yasutoshi Mori.)

If we move 5× farther away, to 1 million units from the origin, things really fall apart; the car has become an extremely coarse voxelized approximation of itself—both cool and horrifying at the same time. (Keanu wonders: is Minecraft chunky purely because everything’s rendered really far from the origin?)

(Apologies to Yasutoshi Mori for what has been done to his nice model.)
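
The chunkiness follows from how 32-bit floats are spaced: the gap between two adjacent representable values grows with their magnitude. The following Python sketch measures that gap at the distances mentioned above. The helper `f32_spacing` is a name introduced for this illustration; it reinterprets the float's bit pattern as an integer and assumes a positive, finite input.

```python
import struct


def f32_spacing(x: float) -> float:
    """Distance from x (rounded to float32) to the next larger float32 value.

    Assumes x is positive and finite.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    here = struct.unpack("<f", struct.pack("<I", bits))[0]
    nxt = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return nxt - here


for distance in (1.0, 100_000.0, 200_000.0, 1_000_000.0):
    print(f"{distance:>11,.0f} units from the origin: "
          f"smallest step is {f32_spacing(distance)} units")
```

If one unit is taken to be a meter (an assumption; neither post fixes a unit), a coordinate 100 kilometers from the origin can only move in steps of about 8 millimeters, and at 1 million units the step is over 6 centimeters, which is why vertices snap to a coarse grid.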

Posted on 11 Apr 2018
