Multitenant HA Redis on AWS

source link: http://www.linux-admins.net/2015/09/multitenant-ha-redis-on-aws.html

In this post I'll demonstrate one of the many ways to set up a multitenant and highly available Redis cluster using Amazon Web Services, OpenVZ containers, Open vSwitch with GRE tunneling, HAProxy and keepalived on CentOS 6.5.

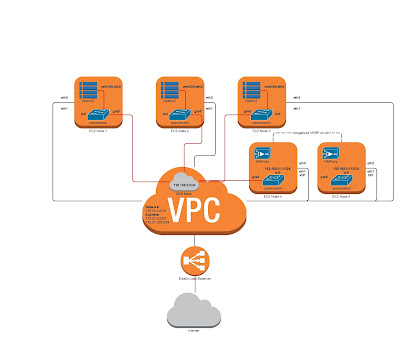

This is what the architecture looks like:

I'll use one VPC, with two Subnets, 3 EC2 instances for the Redis and Sentinel containers, and two more EC2 instances for the HAProxy and keepalived proxy layer.

Each OpenVZ container will be part of an isolated network, the entry point to which is the HAProxy node. This is achieved by using Open vSwitch with a mesh of GRE tunnels.

First create the VPC subnets and the EC2 instances, then on the Redis instances install the OVZ Kernel:

    root@ovz_nodes:~# yum install -y wget vim
    root@ovz_nodes:~# wget -P /etc/yum.repos.d/ http://ftp.openvz.org/openvz.repo
    root@ovz_nodes:~# rpm --import http://ftp.openvz.org/RPM-GPG-Key-OpenVZ
    root@ovz_nodes:~# yum install -y vzkernel
    root@ovz_nodes:~# echo "SELINUX=disabled" > /etc/sysconfig/selinux
    root@ovz_nodes:~# yum install -y vzctl vzquota ploop
    root@ovz_nodes:~# chkconfig iptables off
    root@ovz_nodes:~# reboot

    root@ovz_nodes:~# wget http://www.forwardingplane.net/wp-content/uploads/OVS2.0/OpenvSwitch-2.3.0-RPM.tgz
    root@ovz_nodes:~# tar zxfv OpenvSwitch-2.3.0-RPM.tgz && cd OVS-2.3.0/
    root@ovz_nodes:~# yum localinstall openvswitch-2.3.0-1.x86_64.rpm
    root@ovz_nodes:~# /etc/init.d/openvswitch start
    root@ovz_nodes:~# modprobe ixgbevf
    root@ovz_nodes:~# dracut -f

    root@some_host:~# aws ec2 modify-instance-attribute --instance-id i-07af60d2 --sriov-net-support simple

    root@ovz_nodes:~# ovs-vsctl add-br br0
    root@ovz_nodes:~# ovs-vsctl add-port br0 gre0 -- set interface gre0 type=gre options:remote_ip=172.31.255.76
    root@ovz_nodes:~# ovs-vsctl add-port br0 gre1 -- set interface gre1 type=gre options:remote_ip=172.31.255.133
    root@ovz_nodes:~# ovs-vsctl add-port br0 gre2 -- set interface gre2 type=gre options:remote_ip=172.31.255.202
    root@ovz_nodes:~# ovs-vsctl set bridge br0 stp_enable=true
    root@ovz_nodes:~# ifconfig br0 hw ether 0A:A8:41:41:EE:9F
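The tunnel commands above follow a regular pattern: one GRE port per remote node, plus STP on the bridge. The following is only a sketch that prints those commands for a given list of node IPs so they can be reviewed before anything is run. The function name gre_mesh_cmds and the local address 172.31.255.10 used in the usage comment are hypothetical and not part of the original setup.

```shell
#!/bin/sh
# Print (do not run) the ovs-vsctl commands that build one GRE port per peer.
# Usage: gre_mesh_cmds BRIDGE SELF_IP NODE_IP...
gre_mesh_cmds() {
    bridge=$1
    self_ip=$2
    shift 2
    n=0
    echo "ovs-vsctl add-br $bridge"
    for ip in "$@"; do
        # A node does not tunnel to itself, so skip the local address.
        [ "$ip" = "$self_ip" ] && continue
        echo "ovs-vsctl add-port $bridge gre$n -- set interface gre$n type=gre options:remote_ip=$ip"
        n=$((n + 1))
    done
    # A full mesh contains loops, hence spanning tree on the bridge.
    echo "ovs-vsctl set bridge $bridge stp_enable=true"
}

# Example (172.31.255.10 is a made-up local address):
#   gre_mesh_cmds br0 172.31.255.10 172.31.255.10 172.31.255.76 172.31.255.133 172.31.255.202
```

Piping the printed commands to sh would apply them; keeping generation and execution separate makes it easier to check the peer list on each node first.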

    root@ovz_nodes:~# cat /etc/vz/vznet.conf
    #!/bin/bash
    EXTERNAL_SCRIPT="/usr/sbin/vznetaddbr"

    root@ovz_nodes:~# cat /usr/sbin/vznetaddbr
    #!/bin/sh
    #
    # Add virtual network interfaces (veth's) in a container to a bridge on CT0

    CONFIGFILE=/etc/vz/conf/$VEID.conf
    . $CONFIGFILE

    NETIFLIST=$(printf %s "$NETIF" |tr ';' '\n')

    if [ -z "$NETIFLIST" ]; then
        echo >&2 "According to $CONFIGFILE, CT$VEID has no veth interface configured."
        exit 1
    fi

    for iface in $NETIFLIST; do
        bridge=
        host_ifname=

        for str in $(printf %s "$iface" |tr ',' '\n'); do
            case "$str" in
                bridge=*|host_ifname=*)
                    eval "${str%%=*}=\${str#*=}" ;;
            esac
        done

        [ "$host_ifname" = "$3" ] || continue

        [ -n "$bridge" ] || bridge=vmbr0

        echo "Adding interface $host_ifname to bridge $bridge on CT0 for CT$VEID"
        ip link set dev "$host_ifname" up
        ip addr add 0.0.0.0/0 dev "$host_ifname"
        echo 1 >"/proc/sys/net/ipv4/conf/$host_ifname/proxy_arp"
        echo 1 >"/proc/sys/net/ipv4/conf/$host_ifname/forwarding"
        ovs-vsctl add-port "$bridge" "$host_ifname"

        break
    done

    exit 0

On each host, create a container and install Redis and Sentinel inside of it:

    root@ovz_nodes:~# vzctl create 100 --ostemplate wheezy_template --layout simfs
    root@ovz_nodes:~# vzctl set 100 --save --netif_add eth0,,veth100.eth0,,br0
    root@ovz_nodes:~# vzctl start 100
    root@ovz_nodes:~# vzctl exec 100 "ifconfig eth0 192.168.0.100 netmask 255.255.255.0"
    root@ovz_nodes:~# vzctl exec 100 "route add default gw 192.168.0.111 netmask 255.255.255.0"
    root@ovz_nodes:~# vzctl exec 100 "dpkg --install redis-server-3.0.3.deb"
    root@ovz_nodes:~# vzctl exec 100 "/etc/init.d/redis-server start"
    root@ovz_nodes:~# vzctl exec 100 "redis-sentinel /etc/redis/sentinel.conf"

I created my own Debian Wheezy template using debootstrap, but you can use upstream templates as well.

The Redis and Sentinel configs for the cluster follow:

    root@ovz_nodes:~# vzctl exec 100 "cat /etc/redis/redis.conf"
    daemonize yes
    pidfile "/var/run/redis.pid"
    port 6379
    tcp-backlog 511
    timeout 0
    tcp-keepalive 0
    loglevel verbose
    logfile "/var/log/redis/redis-server.log"
    syslog-enabled yes
    databases 16
    save 900 1
    save 300 10
    save 60 10000
    stop-writes-on-bgsave-error yes
    rdbcompression yes
    rdbchecksum yes
    dbfilename "dump.rdb"
    dir "/tmp"
    slave-serve-stale-data yes
    slave-read-only yes
    repl-diskless-sync no
    repl-diskless-sync-delay 5
    repl-disable-tcp-nodelay no
    slave-priority 100
    maxmemory 256
    appendonly no
    appendfilename "appendonly.aof"
    appendfsync everysec
    no-appendfsync-on-rewrite no
    auto-aof-rewrite-percentage 100
    auto-aof-rewrite-min-size 64mb
    aof-load-truncated yes
    lua-time-limit 5000
    slowlog-log-slower-than 10000
    slowlog-max-len 128
    latency-monitor-threshold 0
    notify-keyspace-events ""
    hash-max-ziplist-entries 512
    hash-max-ziplist-value 64
    list-max-ziplist-entries 512
    list-max-ziplist-value 64
    set-max-intset-entries 512
    zset-max-ziplist-entries 128
    zset-max-ziplist-value 64
    hll-sparse-max-bytes 3000
    activerehashing yes
    client-output-buffer-limit normal 0 0 0
    client-output-buffer-limit slave 256mb 64mb 60
    client-output-buffer-limit pubsub 32mb 8mb 60
    hz 10
    aof-rewrite-incremental-fsync yes

    root@ovz_nodes:~# vzctl exec 100 "cat /etc/redis/sentinel.conf"
    daemonize yes
    port 26379
    dir "/tmp"
    sentinel monitor mymaster 192.168.0.100 6379 2
    sentinel down-after-milliseconds mymaster 5000
    sentinel failover-timeout mymaster 60000
    sentinel config-epoch mymaster 15
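In the Sentinel config above, the master is registered under the name mymaster with a quorum of 2, meaning two Sentinels have to agree that it is unreachable before a failover starts. Any Sentinel can be asked which address it currently considers the master with the sentinel get-master-addr-by-name command. A small sketch that formats the reply follows; the helper name master_addr is hypothetical, and it assumes redis-cli prints the raw two-line reply, as it does when its output is not a terminal.

```shell
#!/bin/sh
# Turn the two-line reply of
#   redis-cli -p 26379 sentinel get-master-addr-by-name mymaster
# (IP on the first line, port on the second) into "ip:port".
master_addr() {
    read -r ip || return 1
    # Accept a final line that lacks a trailing newline.
    read -r port || [ -n "$port" ] || return 1
    # Strip carriage returns in case the reply was captured raw.
    ip=$(printf '%s' "$ip" | tr -d '\r')
    port=$(printf '%s' "$port" | tr -d '\r')
    [ -n "$ip" ] && [ -n "$port" ] || return 1
    printf '%s:%s\n' "$ip" "$port"
}

# Example, run on one of the OpenVZ hosts:
#   vzctl exec 100 "redis-cli -p 26379 sentinel get-master-addr-by-name mymaster" | master_addr
```

Running this before and after a failover test shows whether the Sentinels have moved the master to another container.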

    root@ovz_nodes:~# ovs-vsctl show
    2cc96026-dd26-4d80-aab9-64c3e963cd07
        Bridge "br0"
            Port "br0"
                Interface "br0"
                    type: internal
            Port "gre0"
                Interface "gre0"
                    type: gre
                    options: {remote_ip="172.31.255.133"}
            Port "gre2"
                Interface "gre2"
                    type: gre
                    options: {remote_ip="172.31.255.76"}
            Port "gre1"
                Interface "gre1"
                    type: gre
                    options: {remote_ip="172.31.255.202"}
            Port "veth100.eth0"
                Interface "veth100.eth0"
        ovs_version: "2.3.0"

On the HAProxy nodes, first create the same GRE tunnels to the other 3 EC2 nodes as shown previously. The main difference here is that the bridge interface has an IP that's part of the GRE mesh subnet, which allows these hosts to reach the containers:

    root@haproxy_nodes:~# ifconfig br0 192.168.0.111 netmask 255.255.255.0
    root@haproxy_nodes:~# yum install haproxy keepalived
    root@haproxy_nodes:~# curl -O https://bootstrap.pypa.io/get-pip.py
    root@haproxy_nodes:~# python get-pip.py
    root@haproxy_nodes:~# pip install awscli
    root@haproxy_nodes:~# cat /etc/keepalived/keepalived.conf
    vrrp_instance NODE1 {
        interface eth2

        track_interface {
            eth2
        }

        state MASTER
        virtual_router_id 1
        priority 100
        nopreempt

        unicast_src_ip 172.31.255.236
        unicast_peer {
            172.31.255.133
        }

        virtual_ipaddress {
            172.31.255.240 dev eth2
        }

        notify /usr/local/bin/keepalived.state.sh
    }

    root@haproxy_nodes:~# cat /usr/local/bin/keepalived.state.sh
    #!/bin/bash

    source /root/aws_auth

    TYPE=$1
    NAME=$2
    STATE=$3
    VIP="172.31.255.240"
    SELF_NIC_ID="eni-9dceb9d7"
    PEER_NIC_ID="eni-bf2157f5"

    echo $STATE > /var/run/keepalived.state

    if [ "$STATE" = "MASTER" ]
    then
        logger "Entering MASTER state"
        logger "Unassigning $VIP from peer NIC $PEER_NIC_ID"
        aws ec2 unassign-private-ip-addresses --network-interface-id $PEER_NIC_ID --private-ip-addresses $VIP
        logger "Assigning $VIP to self NIC $SELF_NIC_ID"
        aws ec2 assign-private-ip-addresses --network-interface-id $SELF_NIC_ID --private-ip-addresses $VIP --allow-reassignment
    fi

    if [ "$STATE" = "BACKUP" ]
    then
        logger "Entering BACKUP state"
        logger "Unassigning $VIP from self NIC $SELF_NIC_ID"
        aws ec2 unassign-private-ip-addresses --network-interface-id $SELF_NIC_ID --private-ip-addresses $VIP
        logger "Assigning $VIP to peer NIC $PEER_NIC_ID"
        aws ec2 assign-private-ip-addresses --network-interface-id $PEER_NIC_ID --private-ip-addresses $VIP --allow-reassignment
    fi

    root@haproxy_nodes:~# cat /root/aws_auth
    [ -f /tmp/temp_creds ] && rm -f /tmp/temp_creds

    for AWS_VAR in $(env | grep AWS | cut -d"=" -f1);do unset $AWS_VAR;done

    aws sts get-session-token --output json > /tmp/temp_creds

    export AWS_ACCESS_KEY_ID=$(cat /tmp/temp_creds | grep AccessKeyId | cut -d":" -f2 | sed -e 's/^[ \t]*//' -e 's/[ \t]*$//' | tr -d "\"")
    export AWS_SECRET_ACCESS_KEY=$(cat /tmp/temp_creds | grep SecretAccessKey | cut -d":" -f2 | sed -e 's/^[ \t]*//' -e 's/[ \t]*$//' | tr -d "\"" | sed 's/.$//')
    export AWS_SESSION_TOKEN=$(cat /tmp/temp_creds | grep SessionToken | cut -d":" -f2 | sed -e 's/^[ \t]*//' -e 's/[ \t]*$//' | tr -d "\"" | sed 's/.$//')
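The aws_auth script above picks the three credential fields out of the JSON with grep, cut and sed, which depends on the exact layout of that output. One possible alternative, sketched here on the assumption that the installed awscli supports the global --query and --output text options, is to have the CLI emit the three values as a single tab-separated line and split that instead. The function name load_session_creds is hypothetical.

```shell
#!/bin/sh
# Export AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_SESSION_TOKEN from
# one tab-separated line passed as $1: "key<TAB>secret<TAB>token".
load_session_creds() {
    # cut splits on tabs by default; -s drops input that has no tab at all.
    key=$(printf '%s\n' "$1" | cut -s -f1)
    secret=$(printf '%s\n' "$1" | cut -s -f2)
    token=$(printf '%s\n' "$1" | cut -s -f3)
    # Refuse to export anything unless all three fields are present.
    [ -n "$key" ] && [ -n "$secret" ] && [ -n "$token" ] || return 1
    export AWS_ACCESS_KEY_ID="$key"
    export AWS_SECRET_ACCESS_KEY="$secret"
    export AWS_SESSION_TOKEN="$token"
}

# Example:
#   creds=$(aws sts get-session-token \
#             --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
#             --output text)
#   load_session_creds "$creds" || logger "could not obtain session credentials"
```

This variant also avoids writing the temporary credentials to /tmp/temp_creds. The values are passed as an argument rather than through a pipe so that the exports happen in the calling shell.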

    root@haproxy_nodes:~# cat /etc/haproxy/haproxy.cfg
    global
        log 127.0.0.1 local1
        log-tag haproxy

        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon

        stats socket /var/lib/haproxy/stats

    defaults
        mode tcp
        log global
        retries 3
        timeout client 1m
        timeout server 1m
        timeout check 10s
        timeout connect 1m
        maxconn 3000

    frontend redis *:6379
        mode tcp
        default_backend redis_cluster_1

    backend redis_cluster_1
        mode tcp
        option tcp-check
        tcp-check send PING\r\n
        tcp-check expect string +PONG
        tcp-check send info\ replication\r\n
        tcp-check expect string role:master
        tcp-check send QUIT\r\n
        tcp-check expect string +OK
        server redis1 192.168.0.100:6379 check inter 1s
        server redis2 192.168.0.200:6379 check inter 1s
        server redis3 192.168.0.240:6379 check inter 1s

Keepalived uses unicast messages between Node 4 and Node 5. When the state of a node changes, it triggers the script set by the notify directive (/usr/local/bin/keepalived.state.sh), which re-assigns the private IP from one EC2 instance to the other; keepalived then raises the IP and sends an unsolicited ARP broadcast. For this to work, the AWS CLI needs to be installed (the get-pip.py and pip install awscli steps) and authentication needs to work (the /root/aws_auth script).
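One way to confirm that a state change really moved the VIP is to list the private addresses attached to a network interface and look for 172.31.255.240. A minimal sketch, assuming aws ec2 describe-network-interfaces with a --query filter is available on the HAProxy nodes; the helper name holds_vip is hypothetical, while the ENI ID and VIP are the ones from the notify script.

```shell
#!/bin/sh
# Succeed if the VIP ($1) appears in a whitespace-separated list of
# private IP addresses ($2), fail otherwise.
holds_vip() {
    vip=$1
    # $2 is left unquoted on purpose so it splits on spaces and tabs.
    for addr in $2; do
        [ "$addr" = "$vip" ] && return 0
    done
    return 1
}

# Example, after sourcing /root/aws_auth:
#   ips=$(aws ec2 describe-network-interfaces \
#           --network-interface-ids eni-9dceb9d7 \
#           --query 'NetworkInterfaces[0].PrivateIpAddresses[].PrivateIpAddress' \
#           --output text)
#   holds_vip 172.31.255.240 "$ips" && echo "VIP is on eni-9dceb9d7"
```

Comparing whole addresses instead of grepping avoids a false match on an address that merely contains the VIP as a prefix.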

If Node 4 and 5 have a public IP, you should be able to connect to the current Redis master, as decided by the Sentinels, using the redis-cli command. To test a failover, shut down redis-server on the current master container and watch the Sentinels promote a new master and HAProxy detect the change (through the tcp-check lines in the redis_cluster_1 backend).
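The HAProxy health check marks a server as up only when its INFO replication reply contains role:master. The same test can be reproduced by hand while watching a failover. This is a sketch with a hypothetical helper name, is_master, which reads the reply on stdin; the three addresses in the usage comment are the ones from the HAProxy backend.

```shell
#!/bin/sh
# Succeed only if the INFO replication text on stdin reports role:master,
# the same string the HAProxy tcp-check expects.
is_master() {
    # Redis ends reply lines with CRLF, so drop the carriage returns
    # before matching the whole line.
    tr -d '\r' | grep -qx 'role:master'
}

# Example, run on a HAProxy node (br0 is in the container subnet there):
#   for ip in 192.168.0.100 192.168.0.200 192.168.0.240; do
#       redis-cli -h "$ip" info replication | is_master && echo "$ip is master"
#   done
```

Exactly one address should be reported once the Sentinels have finished promoting a new master.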
