Reduce Rails Memory Usage, Fix Leaks, R14, Save Money on Heroku [4 Tips]

source link: https://pawelurbanek.com/2018/01/15/limit-rails-memory-usage-fix-R14-and-save-money-on-heroku/



Updated Jun 7, 2020



In theory, you can run both a Rails web server and a Sidekiq process on one 512 MB Heroku dyno. For side projects with little traffic, saving $7/month always comes in handy. Unfortunately, when you try to fit two Ruby processes on one dyno, you can run into memory issues, leaks and R14 errors. In this post, I will explain how you can reduce memory usage in Rails apps.

Recently, I read a great article by Bilal Budhani explaining how to run a Sidekiq process alongside Puma on a single Heroku dyno. After applying it to one of my side projects, I started running into those dreaded R14 (memory quota exceeded) errors.

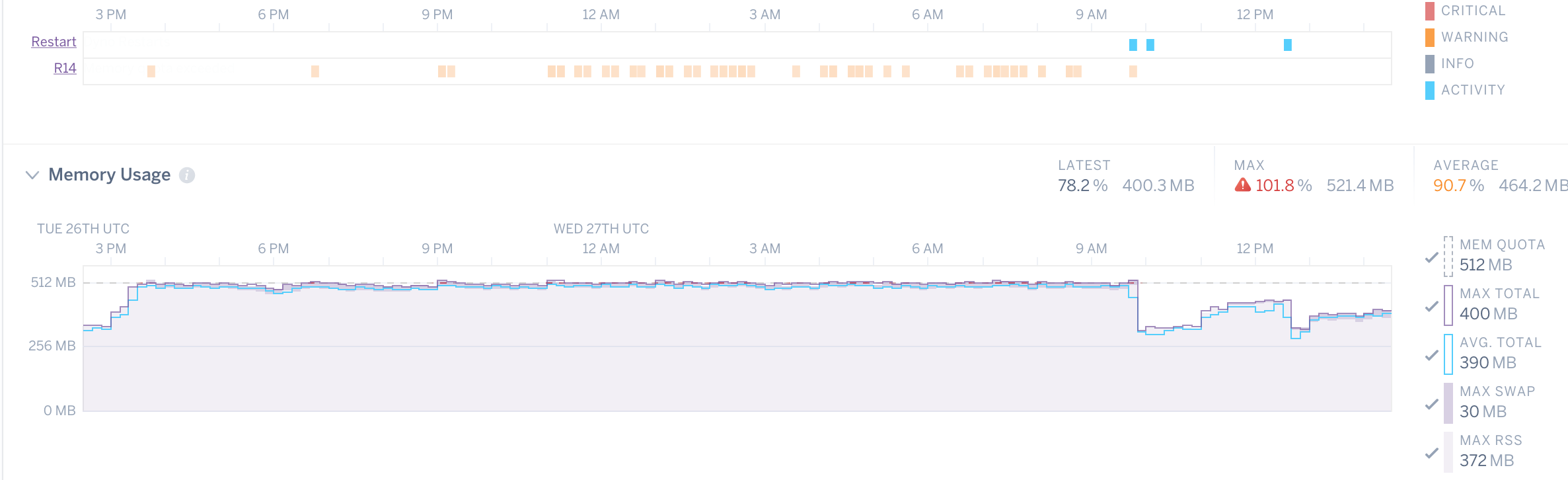

Memory usage spiked followed by a bunch of memory errors and automatic restart

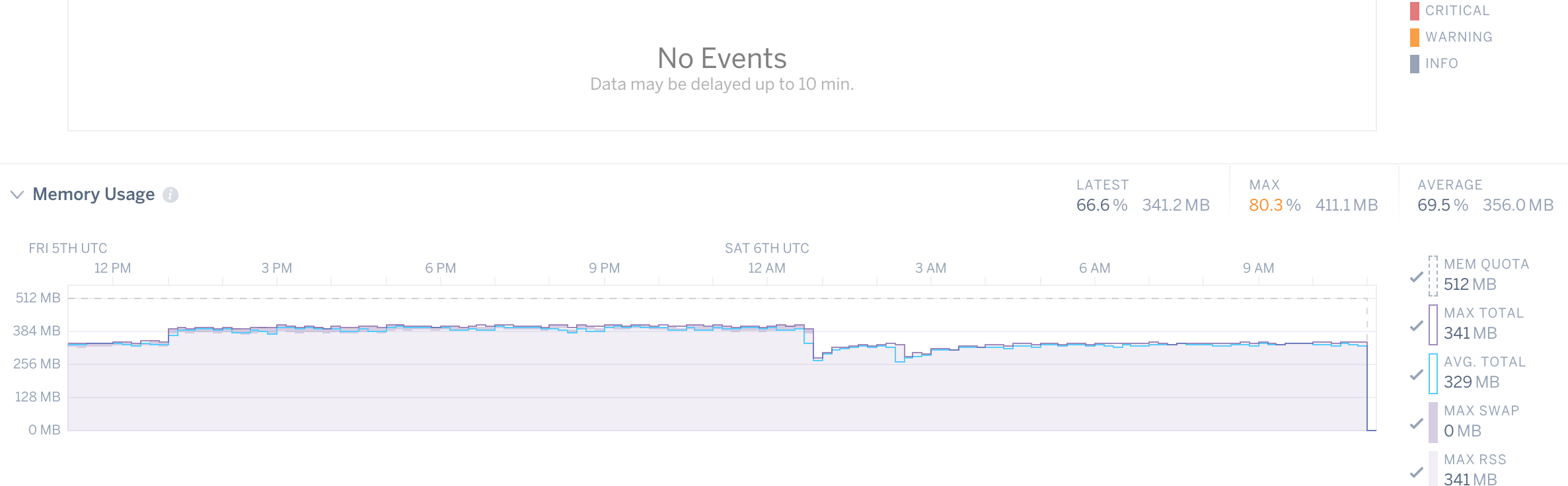

I did some digging using Scout and, after a couple of optimizations memory usage charts started looking like this:

Stable memory usage followed by garbage collection

Here’s what I did:

Put your Gemfile on a diet

“There’s a gem for that.” In the Ruby world, dropping in a gem is usually the “easiest” way to solve a problem. Among its other costs, memory bloat is the one most easily overlooked.

The best way to check how much memory each of your gems consumes is to use derailed_benchmarks. Just add:

```ruby
gem 'derailed_benchmarks', group: :development
```

to your Gemfile and run `bundle exec derailed bundle:mem`.
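derailed does this measurement properly, but the underlying idea is easy to approximate in plain Ruby. The sketch below is not derailed's implementation: `require_cost_kb` is a hypothetical helper that forces a GC and compares `ObjectSpace.memsize_of_all` (the total size of live Ruby objects) before and after a `require`. derailed measures the memory of the whole process instead, so treat these numbers only as a relative comparison, and run the script in a fresh process so the libraries are not already loaded.

```ruby
require 'objspace'

# Rough, in-process stand-in for `derailed bundle:mem` (an approximation,
# not how derailed works): it only counts memory held by live Ruby objects,
# not the process's resident memory.
def require_cost_kb(lib)
  GC.start
  before = ObjectSpace.memsize_of_all
  require lib # adds next to nothing if lib was already loaded
  GC.start
  after = ObjectSpace.memsize_of_all
  (after - before) / 1024
end

%w[net/http erb].each do |lib|
  puts format('%-10s ~%d KB', lib, require_cost_kb(lib))
end
```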

My project powers a Twitter bot profile. I was surprised to find out that the popular twitter gem uses over 13 MB of memory on startup. I first replaced it with its lightweight alternative, grackle (~1 MB), and finally ended up writing custom code that makes an HTTP call to the Twitter API. I also managed to get rid of the koala gem (~2 MB) in the same way.
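The replacement code isn't shown, but dropping a client gem usually comes down to one small method built on `Net::HTTP` from the standard library. A minimal sketch under stated assumptions: a GET request authenticated with a bearer token, returning JSON. `build_api_request` and `fetch_json` are hypothetical helpers, and the URL used below is a placeholder, not a real Twitter endpoint.

```ruby
require 'net/http'
require 'json'
require 'uri'

# Builds a GET request with bearer-token auth (assumed auth scheme).
def build_api_request(url, token)
  uri = URI(url)
  request = Net::HTTP::Get.new(uri)
  request['Authorization'] = "Bearer #{token}"
  request['Accept'] = 'application/json'
  [uri, request]
end

# Sends the request and parses the JSON body; raises on non-2xx responses.
def fetch_json(url, token)
  uri, request = build_api_request(url, token)
  response = Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == 'https') do |http|
    http.request(request)
  end
  raise "HTTP #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body)
end
```

For example, `fetch_json('https://api.example.com/v1/profile', ENV.fetch('API_TOKEN'))` would return the parsed body as a Hash (`API_TOKEN` is a made-up variable name). Posting on behalf of an account, such as tweeting, typically needs OAuth user-context signing, which this sketch leaves out.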

Another quick win was replacing the gon gem (~6 MB) with custom HTML data attributes read from JavaScript.
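The idea behind replacing gon is to render the data into a `data-*` attribute on the server and read it back in JavaScript. A minimal plain-Ruby sketch of the server side; `data_attribute` is a hypothetical helper, and the `user` payload and `app` element id are made up for illustration.

```ruby
require 'erb'
require 'json'

# Serializes the payload to JSON and HTML-escapes it so it is safe
# inside a double-quoted attribute value.
def data_attribute(name, payload)
  %(data-#{name}="#{ERB::Util.html_escape(JSON.generate(payload))}")
end

payload = { id: 1, name: 'Ann' }
puts %(<div id="app" #{data_attribute('user', payload)}></div>)
# => <div id="app" data-user="{&quot;id&quot;:1,&quot;name&quot;:&quot;Ann&quot;}"></div>
```

In a Rails view, the tag helper performs the same serialization and escaping, e.g. `tag.div(id: 'app', data: { user: payload })`, and the frontend reads the value with `JSON.parse(element.dataset.user)`.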

Loading a couple of megabytes of gem code just to simplify a single HTTP call, or to avoid writing a few lines of JavaScript, should definitely be avoided.

Use jemalloc to reduce Rails memory usage

jemalloc is an alternative to the system memory allocator that MRI uses by default. On Heroku, you can add jemalloc using a buildpack. For my app, it resulted in a ~20% decrease in memory usage. Just make sure to test your app with jemalloc thoroughly in a staging environment before deploying it to production.

You can also check out my other blog post for tips on how to use Ruby with jemalloc in Docker and Dokku based Rails apps.
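While testing on staging, it is worth confirming that the process really runs with jemalloc. A heuristic sketch, assuming a Linux host: `jemalloc_loaded?` is a hypothetical helper that checks whether Ruby was linked against jemalloc, whether jemalloc is listed in `LD_PRELOAD`, and whether the library is mapped into the process.

```ruby
require 'rbconfig'

# Heuristic: true if Ruby was built with jemalloc (it shows up in MAINLIBS),
# if jemalloc is preloaded via LD_PRELOAD (a common approach for buildpacks
# and Docker images; check how yours does it), or if the shared library
# appears in this process's memory map (/proc exists only on Linux).
def jemalloc_loaded?
  return true if RbConfig::CONFIG['MAINLIBS'].to_s.include?('jemalloc')
  return true if ENV['LD_PRELOAD'].to_s.include?('jemalloc')

  maps = '/proc/self/maps'
  File.readable?(maps) && File.read(maps).include?('jemalloc')
end

puts "jemalloc loaded: #{jemalloc_loaded?}"
```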

Avoid instantiating ActiveRecord objects

To extract column values from the database, use pluck instead of loading records and calling map on them. pluck selects only the requested columns and returns plain arrays, so no heavyweight ActiveRecord objects are instantiated and the same data takes less memory.

```ruby
# bad: loads 200 full Product records, then copies three attributes from each
products = Product.last(200).map do |pr|
  [pr.id, pr.name, pr.price]
end

# good: pluck must be called on a relation; Product.last(200) already returns
# an Array of loaded records, so pluck on it would still instantiate them.
# (Rows come back newest first here, unlike last(200).)
products = Product.order(id: :desc).limit(200).pluck(:id, :name, :price)
```

If you must use full-blown AR objects, always limit how many of them are instantiated at a given time. If you have to call a method on many records, use find_each instead of each so they are loaded in batches rather than all at once. find_each uses a batch size of 1,000 by default; consider lowering it when each record pulls in more objects, e.g., through associations.
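find_each keeps memory flat because it never loads the whole table: it fetches one batch ordered by primary key, yields it, then asks for the next batch after the last id it saw. Below is a plain-Ruby model of that loop, not ActiveRecord's code; an in-memory array stands in for the products table, and `Row`, `TABLE`, `fetch_batch` and `each_in_batches` are illustrative names.

```ruby
# In-memory stand-in for the products table.
Row = Struct.new(:id, :price)
TABLE = (1..950).map { |i| Row.new(i, i * 0.5) }.freeze

# Models: SELECT * FROM products WHERE id > ? ORDER BY id LIMIT ?
def fetch_batch(after_id, limit)
  TABLE.select { |row| row.id > after_id }.first(limit)
end

# Yields every row, but the caller only ever holds one batch at a time.
# Returns the number of batches fetched.
def each_in_batches(batch_size: 1000)
  last_id = 0
  batches = 0
  loop do
    batch = fetch_batch(last_id, batch_size)
    break if batch.empty?

    batches += 1
    batch.each { |row| yield row }
    last_id = batch.last.id # resume after the last primary key seen
    break if batch.size < batch_size
  end
  batches
end

seen = []
batches = each_in_batches(batch_size: 200) { |row| seen << row.id }
puts "#{seen.size} rows in #{batches} batches" # => 950 rows in 5 batches
```

In the app itself, all of that is what a single find_each call does for you: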

```ruby
Product.find_each(batch_size: 200) do |pr|
  pr.refresh_price!
end
```

Limit concurrency and workers

For a side project with limited traffic, you probably don’t need a lot of throughput anyway. You can limit memory usage by reducing the number of Puma workers and threads and lowering Sidekiq’s concurrency. Here’s my config/puma.rb file:

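```ruby
threads_count = 1
threads threads_count, threads_count # min and max threads per worker

port ENV.fetch("PORT") { 3000 }
environment ENV.fetch("RAILS_ENV") { "production" }

workers 1 # a single forked worker process
preload_app!

on_worker_boot do
  # Start Sidekiq next to the Puma worker (-t 1: 1-second shutdown timeout)
  @sidekiq_pid ||= spawn('bundle exec sidekiq -t 1')
  ActiveRecord::Base.establish_connection if defined?(ActiveRecord)
end

on_restart do
  # Close all pooled Redis connections; Sidekiq.redis requires a block,
  # so the connection pool itself is shut down here
  Sidekiq.redis_pool.shutdown { |conn| conn.close }
end

plugin :tmp_restart
```

and config/sidekiq.yml: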

```yaml
---
:concurrency: 1
:queues:
  - urgent
  - default
```

Although we specify 1 as the maximum number of threads, Puma can still spawn up to 7 threads. With these minimal settings, Smart Wishlist is still able to process around 100k Sidekiq jobs daily and serve both a React frontend and a mobile JSON API.
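To check that lower worker and thread counts actually pay off, it helps to read the numbers from inside the process. A small Linux-only sketch (Heroku dynos run Linux) that reads resident memory from `/proc/self/status` and counts live Ruby threads; `process_stats` is a hypothetical helper, and it reports on whichever process calls it, e.g. from a log line in an initializer or a Sidekiq job.

```ruby
# Returns resident memory in MB (nil where /proc is unavailable)
# and the number of live Ruby threads in the current process.
def process_stats
  rss_kb = nil
  status = '/proc/self/status'
  if File.readable?(status)
    line = File.foreach(status).find { |l| l.start_with?('VmRSS:') }
    rss_kb = line.split[1].to_i if line # "VmRSS:   123456 kB"
  end
  { rss_mb: rss_kb && (rss_kb / 1024.0).round(1), ruby_threads: Thread.list.size }
end

p process_stats
```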

Freeze string literals

Since Ruby 2.3, you can add a magic comment at the top of a file to automatically freeze all the string literals in it:

```ruby
# frozen_string_literal: true
```

When it is present, string literals behave more like symbols: identical literals share a single frozen object instead of allocating a new String every time the code runs.
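A small sketch of what the magic comment changes, using hypothetical method names. It also shows the unary plus operator (`String#+@`, available since Ruby 2.3), the usual way to get a mutable copy when you really need one.

```ruby
# frozen_string_literal: true
# (The magic comment only takes effect at the very top of the file.)

def status_label
  'active' # frozen literal: the same String object on every call
end

def mutable_label
  +'active' # unary plus returns an unfrozen copy of a frozen string
end

puts status_label.frozen?              # => true
puts status_label.equal?(status_label) # => true, no new allocation per call
puts mutable_label.frozen?             # => false

label = mutable_label
label << ' (trial)' # fine: this copy is mutable
puts label                             # => active (trial)
```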

Just be careful, because it rules out in-place modification of those literals, e.g.:

```ruby
"foo".concat("bar") # => FrozenError (can't modify frozen String)
```

Optimize JSON parsing

All those Sidekiq jobs are needed to download up-to-date prices from the iTunes API and send push notifications about discounts. This means there’s quite a lot of JSON parsing going on. There is a simple fix that can help optimize both memory usage and performance in such cases:

Gemfile

```ruby
gem 'oj'
```

config/initializers/oj.rb

```ruby
require 'oj'

Oj.optimize_rails
```

The oj gem offers a Rails compatibility API, which hooks into JSON.parse calls, improving their performance and memory usage.
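How much the swap helps depends on your payloads, so it is worth measuring. A sketch that counts allocated objects with `GC.stat(:total_allocated_objects)` around a `JSON.parse` call; `allocations_for` is a hypothetical helper and the payload is made up. Run it once as is, then again after loading Oj (e.g. via `Oj.mimic_JSON`, which is meant to route `JSON.parse` through Oj) and compare the counts.

```ruby
require 'json'

# Counts Ruby objects allocated while the block runs.
def allocations_for
  before = GC.stat(:total_allocated_objects)
  yield
  GC.stat(:total_allocated_objects) - before
end

# Made-up payload with a few hundred small records.
payload = JSON.generate({ 'results' => Array.new(200) { |i| { 'id' => i, 'price' => 0.99 } } })

puts "JSON.parse allocated #{allocations_for { JSON.parse(payload) }} objects"
```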

Summary

Working in constrained environments is a great way to flex your programming muscles and discover new optimization techniques. In theory, you could always solve memory issues by throwing more money at your servers, but why not keep those $7 instead?

Check out my other blog post for more tips on how to improve performance and reduce memory usage of Rails apps.
