"We Didn't Ask that Question" | Akendi UX Blog

source link: https://www.akendi.com/blog/we-didnt-ask-that-question/


Posted on: 2 June 2020

“We Didn’t Ask that Question”

As a user researcher, part of the work is communicating the results of our research. To get to that moment, we engage with users and customers, analyse the data, find themes and trends, and synthesize all of that into a set of insights.

Then, during the final presentation, you are asked that dreaded question: “Ah, all this is great stuff... very well done... so I wondered... did anyone say something about...?” What follows is a question on a topic the audience member is specifically interested in. And regularly the answer is “we didn’t ask that question” or “we didn’t find anything relating to that point”.

There is no escaping it: you feel somehow that you have let that person down. Even though you uncovered many other items of value, many insights that would help the project, the product, even the company, it still feels as if we missed an opportunity to get more, to find that specific nugget that could have been discovered if we’d only dug a little deeper. Why is that? I’d like to explore this here.

Uncovering all insights

Let’s go back to our audience member, the stakeholder who asked that question. Where did this question come from? Often these questions seem to come from left field. When you think back, you realise this topic never came up during the preparation of the study. On top of this, sometimes this stakeholder was even in the room when you reviewed the research approach, the interview questions, the survey questions. So why not say it then?

One answer to this is that we didn’t know what we were looking for at the time. User research is often done in an exploratory phase of the project, at a time when we don’t have the answers yet but are on a path to discovery. Now we have arrived at a point where we do have insights, we have some answers and have discovered things. Starting from there and reasoning forward is inevitably going to lead to better questions and a better focus, but also to new unanswered questions that come up during a presentation.

I’m ok with the above reason, most of the time. But there are times when I wonder if we could have done more and thought ahead about where our stakeholders’ minds are when they see the results.

Have we taken the complete opportunity to uncover insights from our users/customers and explored ‘all’ they had to say? I think the answer is not ‘yes’ as often as I’d like.

Research analysis

The feeling of a missed opportunity is in my view rooted in the way we do our analysis. And data collection. But let’s start with the analysis.

Take an in-person user interview or contextual inquiry as the method. When the interview guide is created, the questions in it are often a mix of open and closed questions, prompts to tell stories, and deeper exploration of a couple of areas.

After you’ve done your research, the result is a rich set of somewhat unstructured data that we use as the input to our analysis. We look for examples of particular pain points, task flows, and mental models that stand out, but on the whole, we aim to identify themes from the data. The identification of these themes follows the idea of grounded theory: we see themes in what our users say, we combine them into bigger themes or new themes, and we iteratively build out our understanding of what is happening. The result is synthesized in an affinity diagram, empathy map, journey map, or sometimes just a set of key insights.

And this is what is presented to the audience. The themes that emerged from the data. But that data comes in through a lens. We focus on the product / service experience. When we talk about pain points, we talk about experience pain points. And what we’re after most of the time is understanding how our audience uses/buys products and services so we can design the right experience or improve the right pieces of that experience.

Data collection structures

The challenge is that UX/CX is not the only group that talks about the experience; so do tech, content and the business. When they hear you talk about experience insights, not surprisingly, they immediately connect them to their own world and frame the insights according to their own challenges in business, content or technology. And that is not necessarily the same research direction as that of the user researcher.

If this is the case, how about structuring the analysis or, better still, the data gathering more along these lines? One objection is that we don’t know yet what we don’t know, so we have to stay open. Yes, I get that. I’d argue it doesn’t preclude us from being aware of these other experiences and making the most of the sometimes rare opportunity to talk to users and customers. What if we are aware of the kind of data we are collecting, and we inject probing questions that relate to the other experience types?

And that is what I feel is missing. Are we maximizing the research opportunity here? Do we discover as much insight as we can from the people we talk to?

What if we went in with a loose structure of what we’re after, one that keeps the exploratory nature? It would extend the prevailing focus of insight gathering beyond what people do (tasks, context) and feel (emotion) as they interact with products and services.

Uncovering B-DCT

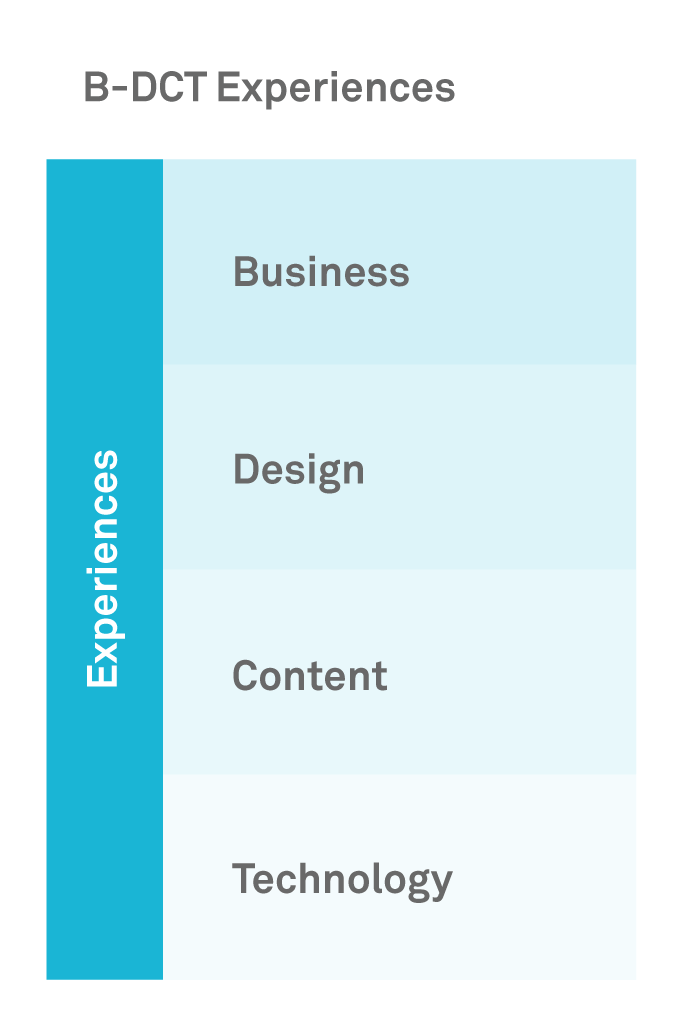

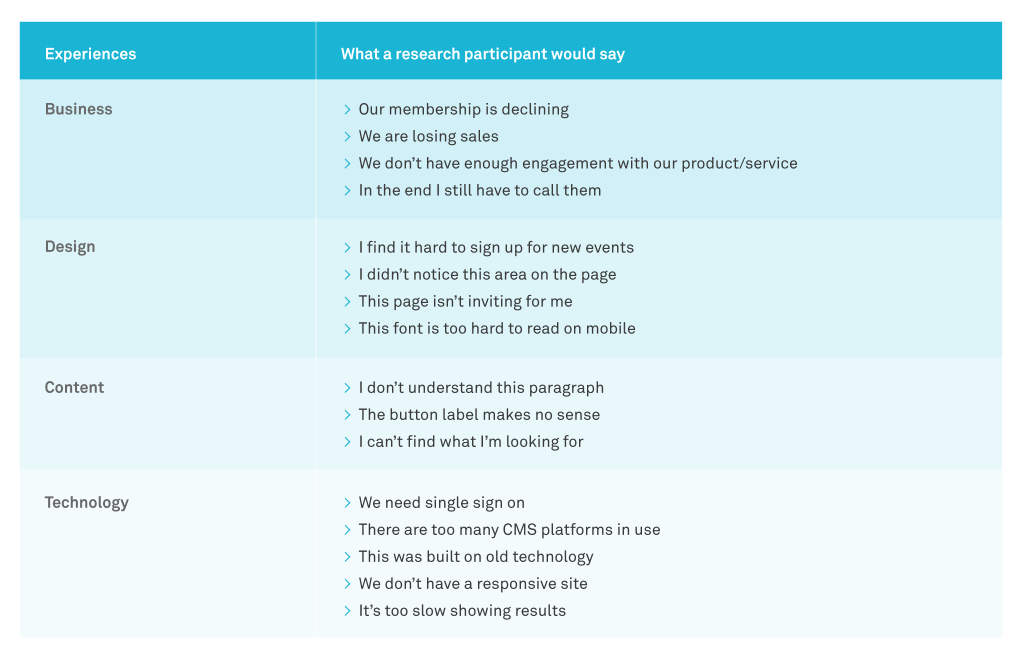

Gathering experience insights about other areas of the business during the research starts with identifying these key areas: Business, Design, Content and Technology.

Each area in the framework touches an Experience layer, which you see on the right, so users and customers will talk about their different experiences while touching on elements at these levels.

If a researcher is aware of the levels, two things follow. First, what the user/customer is saying can be attributed to one of the types. Second, once you know the type, you can explore and probe further from there into other areas, if that is something the user can speak to.

You can ask why the user thinks this is an issue, or let them give examples that will lead to pain points on other layers and indicate the potential root cause of that pain point.

Here are a few examples:

Now what?

For your next research project, consider the B-DCT framework in either the question/interview guide or as an informal structure you keep in the back of your mind as the data gathering is unfolding.

The B-DCT framework would also give you that initial analysis structure to more rapidly arrive at the themes in your user and stakeholder research data. It fits an exploratory analysis phase and the subsequent synthesis of insights captured in, for example, a journey map.

Good luck with your next research project!

Tedde infuses Akendi, its services and methodology with his strong belief that customer and user experience design must go beyond a singular product interface, service or content. It should become deeply rooted in an organization’s research and design processes and culture, and ultimately be reflected in its products and services.

A graduate in Cognitive Ergonomics of Radboud University in the Netherlands, Tedde has more than two decades of experience in experience research, usability testing and experience design in both public and private sectors.

Prior to founding Akendi, Tedde was a founding partner of Maskery & Associates. He has worked for companies including Nortel Networks, KPMG Management Consulting and Philips Design.
