Detailed Steps for a Multi-Node K8s Deployment, with UI Setup - 程亚庆's blog

source link: https://blog.51cto.com/14469918/2469936


K8s multi-node deployment

Environment to prepare:

Six CentOS 7 machines:

192.168.1.11 master01

192.168.1.12 node1

192.168.1.13 node2

192.168.1.14 master02

192.168.1.15 lb1

192.168.1.16 lb2

VIP:192.168.1.100

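Lab steps:

1: Self-sign the etcd certificates

2: Deploy etcd

3: Install Docker on the nodes

4: Deploy Flannel (write the subnet into etcd first)

---------master----------

5: Self-sign the APIServer certificates

6: Deploy the APIServer component (token.csv)

7: Deploy the controller-manager (pointing it at the apiserver certificates) and scheduler components

----------node----------

8: Generate the kubeconfig files (bootstrap.kubeconfig and kube-proxy.kubeconfig)

9: Deploy the kubelet component

10: Deploy the kube-proxy component

----------join the cluster----------

11: Run kubectl get csr && kubectl certificate approve to issue the node certificates and let the nodes join the cluster

12: Add another node

13: Check the nodes with kubectl get node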

I. Building the etcd cluster

1. On master01, self-sign the etcd certificates

[root@master ~]# mkdir k8s

[root@master ~]# cd k8s/

[root@master k8s]# mkdir etcd-cert

[root@master k8s]# mv etcd-cert.sh etcd-cert

[root@master k8s]# ls

etcd-cert etcd.sh

[root@master k8s]# vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

[root@master k8s]# bash cfssl.sh

[root@master k8s]# ls /usr/local/bin/

cfssl cfssl-certinfo cfssljson

[root@master k8s]# cd etcd-cert/

`Define the CA signing configuration`

cat > ca-config.json <<EOF

{

"signing":{

"default":{

"expiry":"87600h"

},

"profiles":{

"www":{

"expiry":"87600h",

"usages":[

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

`Define the CA certificate signing request`

cat > ca-csr.json <<EOF

{

"CN":"etcd CA",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"Nanjing",

"ST":"Nanjing"

}

]

}

EOF

`Generate the CA certificate (produces ca-key.pem and ca.pem)`

[root@master etcd-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/01/15 11:26:22 [INFO] generating a new CA key and certificate from CSR

2020/01/15 11:26:22 [INFO] generate received request

2020/01/15 11:26:22 [INFO] received CSR

2020/01/15 11:26:22 [INFO] generating key: rsa-2048

2020/01/15 11:26:23 [INFO] encoded CSR

2020/01/15 11:26:23 [INFO] signed certificate with serial number 58994014244974115135502281772101176509863440005

`List the hosts used for authenticated communication between the three etcd nodes`

cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"192.168.1.11",

"192.168.1.12",

"192.168.1.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing"

}

]

}

EOF
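When the etcd membership changes, the hosts list above has to be edited by hand. The following is a minimal sketch, assuming bash and the same key and names settings as the file above. It prints an equivalent server-csr.json from a list of member IPs. `make_etcd_server_csr` is a hypothetical helper; it is not part of cfssl or the author's etcd-cert.sh.

```bash
# Hypothetical helper (not part of cfssl or etcd-cert.sh): print a
# server-csr.json whose "hosts" array lists every etcd member IP given.
make_etcd_server_csr() {
  local hosts="" ip
  for ip in "$@"; do
    # ${hosts:+, } adds a ", " separator only once hosts is non-empty.
    hosts="${hosts}${hosts:+, }\"${ip}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": {"algo": "rsa", "size": 2048},
  "names": [{"C": "CN", "L": "NanJing", "ST": "NanJing"}]
}
EOF
}
```

Usage for this lab: `make_etcd_server_csr 192.168.1.11 192.168.1.12 192.168.1.13 > server-csr.json`.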

`Generate the etcd server certificate (server-key.pem and server.pem)`

[root@master etcd-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

2020/01/15 11:28:07 [INFO] generate received request

2020/01/15 11:28:07 [INFO] received CSR

2020/01/15 11:28:07 [INFO] generating key: rsa-2048

2020/01/15 11:28:07 [INFO] encoded CSR

2020/01/15 11:28:07 [INFO] signed certificate with serial number 153451631889598523484764759860297996765909979890

2020/01/15 11:28:07 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

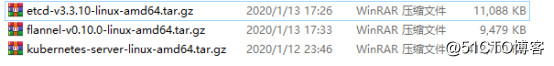

specifically, section 10.2.3 ("Information Requirements").上传以下三个压缩包到/root/k8s目录:

[root@master k8s]# ls

cfssl.sh etcd.sh flannel-v0.10.0-linux-amd64.tar.gz

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@master k8s]# ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

[root@master k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

[root@master k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

`Copy the certificates`

[root@master k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

`This command blocks, waiting for the other members to join`

[root@master k8s]# bash etcd.sh etcd01 192.168.1.11 etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

2. Open a second terminal on master01

[root@master ~]# ps -ef | grep etcd

root 3479 1780 0 11:48 pts/0 00:00:00 bash etcd.sh etcd01 192.168.1.11 etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380

root 3530 3479 0 11:48 pts/0 00:00:00 systemctl restart etcd

root 3540 1 1 11:48 ? 00:00:00 /opt/etcd/bin/etcd

--name=etcd01 --data-dir=/var/lib/etcd/default.etcd

--listen-peer-urls=https://192.168.1.11:2380

--listen-client-urls=https://192.168.1.11:2379,http://127.0.0.1:2379

--advertise-client-urls=https://192.168.1.11:2379

--initial-advertise-peer-urls=https://192.168.1.11:2380

--initial-cluster=etcd01=https://192.168.1.11:2380,etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380

--initial-cluster-token=etcd-cluster

--initial-cluster-state=new

--cert-file=/opt/etcd/ssl/server.pem

--key-file=/opt/etcd/ssl/server-key.pem

--peer-cert-file=/opt/etcd/ssl/server.pem

--peer-key-file=/opt/etcd/ssl/server-key.pem

--trusted-ca-file=/opt/etcd/ssl/ca.pem

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

root 3623 3562 0 11:49 pts/1 00:00:00 grep --color=auto etcd

`Copy /opt/etcd (binaries, configuration and certificates) to the two nodes`

[root@master k8s]# scp -r /opt/etcd/ root@192.168.1.12:/opt/

root@192.168.1.12's password:

etcd 100% 518 426.8KB/s 00:00

etcd 100% 18MB 105.0MB/s 00:00

etcdctl 100% 15MB 108.2MB/s 00:00

ca-key.pem 100% 1679 1.4MB/s 00:00

ca.pem 100% 1265 396.1KB/s 00:00

server-key.pem 100% 1675 1.0MB/s 00:00

server.pem 100% 1338 525.6KB/s 00:00

[root@master k8s]# scp -r /opt/etcd/ root@192.168.1.13:/opt/

root@192.168.1.13's password:

etcd 100% 518 816.5KB/s 00:00

etcd 100% 18MB 87.4MB/s 00:00

etcdctl 100% 15MB 108.6MB/s 00:00

ca-key.pem 100% 1679 1.3MB/s 00:00

ca.pem 100% 1265 411.8KB/s 00:00

server-key.pem 100% 1675 1.4MB/s 00:00

server.pem 100% 1338 639.5KB/s 00:00

Copy the etcd systemd unit file to the two nodes

[root@master k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.1.12:/usr/lib/systemd/system/

root@192.168.1.12's password:

etcd.service 100% 923 283.4KB/s 00:00

[root@master k8s]# scp /usr/lib/systemd/system/etcd.service root@192.168.1.13:/usr/lib/systemd/system/

root@192.168.1.13's password:

etcd.service 100% 923 347.7KB/s 00:00

3. On each of the two nodes, edit the etcd configuration file and start the etcd service

node1

[root@node1 ~]# systemctl stop firewalld.service

[root@node1 ~]# setenforce 0

[root@node1 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.12:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.12:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.11:2380,etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@node1 ~]# systemctl start etcd

[root@node1 ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2020-01-15 17:53:24 CST; 5s ago

# The state is active (running)

node2

[root@node2 ~]# systemctl stop firewalld.service

[root@node2 ~]# setenforce 0

[root@node2 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.13:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.13:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.11:2380,etcd02=https://192.168.1.12:2380,etcd03=https://192.168.1.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
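The two node files differ only in the member name and IP. The following is a minimal sketch that renders such a file, assuming bash and the same ports (2379/2380) and data directory as above. `render_etcd_cfg` is a hypothetical helper; it is not part of etcd or the author's etcd.sh. The master's own file, written by etcd.sh, also listens on http://127.0.0.1:2379 (see the ps output above). This sketch does not add that listener.

```bash
# Hypothetical helper: print the /opt/etcd/cfg/etcd file for one member.
# $1 = this member's name, $2 = this member's IP,
# remaining args = every member as name=ip (including this one).
render_etcd_cfg() {
  local name="$1" ip="$2" cluster="" member
  shift 2
  for member in "$@"; do
    # Turn "etcd01=192.168.1.11" into "etcd01=https://192.168.1.11:2380".
    cluster="${cluster}${cluster:+,}${member%%=*}=https://${member#*=}:2380"
  done
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For node2, you would run `render_etcd_cfg etcd03 192.168.1.13 etcd01=192.168.1.11 etcd02=192.168.1.12 etcd03=192.168.1.13 > /opt/etcd/cfg/etcd` before starting etcd.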

[root@node2 ~]# systemctl start etcd

[root@node2 ~]# systemctl status etcd

● etcd.service - Etcd Server

Loaded: loaded (/usr/lib/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2020-01-15 17:53:24 CST; 5s ago

# The state is active (running)

4. Verify the cluster health on master01

[root@master k8s]# cd etcd-cert/

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379" cluster-health

member 9104d301e3b6da41 is healthy: got healthy result from https://192.168.1.11:2379

member 92947d71c72a884e is healthy: got healthy result from https://192.168.1.12:2379

member b2a6d67e1bc8054b is healthy: got healthy result from https://192.168.1.13:2379

cluster is healthy

II. Deploy Docker on the two nodes

`Install the dependency packages`

[root@node1 ~]# yum install yum-utils device-mapper-persistent-data lvm2 -y

`Add the Aliyun docker-ce repository`

[root@node1 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

`Install docker-ce`

[root@node1 ~]# yum install -y docker-ce

`Start Docker and enable it at boot`

[root@node1 ~]# systemctl start docker.service

[root@node1 ~]# systemctl enable docker.service

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

`Check that the Docker processes are running`

[root@node1 ~]# ps aux | grep docker

root 5551 0.1 3.6 565460 68652 ? Ssl 09:13 0:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

root 5759 0.0 0.0 112676 984 pts/1 R+ 09:16 0:00 grep --color=auto docker

`Configure a registry mirror to speed up image pulls`

[root@node1 ~]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://w1ogxqvl.mirror.aliyuncs.com"]

}

EOF

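# Network tuning: enable IPv4 forwarding (sysctl -p reads /etc/sysctl.conf by default; >> appends instead of overwriting the file)

echo 'net.ipv4.ip_forward=1' >> /etc/sysctl.conf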

sysctl -p

[root@node1 ~]# service network restart

Restarting network (via systemctl): [ 确定 ]

[root@node1 ~]# systemctl restart docker

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker

III. Install the flannel component

1. On the master, write the subnet range to be allocated into etcd for flannel to use

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

Check the value that was written

[root@master etcd-cert]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379" get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
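Every etcdctl call above repeats the same TLS flags and endpoint list. The following sketch defines two hypothetical shell helpers to avoid that. It assumes the v2-API flags used in this article (etcdctl from etcd 3.3 defaults to the v2 API). It also assumes the commands run from /root/k8s/etcd-cert, where the .pem files live.

```bash
# Hypothetical helper: build "https://ip1:2379,https://ip2:2379,..." from IPs.
etcd_endpoints() {
  local out="" ip
  for ip in "$@"; do
    out="${out}${out:+,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

# Hypothetical wrapper: run etcdctl (v2 API flags, as above) against all
# three members. Expects ca.pem, server.pem and server-key.pem in the
# current directory, e.g. /root/k8s/etcd-cert.
etcdctl_k8s() {
  /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem \
    --key-file=server-key.pem \
    --endpoints="$(etcd_endpoints 192.168.1.11 192.168.1.12 192.168.1.13)" "$@"
}
```

Usage: `etcdctl_k8s cluster-health` or `etcdctl_k8s get /coreos.com/network/config`. The output of `etcd_endpoints` can also serve as the argument to flannel.sh.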

Copy the flannel package to all nodes (flannel only needs to be deployed on the nodes)

[root@master etcd-cert]# cd ../

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.1.12:/root

root@192.168.1.12's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 55.6MB/s 00:00

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@192.168.1.13:/root

root@192.168.1.13's password:

flannel-v0.10.0-linux-amd64.tar.gz 100% 9479KB 69.5MB/s 00:00

2. Configure flannel on each of the two nodes

[root@node1 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

`Create the Kubernetes working directories`

[root@node1 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node1 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

[root@node1 ~]# vim flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

`Start the flannel network`

[root@node1 ~]# bash flannel.sh https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

`Configure Docker to use the flannel network`

[root@node1 ~]# vim /usr/lib/systemd/system/docker.service

# Make the following changes in the [Service] section (the line numbers and trailing # notes are annotations only; do not type them into the unit file)

9 [Service]

10 Type=notify

11 # the default is not to use systemd for cgroups because the delegate issues still

12 # exists and systemd currently does not support the cgroup feature set required

13 # for containers run by docker

14 EnvironmentFile=/run/flannel/subnet.env # add this line after line 13

15 ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock # on line 15, add $DOCKER_NETWORK_OPTIONS before -H

16 ExecReload=/bin/kill -s HUP $MAINPID

17 TimeoutSec=0

18 RestartSec=2

19 Restart=always

# When finished, press Esc to leave insert mode, then type :wq to save and quit
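You can also script the two docker.service edits above. The following is a minimal sketch, assuming GNU sed (as on CentOS 7) and that ExecStart begins with `/usr/bin/dockerd -H` as shown. `patch_docker_unit` is a hypothetical name. The function is written so that running it twice changes nothing.

```bash
# Hypothetical helper: apply the two docker.service edits with GNU sed.
# $1 = path to the unit file.
patch_docker_unit() {
  local unit="$1"
  # Insert the EnvironmentFile line just before ExecStart, unless present.
  if ! grep -q '^EnvironmentFile=/run/flannel/subnet.env' "$unit"; then
    sed -i '/^ExecStart=\/usr\/bin\/dockerd/i EnvironmentFile=/run/flannel/subnet.env' "$unit"
  fi
  # Put $DOCKER_NETWORK_OPTIONS before -H; no match (so no-op) once it is there.
  sed -i -E 's#^(ExecStart=/usr/bin/dockerd) -H#\1 $DOCKER_NETWORK_OPTIONS -H#' "$unit"
}
```

Usage: `patch_docker_unit /usr/lib/systemd/system/docker.service`, followed by `systemctl daemon-reload` and a Docker restart, as in the steps below.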

[root@node1 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.32.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.32.1/24 --ip-masq=false --mtu=1450"

# --bip sets the subnet that docker0 uses when Docker starts
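To confirm which subnet Docker should pick up, you can read the bip value straight from subnet.env. Here is a small sketch using a hypothetical helper, `flannel_bip`, which assumes the file format shown above:

```bash
# Hypothetical helper: print the docker0 address/prefix flannel assigned,
# read from a subnet.env file such as /run/flannel/subnet.env.
flannel_bip() {
  # Source inside a subshell so the variables do not leak into the caller.
  ( . "$1" && printf '%s\n' "${DOCKER_OPT_BIP#--bip=}" )
}
```

After Docker is restarted, `flannel_bip /run/flannel/subnet.env` should print the same address that `ip -4 addr show docker0` reports (172.17.32.1/24 in this example).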

`Restart the Docker service`

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker

`Check the flannel network interface`

[root@node1 ~]# ifconfig

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.32.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::344b:13ff:fecb:1e2d prefixlen 64 scopeid 0x20<link>

ether 36:4b:13:cb:1e:2d txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 27 overruns 0 carrier 0 collisions 0

IV. Deploy the master components

1. On the master, generate the apiserver certificates (upload master.zip to the master node first)

[root@master k8s]# unzip master.zip

Archive: master.zip

inflating: apiserver.sh

inflating: controller-manager.sh

inflating: scheduler.sh

[root@master k8s]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

`Create a directory for the apiserver self-signed certificates`

[root@master k8s]# mkdir k8s-cert

[root@master k8s]# cd k8s-cert/

[root@master k8s-cert]# ls # k8s-cert.sh must first be uploaded to this directory

k8s-cert.sh

`Create the CA configuration`

[root@master k8s-cert]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@master k8s-cert]# cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Nanjing",

"ST": "Nanjing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

`Sign the certificate (generates ca.pem and ca-key.pem)`

[root@master k8s-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/02/05 10:15:09 [INFO] generating a new CA key and certificate from CSR

2020/02/05 10:15:09 [INFO] generate received request

2020/02/05 10:15:09 [INFO] received CSR

2020/02/05 10:15:09 [INFO] generating key: rsa-2048

2020/02/05 10:15:09 [INFO] encoded CSR

2020/02/05 10:15:09 [INFO] signed certificate with serial number 154087341948227448402053985122760482002707860296

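`Create the apiserver certificate request`

JSON does not allow comments, so the addresses are explained here instead of inline. 10.0.0.1 is the ClusterIP of the kubernetes Service (the first address of --service-cluster-ip-range 10.0.0.0/24). 192.168.1.11 is master01 and 192.168.1.14 is master02. 192.168.1.100 is the VIP, and 192.168.1.15 and 192.168.1.16 are the two load balancers.

[root@master k8s-cert]# cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"192.168.1.11",

"192.168.1.14",

"192.168.1.100",

"192.168.1.15",

"192.168.1.16",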

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF
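Clients check the apiserver certificate against the address they connect to. Every master IP, the VIP and the load balancer IPs therefore need to appear in hosts. Here is a sketch of a quick check using a hypothetical helper, `csr_missing_hosts`. It is a plain text search, not a JSON parser:

```bash
# Hypothetical check: print every address from the arguments that does not
# appear as a quoted entry in the given CSR file (e.g. server-csr.json).
csr_missing_hosts() {
  local csr="$1" ip
  shift
  for ip in "$@"; do
    # Match the quoted form so "192.168.1.1" does not match "192.168.1.11".
    grep -qF "\"${ip}\"" "$csr" || printf '%s\n' "$ip"
  done
}
```

Usage: `csr_missing_hosts server-csr.json 192.168.1.11 192.168.1.14 192.168.1.100 192.168.1.15 192.168.1.16` prints nothing when all addresses are listed.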

`Sign the certificate (generates server.pem and server-key.pem)`

[root@master k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2020/02/05 11:43:47 [INFO] generate received request

2020/02/05 11:43:47 [INFO] received CSR

2020/02/05 11:43:47 [INFO] generating key: rsa-2048

2020/02/05 11:43:47 [INFO] encoded CSR

2020/02/05 11:43:47 [INFO] signed certificate with serial number 359419453323981371004691797080289162934778938507

2020/02/05 11:43:47 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

`Create the admin certificate request`

[root@master k8s-cert]# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

`Sign the certificate (generates admin.pem and admin-key.pem)`

[root@master k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2020/02/05 11:46:04 [INFO] generate received request

2020/02/05 11:46:04 [INFO] received CSR

2020/02/05 11:46:04 [INFO] generating key: rsa-2048

2020/02/05 11:46:04 [INFO] encoded CSR

2020/02/05 11:46:04 [INFO] signed certificate with serial number 361885975538105795426233467824041437549564573114

2020/02/05 11:46:04 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

`Create the kube-proxy certificate request`

[root@master k8s-cert]# cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "NanJing",

"ST": "NanJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

`Sign the certificate (generates kube-proxy.pem and kube-proxy-key.pem)`

[root@master k8s-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2020/02/05 11:47:55 [INFO] generate received request

2020/02/05 11:47:55 [INFO] received CSR

2020/02/05 11:47:55 [INFO] generating key: rsa-2048

2020/02/05 11:47:56 [INFO] encoded CSR

2020/02/05 11:47:56 [INFO] signed certificate with serial number 34747850270017663665747172643822215922289240826

2020/02/05 11:47:56 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

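2. Generate the apiserver certificates with k8s-cert.sh (it runs the same four cfssl signing steps shown above, hence the repeated output), then start the apiserver, scheduler and controller-manager components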

[root@master k8s-cert]# bash k8s-cert.sh

2020/02/05 11:50:08 [INFO] generating a new CA key and certificate from CSR

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:08 [INFO] encoded CSR

2020/02/05 11:50:08 [INFO] signed certificate with serial number 473883155883308900863805079252124099771123043047

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:08 [INFO] encoded CSR

2020/02/05 11:50:08 [INFO] signed certificate with serial number 66483817738746309793417718868470334151539533925

2020/02/05 11:50:08 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:08 [INFO] encoded CSR

2020/02/05 11:50:08 [INFO] signed certificate with serial number 245658866069109639278946985587603475325871008240

2020/02/05 11:50:08 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2020/02/05 11:50:08 [INFO] generate received request

2020/02/05 11:50:08 [INFO] received CSR

2020/02/05 11:50:08 [INFO] generating key: rsa-2048

2020/02/05 11:50:09 [INFO] encoded CSR

2020/02/05 11:50:09 [INFO] signed certificate with serial number 696729766024974987873474865496562197315198733463

2020/02/05 11:50:09 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@master k8s-cert]# ls *pem

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem

[root@master k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/

[root@master k8s-cert]# cd ..

`Extract the Kubernetes server archive`

[root@master k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# cd /root/k8s/kubernetes/server/bin

`Copy the key binaries`

[root@master bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

[root@master bin]# cd /root/k8s

`Generate a random token`

[root@master k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

9b3186df3dc799376ad43b6fe0108571

[root@master k8s]# vim /opt/kubernetes/cfg/token.csv

9b3186df3dc799376ad43b6fe0108571,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

# token, user name, user ID, group
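You can produce the token and the token.csv line in one step. Here is a sketch using a hypothetical helper. It defaults to the same user, UID and group as above and reuses the random-token pipeline shown earlier:

```bash
# Hypothetical helper: print a token.csv line (token,user,uid,"group")
# with a fresh random token of 32 lowercase hex digits.
make_token_line() {
  local user="${1:-kubelet-bootstrap}" uid="${2:-10001}"
  local group="${3:-system:kubelet-bootstrap}" token
  # 16 random bytes -> 32 hex digits (same od pipeline as above).
  token="$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')"
  printf '%s,%s,%s,"%s"\n' "$token" "$user" "$uid" "$group"
}
```

Usage: `make_token_line > /opt/kubernetes/cfg/token.csv` writes a fresh file. You still have to copy the new token into the kubeconfig script in section V.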

`With the binaries, token and certificates in place, start the apiserver`

[root@master k8s]# bash apiserver.sh 192.168.1.11 https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

`Check that the process started successfully`

[root@master k8s]# ps aux | grep kube

`View the generated configuration file`

[root@master k8s]# cat /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://192.168.1.11:2379,https://192.168.1.12:2379,https://192.168.1.13:2379 \

--bind-address=192.168.1.11 \

--secure-port=6443 \

--advertise-address=192.168.1.11 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

`Check the HTTPS listening port`

[root@master k8s]# netstat -ntap | grep 6443

`Start the scheduler service`

[root@master k8s]# ./scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@master k8s]# ps aux | grep ku

postfix 6212 0.0 0.0 91732 1364 ? S 11:29 0:00 pickup -l -t unix -u

root 7034 1.1 1.0 45360 20332 ? Ssl 12:23 0:00 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

root 7042 0.0 0.0 112676 980 pts/1 R+ 12:23 0:00 grep --color=auto ku

[root@master k8s]# chmod +x controller-manager.sh

`Start the controller-manager`

[root@master k8s]# ./controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

`Check the status of the master components`

[root@master k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}
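Scanning `kubectl get cs` by eye gets tedious as the number of components grows. Here is a sketch of a filter over that output using a hypothetical helper. It assumes the column layout shown above, with STATUS in the second column:

```bash
# Hypothetical check over `kubectl get cs` output read from stdin:
# prints each component whose STATUS is not "Healthy" and returns
# non-zero if there was at least one.
unhealthy_components() {
  awk 'NR > 1 && NF > 0 && $2 != "Healthy" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get cs | unhealthy_components && echo 'all components healthy'`.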

V. Deploy the node components

1. On master01, copy the kubelet and kube-proxy binaries to the nodes

[root@master k8s]# cd kubernetes/server/bin/

[root@master bin]# scp kubelet kube-proxy root@192.168.1.12:/opt/kubernetes/bin/

root@192.168.1.12's password:

kubelet 100% 168MB 81.1MB/s 00:02

kube-proxy 100% 48MB 77.6MB/s 00:00

[root@master bin]# scp kubelet kube-proxy root@192.168.1.13:/opt/kubernetes/bin/

root@192.168.1.13's password:

kubelet 100% 168MB 86.8MB/s 00:01

kube-proxy 100% 48MB 90.4MB/s 00:00

2. On node1, extract node.zip

[root@node1 ~]# ls

anaconda-ks.cfg flannel-v0.10.0-linux-amd64.tar.gz node.zip 公共 视频 文档 音乐

flannel.sh initial-setup-ks.cfg README.md 模板 图片 下载 桌面

[root@node1 ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

3. On master01

[root@master bin]# cd /root/k8s/

[root@master k8s]# mkdir kubeconfig

[root@master k8s]# cd kubeconfig/

`Upload the kubeconfig.sh script to this directory and rename it`

[root@master kubeconfig]# ls

kubeconfig.sh

[root@master kubeconfig]# mv kubeconfig.sh kubeconfig

[root@master kubeconfig]# vim kubeconfig

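# Delete the first 9 lines: they generate the token, which was already done earlier

# Set the client authentication parameters.
# Replace the --token value with the token you generated earlier.

kubectl config set-credentials kubelet-bootstrap \

--token=9b3186df3dc799376ad43b6fe0108571 \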

--kubeconfig=bootstrap.kubeconfig

# When finished, press Esc to leave insert mode, then type :wq to save and quit

----How to find the token----

[root@master kubeconfig]# cat /opt/kubernetes/cfg/token.csv

9b3186df3dc799376ad43b6fe0108571,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

# We need the token from this line, "9b3186df3dc799376ad43b6fe0108571". Every deployment generates a different one.

---------------------
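Rather than copying the token by hand, you can read it from token.csv. Here is a sketch using a hypothetical helper, `bootstrap_token`, which assumes the one-line-per-user format shown above:

```bash
# Hypothetical helper: print the token (field 1) of the kubelet-bootstrap
# entry in a token.csv file such as /opt/kubernetes/cfg/token.csv.
bootstrap_token() {
  awk -F, '$2 == "kubelet-bootstrap" { print $1; exit }' "$1"
}
```

In the kubeconfig script, you could then replace the hard-coded value with `--token="$(bootstrap_token /opt/kubernetes/cfg/token.csv)"`.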

`Set the PATH environment variable (it can be made permanent in /etc/profile)`

[root@master kubeconfig]# vim /etc/profile

# Press uppercase G to jump to the last line, then lowercase o to open a new line below it

export PATH=$PATH:/opt/kubernetes/bin/

# When finished, press Esc to leave insert mode, then type :wq to save and quit

[root@master kubeconfig]# source /etc/profile

[root@master kubeconfig]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

[root@master kubeconfig]# kubectl get node

No resources found.

# No nodes have been added yet

[root@master kubeconfig]# bash kubeconfig 192.168.1.11 /root/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

[root@master kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig

`Copy the kubeconfig files to the two nodes`

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.1.12:/opt/kubernetes/cfg/

root@192.168.1.12's password:

bootstrap.kubeconfig 100% 2168 2.2MB/s 00:00

kube-proxy.kubeconfig 100% 6270 3.5MB/s 00:00

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.1.13:/opt/kubernetes/cfg/

root@192.168.1.13's password:

bootstrap.kubeconfig 100% 2168 3.1MB/s 00:00

kube-proxy.kubeconfig 100% 6270 7.9MB/s 00:00

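`Bind the kubelet-bootstrap user to the system:node-bootstrapper role so it can connect to the apiserver and request certificate signing (key step)`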

[root@master kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

4. On node1

[root@node1 ~]# bash kubelet.sh 192.168.1.12

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Check that the kubelet service is running

[root@node1 ~]# ps aux | grep kube

[root@node1 ~]# systemctl status kubelet.service

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-02-05 14:54:45 CST; 21s ago

# The state is running

5. On master01, verify node1's certificate request

node1 automatically contacts the apiserver to request a certificate, so its request shows up on the master:

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ZZnDyPkUICga9NeuZF-M8IHTmpekEurXtbHXOyHZbDg 18s kubelet-bootstrap Pending

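# The state is Pending: the node is waiting for the cluster to issue it a certificate

`Approve the request (the same command is used for node2 later)`

[root@master kubeconfig]# kubectl certificate approve node-csr-ZZnDyPkUICga9NeuZF-M8IHTmpekEurXtbHXOyHZbDg

`Check the certificate status again`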

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ZZnDyPkUICga9NeuZF-M8IHTmpekEurXtbHXOyHZbDg 3m59s kubelet-bootstrap Approved,Issued

# The state is now Approved,Issued: the node has been allowed to join the cluster
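When several nodes join at once, you can pick the pending requests out of the `kubectl get csr` output. Here is a sketch using a hypothetical helper, `pending_csrs`. It assumes the v1.12 column layout above, where CONDITION is the last column:

```bash
# Hypothetical filter over `kubectl get csr` output read from stdin:
# print the NAME of every request whose CONDITION column is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`. This approves every pending request without inspecting it, which is only reasonable on a trusted lab network like this one.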

`List the cluster nodes: node1 has joined successfully`

[root@master kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.1.12 Ready <none> 6m54s v1.12.3

6. On node1, start the kube-proxy service

[root@node1 ~]# bash proxy.sh 192.168.1.12

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@node1 ~]# systemctl status kube-proxy.service

● kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2020-02-06 11:11:56 CST; 20s ago

# The state is running

7. Copy the /opt/kubernetes directory from node1 to node2, and copy the kubelet and kube-proxy service files to node2

[root@node1 ~]# scp -r /opt/kubernetes/ root@192.168.1.13:/opt/

[root@node1 ~]# scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.1.13:/usr/lib/systemd/system/

root@192.168.1.13's password:

kubelet.service 100% 264 291.3KB/s 00:00

kube-proxy.service 100% 231 407.8KB/s 00:00

8. On node2, make the changes below. First delete the copied certificates; node2 will request its own certificate shortly.

[root@node2 ~]# cd /opt/kubernetes/ssl/

[root@node2 ssl]# rm -rf *

`Edit the three configuration files: kubelet, kubelet.config and kube-proxy`

[root@node2 ssl]# cd ../cfg/

[root@node2 cfg]# vim kubelet

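4 --hostname-override=192.168.1.13 \ # line 4: change the hostname to node2's IP address

# When finished, press Esc to leave insert mode, then type :wq to save and quit

[root@node2 cfg]# vim kubelet.config

4 address: 192.168.1.13 # line 4: change the address to node2's IP address

# When finished, press Esc to leave insert mode, then type :wq to save and quit

[root@node2 cfg]# vim kube-proxy

4 --hostname-override=192.168.1.13 # line 4: change the hostname to node2's IP address

# When finished, press Esc to leave insert mode, then type :wq to save and quit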
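The three node2 edits follow one pattern, so you can apply them with sed. The following is a minimal sketch, assuming GNU sed. It also assumes the copied files still hold node1's IP in --hostname-override and in a top-level `address:` field. `retarget_node_cfg` is a hypothetical helper.

```bash
# Hypothetical helper: apply the three node2 edits with GNU sed.
# $1 = config directory (e.g. /opt/kubernetes/cfg), $2 = the new node IP.
retarget_node_cfg() {
  local dir="$1" ip="$2"
  # kubelet and kube-proxy: rewrite the --hostname-override value.
  sed -i -E "s/(--hostname-override=)[0-9.]+/\1${ip}/" "$dir/kubelet" "$dir/kube-proxy"
  # kubelet.config (YAML): rewrite the top-level address field.
  sed -i -E "s/^(address: )[0-9.]+/\1${ip}/" "$dir/kubelet.config"
}
```

Usage on node2: `retarget_node_cfg /opt/kubernetes/cfg 192.168.1.13`.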

`Start the services`

[root@node2 cfg]# systemctl start kubelet.service

[root@node2 cfg]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node2 cfg]# systemctl start kube-proxy.service

[root@node2 cfg]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

9. Back on the master, check node2's certificate request

[root@master k8s]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-QtKJLeSj130rGIccigH6-MKH7klhymwDxQ4rh4w8WJA 99s kubelet-bootstrap Pending

# A new request to join the cluster has appeared; approve it

[root@master k8s]# kubectl certificate approve node-csr-QtKJLeSj130rGIccigH6-MKH7klhymwDxQ4rh4w8WJA

certificatesigningrequest.certificates.k8s.io/node-csr-QtKJLeSj130rGIccigH6-MKH7klhymwDxQ4rh4w8WJA approved

10. On master01, list the nodes in the cluster

[root@master k8s]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.1.12 Ready <none> 28s v1.12.3

192.168.1.13 Ready <none> 26m v1.12.3

# Both nodes have now joined the cluster

VI. Deploy the second master

1. On master1, copy the /opt/kubernetes directory to master2

[root@master1 k8s]# scp -r /opt/kubernetes/ root@192.168.1.14:/opt

Copy the systemd unit files of the three components (kube-apiserver.service, kube-controller-manager.service and kube-scheduler.service) from master1 to master2

[root@master1 k8s]# scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.1.14:/usr/lib/systemd/system/

2. On master2, change the IP addresses in the kube-apiserver configuration file

[root@master2 ~]# cd /opt/kubernetes/cfg/

[root@master2 cfg]# ls

kube-apiserver kube-controller-manager kube-scheduler token.csv

[root@master2 cfg]# vim kube-apiserver

5 --bind-address=192.168.1.14 \

7 --advertise-address=192.168.1.14 \

# Change the IP address on lines 5 and 7 to master2's address

# When finished, press Esc to leave insert mode, then type :wq to save and quit

3. On master01, copy the existing etcd certificates to master2

[root@master1 k8s]# scp -r /opt/etcd/ root@192.168.1.14:/opt/

root@192.168.1.14's password:

etcd 100% 516 535.5KB/s 00:00

etcd 100% 18MB 90.6MB/s 00:00

etcdctl 100% 15MB 80.5MB/s 00:00

ca-key.pem 100% 1675 1.4MB/s 00:00

ca.pem 100% 1265 411.6KB/s 00:00

server-key.pem 100% 1679 2.0MB/s 00:00

server.pem 100% 1338 429.6KB/s 00:00

4. Start the three component services on master02

[root@master2 cfg]# systemctl start kube-apiserver.service

[root@master2 cfg]# systemctl enable kube-apiserver.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@master2 cfg]# systemctl status kube-apiserver.service

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-02-07 09:16:57 CST; 56min ago

[root@master2 cfg]# systemctl start kube-controller-manager.service

[root@master2 cfg]# systemctl enable kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@master2 cfg]# systemctl status kube-controller-manager.service

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-02-07 09:17:02 CST; 57min ago

[root@master2 cfg]# systemctl start kube-scheduler.service

[root@master2 cfg]# systemctl enable kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@master2 cfg]# systemctl status kube-scheduler.service

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-02-07 09:17:07 CST; 58min ago

5. Add the Kubernetes binaries to PATH and check the node status to verify that master02 works

[root@master2 cfg]# vim /etc/profile

# Append at the end of the file

export PATH=$PATH:/opt/kubernetes/bin/

[root@master2 cfg]# source /etc/profile
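Running this step a second time would append the same export line to /etc/profile again. A minimal sketch of a guarded append; `append_once` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present,
# so re-running the step does not duplicate the PATH export.
append_once() {
  local file=$1 line=$2
  # -x: whole-line match, -F: fixed string (the $ in $PATH stays literal)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on master2:
#   append_once /etc/profile 'export PATH=$PATH:/opt/kubernetes/bin/'
#   source /etc/profile
```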

[root@master2 cfg]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.1.12 Ready <none> 21h v1.12.3

192.168.1.13 Ready <none> 22h v1.12.3

# Both node1 and node2 are visible from master2

VII. Load balancer deployment

1. Upload the two files keepalived.conf and nginx.sh to the /root directory on lb1 and lb2

`lb1`

[root@lb1 ~]# ls

anaconda-ks.cfg keepalived.conf 公共 视频 文档 音乐

initial-setup-ks.cfg nginx.sh 模板 图片 下载 桌面

`lb2`

[root@lb2 ~]# ls

anaconda-ks.cfg keepalived.conf 公共 视频 文档 音乐

initial-setup-ks.cfg nginx.sh 模板 图片 下载 桌面

2. On lb1 (192.168.1.15)

[root@lb1 ~]# systemctl stop firewalld.service

[root@lb1 ~]# setenforce 0

[root@lb1 ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

# When finished, press Esc to leave insert mode, then type :wq to save and quit

`Refresh the yum repository metadata`

[root@lb1 ~]# yum list

`Install nginx`

[root@lb1 ~]# yum install nginx -y

[root@lb1 ~]# vim /etc/nginx/nginx.conf

# Insert the following below line 12 (at the top level, outside the http block)

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.1.11:6443; # master1's IP address

server 192.168.1.14:6443; # master2's IP address

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

# When finished, press Esc to leave insert mode, then type :wq to save and quit
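lb1 and lb2 need the same stream block, so generating it once helps keep the two load balancers identical. A minimal sketch; `render_apiserver_stream` is a hypothetical helper name, and every master is assumed to serve the API on port 6443 as in this article. The output belongs at the top level of nginx.conf, because `stream` is not valid inside `http {}`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the nginx "stream" block that load-balances TCP
# port 6443 across the kube-apiservers. Each argument is one master IP.
render_apiserver_stream() {
  local ip
  printf '%s\n' 'stream {'
  printf '%s\n' "    log_format main '\$remote_addr \$upstream_addr - [\$time_local] \$status \$upstream_bytes_sent';"
  printf '%s\n' '    access_log /var/log/nginx/k8s-access.log main;'
  printf '%s\n' '    upstream k8s-apiserver {'
  for ip in "$@"; do
    printf '        server %s:6443;\n' "$ip"   # one upstream entry per master
  done
  printf '%s\n' '    }' '    server {' '        listen 6443;' \
    '        proxy_pass k8s-apiserver;' '    }' '}'
}

# Usage on lb1 and lb2:
#   render_apiserver_stream 192.168.1.11 192.168.1.14
```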

`Check the syntax`

[root@lb1 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@lb1 ~]# cd /usr/share/nginx/html/

[root@lb1 html]# ls

50x.html index.html

[root@lb1 html]# vim index.html

14 <h1>Welcome to master nginx!</h1> # line 14: add "master" to tell the two load balancers apart

# When finished, press Esc to leave insert mode, then type :wq to save and quit

`Start the service`

[root@lb1 html]# systemctl start nginx

Verify in a browser: open 192.168.1.15, and the nginx page labelled master should appear


Deploy keepalived

[root@lb1 html]# yum install keepalived -y

`Modify the configuration file`

[root@lb1 html]# cd ~

[root@lb1 ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite '/etc/keepalived/keepalived.conf'? yes

# Overwrite the default configuration installed by yum with the keepalived.conf uploaded earlier

[root@lb1 ~]# vim /etc/keepalived/keepalived.conf

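18 script "/etc/nginx/check_nginx.sh" # line 18: change the directory to /etc/nginx/; the script is written below

23 interface ens33 # change eth0 to ens33; look up the NIC name with ifconfig

24 virtual_router_id 51 # VRRP virtual router ID: unique per VRRP instance, and identical on lb1 and lb2

25 priority 100 # priority; the backup server uses 90

31 virtual_ipaddress {

32 192.168.1.100/24 # set the VIP to the planned address 192.168.1.100

# Delete everything below line 38

# When finished, press Esc to leave insert mode, then type :wq to save and quit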
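The uploaded keepalived.conf is not reproduced in the article. As an assumption, the parts edited above would sit in a file shaped roughly like the one printed below; the helper `render_keepalived_conf`, the instance name `VI_1` and `advert_int 1` are illustrative and not copied from the author's file. The `track_script` entry is what makes keepalived actually run check_nginx.sh.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the keepalived.conf sections edited above.
# Arguments: state (MASTER|BACKUP), priority, interface, VIP.
render_keepalived_conf() {
  local state=$1 priority=$2 iface=${3:-ens33} vip=${4:-192.168.1.100}
  cat <<EOF
! Minimal illustrative layout, not the author's uploaded file
vrrp_script check_nginx {
    script "/etc/nginx/check_nginx.sh"
}

vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    virtual_ipaddress {
        ${vip}/24
    }
    track_script {
        check_nginx
    }
}
EOF
}

# lb1: render_keepalived_conf MASTER 100 > /etc/keepalived/keepalived.conf
# lb2: render_keepalived_conf BACKUP 90  > /etc/keepalived/keepalived.conf
```

Only `state` and `priority` differ between the two load balancers; `virtual_router_id` and the VIP must be the same on both.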

`Write the check script`

[root@lb1 ~]# vim /etc/nginx/check_nginx.sh

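#!/bin/bash

count=$(ps -ef |grep nginx |egrep -cv "grep|$$") # count nginx processes, excluding grep and lines carrying this script's PID

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

# If no nginx process is found, stop keepalived so the VIP fails over to the other load balancer

# When finished, press Esc to leave insert mode, then type :wq to save and quit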

[root@lb1 ~]# chmod +x /etc/nginx/check_nginx.sh

[root@lb1 ~]# ls /etc/nginx/check_nginx.sh

/etc/nginx/check_nginx.sh # the script is now executable (shown in green)
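The check script above relies on `$$` to drop its own line from the count, because the script's name, check_nginx.sh, itself contains "nginx". A variant that filters by name instead is sketched below; it is an alternative, not the author's script, and `nginx_is_down` is a hypothetical name. Keeping the decision in a function that reads `ps -ef` text also makes it easy to try against sample output.

```shell
#!/usr/bin/env bash
# Read `ps -ef`-style lines on stdin and exit 0 only when no nginx process
# is listed. Lines for grep and for the check script itself are ignored by
# name rather than by matching the script's PID.
nginx_is_down() {
  awk '/nginx/ && !/grep|check_nginx/ { n++ } END { exit (n > 0) }'
}

# Possible use inside /etc/nginx/check_nginx.sh:
#   if ps -ef | nginx_is_down; then systemctl stop keepalived; fi
```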

[root@lb1 ~]# systemctl start keepalived

[root@lb1 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:24:63:be brd ff:ff:ff:ff:ff:ff

inet 192.168.1.15/24 brd 192.168.1.255 scope global dynamic ens33

valid_lft 1370sec preferred_lft 1370sec

inet `192.168.1.100/24` scope global secondary ens33 # the floating VIP is now on lb1

valid_lft forever preferred_lft forever

inet6 fe80::1cb1:b734:7f72:576f/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::578f:4368:6a2c:80d7/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::6a0c:e6a0:7978:3543/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3. On lb2 (192.168.1.16)

[root@lb2 ~]# systemctl stop firewalld.service

[root@lb2 ~]# setenforce 0

[root@lb2 ~]# vim /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

# When finished, press Esc to leave insert mode, then type :wq to save and quit

`Refresh the yum repository metadata`

[root@lb2 ~]# yum list

`Install nginx`

[root@lb2 ~]# yum install nginx -y

[root@lb2 ~]# vim /etc/nginx/nginx.conf

# Insert the following below line 12 (at the top level, outside the http block)

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.1.11:6443; # master1's IP address

server 192.168.1.14:6443; # master2's IP address

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

# When finished, press Esc to leave insert mode, then type :wq to save and quit

`Check the syntax`

[root@lb2 ~]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@lb2 ~]# vim /usr/share/nginx/html/index.html

14 <h1>Welcome to backup nginx!</h1> # line 14: add "backup" to tell the two load balancers apart

# When finished, press Esc to leave insert mode, then type :wq to save and quit

`Start the service`

[root@lb2 ~]# systemctl start nginx

Verify in a browser: open 192.168.1.16, and the nginx page labelled backup should appear


Deploy keepalived

[root@lb2 ~]# yum install keepalived -y

`Modify the configuration file`

[root@lb2 ~]# cp keepalived.conf /etc/keepalived/keepalived.conf

cp: overwrite '/etc/keepalived/keepalived.conf'? yes

# Overwrite the default configuration installed by yum with the keepalived.conf uploaded earlier

[root@lb2 ~]# vim /etc/keepalived/keepalived.conf

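18 script "/etc/nginx/check_nginx.sh" # line 18: change the directory to /etc/nginx/; the script is written below

22 state BACKUP # line 22: change the role from MASTER to BACKUP

23 interface ens33 # change eth0 to ens33

24 virtual_router_id 51 # VRRP virtual router ID: must match the value on lb1

25 priority 90 # priority: 90 on the backup server

31 virtual_ipaddress {

32 192.168.1.100/24 # set the VIP to the planned address 192.168.1.100

# Delete everything below line 38

# When finished, press Esc to leave insert mode, then type :wq to save and quit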

`Write the check script`

[root@lb2 ~]# vim /etc/nginx/check_nginx.sh

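#!/bin/bash

count=$(ps -ef |grep nginx |egrep -cv "grep|$$") # count nginx processes, excluding grep and lines carrying this script's PID

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi

# If no nginx process is found, stop keepalived so the VIP fails over to the other load balancer

# When finished, press Esc to leave insert mode, then type :wq to save and quit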

[root@lb2 ~]# chmod +x /etc/nginx/check_nginx.sh

[root@lb2 ~]# ls /etc/nginx/check_nginx.sh

/etc/nginx/check_nginx.sh # the script is now executable (shown in green)

[root@lb2 ~]# systemctl start keepalived

[root@lb2 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:9d:b7:83 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.16/24 brd 192.168.1.255 scope global dynamic ens33

valid_lft 958sec preferred_lft 958sec

inet6 fe80::578f:4368:6a2c:80d7/64 scope link

valid_lft forever preferred_lft forever

inet6 fe80::6a0c:e6a0:7978:3543/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

# 192.168.1.100 is absent here because the VIP is held by lb1 (MASTER)
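To see which load balancer currently holds the VIP without reading the whole `ip a` listing, a small filter is enough. A sketch; `holds_vip` is a hypothetical helper name. Matching `inet <VIP>/` with the trailing slash keeps 192.168.1.10 from matching 192.168.1.100.

```shell
#!/usr/bin/env bash
# Exit 0 if the `ip a` output on stdin carries the given VIP on some interface.
holds_vip() {
  local vip=$1
  grep -qF "inet ${vip}/"
}

# Usage on lb1 / lb2:
#   ip a | holds_vip 192.168.1.100 && echo "this load balancer holds the VIP"
```

A simple failover test: run `systemctl stop nginx` on lb1; check_nginx.sh should then stop keepalived there, and the VIP should appear on lb2. After starting nginx and keepalived on lb1 again, its higher priority should let it take the VIP back.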

The multi-node K8s deployment is now complete.

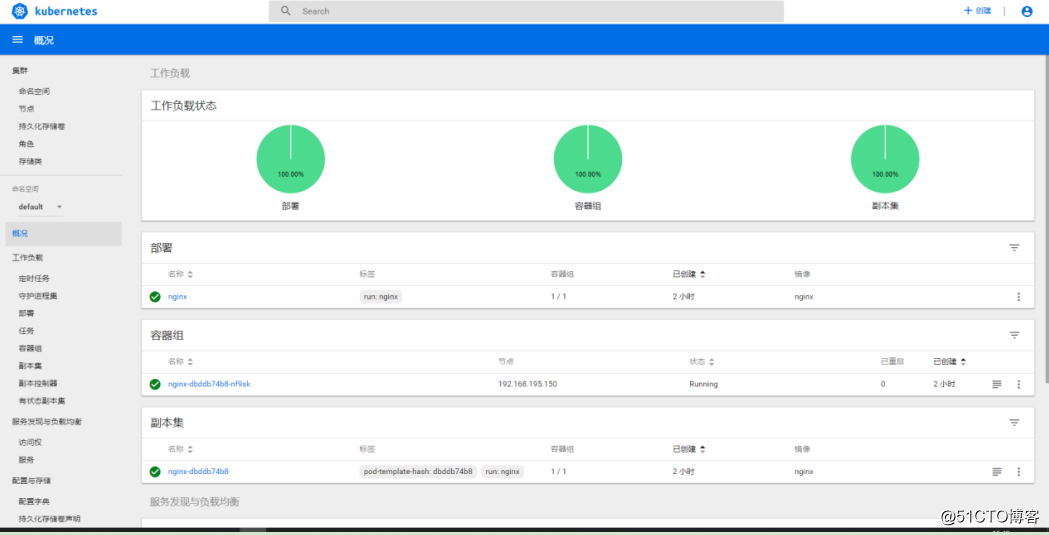

Supplement: setting up the K8s UI (Dashboard)

1. On master01

Create the dashboard working directory

[root@localhost k8s]# mkdir dashboard

Copy in the official manifest files from:

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/dashboard

[root@localhost dashboard]# kubectl create -f dashboard-rbac.yaml

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

[root@localhost dashboard]# kubectl create -f dashboard-secret.yaml

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-key-holder created

[root@localhost dashboard]# kubectl create -f dashboard-configmap.yaml

configmap/kubernetes-dashboard-settings created

[root@localhost dashboard]# kubectl create -f dashboard-controller.yaml

serviceaccount/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

[root@localhost dashboard]# kubectl create -f dashboard-service.yaml

service/kubernetes-dashboard created

// Once created, the resources live in the kube-system namespace; check the pods there

[root@localhost dashboard]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kubernetes-dashboard-65f974f565-m9gm8 0/1 ContainerCreating 0 88s

// Check how to reach it

[root@localhost dashboard]# kubectl get pods,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/kubernetes-dashboard-65f974f565-m9gm8 1/1 Running 0 2m49s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes-dashboard NodePort 10.0.0.243 <none> 443:30001/TCP 2m24s

// Browse to any node IP on the NodePort (30001)

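https://192.168.1.12:30001/

2. Note: fixing Chrome refusing to open the page. Chrome rejects the certificate the Dashboard generates for itself, so issue one from the cluster CA instead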

[root@localhost dashboard]# vim dashboard-cert.sh

cat > dashboard-csr.json <<EOF

{

"CN": "Dashboard",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

K8S_CA=$1

cfssl gencert -ca=$K8S_CA/ca.pem -ca-key=$K8S_CA/ca-key.pem -config=$K8S_CA/ca-config.json -profile=kubernetes dashboard-csr.json | cfssljson -bare dashboard

kubectl delete secret kubernetes-dashboard-certs -n kube-system

kubectl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

#dashboard-controller.yaml: add the two certificate lines, then apply

# args:

# # PLATFORM-SPECIFIC ARGS HERE

# - --auto-generate-certificates

# - --tls-key-file=dashboard-key.pem

# - --tls-cert-file=dashboard.pem
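dashboard-cert.sh goes on to delete the kubernetes-dashboard-certs secret even if cfssl failed, for example because the CA directory passed as `$1` is wrong, and then recreates it without the new certificate. A guard that checks the three CA files before anything else runs is sketched below; `check_ca_dir` is a hypothetical helper, and the file names are the ones the cfssl command above refers to.

```shell
#!/usr/bin/env bash
# Hypothetical guard for dashboard-cert.sh: make sure the CA directory passed
# as $1 contains the three files cfssl is pointed at before signing anything.
check_ca_dir() {
  local dir=$1 f
  if [ -z "$dir" ]; then
    echo 'usage: check_ca_dir <k8s-ca-dir>' >&2
    return 2
  fi
  for f in ca.pem ca-key.pem ca-config.json; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing $dir/$f" >&2
      return 1
    fi
  done
  return 0
}

# Possible use right after K8S_CA=$1 in dashboard-cert.sh:
#   check_ca_dir "$K8S_CA" || exit 1
```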

[root@localhost dashboard]# bash dashboard-cert.sh /root/k8s/k8s-cert/

2020/02/05 15:29:08 [INFO] generate received request

2020/02/05 15:29:08 [INFO] received CSR

2020/02/05 15:29:08 [INFO] generating key: rsa-2048

2020/02/05 15:29:09 [INFO] encoded CSR

2020/02/05 15:29:09 [INFO] signed certificate with serial number 150066859036029062260457207091479364937405390263

2020/02/05 15:29:09 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

secret "kubernetes-dashboard-certs" deleted

secret/kubernetes-dashboard-certs created

[root@localhost dashboard]# vim dashboard-controller.yaml

args:

# PLATFORM-SPECIFIC ARGS HERE

- --auto-generate-certificates

- --tls-key-file=dashboard-key.pem

- --tls-cert-file=dashboard.pem

// Redeploy

[root@localhost dashboard]# kubectl apply -f dashboard-controller.yaml

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

serviceaccount/kubernetes-dashboard configured

Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply

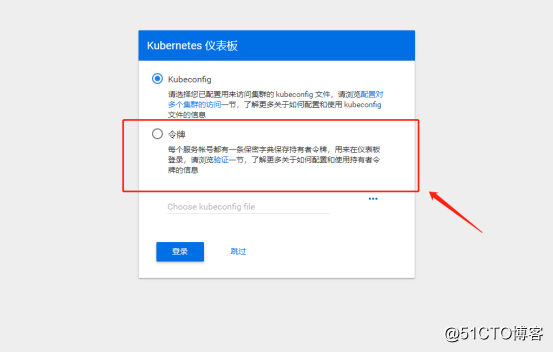

deployment.apps/kubernetes-dashboard configured3.重新访问,此时需要令牌

4. Generate a token

[root@localhost dashboard]# kubectl create -f k8s-admin.yaml

serviceaccount/dashboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

// Find the Secret that holds the token

[root@localhost dashboard]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

dashboard-admin-token-qctfr kubernetes.io/service-account-token 3 65s

default-token-mmvcg kubernetes.io/service-account-token 3 7d15h

kubernetes-dashboard-certs Opaque 11 10m

kubernetes-dashboard-key-holder Opaque 2 63m

kubernetes-dashboard-token-nsc84 kubernetes.io/service-account-token 3 62m

// View the token

[root@localhost dashboard]# kubectl describe secret dashboard-admin-token-qctfr -n kube-system

Name: dashboard-admin-token-qctfr

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 73f19313-47ea-11ea-895a-000c297a15fb

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1359 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcWN0ZnIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNzNmMTkzMTMtNDdlYS0xMWVhLTg5NWEtMDAwYzI5N2ExNWZiIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.v4YBoyES2etex6yeMPGfl7OT4U9Ogp-84p6cmx3HohiIS7sSTaCqjb3VIvyrVtjSdlT66ZMRzO3MUgj1HsPxgEzOo9q6xXOCBb429m9Qy-VK2JxuwGVD2dIhcMQkm6nf1Da5ZpcYFs8SNT-djAjZNB_tmMY_Pjao4DBnD2t_JXZUkCUNW_O2D0mUFQP2beE_NE2ZSEtEvmesB8vU2cayTm_94xfvtNjfmGrPwtkdH0iy8sH-T0apepJ7wnZNTGuKOsOJf76tU31qF4E5XRXIt-F2Jmv9pEOFuahSBSaEGwwzXlXOVMSaRF9cBFxn-0iXRh0Aq0K21HdPHW1b4-ZQwA

5. Copy the generated token into the browser's login page, and the UI appears
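Before pasting a token, its payload can be decoded to confirm which service account it belongs to. A sketch, assuming the token is a standard three-part JWT (header.payload.signature, each part base64url-encoded without padding) and that GNU coreutils `base64 -d` is available; `jwt_payload` is a hypothetical helper name. It only decodes the payload and does not verify the signature.

```shell
#!/usr/bin/env bash
# Decode the payload (middle segment) of a JWT such as a service-account token.
# base64url uses '-' and '_' and drops '=' padding, so undo both first.
jwt_payload() {
  local payload
  payload=${1#*.}                                   # drop the header segment
  payload=${payload%%.*}                            # drop the signature segment
  payload=$(printf '%s' "$payload" | tr '_-' '/+')  # base64url -> base64 alphabet
  case $(( ${#payload} % 4 )) in                    # restore the stripped padding
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage on master01:
#   token=$(kubectl -n kube-system get secret dashboard-admin-token-qctfr \
#             -o jsonpath='{.data.token}' | base64 -d)
#   jwt_payload "$token"   # the "sub" field names the service account
```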
