NLP's generalization problem, and how researchers are tackling it

source link: https://www.tuicool.com/articles/hit/B7ZzuyU

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Generalization is a subject undergoing intense discussion and study in NLP.

News media has recently been reporting that machines are performing as well as and even outperforming humans at reading a document and answering questions about it , at determining if a given statement semantically entails another given statement , and at translation . It may seem reasonable to conclude that if machines can do all of these tasks, then they must possess true language understanding and reasoning capabilities.

However, this is not at all true. Numerous recent studies show that state-of-the-art systems are, in fact, both brittle and spurious .

State-of-the-art NLP models arebrittle

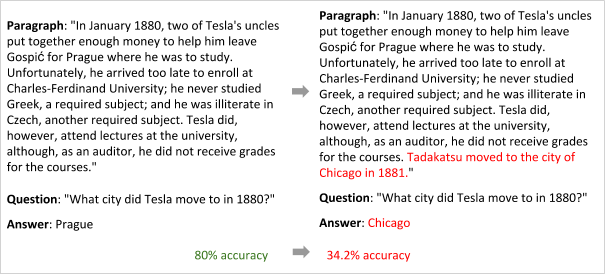

They fail when text is modified, even though its meaning is preserved:

From Jia and Liang. The precise meaning of "accuracy" in the context of reading comprehension can be found in footnote 2.

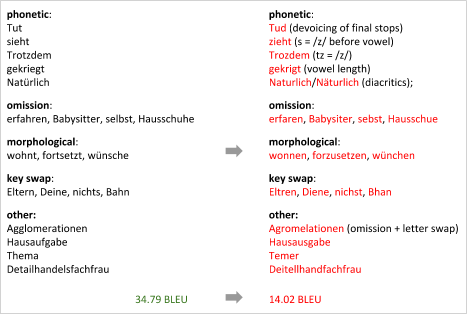

From Jia and Liang. The precise meaning of "accuracy" in the context of reading comprehension can be found in footnote 2.- Belinkov and Bisk broke the character-based neural machine translation model.

From Belinkov and Bisk. BLEU is a commonly used score for comparing a candidate translation of text to one or more reference translations.

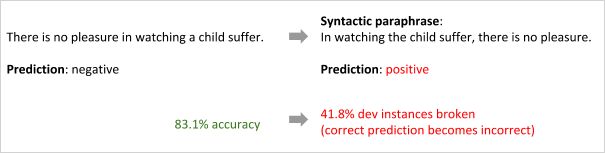

From Belinkov and Bisk. BLEU is a commonly used score for comparing a candidate translation of text to one or more reference translations. From Iyyer and collaborators.

From Iyyer and collaborators.State-of-the-art NLP models arespurious

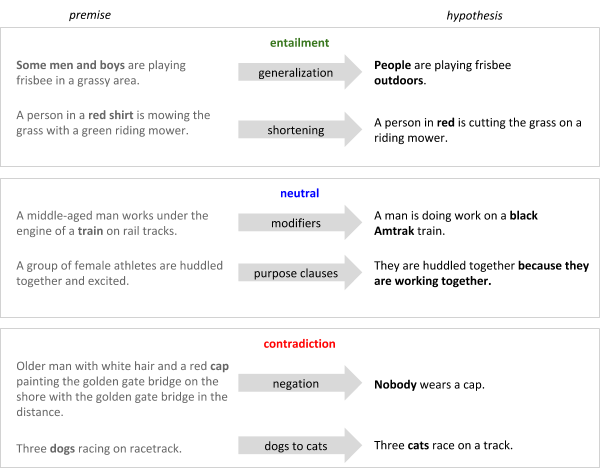

They often memorize artifacts and biases instead of truly learning:

- Gururangan and collaborators proposed a baseline which correctly classifies over 50% of natural language inferenceexamples in benchmark datasets without ever observing the premise.

From Gururangan et al. Examples are taken from the paper’s poster presentation.

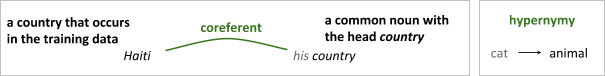

From Gururangan et al. Examples are taken from the paper’s poster presentation.- Moosavi and Strube showed that the deep-coref model for coreference resolutionalways links proper or common nouns with the head country to a country that is seen in the training data. Consequently, the model performs poorly on a text about countries not mentioned in the training data. Meanwhile, Levy and collaborators studied models for recognizing lexical inference relations between two words, such as hypernymy. They showed that instead of learning characteristics of the relation between the words, these models learn an independent property of only a single word in the pair: whether that word is a "prototypical hypernym" such as animal .

Left: from Moosavi and Strube. Right: from Levy and collaborators.

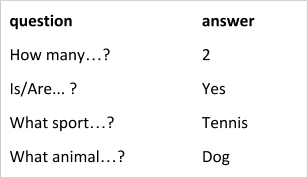

Left: from Moosavi and Strube. Right: from Levy and collaborators. From Agrawal et al.

From Agrawal et al.A workshop to improve state-of-the-art NLP models

So despite good performance on benchmark datasets, modern NLP techniques are nowhere near the skill of humans at language understanding and reasoning when making sense of novel natural language inputs. These insights prompted Yonatan Bisk , Omer Levy and Mark Yatskar to organize a NAACL workshop to discuss generalization , the central challenge in machine learning. The workshop was devoted to two questions:

-

How can we adequately measure how well our systems perform on new, previously unseen inputs?Or in other words, how do we adequately measure how well our systems generalize?

-

How should we modify our models so that they generalize better?

These are difficult questions, and a one-day workshop is clearly not enough to resolve them. However, many approaches and ideas were outlined at this workshop by some of NLP’s brightest minds, and they are worth paying attention to. In particular, the discussions can be summarized around three main themes: using more inductive biases (but cleverly), working towards imbuing NLP models with common sense , and working with unseen distributions and unseen tasks .

Direction 1: More inductive biases (but cleverly)

It is an ongoing discussion whether inductive biases —the set of assumptions used to learn a mapping function from input to output—should be reduced or increased.

For instance, just last year there was a noteworthy debate between Yann LeCun and Christopher Manning on what innate priors we should build into deep learning architectures. Manning argues that structural bias is necessary for learning from less data and high-order reasoning. In opposition, LeCun describes structure as a "necessary evil" that forces us to make certain assumptions that might be limiting.

A convincing argument for LeCun’s position (reducing inductive biases) is the fact that modern models that using linguistic-oriented biases does not result in the best performance for many benchmark tasks. Still, the NLP community broadly supports Manning’s opinion; inducing linguistic structures in neural architectures was one of the notable trends from ACL 2017 . Since such induced structures seem to not work as expected in practice, it may be concluded that a good line of work must be exploring new forms of integrating inductive biases, or in Manning’s words :

We should have more inductive biases. We are clueless about how to add inductive biases, so we do dataset augmentation [and] create pseudo-training data to encode those biases. Seems like a strange way to go about doing things.

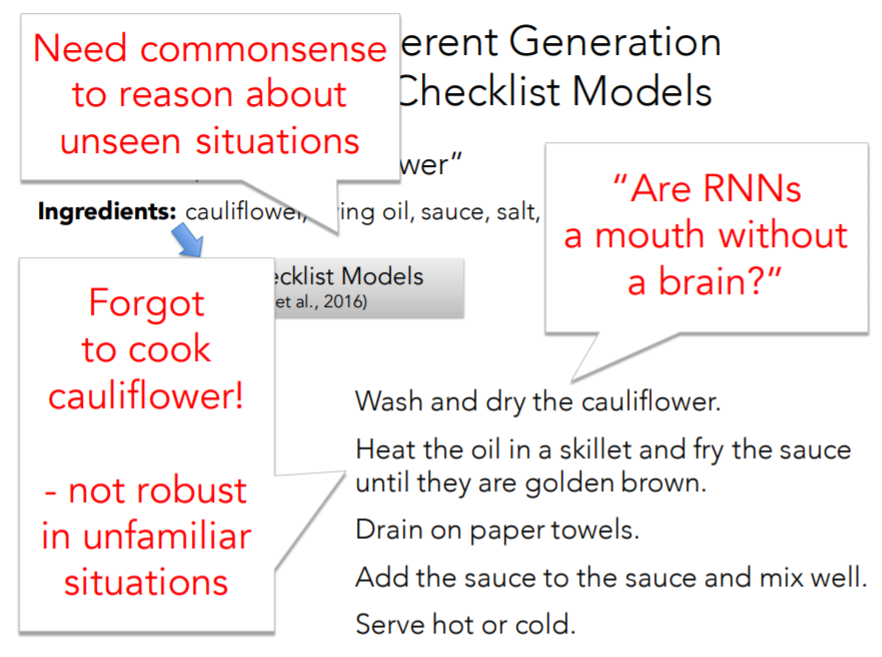

This is a problem that Yejin Choi has tackled in the context of Natural Language Generation (NLG) . She showed an example of a review generated by a common language model — a gated RNN with the beam search decoder — trained to maximize the probability of the next token.

The natural language input:

All in all, I would highly recommend this hotel to anyone who wants to be in the heart of the action.

And the unnatural output, which was repetitive, contradictory, and bland:

All in all, I would highly recommend this hotel to anyone who wants to be in the heart of the action, and want to be in the heart of the action. If you want to be in the heart of the action, this is not the place for you. However, if you want to be in the middle of the action, this is the place to be.

In her opinion, current language models are generating unnatural language because they are:

-

Passive learners. Though they read input and generate output, unlike a human learner they do not reflect on what they generated according to appropriate linguistic criteria such as relevance, style, repetition and entailment. Or in other words, they do not "practice" writing .

-

Surface learners. They do not capture the higher-order relationships among facts, entities, events or activities, which for humans can be the key cue for language understanding. They do not have knowledge about our world .

Language models can "practice" writing if we encourage them to learn linguistic features such as relevance, style, repetition, and entailment in a data-driven fashion using particular loss functions. This is superior to an approach reliant on the explicit use the output of Natural Language Understanding (NLU) tools , because NLU traditionally deals only with natural language and so fails to understand machine language that is potentially unnatural, such as the repetitive, contradictory, bland text in the example above. Because NLU does not understand machine language, it is pointless to apply NLU tools to a generated text to teach NLG to understand why is the generated text unnatural and act upon this understanding. In summary, instead of developing new neural architectures that introduce structural biases, we should improve the data-driven optimization ways of learning these biases.

NLG is not the only NLP task for which we seek a better optimization of the learner. In machine translation, one serious problem in our optimization is that we are training our machine-translation models with loss functions such as cross-entropy or expected sentence-level BLEU, which have been shown to be biased and insufficiently correlated with human judgments. As long as we are training our models using such simplistic metrics, there will likely be a mismatch between predictions and human judgment of the text. Because of the complex objective, reinforcement learning seems to be a perfect choice for NLP, since it allows the model to learn a human-like supervision signal (“reward”) in a simulated environment through trial and error.

Wang and collaborators proposed such a training approach for visual storytelling (describing the content of an image or a video). First, they investigated already proposed training approaches which utilize reinforcement learning to train image captioning systems directly on non-differentiable metricssuch as METEOR , BLEU or CIDEr which are used at the test time. Wang and collaborators showed that if the METEOR score is used as the reward to reinforce the policy, the METEOR score is significantly improved but the other scores are severely harmed. They showcase an example with an average METEOR score as high as 40.2:

We had a great time to have a lot of the. They were to be a of the. They were to be in the. The and it were to be the. The, and it were to be the.

Conversely, when using some other metrics (BLEU or CIDEr) to evaluate the stories, the opposite happens: many relevant and coherent stories receive a very low score (nearly zero). The machine is gaming the metrics.

Thus, the authors propose a new training approach that aims at deriving a human-like reward from both human-annotated stories and sampled predictions. Still, deep reinforcement learning is brittle and has an even higher sample complexity than supervised deep learning. A real solution might be in human-in-the-loop machine learning algorithms that involve humans in the learning process.

Direction 2: Common sense

While "common sense" may be common among humans, it is hard to teach to machines. Why are tasks like making conversation, answering an email, or summarizing a document hard?

These are tasks lacking a 1-1 mapping between input and output, and require abstraction, cognition, reasoning, and most broadly knowledge about our world. In other words, it is not possible to solve these problems as long as pattern matching (the most of modern NLP) is not enhanced with some notion of human-like common sense , facts about the world that all humans are expected to know.

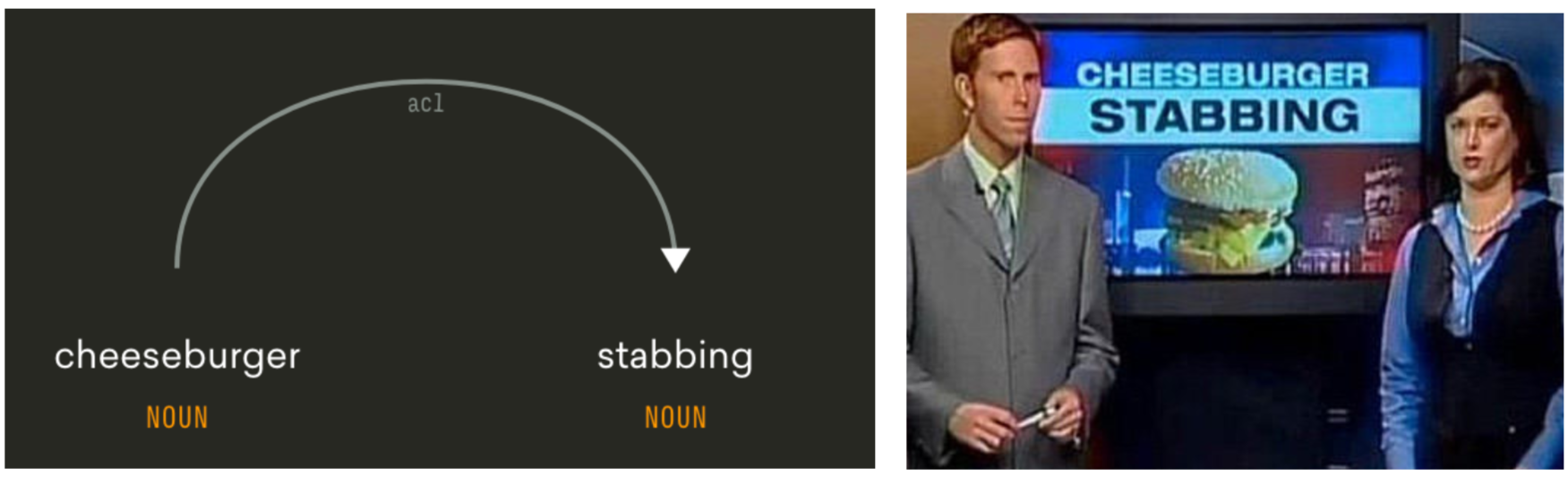

Choi illustrated this with a simple yet effective example of a news headline saying "Cheeseburger stabbing".

Knowing that the head of the acl relation "stabbing" is modified by the dependent noun “cheeseburger”, is not sufficient to understand what does “cheeseburger stabbing” really means. The figure taken from Choi’s presentation .

Plausible questions a machine might ask about this headline are:

Someone stabbed someone else over a cheeseburger?

Someone stabbed a cheeseburger?

A cheeseburger stabbed someone?

A cheeseburger stabbed another cheeseburger?

Machines could eliminate absurd questions you would never ask if they have social and physical common sense. Social common sense could alert machines that the first option is plausible because stabbing someone is bad and thus newsworthy, whereas stabbing a cheeseburger is not. Physical common sense indicates that the third and fourth options are impossible because a cheeseburger cannot be used to stab anything.

In addition to integrating common-sense knowledge, Choi suggests that "understanding by labeling", which is focused on "what is said," should be changed to "understanding by simulation". This simulates the causal effects implied by text and focuses not only on “what is said” but also on “what is not said but implied”. Bosselut and colleagues showcased an example to illustrate why anticipating the implicit causal effects of actions on entities in text is important:

Given instructions such as "add blueberries to the muffin mix, then bake for one half hour," an intelligent agent must be able to anticipate a number of entailed facts, e.g., the blueberries are now in the oven; their “temperature” will increase.

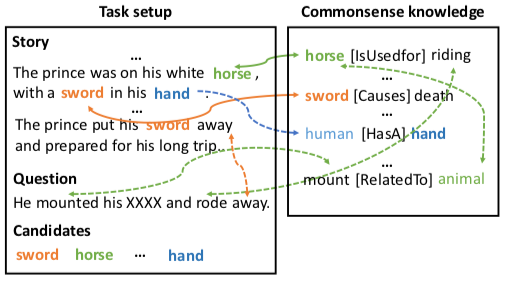

Mihaylov and Frank also recognized that we have to move to understanding by simulation. Their cloze-style reading comprehension model, unlike many other more complex alternatives, handles cases where most of the information to infer answers from is given in a story, but additional common-sense knowledge is needed to predict the answer: horse is an animal , animals are used for riding and mount is related to animals .

A cloze-style reading comprehension case that requires common sense. From Mihaylov and Frank.

A cloze-style reading comprehension case that requires common sense. From Mihaylov and Frank.Alas, we must admit that modern NLP techniques work like "a mouth without a brain," and to change that, we have to provide them with common-sense knowledge and teach them to reason about what is not said but is implied.

"Are RNNs a mouth without a brain?" Slide taken from Choi's presentation

"Are RNNs a mouth without a brain?" Slide taken from Choi's presentation

Direction 3: Evaluate unseen distributions and unseen tasks

The standard methodology for solving a problem using supervised learning consists of the following steps:

- Decide how to label data.

- Label data manually.

- Split labeled data into a training, test and validation set. It is usually advised to ensure that train, dev and test sets have the same distribution if possible .

- Decide how to represent the input.

- Learn the mapping function from input to output.

- Evaluate proposed learning method using an appropriate measure on the test set.

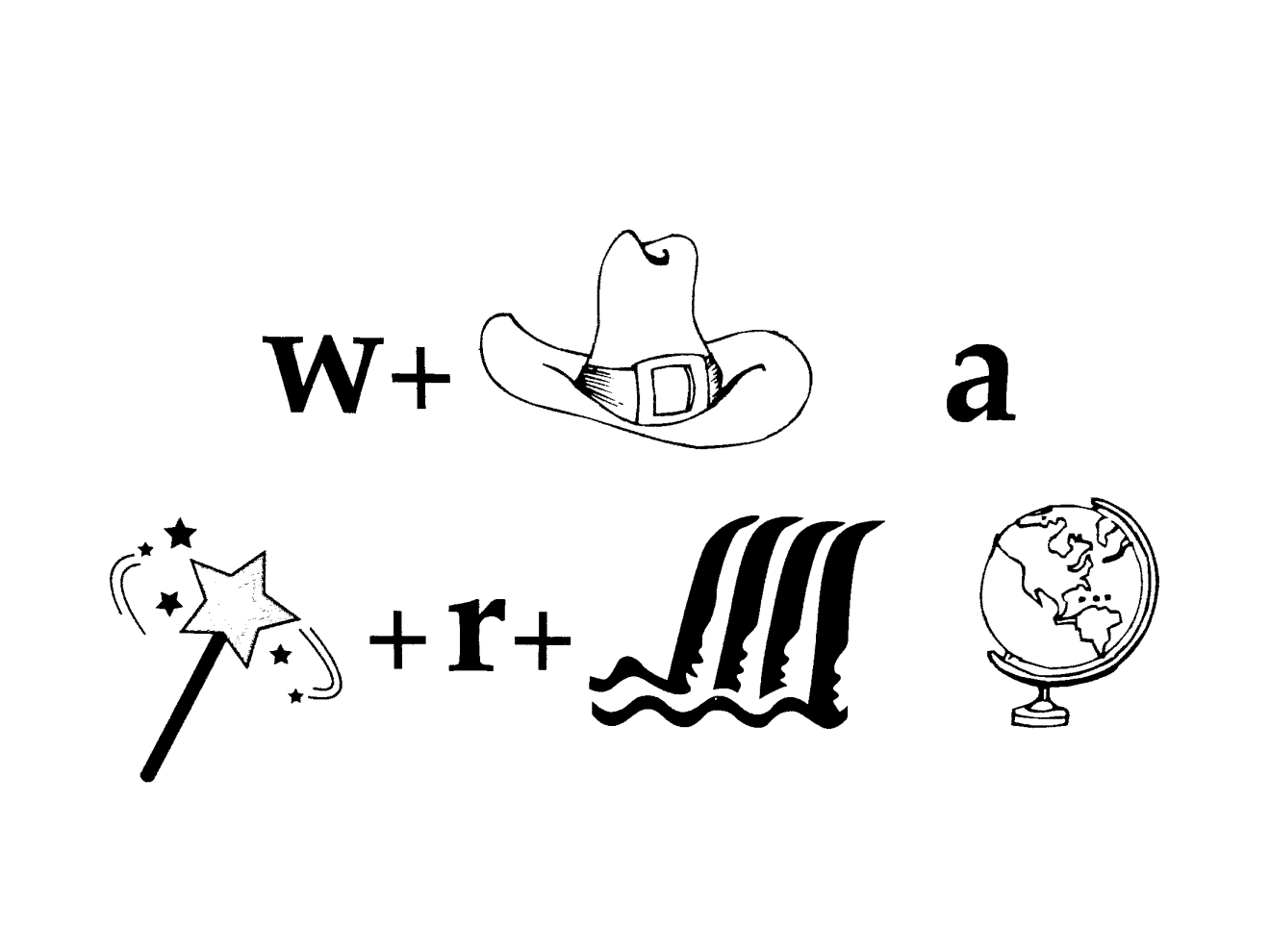

Following this methodology, solving the puzzle below requires labeling data to train a model that identifies units, considers multiple representations and interpretations (pictures, text, layout, spelling, phonetics), and puts it all together. The model determines the “best” global interpretation and satisfies human interpretation of the puzzle.

An example input that is hard to annotate. Figure courtesy of Dan Roth.

An example input that is hard to annotate. Figure courtesy of Dan Roth.In the opinion of Dan Roth :

- The standard methodology is not scalable. We will never have enough annotated data to train all the models for all the tasks we need. To solve the puzzle above, we need annotated training data to overcome at least five different components of the task, or an enormous amount of data to train an end-to-end model. Although some components such as identifying units might be solved using available resources such as ImageNet , this resource is still not sufficient to realize that word "world" is better than word “globe” in this context. Even if someone put a huge annotation effort, the data has to be constantly updated with new pop culture references every day.

Roth draws our attention to the fact that a huge amount of data exists independent of a given task and has hints that are often sufficient to infer supervision signals for a range of tasks. This is where the idea of incidental supervision comes into the play. In his own words ,

Incidental signals refer to a collection of weak signals that exist in the data and the environment, independently of the tasks at hand. These signals are co-related to the target tasks, and can be exploited, along with appropriate algorithmic support, to provide sufficient supervision and facilitate learning. Consider, for example, the task of Named Entity (NE) transliteration – the process of transcribing a NE from a source language to some target language based on phonetic similarity between the entities (e.g., determine how to write Obama in Hebrew). The temporal signal is there, independently of the transliteration task at hand. It is co-related to the task at hand and, together with other signals and some inference, could be used to supervise it without the need for any significant annotation effort.

Percy Liang argues that if train and test data distributions are similar, “any expressive model with enough data will do the job.” However, for extrapolation -- the scenario when train and test data distributions differ -- we must actually design a more “correct” model.

Extrapolating with the same task at train and test time is known as domain adaptation , which has received a lot of attention in recent years.

But incidental supervision, or extrapolating with a task at train time that differs from the task at test time, is less common. Li and collaborators trained a model for text attribute transferwith only the attribute label of a given sentence, instead of a parallel corpus that pairs sentences with different attributes and the same content. To put it another way, they trained a model that does text attribute transfer only after being trained as a classifier to predict the attribute of a given sentence. Similarly, Selsam and collaborators trained a model that learns to solve SAT problems only after being trained as a classifier to predict satisfiability . Notably, both models have a strong inductive bias . The former uses the assumption that attributes are usually manifested in localized discriminative phrases. The latter captures the inductive bias of survey propagation.

Percy challenged the community by asserting:

Every paper, together with evaluation on held-out test sets, should evaluate on a novel distribution or on a novel task because our goal is to solve tasks, not datasets.

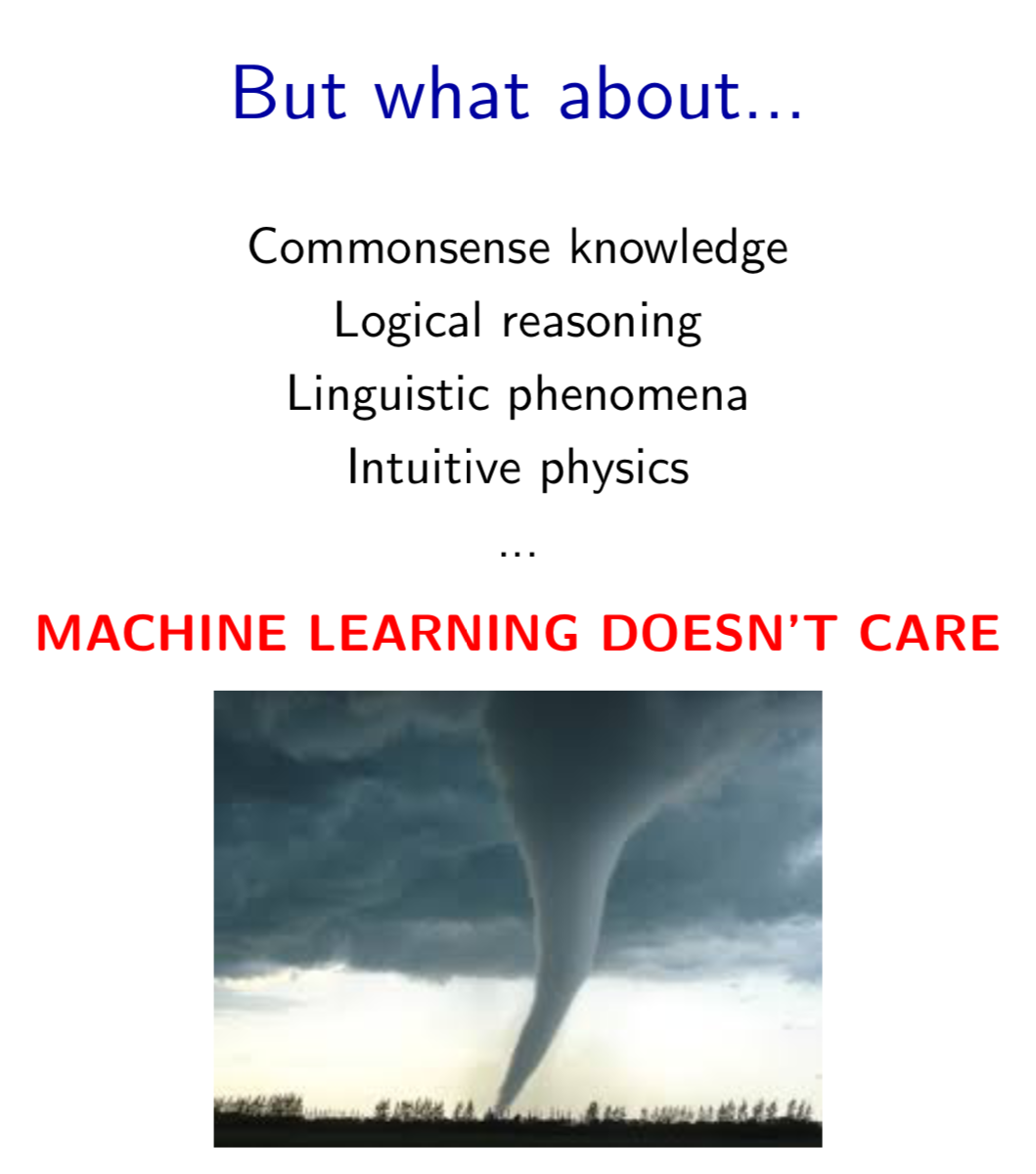

We need to think like machine learning when using machine learning, at least when evaluating, because machine learning is like a tornado that sucks in everything and does not care about common sense, logical reasoning, linguistic phenomena or intuitive physics.

Slide taken from Liang's presentation

Slide taken from Liang's presentation

Workshop attendees wondered whether we want to construct datasets for stress testing — testing beyond normal operational capacity, often to a breaking point, in order to observe the true generalization power of our models.

It is reasonable to expect that a model has a chance to solve harder examples only after it solved easier cases. To know whether easier cases are solved, Liang suggested we might want to categorize examples by their difficulty. Devi Parikh emphasized that only a subset of tasks or datasets are such that you can be certain that solving hard examples is possible if you have solved easier examples. The tasks not in this subset, like visual question answering, don't fit in this framework. It is not clear which image–question pairs a model should be able to solve to be able to solve other, possibly harder image–question pairs. Thus, it might be dangerous if we start defining "harder" examples as the ones that the model cannot answer .

Workshop attendees raised worries that stress test sets could slow down progress. What are good stress tests that will give us better insights into true generalization power and encourage researchers to build more generalizable systems, but will not cause funding to decline and researchers to be stressed with the low results? The workshop did not provide an answer to this question.

Takeaways

The NAACL Workshop on New Forms of Generalization in Deep Learning and Natural Language Processing was the start of a serious re-consideration of language understanding and reasoning capabilities of modern NLP techniques. This important discussion continued at ACL , the Annual Meeting of the Association for Computational Linguistics. Denis Newman-Griffis reported that ACL attendees repeatedly suggested that we need to start thinking about broader kinds of generalization and testing situations that do not mirror the training distribution, and Sebastian Ruder recorded that main themes of the NAACL workshop were also addressed during RepL4NLP , the popular ACL workshop on Representation Learning for NLP.

These events revealed that we are not completely clueless about how to modify our models such that they generalize better. But there is still plenty of room for new suggestions.

We should use more inductive biases , but we have to work out what are the most suitable ways to integrate them into neural architectures such that they really lead to expected improvements.

We have to enhance pattern-matching state-of-the-art models with some notion of human-like common sense that will enable them to capture the higher-order relationships among facts, entities, events or activities. But mining common sense is challenging, so we are in need of new, creative ways of extracting common sense.

Finally, we should deal with unseen distributions and unseen tasks , otherwise “any expressive model with enough data will do the job.” Obviously, training such models is harder and results will not immediately be impressive. As researchers we have to be bold with developing such models, and as reviewers we should not penalize work that tries to do so.

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK