Making SoR Data Readily Available for Business Applications: Challenges and Solu...

source link: https://www.gigaspaces.com/blog/making-sor-data-readily-available-for-business-applications-challenges-and-solutions


Making SoR Data Readily Available for Business Applications: Challenges and Solutions

Business applications are the backbone of the modern economy. From billing clients and overseeing customer relations to managing inventory and orders, it is software that runs much of today’s business functionality.

For these applications to work smoothly, they need continuous access to real-time internal and external information. Business applications are engaged in constant dialogue with the organization’s information storage and retrieval systems. Therefore, business application performance depends on the data integration processes and the systems of record (SoR) architectures.

Companies relying on traditional data architecture typically struggle with their systems of record. Legacy infrastructure is not designed to handle the size and volume of data requests modern applications require, and this slows down the supply of information and limits performance, producing issues such as:

- An inability to use data or extract full value from it

- Limited access to advanced tools (e.g., low code/no code platforms)

- Slower development and deployment of new services

- Difficulty maintaining new processes post-launch

- The need to develop contingencies for unexpected system of record downtime

While most companies are undergoing digital transformation, becoming more distributed and Agile and improving their access to data, it falls to data architects and IT teams to make these projects a reality. Ripping and replacing the entire on-prem legacy infrastructure is a risky proposition, but attempting to run today’s applications on yesterday’s systems of record creates significant business challenges.

The Challenges of Running Business Applications on Legacy Systems of Record Architecture

Legacy systems of record architecture and data integration processes prevent businesses from deploying truly modern applications. The disconnect comes from the shift from monolithic architecture and applications to modern applications structured as a series of microservices.

Previously, applications had a large, interconnected codebase. They contained tightly coupled classes and strong interdependencies. Nowadays, applications are a series of independently deployable services. Businesses segment software into independent microservices to speed up development cycles and reliably deliver large, complicated applications.

Slow systems of record struggle to keep up with modern business applications, leading to significant challenges:

Scalability

Applications hosted on traditional data architecture (i.e., on-prem or in a data center) are difficult and costly to scale. You are generally stuck with the hardware you have (or face lengthy wait times to acquire more), leading to wasted resources during times of low usage and an inability to scale with spikes in activity.

Combine this with the fact that organizations constantly generate more data that must be securely stored, and the cost of just storing data can quickly become prohibitive, let alone making it readily available for business applications.

Slow Development Processes

The size and structure of legacy applications lead to lengthy development cycles. With interconnected architecture, many teams must work together to ensure everything runs as expected. Additionally, with technical debt to overcome, it isn’t easy to integrate modern CI/CD processes into workflows.

In contrast, modern applications facilitate Agile business operations. Teams are separated, working independently on their own microservice. Each of these can be updated and deployed on its own, meaning issues in one microservice do not spread to the entire application.

Security

Relying on legacy applications can also introduce security concerns. Their design may be unable to support more modern features, and they may contain software that is no longer supported, i.e., the developer or community behind it no longer updates the code to protect against newly discovered threats.

The Business Case Against Legacy Systems of Record

As you can see, a slow, outdated system of record is more than just a headache for your IT department. It puts you at a competitive disadvantage, leaving you unable to scale your applications, deploy new services quickly, or guarantee the security of your network.

A report from Forrester found that legacy application development increases costs while failing to support modern cloud-native apps.

In general, legacy infrastructure is hard to maintain and even harder to extend. The performance limitations lead to poor user experiences and a lack of innovation, preventing dev teams from deploying new services or trying new ideas.

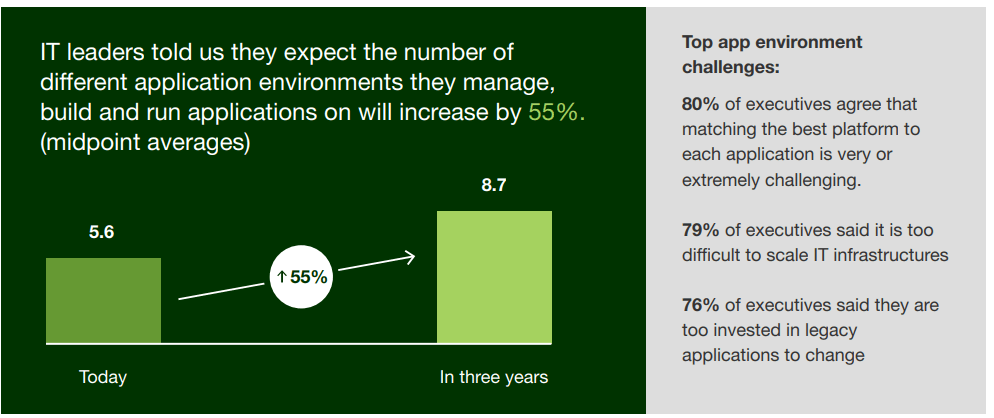

However, the architecture of traditional systems of record is not going anywhere. Forrester polled IT leaders and found that 76% believed they were too invested in legacy applications to change; 80% of executives stated it is too difficult to scale their IT infrastructure.

So, what’s the solution? It is risky and difficult for a business to throw away the entirety of its infrastructure and jump into the unknown. Instead, businesses must find a way to accelerate applications on top of traditional systems. Two of the more popular options for this are data lakes and data warehouses.

Can Data Lakes or Data Warehouses Keep Up With Modern Business Applications?

Data lakes, data warehouses, and semi-structured databases allow businesses to store some or all of their data in a centralized repository. Generally, these types of systems are optimized for complex analytical workloads, or OLAP (OnLine Analytical Processing), used in business intelligence, decision support, and data mining.

However, these systems are not suited to modern applications and event-driven architecture. They struggle with low-latency, high-concurrency API requests. Fast, high-volume workloads typically use OLTP (OnLine Transactional Processing) to handle a large number of parallel transactions while maintaining data integrity.
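The difference between the two workload types can be made concrete with a small sketch. It uses Python's built-in `sqlite3` module purely for illustration; the `orders` table, its columns, and the function names are assumptions for this example and do not come from any particular product. The analytical query scans and aggregates many rows, while the transactional operation touches a single row by key inside a short transaction.

```python
import sqlite3


def build_orders_db() -> sqlite3.Connection:
    """Create a tiny in-memory orders table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, customer TEXT, amount REAL, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders (id, customer, amount, status) VALUES (?, ?, ?, ?)",
        [
            (1, "acme", 120.0, "open"),
            (2, "acme", 80.0, "shipped"),
            (3, "globex", 200.0, "open"),
        ],
    )
    conn.commit()
    return conn


def revenue_by_customer(conn: sqlite3.Connection) -> dict:
    """OLAP-style: scan and aggregate many rows for reporting."""
    rows = conn.execute(
        "SELECT customer, SUM(amount) FROM orders "
        "GROUP BY customer ORDER BY customer"
    ).fetchall()
    return dict(rows)


def ship_order(conn: sqlite3.Connection, order_id: int) -> bool:
    """OLTP-style: a short transaction that updates one row by primary key."""
    with conn:  # commits on success, rolls back if an exception is raised
        cur = conn.execute(
            "UPDATE orders SET status = 'shipped' "
            "WHERE id = ? AND status = 'open'",
            (order_id,),
        )
    return cur.rowcount == 1  # False if the order was missing or already shipped
```

A business application typically issues many calls like `ship_order` concurrently and expects each to return in milliseconds, which is a very different access pattern from the occasional heavy `revenue_by_customer` style of query.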

Replacing a slow system of record with data lakes and data warehouses does not offer the speed needed to run modern digital applications. Additionally, because applications frequently reside in external environments, organizations often choose to store a copy of their data on a DMZ sub-network or a public cloud.

Again, these systems are not designed to handle high volumes of requests in a short period. When the number of app users grows, or an application’s demand becomes more complex, the system’s performance rapidly degrades. A high volume of app requests strangles data lake and data warehouse performance, causing bottlenecks that produce high latency or potentially the loss of service.

Another problem occurs when data is spread across both traditional and modern data architecture. The overall response remains as slow as the slowest link, and downtime in one part of the data store can result in the loss of the entire service.
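A back-of-the-envelope sketch shows why the slowest link dominates. It assumes a request needs an answer from every store, that the stores are queried in parallel, and that their failures are independent; the function names and any figures passed in are illustrative assumptions, not measurements.

```python
from math import prod


def combined_latency_ms(latencies_ms: list) -> float:
    """Parallel fan-out: the request completes only when the slowest store answers."""
    return max(latencies_ms)


def combined_availability(availabilities: list) -> float:
    """If every store must be up, availabilities multiply (independent failures assumed)."""
    return prod(availabilities)
```

For example, pairing a fast store with one that takes 900 ms still yields a 900 ms response, and two stores at 99.9% and 99% availability give a combined availability of roughly 98.9%, lower than either store alone.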

Finally, data is uploaded to data lakes and data warehouses in batches or while they are offline. This prevents them from becoming a true real-time source of information, so they end up serving out-of-date data. To overcome this issue, some developers began introducing small databases adjacent to business applications called “data marts” or “local cache.”

Unfortunately, these fail to solve the latency problems prevalent when using data lakes and data warehouses. They also create significant data duplication, reducing the efficiency of a network or, even worse, ruining data integrity across organizations with multiple systems of record containing conflicting information.

When it comes to transactional workloads, speed is paramount. Unfortunately, data lakes and data warehouses fail to deliver the necessary performance. Thankfully, other solutions are available that take advantage of modern data architecture to ensure fast, readily available information for business applications.

Rapidly Launching Digital Services With a Modern Data Architecture

How can organizations reorganize and modernize their data architecture to achieve the low-latency, scalable performance needed for current applications?

Listed below are three fundamental shifts data architects need to consider when designing modern data stores.

Composable Architecture

Composable architecture is the key feature of a new business paradigm aiming to break down applications and processes into smaller, more manageable processes to enable the parallel design of technology. It focuses on business acceleration by incorporating greater modularity, autonomy, orchestration, and discovery into business operations.

The bedrock of composable architecture is modernizing applications into a series of API-powered services. This approach to development accelerates the delivery of new applications while also reducing costs.

Applications are no longer large monolithic pieces of software with a complicated web of interdependent functions. They are composed of small agnostic building blocks that operate independently from each other. These blocks can often be recycled and reused for multiple applications, further reducing development time.

To ensure scalability and performance, applications are built to take advantage of unified data stores integrating different sources and formats to best support business operations.

While the bedrock of composable architecture is enterprise IT, the broader composable business model can be applied across businesses, including how the workforce is organized. Like the transition from monolithic apps to a collection of clearly defined microservices, composable architecture utilizes smaller teams that contain a range of expertise to enhance flexibility and resiliency.

Cross-team collaboration (the sharing of ideas and knowledge) is also encouraged, as are flatter organizational structures. Allowing less experienced employees to contribute reinforces the concept of “best idea wins” regardless of its source.

The Decoupling Architectural Principle

The decoupling architectural principle defines development processes for microservices applications, including zero-trust security models, data separated per application, and more. Decoupling also means minimizing dependencies across different systems. Removing these dependencies allows for:

- Simpler system changes

- Standardization across API-based system integrations

- A centralized hub for new services to be built

For decoupling to be successful, organizations must build an API layer that is rapidly served by accurate and up-to-date business data.
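One way to picture this decoupling is an API layer that depends only on a small data-access interface rather than on a specific system of record. The following is a minimal sketch under that assumption; `Customer`, `CustomerStore`, `InMemoryCustomerStore`, and `CustomerApi` are hypothetical names invented for illustration, and the dictionary responses stand in for real HTTP responses.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass(frozen=True)
class Customer:
    customer_id: str
    name: str


class CustomerStore(Protocol):
    """The only thing the API layer knows about where data lives."""

    def get(self, customer_id: str) -> Optional[Customer]: ...


class InMemoryCustomerStore:
    """Stand-in for a fast data layer; a legacy-backed store could satisfy the same interface."""

    def __init__(self, customers: list) -> None:
        self._by_id = {c.customer_id: c for c in customers}

    def get(self, customer_id: str) -> Optional[Customer]:
        return self._by_id.get(customer_id)


class CustomerApi:
    """API layer: shapes responses and never talks to a system of record directly."""

    def __init__(self, store: CustomerStore) -> None:
        self._store = store

    def get_customer(self, customer_id: str) -> dict:
        customer = self._store.get(customer_id)
        if customer is None:
            return {"status": 404, "body": {"error": "customer not found"}}
        return {
            "status": 200,
            "body": {"id": customer.customer_id, "name": customer.name},
        }
```

Because `CustomerApi` accepts anything that satisfies `CustomerStore`, the backing store can be swapped or upgraded without changing the services built on top of the API.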

Unfortunately, most legacy systems of record were designed to serve internal operations. Now they must scale up to serve thousands or more customers expecting high-performance services. While an employee may tolerate high latency during data retrieval, customers have other options and will quickly switch to a competitor after a poor user experience.

Additionally, organizations often update their data stores with heavy batch operations during quieter business hours. These batch updates dramatically affect performance, slowing down systems.

Untangling Your Architecture With a Digital Integration Hub

Finally, organizations need to untangle their IT architecture to create a simple and fast flow of information from systems of record to applications. The best solution available is to build a new high-performance data layer between the legacy data stores and the modern applications, known as a Digital Integration Hub (DIH).

A Digital Integration Hub is a digital transformation solution that powers business acceleration without the risk of ripping and replacing legacy infrastructure. It combines all the data held across a company’s systems into a unified, fast, real-time data store, making it the ideal technology to power modern applications and the rapid launch of new digital services.

Removing the link between digital applications and legacy infrastructure and building a new high-performance data layer solves data access problems while removing latency issues associated with data lakes and data warehouses.

Beyond that, Digital Integration Hubs provide real-time information without the need for downtime or batch updates. Using embedded change data capture (CDC) tools, Digital Integration Hubs replicate any changes made to the original systems of record. Plus, reconciliation mechanisms rectify discrepancies, ensuring there is always a single source of truth across the organization, reflected by the Digital Integration Hub.
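The replication and reconciliation ideas described above can be sketched in a few lines. This is a simplified illustration and not the API of any specific Digital Integration Hub product: `ChangeEvent`, `HubStore`, and `reconcile` are hypothetical names, the hub is modeled as an in-memory dictionary, and the system of record is assumed to be authoritative whenever the two disagree.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One captured change from a system of record (hypothetical event shape)."""

    op: str  # "upsert" or "delete"
    key: str
    value: Optional[dict] = None


class HubStore:
    """In-memory stand-in for the hub's data layer."""

    def __init__(self) -> None:
        self.rows = {}

    def apply(self, event: ChangeEvent) -> None:
        """Replicate a single change as it arrives, with no batch window."""
        if event.op == "upsert":
            self.rows[event.key] = dict(event.value or {})
        elif event.op == "delete":
            self.rows.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")


def reconcile(hub: HubStore, system_of_record: dict) -> list:
    """Repair any drift in the hub and return the keys that were fixed.

    Assumes the system of record is the source of truth.
    """
    repaired = []
    for key in sorted(set(hub.rows) | set(system_of_record)):
        if hub.rows.get(key) != system_of_record.get(key):
            if key in system_of_record:
                hub.rows[key] = dict(system_of_record[key])
            else:
                del hub.rows[key]
            repaired.append(key)
    return repaired
```

In this sketch, `apply` keeps the hub current as individual changes stream in, while a periodic `reconcile` pass catches anything the change stream missed, such as a dropped event.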

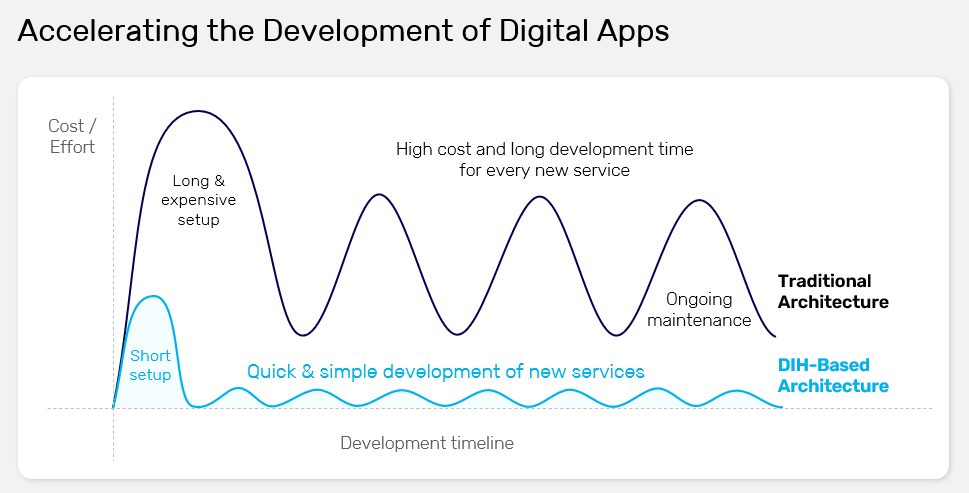

Digital Integration Hubs reduce the time, money, and effort it takes to launch new services. Rather than assigning engineers to find workarounds for legacy infrastructures, you can set them free to design innovative applications and build new valuable services. What previously required a long and expensive set-up followed by a lengthy development cycle and ongoing maintenance is reduced to quick and simple iterations.

Digital transformation powered by Digital Integration Hub architecture brings a whole new world of possibilities to your organization:

- High-performance data anywhere, any time

- Shorter development cycles and time to market

- Reduced cost to launch new services

- Run systems on any environment (cloud, on-prem, hybrid, etc.)

- Scalable services that won’t break with a spike in activity

- A single, unified repository representing all of your company’s data

Summary

The modern business world is defined by data. The organizations that thrive are those that learn to manage their data and extract the most value from it. This requires removing the burden of legacy systems of record and delivering readily available data to all your applications.

A Digital Integration Hub does just that without the risk of ripping and replacing all of your infrastructure. It creates a seamless, high-performance, scalable data store to power today’s and tomorrow’s apps and services.

The next step is to learn how to transition from traditional to modern data architecture and implement your new Digital Integration Hub data store. Check out our blog on these two next steps.
