Delta – a Data Synchronization and Enrichment Platform by Netflix

source link: https://www.infoq.com/news/2019/11/data-synchronization-netflix/


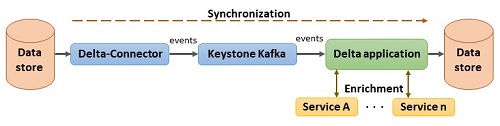

Large systems often use numerous data stores, each serving a specific purpose such as storing a certain type of data, advanced search, or caching. There is sometimes a need to keep multiple data stores in sync, and to enrich data in a store by calling external services. To address these needs, Netflix has created Delta, an eventually consistent, event-driven data synchronization and enrichment platform. In a blog post, the team behind Delta, all senior software engineers at Netflix, describes the challenges and gives an overview of Delta's design.

There are several existing patterns and solutions for keeping two data stores in sync, such as dual writes, using log tables, and distributed transactions. The team saw issues and limitations with these solutions though, and therefore decided to create the Delta platform.

Besides synchronizing data stores without the limitations of existing solutions, Delta is designed to allow data to be enriched by calling other services. During synchronization, change data capture (CDC) events are read from a data store using a Delta connector and Keystone (Netflix's data streaming pipeline using Kafka), and stored in a topic. A Delta application then reads the events from the topic, calls other services to collect more data, and finally stores the enriched data in another data store. The team claims that the whole process is almost real-time, which means that data stores depending on synchronization are updated almost immediately after the original store.
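The flow described above can be pictured with a minimal Python sketch. It is not Delta's code: the in-memory queue stands in for the Kafka topic, and `ChangeEvent`, `run_delta_app`, and `add_director_name` are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass
from queue import Queue
from typing import Callable, Dict, Optional


@dataclass
class ChangeEvent:
    """Hypothetical CDC event: one row change captured from the source store."""
    key: str
    row: Optional[dict]  # None means the row was deleted


def run_delta_app(
    topic: Queue,
    enrich: Callable[[dict], dict],
    derived_store: Dict[str, dict],
) -> None:
    """Drain the topic, enrich each change, and apply it to the derived store."""
    while not topic.empty():
        event = topic.get()
        if event.row is None:
            derived_store.pop(event.key, None)  # propagate the delete
        else:
            derived_store[event.key] = enrich(event.row)


def add_director_name(row: dict) -> dict:
    """Stand-in for a call to an external service during enrichment."""
    directors = {7: "A. Director"}  # assumed lookup data
    return {**row, "director_name": directors.get(row["director_id"], "unknown")}


# The queue plays the role of the topic that the connector writes to.
topic: Queue = Queue()
topic.put(ChangeEvent("movie-1", {"title": "Example", "director_id": 7}))
topic.put(ChangeEvent("movie-2", {"title": "Gone", "director_id": 9}))
topic.put(ChangeEvent("movie-2", None))

search_index: Dict[str, dict] = {}
run_delta_app(topic, add_director_name, search_index)
print(search_index)
```

Because events are applied in the order they were captured, the derived store converges on the state of the source store, which is the eventual consistency the article refers to.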

Delta-Connector is a CDC service that captures changes from both the transaction logs and dumps of a data store. Dumps are used because the team has found that logs typically do not contain the full history of changes. The captured changes are normally serialized as Delta events, which relieves consumers from handling different types of changes. The team notes that Delta also offers some advanced features, including the ability to trigger manual dumps at any time, no locks taken on tables, and high availability through the use of standby instances.
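To illustrate why a single event format helps consumers, here is a small Python sketch that maps log entries and dump rows to one shape. The article does not describe the real Delta event schema, so `DeltaEvent`, its fields, and the input dictionaries are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class DeltaEvent:
    """Hypothetical unified event; the real schema is not given in the article."""
    table: str
    key: str
    operation: str  # "upsert" or "delete"
    row: Optional[dict]


def from_log(entries: Iterable[dict]) -> Iterator[DeltaEvent]:
    """Map transaction-log entries (assumed shape) to unified events."""
    for entry in entries:
        if entry["op"] in ("INSERT", "UPDATE"):
            yield DeltaEvent(entry["table"], str(entry["pk"]), "upsert", entry["after"])
        elif entry["op"] == "DELETE":
            yield DeltaEvent(entry["table"], str(entry["pk"]), "delete", None)


def from_dump(table: str, rows: Iterable[dict], pk: str = "id") -> Iterator[DeltaEvent]:
    """Map rows from a full dump to the same event type."""
    for row in rows:
        yield DeltaEvent(table, str(row[pk]), "upsert", row)


log_entries = [
    {"op": "UPDATE", "table": "movies", "pk": 1, "after": {"id": 1, "title": "New title"}},
    {"op": "DELETE", "table": "movies", "pk": 2, "after": None},
]
dump_rows = [{"id": 3, "title": "Old movie"}]

events = list(from_log(log_entries)) + list(from_dump("movies", dump_rows))
for event in events:
    print(event.operation, event.key)
```

A consumer written against `DeltaEvent` only needs to handle upserts and deletes, regardless of whether a change came from the log or from a dump.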

The transport layer for Delta events is built on the messaging service in Netflix's Keystone platform. To ensure that events reliably arrive in derived stores, the team offers a purpose-built Kafka cluster whose configuration, increased replication factor, and handling of broker instances are chosen to better meet this requirement. In extreme situations events can still be lost, so the team uses a message tracing system to detect this.
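The article does not explain how the tracing system works. One simple way to think about loss detection, shown here purely as an assumption, is to record event identifiers on both the producing and the consuming side and compare them; `find_lost_events` is a hypothetical helper, not part of Delta or Keystone.

```python
from typing import Iterable, List


def find_lost_events(produced_ids: Iterable[str], consumed_ids: Iterable[str]) -> List[str]:
    """Return the IDs that were produced but never seen by the consumer."""
    consumed = set(consumed_ids)
    return [event_id for event_id in produced_ids if event_id not in consumed]


produced = ["e1", "e2", "e3", "e4"]
consumed = ["e1", "e3", "e3", "e4"]  # "e3" was delivered twice, "e2" never arrived

lost = find_lost_events(produced, consumed)
print(lost)  # ['e2']
```

Duplicate deliveries do not count as losses in this sketch; only identifiers missing on the consuming side are reported.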

The processing layer in Delta is based on Netflix's SPaaS platform, which provides an Apache Flink integration with their ecosystem. The framework provides functionality for common processing needs and uses an annotation-driven domain-specific language (DSL) to define a process. Benefits mentioned include letting users focus on business logic, simplified operations, and optimizations that are transparent to users.

Delta has been running in production for over a year, and the development team believes it has been crucial for many Netflix Studio applications. It has simplified teams' implementation of use cases like search indexing, data warehousing, and event-driven workflows.

In a follow-up, the authors intend to describe the key components of Delta in more detail.

There are several tools available for CDC, one being Debezium which was presented at the MicroXchg Conference 2019 in Berlin.
