24-Point Enterprise SEO Audit For Large Sites & Organizations

source link: https://www.searchenginejournal.com/enterprise-seo-audit/451134/


Fixing Google Search Console’s Coverage Report ‘Excluded Pages’

Don't let your content go unnoticed. Learn about the Excluded section of the Google Search Console Index Coverage report and how to fix the statuses it reports.


Google Search Console lets you look at your website through Google’s eyes.

You get information about the performance of your website and details about page experience, security issues, crawling, or indexation.

The Excluded part of the Google Search Console Index Coverage report provides information about the indexing status of your website’s pages.

Learn why some of the pages of your website land in the Excluded report in Google Search Console – and how to fix it.

What Is The Index Coverage Report?

The Google Search Console Coverage report shows detailed information about the index status of the web pages of your website.

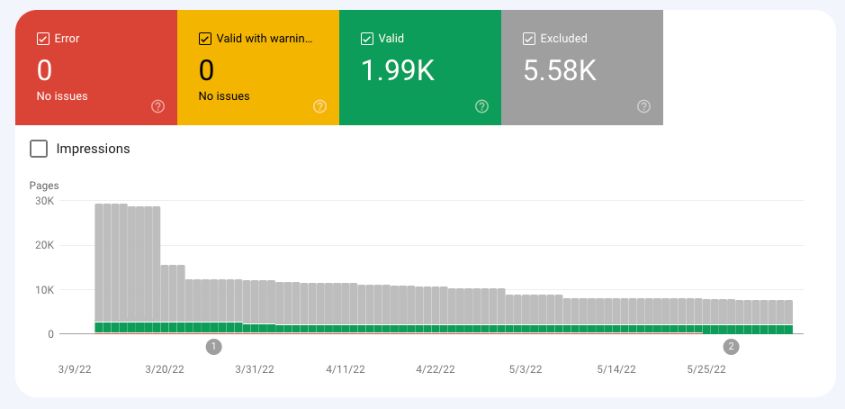

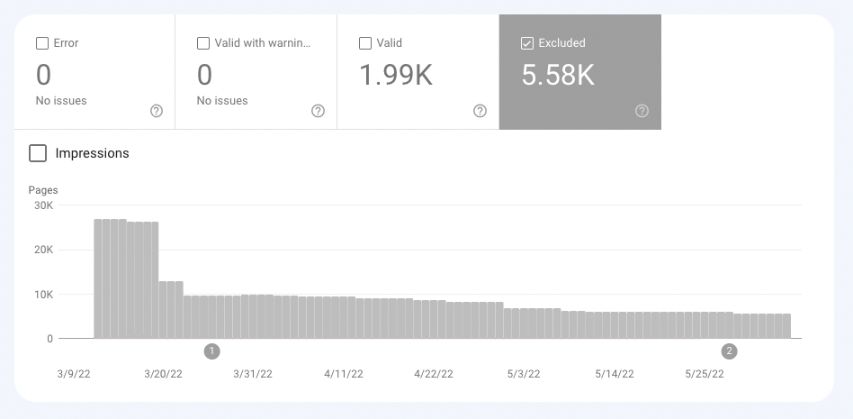

Your web pages can go into one of the following four buckets:

- Error: The pages that Google cannot index. You should review this report because Google thinks you may want these pages indexed.

- Valid with warnings: The pages that Google indexes, but there are some issues you should resolve.

- Valid: The pages that Google indexes.

- Excluded: The pages that are excluded from the index.

What Are Excluded Pages?

Google does not index pages in the Error and Excluded buckets.

The main difference between the two is:

- Google thinks pages in Error should be indexed but cannot because of an error you should review. For example, non-indexable pages submitted through an XML sitemap fall under Error.

- Google thinks pages in the Excluded bucket should indeed be excluded, and this is your intention. For example, non-indexable pages not submitted to Google will appear in the Excluded report.

Screenshot from Google Search Console, May 2022

However, Google doesn’t always get it right, and pages that should be indexed sometimes end up in Excluded.

Fortunately, Google Search Console provides the reason for placing pages in a specific bucket.

This is why it’s a good practice to carefully review the pages in all four buckets.

Let’s now dive into the Excluded bucket.

Possible Reasons For Excluded Pages

There are 15 possible reasons your web pages are in the Excluded group. Let’s take a closer look at each one.

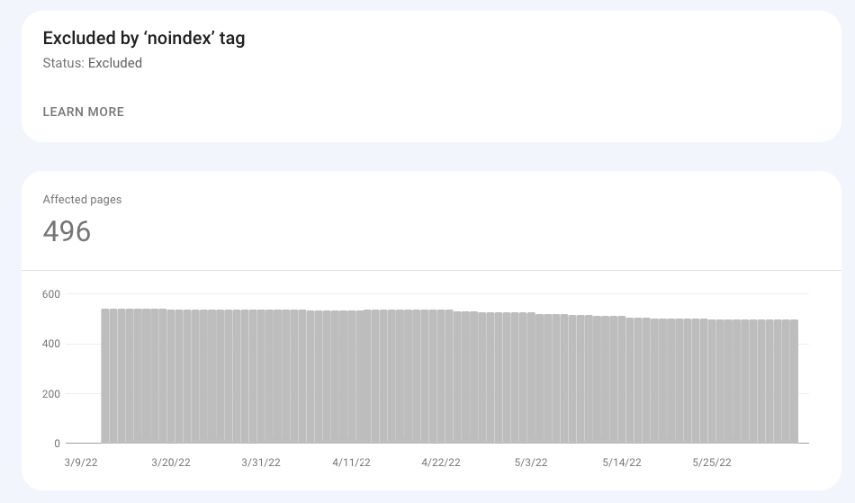

Excluded by “noindex” tag

These are the URLs that have a “noindex” tag.

Google thinks you actually want to exclude these pages from indexation because you don’t list them in the XML sitemap.

These may be, for example, login pages, user pages, or search result pages.

Suggested actions:

- Review these URLs to be sure you want to exclude them from Google’s index.

- Check whether a “noindex” tag is actually still present on those URLs.
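To spot-check these URLs in bulk, a minimal Python sketch like the following can look for “noindex” in both the robots meta tag and the X-Robots-Tag HTTP header. The function name `has_noindex` is hypothetical, and it assumes you have already fetched each page’s HTML and response headers (for example with `urllib.request.urlopen`).

```python
import re
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(values.get("content", "").lower())


def has_noindex(html, headers=None):
    """True if a robots meta tag or X-Robots-Tag header contains noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = list(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.append(value.lower())
    for directive in directives:
        # Split "noindex, follow" or "googlebot: noindex" into individual tokens.
        tokens = re.split(r"[,\s:]+", directive)
        if "noindex" in tokens or "none" in tokens:
            return True
    return False
```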

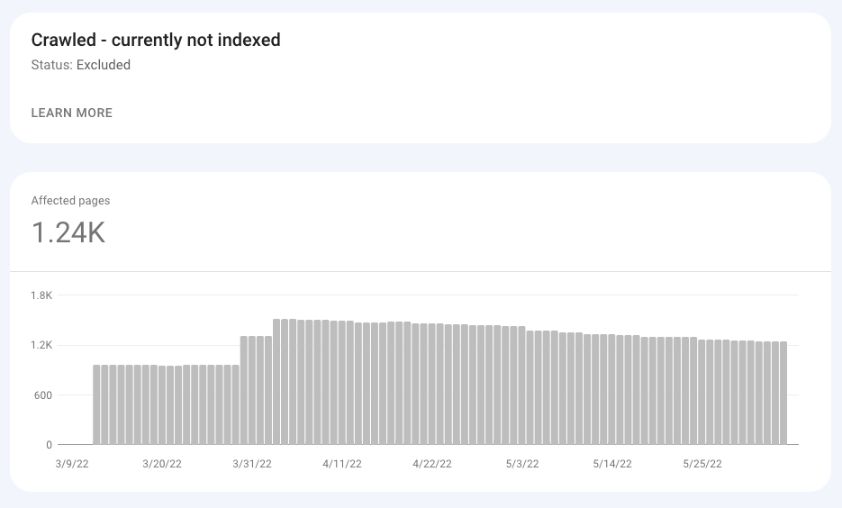

Crawled – Currently Not Indexed

Google has crawled these pages and still has not indexed them.

As Google says in its documentation, the URL in this bucket “may or may not be indexed in the future; no need to resubmit this URL for crawling.”


Many SEO pros have noticed that when many normal, indexable pages land under Crawled – currently not indexed, the site may have serious quality issues.

This could mean Google has crawled these pages and does not think they provide enough value to index.

Suggested actions:

- Review your website in terms of quality and E-A-T.

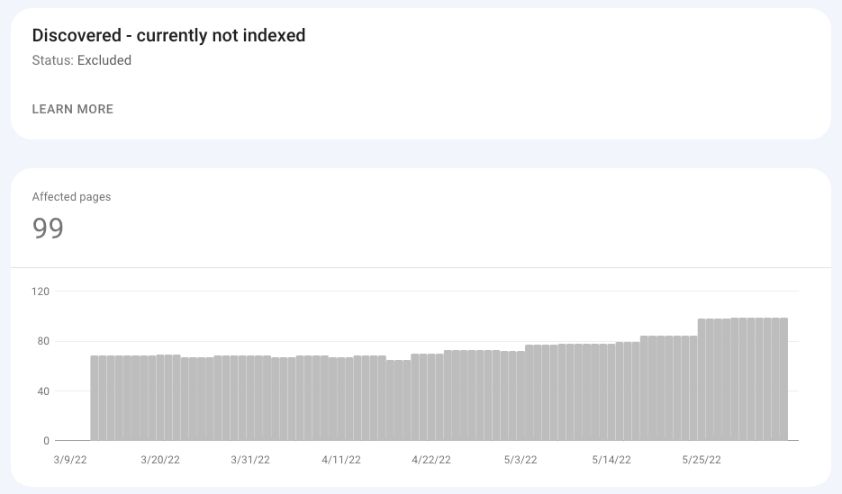

Discovered – Currently Not Indexed

As Google documentation says, the page under Discovered – currently not indexed “was found by Google, but not crawled yet.”

Typically, Google wanted to crawl the page but postponed it to avoid overloading the server. A huge number of pages in this bucket may mean your site has crawl budget issues.

Suggested actions:

- Check the health of your server.

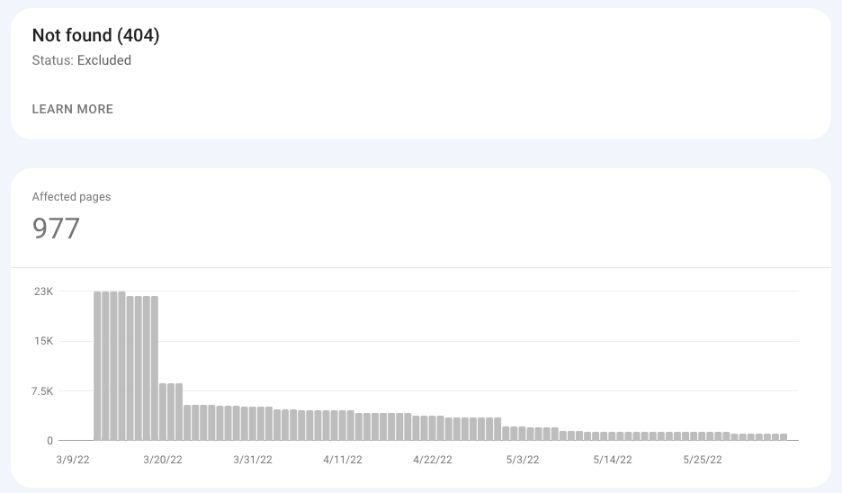

Not Found (404)

These are the pages that returned status code 404 (Not Found) when requested by Google.

These URLs were not submitted to Google (e.g., in an XML sitemap); instead, Google discovered them on its own (e.g., through another website that linked to an old page deleted a long time ago).

Suggested actions:

- Review these pages and decide whether to implement a 301 redirect to a working page.
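When many old URLs return 404, a rough first pass is to match each one to the most similar live URL and review the suggestions by hand before creating any 301 redirects. This sketch uses Python’s `difflib` string similarity as a stand-in for a proper content mapping; `suggest_redirects` and the 0.6 cutoff are illustrative assumptions.

```python
import difflib
from urllib.parse import urlparse


def suggest_redirects(not_found_urls, live_urls, cutoff=0.6):
    """Map each 404 URL to the most similar live URL (by path), or None if nothing is close."""
    live_by_path = {urlparse(u).path: u for u in live_urls}
    suggestions = {}
    for url in not_found_urls:
        path = urlparse(url).path
        match = difflib.get_close_matches(path, list(live_by_path), n=1, cutoff=cutoff)
        suggestions[url] = live_by_path[match[0]] if match else None
    return suggestions
```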

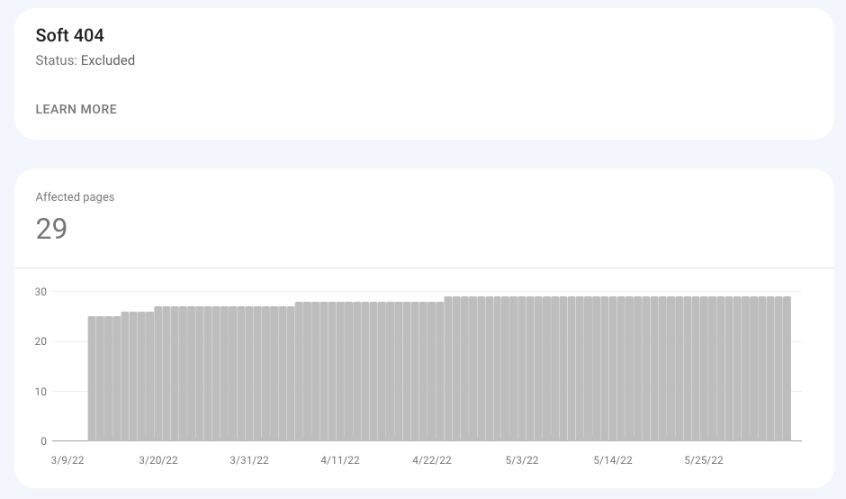

Soft 404

Soft 404, in most cases, is an error page that returns status code 200 (OK).

It can also be a thin page that contains little to no content and uses words like “sorry,” “error,” or “not found.”

Suggested actions:

- In the case of an error page, make sure to return status code 404.

- For thin content pages, add unique content to help Google recognize this URL as a standalone page.
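Google does not publish how it classifies soft 404s, so the following is only a hypothetical heuristic for triaging your own pages: it flags 200 responses that are empty, or both thin and worded like an error page. The phrase list and the 50-word threshold are assumptions to tune for your site.

```python
import re

# Illustrative phrases; extend with the wording your own error templates use.
ERROR_PHRASES = ("not found", "sorry", "error", "no longer available")


def looks_like_soft_404(status, body_text, min_words=50):
    """Flag 200 (OK) responses that are empty, or thin and worded like an error page."""
    if status != 200:
        return False  # a real 404/410 is not a soft 404
    text = body_text.lower()
    word_count = len(re.findall(r"\w+", text))
    if word_count == 0:
        return True
    is_thin = word_count < min_words
    has_error_wording = any(phrase in text for phrase in ERROR_PHRASES)
    return is_thin and has_error_wording
```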

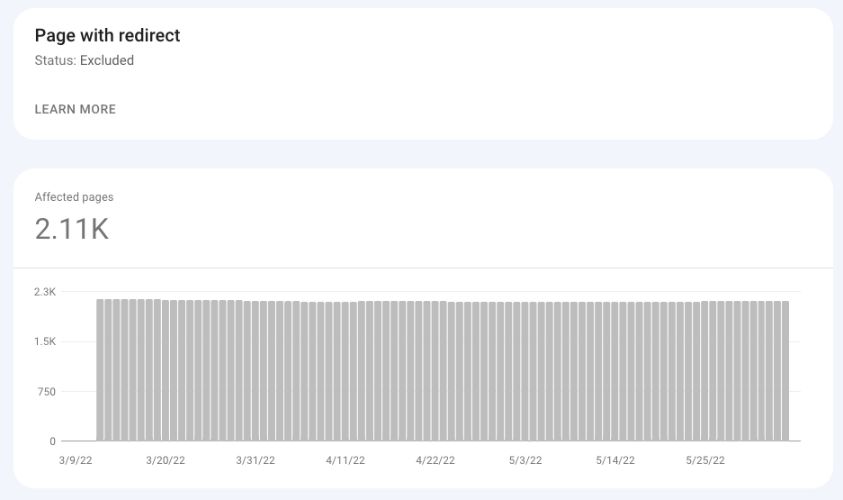

Page With Redirect

All redirected pages on your website will go to the Excluded bucket, where you can see all redirected pages that Google detected on your website.

Suggested actions:

- Review the redirected pages to make sure the redirects were implemented intentionally.

- Some WordPress plugins automatically create redirects when you change the URL, so you may want to review these occasionally.
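If your crawler can export redirects as a source-to-target map, a short script can resolve each chain and flag loops, which makes unintended or multi-hop redirects easier to review. `resolve_redirects` is a hypothetical helper, and the `{source: target}` input format is an assumption.

```python
def resolve_redirects(redirects, max_hops=10):
    """Follow each source through a {source: target} map.

    Returns {source: {"final": url, "hops": n, "loop": bool}}; hops > 1 means a chain.
    """
    report = {}
    for source in redirects:
        visited = {source}
        current, hops, is_loop = source, 0, False
        while current in redirects and hops < max_hops:
            current = redirects[current]
            hops += 1
            if current in visited:
                is_loop = True  # the chain returned to a URL it already passed through
                break
            visited.add(current)
        report[source] = {"final": current, "hops": hops, "loop": is_loop}
    return report
```

Chains with more than one hop are usually worth collapsing into a single redirect to the final URL.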

Duplicate Without User-Selected Canonical

Google thinks these URLs are duplicates of other URLs on your website and, therefore, should not be indexed.

You did not set a canonical tag for these URLs, and Google selected the canonical based on other signals.

Suggested actions:

- Inspect these URLs to check what canonical URLs Google has selected for these pages.
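Before comparing with the Google-selected canonical shown in the URL Inspection tool, it helps to confirm what, if anything, the page itself declares. A minimal sketch, assuming you have the page HTML; `declared_canonical` is a hypothetical name, and treating multiple canonical tags as “none” is a conservative auditing choice, not a statement of how Google resolves them.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects href values of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        values = dict(attrs)
        if "canonical" in (values.get("rel") or "").lower().split():
            self.canonicals.append(values.get("href"))


def declared_canonical(page_url, html):
    """Return the absolute canonical URL declared in the HTML, or None if absent or ambiguous."""
    parser = CanonicalParser()
    parser.feed(html)
    hrefs = [h for h in parser.canonicals if h]
    if len(hrefs) != 1:
        return None  # no canonical tag, or several conflicting ones
    return urljoin(page_url, hrefs[0])  # resolve relative hrefs against the page URL
```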

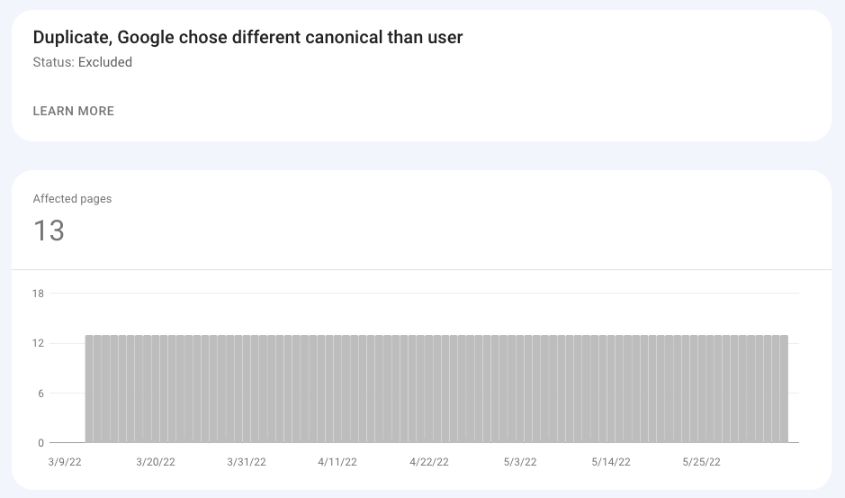

Duplicate, Google Chose Different Canonical Than User

In this case, you declared a canonical URL for the page, but even so, Google selected a different URL as the canonical. As a result, the Google-selected canonical is indexed, and the user-selected one is not.

Suggested actions:

- Inspect the URL to check what canonical Google selected.

- Analyze possible signals that made Google choose a different canonical (e.g., external links).

Duplicate, Submitted URL Not Selected As Canonical

Unlike the previous status, here you submitted the URL to Google for indexing without declaring its canonical address, and Google thinks a different URL would make a better canonical.

As a result, the Google-selected canonical is indexed rather than the submitted URL.

Suggested actions:

- Inspect the URL to check what canonical Google has selected.

Alternate Page With Proper Canonical Tag

These are simply the duplicates of the pages that Google recognizes as canonical URLs.

These pages have canonical tags that point to the correct canonical URL.

Suggested actions:

- In most cases, no action is required.

Blocked By Robots.txt

These are the pages blocked by robots.txt.

When analyzing this bucket, keep in mind that Google can still index these pages (and display them in an “impaired” way) if Google finds a reference to them on, for example, other websites.

Suggested actions:

- Verify if these pages are blocked using the robots.txt tester.

- If you want to remove these pages from the index, add a “noindex” tag and remove them from robots.txt (Google can only see the “noindex” tag on pages it is allowed to crawl).
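Python’s standard-library `urllib.robotparser` can check a list of URLs against your robots.txt rules in bulk. Note that it implements simple prefix matching and does not support Google’s `*` and `$` wildcards, so treat it as a rough check and confirm edge cases in Google’s own tools. `blocked_urls` and the sample rules are illustrative.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules; in practice, read your live robots.txt file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]
```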

Blocked By Page Removal Tool

This report lists the pages whose removal was requested through the Removals tool.

Keep in mind that this tool removes the pages from search results only temporarily (90 days) and does not remove them from the index.

Suggested actions:

- Verify whether the pages submitted via the Removals tool should only be removed temporarily or should instead have a “noindex” tag.

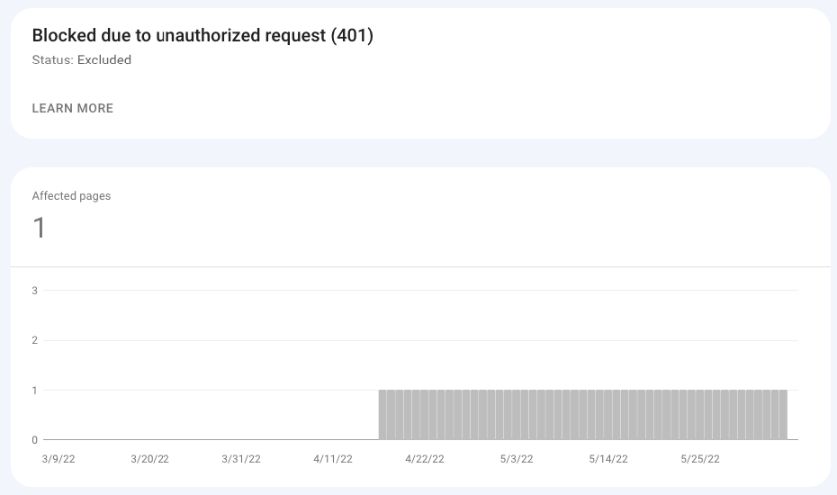

Blocked Due To Unauthorized Request (401)

In the case of these URLs, Googlebot was not able to access the pages because of an authorization request (401 status code).

Unless these pages should be available without authorization, you don’t need to do anything.

Google is simply informing you about what it encountered.

Suggested actions:

- Verify if these pages should actually require authorization.

Blocked Due To Access Forbidden (403)

This status code is usually the result of some server error.

403 is returned when credentials provided are not correct, and access to the page could not be granted.

As Google documentation states:

“Googlebot never provides credentials, so your server is returning this error incorrectly. This error should either be fixed, or the page should be blocked by robots.txt or noindex.”

What Can You Learn From Excluded Pages?

Sudden and huge spikes in a specific bucket of Excluded pages may indicate serious site issues.

Here are three examples of spikes that may indicate severe problems with your website:

- A huge spike in Not Found (404) pages may indicate an unsuccessful migration in which URLs were changed but redirects to the new addresses were not implemented. This can also happen when, for example, an inexperienced person changes the slugs of blog posts and, as a result, the URLs of all posts.

- A huge spike in Discovered – currently not indexed or Crawled – currently not indexed may indicate that your site has been hacked. Review the example pages to check whether they are actually your pages or were created as a result of a hack (e.g., pages with Chinese characters).

- A huge spike in Excluded by “noindex” tag may also indicate an unsuccessful launch or migration. This often happens when a new site goes to production with the “noindex” tags from the staging site still in place.
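To catch such spikes early, you can compare each day’s count in a bucket with a trailing median. A minimal sketch, assuming you export daily counts for one Excluded reason as `(date, count)` pairs; `find_spikes`, the 7-day window, the 2× factor, and the minimum jump of 100 URLs are all arbitrary assumptions.

```python
from statistics import median


def find_spikes(daily_counts, window=7, factor=2.0, min_jump=100):
    """Flag days whose count is at least `factor` times the trailing-window median.

    daily_counts: list of (date_string, count) in chronological order.
    Returns (date, count, baseline) tuples.
    """
    spikes = []
    for i in range(window, len(daily_counts)):
        baseline = median(count for _, count in daily_counts[i - window:i])
        date, count = daily_counts[i]
        # Require both a relative and an absolute jump to ignore noise on small sites.
        if count >= factor * baseline and count - baseline >= min_jump:
            spikes.append((date, count, baseline))
    return spikes
```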

The Recap

You can learn a lot about your website and how Googlebot interacts with it, thanks to the Excluded section of the GSC Coverage report.

Whether you are a new SEO or already have a few years of experience, make it your daily habit to check Google Search Console.

This can help you detect various technical SEO issues before they turn into real disasters.


Featured Image: Milan1983/Shutterstock


Olga Zarzeczna

Olga is an SEO consultant at SEOSLY. She is a technical SEO specialist and an SEO auditor with 10+ years ...


If your website is struggling to rank in search engine results pages, an enterprise SEO audit can help you identify why.

For any SEO provider or in-house marketer who wants to audit an enterprise website, these 24 items should be on your checklist before moving forward with any SEO campaign.

What Is An Enterprise SEO Audit?

An SEO audit is an evaluation of a website to identify issues preventing it from ranking in search engine results.

Enterprise SEO audits are focused primarily on large, enterprise websites, meaning those with hundreds to thousands of landing pages.

Why Perform An SEO Audit?

Auditing your website is the first step in developing a successful SEO strategy.

Understanding the strengths and weaknesses of a website can help you tailor your SEO campaigns accordingly.

Performing an audit also helps your team direct your time, resources, and budget to the optimizations that will have the greatest impact.

What To Include In Your Enterprise SEO Audit

Auditing a large website can be very demanding, given that Google’s algorithm uses more than 200 ranking factors.

To run a more efficient audit, separate it into five parts: content, backlinks, technical SEO, page experience, and industry-specific standards.

You will need to rely on SEO software tools to run your audit successfully.

SEO platforms like SearchAtlas, Ahrefs, Screaming Frog, and others are a must for auditing any large website.

Content

1. Are You Targeting The Right Keywords?

The foundation of all successful SEO is strategic keyword targeting.

Not only do your target keywords need to be relevant to your products and services, but they also need to be realistic goals for your website.

So before you analyze whether your enterprise website is properly optimized, make sure your keyword goals are indeed reachable.

It’s possible that your target keywords are too competitive, or that you’re not targeting keywords with high enough search volume or conversion potential.

You can utilize a keyword tracking tool to see what search terms your enterprise website is already ranking for and earning organic traffic from.

Then, perform any necessary keyword research to find keywords that may be a better fit for your website.

Once you’ve ensured that improper keyword targeting is not the source of your poor SEO performance, you can move on through the remainder of your audit.

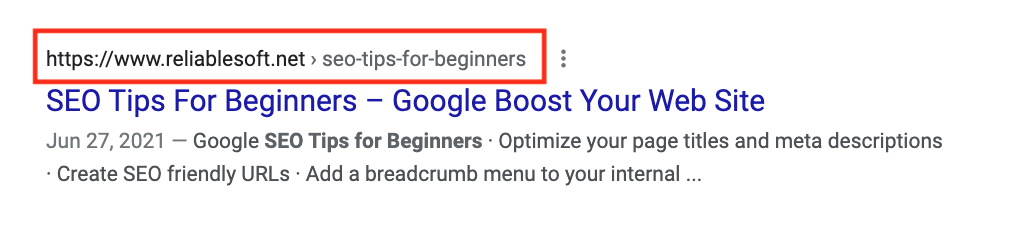

2. Do You Have SEO-Friendly URLs?

The URLs of your enterprise web pages should be unique, descriptive, short, and keyword-rich.

URLs are visible at the top of search results and can influence whether or not a user clicks through to the page.

Use hyphens between words to keep the URL paths readable and omit any unnecessary numbers.
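A slug generator can enforce these rules consistently across thousands of pages. This is a hypothetical `slugify` helper, assuming English titles and a small stop-word list; it drops standalone numbers, which you may want to keep when they carry meaning (e.g., a model number).

```python
import re
import unicodedata

STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "for", "in"}


def slugify(title, max_words=6):
    """Build a short, lowercase, hyphen-separated URL slug from a page title."""
    # Strip accents so "Café" becomes "cafe" rather than being dropped.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode()
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    kept = [w for w in words if w not in STOP_WORDS and not w.isdigit()]
    return "-".join(kept[:max_words])
```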

3. Are Your Meta Tags Properly Optimized?

Google crawlers look to the title tags and meta descriptions to understand what your web content is about and its relevance to specific keywords.

Like URLs, these tags are also visible in the SERPs and influence whether or not a searcher clicks.

Your audit should include checking that your web pages’ meta tags are unique and follow SEO best practices.

To speed up the process, use a site auditor tool to identify which web pages need attention.

Make sure to check for:

- Original and unique titles and meta descriptions for each web page.

- Proper character length: 50-60 for title tags and 150-160 for meta descriptions.

- The target keyword (or a variation of it) included in both the title and the meta description.
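A crawler export can be checked against this list automatically. The sketch below assumes each page is a dict with hypothetical `url`, `title`, `description`, and `keyword` fields (the keyword being the target you assigned to that page), and it uses the character ranges above as a rule of thumb; Google actually truncates snippets by display width, so treat length flags as hints.

```python
from collections import Counter


def audit_meta(pages, title_range=(50, 60), desc_range=(150, 160)):
    """Return {url: [issues]} for pages whose title/description break the checklist."""
    title_counts = Counter(p["title"].strip().lower() for p in pages)
    desc_counts = Counter(p["description"].strip().lower() for p in pages)
    issues = {}
    for p in pages:
        found = []
        title, desc = p["title"].strip(), p["description"].strip()
        if not title_range[0] <= len(title) <= title_range[1]:
            found.append(f"title length {len(title)}")
        if not desc_range[0] <= len(desc) <= desc_range[1]:
            found.append(f"description length {len(desc)}")
        if title_counts[title.lower()] > 1:
            found.append("duplicate title")
        if desc_counts[desc.lower()] > 1:
            found.append("duplicate description")
        keyword = p["keyword"].lower()
        if keyword not in title.lower() or keyword not in desc.lower():
            found.append("keyword missing from title or description")
        if found:
            issues[p["url"]] = found
    return issues
```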

Google sometimes rewrites page titles and meta descriptions, but this only happens a small portion of the time.

Optimizing these meta tags is still an essential step in on-page SEO.

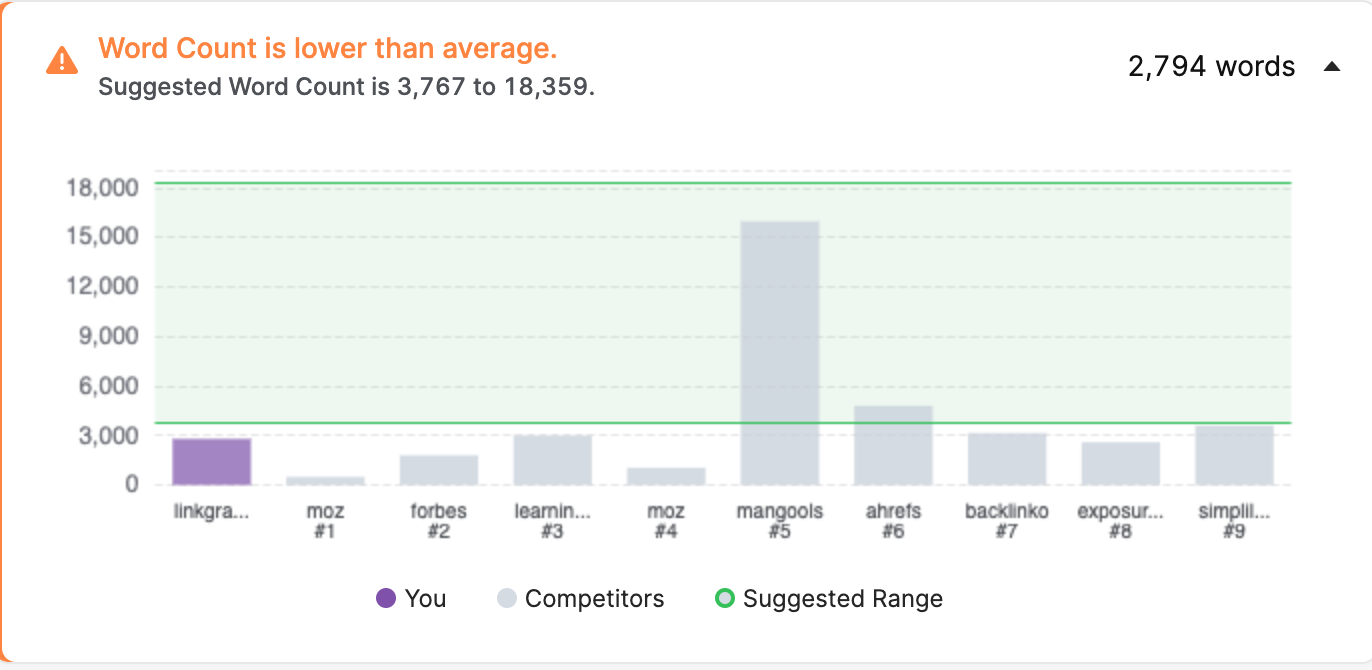

4. Is Your Content High-Quality And In-Depth?

Although content length is not a ranking factor, in-depth content often displays characteristics that Google likes, such as original insight, reporting, in-depth analysis, and comprehensive exploration of the topic.

There is no magic number when it comes to content length. Google’s Quality Rater Guidelines state that web pages should have a “satisfying” amount of content.

They also state:

“The amount of content necessary for the page to be satisfying depends on the topic and purpose of the page. A high quality page on a broad topic with a lot of available information will have more content than a high quality page on a narrower topic.”

Still not sure whether your content is long enough?

Look to your enterprise competitors who are already ranking and measure how long their content is compared to yours.

Then, aim to have content equal to or more in-depth than theirs.

5. Are Your Landing Pages Internally Linking To Each Other?

Internal links help Google find and index your pages. They also communicate website hierarchy, relevance signals, and topical breadth, and they distribute PageRank across your site.

Make sure you evaluate whether or not your pages are leveraging an internal linking strategy. Also, take a close look at the anchor text used to link to other pages of your website.

Each page should link out to other relevant pages, and each page should also have internal links pointing to it.

Otherwise, you will have orphan pages, meaning pages that Google cannot find and index because there is no linking pathway to them.
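Given a crawl’s internal link graph, orphan pages are simply the pages you expect to be indexed (for example, those in your XML sitemap) that no other page links to. A minimal sketch; `link_audit` and the `(source, target)` edge format are assumptions, and self-links are ignored.

```python
def link_audit(all_pages, links):
    """all_pages: URLs you expect to be indexed; links: (source, target) internal links.

    Returns pages with no inbound links (orphans) and pages with no outbound links.
    """
    edges = [(s, t) for s, t in links if s != t]  # a page linking to itself does not count
    inbound = {t for _, t in edges}
    outbound = {s for s, _ in edges}
    pages = set(all_pages)
    return {"orphans": sorted(pages - inbound), "no_outlinks": sorted(pages - outbound)}
```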

6. Does Your Content Show Expert Sourcing With External Links To Relevant Content?

Google also looks to external links to understand website content and the authority of websites.

External links should point only to relevant, authoritative sources, ideally sites with higher Domain Authority scores than your own.

Otherwise, Google is less likely to trust your enterprise website if you appear to be keeping low-quality websites in your link neighborhood.

7. Are You Using Rich Media And Interactive Elements?

Google likes to see images, videos, and interactive elements like jump links on the page. These elements make content more engaging and easier to navigate.

However, if these elements slow down your page load times, they are counterproductive to your SEO efforts. That will be addressed in a later part of your audit.

8. Do Your Images Include Keyword-rich Alt Text?

Enterprise websites – particularly ecommerce sites – may feature thousands of images.

But because Google cannot see images, it relies on alt text to understand how those images relate to your web page content.

Your audit should include confirming that image alt text is not only present but descriptive and keyword-rich.
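A minimal sketch for listing images with missing or blank alt text on a page, using Python’s built-in HTML parser (`images_missing_alt` is a hypothetical name). Keep in mind that an empty `alt=""` is appropriate for purely decorative images, so review the results rather than filling every one.

```python
from html.parser import HTMLParser


class ImageAltParser(HTMLParser):
    """Records the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            values = dict(attrs)
            if not (values.get("alt") or "").strip():
                self.missing.append(values.get("src"))


def images_missing_alt(html):
    parser = ImageAltParser()
    parser.feed(html)
    return parser.missing
```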

9. Are Your Pages Suffering From Keyword Cannibalization?

With hundreds to thousands of landing pages, your landing pages may sometimes compete not only against your competitors but also against other landing pages on your own website.

This is called keyword cannibalization and it happens when Google crawlers aren’t sure which page on your enterprise site is the most relevant.

Some tips for resolving keyword cannibalization:

- Find another keyword and re-optimize one of the competing pages.

- Consolidate the competing pages into one longer, in-depth page.

- Use a 301 redirect to point to the higher-performing or higher-converting page.
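To find candidates, look for queries where more than one of your URLs earns impressions. This sketch assumes rows of `(query, page, impressions)`, such as a Search Console performance export with both query and page dimensions; `find_cannibalization` and the 100-impression threshold are illustrative assumptions.

```python
from collections import defaultdict


def find_cannibalization(rows, min_impressions=100):
    """Return {query: [pages sorted by impressions]} for queries served by 2+ pages."""
    pages_by_query = defaultdict(dict)
    for query, page, impressions in rows:
        if impressions >= min_impressions:  # ignore pages that barely show for the query
            pages_by_query[query][page] = pages_by_query[query].get(page, 0) + impressions
    return {
        query: sorted(pages, key=pages.get, reverse=True)
        for query, pages in pages_by_query.items()
        if len(pages) > 1
    }
```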

Backlinks

10. Do You Have Fewer Backlinks Than Your Competitors?

Google’s #1 ranking factor remains the same: backlinks.

If your enterprise website is competing against well-known incumbents in your industry, it’s likely they have a robust backlink profile, making it difficult for your website to compete in the SERPs.

You can use a backlink tool like Ahrefs to identify your competitors’ total backlinks and unique referring domains.

If there is a significant gap in backlinks or referring domains, it is likely a reason for your fewer keyword rankings or lower-ranking positions.
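Once you have exported the linking-page URLs for your site and a competitor, the gap is a set difference of referring domains. A minimal sketch; `referring_domains` and `link_gap` are hypothetical helpers, and they strip only a leading `www.`, so other subdomains count as separate domains.

```python
from urllib.parse import urlparse


def referring_domains(backlink_urls):
    """Reduce a list of linking-page URLs to unique referring domains."""
    domains = set()
    for url in backlink_urls:
        host = (urlparse(url).hostname or "").lower()
        domains.add(host[4:] if host.startswith("www.") else host)
    domains.discard("")
    return domains


def link_gap(your_links, competitor_links):
    """Domains that link to the competitor but not to you, sorted for review."""
    return sorted(referring_domains(competitor_links) - referring_domains(your_links))
```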

Dedicate a significant portion of your SEO campaign to link building and digital PR if you want to outrank your competition.

11. Does Your Website Have Toxic Backlinks?

Although backlinks are important for improving site authority, the wrong type of backlinks can also harm a website.

If your website has toxic backlinks from spammy, low-quality websites, Google may suspect your enterprise website of backlink manipulation.

Google has gotten better at recognizing low-quality links, and since its 2021 Link Spam Update, Google also says it nullifies spammy links rather than counting them against websites.

However, there may still be moments when toxic backlinks should be disavowed.

You will want to focus on identifying where those toxic links are coming from and take the necessary steps to create and submit a disavow file.

Some SEO software tools can identify toxic links and create disavow text for you.

Disavowing the wrong way can actually harm your SEO performance, so if you are unfamiliar with this Google tool, make sure you seek the assistance of an SEO provider.
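The disavow file itself is a plain text file with one entry per line: `domain:` entries disavow a whole domain, bare URLs disavow single pages, and lines starting with `#` are comments. This hypothetical `build_disavow` helper only formats a list you have already reviewed by hand; it does not decide which links are toxic.

```python
from datetime import date


def build_disavow(domains=(), urls=(), note=""):
    """Return disavow-file text: comment lines, deduplicated domain: entries, then URLs."""
    lines = [f"# Disavow file generated {date.today().isoformat()}"]
    if note:
        lines.append(f"# {note}")
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"
```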

12. Is Your Anchor Text Distribution Diverse?

Google also looks to the anchor text of your backlinks to understand relevance and authority. Relevant anchor text is important, but not all webmasters will link to your pages in the same way.

If the majority of your anchor text is branded, that’s okay.

Watch for too much exact-match anchor text or high-CPC anchor text that Google may flag as suspected backlink manipulation.

If your anchor text does not display a healthy level of diversity, design a link building campaign around earning links with anchor text that improves diversity and signals healthy backlink practices to Google.
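A rough way to measure diversity is to bucket anchors into branded, exact-match, and other. A minimal sketch, assuming you supply your own brand terms and target keywords; `anchor_distribution` is hypothetical, and real classification usually needs more buckets (partial-match, naked URL, generic).

```python
from collections import Counter


def anchor_distribution(anchors, brand_terms, target_keywords):
    """Return the share (0-1) of branded, exact-match, and other anchor texts."""
    brand = [b.lower() for b in brand_terms]
    exact = {k.lower() for k in target_keywords}
    counts = Counter()
    for anchor in anchors:
        text = anchor.strip().lower()
        if any(b in text for b in brand):
            counts["branded"] += 1
        elif text in exact:
            counts["exact_match"] += 1
        else:
            counts["other"] += 1
    total = sum(counts.values()) or 1  # avoid dividing by zero on an empty list
    return {k: counts[k] / total for k in ("branded", "exact_match", "other")}
```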

Technical SEO

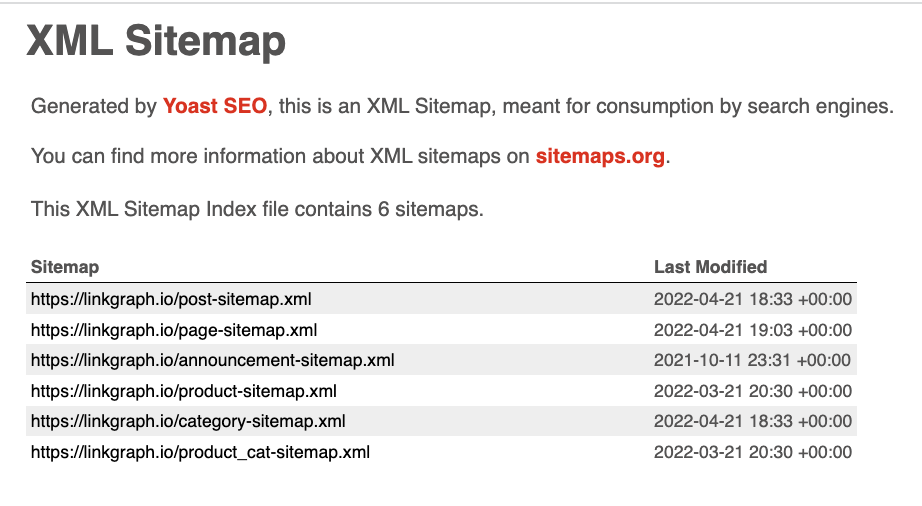

13. Have You Submitted An XML Sitemap And Does It Include The Right Pages?

Because enterprise websites have thousands of landing pages, one of the most common issues uncovered in enterprise SEO audits is related to search engine indexing.

That’s why generating and submitting an XML sitemap is important. It communicates your website hierarchy to search engine crawlers.

It also tells them which pages of your website are the most important to crawl regularly and index.

If your enterprise website adds new product pages or content to your website, you can also use your sitemap to show Google the new pages rather than wait for crawlers to discover them through internal links.
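For large sites, sitemaps are usually generated from your database or CMS. A minimal sketch using Python’s `xml.etree.ElementTree` that writes the `urlset` format from the sitemaps.org protocol and splits the list into files of at most 50,000 URLs, the protocol’s per-file limit; `build_sitemaps` and the `(loc, lastmod)` input are assumptions. Several sitemap files can then be listed in a sitemap index file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file limit in the sitemaps.org protocol
XML_DECLARATION = '<?xml version="1.0" encoding="UTF-8"?>\n'


def build_sitemaps(entries):
    """entries: list of (loc, lastmod) pairs, lastmod as 'YYYY-MM-DD' or None.

    Returns one XML string per sitemap file; ElementTree escapes characters like '&'.
    """
    sitemaps = []
    for start in range(0, len(entries), MAX_URLS_PER_FILE):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for loc, lastmod in entries[start:start + MAX_URLS_PER_FILE]:
            url = ET.SubElement(urlset, "url")
            ET.SubElement(url, "loc").text = loc
            if lastmod:
                ET.SubElement(url, "lastmod").text = lastmod
        sitemaps.append(XML_DECLARATION + ET.tostring(urlset, encoding="unicode"))
    return sitemaps
```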

14. Have You Maxed Out Your Crawl Budget?

Google’s web crawlers will only spend so much time crawling your web pages, meaning your enterprise website may have pages that don’t end up in Google’s index.

Although improving your page speed and your site authority can lead to Google increasing your crawl budget, that takes time. So in the meantime, focus on making sure you’re using your crawl budget wisely.

If your audit uncovers essential pages that are not being indexed, your enterprise website may benefit from crawl budget optimization. You want your highest value, highest converting pages to end up in Google’s index.
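Server logs show where Googlebot actually spends its crawl budget. This sketch assumes access logs in the common “combined” format and matches Googlebot by user-agent string only; since that string can be spoofed, verify important findings with a reverse DNS lookup as Google recommends. `googlebot_crawl_summary` is a hypothetical name.

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Matches the request path and status code of a combined-format line with a Googlebot user agent.
LOG_LINE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*Googlebot')


def googlebot_crawl_summary(log_lines):
    """Count Googlebot hits per top-level section, per status class, and on parameterized URLs."""
    sections, statuses, with_params = Counter(), Counter(), 0
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        parsed = urlparse(match["path"])
        sections["/" + parsed.path.strip("/").split("/")[0]] += 1
        statuses[match["status"][0] + "xx"] += 1
        with_params += bool(parsed.query)  # URLs with query strings often waste crawl budget
    return {"sections": sections, "statuses": statuses, "with_params": with_params}
```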

15. Is Your Schema Markup Properly Set Up?

A very powerful optimization that your enterprise website can utilize is schema markup.

If your enterprise website already includes schema on some of your pages, you will want to confirm that your schema is validated and eligible for rich results.

You can use Google’s Rich Results Test to confirm that pages with schema markup are properly validated, but to be even more efficient, use your favorite site crawler to test all of your pages at once.
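Before running pages through the Rich Results Test, a quick crawl-side check can catch JSON-LD blocks that are not even valid JSON. A minimal sketch; `check_jsonld` is hypothetical, and it only checks that each block parses and declares an `@type`, not Google’s required properties for any rich result type.

```python
import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data


def check_jsonld(html):
    """Return the @type values found and any problems (invalid JSON, missing @type)."""
    parser = JsonLdParser()
    parser.feed(html)
    types, problems = [], []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON: {exc.msg}")
            continue
        for item in data if isinstance(data, list) else [data]:
            if isinstance(item, dict) and "@type" in item:
                types.append(item["@type"])
            else:
                problems.append("item without @type")
    return {"types": types, "problems": problems}
```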

16. Do You Have Excessive Broken Links Or Redirects?

Over time, links naturally break as websites update their content or delete old pages.

It’s important to check your enterprise website to confirm your external and internal links are pointing to live pages.

Otherwise, it will appear to Google that your website is not well-maintained, and Google will be less likely to promote your web pages in the SERPs.
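If your crawler exports both the links it found and the status code of each target, broken and redirected links can be grouped by the page that contains them, which makes fixes easier to assign. A minimal sketch; `link_health` and both input formats are assumptions.

```python
def link_health(links, status_by_url):
    """links: (source_page, target_url) pairs; status_by_url: HTTP status seen for each target.

    Returns {source_page: {"broken": [...], "redirected": [...]}} for pages needing fixes.
    """
    report = {}
    for source, target in links:
        status = status_by_url.get(target)
        if status is None:
            continue  # target not fetched yet
        if status >= 400:
            bucket = "broken"
        elif 300 <= status < 400:
            bucket = "redirected"
        else:
            continue
        report.setdefault(source, {"broken": [], "redirected": []})[bucket].append(target)
    return report
```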

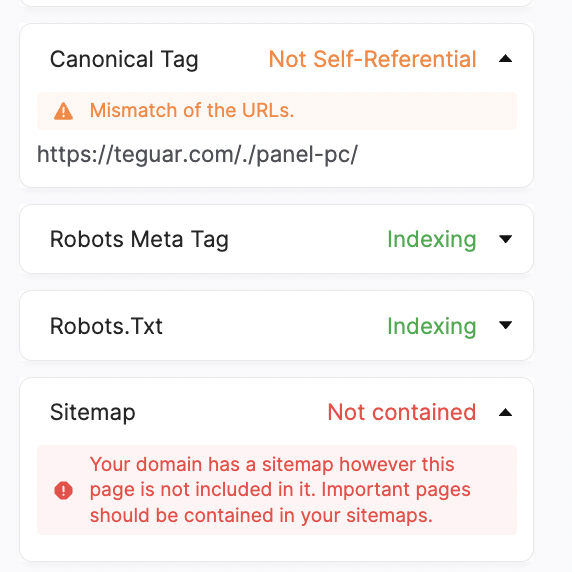

17. Do Similar Pages Include Canonical Tags?

Enterprise websites (particularly ecommerce sites) may have duplicate content that targets different regions or is programmatically built out.

If those pages don’t have canonical tags, they will look to Google as duplicate content.

It’s important to confirm that the best, most in-depth version of the page has a self-referential canonical tag and that all of the similar pages include canonical tags identifying that master version.

A site auditor tool like SearchAtlas can confirm whether your canonical URLs are properly implemented and if Google crawlers understand which page to promote in the SERPs.
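For one group of similar pages, the declared canonicals should all point to a single master URL that canonicalizes to itself. A minimal sketch, assuming a `{page: declared_canonical}` map from your crawler (with `None` for pages without a tag); `check_canonical_cluster` is hypothetical.

```python
def check_canonical_cluster(canonical_of):
    """Flag missing canonicals, canonicals pointing at non-self-canonical URLs, and split clusters."""
    problems = []
    for page, canonical in canonical_of.items():
        if canonical is None:
            problems.append(f"{page}: no canonical tag")
        elif canonical != page and canonical_of.get(canonical, canonical) != canonical:
            # The target declares a different canonical itself, i.e. a canonical chain.
            problems.append(f"{page}: canonical {canonical} is not self-canonical")
    masters = {c for c in canonical_of.values() if c}
    if len(masters) > 1:
        problems.append(f"cluster points at {len(masters)} different canonicals")
    return problems
```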

18. Does Your Multilingual Content Leverage Hreflang Tags?

For multilingual enterprise websites, hreflang tags can help you show the right language content to the right searchers.

This improves your relevance signals and can have a huge impact on your conversion rates.

However, it’s easy to make mistakes when implementing hreflang and canonical tags.

As a general rule, only add hreflang tags to your web pages with self-referencing canonicals – not duplicate copies of the page.
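Google’s hreflang guidelines also require each language version to list itself and to be linked back from every alternate it references. A minimal sketch for checking both rules, assuming a `{page: {language_code: alternate_url}}` map collected by your crawler; `hreflang_issues` is a hypothetical name.

```python
def hreflang_issues(annotations):
    """annotations: {page_url: {lang_code: alternate_url}} as declared on each page."""
    issues = []
    for page, alternates in annotations.items():
        if page not in alternates.values():
            issues.append(f"{page}: no self-referencing hreflang")
        for lang, alt in alternates.items():
            if alt == page:
                continue
            # The alternate must declare an hreflang pointing back to this page.
            if page not in annotations.get(alt, {}).values():
                issues.append(f"{page}: {alt} ({lang}) does not link back")
    return issues
```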

Page Experience

19. Do Your Pages Meet Google’s Core Web Vitals Standards?

If your pages do not meet Google’s Core Web Vitals standards, they are unlikely to rank.

Google knows that load times, responsiveness, and visual stability impact the quality of a user’s experience, and thus the quality of a web page and whether or not it’s rank-worthy.

You can see your Core Web Vitals metrics in your Google Search Console account.

You can also use the free platform to validate any fixes and see whether or not they improve your CWV metrics.
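Google assesses Core Web Vitals at the 75th percentile of page loads. The sketch below rates a set of field samples against Google’s published thresholds; it uses Interaction to Next Paint (INP), which replaced First Input Delay (FID) as the responsiveness metric in March 2024. `assess`, `p75`, and the sample values are illustrative, and the nearest-rank percentile is a simplification.

```python
import math

# (good_max, poor_above) thresholds; LCP and INP in milliseconds, CLS unitless.
THRESHOLDS = {"LCP": (2500, 4000), "INP": (200, 500), "CLS": (0.1, 0.25)}


def p75(samples):
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]  # nearest-rank 75th percentile


def assess(metric, samples):
    """Return (p75 value, rating) for one metric."""
    value = p75(samples)
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        rating = "good"
    elif value <= poor_above:
        rating = "needs improvement"
    else:
        rating = "poor"
    return value, rating
```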

20. Do Your Web Pages Use HTTP Or HTTPS?

A secure website is also essential to the quality of the user’s experience.

If your web pages are not utilizing HTTPS protocols, you are not providing users with a secure browsing experience.

As a result, Google is less likely to promote your pages.
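A minimal sketch for flagging pages served over HTTP and, on HTTPS pages, resources loaded over plain `http://` (mixed content). `https_issues` is hypothetical, and the regex only inspects `src`/`href` on common resource tags, so a full HTML parser or browser-based check will be more thorough.

```python
import re
from urllib.parse import urlparse

# src/href attributes on resource tags that load content over plain HTTP.
INSECURE_RESOURCE = re.compile(
    r'<(?:img|script|link|iframe|source)\b[^>]*?\s(?:src|href)=["\'](http://[^"\']+)', re.I
)


def https_issues(page_url, html):
    issues = []
    if urlparse(page_url).scheme != "https":
        issues.append("page served over HTTP")
    issues += [f"insecure resource: {u}" for u in INSECURE_RESOURCE.findall(html)]
    return issues
```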

21. Are Your Mobile Pages Responsive And High-Performing?

The majority of searches now happen on mobile devices.

Also, with mobile-first indexing, Google predominantly uses the mobile version of your pages for indexing and ranking, including when determining where your pages rank compared to your competitors.

Some common mobile mistakes that occur include:

- Unresponsive design.

- Intrusive pop-ups.

- Bad UI/UX elements like button size.

- Unplayable or missing content.
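For the first item, a quick signal is whether a page declares a responsive viewport. A minimal sketch; `viewport_issues` is hypothetical, and a correct viewport tag does not by itself make a layout responsive.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        values = dict(attrs)
        if tag == "meta" and (values.get("name") or "").lower() == "viewport" and self.viewport is None:
            self.viewport = values.get("content") or ""


def viewport_issues(html):
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return ["no viewport meta tag"]
    # "width=device-width, initial-scale=1" -> {"width": "device-width", "initial-scale": "1"}
    settings = dict(
        part.split("=", 1) for part in parser.viewport.replace(" ", "").lower().split(",") if "=" in part
    )
    if settings.get("width") != "device-width":
        return ["viewport does not use width=device-width"]
    return []
```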

Industry

22. Are You Considered A Your-Money-Your-Life (YMYL) Website?

If your enterprise website is considered a health, financial, legal, or other YMYL website, Google has extremely high standards for the content that it will promote to searchers.

Although this does not impact all enterprise websites, it’s important to know whether your website falls under this banner to make sure you meet Google’s specific standards for your YMYL industry.

23. Does Your Website Show High Levels of E-A-T?

Google wants to see that your content is relevant to users’ keywords.

It also wants to see that your website, as a whole, displays industry expertise.

E-A-T stands for expertise, authoritativeness, and trustworthiness. It’s hard to quantify, but some more tangible factors include:

- In-depth, well-researched content (e.g. blogs, ebooks, long-form articles).

- Expert authorship and sourcing (e.g. an author byline that shows industry-specific expertise and credentials).

- A clear purpose and focus for each page.

- Off-site reputation signals (e.g. an article in a reputable online publication that mentions or links to your website).

If you’re still not sure what E-A-T looks like in your industry, look to the top-ranking content of your competitors to see the topical depth, expertise, and sourcing, and model your content accordingly.

24. Does Your Website Have Strong Reputation Signals?

If your goal is to rank for branded searches, other authoritative websites may feature content about your brand competing with yours in the SERPs.

If your enterprise has a Wikipedia page or press in online publications with high Domain Authority, those digital locations may rank higher than your domain.

If this is the case, you may need to take a more unique approach to link building to improve the branded signals of your content.

Optimizations like schema can also help ensure that information about your brand is featured at the top of the SERP, particularly if those websites that mention your enterprise brand do so negatively.
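One commonly used option is schema.org `Organization` markup whose `sameAs` property lists your official profiles. A minimal sketch with placeholder values; `organization_jsonld` is hypothetical, and the markup does not guarantee how Google displays your brand.

```python
import json


def organization_jsonld(name, url, logo, same_as):
    """Build a schema.org Organization block; same_as lists official profiles (e.g. Wikipedia)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": list(same_as),
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"
```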

Final Thoughts On Conducting Your Enterprise Audit

Sitewide audits can be daunting, but they are worth the time and effort to craft a tailored, custom SEO campaign strategy.

Make sure to leverage the best SEO software tools throughout your audit to speed up the process and ensure the most accurate evaluation of your website.

Once your audit is complete, you can easily prioritize those optimizations that will be the most impactful.


Featured Image: Yuriy K/Shutterstock
