A quick post about reducing MongoDB queries

source link: https://blog.appsignal.com/2013/06/13/reducing-mongodb-queries.html



Robert Beekman on Jun 13, 2013


Recently we noticed that our database was running a lot of queries while processing our queue. Let's see if we can fix that.

Our background worker was looping through a given list of items, and for each item it performed several MongoDB queries.

It generated:

- Three finds

- Two upserts

- One set

- One insert

That is seven queries per item, and a batch consists of 10 to 100 items, so a single batch can mean anywhere from 70 to 700 queries. That quickly adds up!

The class looked something like this:

```ruby
class EntryWorker
  def perform(id, payload)
    site = Site.find(id)

    payload.entries.each do |entry|
      ItemProcessor.new(site, entry).process!
    end
  end
end
```

The ItemProcessor does the actual processing and querying for each entry.

To reduce the number of queries, we’ve wrapped the ItemProcessor with a class that tries to cache as much of the query data as possible. Here’s a simplified version:

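```ruby
class EntryWorker
  def perform(id, payload)
    site = Site.find(id)

    # Buffers that ItemProcessor fills instead of querying per entry
    # (how it reaches them is left out of this simplified version)
    @inserts = []
    @increments = {}

    payload.entries.each do |entry|
      ItemProcessor.new(site, entry).process!
    end

    # Write everything collected during the loop in as few queries as possible
    flush_inserts!
    flush_increments!
  end
end
```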

The ItemProcessor populates the `@inserts` array and the `@increments` hash set up by the perform method, and when the loop is complete, the collected data is flushed to the database.
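Since the real ItemProcessor and models aren't shown, here is a minimal, self-contained sketch of the same buffering idea in plain Ruby. It assumes a hypothetical in-memory `FakeCollection` that stands in for MongoDB and simply counts round trips; `BufferedWorker` and the `:site_id` entry field are illustrative names, not our actual code.

```ruby
# Hypothetical stand-in for a MongoDB collection: it stores data in memory
# and counts how many round trips the worker makes.
class FakeCollection
  attr_reader :calls, :docs, :counters

  def initialize
    @calls = 0
    @docs = []
    @counters = Hash.new { |h, id| h[id] = Hash.new(0) }
  end

  # One round trip, no matter how many documents the array holds.
  def insert(documents)
    @calls += 1
    @docs.concat(documents)
  end

  # One round trip per document, applying every counter at once (like $inc).
  def inc(id, incs)
    @calls += 1
    incs.each { |field, amount| @counters[id][field] += amount }
  end
end

# Hypothetical worker: buffers writes during the loop, flushes them afterwards.
class BufferedWorker
  def initialize(collection)
    @collection = collection
  end

  def perform(entries)
    @inserts = []
    @increments = Hash.new { |h, id| h[id] = Hash.new(0) }

    entries.each do |entry|
      @inserts << entry                         # buffered instead of inserted
      @increments[entry[:site_id]]['hits'] += 1 # summed instead of $inc by 1
    end

    flush_inserts!
    flush_increments!
  end

  private

  def flush_inserts!
    return if @inserts.empty?
    @collection.insert(@inserts)
  end

  def flush_increments!
    @increments.each { |id, incs| @collection.inc(id, incs) }
  end
end

collection = FakeCollection.new
entries = Array.new(100) { |i| { site_id: i.even? ? :site_a : :site_b, n: i } }
BufferedWorker.new(collection).perform(entries)
puts collection.calls # prints 3: one bulk insert + one increment per site
```

With 100 entries spread over two sites, the sketch makes three calls in total, one bulk insert plus one increment update per site, instead of several calls per entry.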

Take the increments, for example. The data format for this hash is:

```ruby
{
  [bson_id] => {
    'hits'       => 10,
    'exceptions' => 1,
    'slow'       => 2
  }
}
```

This way we can loop over the increments at the end and push them to the database.

```ruby
# Pushes the increments to MongoDB: one $inc update per document
def flush_increments!
  @increments.each do |id, incs|
    Model.
      where(:id => id).
      find_and_modify('$inc' => incs)
  end
end
```

So instead of dozens of upserts that each increment a number by 1, we now have a few updates with the total counts.
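To check that summing the counters first doesn't change the outcome, the following sketch applies the same events once per event and once per document, then compares the results. The event list and the `apply_inc` helper (which mimics `$inc` on an in-memory hash) are hypothetical; no database is involved.

```ruby
# Hypothetical event stream: one [document_id, counter] pair per processed entry.
events = [
  [:site_a, 'hits'], [:site_a, 'hits'], [:site_a, 'slow'],
  [:site_b, 'hits'], [:site_a, 'exceptions'], [:site_b, 'hits']
]

# Applies a MongoDB-style $inc update to an in-memory document.
def apply_inc(doc, incs)
  incs.each { |field, amount| doc[field] = doc.fetch(field, 0) + amount }
end

# Naive approach: one update per event, each incrementing by 1.
naive = Hash.new { |h, id| h[id] = {} }
naive_updates = 0
events.each do |id, field|
  apply_inc(naive[id], { field => 1 })
  naive_updates += 1
end

# Aggregated approach: sum per document first, in the
# { id => { 'hits' => n, ... } } shape used for @increments.
increments = Hash.new { |h, id| h[id] = Hash.new(0) }
events.each { |id, field| increments[id][field] += 1 }

batched = Hash.new { |h, id| h[id] = {} }
batched_updates = 0
increments.each do |id, incs|
  apply_inc(batched[id], incs)
  batched_updates += 1
end

puts "naive: #{naive_updates} updates, batched: #{batched_updates} updates"
puts naive == batched # prints true: same final counters either way
```

Both approaches end with identical counters, but the aggregated one needs one update per document (2 here) rather than one per event (6 here).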

Mongoid doesn’t support bulk inserts, but the driver it depends on (Moped) does. If we drop down to the collection, we can use it to insert everything at once.

```ruby
# Pushes all inserts to MongoDB at once
def flush_inserts!
  return if @inserts.empty? # skip the round trip when there is nothing to insert
  Model.collection.insert(@inserts)
end
```

Instead of hundreds of inserts that each take about 1 ms, we now have a single insert that takes only a couple of milliseconds.

We did the same with all the finds: all the data is fetched before the loop and stored in an array. Inside the loop we use `array.find` to select the correct object and pass it to the ItemProcessor.
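`array.find` scans the prefetched array once per entry. A small variant worth considering, shown here as an assumption rather than what we run, builds a Hash index once so each lookup is constant time on average. The records and the `:key` field are hypothetical stand-ins for whatever the prefetch query returns.

```ruby
# Hypothetical prefetched records, fetched once before the loop.
records = [
  { key: 'GET /users',  avg: 120 },
  { key: 'GET /posts',  avg: 80 },
  { key: 'POST /login', avg: 200 }
]

# Hypothetical entries processed by the worker.
entries = [{ key: 'GET /posts' }, { key: 'GET /users' }, { key: 'DELETE /x' }]

# Approach from the post: linear scan of the prefetched array per entry.
found_by_scan = entries.map do |entry|
  records.find { |record| record[:key] == entry[:key] }
end

# Variant: index the records by key once, then look each entry up in the Hash.
# Note: if keys repeat, the index keeps the last record, while find returns the first.
index = records.each_with_object({}) { |record, h| h[record[:key]] = record }
found_by_index = entries.map { |entry| index[entry[:key]] }

p found_by_scan == found_by_index # prints true
```

For 10 to 100 entries the linear scan is perfectly fine; the Hash index mainly pays off when the prefetched list grows large.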

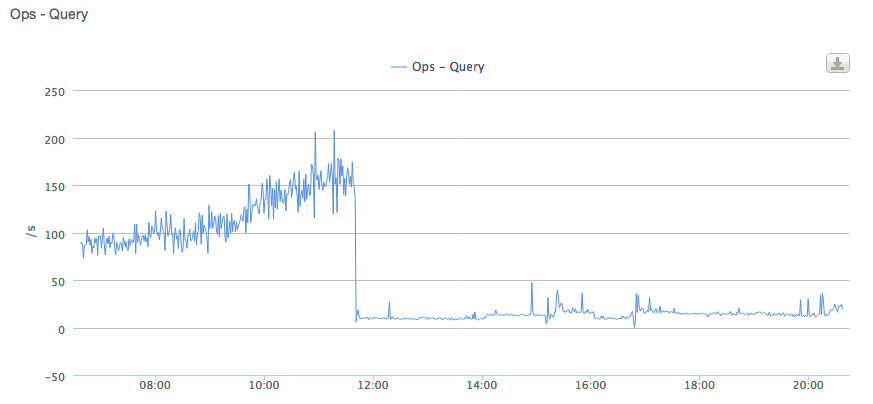

You can see the results below:
