Generating Cryptographically Secure Random Numbers With Coins and A Cup

source link: https://blog.sia.tech/generating-cryptographically-secure-random-numbers-with-coins-and-a-cup-4e223899509e


Generally it’s a bad idea to generate your own randomness. Whether you are pulling things randomly out of your brain or using a physical device like a coin, you are likely introducing some form of bias and predictability which can greatly reduce the security of whatever you need randomness for (such as a passphrase or a bitcoin seed).

It turns out however that there are some neat mathematical tricks that allow us to generate cryptographic grade random numbers using just some coins, a cup, and pencil and paper. And the full procedure is simple enough that an eight year old could complete the task successfully.

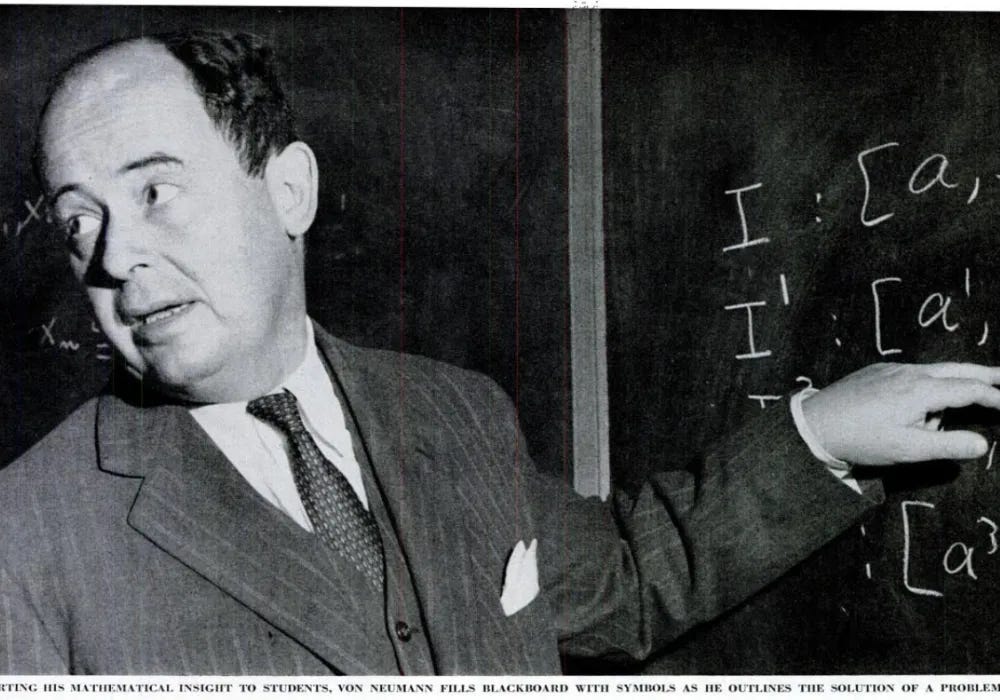

The Von Neumann Trick

Before I get into my technique, I want to cover something called the ‘Von Neumann Trick’, which is a strategy used to turn a biased coin into a fair coin. The technique is simple: flip a coin twice. If it comes up as heads-heads or tails-tails, throw away the result and start over. If it comes up as heads-tails, accept the result as ‘heads’. If it comes up as tails-heads, accept the result as ‘tails’.

Quick proof: let ‘p’ be the probability that a single flip comes up heads. The probability of getting heads-heads is p². The probability of getting tails-tails is (1-p)². The probability of getting heads-tails is p*(1-p), and the probability of getting tails-heads is (1-p)*p. For all values of ‘p’, ‘heads-tails’ is exactly as likely as ‘tails-heads’, even though the probability of ‘heads-heads’ may be significantly different from the probability of ‘tails-tails’.

Though mathematically pure, this trick is deficient in the real world. The trick depends on the coin flips having the exact same bias for each flip, and also depends on the coin flips being completely independent. In the real world however, the probability that a coin comes up as heads is not just a function of the coin itself, but is also a function of the environment and of the person flipping the coin. The bias is likely not static, and the coin flips are likely not independent.

As a degenerate example, imagine a person flipping a coin whose thumb over time gets tired, and eventually they end in a pattern that nearly always results in the coin flipping exactly 3 times in the air, such that the resulting flip pattern is heads-tails-heads-tails-etc. Under these circumstances, using the Von Neumann trick to generate a large random number will not result in a cryptographically secure random number. Instead, you will get the result ‘heads-heads-heads-heads-etc’.
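The trick and its failure mode can be sketched in a few lines of Python. This is only an illustration: the function name `von_neumann` and the sample flip strings are made up here, with ‘H’ and ‘T’ standing for heads and tails.

```python
def von_neumann(flips):
    """Debias a string of 'H'/'T' flips using the Von Neumann trick.

    Flips are consumed in non-overlapping pairs: HT -> H, TH -> T,
    and HH / TT pairs are thrown away.
    """
    out = []
    for first, second in zip(flips[0::2], flips[1::2]):
        if first != second:
            out.append(first)
    return "".join(out)


# A mixed sequence: HH is dropped, TH -> T, HT -> H, TT is dropped.
print(von_neumann("HHTHHTTT"))  # TH

# The tired thumb: strictly alternating flips give the same output every time.
print(von_neumann("HTHTHTHT"))  # HHHH
```

The second call shows why independence matters: every pair is accepted, yet the output carries no randomness at all.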

If we want to generate secure cryptographic numbers by hand, we need a technique that is robust both to bias and to correlation/patterns across coin flips.

Taek’s Tornado

What we’re going to do is take 5 coins, ideally all of different shapes and sizes, and put them into a large cup. You want the cup to be large so that as you shake the coins around, the coins get jumbled in a highly random way. Give the cup 5 good shakes where the coins are tumbling around, and then dump the coins out and count the number of heads. If there are an even number of heads, record a ‘0’ for the random bit. If there are an odd number of heads, record a ‘1’ for the random bit.
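If you want to double check your bookkeeping, the rule for turning one dump of the cup into a bit is small enough to sketch in Python. The function names and the four sample trials below are invented for illustration; each trial is written as a string of ‘H’ and ‘T’, one letter per coin.

```python
def tornado_bit(coins):
    """Return 0 for an even number of heads, 1 for an odd number."""
    heads = sum(1 for c in coins if c == "H")
    return heads % 2


def record_trials(trials):
    """Turn a list of cup dumps into a string of bits, one bit per dump."""
    return "".join(str(tornado_bit(t)) for t in trials)


# Four made-up trials with 3, 0, 2 and 5 heads respectively.
print(record_trials(["HTTHH", "TTTTT", "HHTTT", "HHHHH"]))  # 1001
```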

A cryptographically secure random number needs to be at least 128 bits in length, which means we’re going to have to do this 128 times. From there we can convert the 128 bits to something more human friendly like a bitcoin seed or passphrase. I explain that in the section titled ‘Going from a Bitstring to a Bitcoin Seed Phrase’.

I used the tornado technique to generate 16 random bits and got the following result:

Entropy Generation Inside the Tornado

The first big thing that we do with Taek’s Tornado is use 5 coins per bit instead of one coin per bit. When generating random numbers, the fundamental thing we are collecting is entropy. 5 coins produce significantly more entropy than 1 coin, which gives us a better chance at arriving at an unbiased result.

The second big thing that we do is flip all of the coins together in one large cup. This creates a chaotic environment for the coins, where they will be bouncing off of each other in random ways, and generally reducing the impact of human consistency. If you flip a single coin many times in a row, as you gain practice you will likely start flipping the coin in a consistent way, introducing not only bias to your flips but correlation between your flips, which is very bad for randomness. Humans are much less likely to produce consistent or correlated results when using a turbulent technique like shaking a cup.

Third, we count out an explicit number of shakes for the cup. The actual number isn’t so important; the key is that you pick a large number and stick to it. The main risk if you do not pick a large number of shakes is that you get lazy and only shake the cup a few times for each trial, and thereby fail to introduce entropy to your results. Lazy shaking can significantly reduce the security of the resulting random number.

Measuring the Quantity of Entropy

Once we have dumped the coins out of the cup, we need some way to collect the entropy and combine it into a single simulated coin toss. And we’re going to do that by using modular arithmetic, which has the property of being entropy preserving.

And as it happens, determining whether the total number of heads is even or odd is modular arithmetic. When we say “there are an even number of heads”, we are actually saying “the number of heads mod 2 equals zero”.

When we say “entropy preserving”, we mean that the total amount of randomness only goes up as we combine multiple results. So for example, if one of the coins is already perfectly unbiased, it means the result of combining this coin with all of the other coins will also be completely unbiased, regardless of how bad or non-random the other coins are!*

And it also means that when combining two biased coins together, the result will simulate a third coin which has less bias than either original coin. And in fact, the amount of bias in the simulated coin goes down exponentially as you increase the number of physical coins you are combining together. By using 5 coins, we ensure that even if every single coin is individually highly biased, the simulated coin will still have strong randomness.

This method of combining entropy also neatly handles correlated coin flips. Because our method does not care what order coins are added together, any correlation between coins is effectively reduced to additional bias. Coin flips that are correlated are predictable, and therefore do not contribute much to the randomness, however they also don’t interfere with the entropy produced by the other coins, and will not result in a degenerate situation like what can happen when using the Von Neumann trick.

*This assumes that the other coins do not know the result of the unbiased coin. If you have a sentient coin that knows the status of all other coins and can flip itself at the last second in a malicious way, the tornado will not be able to generate randomness. Luckily to the best of our knowledge coins are not sentient in this way.
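The ‘entropy preserving’ claim can be checked by brute force for small cases. The sketch below assumes the coins are independent (no sentient coins) and simply enumerates every possible outcome; the function name and the sample probabilities are made up for illustration.

```python
from itertools import product


def odd_heads_probability(p_heads):
    """Exact probability of an odd number of heads for independent coins.

    p_heads is a list with each coin's probability of landing heads.
    """
    total = 0.0
    for outcome in product([0, 1], repeat=len(p_heads)):
        if sum(outcome) % 2 == 1:
            prob = 1.0
            for is_head, p in zip(outcome, p_heads):
                prob *= p if is_head else 1 - p
            total += prob
    return total


# One fair coin mixed with two terrible coins: the parity is still 50/50
# (up to floating point rounding).
print(odd_heads_probability([0.5, 0.9, 0.99]))

# Two coins that each land heads 75% of the time: the parity is odd 37.5%
# of the time, so the simulated coin is 62.5/37.5 instead of 75/25.
print(odd_heads_probability([0.75, 0.75]))
```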

Estimating Overall Bias

If we know how much randomness or bias is introduced in each coin toss, we can compute how much bias exists in the final result. In practice, we don’t know the exact bias and dependence of each coin toss, however we can make some reasonable assumptions about how bad they are at worst, and then use that to compute a lower bound on how random the final result is.

When using modular arithmetic to combine the results of coins, the bias of the result is computed as follows: double each coin’s ‘distance from unbiased’, multiply those doubled values together, halve the product, and add that to 0.5.
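Written in symbols (the notation here is mine, chosen for illustration): let $p_i$ be the probability of the more likely face of coin $i$, and let $d_i = p_i - \tfrac{1}{2}$ be its distance from unbiased. Assuming the $n$ coins are independent, the more likely parity outcome has probability

$$p_{\text{combined}} = \frac{1}{2} + \frac{1}{2}\prod_{i=1}^{n} 2\,d_i$$

Every factor $2 d_i$ is below 1 for any coin that is not completely predictable, so each additional coin shrinks the product, and a single fair coin ($d_i = 0$) makes the whole product zero.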

As an example, let’s take 2 coins that each have a bias of 75%. The ‘distance from unbiased’ for each coin is 0.25. We double that for each coin to get 0.5, then we multiply them together to get 0.25. Then we halve the whole product to get 0.125. Finally, we add 0.5 to get 62.5%. The full equation would be (0.5 + (1/2) * (2*0.25)*(2*0.25)) = 62.5%.

As another example, let’s take 3 coins with biases 60%, 70%, and 80%. The equation for combining these coin flips using modular addition would be (0.5 + (1/2) * (2*0.1)*(2*0.2)*(2*0.3)) = 52.4% bias for the simulated coin.

In my own experiments, it seemed that each coin had a bias of at most 55%. That means the bias of combining 5 coins is 50.0005%, which is almost perfect.
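The three examples above can be reproduced with a short Python sketch. It assumes independent coins and Python 3.8 or newer (for `math.prod`); the function name `combined_bias` is made up here.

```python
from math import prod


def combined_bias(biases):
    """Bias of the simulated coin when combining coins by parity.

    Each entry is a coin's bias as a probability, e.g. 0.75 for 75%.
    """
    return 0.5 + 0.5 * prod(2 * (b - 0.5) for b in biases)


print(f"{combined_bias([0.75, 0.75]):.4%}")      # 62.5000%
print(f"{combined_bias([0.6, 0.7, 0.8]):.4%}")   # 52.4000%
print(f"{combined_bias([0.55] * 5):.4%}")        # 50.0005%
```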

Measuring Security from Bias

The main reason that we generate secure randomness is to give ourselves protection against an attacker who is trying to guess our secret. And we can use a mathematical concept called Shannon Entropy to determine exactly how difficult it is for an attacker to guess correctly.

The Shannon Entropy of a random event can be computed by running the probability of each outcome through the equation p * log2(1/p) and summing the results together. In the example of a perfectly fair coin, we add the Shannon Entropy of the probability of heads to the Shannon Entropy of the probability of tails to get the total amount of entropy produced by the fair coin: 0.5 * log2(1/0.5) + 0.5 * log2(1/0.5) = 1. A completely fair coin produces 1 bit of entropy per coin flip. A coin with a bias of 75% produces the equation 0.75 * log2(1/0.75) + 0.25 * log2(1/0.25) = ~0.811 bits of entropy per coin flip.

Assuming complete independence between coin flips, we can simply add up the bits from each coin flip to compute the total entropy. So if we use a coin with a 75% bias to create a 128 bit “random” number, the total amount of entropy is actually 0.811*128 = ~103.8 bits of entropy. If the coin flips are not independent, we can convert the correlation to an additional bias. So for example, if correlation between flips means that the effective bias is 85%, the total entropy from 128 coin flips is ~78 bits of entropy.
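For anyone who wants to plug in their own numbers, here is a minimal Python sketch of the entropy calculation for a two-outcome event like a coin flip. The function name `binary_entropy` is an assumption of this sketch, and the totals assume independent flips as described above.

```python
from math import log2


def binary_entropy(p):
    """Shannon entropy in bits of a coin that lands heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0  # a fully predictable coin produces no entropy
    return p * log2(1 / p) + (1 - p) * log2(1 / (1 - p))


print(round(binary_entropy(0.5), 3))         # 1.0
print(round(binary_entropy(0.75), 3))        # 0.811
print(round(128 * binary_entropy(0.75), 1))  # 103.8
print(round(128 * binary_entropy(0.85), 1))  # 78.1
```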

When we say that a random number has 78 bits of entropy, what we really mean is that an attacker has a 1 in 2⁷⁸ chance of guessing the random number. If we are using our random number to create a bitcoin seed, an attacker with a very fast supercomputer may be able to guess 2⁷⁰ times per second, meaning that attacker could guess our bitcoin seed in under 5 minutes. In cryptography, we typically consider a number secure if it has 128 bits of entropy, though this is somewhat arbitrary and really anything above 120 bits is exceptionally secure.

If we apply this math to the tornado, and assume that the total bias of each tornado trial is 51.5625% (the result of combining 5 coins each with a 75% bias), the total amount of entropy in a final 128 bit number is going to be ~127.9 bits. Despite highly imperfect coins, the resulting random number is nearly perfect. Even if we assume that the individual coins in the tornado each have a bias of 85%, each trial has a bias of about 58.4% and we still get ~125.4 bits of entropy in our final random number, which for most people is more than enough to be considered secure.
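Estimates like these come from chaining the bias formula and the entropy formula together, which is easy to get wrong by hand. The sketch below is one way to check them, assuming independent coins that all share the same bias (strictly between 50% and 100%); the function name and its defaults of 5 coins and 128 bits are choices made for this illustration.

```python
from math import log2


def tornado_entropy(coin_bias, coins=5, bits=128):
    """Return (bias of one tornado trial, total entropy of all the bits)."""
    # Combine the coins by parity: halve the product of the doubled distances.
    trial_bias = 0.5 + 0.5 * (2 * (coin_bias - 0.5)) ** coins
    # Shannon entropy of one trial, then add up the independent trials.
    per_bit = (trial_bias * log2(1 / trial_bias)
               + (1 - trial_bias) * log2(1 / (1 - trial_bias)))
    return trial_bias, bits * per_bit


for bias in (0.75, 0.85):
    trial_bias, total = tornado_entropy(bias)
    print(f"coin bias {bias:.0%}: trial bias {trial_bias:.4%}, "
          f"about {total:.1f} bits")
```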

Trusting the Entropy

Sometimes people make mistakes when reviewing random numbers. There’s a famous story from World War 2 where operators were manually adjusting the random numbers produced by machines because they didn’t “look” random enough. Sometimes there would be long strings of the same number all in a row, and the operators would “fix” these occurrences by inserting some more random-seeming variety.

I couldn’t figure out whether that story is true, but it hammers home a point: true randomness sometimes doesn’t “feel” random enough. If you generate a random string that is 128 bits in length, you are very likely to find a run of zeroes or ones that is at least 6 long, a run of 8 or more turns up in more than a third of such strings, and even a run of 15 or longer, while rare, is possible and not something to be worried about.
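If you are curious how likely a given run length really is, it can be computed exactly rather than guessed. The sketch below assumes perfectly fair, independent bits and counts the strings that avoid long runs; the function name is made up for this illustration.

```python
from fractions import Fraction


def prob_run_at_least(n, k):
    """Exact chance that n fair bits contain a run of k or more identical bits."""
    if k <= 1:
        return Fraction(1)
    # counts[r] = number of strings so far whose last run has length r + 1,
    # counting only strings in which every run is shorter than k.
    counts = [0] * (k - 1)
    counts[0] = 2  # the one-bit strings "0" and "1"
    for _ in range(n - 1):
        switched = sum(counts)  # the next bit differs, starting a new run
        counts = [switched] + counts[:-1]  # or it matches, extending the run
    return 1 - Fraction(sum(counts), 2 ** n)


for k in (6, 8, 15):
    print(k, float(prob_run_at_least(128, k)))
```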

Using Dice Instead of Coins

Optionally, we can use dice instead of coins. If possible, this is actually preferred, because dice are a lot more chaotic than coins and generally are more immune to consistency and correlation that may be introduced by a human. For a coin to change from one result to another, it needs to rotate a full 180 degrees, and this rotation has to happen along the right axis. For a 6 sided die to change values, it only needs to rotate 90 degrees, and this rotation can happen along any axis. The better randomness properties of dice are one of the main reasons you see dice in casinos, but you don’t usually see coin flips in casinos.

You can do the same modular arithmetic to combine the results of dice tosses that you use to combine the results of coin tosses. When you throw multiple dice, sum up the values, and then count the result as a ‘0’ if the sum is even, or a ‘1’ if the sum is odd.

Using Computers to Improve Entropy Extraction

If we’re willing to use computers to help us derive our random number, we can do even better. We know from Shannon Entropy that 2 coins with 75% bias each have 0.811 bits of entropy. In total, that means there is up to 1.622 bits of entropy that can be extracted from these two coin tosses. With our modular addition, we are able to simulate a coin with a bias of 62.5%, allowing us to extract ~0.954 bits of entropy total.

We can extract the full entropy by feeding the results of the coin tosses into a secure hash function like sha256. It should be noted that in order to extract the full entropy, you need to also record which coin had which result, which means you need to be able to tell your coins apart after you throw them. For example if one coin is a penny and the other is a nickel, you can extract the full entropy of these two tosses by feeding a string like “pH-nT” into sha256. The resulting output will have the full 1.622 bits of randomness.

If you throw the coins multiple times, you can use a string like “pH-nT:pH-nH:pT-nH” to represent all of your trials. The resulting hash will have more and more entropy, all the way until it reaches the cap of 256 bits, which is the maximum amount of entropy that can be produced by sha256.
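In Python, hashing a toss record like this takes only the standard library’s `hashlib` module. The helper name `hash_tosses` is made up for this sketch, and the record string is the example from above.

```python
import hashlib


def hash_tosses(record):
    """Return the sha256 digest of a toss record as a hex string."""
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


digest = hash_tosses("pH-nT:pH-nH:pT-nH")
print(digest)
print(len(digest) * 4)  # 256, since each hex character encodes 4 bits
```

Keep in mind that the digest is always 256 bits long no matter how few tosses went in; the entropy it contains is still limited by what the tosses actually provided.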

If we’re willing to mix a camera into our setup, we can extract even more entropy per coin toss. We can throw the coins, and then take a picture of them. In the picture, not only will we capture the outcomes of the coin toss, but we will also capture the physical locations of the coins, which itself is random and adds entropy. And we get even more entropy from things like what angle the picture was taken at, what the lighting in the room was like, and we even get entropy boosts from things like noise/fuzz due to the camera sensor being imperfect.

The best part is that we don’t need any sort of fancy algorithm to extract all of this entropy. We can just take the entire image and throw it into sha256, and the hash function will automatically soak up the full entropy of the picture, including sources of entropy that we aren’t even aware of. In practice, merely taking a single picture of a blank wall is likely to produce more than 128 bits of entropy; the coins are more commemorative than they are required.
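Throwing an image into sha256 just means hashing the raw bytes of the file. A minimal sketch, assuming the photo has been saved to disk; the helper name and the filename `coins.jpg` are hypothetical.

```python
import hashlib


def sha256_file(path, chunk_size=65536):
    """Hash a file's raw bytes in chunks, so large photos fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Hypothetical usage, with a photo of the coins saved as coins.jpg:
# print(sha256_file("coins.jpg"))
```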

Why We Might Not Trust Computers

The reason someone might want to generate a bitcoin seed by hand is because they don’t trust computers. And that distrust comes from a major caveat that we mentioned earlier in this blog post: combining sources of entropy is only secure if there is no sentience in your system. And in the case of computers, malware and/or developer incompetence represents potential sentience that can corrupt your random numbers and give you an insecure bitcoin seed.

If we are using a camera, some malicious element in the camera could theoretically be modifying the image after it is taken, adding a little bit of noise here-or-there to corrupt the result and ensure the final image has a somewhat predictable sha256 hash.

Another attack vector could be malware that computes the incorrect sha256 hash. Since we can’t verify by hand or in our heads the result of hashing an image, we have to trust the output that the computer gives us, and a computer that is infected with malware may lie so that we use an insecure seed.

In practice, you may think that the chances of such malware existing in your key generating setup are very small, and you’d probably be right. Much of the advantage of doing things by hand boils down to fun and learning.

Going from a Bitstring to a Bitcoin Seed Phrase

By this point, we’ve generated a secure 128 bit string of ones and zeros and now we need to convert that to an actual usable password. And Bitcoin seed phrases are one of the easiest ways to convert a bitstring to a human friendly set of words.

The first thing you need to do is break your bitstring into pieces that are 11 bits large each. You will end up with 11 strings that are each 11 bits long, and 1 string at the end which is only 7 bits long. For the sake of brevity, I’m going to use an example that is 18 bits long — one string of 11, and one string of 7:

1 1 1 1 0 0 1 0 0 1 0 :::: 1 1 0 1 1 1 0

To convert these bitstrings into seed words, we are going to have to do a little bit of math, and also use this lookup table. We’re going to look at the bitstrings as though they are binary and convert them to a number, and then look up the corresponding word for that number. For the final word we’ll need to do something special, which we’ll explain later.

For the first 11 bitstrings, we can convert them from binary to a number by adding up the values that correspond to the 1’s. The first digit in our bitstring is worth ‘1024’, and each digit after it is worth half of the previous digit. Here’s a table that shows my string and the values that correspond to each digit:

1 1 1 1 0 0 1 0 0 1 0

| | | | | | | | | | |

1024 512 256 128 64 32 16 8 4 2 1

Since we ignore the zeroes, my string is going to be 1024 + 512 + 256 + 128 + 16 + 2 = 1938. And then, because the lookup table starts the list at ‘1’ instead of ‘0’, we’re going to need to add an extra 1 to our number to correct for the alignment of the lookup table. So my final number is ‘1939’, and that corresponds to the word “venture”.
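The same arithmetic is handy to have in Python for checking your hand calculations. In this sketch the function names are made up, and the ‘+ 1’ follows the alignment of the lookup table described above.

```python
def split_words(bits, width=11):
    """Break a bitstring into pieces of `width` bits (the last may be shorter)."""
    return [bits[i:i + width] for i in range(0, len(bits), width)]


def word_number(chunk):
    """Lookup-table number for a full 11-bit chunk (the table starts at 1)."""
    if len(chunk) != 11:
        raise ValueError("expected exactly 11 bits")
    return int(chunk, 2) + 1


chunks = split_words("11110010010" + "1101110")
print(chunks)                  # ['11110010010', '1101110']
print(word_number(chunks[0]))  # 1939
```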

The final bitstring is only 7 bits, because the remaining 4 bits are a checksum. If you don’t care about a checksum, you can pretend that the remaining 4 bits are ‘0’ and use the method above to find the corresponding word. This will not impact the overall security of your passphrase.

If you specifically want to make a valid bitcoin seed, you’ll need to compute the checksum. Unfortunately, the checksum is computed using sha256, which is not easy to do by hand. Thankfully, there are only 16 possible options for the checksum, so it’s actually possible to just try all 16 options and wait until your wallet accepts one of them. To figure out which 16 words to use, we do the same process except leave the final 4 bits empty.

1 1 0 1 1 1 0 : - - - -

| | | | | | | | | | |

1024 512 256 128 64 32 16 : - - - -

For my checksum word the value is 1024 + 512 + 128 + 64 + 32 = 1760. And then we have to add 1 to account for the table alignment, so my final number is ‘1761’ and my potential set of words is the 16 words starting with ‘swing’. There is a guarantee that exactly one of the 16 words starting with swing will produce a valid bitcoin seed. You can feed each attempted seed to an offline bitcoin wallet, and then use whichever seed the wallet accepts as your true bitcoin seed.
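If you are comfortable letting a computer see your bits, the guessing can be skipped. For a full 128 bit string, the BIP39 standard defines the checksum as the first 4 bits of the sha256 hash of the 16 bytes of entropy. The sketch below applies that rule; the function names are made up, it does not work on my shortened 18 bit example, and the ‘+ 1’ matches the lookup table alignment used in this post.

```python
import hashlib


def bip39_checksum_bits(bits):
    """Return the 4 checksum bits for a 128-bit string of '0'/'1' characters."""
    if len(bits) != 128 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly 128 characters, each '0' or '1'")
    entropy = int(bits, 2).to_bytes(16, "big")
    first_byte = hashlib.sha256(entropy).digest()[0]
    return format(first_byte >> 4, "04b")  # top 4 bits of the hash


def last_word_number(bits):
    """Lookup-table number (starting at 1) of the twelfth seed word."""
    return int(bits[-7:] + bip39_checksum_bits(bits), 2) + 1


# Sanity check with an all-zero string (never use this as a real seed).
print(bip39_checksum_bits("0" * 128))  # 0011
print(last_word_number("0" * 128))     # 4
```

Of course this brings back the trust problem discussed above, so it is best done on an offline machine.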

Creating a Human Friendly Checksum

It wasn’t quite satisfying to me to end this journey with a machine verified checksum, so I went the full distance and also created a checksum algorithm that is both useful and can be easily computed by hand. The biggest challenge about this checksum is that it’s not supported by any wallets, and therefore is purely for fun.

If there are any wallets that would like to support this checksum in seed phrases, they will need to be able to distinguish between seeds checksummed by sha256 and seeds checksummed using the human friendly method. I propose that seeds which use the human computable checksum (which we can call Taek4) suffix the final word in the seed with a “-t”. Using my short seed as an example, the full result would be “venture talent-t”.

Before I jump into the algorithm that we will be using to create our checksum, I want to cover a few properties that help make the checksum useful. The first is that every bit of our checksum should be influenced by every single word in the seed phrase. This ensures that each bit in our checksum is helping to catch mistakes made by the user. And the second is that each bit in the checksum should have as much independence as possible from the other bits in the checksum. If the bits don’t have good independence, the total number of errors that the checksum is capable of catching will be lower.

We know from information theory that a single bit in a checksum is capable of catching at most half of all mistakes. And we can accomplish this trivially by using a parity bit. To do this, we count up the total number of ones in our full bitstring. If the number of ones is even, our first checksum bit is ‘0’, and if the number is odd, the first checksum bit is ‘1’. In my example above, the total number of ones is eleven, which means the first checksum bit is ‘1’.

1 1 1 1 0 0 1 0 0 1 0 :::: 1 1 0 1 1 1 0:- - - -

^ ^ ^ ^ ^ ^ ^ ^ ^ ^ ^ :::: ^ ^ ^ ^ ^ ^ ^:1 - - -

Information theory tells us that if we add a second checksum bit, we can catch at most half of the remaining mistakes. We could achieve this by taking the parity bit of the first half of our seed, but this has the shortcoming that it only tracks errors with the first half of our seed words. Instead, what we want to do is take the parity of every-other bit in our seed. In our example, we end up with ‘0’ for the second parity bit.

1 1 1 1 0 0 1 0 0 1 0 :::: 1 1 0 1 1 1 0:- - - -

^ . ^ . ^ . ^ . ^ . ^ :::: . ^ . ^ . ^ .:- 0 - -

^ ^ ^ ^ ^ ^ ^ ^ ^ ^ ^ :::: ^ ^ ^ ^ ^ ^ ^:1 - - -

By taking the parity of every other bit, we cover a full 1/2 of remaining potential mistakes, and we also ensure that every single seed word has influence over the outcome of this checksum.

I will note at this point that because the seed words are 11 bits each, some of the seed words will have 6 bits which influence the second checksum bit, and some seed words will have 5 bits which influence the second checksum bit. At least for checksums that are computed by hand, I believe this is unavoidable. If seed words were 12 bits instead (requiring a lookup table of 4096 words instead of 2048 words), we would have more consistent coverage.

With our third parity bit, we once again can catch at most half of all remaining mistakes. And you may think that we can achieve this by grabbing the parity bit of the other half of the bits (the ones that the second checksum bit skipped), but actually this would add no new information! If we know the parity bit of the entire seed, and we also know the parity bit of every-other bit, then we can compute the parity bit of the skipped bits by XOR’ing those two bits together. Instead, we will want to use a ‘grab 2 skip 2’ strategy to add the most possible diversity. In our example, we end up with ‘1’ as the third parity bit.

1 1 1 1 0 0 1 0 0 1 0 :::: 1 1 0 1 1 1 0:- - - -

^ ^ . . ^ ^ . . ^ ^ . :::: . ^ ^ . . ^ ^:- - 1 -

^ . ^ . ^ . ^ . ^ . ^ :::: . ^ . ^ . ^ .:- 0 - -

^ ^ ^ ^ ^ ^ ^ ^ ^ ^ ^ :::: ^ ^ ^ ^ ^ ^ ^:1 - - -

I want to take a quick pause to look at the pattern of the arrows above. We know that our current algorithm does a good job of checking for the maximum possible number of mistakes because every consecutive set of four bits is governed by a different pattern created by the checksum, and none of the patterns are inverses of each other.

For our final parity bit, we will use a ‘grab 4 skip 4’ strategy, which gives us the same properties as all of the other bits. That gives our fourth parity bit a value of ‘1’.

1 1 1 1 0 0 1 0 0 1 0 :::: 1 1 0 1 1 1 0:- - - -

^ ^ ^ ^ . . . . ^ ^ ^ :::: ^ . . . . ^ ^:- - - 1

^ ^ . . ^ ^ . . ^ ^ . :::: . ^ ^ . . ^ ^:- - 1 -

^ . ^ . ^ . ^ . ^ . ^ :::: . ^ . ^ . ^ .:- 0 - -

^ ^ ^ ^ ^ ^ ^ ^ ^ ^ ^ :::: ^ ^ ^ ^ ^ ^ ^:1 - - -

Final Seed:

1 1 1 1 0 0 1 0 0 1 0 :::: 1 1 0 1 1 1 0 1 0 1 1

Final Seed Phrase:

venture talent-t

If you are wondering why we didn’t use a ‘grab 3 skip 3’ strategy for the final parity bit, the answer is that the ‘grab 3 skip 3’ method doesn’t have nearly as much independence from the other bits as the ‘grab 4 skip 4’ strategy. That is, the ‘grab 4 skip 4’ strategy is capable of catching half of all remaining mistakes, but the ‘grab 3 skip 3’ strategy will catch fewer than half of all remaining mistakes.
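Since no wallet supports this checksum yet, here is a short Python sketch of the four parity bits so you can check your hand computation. It follows the patterns from the worked example above, with every grab starting at the first bit of the seed; the function name and the `strides` list are my shorthand for this illustration.

```python
def taek4_checksum(bits):
    """Four parity bits over a string of '0'/'1' characters.

    Bit 1 covers every bit, bit 2 covers every-other bit, bit 3 uses
    'grab 2 skip 2' and bit 4 uses 'grab 4 skip 4'.
    """
    strides = [None, 1, 2, 4]  # None means 'use every bit'
    checksum = []
    for stride in strides:
        if stride is None:
            chosen = list(bits)
        else:
            chosen = [b for i, b in enumerate(bits) if (i // stride) % 2 == 0]
        ones = sum(1 for b in chosen if b == "1")
        checksum.append(str(ones % 2))
    return "".join(checksum)


seed = "11110010010" + "1101110"
print(taek4_checksum(seed))  # 1011
```

Appending ‘1011’ to the final 7 bits gives 1 1 0 1 1 1 0 1 0 1 1, which is the word “talent” from the example.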

Conclusion

Whether or not you have any intention to generate your own random numbers by hand, hopefully you found this post interesting and educational. The information theory that we explored has practical applications throughout computer science, especially when you are dealing with data compression or cryptography.

Personally, I find generating my own entropy to be deeply satisfying, not only because it grants me peace of mind but also because it gives me a greater sense of ownership and a deeper connection with the secure secrets that drive significant chunks of my life.
