source link: https://brandonwillmott.com/2020/09/22/designing-your-vmware-cloud-foundation-management-domain/

Planning the Hardware Requirements for the VMware Cloud Foundation Management Domain

When talking with customers about deploying a standard VMware Cloud Foundation (VCF) architecture, the hardware requirements for the management domain are usually glossed over. There’s a basic understanding that it will consist of 4 hosts with sufficient resources to run the SDDC components for the workload domains that it manages. When it comes time to purchase, though, what hardware will actually run the management domain, and does VMware publish recommended specs for it?

The management domain hardware specs for VCF 3.x and earlier are available in the VCF documentation, but the documentation hasn’t been updated yet for 4.0. Instead, the 4.0 specs are tucked away in the Prerequisite Checklist tab of the Planning and Preparation worksheet found in the VMware Cloud Foundation documentation. As of September 2020, for VCF 4.0, that information is:

| Server Component | Minimum Requirements |
| --- | --- |
| Server Type | 4x vSAN ReadyNodes – All Flash |
| CPU | Any supported |
| Memory | 256 GB per server |
| Storage (Boot) | 32 GB SATA-DOM or SD Card |
| Storage (Cache) | 1.2 TB Raw (2x disk groups, 600 GB cache each) |
| Storage (Capacity) | 10 TB Raw (2x disk groups, 5 TB each) |
| NIC | 2x 10 GbE and 1x 1 GbE BMC |

What’s Deployed in a Management Domain During Bring-Up?

At a high level, we know that VCF includes vSphere (ESXi and vCenter), vSAN, SDDC Manager, and NSX-T, but which VMs actually get deployed during bring-up, and what are their specs? The following table lists those components and their compute and storage requirements:

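| Component | vCPU | Memory | Storage |
| --- | --- | --- | --- |
| SDDC Manager | 4 | 16 GB | 800 GB |
| vCenter Server (small) | 4 | 19 GB | 480 GB |
| NSX-T Manager 01 (medium) | 6 | 24 GB | 300 GB |
| NSX-T Manager 02 | 6 | 24 GB | 300 GB |
| NSX-T Manager 03 | 6 | 24 GB | 300 GB |
| NSX-T Edge Node 01 (medium) | 4 | 8 GB | 200 GB |
| NSX-T Edge Node 02 | 4 | 8 GB | 200 GB |
| Total | 34 | 123 GB | 2580 GB |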

Note: NSX Edges are not deployed automatically for an NSX-T VI workload domain but I have included their requirements here. You can deploy them manually after the VI workload domain is created. NSX Edges are optional and only needed to enable overlay VI networks and public networks for north-south traffic. Subsequent NSX-T VI workload domains share the NSX-T Edges deployed for the first workload domain.
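The totals for the bring-up components are easy to re-derive if you want to adjust the list, for example by dropping the optional NSX-T Edge nodes. The following is a minimal sketch; the per-VM figures are copied from this article, while the names `BRING_UP_VMS` and `total_requirements` are made up for illustration:

```python
# Per-VM requirements copied from the bring-up table in this article:
# (name, vCPU, memory in GB, storage in GB)
BRING_UP_VMS = [
    ("SDDC Manager", 4, 16, 800),
    ("vCenter Server (small)", 4, 19, 480),
    ("NSX-T Manager 01 (medium)", 6, 24, 300),
    ("NSX-T Manager 02", 6, 24, 300),
    ("NSX-T Manager 03", 6, 24, 300),
    ("NSX-T Edge Node 01 (medium)", 4, 8, 200),
    ("NSX-T Edge Node 02", 4, 8, 200),
]


def total_requirements(vms):
    """Return (vCPU, memory GB, storage GB) summed over a list of VMs."""
    vcpu = sum(vm[1] for vm in vms)
    memory_gb = sum(vm[2] for vm in vms)
    storage_gb = sum(vm[3] for vm in vms)
    return vcpu, memory_gb, storage_gb


if __name__ == "__main__":
    # All seven VMs, matching the Total row of the table
    print(total_requirements(BRING_UP_VMS))
    # Same calculation without the optional NSX-T Edge nodes
    without_edges = [vm for vm in BRING_UP_VMS if "Edge" not in vm[0]]
    print(total_requirements(without_edges))
```

Filtering the list this way shows how much of the footprint is attributable to the Edge nodes, which is handy if you don't plan to deploy them.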

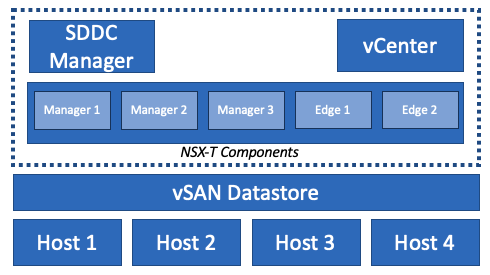

Logically, our management domain will look like this:

Determining Cluster Resource Utilization

Now that we know that information, the lingering question is: how much compute and storage capacity does this hardware BOM give us and how many VI workload domains will it support?

The easiest way to answer this question is by using the vSAN Sizer! Using the vSAN Sizer, we can specify some initial cluster parameters such as socket count, cores per socket, CPU headroom, and vSAN slack space along with our workloads and the sizer will recommend a vSAN ReadyNode model and count to meet our needs. With the vSAN Sizer, I can’t specify the exact hardware I want so it’s going to be off a bit. For example, I can’t get exactly 10 TB per node nor 600 GB cache. Instead, I was able to base this build off the following AF-4 ReadyNode:

| ReadyNode | AF-4 |
| --- | --- |
| Cores per Node | 24 |
| Memory per Node | 256 GB |
| Storage (Cache) | 600 GB (2x disk groups, 300 GB cache each) |
| Storage (Capacity) | 7.68 TB (2x disk groups, 3.84 TB each) |
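To put those per-node figures in context, here is a rough sketch of the raw resources a cluster of these AF-4 nodes provides. It is plain multiplication and not the vSAN Sizer's model; the function name `cluster_totals` is hypothetical, and evaluating the cluster with one host removed is my own illustration of a host-failure scenario rather than something the sizer output shows:

```python
# Per-node figures for the AF-4 ReadyNode used in this article
CORES_PER_NODE = 24
MEMORY_GB_PER_NODE = 256
CAPACITY_TB_PER_NODE = 7.68  # 2x disk groups, 3.84 TB each


def cluster_totals(nodes=4):
    """Return raw (pre-overhead) cluster resources for a given host count."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return {
        "cores": nodes * CORES_PER_NODE,
        "memory_gb": nodes * MEMORY_GB_PER_NODE,
        "raw_capacity_tb": round(nodes * CAPACITY_TB_PER_NODE, 2),
    }


if __name__ == "__main__":
    print(cluster_totals(4))  # the 4-host management domain
    print(cluster_totals(3))  # the same cluster with one host down
```

These are raw numbers only. Usable capacity is lower once the storage policy, CPU headroom, and vSAN slack space are taken into account, which is what the sizer is for.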

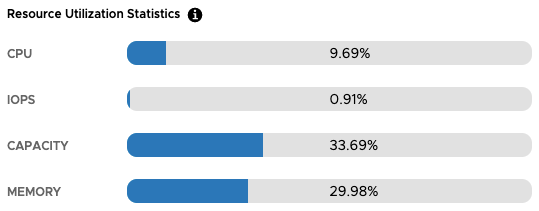

After specifying those parameters, my 4 node cluster utilization with the 7 VMs that make up VMware Cloud Foundation is as follows:

That’s a good start, but how does this BOM scale and what are its limits for supporting VI workload domains?

Resources Required for Adding VI Workload Domains

For each VI workload domain deployed, a new vCenter Server and an NSX-T instance (3x NSX-T Managers) are deployed:

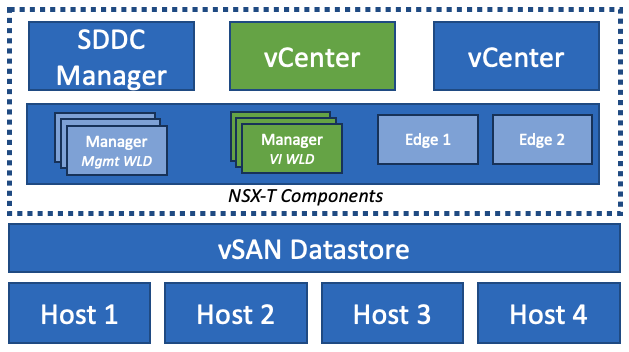

Management Domain Resource Utilization with One VI Workload Domain

Now that we know that a vCenter Server and 3x NSX-T Managers will be deployed in the management domain for the new VI workload domain, what are their specs? By default, SDDC Manager will deploy a medium-sized vCenter Server and large NSX-T Managers:

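| Name | vCPU | Memory | Storage |
| --- | --- | --- | --- |
| vCenter (medium) | 8 | 28 GB | 700 GB |
| NSX-T Manager (large) | 12 | 48 GB | 300 GB |
| NSX-T Manager | 12 | 48 GB | 300 GB |
| NSX-T Manager | 12 | 48 GB | 300 GB |
| Total | 44 | 172 GB | 1600 GB |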

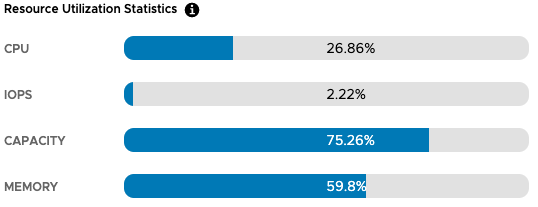

After adding these 4 VMs to our management domain, our utilization is as follows:
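As a rough cross-check on the compute side, the sketch below adds the bring-up totals to the totals for the first VI workload domain and compares them with the raw resources of four AF-4 nodes. The ratios are simple divisions under my own assumptions (no CPU headroom, no hypervisor or vSAN memory overhead), so they will not match the vSAN Sizer's utilization figures; the function name `compute_demand` is hypothetical:

```python
# Totals taken from the two tables in this article: (vCPU, memory GB)
BRING_UP_TOTAL = (34, 123)
FIRST_VI_WLD_TOTAL = (44, 172)

# Raw resources of 4x AF-4 ReadyNodes (24 cores, 256 GB each)
CLUSTER_CORES = 4 * 24
CLUSTER_MEMORY_GB = 4 * 256


def compute_demand():
    """Return combined demand and naive ratios against raw cluster resources."""
    vcpu = BRING_UP_TOTAL[0] + FIRST_VI_WLD_TOTAL[0]
    memory_gb = BRING_UP_TOTAL[1] + FIRST_VI_WLD_TOTAL[1]
    return {
        "vcpu": vcpu,
        "memory_gb": memory_gb,
        # vCPUs allocated per physical core (no headroom applied)
        "vcpu_per_core": vcpu / CLUSTER_CORES,
        # Fraction of raw cluster memory allocated to these VMs
        "memory_fraction": memory_gb / CLUSTER_MEMORY_GB,
    }


if __name__ == "__main__":
    for key, value in compute_demand().items():
        print(f"{key}: {value}")
```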

Subsequent VI workload domains can follow this pattern of one vCenter Server and 3x NSX-T Managers but VI workload domains can share NSX-T instances with each other. However, the management domain NSX-T instances can’t be shared with VI workload domains!

In many cases, one VI workload domain will be sufficient as it can contain up to 64 clusters. But, I’m looking to see how many workload domains this BOM can support so let’s see!

Pushing the Limits of the BOM

For this exercise, I assumed that the architecture allows NSX-T Managers to be shared, so I added one vCenter Server (medium) at a time until I reached compute or storage capacity limits. Based on my testing with the vSAN Sizer, 7 VI workload domains is the limit for this BOM, as capacity reaches 75% (using FTT=1, RAID-1).
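The storage side of this scaling exercise can be approximated with back-of-the-envelope arithmetic. The sketch below is not the vSAN Sizer's model: it assumes only that FTT=1 with RAID-1 stores two full copies of each object, and it ignores slack space and any other overhead the sizer applies, so its percentages come out lower than the sizer's. The function names are hypothetical; the VM sizes come from the tables in this article:

```python
# Raw capacity of 4x AF-4 nodes at 7.68 TB each, in decimal GB
RAW_CAPACITY_GB = 4 * 7680

BRING_UP_STORAGE_GB = 2580      # management domain VMs
FIRST_WLD_STORAGE_GB = 1600     # medium vCenter + 3x large NSX-T Managers
EXTRA_WLD_STORAGE_GB = 700      # medium vCenter only (NSX-T instance shared)
MIRROR_COPIES = 2               # FTT=1 with RAID-1 keeps two copies


def raw_storage_used_gb(wld_count):
    """Estimate raw vSAN capacity consumed for a number of VI workload domains."""
    if wld_count < 0:
        raise ValueError("wld_count must be non-negative")
    provisioned = BRING_UP_STORAGE_GB
    if wld_count >= 1:
        provisioned += FIRST_WLD_STORAGE_GB
        provisioned += (wld_count - 1) * EXTRA_WLD_STORAGE_GB
    return provisioned * MIRROR_COPIES


def storage_fraction(wld_count):
    """Fraction of raw capacity consumed, ignoring slack and other overhead."""
    return raw_storage_used_gb(wld_count) / RAW_CAPACITY_GB


if __name__ == "__main__":
    for count in range(0, 8):
        print(count, raw_storage_used_gb(count), f"{storage_fraction(count):.1%}")
```

The useful part is the shape of the curve: with shared NSX-T instances, each additional workload domain only adds one medium vCenter Server, so storage consumption grows slowly after the first one.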

Because the management domain is HCI-based, we can easily scale up by adding cache and capacity disks to each host or scale out by adding hosts.

Takeaways

VMware’s hardware BOM for the management domain can easily satisfy the requirements of the management domain components plus one VI workload domain. Without making any changes to storage policies (which would require additional hosts to meet N+1 recommendations), the hardware BOM can provide resources for up to 7 VI workload domains (with shared NSX-T instances).

I hope this exercise is helpful to others on their journey to a software-defined datacenter with VMware Cloud Foundation! In part 2 of this topic, we’ll explore what the management domain looks like with vRealize Suite!

If you have comments or questions, please reach out to me on Twitter!
