Blog | Homotopy Type Theory

source link: https://homotopytypetheory.org/blog/

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Erik Palmgren Memorial Conference

Erik Palmgren, professor of Mathematical Logic at Stockholm University, passed away unexpectedly in November 2019.

This conference will be an online workshop to remember and celebrate Erik’s life and work. There will be talks by Erik’s friends and colleagues, as well as time for discussions and exchange of memories during breaks. Talks may be about any topics that Erik would have enjoyed, including but not limited to (in Erik’s words):

– Type theory and its models. The relation between type theory and homotopy theory.

– Categorical logic and category-theoretic foundations.

– Constructive mathematics, especially formal topology and reverse constructive mathematics.

– Nonstandard analysis, especially its constructive aspects

– Philosophy of mathematics related to constructivism.

Online, 19–21 Nov 2020

HoTT Dissertation Fellowship

Update (Nov. 13): Application deadline extended to December 1, 2020.

PhD students close to finishing their thesis are invited to apply for a Dissertation Fellowship in Homotopy Type Theory. This fellowship, generously funded by Cambridge Quantum Computing and Ilyas Khan, will provide $18,000 to support a graduate student finishing a dissertation on a topic broadly related to homotopy type theory. For instance, it can be used to fund a semester free from teaching duties, or to extend a PhD period by a few months.

Applications are due by November 15, 2020, with decisions to be announced in mid-December; the fellowship period can be any time between January 1, 2021 and June 30, 2022 at the discretion of the applicant. To apply, please send the following:

- A research statement of no more than two pages, describing your thesis project and its relationship to homotopy type theory, when you are planning to finish your PhD, and what the money would be used for. Please also mention your current and past sources of support during your PhD, and say a little about your research plans post-graduation.

- A current CV.

- A letter from your advisor in support of your thesis plan, and confirming that your department will be able to use the money as planned.

The recipient of the fellowship will be selected by a committee consisting of Steve Awodey, Thierry Coquand, Emily Riehl, and Mike Shulman, and will be announced here.

Application materials should be submitted by email to: [email protected] .

The Cantor-Schröder-Bernstein Theorem for ∞-groupoids

The classical Cantor-Schröder-Bernstein Theorem (CSB) of set theory, formulated by Cantor and first proved by Bernstein, states that for any pair of sets, if there is an injection of each one into the other, then the two sets are in bijection.

There are proofs that use excluded middle but not choice. That excluded middle is absolutely necessary was recently established by Pierre Pradic and Chad E. Brown.

The appropriate principle of excluded middle for HoTT/UF says that every subsingleton (or proposition, or truth value) is either empty or pointed. The statement that every type is either empty or pointed is much stronger, and amounts to global choice, which is incompatible with univalence (Theorem 3.2.2 of the HoTT book). In fact, in the presence of global choice, every type is a set by Hedberg’s Theorem, but univalence gives types that are not sets. Excluded middle middle, however, is known to be compatible with univalence, and is validated in Voevodsky’s model of simplicial sets. And so is (non-global) choice, but we don’t need it here.

Can the Cantor-Schröder-Bernstein Theorem be generalized from sets to arbitrary homotopy types, or ∞-groupoids, in the presence of excluded middle? This seems rather unlikely at first sight:

- CSB fails for 1-categories.

In fact, it already fails for posets. For example, the intervalsand

are order-embedded into each other, but they are not order isomorphic.

- The known proofs of CSB for sets rely on deciding equality of elements of sets, but, in the presence of excluded middle, the types that have decidable equality are precisely the sets, by Hedberg’s Theorem.

In set theory, a map is an injection if and only if it is left-cancellable, in the sense that

implies

. But, for types

and

that are not sets, this notion is too weak, and, moreover, is not a proposition as the identity type

has multiple elements in general. The appropriate notion of embedding for a function

of arbitrary types

and

is given by any of the following two equivalent conditions:

- The map

is an equivalence for any

.

- The fibers of

are all subsingletons.

A map of sets is an embedding if and only if it is left-cancellable. However, for example, any map that picks a point

is left-cancellable, but it is an embedding if and only if the point

is homotopy isolated, which amounts to saying that the identity type

is contractible. This fails, for instance, when the type

is the homotopical circle, for any point

, or when

is a univalent universe and

is the two-point type, or any type with more than one automorphism.

It is the second characterization of embedding given above that we exploit here.

The Cantor-Schröder-Bernstein Theorem for homotopy types, or ∞-groupoids. For any two types, if each one is embedded into the other, then they are equivalent, in the presence of excluded middle.

We adapt Halmos’ proof in his book Naive Set Theory to our more general situation. We don’t need to invoke univalence, the existence of propositional truncations or any other higher inductive type for our construction. But we do rely on function extensionality.

Let and

be embeddings of arbitrary types

and

.

We say that is a

-point if for any

and

with

, the

-fiber of

is inhabited. Using function extensionality and the assumption that

is an embedding, we see that being a

-point is property rather than data, because subsingletons are closed under products.

Considering and

, we see that if

is a

-point then the

-fiber of

is inhabited, and hence we get a function

of

-points of

into

. By construction, we have that

. In particular if

is a

-point for a given

, we get

, and because

, being an embedding, is left-cancellable, we get

.

Now define by

if

is a

-point, and

, otherwise.

To conclude the proof, it is enough to show that is left-cancellable and split-surjective, as any such map is an equivalence.

To see that is left-cancellable, it is enough to show that the images of

and

in the definition of

don’t overlap, because

and

are left-cancellable. For that purpose, let

be a non-

-point and

be a

-point, and, for the sake of contradiction, assume

. Then

. Now, because if

were a

-point then so would be

, we conclude that it isn’t, and hence neither is

, which contradicts the assumption.

To see that is a split surjection, say that

is an

-point if there are designated

and

with

and the

-fiber of

empty. This is data rather than property, and so this notion could not have been used for the construction of

. But every non-

-point is a

-point, applying excluded middle to the

-fiber of

in the definition of

-point.

Claim. If is not a

-point, then there is a designated point

of the

-fiber of

such that

is not a

-point either. To prove this, first notice that it is impossible that

is not an

-point, by the above observation. But this is not enough to conclude that it is an

-point, because excluded middle applies to subsingletons only, which the notion of

-point isn’t. However, it is readily seen that if

is an

-point, then there is a designated point

in the

-fiber of

. From this it follows that it impossible that the subtype of the fiber consisting of the elements

with

not a

-point is empty. But the

-fiber of

is a proposition because

is an embedding, and hence so is the subtype, and therefore the claim follows by double-negation elimination.

We can now resume the proof that is a split surjection. For any

, we check whether or not

is a

-point. If it is, we map

to

, and if it isn’t we map

to the point

given by the claim.

This concludes the proof.

So, in this argument we don’t apply excluded middle to equality directly, which we wouldn’t be able to as the types and

are not necessarily sets. We instead apply it to (1) the property of being a

-point, defined in terms of the fibers of

, (2) a fiber of

, and (3) a subtype of a fiber of

. These three types are propositions because the functions

and

are embeddings rather than merely left-cancellable maps.

A version of this argument is available in Agda.

HoTT 2019 Last Call

Last call for submissions

INTERNATIONAL CONFERENCE AND SUMMER SCHOOL

ON HOMOTOPY TYPE THEORY

12-17 August 2019

Carnegie Mellon University, Pittsburgh USA

https://hott.github.io/HoTT-2019

Submission of talks and registration are open for the International

Homotopy Type Theory conference (HoTT 2019), to be held August 12-17,

2019, at Carnegie Mellon University in Pittsburgh, USA. Contributions

are welcome in all areas related to homotopy type theory, including

but not limited to:

* Homotopical and higher-categorical semantics of type theory

* Synthetic homotopy theory

* Applications of univalence and higher inductive types

* Cubical type theories and cubical models

* Formalization of mathematics and computer science in homotopy type

theory / univalent foundations

Please submit 1-paragraph abstracts through EasyChair here:

https://easychair.org/conferences/?conf=hott2019

The submission deadline is 1 June 2019; we expect to

notify accepted submissions by 15 June. This conference is run on the

“mathematics model”: full papers will not be submitted, submissions

will not be refereed, and submission is not a publication. Please

email [email protected] with any questions.

STUDENT PAPER AWARD

A prize of $500 (and distinguished billing in the conference program)

will be awarded to the best paper submitted by a student (or recently

graduated student). To be eligible, you must include in your

submission (or send separately to [email protected]) a link

to a preprint of your paper (e.g. on arXiv or a private web space).

REGISTRATION, ACCOMODATION, AND TRAVEL

Registration for the conference and the summer school is now open at

https://hott.github.io/HoTT-2019/registration/. A limited amount of

financial support is available for students and postdoctoral

researchers; application instructions are available at the web site,

as is information about accomodation and travel options.

INVITED SPEAKERS

Ulrik Buchholtz (TU Darmstadt, Germany)

Dan Licata (Wesleyan University, USA)

Andrew Pitts (University of Cambridge, UK)

Emily Riehl (Johns Hopkins University, USA)

Christian Sattler (University of Gothenburg, Sweden)

Karol Szumilo (University of Leeds, UK)

IMPORTANT DATES

Submission deadline: 1 June

Notification Date: 15 June

Final abstracts due: 1 July

Early Registration deadline: 1 July (reduced fee)

Late Registration deadline: 1 August (increased fee)

Conference: 12-17 August 2019

SUMMER SCHOOL

There will be an associated Homotopy Type Theory Summer School in

the preceding week, August 7th to 10th. The instructors and topics

will be:

Cubical methods: Anders Mortberg (Carnegie Mellon University, USA)

Formalization in Agda: Guillaume Brunerie (Stockholm University, Sweden)

Formalization in Coq: Kristina Sojakova (Cornell University, USA)

Higher topos theory: Mathieu Anel (Carnegie Mellon University, USA)

Semantics of type theory: Jonas Frey (Carnegie Mellon University, USA)

Synthetic homotopy theory: Egbert Rijke (University of Illinois, USA)

SCIENTIFIC COMMITTEE

Steve Awodey (Carnegie Mellon University, USA)

Andrej Bauer (University of Ljubljana, Slovenia)

Thierry Coquand (University of Gothenburg, Sweden)

Nicola Gambino (University of Leeds, UK)

Peter LeFanu Lumsdaine (Stockholm University, Sweden)

Michael Shulman (University of San Diego, USA), chair

We look forward to seeing you in Pittsburgh!

Introduction to Univalent Foundations of Mathematics with Agda

I am going to teach HoTT/UF with Agda at the Midlands Graduate School in April, and I produced lecture notes that I thought may be of wider use and so I am advertising them here.

The source files I used to generate the above html version of the notes are available at github, so that questions, issues and pull requests for fixes, improvements and feature requests are publicly available there.

Geometry in Modal HoTT now on Zoom

The workshop Geometry in Modal HoTT taking place next week at cmu will be available for online participation via Zoom! The recorded talks will be available on youtube (provided the speakers give their consent) sometime after the workshop.

HoTT 2019 Call for Submissions

Submissions of talks are now open for the International Homotopy Type Theory conference (HoTT 2019), to be held from August 12th to 17th, 2019, at Carnegie Mellon University in Pittsburgh, USA. Contributions are welcome in all areas related to homotopy type theory, including but not limited to:

- Homotopical and higher-categorical semantics of type theory

- Synthetic homotopy theory

- Applications of univalence and higher inductive types

- Cubical type theories and cubical models

- Formalization of mathematics and computer science in homotopy type theory / univalent foundations

Please submit 1-paragraph abstracts through the EasyChair conference system here:

https://easychair.org/conferences/?conf=hott2019

The submission deadline is 1 April 2019; we expect to notify accepted submissions by 1 May. If you need an earlier decision for some reason (e.g. to apply for funding), please submit your abstract by 15 March and send an email to [email protected] notifying us that you need an early decision.

This conference is run on the “mathematics model” rather than the “computer science model”: full papers will not be submitted, submissions will not be refereed, and submission is not a publication (although a proceedings volume might be organized afterwards). More information, including registration, accomodation options, and travel, will be available as the conference approaches at the web site https://hott.github.io/HoTT-2019/ .

Please email [email protected] with any questions.

Cubical Agda

Last year I wrote a post about cubicaltt on this blog. Since then there have been a lot of exciting developments in the world of cubes. In particular there are now two cubical proof assistants that are currently being developed in Gothenburg and Pittsburgh. One of them is a cubical version of Agda developed by Andrea Vezzosi at Chalmers and the other is a system called redtt developed by my colleagues at CMU.

These systems differ from cubicaltt in that they are proper proof assistants for cubical type theory in the sense that they support unification, interactive proof development via holes, etc… Cubical Agda inherits Agda’s powerful dependent pattern matching functionality, and redtt has a succinct notation for defining functions by eliminators. Our goal with cubicaltt was never to develop yet another proof assistant, but rather to explore how it could be to program and work in a core system based on cubical type theory. This meant that many things were quite tedious to do in cubicaltt, so it is great that we now have these more advanced systems that are much more pleasant to work in.

This post is about Cubical Agda, but more or less everything in it can also be done (with slight modifications) in redtt. This extension of Agda has actually been around for a few years now, however it is just this year that the theory of HITs has been properly worked out for cubical type theory:

On Higher Inductive Types in Cubical Type Theory

Inspired by this paper (which I will refer as “CHM”) Andrea has extended Cubical Agda with user definable HITs with definitional computation rules for all constructors. Working with these is a lot of fun and I have been doing many of the proofs in synthetic homotopy theory from the HoTT book cubically. Having a system with native support for HITs makes many things a lot easier and most of the proofs I have done are significantly shorter. However, this post will not focus on HITs, but rather on a core library for Cubical Agda that we have been developing over the last few months:

https://github.com/agda/cubical

The core part of this library has been designed with the aim to:

1. Expose and document the cubical primitives of Agda.

2. Provide an interface to HoTT as presented in the book (i.e. “Book HoTT”), but where everything is implemented with the cubical primitives under the hood.

The idea behind the second of these was suggested to me by Martín Escardó who wanted a file which exposes an identity type with the standard introduction principle and eliminator (satisfying the computation rule definitionally), together with function extensionality, univalence and propositional truncation. All of these notions should be represented using cubical primitives under the hood which means that they all compute and that there are no axioms involved. In particular this means that one can import this file in an Agda developments relying on Book HoTT and no axioms should then be needed; more about this later.

Our cubical library compiles with the latest development version of Agda and it is currently divided into 3 main parts:

Cubical.BasicsCubical.CoreCubical.HITsThe first of these contain various basic results from HoTT/UF, like isomorphisms are equivalences (i.e. have contractible fibers), Hedberg’s theorem (types with decidable equality are sets), various proofs of different formulations of univalence, etc. This part of the library is currently in flux as I’m adding a lot of results to it all the time.

The second one is the one I will focus on in this post and it is supposed to be quite stable by now. The files in this folder expose the cubical primitives and the cubical interface to HoTT/UF. Ideally a regular user should not have to look too closely at these files and instead just import Cubical.Core.Everything or Cubical.Core.HoTT-UF.

The third folder contains various HITs (S¹, S², S³, torus, suspension, pushouts, interval, join, smash products…) with some basic theory about these. I plan to write another post about this soon, so stay tuned.

As I said above a regular user should only really need to know about the Cubical.Core.Everything and Cubical.Core.HoTT-UF files in the core library. The Cubical.Core.Everything file exports the following things:

-- Basic primitives (some are from Agda.Primitive)open import Cubical.Core.Primitives public-- Basic cubical preludeopen import Cubical.Core.Prelude public-- Definition of equivalences, Glue types and-- the univalence theoremopen import Cubical.Core.Glue public-- Propositional truncation defined as a-- higher inductive typeopen import Cubical.Core.PropositionalTruncation public-- Definition of Identity types and definitions of J,-- funExt, univalence and propositional truncation-- using Id instead of Pathopen import Cubical.Core.Id publicI will explain the contents of the Cubical.Core.HoTT-UF file in detail later in this post, but I would first like to clarify that it is absolutely not necessary to use that file as a new user. The point of it is mainly to provide a way to make already existing HoTT/UF developments in Agda compute, but I personally only use the cubical primitives provided by the Cubical.Core.Everything file when developing something new in Cubical Agda as I find these much more natural to work with (especially when reasoning about HITs).

Cubical Primitives

It is not my intention to write another detailed explanation of cubical type theory in this post; for that see my previous post and the paper (which is commonly referred to as “CCHM”, after the authors of CCHM):

Cubical Type Theory: a constructive interpretation of the univalence axiom

The main things that the CCHM cubical type theory extends dependent type theory with are:

- An interval pretype

- Kan operations

- Glue types

- Cubical identity types

The first of these is what lets us work directly with higher dimensional cubes in type theory and incorporating this into the judgmental structure is really what makes the system tick. The Cubical.Core.Primitives and Cubical.Core.Prelude files provide 1 and 2, together with some extra stuff that are needed to get 3 and 4 up and running.

Let’s first look at the cubical interval I. It has endpoints i0 : I and i1 : I together with connections and reversals:

_∧_ : I → I → I_∨_ : I → I → I~_ : I → Isatisfying the structure of a De Morgan algebra (as in CCHM). As Agda doesn’t have a notion of non-fibrant types (yet?) the interval I lives in Setω.

There are also (dependent) cubical Path types:

PathP : ∀ {ℓ} (A : I → Set ℓ) → A i0 → A i1 → Set ℓfrom which we can define non-dependent Paths:

Path : ∀ {ℓ} (A : Set ℓ) → A → A → Set ℓPath A a b = PathP (λ _ → A) a bA non-dependent path Path A a b gets printed as a ≡ b. I would like to generalize this at some point and have cubical extension types (inspired by A type theory for synthetic ∞-categories). These extension types are already in redtt and has proved to be very natural and useful, especially for working with HITs as shown by this snippet of redtt code coming from the proof that the loop space of the circle is the integers:

def decode-square: (n : int)→ [i j] s1 [| i=0 → loopn (pred n) j| i=1 → loopn n j| j=0 → base| j=1 → loop i]= ...Just like in cubicaltt we get short proofs of the basic primitives from HoTT/UF:

refl : ∀ {ℓ} {A : Set ℓ} (x : A) → x ≡ xrefl x = λ _ → xsym : ∀ {ℓ} {A : Set ℓ} {x y : A} → x ≡ y → y ≡ xsym p = λ i → p (~ i)cong : ∀ {ℓ ℓ'} {A : Set ℓ} {B : A → Set ℓ'} {x y : A}(f : (a : A) → B a)(p : x ≡ y) →PathP (λ i → B (p i)) (f x) (f y)cong f p = λ i → f (p i)funExt : ∀ {ℓ ℓ'} {A : Set ℓ} {B : A → Set ℓ'}{f g : (x : A) → B x}(p : (x : A) → f x ≡ g x) →f ≡ gfunExt p i x = p x iNote that the proof of functional extensionality is just swapping the arguments to p!

Partial elements and cubical subtypes

[In order for me to be able to explain the other features of Cubical Agda in some detail I have to spend some time on partial elements and cubical subtypes, but as these notions are quite technical I would recommend readers who are not already familiar with them to just skim over this section and read it more carefully later.]

One of the key operations in the cubical set model is to map an element of the interval to an element of the face lattice (i.e. the type of cofibrant propositions F ⊂ Ω). This map is written (_ = 1) : I → F in CCHM and in Cubical Agda it is written IsOne r. The constant 1=1 is a proof that (i1 = 1), i.e. of IsOne i1.

This lets us then work with partial types and elements directly (which was not possible in cubicaltt). The type Partial φ A is a special version of the function space IsOne φ → A with a more extensional judgmental equality. There is also a dependent version PartialP φ A which allows A to be defined only on φ. As these types are not necessarily fibrant they also live in Setω. These types are easiest to understand by seeing how one can introduce them:

sys : ∀ i → Partial (i ∨ ~ i) Set₁sys i (i = i1) = Set → Setsys i (i = i0) = SetThis defines a partial type in Set₁ which is defined when (i = i1) ∨ (i = i0). We define it by pattern matching so that it is Set → Set when (i = i1) and Set when (i = i0). Note that we are writing (i ∨ ~ i) and that the IsOne map is implicit. If one instead puts a hole as right hand side:

sys : ∀ i → Partial (i ∨ ~ i) Set₁sys i x = {! x !}and ask Agda what the type of x is (by putting the cursor in the hole and typing C-c C-,) then Agda answers:

Goal: Set₁—————————————————————————————————————————————x : IsOne (i ∨ ~ i)i : II usually introduce these using pattern matching lambdas so that I can write:

sys' : ∀ i → Partial (i ∨ ~ i) Set₁sys' i = \ { (i = i0) → Set; (i = i1) → Set → Set }This is very convenient when using the Kan operations. Furthermore, when the cases overlap they must of course agree:

sys2 : ∀ i j → Partial (i ∨ (i ∧ j)) Set₁sys2 i j = \ { (i = i1) → Set; (i = i1) (j = i1) → Set }In order to get this to work Andrea had to adapt the pattern-matching of Agda to allow us to pattern-match on the faces like this. It is however not yet possible to use C-c C-c to automatically generate the cases for a partial element, but hopefully this will be added at some point.

Using the partial elements there are also cubical subtypes as in CCHM:

_[_↦_] : ∀ {ℓ} (A : Set ℓ) (φ : I) (u : Partial φ A) →Agda.Primitive.SetωA [ φ ↦ u ] = Sub A φ uSo that a : A [ φ ↦ u ] is a partial element a : A that agrees with u on φ. We have maps in and out of the subtypes:

inc : ∀ {ℓ} {A : Set ℓ} {φ} (u : A) →A [ φ ↦ (λ _ → u) ]ouc : ∀ {ℓ} {A : Set ℓ} {φ : I} {u : Partial φ A} →A [ φ ↦ u ] → AIt would be very nice to have subtyping for these, but at the moment the user has to write inc/ouc explicitly. With this infrastructure we can now consider the Kan operations of cubical type theory.

Kan operations

In order to support HITs we use the Kan operations from CHM. The first of these is a generalized transport operation:

transp : ∀ {ℓ} (A : I → Set ℓ) (φ : I) (a : A i0) → A i1When calling transp A φ a Agda makes sure that A is constant on φ and when calling this with i0 for φ we recover the regular transport function, furthermore when φ is i1 this is the identity function. Being able to control when transport is the identity function is really what makes this operation so useful (see the definition of comp below) and why we got HITs to work so nicely in CHM compared to CCHM.

We also have homogeneous composition operations:

hcomp : ∀ {ℓ} {A : Set ℓ} {φ : I}(u : I → Partial A φ) (a : A) → AWhen calling hcomp A φ u a Agda makes sure that a agrees with u i0 on φ. This is like the composition operations in CCHM, but the type A is constant. Note that this operation is actually different from the one in CHM as φ is in the interval and not the face lattice. By the way the partial elements are set up the faces will then be compared under the image of IsOne. This subtle detail is actually very useful and gives a very neat trick for eliminating empty systems from Cubical Agda (this has not yet been implemented, but it is discussed here).

Using these two operations we can derive the heterogeneous composition

operation:

comp : ∀ {ℓ : I → Level} (A : ∀ i → Set (ℓ i)) {φ : I}(u : ∀ i → Partial φ (A i))(u0 : A i0 [ φ ↦ u i0 ]) → A i1comp A {φ = φ} u u0 =hcomp(λ i → λ { (φ = i1) →transp (λ j → A (i ∨ j)) i (u _ 1=1) })(transp A i0 (ouc u0))This decomposition of the Kan operations into transport and homogeneous composition seems crucial to get HITs to work properly in cubical type theory and in fact redtt is also using a similar decomposition of their Kan operations.

We can also derive both homogeneous and heterogeneous Kan filling using hcomp and comp with connections:

hfill : ∀ {ℓ} {A : Set ℓ} {φ : I}(u : ∀ i → Partial φ A)(u0 : A [ φ ↦ u i0 ])(i : I) → Ahfill {φ = φ} u u0 i =hcomp (λ j → λ { (φ = i1) → u (i ∧ j) 1=1; (i = i0) → ouc u0 })(ouc u0)fill : ∀ {ℓ : I → Level} (A : ∀ i → Set (ℓ i)) {φ : I}(u : ∀ i → Partial φ (A i))(u0 : A i0 [ φ ↦ u i0 ])(i : I) → A ifill A {φ = φ} u u0 i =comp (λ j → A (i ∧ j))(λ j → λ { (φ = i1) → u (i ∧ j) 1=1; (i = i0) → ouc u0 })(inc {φ = φ ∨ (~ i)} (ouc {φ = φ} u0))Using these operations we can do all of the standard cubical stuff, like composing paths and defining J with its computation rule (up to a Path):

compPath : ∀ {ℓ} {A : Set ℓ} {x y z : A} →x ≡ y → y ≡ z → x ≡ zcompPath {x = x} p q i =hcomp (λ j → \ { (i = i0) → x; (i = i1) → q j })(p i)module _ {ℓ ℓ'} {A : Set ℓ} {x : A}(P : ∀ y → x ≡ y → Set ℓ') (d : P x refl) whereJ : {y : A} → (p : x ≡ y) → P y pJ p = transp (λ i → P (p i) (λ j → p (i ∧ j))) i0 dJRefl : J refl ≡ dJRefl i = transp (λ _ → P x refl) i dThe use of a module here is not crucial in any way, it’s just an Agda trick to make J and JRefl share some arguments.

Glue types and univalence

The file Cubical.Core.Glue defines fibers and equivalences (as they were originally defined by Voevodsky in his Foundations library, i.e. as maps with contractible fibers). Using this we export the Glue types of Cubical Agda which lets us extend a total type by a partial family of equivalent types:

Glue : ∀ {ℓ ℓ'} (A : Set ℓ) {φ : I} →(Te : Partial φ (Σ[ T ∈ Set ℓ' ] T ≃ A)) →Set ℓ'This comes with introduction and elimination forms (glue and unglue). With this we formalize the proof of a variation of univalence following the proof in Section 7.2 of CCHM. The key observation is that unglue is an equivalence:

unglueIsEquiv : ∀ {ℓ} (A : Set ℓ) (φ : I)(f : PartialP φ (λ o → Σ[ T ∈ Set ℓ ] T ≃ A)) →isEquiv {A = Glue A f} (unglue {φ = φ})equiv-proof (unglueIsEquiv A φ f) = λ (b : A) →let u : I → Partial φ Au i = λ{ (φ = i1) → equivCtr (f 1=1 .snd) b .snd (~ i) }ctr : fiber (unglue {φ = φ}) bctr = ( glue (λ { (φ = i1) → equivCtr (f 1=1 .snd) b .fst }) (hcomp u b), λ j → hfill u (inc b) (~ j))in ( ctr, λ (v : fiber (unglue {φ = φ}) b) i →let u' : I → Partial (φ ∨ ~ i ∨ i) Au' j = λ { (φ = i1) → equivCtrPath (f 1=1 .snd) b v i .snd (~ j); (i = i0) → hfill u (inc b) j; (i = i1) → v .snd (~ j) }in ( glue (λ { (φ = i1) → equivCtrPath (f 1=1 .snd) b v i .fst }) (hcomp u' b), λ j → hfill u' (inc b) (~ j)))The details of this proof is best studied interactively in Agda and by first understanding the proof in CCHM. The reason this is a crucial observation is that it says that any partial family of equivalences can be extended to a total one from Glue [ φ ↦ (T,f) ] A to A:

unglueEquiv : ∀ {ℓ} (A : Set ℓ) (φ : I)(f : PartialP φ (λ o → Σ[ T ∈ Set ℓ ] T ≃ A)) →(Glue A f) ≃ AunglueEquiv A φ f = ( unglue {φ = φ} , unglueIsEquiv A φ f )and this is exactly what we need to prove the following formulation of the univalence theorem:

EquivContr : ∀ {ℓ} (A : Set ℓ) → isContr (Σ[ T ∈ Set ℓ ] T ≃ A)EquivContr {ℓ} A =( ( A , idEquiv A), λ w i →let f : PartialP (~ i ∨ i) (λ x → Σ[ T ∈ Set ℓ ] T ≃ A)f = λ { (i = i0) → ( A , idEquiv A ) ; (i = i1) → w }in ( Glue A f , unglueEquiv A (~ i ∨ i) f) )This formulation of univalence was proposed by Martín Escardó in (see also Theorem 5.8.4 of the HoTT Book):

https://groups.google.com/forum/#!msg/homotopytypetheory/HfCB_b-PNEU/Ibb48LvUMeUJ

We have also formalized a quite slick proof of the standard formulation of univalence from EquivContr (see Cubical.Basics.Univalence). This proof uses that EquivContr is contractibility of singletons for equivalences, which combined with subst can be used to prove equivalence induction:

contrSinglEquiv : ∀ {ℓ} {A B : Set ℓ} (e : A ≃ B) →(B , idEquiv B) ≡ (A , e)contrSinglEquiv {A = A} {B = B} e =isContr→isProp (EquivContr B) (B , idEquiv B) (A , e)EquivJ : ∀ {ℓ ℓ′} (P : (A B : Set ℓ) → (e : B ≃ A) → Set ℓ′)(r : (A : Set ℓ) → P A A (idEquiv A))(A B : Set ℓ) (e : B ≃ A) →P A B eEquivJ P r A B e =subst (λ x → P A (x .fst) (x .snd))(contrSinglEquiv e) (r A)We then use that the Glue types also gives a map ua which maps the identity equivalence to refl:

ua : ∀ {ℓ} {A B : Set ℓ} → A ≃ B → A ≡ Bua {A = A} {B = B} e i =Glue B (λ { (i = i0) → (A , e); (i = i1) → (B , idEquiv B) })uaIdEquiv : ∀ {ℓ} {A : Set ℓ} → ua (idEquiv A) ≡ refluaIdEquiv {A = A} i j =Glue A {φ = i ∨ ~ j ∨ j} (λ _ → A , idEquiv A)Now, given any function au : ∀ {ℓ} {A B : Set ℓ} → A ≡ B → A ≃ B satisfying auid : ∀ {ℓ} {A B : Set ℓ} → au refl ≡ idEquiv A we directly get that this is an equivalence using the fact that any isomorphism is an equivalence:

module Univalence(au : ∀ {ℓ} {A B : Set ℓ} → A ≡ B → A ≃ B)(auid : ∀ {ℓ} {A B : Set ℓ} → au refl ≡ idEquiv A) wherethm : ∀ {ℓ} {A B : Set ℓ} → isEquiv authm {A = A} {B = B} =isoToIsEquiv {B = A ≃ B} au ua(EquivJ (λ _ _ e → au (ua e) ≡ e)(λ X → compPath (cong au uaIdEquiv)(auid {B = B})) _ _)(J (λ X p → ua (au p) ≡ p)(compPath (cong ua (auid {B = B})) uaIdEquiv))We can then instantiate this with for example the au map defined using J (which is how Vladimir originally stated the univalence axiom):

eqweqmap : ∀ {ℓ} {A B : Set ℓ} → A ≡ B → A ≃ Beqweqmap {A = A} e = J (λ X _ → A ≃ X) (idEquiv A) eeqweqmapid : ∀ {ℓ} {A : Set ℓ} → eqweqmap refl ≡ idEquiv Aeqweqmapid {A = A} = JRefl (λ X _ → A ≃ X) (idEquiv A)univalenceStatement : ∀ {ℓ} {A B : Set ℓ} →isEquiv (eqweqmap {ℓ} {A} {B})univalenceStatement = Univalence.thm eqweqmap eqweqmapidNote that eqweqmapid is not proved by refl, instead we need to use the fact that the computation rule for J holds up to a Path. Furthermore, I would like to emphasize that there is no problem with using J for Path’s and that the fact that the computation rule doesn’t hold definitionally is almost never a problem for practical formalization as one rarely use it as it is often more natural to just use the cubical primitives. However, in Section 9.1 of CCHM we solve this by defining cubical identity types satisfying the computation rule definitionally (following a trick of Andrew Swan).

Cubical identity types

The idea behind the cubical identity types is that an element of an identity type is a pair of a path and a formula which tells us where this path is constant, so for example reflexivity is just the constant path together with the fact that it is constant everywhere (note that the interval variable comes before the path as the path depends on it):

refl : ∀ {ℓ} {A : Set ℓ} {x : A} → Id x xrefl {x = x} = ⟨ i1 , (λ _ → x) ⟩These types also come with an eliminator from which we can prove J such that it is the identity function on refl, i.e. where the computation rule holds definitionally (for details see the Agda code in Cubical.Core.Id). We then prove that Path and Id are equivalent types and develop the theory that we have for Path for Id as well, in particular we prove the univalence theorem expressed with Id everywhere (the usual formulation can be found in Cubical.Basics.UnivalenceId).

Note that the cubical identity types are not an inductive family like in HoTT which means that we cannot use Agda’s pattern-matching to match on them. Furthermore Cubical Agda doesn’t support inductive families yet, but it should be possible to adapt the techniques of Cavallo/Harper presented in

Higher Inductive Types in Cubical Computational Type Theory

in order to extend it with inductive families. The traditional identity types could then be defined as in HoTT and pattern-matching should work as expected.

Propositional truncation

The core library only contains one HIT: propositional truncation (Cubical.Core.PropositionalTruncation). As Cubical Agda has native support for user defined HITs this is very convenient to define:

data ∥_∥ {ℓ} (A : Set ℓ) : Set ℓ where∣_∣ : A → ∥ A ∥squash : ∀ (x y : ∥ A ∥) → x ≡ yWe can then prove the recursor (and eliminator) using pattern-matching:

recPropTrunc : ∀ {ℓ} {A : Set ℓ} {P : Set ℓ} →isProp P → (A → P) → ∥ A ∥ → PrecPropTrunc Pprop f ∣ x ∣ = f xrecPropTrunc Pprop f (squash x y i) =Pprop (recPropTrunc Pprop f x) (recPropTrunc Pprop f y) iHowever I would not only use recPropTrunc explicitly as we can just use pattern-matching to define functions out of HITs. Note that the cubical machinery makes it possible for us to define these pattern-matching equations in a very nice way without any ap‘s. This is one of the main reasons why I find it a lot more natural to work with HITs in cubical type theory than in Book HoTT: the higher constructors of HITs construct actual elements of the HIT, not of its identity type!

This is just a short example of what can be done with HITs in Cubical Agda, I plan to write more about this in a future post, but for now one can look at the folder Cubical/HITs for many more examples (S¹, S², S³, torus, suspension, pushouts, interval, join, smash products…).

Constructive HoTT/UF

By combining everything I have said so far we have written the file Cubical.Core.HoTT-UF which exports the primitives of HoTT/UF defined using cubical machinery under the hood:

open import Cubical.Core.Id publicusing ( _≡_ -- The identity type.; refl -- Unfortunately, pattern matching on refl is not available.; J -- Until it is, you have to use the induction principle J.; transport -- As in the HoTT Book.; ap; _∙_; _⁻¹; _≡⟨_⟩_ -- Standard equational reasoning.; _∎; funExt -- Function extensionality-- (can also be derived from univalence).; Σ -- Sum type. Needed to define contractible types, equivalences; _,_ -- and univalence.; pr₁ -- The eta rule is available.; pr₂; isProp -- The usual notions of proposition, contractible type, set.; isContr; isSet; isEquiv -- A map with contractible fibers-- (Voevodsky's version of the notion).; _≃_ -- The type of equivalences between two given types.; EquivContr -- A formulation of univalence.; ∥_∥ -- Propositional truncation.; ∣_∣ -- Map into the propositional truncation.; ∥∥-isProp -- A truncated type is a proposition.; ∥∥-recursion -- Non-dependent elimination.; ∥∥-induction -- Dependent elimination.)The idea is that if someone has some code written using HoTT/UF axioms in Agda they can just import this file and everything should compute properly. The only downside is that one has to rewrite all pattern-matches on Id to explicit uses of J, but if someone is willing to do this and have some cool examples that now compute please let me know!

That’s all I had to say about the library for now. Pull-requests and feedback on how to improve it are very welcome! Please use the Github page for the library for comments and issues:

https://github.com/agda/cubical/issues

If you find some bugs in Cubical Agda you can use the Github page of Agda to report them (just check that no-one has already reported the bug):

Impredicative Encodings, Part 3

In this post I will argue that, improving on previous work of Awodey-Frey-Speight, (higher) inductive types can be defined using impredicative encodings with their full dependent induction principles — in particular, eliminating into all type families without any truncation hypotheses — in ordinary (impredicative) Book HoTT without any further bells or whistles. But before explaining that and what it means, let me review the state of the art.

Differential Geometry in Modal HoTT

As some of you might remember, back in 2015 at the meeting of the german mathematical society in Hamburg, Urs Schreiber presented three problems or “exercises” as he called it back then. There is a page about that on the nLab, if you want to know more. In this post, I will sketch a solution to some part of the first of these problems, while the occasion of writing it is a new version of my article about this, which now comes with a long introduction.

Urs Schreiber’s problems were all about formalizing results in higher differential geometry, that make also sense in the quite abstract setting of differential cohesive toposes and cohesive toposes.

A differential cohesive topos is a topos with some extra structure given by three monads and three comonads with some nice properties and adjunctions between them. There is some work concerned with having this structure in homotopy type theory. A specialized cohesive homotopy type theory concerned with three of the six (co-)monads, called real-cohesive homotopy type theory was introduced by Mike Shulman.

What I want to sketch here today is concerned only with one of the monads of differential cohesion. I will call this monad coreduction and denote it with . By the axioms of differential cohesion, it has a left and a right adjoint and is idempotent. These properties are more than enough to model a monadic modality in homotopy type theory. Monadic modalities were already defined at the end of section 7 in the HoTT-Book and named just “modalities” and it is possible to have a homotopy type theory with a monadic modality just by adding some axioms — which is known not to work for non-trivial comonadic modalities.

So let us assume that is a monadic modality in HoTT. That means that we have a map

and a unit

such that a property holds, that I won’t really go into in this post — but here it is for completeness: For any dependent type on some type

, such that the unit maps

are equivalences for all

, the map

is an equivalence. So the inverse to this map is an induction principle, that only holds for dependent types subject to the condition above.

The n-truncations and double negation are examples of monadic modalities.

At this point (or earlier), one might ask: “Where is the differential geometry”? The answer is that in this setting, all types carry differential geometric structure that is accessible via and

. This makes sense if we think of some very special interpretations of

and

(and HoTT), where the unit

is given as the quotient map from a space

to its quotient

by a relation that identifies infinitesimally close points in

.

Since we have this abstract monadic modality, we can turn this around and define the notion of two points being infinitesimally close, denoted “

” in terms of the units:

where “” denotes the identity type (of

in this case). The collection of all points y in a type X that are infinitesimally close to a fixed x in X, is called the formal disk at x. Let us denote it with

:

Using some basic properties of monadic modalities, one can show, that any map preserves inifinitesimal closeness, i.e.

is inhabited. For any x in A, we can use this to get a map

which behaves a lot like the differential of a smooth function. For example, the chain rule holds

and if f is an equivalence, all induced are also equivalences. The latter corresponds to the fact that the differential of a diffeomorphism is invertible.

If we have a 0-group G with unit e, the left tranlations are a family of equivalences that consistently identify

with all other formal disks

in G given by the differentials

.

This is essentially a generalization of the fact, that the tangent bundle of a Lie-group is trivialized by left translations and a solution to the first part of the first of Urs Schreiber’s problems I mentioned in the beginning.

With the exception of the chain rule, all of this was in my dissertation, which I defended in 2017. A couple of month ago, I wrote an article about this and put it on the arxiv and since monday, there is an improved version with an introduction that explains what monads you can think of and relates the setup to Synthetic Differential Geometry.

There is also a recording on youtube of a talk I gave about this in Bonn.

HoTT 2019

Save the date! Next summer will be the first:

International Conference on Homotopy Type Theory

(HoTT 2019)

Carnegie Mellon University

12 – 17 August 2019

There will also be an associated:

HoTT Summer School

7 – 10 August 2019

More details to follow soon!

UF-IAS-2012 wiki archived

The wiki used for the 2012-2013 Univalent Foundations program at the Institute for Advanced Study was hosted at a provider called Wikispaces. After the program was over, the wiki was no longer used, but was kept around for historical and archival purposes; much of it is out of date, but it still contains some content that hasn’t been reproduced anywhere else.

Unfortunately, Wikispaces is closing, so the UF-IAS-2012 wiki will no longer be accessible there. With the help of Richard Williamson, we have migrated all of its content to a new archival copy hosted on the nLab server:

Let us know if you find any formatting or other problems.

A self-contained, brief and complete formulation of Voevodsky’s univalence axiom

I have often seen competent mathematicians and logicians, outside our circle, making technically erroneous comments about the univalence axiom, in conversations, in talks, and even in public material, in journals or the web.

For some time I was a bit upset about this. But maybe this is our fault, by often trying to explain univalence only imprecisely, mixing the explanation of the models with the explanation of the underlying Martin-Löf type theory, with none of the two explained sufficiently precisely.

There are long, precise explanations such as the HoTT book, for example, or the various formalizations in Coq, Agda and Lean.

But perhaps we don’t have publicly available material with a self-contained, brief and complete formulation of univalence, so that interested mathematicians and logicians can try to contemplate the axiom in a fully defined form.

So here is an attempt of a self-contained, brief and complete formulation of Voevodsky’s Univalence Axiom in the arxiv.

This has an Agda file with univalence defined from scratch as an ancillary file, without the use of any library at all, to try to show what the length of a self-contained definition of the univalence type is. Perhaps somebody should add a Coq “version from scratch” of this.

There is also a web version UnivalenceFromScratch to try to make this as accessible as possible, with the text and the Agda code together.

The above notes explain the univalence axiom only. Regarding its role, we recommend Dan Grayson’s introduction to univalent foundations for mathematicians.

HoTT at JMM

At the 2018 U.S. Joint Mathematics Meetings in San Diego, there will be an AMS special session about homotopy type theory. It’s a continuation of the HoTT MRC that took place this summer, organized by some of the participants to especially showcase the work done during and after the MRC workshop. Following is the announcement from the organizers.

We are pleased to announce the AMS Special Session on Homotopy Type Theory, to be held on January 11, 2018 in San Diego, California, as part of the Joint Mathematics Meetings (to be held January 10 – 13).

Homotopy Type Theory (HoTT) is a new field of study that relates constructive type theory to abstract homotopy theory. Types are regarded as synthetic spaces of arbitrary dimension and type equality as homotopy equivalence. Experience has shown that HoTT is able to represent many mathematical objects of independent interest in a direct and natural way. Its foundations in constructive type theory permit the statement and proof of theorems about these objects within HoTT itself, enabling formalization in proof assistants and providing a constructive foundation for other branches of mathematics.

This Special Session is affiliated with the AMS Mathematics Research Communities (MRC) workshop for early-career researchers in Homotopy Type Theory organized by Dan Christensen, Chris Kapulkin, Dan Licata, Emily Riehl and Mike Shulman, which took place last June.

The Special Session will include talks by MRC participants, as well as by senior researchers in the field, on various aspects of higher-dimensional type theory including categorical semantics, computation, and the formalization of mathematical theories. There will also be a panel discussion featuring distinguished experts from the field.

Further information about the Special Session, including a schedule and abstracts, can be found at: http://jointmathematicsmeetings.org/meetings/national/jmm2018/2197_program_ss14.html.

Please note that the early registration deadline is December 20, 2017.If you have any questions about about the Special Session, please feel free to contact one of the organizers. We look forward to seeing you in San Diego.

Simon Cho (University of Michigan)

Liron Cohen (Cornell University)

Ed Morehouse (Wesleyan University)

Impredicative Encodings of Inductive Types in HoTT

I recently completed my master’s thesis under the supervision of Steve Awodey and Jonas Frey. A copy can be found here.

Known impredicative encodings of various inductive types in System F, such as the type

of natural numbers do not satisfy the relevant -computation rules. The aim of this work is to refine the System F encodings by moving to a system of HoTT with an impredicative universe, so that the relevant

-rules are satisfied (along with all the other rules). As a result, the so-determined types have their expected universal properties. The main result is the construction of a type of natural numbers which is the initial algebra for the expected endofunctor

.

For the inductive types treated in the thesis, we do not use the full power of HoTT; we need only postulate -types, identity types, “large”

-types over an impredicative universe

and function extensionality. Having large

-types over an impredicative universe

means that given a type

and a type family

, we may form the dependent function type

Note that this type is in even if

is not.

We obtain a translation of System F types into type theory by replacing second order quantification by dependent products over (or alternatively over the subtype of

given by some h-level).

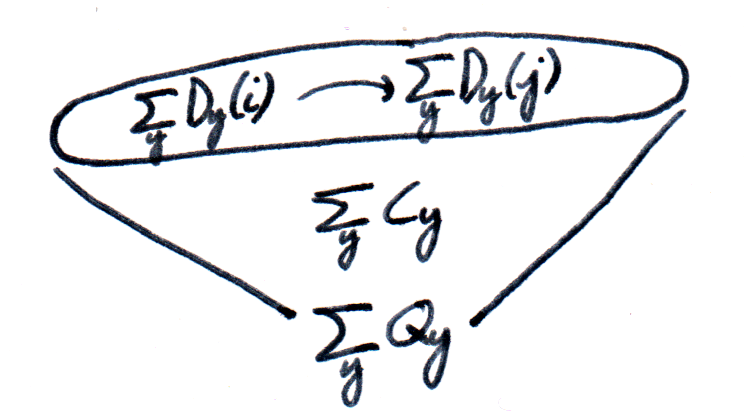

For brevity, we will focus on the construction of the natural numbers (though in the thesis, the coproduct of sets and the unit type is first treated with special cases of this method). We consider categories of algebras for endofunctors:

where the type of objects of is given by

(the type of sets (in )) and morphisms are simply functions between sets.

We can write down the type of -algebras:

and homomorphisms between algebras and

:

which together form the category .

We seek the initial object in . Denote this by

and moreover let

be the forgetful functor to

and

be the covariant Yoneda embedding. We reason as follows:

using the fact that the diagonal functor is left adjoint to the limit functor for the last step. With this, we have a proposal for the definition of the underlying set of the initial -algebra as the limit of the forgetful functor. Using the fact that

is defined as a limit, we obtain an algebra structure

. As

creates limits,

is guaranteed to be initial in

.

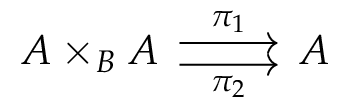

But we want to define in type theory. We do this using products and equalizers as is well known from category theory. Explicitly, we take the equalizer of the following two maps between products:

given by:

The equalizer is, of course:

which inhabits . Impredicativity is crucial for this: it guarantees that the product over

lands in

.

This method can be used to construct an initial algebra, and therefore a fixed-point, for any endofunctor ! We won’t pursue this remarkable fact here, but only consider the case at hand, where the functor

is

. Then the equalizer

becomes our definition of the type of natural numbers (so let us rename

to

for the remainder). Observe that this encoding can be seen as a subtype of (a translation of) the System F encoding given at the start. Indeed, the indexing object

of

is equivalent to

, by

With this, we can define a successor function and zero element, for instance:

(the successor function takes a little more work). We can also define a recursor , given any

and

. In other words, the introduction rules hold, and we can eliminate into other sets. Further, the

-rules hold definitionally – as expected, since they hold for the System F encodings.

Finally we come to the desired result, the -rule for

:

Theorem. Let and

. Moreover, let

such that:

for any . Then

Note that the -rule holds propositionally. By Awodey, Gambino, and Sojakova we therefore also have, equivalently, the induction principle for

, aka the dependent elimination rule. As a corollary, we can prove the universal property that any

-algebra homomorphism is propositionally equal to the appropriate recursor (as a

-algebra homomorphism). Again we emphasise the need for impredicativity: in the proof of

, we have to be able to plug

into quantifiers over

.

A semantic rendering of the above is that we have built a type that always determines a natural numbers object—whereas the System F encoding need not always do so (see Rummelhoff). In an appendix, we discuss a realizability semantics for the system we work in. Building more exotic types (that need not be sets) becomes more complicated; we leave this to future work.

A hands-on introduction to cubicaltt

Some months ago I gave a series of hands-on lectures on cubicaltt at Inria Sophia Antipolis that can be found at:

https://github.com/mortberg/cubicaltt/tree/master/lectures

The lectures cover the main features of the system and don’t assume any prior knowledge of Homotopy Type Theory or Univalent Foundations. Only basic familiarity with type theory and proof assistants based on type theory is assumed. The lectures are in the form of cubicaltt files and can be loaded in the cubicaltt proof assistant.

cubicaltt is based on a novel type theory called Cubical Type Theory that provides new ways to reason about equality. Most notably it makes various extensionality principles, like function extensionality and Voevodsky’s univalence axiom, into theorems instead of axioms. This is done such that these principles have computational content and in particular that we can transport structures between equivalent types and that these transports compute. This is different from when one postulates the univalence axiom in a proof assistant like Coq or Agda. If one just adds an axiom there is no way for Coq or Agda to know how it should compute and one looses the good computational properties of type theory. In particular canonicity no longer holds and one can produce terms that are stuck (e.g. booleans that are neither true nor false but don’t reduce further). In other words this is like having a programming language in which one doesn’t know how to run the programs. So cubicaltt provides an operational semantics for Homotopy Type Theory and Univalent Foundations by giving a computational justification for the univalence axiom and (some) higher inductive types.

Cubical Type Theory has a model in cubical sets with lots of structure (symmetries, connections, diagonals) and is hence consistent. Furthermore, Simon Huber has proved that Cubical Type Theory satisfies canonicity for natural numbers which gives a syntactic proof of consistency. Many of the features of the type theory are very inspired by the model, but for more syntactically minded people I believe that it is definitely possible to use cubicaltt without knowing anything about the model. The lecture notes are hence written with almost no references to the model.

The cubicaltt system is based on Mini-TT:

"A simple type-theoretic language: Mini-TT" (2009) Thierry Coquand, Yoshiki Kinoshita, Bengt Nordström and Makoto Takeya In "From Semantics to Computer Science; Essays in Honour of Gilles Kahn"

Mini-TT is a variant Martin-Löf type theory with datatypes and cubicaltt extends Mini-TT with:

- Path types

- Compositions

- Glue types

- Id types

- Some higher inductive types

The lectures cover the first 3 of these and hence correspond to sections 2-7 of:

"Cubical Type Theory: a constructive interpretation of the univalence axiom" Cyril Cohen, Thierry Coquand, Simon Huber and Anders Mörtberg To appear in post-proceedings of TYPES 2016 https://arxiv.org/abs/1611.02108

I should say that cubicaltt is mainly meant to be a prototype implementation of Cubical Type Theory in which we can do experiments, however it was never our goal to implement a competitor to any of the more established proof assistants. Because of this there are no implicit arguments, type classes, proper universe management, termination checker, etc… Proofs in cubicaltt hence tend to get quite verbose, but it is definitely possible to do some fun things. See for example:

- binnat.ctt – Binary natural numbers and isomorphism to unary numbers. Example of data and program refinement by doing a proof for unary numbers by computation with binary numbers.

- setquot.ctt – Formalization of impredicative set quotients á la Voevodsky.

- hz.ctt –

defined as an (impredicative set) quotient of

nat * nat. - category.ctt – Categories. Structure identity principle. Pullbacks. (Due to Rafaël Bocquet)

- csystem.ctt – Definition of C-systems and universe categories. Construction of a C-system from a universe category. (Due to Rafaël Bocquet)

For a complete list of all the examples see:

https://github.com/mortberg/cubicaltt/tree/master/examples

For those who cannot live without implicit arguments and other features of modern proof assistants there is now an experimental cubical mode shipped with the master branch of Agda. For installation instructions and examples see:

https://agda.readthedocs.io/en/latest/language/cubical.html

https://github.com/Saizan/cubical-demo

In this post I will give some examples of the main features of cubicaltt, but for a more comprehensive introduction see the lecture notes. As cubicaltt is an experimental prototype things can (and probably will) change in the future (e.g. see the paragraph on HITs below).

The basic type theory

The basic type theory on which cubicaltt is based has Π and ∑ types (with eta and surjective pairing), a universe U, datatypes, recursive definitions and mutually recursive definitions (in particular inductive-recursive definitions). Note that general datatypes and (mutually recursive) definitions are not part of the version of Cubical Type Theory in the paper.

Below is an example of how natural numbers and addition are defined:

data nat = zero| suc (n : nat)add (m : nat) : nat -> nat = splitzero -> msuc n -> suc (add m n)If one loads this in the cubicaltt read-eval-print-loop one can compute things:

> add (suc zero) (suc zero)EVAL: suc (suc zero)Path types

The homotopical interpretation of equality tells us that we can think of an equality proof between a and b in a type A as a path between a and b in a space A. cubicaltt takes this literally and adds a primitive Path type that should be thought of as a function out of an abstract interval with fixed endpoints.

We call the elements of the interval names/directions/dimensions and typically use i, j, k to denote them. The elements of the interval

are generated by the following grammar (where dim is a dimension like i, j, k…):

r,s := 0

| 1

| dim

| - r

| r /\ s

| r \/ s

The endpoints are 0 and 1, – corresponds to symmetry (r in is mapped to

1-r), while /\ and \/ are so called “connections”. The connections can be thought of mapping r and s in to

min(r,s) and max(r,s) respectively. As Path types behave like functions out of the interval there is both path abstraction and application (just like for function types). Reflexivity is written:

refl (A : U) (a : A) : Path A a a = <i> aand corresponds to a constant path:

<i> a a -----------> a

with the intuition is that <i> a is a function \(i : . However for deep reasons the interval isn’t a type (as it isn’t fibrant) so we cannot write functions out of it directly and hence we have this special notation for path abstraction.) -> a

If we have a path from a to b then we can compute its left end-point by applying it to 0:

face0 (A : U) (a b : A) (p : Path A a b) : A = p @ 0This is of course convertible to a. We can also reverse a path by using symmetry:

sym (A : U) (a b : A) (p : Path A a b) : Path A b a =<i> p @ -iAssuming that some arguments could be made implicit this satisfies the equality

sym (sym p) == pjudgmentally. This is one of many examples of equalities that hold judgmentally in cubicaltt but not in standard type theory where sym would be defined by induction on p. This is useful for formalizing mathematics, for example we get the judgmental equality C^op^op == C for a category C that cannot be obtained in standard type theory with the usual definition of category without using any tricks (see opposite.ctt for a formal proof of this).

We can also directly define cong (or ap or mapOnPath):

cong (A B : U) (f : A -> B) (a b : A) (p : Path A a b) :Path B (f a) (f b) = <i> f (p @ i)Once again this satisfies some equations judgmentally that we don’t get in standard type theory where this would have been defined by induction on p:

cong id p == pcong g (cong f p) == cong (g o f) pFinally the connections can be used to construct higher dimensional cubes from lower dimensional ones (e.g. squares from lines). If p : Path A a b then <i j> p @ i /\ j is the interior of the square:

p

a -----------------> b

^ ^

| |

| |

<j> a | | p

| |

| |

| |

a -----------------> a

<i> a

Here i corresponds to the left-to-right dimension and j corresponds to the down-to-up dimension. To compute the left and right sides just plug in i=0 and i=1 in the term inside the square:

<j> p @ 0 /\ j = <j> p @ 0 = <j> a (p is a path from a to b) <j> p @ 1 /\ j = <j> p @ j = p (using eta for Path types)

These give a short proof of contractibility of singletons (i.e. that the type (x : A) * Path A a x is contractible for all a : A), for details see the lecture notes or the paper. Because connections allow us to build higher dimensional cubes from lower dimensional ones they are extremely useful for reasoning about higher dimensional equality proofs.

Another cool thing with Path types is that they allow us to give a direct proof of function extensionality by just swapping the path and lambda abstractions:

funExt (A B : U) (f g : A -> B)(p : (x : A) -> Path B (f x) (g x)) :Path (A -> B) f g = <i> \(a : A) -> (p a) @ iTo see that this makes sense we can compute the end-points:

(<i> \(a : A) -> (p a) @ i) @ 0 = \(a : A) -> (p a) @ 0= \(a : A) -> f a= f

and similarly for the right end-point. Note that the last equality follows from eta for Π types.

We have now seen that Path types allows us to define the constants of HoTT (like cong or funExt), but when doing proofs with Path types one rarely uses these constants explicitly. Instead one can directly prove things with the Path type primitives, for example the proof of function extensionality for dependent functions is exactly the same as the one for non-dependent functions above.

We cannot yet prove the principle of path induction (or J) with what we have seen so far. In order to do this we need to be able to turn any path between types A and B into a function from A to B, in other words we need to be able to define transport (or cast or coe):

transport : Path U A B -> A -> BComposition, filling and transport

The computation rules for the transport operation in cubicaltt is introduced by recursion on the type one is transporting in. This is quite different from traditional type theory where the identity type is introduced as an inductive family with one constructor (refl). A difficulty with this approach is that in order to be able to define transport in a Path type we need to keep track of the end-points of the Path type we are transporting in. To solve this we introduce a more general operation called composition.

Composition can be used to define the composition of paths (hence the name). Given paths p : Path A a b and q : Path A b c the composite is obtained by computing the missing top line of this open square:

a c

^ ^

| |

| |

<j> a | | q

| |

| |

| |

a ----------------> b

p @ i

In the drawing I’m assuming that we have a direction i : in context that goes left-to-right and that the j goes down-to-up (but it’s not in context, rather it’s implicitly bound by the comp operation). As we are constructing a Path from a to c we can use the i and put p @ i as bottom. The code for this is as follows:

compPath (A : U) (a b c : A)(p : Path A a b) (q : Path A b c) : Path A a c =<i> comp (<_> A) (p @ i)[ (i = 0) -> <j> a, (i = 1) -> q ]One way to summarize what compositions gives us is the so called “box principle” that says that “any open box has a lid”. Here “box” means (n+1)-dimensional cube and the lid is an n-dimensional cube. The comp operation takes as second argument the bottom of the box and then a list of sides. Note that the collection of sides doesn’t have to be exhaustive (as opposed to the original cubical set model) and one way to think about the sides is as a collection of constraints that the resulting lid has to satisfy. The first argument of comp is a path between types, in the above example this path is constant but it doesn’t have to be. This is what allows us to define transport:

transport (A B : U) (p : Path U A B) (a : A) : B =comp p a []Combining this with the contractibility of singletons we can easily prove the elimination principle for Path types. However the computation rule does not hold judgmentally. This is often not too much of a problem in practice as the Path types satisfy various judgmental equalities that normal Id types don’t. Also, having the possibility to reason about higher equalities directly using path types and compositions is often very convenient and leads to very nice and new ways to construct proofs about higher equalities in a geometric way by directly reasoning about higher dimensional cubes.

The composition operations are related to the filling operations (as in Kan simplicial sets) in the sense that the filling operations takes an open box and computes a filler with the composition as one of its faces. One of the great things about cubical sets with connections is that we can reduce the filling of an open box to its composition. This is a difference compared to the original cubical set model and it provides a significant simplification as we only have to explain how to do compositions in open boxes and not also how to fill them.

Glue types and univalence

The final main ingredient of cubicaltt are the Glue types. These are what allows us to have a direct algorithm for composition in the universe and to prove the univalence axiom. These types add the possibility to glue types along equivalences (i.e. maps with contractible fibers) onto another type. In particular this allows us to directly define one of the key ingredients of the univalence axiom:

ua (A B : U) (e : equiv A B) : Path U A B =<i> Glue B [ (i = 0) -> (A,e), (i = 1) -> (B,idEquiv B) ]This corresponds to the missing line at the top of:

A B

| |

e | | idEquiv B

| |

V V

B --------> B

B

The sides of this square are equivalences while the bottom and top are lines in direction i (so this produces a path from A to B as desired).

We have formalized three proofs of the univalence axiom in cubicaltt:

- A very direct proof due to Simon Huber and me using higher dimensional glueing.

- The more conceptual proof from section 7.2 of the paper in which we show that the

ungluefunction is an equivalence (formalized by Fabian Ruch). - A proof from

uaand its computation rule (uabeta). Both of these constants are easy to define and are sufficient for the full univalence axiom as noted in a post by Dan Licata on the HoTT google group.

All of these proofs can be found in the file univalence.ctt and are explained in the paper (proofs 1 and 3 are in Appendix B).

Note that one often doesn’t need full univalence to do interesting things. So just like for Path types it’s often easier to just use the Glue primitives directly instead of invoking the full univalence axiom. For instance if we have proved that negation is an involution for bool we can directly get a non-trivial path from bool to bool using ua (which is just a Glue):

notEq : Path U bool bool = ua boob bool notEquivAnd we can use this non-trivial equality to transport true and compute the result:

> transport notEq trueEVAL: falseThis is all that the lectures cover, in the rest of this post I will discuss the two extensions of cubicaltt from the paper and their status in cubicaltt.

Identity types and higher inductive types

As pointed out above the computation rule for Path types doesn’t hold judgmentally. Luckily there is a neat trick due to Andrew Swan that allows us to define a new type that is equivalent to Path A a b for which the computation rule holds judgmentally. For details see section 9.1 of the paper. We call this type Id A a b as it corresponds to Martin-Löf’s identity type. We have implemented this in cubicaltt and proved the univalence axiom expressed exclusively using Id types, for details see idtypes.ctt.

For practical formalizations it is probably often more convenient to use the Path types directly as they have the nice primitives discussed above, but the fact that we can define Id types is very important from a theoretical point of view as it shows that cubicaltt with Id is really an extension of Martin-Löf type theory. Furthermore as we can prove univalence expressed using Id types we get that any proof in univalent type theory (MLTT extended with the univalence axiom) can be translated into cubicaltt.

The second extension to cubicaltt are HITs. We have a general syntax for adding these and some of them work fine on the master branch, see for example:

- circle.ctt – The circle as a HIT. Computation of winding numbers.

- helix.ctt – The loop space of the circle is equal to Z.

- susp.ctt – Suspension and n-spheres.

- torsor.ctt – Torsors. Proof that S1 is equal to BZ, the classifying

space of Z. (Due to Rafaël Bocquet) - torus.ctt – Proof that Torus = S1 * S1 in only 100 loc (due to Dan

Licata).

However there are various known issues with how the composition operations compute for recursive HITs (e.g. truncations) and HITs where the end-points contain function applications (e.g. pushouts). We have a very experimental branch that tries to resolve these issues called “hcomptrans”. This branch contains some new (currently undocumented) primitives that we are experimenting with and so far it seems like these are solving the various issues for the above two classes of more complicated HITs that don’t work on the master branch. So hopefully there will soon be a new cubical type theory with support for a large class of HITs.

That’s all I wanted to say about cubicaltt in this post. If someone plays around with the system and proves something cool don’t hesitate to file a pull request or file issues if you find some bugs.

Type theoretic replacement & the n-truncation

This post is to announce a new article that I recently uploaded to the arxiv:

https://arxiv.org/abs/1701.07538

The main result of that article is a type theoretic replacement construction in a univalent universe that is closed under pushouts. Recall that in set theory, the replacement axiom asserts that if is a class function, assigning to any set

a new set

, then the image of any set

, i.e. the set

is again a set. In homotopy type theory we consider instead a map

from a small type

into a locally small type

, and our main result is the construction of a small type

with the universal property of the image of

.

We say that a type is small if it is in , and for the purpose of this blog post smallness and locally smallness will always be with respect to

. Before we define local smallness, let us recall the following rephrasing of the `encode-decode method’, which we might also call the Licata-Shulman theorem:

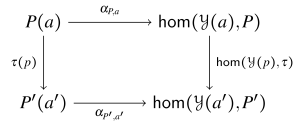

Theorem. Let be a type with

, and let

be a type with

. Then the following are equivalent.

- The total space

is contractible.

- The canonical map

defined by path induction, mapping

to

, is a fiberwise equivalence.

Note that this theorem follows from the fact that a fiberwise map is a fiberwise equivalence if and only if it induces an equivalence on total spaces. Since for path spaces the total space will be contractible, we observe that any fiberwise equivalence establishes contractibility of the total space, i.e. we might add the following equivalent statement to the theorem.

- There (merely) exists a family of equivalences

. In other words,

is in the connected component of the type family

.

There are at least two equivalent ways of saying that a (possibly large) type is locally small:

- For each

there is a type

and an equivalence

.

- For each

there is a type

; for each

there is a term

, and the canonical dependent function

defined by path induction by sending

to

is an equivalence.

Note that the data in the first structure is clearly a (large) mere proposition, because there can be at most one such a type family , while the equivalences in the second structure are canonical with respect to the choice of reflexivity

. To see that these are indeed equivalent, note that the family of equivalences in the first structure is a fiberwise equivalence, hence it induces an equivalence on total spaces. Therefore it follows that the total space

is contractible. Thus we see by Licata’s theorem that the canoncial fiberwise map is a fiberwise equivalence. Furthermore, it is not hard to see that the family of equivalences

is equal to the canonical family of equivalences. There is slightly more to show, but let us keep up the pace and go on.

Examples of locally small types include any small type, any mere proposition regardless of their size, the universe is locally small by the univalence axiom, and if is small and

is locally small then the type

is locally small. Observe also that the univalence axiom follows if we assume the `uncanonical univalence axiom’, namely that there merely exists a family of equivalences

. Thus we see that the slogan ‘identity of the universe is equivalent to equivalence’ actually implies univalence.

Main Theorem. Let be a univalent universe that is closed under pushouts. Suppose that

, that

is a locally small type, and let

. Then we can construct

- a small type

,

- a factorization

- such that

is an embedding that satisfies the universal property of the image inclusion, namely that for any embedding

, of which the domain is possibly large, if

factors through

, then so does

.

Recall that factors through an embedding

in at most one way. Writing

for the mere proposition that

factors through

, we see that

satisfies the universal property of the image inclusion precisely when the canonical map

is an equivalence.

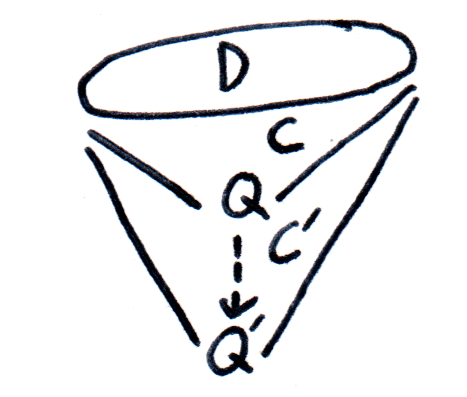

Most of the paper is concerned with the construction with which we prove this theorem: the join construction. By repeatedly joining a map with itself, one eventually arrives at an embedding. The join of two maps and

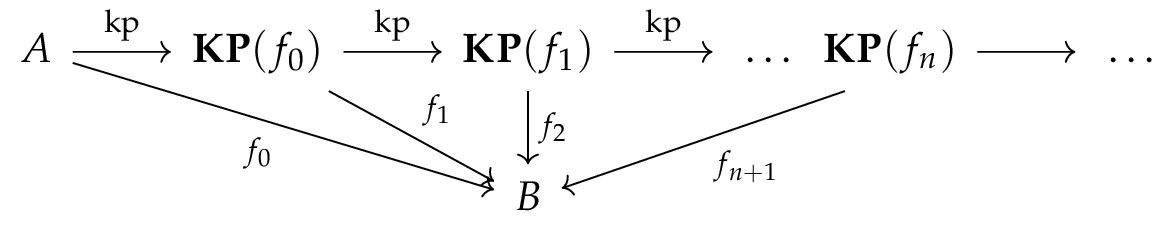

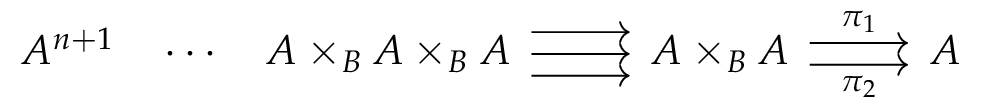

is defined by first pulling back, and then taking the pushout, as indicated in the following diagram

In the case , the type

is equivalent to the usual join of types

. Just like the join of types, the join of maps with a common codomain is associative, commutative, and it has a unit: the unique map from the empty type into

. The join of two embeddings is again an embedding. We show that the last statement can be strengthened: the maps

that are idempotent in a canonical way (i.e. the canonical morphism

in the slice category over

is an equivalence) are precisely the embeddings.

Below, I will indicate how we can use the above theorem to construct the n-truncations for any on any univalent universe that is closed under pushouts. Other applications include the construction of set-quotients and of Rezk-completion, since these are both constructed as the image of the Yoneda-embedding, and it also follows that the univalent completion of any dependent type

can be constructed as a type in

, namely

, without needing to resort to more exotic higher inductive types. In particular, any connected component of the universe is equivalent to a small type.

Theorem. Let be a univalent universe that is closed under pushouts. Then we can define for any

- an n-truncation operation

,

- a map

- such that for any

, the type

is n-truncated and satisfies the (dependent) universal property of n-truncation, namely that for every type family

of possibly large types such that each

is n-truncated, the canonical map

given by precomposition byis an equivalence.

Construction. The proof is by induction on . The case

is trivial (take

). For the induction hypothesis we assume an n-truncation operation with structure described in the statement of the theorem.

First, we define by

. As we have seen, the universe is locally small, and therefore the type

is locally small. Therefore we can define

.

For the proof that is indeed

-truncated, and satisfies the universal property of the n-truncation we refer to the article.

Parametricity, automorphisms of the universe, and excluded middle