An in-Depth look at our Docker and ECS stack for Golang

source link: https://medium.com/smsjunk/an-in-depth-look-at-our-docker-and-ecs-stack-for-golang-b89dfe7cff5c



We use the Go programming language for many of the high-throughput pieces of our infrastructure at SMS Junk Filter (https://smsjunk.com). When we started this project, we knew we would be receiving a lot of traffic from lots of mobile phones as we perform our cloud SMS spam filtering. Therefore, we decided from the beginning to once again leverage Amazon Web Services (AWS) and its infrastructure to achieve auto-scaling and ease of deployment.

In the past, we have used AWS Elastic Beanstalk (see our previous article: Handling 1 Million Requests per Minute with Golang), but we encountered a few small issues with that deployment scenario. Don’t get me wrong: I still think Elastic Beanstalk is a very good and viable option for certain projects, but as Docker support in AWS has matured, the Elastic Container Service (ECS) has been pulling us in that direction. We have deployed many projects with ECS and Docker in the past year, and I think we have finally reached a good, simple stack for Go projects that allows us to quickly develop and deploy these systems.

We use a couple of interesting Golang packages in our standard stack:

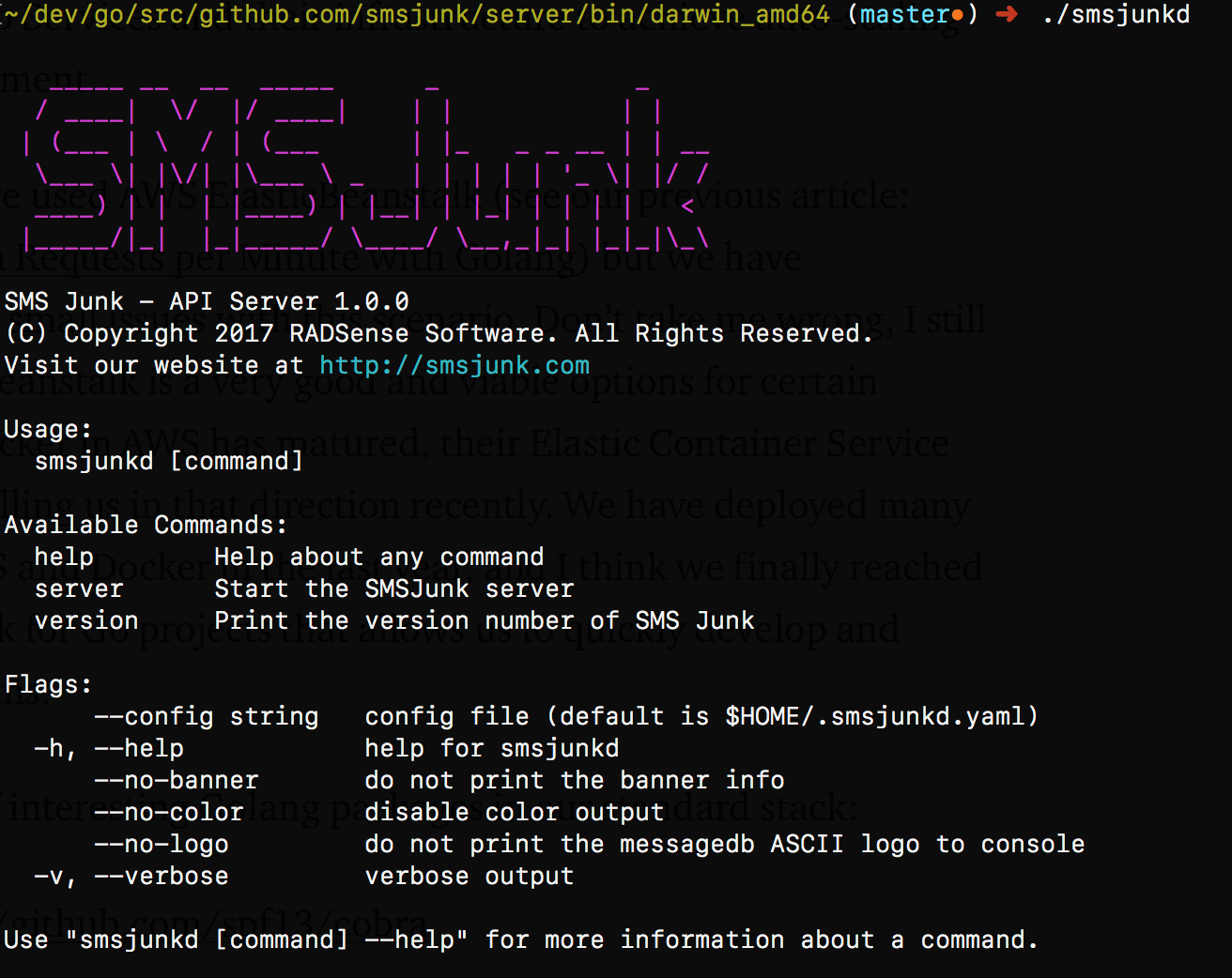

Our Binary Command Line

The Cobra and Viper packages handle the CLI commands our executable exposes. We usually have a few commands that print information about the binary itself, such as when it was built, plus debugging parameters that we can turn on in our ECS cluster when we need to debug something.

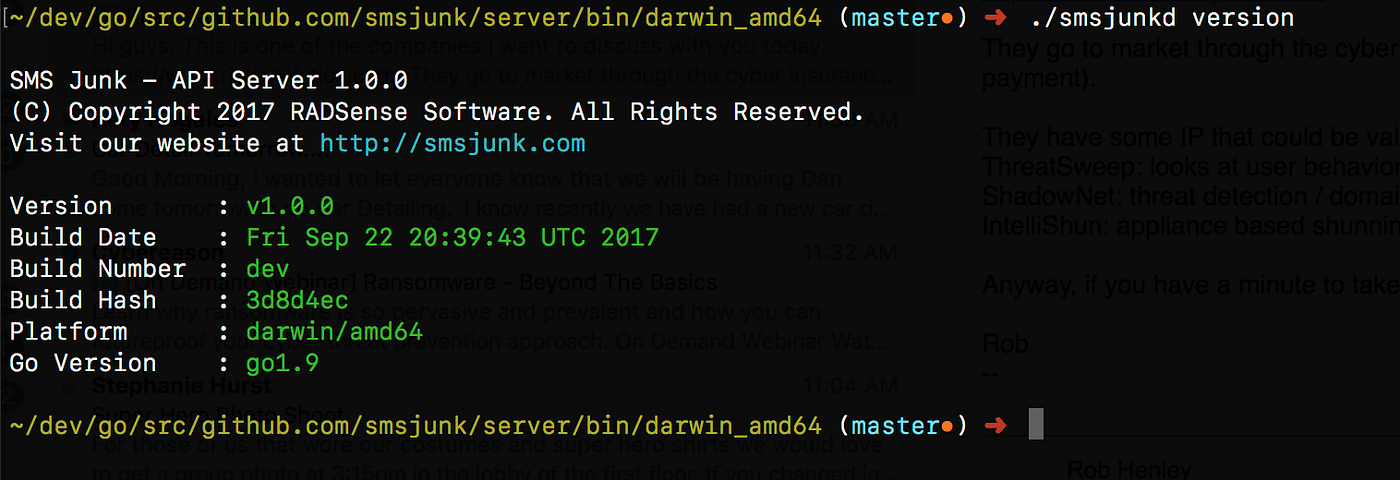

At any point we can get information about a binary that is running in our cluster by invoking the command “version” on the binary.

We have been using the Chi package as our slim web framework. Over the years we have tried many others, like Gorilla, Echo, Gin, Macaron, Negroni and Martini; the list goes on and on. When the context package was introduced into the standard library in Go 1.7, we found that Chi is closer to the standard Go way of doing things: it keeps the standard http.HandlerFunc signature while still letting us do the things we had been doing with the Gin and Echo packages.

```go
// cfg is assumed to be a *viper.Viper holding our configuration.
router := chi.NewRouter()
router.Use(Heartbeat("/some-health-endpoint"))
router.Use(middleware.RequestID)
router.Use(middleware.RealIP)
router.Use(middleware.Logger)
router.Use(middleware.NoCache)

// get the configuration for Throttling limits
if cfg.GetBool("throttleConnections") {
	throttleLimit := cfg.GetInt("throttleLimit")
	throttleBacklogLimit := cfg.GetInt("throttleBacklogLimit")
	throttleBacklogTimeout := cfg.GetDuration("throttleTimeout")
	router.Use(middleware.ThrottleBacklog(throttleLimit,
		throttleBacklogLimit, throttleBacklogTimeout))
}

router.Use(middleware.Timeout(60 * time.Second))
router.Use(middleware.Recoverer)

fmt.Println("Listening on port 5000")
err := http.ListenAndServe(":5000", router)
if err != nil {
	fmt.Printf("ERROR: %v\n", err)
}
```

One important piece of this router stack is the Heartbeat middleware, which implements health checks. Our infrastructure relies heavily on it: our Application Load Balancers use it to detect whether an application is healthy and should keep receiving traffic.

Below is the Heartbeat function. If the middleware detects that the request is for the health endpoint, it performs any additional checks, quickly returns “ok” and finishes processing the request without calling the remaining middlewares. We do this so that we don’t pollute the logs with millions of health-check entries, since the Logger middleware runs after the Heartbeat middleware.

```go
func Heartbeat(endpoint string) func(http.Handler) http.Handler {
	f := func(h http.Handler) http.Handler {
		fn := func(w http.ResponseWriter, r *http.Request) {
			if (r.Method == "GET" || r.Method == "HEAD") &&
				strings.EqualFold(r.URL.Path, endpoint) {
				// other checks are performed here
				w.Header().Set("Content-Type", "text/plain")
				w.WriteHeader(http.StatusOK)
				w.Write([]byte("ok"))
				return
			}
			h.ServeHTTP(w, r)
		}
		return http.HandlerFunc(fn)
	}
	return f
}
```

Building via Makefile

We have created this standard Makefile that we use to compile the majority of our Golang projects. Most of the time we simply use this Makefile with very small modifications depending on the type of project we have. Let’s take a look at some important pieces.

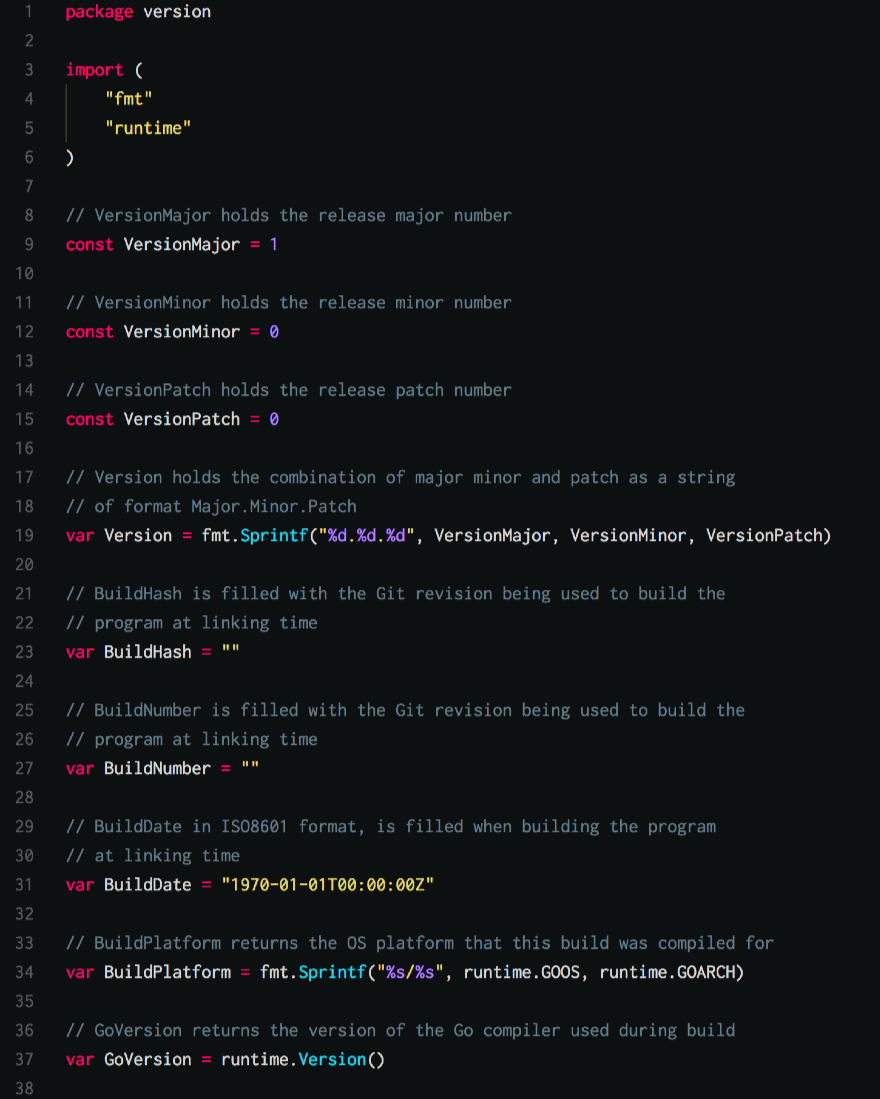

First, we set up some of our build flags. We rarely change these settings, as they work with all of our compilation stacks. Essentially, we extract the current commit hash from our Git repo, the current date, the OS we are building on, and the OS we are targeting if we are cross-compiling. We do all of our programming under macOS, but we have used this Makefile to cross-compile binaries for Linux and Windows.

```makefile
# Build Flags
BUILD_DATE = $(shell date -u)
BUILD_HASH = $(shell git rev-parse --short HEAD)
BUILD_NUMBER ?= $(BUILD_NUMBER:)

# If we don't set the build number it defaults to dev
ifeq ($(BUILD_NUMBER),)
BUILD_NUMBER := dev
endif

NOW = $(shell date -u '+%Y%m%d%I%M%S')

DOCKER := docker
GO := go
GO_ENV := $(shell $(GO) env GOOS GOARCH)
GOOS ?= $(word 1,$(GO_ENV))
GOARCH ?= $(word 2,$(GO_ENV))
GOFLAGS ?= $(GOFLAGS:)
ROOT_DIR := $(realpath .)

# GOOS/GOARCH of the build host, used to determine whether
# we're cross-compiling or not
BUILDER_GOOS_GOARCH="$(GOOS)_$(GOARCH)"
```

Below we set some other compiler and linker flags that our project needs. For the SMS Junk Filter APIs we cross-compile to Linux, and we didn’t want any dynamic library dependencies, so we compile and link statically, as you can see from how we define EXTLDFLAGS. We do this because of how we create the Docker image that runs this service; more on that later.

```makefile
PKGS = $(shell $(GO) list . ./cmd/... ./pkg/... | grep -v /vendor/)
TAGS ?= "netgo"
BUILD_ENV =
ENVFLAGS = CGO_ENABLED=1 $(BUILD_ENV)

ifneq ($(GOOS), darwin)
EXTLDFLAGS = -extldflags "-lm -lstdc++ -static"
else
EXTLDFLAGS =
endif

GO_LINKER_FLAGS ?= --ldflags '$(EXTLDFLAGS) -s -w \
    -X "github.com/smsjunk/pkg/version.BuildNumber=$(BUILD_NUMBER)" \
    -X "github.com/smsjunk/pkg/version.BuildDate=$(BUILD_DATE)" \
    -X "github.com/smsjunk/pkg/version.BuildHash=$(BUILD_HASH)"'

BIN_NAME := smsjunkd
```

You can see above that we are also stamping our binaries by setting the build variables in our version package from the build flags we defined earlier in the Makefile. This is the standard version package that we are using in all of our Golang projects.

ENVFLAGS = CGO_ENABLED=1 $(BUILD_ENV)

Also note that we have defined CGO_ENABLED=1. This is because we use cgo to compile and link against a C/C++ library, which is yet another reason for us to compile our project statically.

To build, we simply issue the command:

> make build

This essentially runs the following command, using all the build flags defined above, and writes the binary into the bin folder, under a subfolder named for the target OS and architecture.

go build -a -installsuffix cgo -tags $(TAGS) $(GOFLAGS) $(GO_LINKER_FLAGS) -o bin/$(GOOS)_$(GOARCH)/$(BIN_NAME) .

You can see the full Makefile below in this Gist: https://gist.github.com/mcastilho/7562ec8c8ae297d7e6bd269d61756863

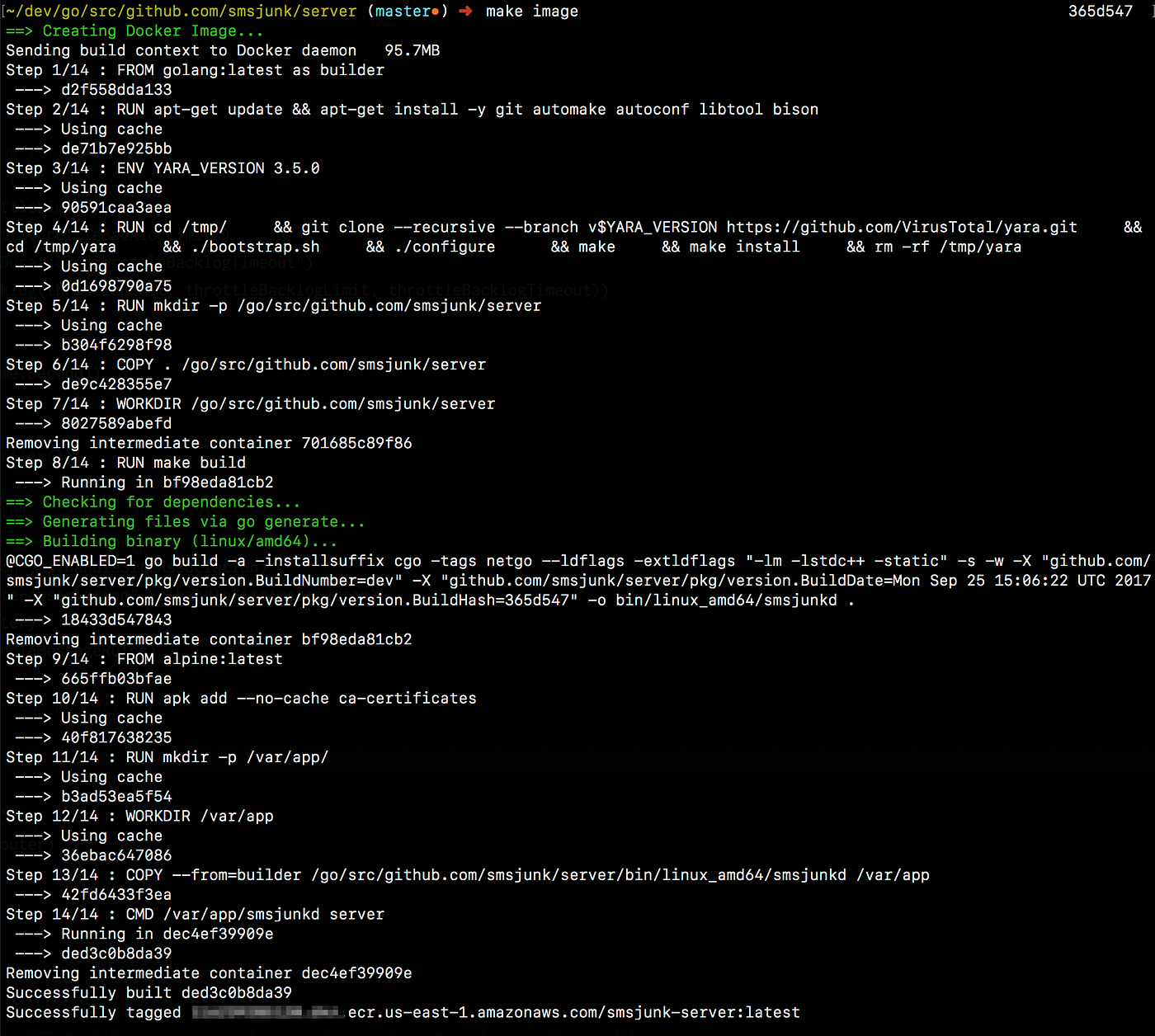

Multi-Stage Builds with Docker

Starting with Docker 17.05, we now have the ability to perform Multi-Stage Docker builds from a single Dockerfile.

The Builder Image

We use the standard Golang Docker image to perform our builds. In this particular project, we also install a 3rd-party library called Yara, which we use extensively in our text pattern matching engine. This library is one of the reasons we perform a fully static compilation of our Go server, so that it can run without any dynamic library dependencies.

```dockerfile
FROM golang:latest as builder

RUN apt-get update && \
    apt-get install -y git automake autoconf libtool bison

ENV YARA_VERSION 3.5.0

RUN cd /tmp/ \
    && git clone --recursive --branch v$YARA_VERSION \
       https://github.com/VirusTotal/yara.git \
    && cd /tmp/yara \
    && ./bootstrap.sh \
    && ./configure \
    && make \
    && make install \
    && rm -rf /tmp/yara

RUN mkdir -p /go/src/github.com/smsjunk/server
COPY . /go/src/github.com/smsjunk/server
WORKDIR /go/src/github.com/smsjunk/server

# RUN make setup
RUN make build
```

The Final Container Image

In the second stage of our Dockerfile, after compilation is complete, we use a popular Docker image called Alpine, which is only a few megabytes in size and lets us deploy to ECS quickly with minimal upload sizes. If you are using cgo (as we are here), make sure to compile your Go binaries statically to run under Alpine: Alpine uses musl libc rather than glibc, so a binary dynamically linked in the Debian-based golang image may fail to find its C and C++ runtime libraries.

```dockerfile
FROM alpine:latest

RUN apk add --no-cache ca-certificates

# Create app dir
RUN mkdir -p /var/app/
WORKDIR /var/app

# Copy Binary
COPY --from=builder /go/src/github.com/smsjunk/server/bin/linux_amd64/smsjunkd /var/app

# Run Binary
CMD ["/var/app/smsjunkd", "server"]
```

From our macOS terminal, we can now cross-compile our source code in a Linux container and create a slim Docker image to run our application just by typing the command below:

> make image

The output describes what is going on during the Docker build. Once the build is complete, we have a Docker image in our local Docker environment that can be used to run our code in Amazon Elastic Container Service.

Pushing Images to Amazon Elastic Container Registry (ECR)

To deploy our new container image to AWS, we need to push it to the Amazon Elastic Container Registry (ECR) repository that we previously created in the AWS console.

Our local Docker environment needs to log in to the remote ECR registry before we can push images from the command line, so we run the following command:

> aws ecr get-login --no-include-email

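This prints a docker login command containing a temporary authentication token; running that command logs our local Docker environment in to ECR and allows us to use docker push. (In AWS CLI version 2, get-login was removed; the equivalent is piping aws ecr get-login-password into docker login --password-stdin.) All we have to do now is invoke the command below: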

> make release

Under the hood, this Makefile target executes the following, simply pushing the image tagged with the $(REGISTRY_REPO) URL defined in the Makefile:

docker push $(REGISTRY_REPO)

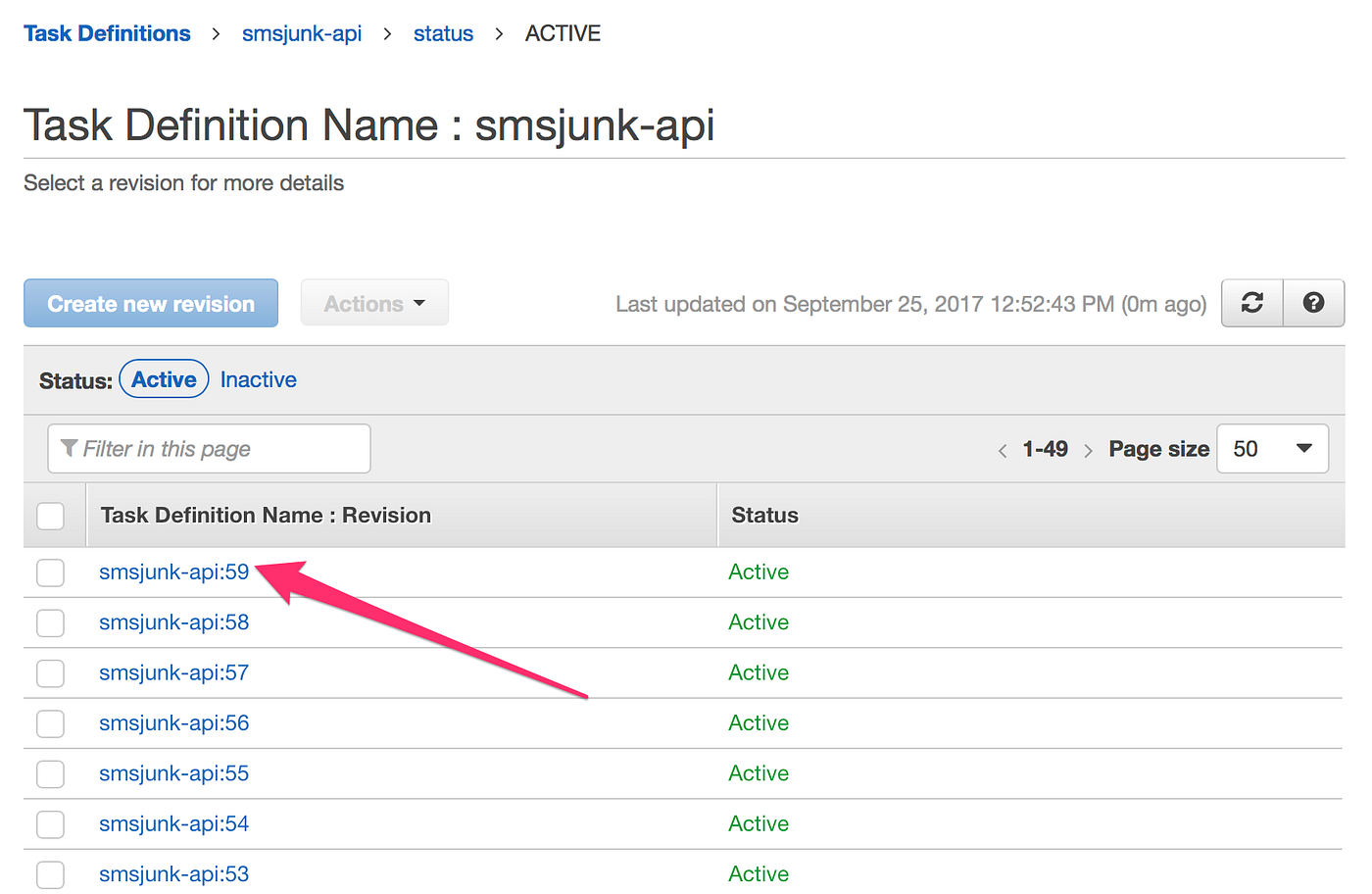

Once we have pushed the new Docker image to ECR, we can create a new revision of our Task Definition that uses the new container image. In the screenshot below, we have created revision #59.

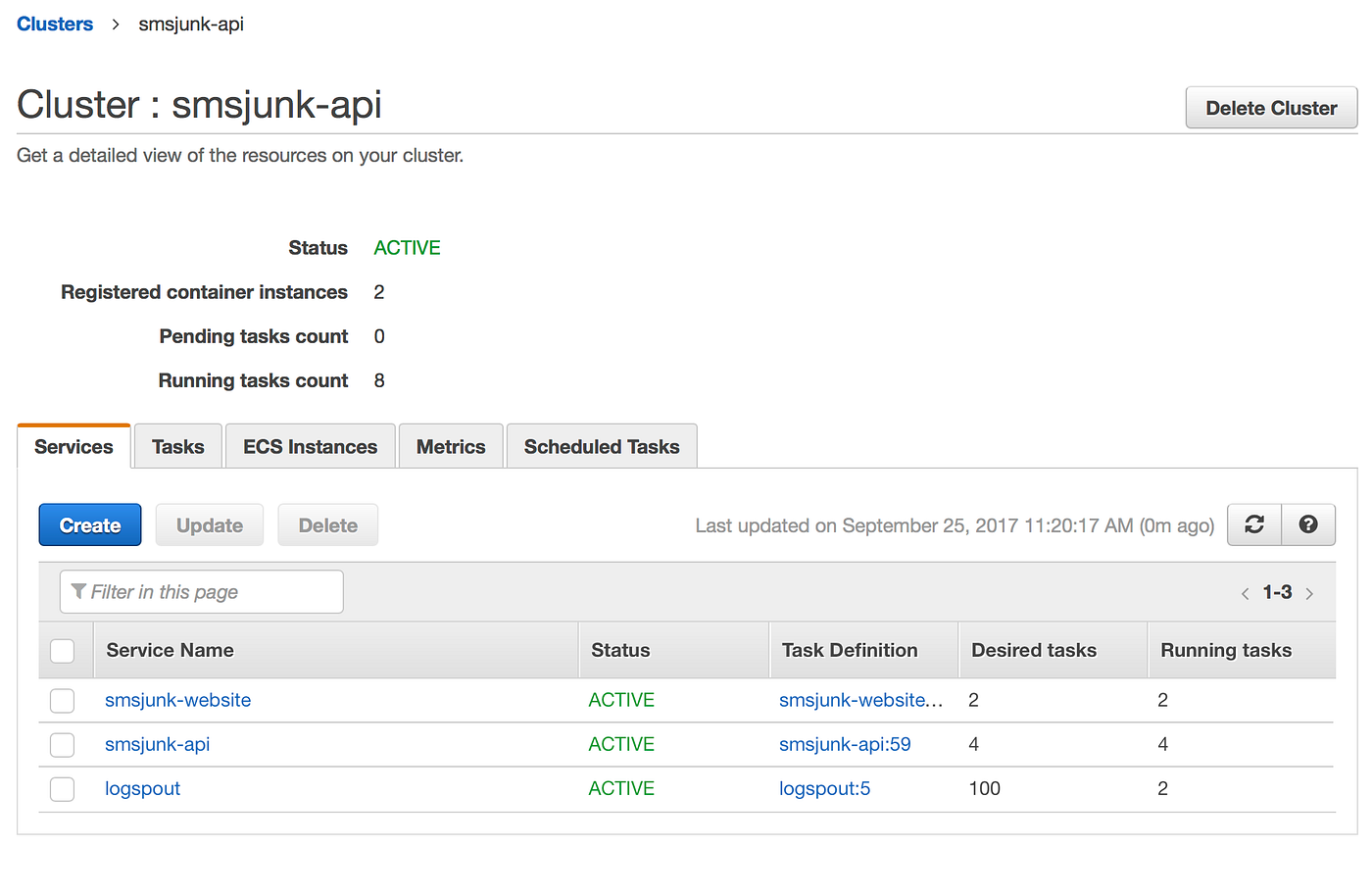

Then we can go to the ECS Cluster management page and update the ECS Service that runs this Task Definition.

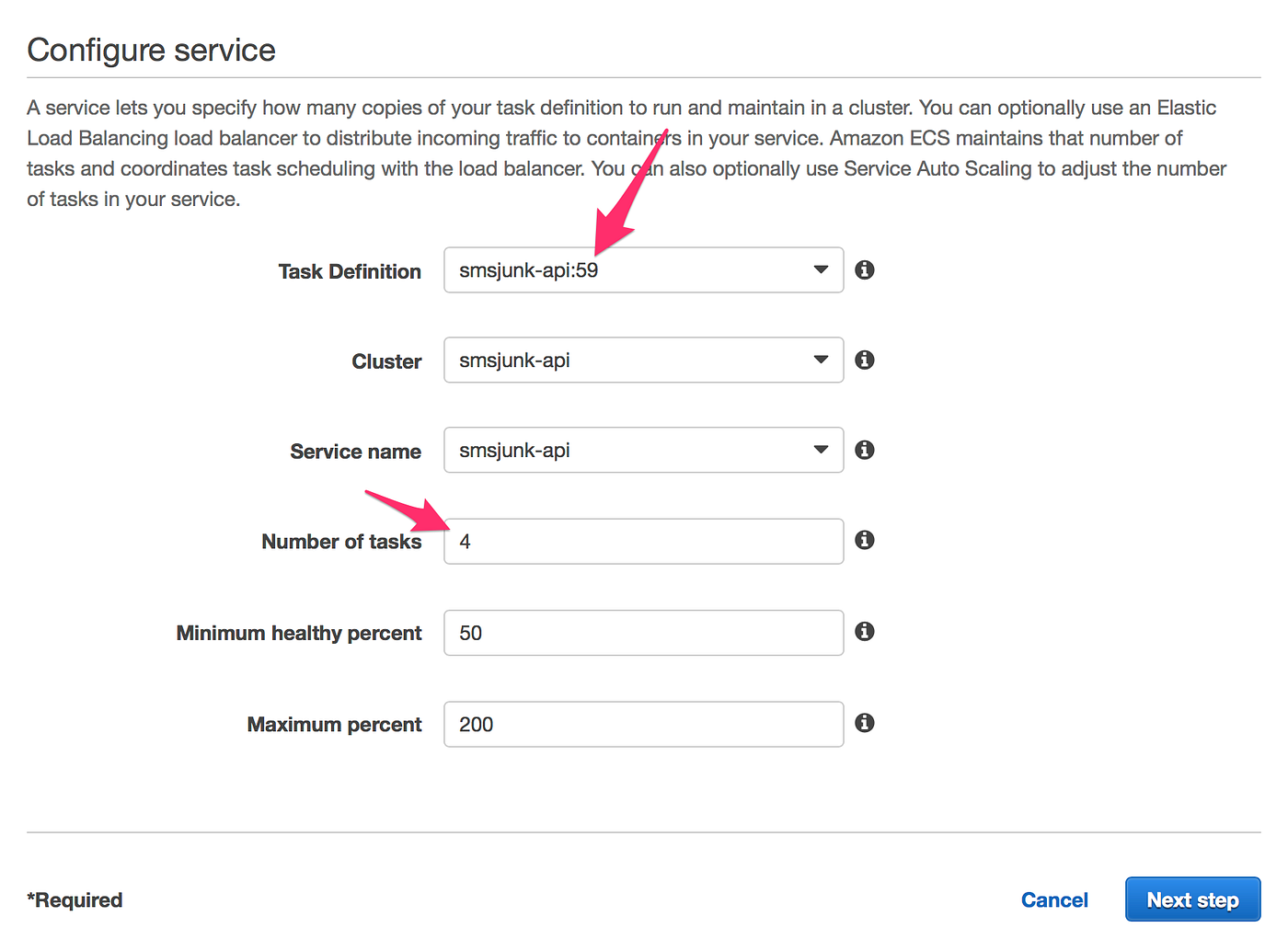

When we initiate the service update, AWS lets us choose which revision of the Task Definition we want to run in the cluster and how many instances of the new container should be running.

Automating Deployments via Terraform

The steps above that we perform in the Amazon Web Services console are not hard; they only require a few clicks and are usually the same simple steps each time. They can easily be automated using AWS CLI commands or simple custom scripts. We have a few projects that we don’t update frequently, so we update those manually via the AWS console, but the more important ones we want to integrate into our Continuous Integration builds.

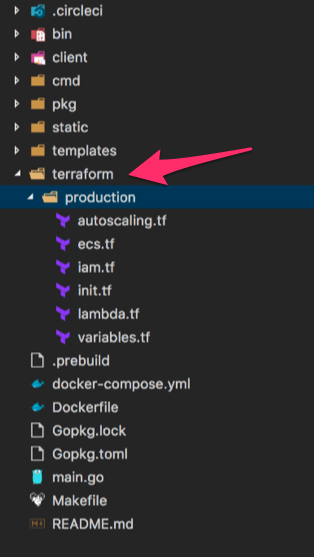

For the last several months we have been using Terraform to automate the deployments of these Golang stacks into our ECS clusters, completely removing the need to log in to the AWS console to perform updates on Task definitions and ECS Services. Everything can be configured via Terraform.

You can find out more about Terraform by HashiCorp here: https://www.terraform.io

Terraform deserves a more complete article of its own, but to give you an idea of how we use it: we usually create a folder in the root of each project containing the entire AWS infrastructure that project requires, from the VPC, subnets, IAM roles and permissions, auto-scaling groups and S3 buckets to our ECS Task Definitions and Services.

From the command line, we can now simply execute the following commands to perform a complete build inside Docker, push the resulting image to ECR, invoke Terraform to analyze the current state of the AWS infrastructure and plan all the changes that need to happen in AWS to deploy and run the new image, and then apply them:

> make image

> make release

> terraform plan

> terraform apply

This is called Infrastructure as Code, and any serious development team using AWS should take a look at Terraform and at how well it handles all the steps required to manage infrastructure and the deployment process.

Capturing Logs from ECS Containers

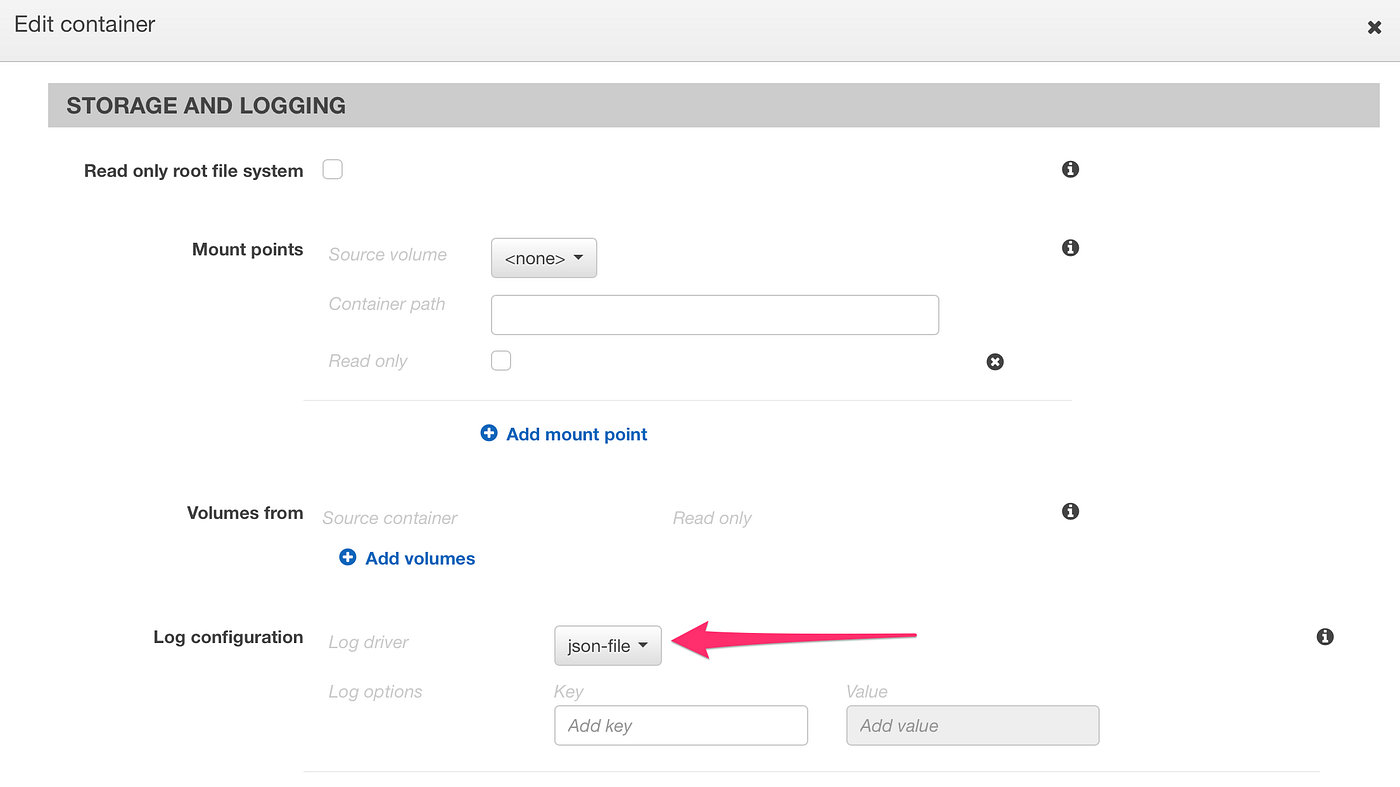

Once our Go API servers are running in our ECS cluster, we need a way to capture the logs the containers generate. To do this, we configure the server’s Task Definition to use the json-file log driver, as you can see in the picture below.

We then use Logspout, a very nice open-source tool also written in Go, which captures all the logs generated by the containers and sends them to a log aggregation endpoint. We use the following public Docker image to run Logspout in our Docker environment:

gliderlabs/logspout:latest

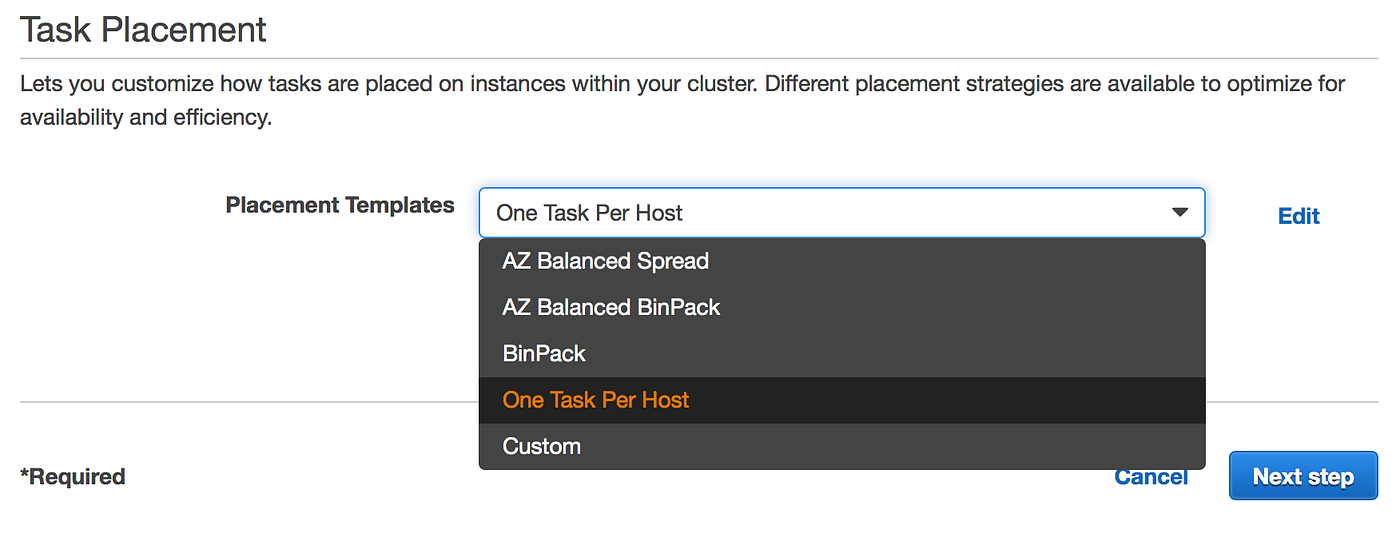

We run a single Logspout container on every ECS cluster node. When you define your ECS Service, you can instruct ECS to run exactly one instance per host.

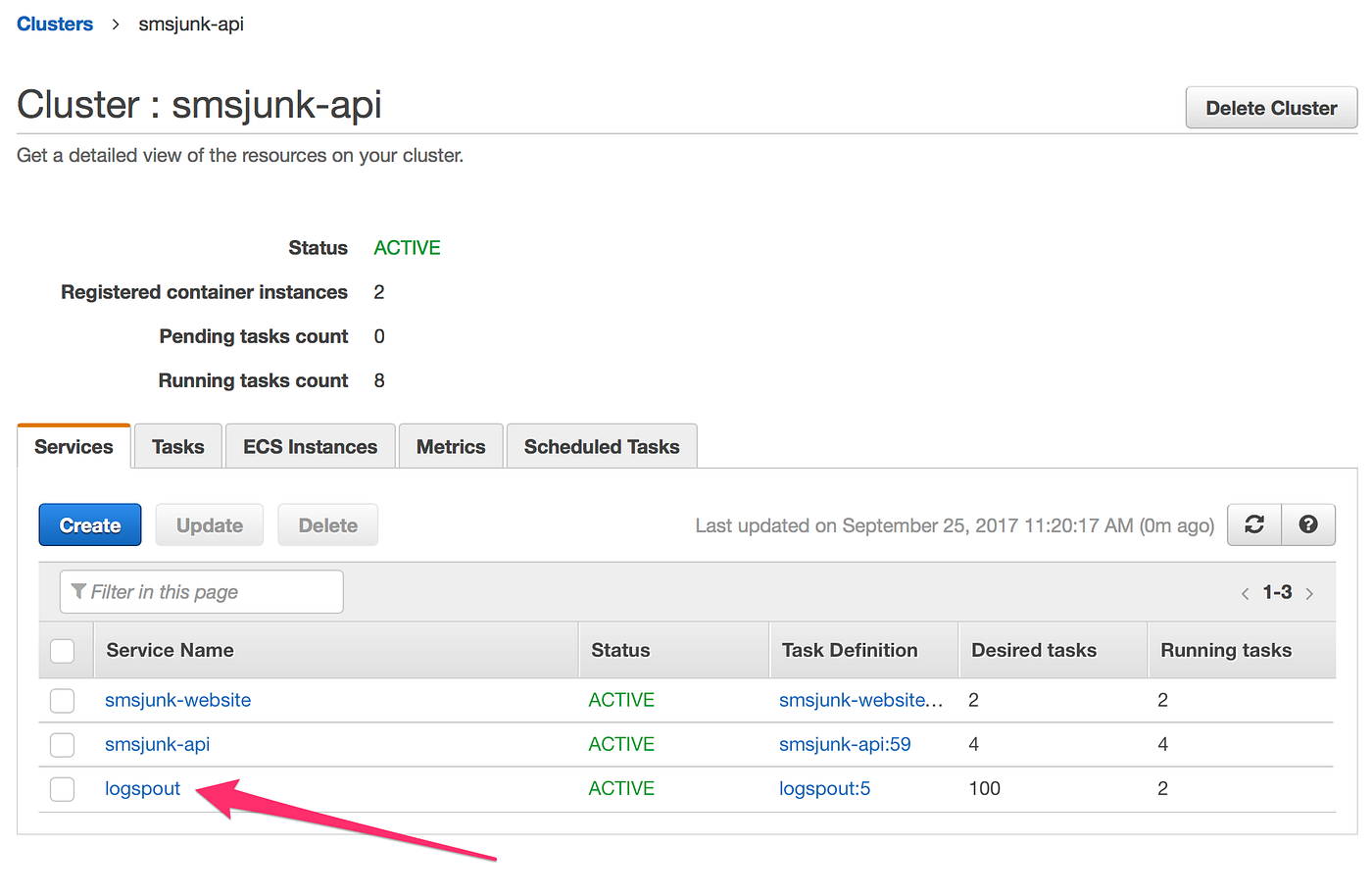

This is a view of our SMS Junk API ECS cluster, which currently runs just the API server and the website. All the logs generated by both the web server and the API server are captured by Logspout.

Logspout captures the logs through Docker’s log driver mechanism and forwards them via syslog. In our case we use Papertrail, a 3rd-party log management service with some really nice search and alerting features. We have used it successfully in many of our projects over the years. We have also used other log vendors such as LogEntries and Logz.io, but I find Papertrail the easiest to use and configure.

Conclusion

Lots of organizations don’t have the luxury of a big DevOps team. Streamlining our stack and deployment process has allowed us to focus on our code and on releasing new features, rather than spending too much time figuring out how to deploy each new release of the software.

There are a few things we want to explore further in AWS, especially how we store secrets and make them available to our Go apps. AWS Systems Manager has a feature called Parameter Store, where we can keep secrets safely and expose them to running apps through the AWS SDK. Currently, we rely extensively on environment variables to pass configuration to our running containers, but a mix of environment variables and Parameter Store settings would be ideal for us.

Our SMS Junk Filter app is available in the App Store here:

https://itunes.apple.com/us/app/sms-junk-filter/id1248160670?mt=8
