Java Microbenchmark Harness (JMH)

source link: https://www.geeksforgeeks.org/java-microbenchmark-harness-jmh/


JMH is a Java harness for building, running, and analyzing nano, micro, milli, and macro benchmarks written in Java and other languages that target the JVM. A benchmark is a measurement or reference point used to compare or evaluate something; in programming, benchmarking means comparing the performance of code segments. Micro-benchmarking narrows this further: it measures the performance of small units of an application's code, such as a single method or loop.

Example of Java Microbenchmark Harness (JMH)

Java

import org.openjdk.jmh.annotations.*;

import java.util.Arrays;
import java.util.concurrent.TimeUnit;

/**
 * A simple JMH benchmark to compare the performance of summing an array using a loop vs. using streams.
 */
@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.MILLISECONDS)
@State(Scope.Thread)
public class SimpleBenchmark {

    private static final int ARRAY_SIZE = 1_000_000;

    private int[] data;

    /**
     * Setup method to initialize data for the benchmark (runs once per trial).
     */
    @Setup(Level.Trial)
    public void setup() {
        data = new int[ARRAY_SIZE];
        for (int i = 0; i < ARRAY_SIZE; i++) {
            data[i] = i;
        }
    }

    /**
     * Benchmark method to sum the array using a loop.
     *
     * @return The sum of the array elements
     */
    @Benchmark
    public long sumUsingLoop() {
        long sum = 0;
        for (int value : data) {
            sum += value;
        }
        return sum;
    }

    /**
     * Benchmark method to sum the array using streams.
     * asLongStream() is needed because IntStream.sum() returns an int,
     * which would overflow for this array (the true sum is 499,999,500,000).
     *
     * @return The sum of the array elements
     */
    @Benchmark
    public long sumUsingStreams() {
        return Arrays.stream(data).asLongStream().sum();
    }

    /**
     * Main method to run the benchmark.
     *
     * @param args Command line arguments
     * @throws Exception If an error occurs during benchmark execution
     */
    public static void main(String[] args) throws Exception {
        org.openjdk.jmh.Main.main(args);
    }
}

Key features and Components of Java Microbenchmark Harness

- Forking: JMH can run each benchmark in one or more freshly forked JVMs (configured with @Fork). This isolates runs from each other's JIT compilation and profiling state, which reduces interference between them and produces more reliable results.

- Profiling Support: JMH can attach profilers to a run (selected with the -prof option), such as its built-in GC and stack profilers or Java Flight Recorder (JFR), for deeper analysis of the benchmarked code.


- @Setup Annotation: Marks a method of a @State class that prepares the benchmark state. Its Level argument controls how often it runs: once per trial (the default), once per iteration, or before every benchmark invocation.

- @TearDown Annotation: Marks a method that frees resources after the benchmark, with the same Level options as @Setup.

- Benchmark Parameters: The @Param annotation on a state field parametrizes a benchmark, so JMH runs the same benchmark once for each listed input value.
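The features above can be combined in a single class. The following is a minimal sketch, assuming JMH 1.37 as declared in Step 1; the class ListSumBenchmark, its size values, and the fork count are hypothetical choices made for illustration.

```java
import org.openjdk.jmh.annotations.*;

import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.TimeUnit;

// Hypothetical benchmark illustrating @Fork, @Param, @Setup and @TearDown together.
@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.MICROSECONDS)
@Fork(2)             // run the benchmark in two freshly forked JVMs
@State(Scope.Thread) // each benchmark thread gets its own instance
public class ListSumBenchmark {

    // JMH runs the benchmark once per listed value (converted from the string).
    @Param({"100", "10000"})
    public int size;

    private List<Integer> numbers;

    // Level.Trial: executed once before all iterations for the current @Param value.
    @Setup(Level.Trial)
    public void fill() {
        numbers = new ArrayList<>(size);
        for (int i = 0; i < size; i++) {
            numbers.add(i);
        }
    }

    // Level.Trial: executed once after all iterations; releases the list.
    @TearDown(Level.Trial)
    public void clear() {
        numbers = null;
    }

    @Benchmark
    public long sum() {
        long total = 0;
        for (int n : numbers) {
            total += n;
        }
        // Returning the result keeps the JIT from discarding the loop.
        return total;
    }
}
```

With trial-level setup the list is built outside the measured region, so only the summing loop is timed for each size.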

Implementation of Java Microbenchmark Harness (JMH)

Step 1: Add Maven Dependencies

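To get started, we only need to declare two dependencies, and Java 8 is sufficient: jmh-core for the runtime, and jmh-generator-annprocess, the annotation processor that generates the benchmark harness code at compile time: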

<dependency>
    <groupId>org.openjdk.jmh</groupId>
    <artifactId>jmh-core</artifactId>
    <version>1.37</version>
</dependency>
<dependency>
    <groupId>org.openjdk.jmh</groupId>
    <artifactId>jmh-generator-annprocess</artifactId>
    <version>1.37</version>
</dependency>

Step 2: Execute all benchmarks

To run all benchmarks, we call org.openjdk.jmh.Main.main(), JMH's command-line entry point, which runs every benchmark it finds in the compiled project. A minimal benchmark class looks like this:

Java

import org.openjdk.jmh.annotations.*;

// A stateful benchmark class; Scope.Benchmark shares one instance across all benchmark threads.
@State(Scope.Benchmark)
public class InitializationBenchmark {

    // @Benchmark marks init() as a benchmark method that JMH will execute.
    // @BenchmarkMode(Mode.AverageTime) reports the average time taken per operation.
    @Benchmark
    @BenchmarkMode(Mode.AverageTime)
    public void init() {
        // Do nothing: with an empty body, this measures the baseline overhead of the
        // benchmarking infrastructure. Replace this comment with the initialization
        // code you want to benchmark.
    }

    // Runs all benchmarks through JMH's command-line entry point.
    public static void main(String[] args) throws Exception {
        org.openjdk.jmh.Main.main(args);
    }
}

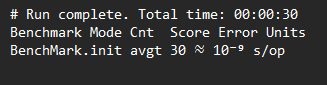

Output:

- init() method: the benchmark method. Because its body is empty, the average time it reports reflects the overhead of the benchmarking infrastructure itself; replacing the body with real initialization code measures that code instead.

- @Benchmark annotation: designates init() as a benchmark method that JMH will execute.
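Besides org.openjdk.jmh.Main.main(), JMH can be launched programmatically through its Runner API, which is handy for running a single class from an IDE. A minimal sketch follows; the class name RunnerSketch and the fork and iteration counts are hypothetical, and include() takes a regular expression that is matched against benchmark names.

```java
import org.openjdk.jmh.annotations.*;
import org.openjdk.jmh.runner.Runner;
import org.openjdk.jmh.runner.RunnerException;
import org.openjdk.jmh.runner.options.Options;
import org.openjdk.jmh.runner.options.OptionsBuilder;

public class RunnerSketch {

    // Empty baseline benchmark, similar to init() above.
    @Benchmark
    @BenchmarkMode(Mode.AverageTime)
    public void baseline() {
        // Do nothing.
    }

    // Builds run options: only benchmarks whose names match "RunnerSketch",
    // one fork, 3 warm-up iterations and 5 measurement iterations.
    public static Options buildOptions() {
        return new OptionsBuilder()
                .include(RunnerSketch.class.getSimpleName())
                .forks(1)
                .warmupIterations(3)
                .measurementIterations(5)
                .build();
    }

    public static void main(String[] args) throws RunnerException {
        new Runner(buildOptions()).run();
    }
}
```

Running main() still requires the project to be compiled with jmh-generator-annprocess, because the Runner relies on the benchmark list that the annotation processor generates.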

Step 3: Use a State object

Suppose we hash a password repeatedly to add defense against dictionary attacks on a password database. We can use a State object to investigate the performance impact:

Java

import org.openjdk.jmh.annotations.*;

// Hasher and Hashing come from Guava, which must be added as a separate dependency.
import com.google.common.hash.Hasher;
import com.google.common.hash.Hashing;

// This class represents the state for benchmarking purposes.
@State(Scope.Benchmark)
public class ExecutionPlan {

    // JMH runs the benchmark once for each value: 10, 20, 30, 50 and 100.
    @Param({ "10", "20", "30", "50", "100" })
    public int iterations;

    // Hasher instance for the Murmur3 (128-bit) hash function.
    public Hasher murmur3;

    // A sample password string for hashing.
    public String password = "5vnr5yui3yui2i";

    // Executed before every single benchmark invocation (each method call).
    @Setup(Level.Invocation)
    public void setUp() {
        // A Hasher must not be reused after hash(), so create a fresh one per invocation.
        murmur3 = Hashing.murmur3_128().newHasher();
    }
}

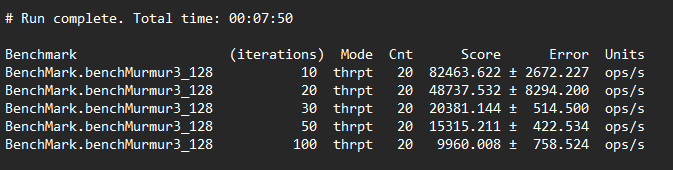

Output:

- The @State(Scope.Benchmark) annotation on the ExecutionPlan class indicates that it is the benchmarking state.

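- The @Param annotation makes JMH run the benchmark once for each listed value of the iterations field (10, 20, 30, 50, and 100).

- The @Setup(Level.Invocation) annotation marks setUp() to run before every benchmark invocation, so each call starts with a fresh Murmur3 Hasher. JMH's documentation warns that this level is only suitable when a single invocation takes relatively long (on the order of a millisecond or more), because the per-invocation setup itself perturbs the timing.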
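A state class only takes effect once a benchmark method declares it as a parameter, which is how JMH injects it. The sketch below assumes no Guava dependency: it swaps Guava's Murmur3 Hasher for the JDK's SHA-256 MessageDigest, and the names HashingBenchmark, Plan, and hashRepeatedly are hypothetical.

```java
import org.openjdk.jmh.annotations.*;

import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

public class HashingBenchmark {

    // Hypothetical state class modeled on ExecutionPlan, using the JDK's
    // MessageDigest instead of Guava. Scope.Thread gives each thread its own
    // digest, because MessageDigest is not thread-safe.
    @State(Scope.Thread)
    public static class Plan {
        @Param({"10", "100"})
        public int iterations;

        public byte[] password = "5vnr5yui3yui2i".getBytes(StandardCharsets.UTF_8);

        public MessageDigest digest;

        // digest(byte[]) resets the MessageDigest after each call,
        // so creating it once per trial is enough.
        @Setup(Level.Trial)
        public void setUp() {
            try {
                digest = MessageDigest.getInstance("SHA-256");
            } catch (NoSuchAlgorithmException e) {
                throw new IllegalStateException("SHA-256 not available", e);
            }
        }
    }

    // JMH passes the state object in as the method argument.
    @Benchmark
    @BenchmarkMode(Mode.Throughput)
    public byte[] hashRepeatedly(Plan plan) {
        byte[] hash = plan.password;
        for (int i = 0; i < plan.iterations; i++) {
            // Hash the previous output again (repeated hashing).
            hash = plan.digest.digest(hash);
        }
        // Returned so the work is not eliminated as dead code.
        return hash;
    }
}
```

Trial-level setup is a deliberate choice here: it avoids the per-invocation overhead that Level.Invocation adds, which is only possible because a MessageDigest can be reused, whereas a Guava Hasher cannot.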

Step 4: Beware of dead code elimination

Understanding JVM optimizations is critical when running microbenchmarks, because they can make benchmark results very misleading. One such optimization is dead code elimination: the JIT compiler may remove computations whose results are never used. Consider the following example:

Java

@Benchmark
@OutputTimeUnit(TimeUnit.NANOSECONDS)
@BenchmarkMode(Mode.AverageTime)
public void doNothing() {
    // Does nothing: measures the baseline overhead of the benchmarking infrastructure.
}

@Benchmark
@OutputTimeUnit(TimeUnit.NANOSECONDS)
@BenchmarkMode(Mode.AverageTime)
public void objectCreation() {
    // Intended to measure the cost of creating a new Object. The object is never
    // used, so the JIT compiler may remove the allocation as dead code, making this
    // benchmark report roughly the same time as doNothing().
    new Object();
}

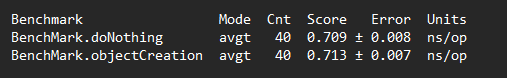

Output:

- @OutputTimeUnit annotation: sets the time unit in which benchmark results are reported. Here it is TimeUnit.NANOSECONDS, so results are presented in nanoseconds.

- objectCreation() method: intended to measure how long it takes to create a new object. Because the created object is never used, the JIT compiler may eliminate the allocation as dead code, so this benchmark can report roughly the same time as doNothing().

- @BenchmarkMode annotation: specifies what the benchmark measures; Mode.AverageTime reports the average time per operation.
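To keep the JIT from discarding the allocation, the result must be consumed: either returned from the benchmark method, which JMH consumes implicitly, or passed to a Blackhole (org.openjdk.jmh.infra.Blackhole). A minimal sketch; the class and method names are hypothetical.

```java
import org.openjdk.jmh.annotations.*;
import org.openjdk.jmh.infra.Blackhole;

import java.util.concurrent.TimeUnit;

@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.NANOSECONDS)
public class ObjectCreationBenchmark {

    // Option 1: return the object; JMH consumes returned values for us.
    @Benchmark
    public Object objectCreationReturned() {
        return new Object();
    }

    // Option 2: hand the object to a Blackhole, which JMH injects as a parameter.
    // Useful when a benchmark produces several values.
    @Benchmark
    public void objectCreationBlackhole(Blackhole blackhole) {
        blackhole.consume(new Object());
    }
}
```

Returning the value is the simpler option for a single result; a Blackhole is the usual choice when a benchmark method produces more than one value that must all be kept alive.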

Step 5: Encapsulate the constant state

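If a benchmark computes on a constant value, the JIT compiler may evaluate the expression once and substitute its result (constant folding), so the benchmark no longer measures the computation. We can prevent this by keeping the constant state inside a state object: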

Java

@State(Scope.Benchmark)
public static class Log {
    // Stateful input for the benchmark. Because x is read from a state object
    // at run time, the JIT compiler does not treat it as a compile-time constant.
    public int x = 10;
}

// @Benchmark marks the method as a benchmark to be executed by JMH.
@Benchmark
public double log(Log input) {
    // Computes the natural logarithm (base e) of input.x and returns it;
    // returning the result also keeps it from being eliminated as dead code.
    return Math.log(input.x);
}

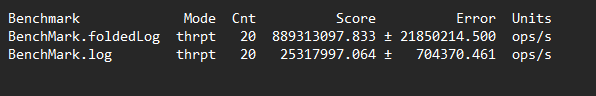

Output:

- The annotation @State(Scope.Benchmark) designates the Log class as a stateful object for benchmarking purposes.

- public static class Log: the Log class has a public field x initialized to the value 10.

- @Benchmark: this annotation designates the log method as a benchmark.

- Taking an instance of the Log class as input, the log method uses Math.log to compute the natural logarithm of the x field and returns the result.
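For contrast, the following sketch places a folding-prone variant next to the state-based one. The class ConstantFoldingBenchmark and the method logOfConstant are hypothetical; whether the JIT actually folds logOfConstant depends on the JVM, so treat the difference as something to measure rather than a guaranteed outcome.

```java
import org.openjdk.jmh.annotations.*;

import java.util.concurrent.TimeUnit;

@BenchmarkMode(Mode.AverageTime)
@OutputTimeUnit(TimeUnit.NANOSECONDS)
public class ConstantFoldingBenchmark {

    @State(Scope.Benchmark)
    public static class Log {
        public int x = 10;
    }

    // Pitfall: the argument is a compile-time constant, so the JIT may
    // compute Math.log(10) once and simply return the folded value.
    @Benchmark
    public double logOfConstant() {
        return Math.log(10);
    }

    // Reading x from a @State object at run time prevents this folding.
    @Benchmark
    public double logOfState(Log input) {
        return Math.log(input.x);
    }
}
```

If logOfConstant reports a time close to an empty benchmark while logOfState does not, the constant was most likely folded away.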
