Training large models in Azure Machine Learning

source link: https://techcommunity.microsoft.com/t5/ai-machine-learning-blog/training-large-models-in-azure-machine-learning/ba-p/3834865

Go to the source link to view the article. You can view the picture content, updated content and better typesetting reading experience. If the link is broken, please click the button below to view the snapshot at that time.

Training large models in Azure Machine Learning

The advancement of large-scale transformer-based deep learning models, which have been meticulously trained on substantial datasets, has undeniably revolutionized the field by achieving unparalleled performance across diverse tasks. Notably, these models have experienced a remarkable growth in size, expanding exponentially over recent years. As platforms such as Hugging Face and Open AI emerge, there arises an increasingly pressing demand for customers to embark on the training and meticulous fine-tuning of these expansive models on an unprecedented scale. This affords organizations the opportunity to tailor the model according to their unique specifications, allowing meticulous control over vital parameters encompassing size, latency, and cost considerations.

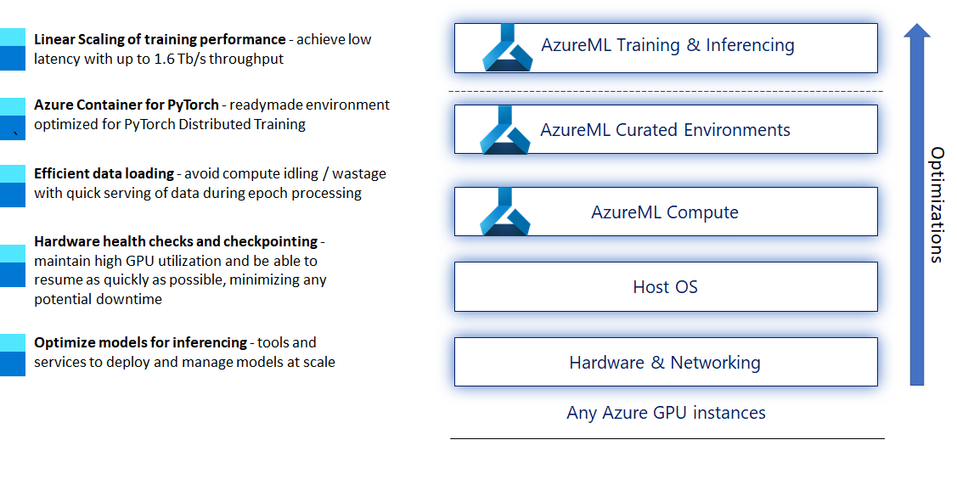

Prominent models of immense scale, including GPT-3, GPT-4, ChatGPT, DALL-e, Codex, Prometheus, Florence, Turing, among others, have been trained and operationalized within the robust Azure AI infrastructure. This cutting-edge infrastructure serves as the foundation for the Azure Machine Learning (Azure ML) service, embodying the same state-of-the-art principles. Azure ML offers a comprehensive suite of services, featuring a managed training service that seamlessly integrates into a holistic training environment, exhibiting exceptional scalability through near linear scaling for distributed training workloads. Simultaneously, it provides unparalleled support for enterprise-grade ML Ops, encompassing meticulous auditing, comprehensive tracking of lineage, and resource sharing capabilities, bolstering its position as a premier choice in the industry.

Below, we’ll explore some of the challenges presented by these large models. We’ve also created step-by-step guide that covers all aspects of the data science lifecycle, available here: aka.ms/azureml-largescale.

- Linear scaling

- Distributed training efficiency

- Efficient data loading

- Hardware health checks and checkpointing

- Deploy and manage large models at scale

Decrease training time while preserving model quality with linear scaling

The premise of linear scaling is that as the number of GPUs increases, the training time should decrease near-linearly while maintaining model quality and accuracy. AzureML provides access to compute clusters with A100 GPUs, which come with high-throughput and low latency on network bandwidth. These VMs are equipped with InfiniBand interconnects with up to 1.6 Tb/s inter-node throughput, allowing them to achieve almost linear scaling of training performance.

Improve training efficiency with distributed training

Environment management for large training scenarios takes time and it is iterative in nature. To this end, AzureML offers an out-of-the-box managed environment that is specifically designed for large-scale training scenarios: the Azure Container for PyTorch (ACPT). This environment includes state-of-the-art technology and optimization tools such as DeepSpeed, LoRA, ONNX Runtime and NebulaML which are ready to integrate into your training scripts. By leveraging these tools, you can ensure that your training process is both efficient and effective, allowing you to achieve the best possible results. You can learn more about these here.

Maintain high GPU utilization with efficient data loading

Efficient data loading is crucial for optimizing training performance. To ensure this, AzureML has a built-in data runtime capability that reads and caches the right amount of data, even if the requested read operation was for less. In addition, we have implemented various data loading techniques during training to serve data to the GPU in a manner that optimizes its utilization. It is essential to avoid GPU idling and the wastage of resources by serving data to the GPU as quickly as possible, especially during epoch processing.

Minimize training disruptions due to hardware failures with efficient checkpointing

We understand the importance of ensuring that hardware failures do not disrupt your training process. To this end, we perform regular health checks during training to identify any potential issues. In the event of a hardware failure, we utilize the saved model state to restart the training process from the most recent healthy checkpoint. Historically, ML engineers had to balance how frequently they should be saving state to keep the GPUs busy and at the same time avoid wasting computation if hardware failures would have occurred, kicking in state restore. Now, with NebulaML they can increase the frequency of saving the training state without much penalty on the GPU utilization. Nebula checkpointing is up to 1000 times faster than standard model checkpointing. As a result, the training process can maintain high GPU utilization and be able to resume as quickly as possible, minimizing any potential downtime.

Deploy and manage large models at scale

After training a large model, operationalizing it can be a challenging task due to complexity and resource requirements. AzureML provides various tools and services to help users deploy and manage their models at scale. One of them is using ONNX, an open standard format for representing machine learning models. ONNX Runtime is a high-performance inference engine for deploying ONNX models to production and has been used extensively in high-scale Microsoft services such as Bing, Office, and Azure AI Services. Another such tool is DeepSpeed-MII, a framework that makes low-latency, low-cost inference of powerful models easily accessible. DeepSpeed MII supports thousands of widely used models and they can be deployed on AzureML with just a few lines of code.

Get the guide for end to end large scale distributed training

Given the scale and complexity of large-scale distributed training, we have compiled our learnings into a comprehensive best practices guide for our customers. This step-by-step guide covers all aspects of the data science lifecycle, from resource setup and data loading to training optimizations, evaluation, and inference optimizations. By following these best practices and leveraging the techniques and optimizations outlined in the guide, you can ensure that your training process is efficient and effective, ultimately leading to better results with lower costs for your organization via an enterprise grade MLOps lifecycle. You can access it at aka.ms/azureml-largescale

Coming soon

We are not stopping here! We have some exciting new features which are coming soon. Read on to get more details and get a chance to use them before everyone else.

- Elastic Training: This allows you to launch distributed training jobs in a fault-tolerant and elastic manner. The job is able to start as soon as one machine is available and is allowed to expand up to the maximum number of nodes requested, without being stopped or restarted. This is particularly useful in the context of using preemptable nodes (Low-Priority or Spot VMs) enabling resilient multi-node training with up to 80% cost reduction If you wish to try out this feature, please sign up here to get access - https://aka.ms/elastic-aml

- Ray on AzureML: Ray is an ML Framework for scaling and accelerating AI applications. It is very popular in the open-source community and we’re happy to announce built-in support for developing Ray applications on AzureML. If you wish to try out this feature, please sign up using - https://aka.ms/aml-ray

Recommend

About Joyk

Aggregate valuable and interesting links.

Joyk means Joy of geeK